Computational Neuroscience Final Project * Depth Vision

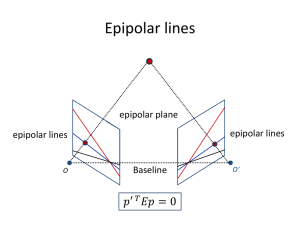

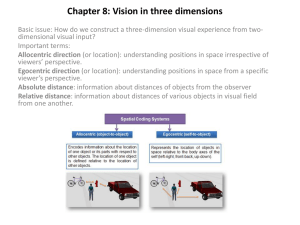

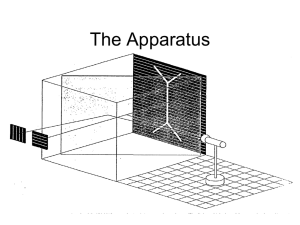

COMPUTATIONAL NEUROSCIENCE FINAL PROJECT – DEPTH VISION
Omri Perez, 2013

INTRO

DEPTH CUES
- Pictorial depth cues
- Physiological depth cues
- Motion parallax
- Stereoscopic depth cues

PHYSIOLOGICAL DEPTH CUES
Two physiological depth cues:
1. Accommodation
2. Convergence

PHYSIOLOGICAL DEPTH CUES
Accommodation:
- relaxed lens = far away
- accommodating lens = near
What must the visual system be able to compute unconsciously?

PHYSIOLOGICAL DEPTH CUES
Convergence:
- small angle of convergence = far away
- large angle of convergence = near
What two sensory systems is the brain integrating?
What happens to images closer to or farther from the fixation point?

MOTION DEPTH CUES
Parallax: points at different locations in the visual field move at different speeds depending on their distance from fixation.
http://www.youtube.com/watch?v=ktdnA6y27Gk&NR=1

SEEING IN STEREO
It’s very hard to read words if there are multiple images on your retina.
But how many images are there on your retinae?

BINOCULAR DISPARITY
Your eyes have a different image on each retina.
- Hold a pen at arm's length and fixate the spot. How many pens do you see? Which pen matches which eye?
- Now fixate the pen. How many spots do you see? Which spot matches which eye?
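The convergence cue and the pen demonstration above can be made concrete with a little geometry. The sketch below is only an illustration and is not part of the supplied project code (it is written in Python, whereas the project itself uses MATLAB). It assumes a point straight ahead of the observer, an interocular distance of 63 mm, and a sign convention in which positive disparity means "crossed". The function names are hypothetical.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m (ipd_m is an assumed interocular distance)."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def disparity_deg(object_m, fixation_m, ipd_m=0.063):
    """Vergence angle of the object minus that of the fixation point.
    Assumed sign convention: positive = crossed (object nearer than fixation),
    negative = uncrossed (object farther than fixation)."""
    return vergence_angle_deg(object_m, ipd_m) - vergence_angle_deg(fixation_m, ipd_m)

# Convergence: a near target needs a larger angle than a far one.
near_angle = vergence_angle_deg(0.25)
far_angle = vergence_angle_deg(5.0)

# Pen demonstration: pen at 0.6 m, spot on the wall at 3 m (assumed distances).
pen_while_fixating_spot = disparity_deg(0.6, 3.0)   # pen is nearer than fixation
spot_while_fixating_pen = disparity_deg(3.0, 0.6)   # spot is farther than fixation

print(near_angle, far_angle)
print(pen_while_fixating_spot, spot_while_fixating_pen)
```

Under these assumptions the near target gives the larger convergence angle, the pen has crossed (positive) disparity while you fixate the spot, and the spot has uncrossed (negative) disparity while you fixate the pen.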
BINOCULAR DISPARITY
Binocular disparity is the difference between the two retinal images.
Disparity depends on where the object is relative to the fixation point:
- objects closer than fixation project images that “cross”
- objects farther than fixation project images that do not “cross”

BINOCULAR DISPARITY
Corresponding retinal points

BINOCULAR DISPARITY
The Horopter: points in space that project onto corresponding retinal points define a surface called the horopter.

BINOCULAR DISPARITY
Points not on the horopter will be disparate on the retina (they project images onto non-corresponding points). The nature of the disparity depends on where they are relative to the horopter:
- points nearer than the horopter have crossed disparity
- points farther than the horopter have uncrossed disparity

BINOCULAR DISPARITY
Why don’t we see double vision?
Images with a small enough disparity are fused into a single image. The region of space that contains images with close enough disparity to be fused is called Panum’s Area. Panum’s Area extends just in front of and just behind the horopter.

STEREOPSIS
Our brains interpret crossed and uncrossed disparity as depth. That process is called stereoscopic depth perception, or simply stereopsis.
Stereopsis requires that the brain can encode the two retinal images independently.
Primary visual cortex (V1) has bands of neurons that keep input from the two eyes separate.

CORTICAL HYPERCOLUMNS IN V1
The basic processing unit of depth perception. A hypercolumn consists of a complete set of orientation columns over a cycle of 180° and of right and left ocular dominance columns in the visual cortex. A hypercolumn may be about 1 mm wide.

OUR GOAL
To compute the binocular depth of stereo images.
[Figure: left and right stereo images]

SIMULATING RECEPTIVE FIELDS IN V1
To emulate the receptive fields of V1 neurons we use the Gabor function.
[Figure: sinusoid (even and odd symmetry) .* 2D Gaussian = Gabor (even and odd symmetry)]

FILTERING THE IMAGES WITH GABOR FILTERS
[Figure: filtered left and right images]
We filter by doing a 2D convolution of each filter with the image. The results from the different filters are averaged together.

ESTIMATION OF BINOCULAR DISPARITY
2D cross correlation (xcorr2)
Tip: In most cases, the peak of the cross correlation between the left and right eye images along the x axis (columns) should occur only at positive shifts!

BASIC ALGORITHM – MAXIMUM OF CROSS CORRELATION
[Figure: random dot stereogram (RDS)]

3 VARIATIONS:
1. Maximum of cross correlation.
2. First neuron to fire in a 2D leaky integrate-and-fire (LIF) array (winner take all). The input is the cross correlation result.
3. Population vector of the 2D LIF array after X simulation steps. Same input.
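The three variations can be sketched on a deliberately simplified case: one image row instead of a 2D patch, and one LIF neuron per candidate horizontal shift. This is an illustrative Python sketch under stated assumptions, not the supplied MATLAB code. Every function name, the LIF parameters (time constant, threshold, reset to zero) and the reading of "population vector" as a spike-count-weighted mean shift are assumptions made for the example.

```python
def cross_correlation_1d(left_row, right_row, max_shift):
    """Correlation for horizontal shifts 0..max_shift (positive shifts only).
    Entry s compares left_row[i + s] with right_row[i]."""
    n = len(left_row)
    return [sum(left_row[i + s] * right_row[i] for i in range(n - s))
            for s in range(max_shift + 1)]

def normalize(profile):
    """Divide by the maximum value so the strongest input equals 1."""
    peak = max(profile)
    return [p / peak for p in profile] if peak > 0 else list(profile)

def lif_step(v, inputs, dt=1.0, tau=20.0, threshold=0.5):
    """One Euler step of dv/dt = (input - v) / tau for every neuron.
    Neurons that reach threshold spike and are reset to 0."""
    new_v, spikes = [], []
    for vi, xi in zip(v, inputs):
        vi = vi + (dt / tau) * (xi - vi)
        fired = vi >= threshold
        spikes.append(fired)
        new_v.append(0.0 if fired else vi)
    return new_v, spikes

def method1_argmax(profile):
    """Method 1: shift with the maximum cross correlation."""
    return max(range(len(profile)), key=lambda s: profile[s])

def method2_first_to_fire(profile, max_steps=1000):
    """Method 2: winner take all, the first neuron to spike wins.
    Ties within one step are broken by the larger input (an assumption)."""
    v = [0.0] * len(profile)
    for _ in range(max_steps):
        v, spikes = lif_step(v, profile)
        fired = [s for s, f in enumerate(spikes) if f]
        if fired:
            return max(fired, key=lambda s: profile[s])
    return None  # no neuron reached threshold

def method3_population_vector(profile, n_steps=200):
    """Method 3: spike-count-weighted mean shift after n_steps."""
    v = [0.0] * len(profile)
    counts = [0] * len(profile)
    for _ in range(n_steps):
        v, spikes = lif_step(v, profile)
        counts = [c + int(f) for c, f in zip(counts, spikes)]
    total = sum(counts)
    if total == 0:
        return None
    return sum(s * c for s, c in enumerate(counts)) / total

# Toy rows: the right row is the left row shifted by 2 pixels.
left_row = [0, 0, 0, 0, 0, 1, 3, 1, 0, 0, 0, 0]
right_row = left_row[2:] + [0, 0]
raw = cross_correlation_1d(left_row, right_row, max_shift=5)
profile = normalize(raw)

print(raw)
print(method1_argmax(profile), method2_first_to_fire(profile),
      method3_population_vector(profile))
```

On this toy input all three methods recover the 2-pixel shift. Because the membrane potentials start at zero on every call, nothing carries over from one patch to the next in this sketch.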
Note: the horizontal smearing in 2 and 3 is caused by crossover activity when switching patches.

YOUR TASK
1. Load a pair of stereo images. You can use the supplied function image_loader.m, which makes sure the image is in grayscale and has a proper dynamic range.
2. Generate the filters. You can use the supplied function generate_filters.m to generate the array of filters. I urge you to try out sizes (3rd parameter) other than the defaults in the function.
3. Filter each of the two images using the filters from the previous stage. You can use the function filter_with_all_filters.m to do this.
4. Now, using the two filtered images, iterate over patches, calculate the cross correlation matrix and determine the current depth using methods 1-3 described in the previous slide. You can tweak the overlap of the patches to reduce computation time.
Note for methods 2 and 3:
a. You can use the supplied function LIF_one_step_multiple_neurons.m to simulate the LIF neurons.
b. It is wise to normalize the xcorr2 results, e.g. by dividing by the maximum value.

YOUR TASK - CONTINUED
5. Incorporate all these into a function of the form:
result = find_depth_with_LIF( Left_im_name, Right_im_name, method_num, patch_size, use_filters )
where:
- result is the matrix representing the estimated depth (pixel shifts).
- Left_im_name and Right_im_name are the names of the stereo images.
- method_num is the number of the method (1-3, see above).
- patch_size is the ratio of the patch size to the image dimensions. E.g. in an image that is 640x480, a ratio of 1/15 will produce a patch of size ~43x32. Please note that in MATLAB the indexing convention is rows x cols (and not x,y), so the image is actually 480x640.
- use_filters is a flag that determines whether to filter the images (step 3 in the previous slide) before computing the depth map. Turning it off is useful for debugging, but it should be set to true when generating the final results.
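The patch_size arithmetic and the effect of patch overlap on computation time can be checked with a short sketch. It is written in Python for illustration only (the project itself uses MATLAB). The helper names, and the choice to express overlap as a fraction of the patch shared with its neighbour, are assumptions and are not part of the supplied code.

```python
def patch_shape(image_shape, ratio):
    """image_shape is (rows, cols); returns (patch_rows, patch_cols)."""
    rows, cols = image_shape
    return max(1, round(rows * ratio)), max(1, round(cols * ratio))

def patch_origins(image_shape, patch, overlap=0.5):
    """Top-left corners of the patches, scanned row by row.
    overlap is the assumed fraction of a patch shared with the next one
    (0 <= overlap < 1); a larger overlap means more patches to process."""
    rows, cols = image_shape
    p_rows, p_cols = patch
    step_r = max(1, int(p_rows * (1 - overlap)))
    step_c = max(1, int(p_cols * (1 - overlap)))
    return [(r, c)
            for r in range(0, rows - p_rows + 1, step_r)
            for c in range(0, cols - p_cols + 1, step_c)]

image_shape = (480, 640)                      # rows x cols for a 640x480 image
patch = patch_shape(image_shape, 1 / 15)      # (32, 43), i.e. ~43x32 in x,y terms
no_overlap = patch_origins(image_shape, patch, overlap=0.0)
half_overlap = patch_origins(image_shape, patch, overlap=0.5)

print(patch, len(no_overlap), len(half_overlap))
```

For this image size, going from no overlap to 50% overlap raises the number of patches from 210 to 841, which is roughly the factor by which the cross correlation work grows.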
In addition to the supplied stereo image pairs, you should also generate a left and right random dot stereogram image pair using the supplied function RDS_generator.m together with a mask (I supplied you with an example mask, RDS_Pac-Man_mask.png).
You can find other stereo image pairs online, e.g. http://vasc.ri.cmu.edu/idb/html/stereo/index.html

Bonus: You can add a fourth depth estimation method of your choice. This can be something you read somewhere or an original idea. For example, you can use one of the methods 1-3 but change the patch scan so it won’t be an orderly right to left, then down one row, and right to left again. Instead it can be a random scan which, among other things, will leave several regions uncalculated but sample other regions more densely.

WHAT TO HAND IN (E-MAIL IN)
1. Your code together with any images (regular and RDS) you used and the supplied images.
2. A document showing the depth results on the two supplied stereo image pairs and one RDS you generated, for each of the 3 methods. (If you chose to do the bonus, show the results for the bonus method as well.) When showing results for the RDS, don’t forget to relate them to the mask used to generate it. The document should contain a concise explanation of what you did, your algorithms, and your interpretation of the results.

HOW TO HAND IN
The project should be submitted by mail to Omri.

Good luck and a successful test period (and vacation?)!!!