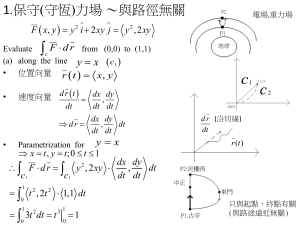



TETRA project: Smart Data Clouds (2014-2016). Flemish Agency for Innovation by Science and Technology.

Contact persons: luc.mertens@uantwerpen.be, gunther.steenackers@uantwerpen.be, rudi.penne@uantwerpen.be
Website: https://www.uantwerpen.be/op3mech/

TETRA: Smart Data Clouds (2014-2016): industrial cases

a. Security, track and trace [Haven van Antwerpen]
b. Traffic control and classifications [Macq, LMS, Melexis]
c. (Smart) Navigation [Wheelchairs, PIAM, mo-Vis, Automotive LMS]
d. Dynamic 3D body scan [RSscan, ICRealisations, ODOS]

Data fusion: industrial vision linked with CAE. Data from RGB, IR, ToF and HS cameras.

TETRA: Smart Data Clouds (2014-2016): healthcare applications

a. Wheelchair control and navigation [mo-Vis, PIAM, Absolid]
b. Gesture and body analysis [RSScan, ICrealisation, Phaer, ODOS]

'Data fusion' is usable for future healthcare.

• IFM-Electronics O3D2xx cameras: 64 x 50 pixels.
• PMD[Vision] CamCube 3.0: 352 x 288 pixels. Recent: pmd PhotonICs® 19k-S3.
• MESA Swiss Ranger 4500: 176 x 144 pixels.
• BV-ToF: 128 x 120 pixels.
• DepthSense 325: 320 x 240 pixels.
• Fotonic RGB_C-Series: 160 x 120 pixels. Previous models: P - E series.
• Melexis EVK75301: 80 x 60 pixels. Near future: MLX75023 automotive QVGA ToF sensor.
• ODOS Real.iZ-1K vision system: 1024 x 1248 pixels.

ToF VISION: world to image, image to world conversion

$$P = [\tan\varphi,\ \tan\psi,\ 1,\ d/D], \qquad P' = [\tan\varphi,\ \tan\psi,\ 1]$$

(f can be chosen to be the unit.)

[Figure: camera geometry with image size I x J, image centre (i0, j0) at (I/2, J/2), focal point (0, 0, f) with f = 1, axes x, y, z, angles φ and ψ, pixel offsets u.ku and v.kv, and the distances r, d and D.]

$$u = j - j_0,\quad u_k = k_u\,u; \qquad v = i - i_0,\quad v_k = k_v\,v$$
$$\tan\varphi = u_k/f; \qquad \tan\psi = v_k/f$$
$$r = \sqrt{u_k^2 + f^2}; \qquad d = \sqrt{u_k^2 + v_k^2 + f^2}$$
$$D/d = x/u_k = y/v_k = z/f$$

Every world point is unique with respect to many important coordinates: x, y, z, Nx, Ny, Nz, kr, kc, R, G, B, NR, NG, NB, t°, t ... This is the basis of our TETRA project 'Smart Data Clouds'.

D/d-related calculations (1)

For navigation purposes, the free floor area can easily be found from:

$$d_i/D_i = e/E = [\,f\sin a_0 + v_i\cos a_0\,]/E = [\,\tan a_0 + \tan\psi_i\,]\,f\cos a_0/E .$$
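As a minimal sketch of the image-to-world and world-to-image conversion given above, the relation D/d = x/uk = y/vk = z/f can be coded directly. The function names, the argument order and the numeric values are illustrative assumptions and not part of the project software; ku, kv and f are assumed to be expressed in the same unit.

```python
import math

def pixel_to_world(i, j, D, i0, j0, ku, kv, f=1.0):
    """Image to world: pixel (row i, column j) with measured distance D -> (x, y, z)."""
    uk = ku * (j - j0)                          # uk = ku * u, with u = j - j0
    vk = kv * (i - i0)                          # vk = kv * v, with v = i - i0
    d = math.sqrt(uk * uk + vk * vk + f * f)    # internal distance d
    s = D / d                                   # common ratio D/d = x/uk = y/vk = z/f
    return uk * s, vk * s, f * s

def world_to_pixel(x, y, z, i0, j0, ku, kv, f=1.0):
    """World to image: point (x, y, z) with z > 0 -> (i, j, D); i and j may be fractional."""
    uk = f * x / z
    vk = f * y / z
    D = math.sqrt(x * x + y * y + z * z)
    return i0 + vk / kv, j0 + uk / ku, D

# Illustrative numbers only: image centre (25, 32) and an assumed pixel pitch of 0.01.
x, y, z = pixel_to_world(i=10, j=40, D=2.0, i0=25, j0=32, ku=0.01, kv=0.01)
i, j, D = world_to_pixel(x, y, z, i0=25, j0=32, ku=0.01, kv=0.01)
```

Converting a pixel to a world point and back should return the original pixel and distance, which is a quick consistency check for any chosen ku, kv and f.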
Since $(d/D)_i = f/z_i$, this is equivalent to:

$$z_i\,[\,\tan a_0 + \tan\psi_i\,] = E/\cos a_0 .$$

[Figure: camera sensor with inclination a0, mounted parallel to the floor; labels f, d_i, e, ψ_i, v_i, D_i, E, z_i and the floor.]

D/d-related calculations (2): fast calculations

The world normal vector n at a random image position (v, u), using the neighbouring pixels 1 to 4, with $d^2 = u^2 + v^2 + f^2$:

$$n_x \sim f\,\frac{D_4/d_4 - D_3/d_3}{D_4/d_4 + D_3/d_3} = f\,\frac{z_4 - z_3}{z_4 + z_3}$$
$$n_y \sim f\,\frac{D_2/d_2 - D_1/d_1}{D_2/d_2 + D_1/d_1} = f\,\frac{z_2 - z_1}{z_2 + z_1}$$
$$n_z \sim -(u\,n_x + v\,n_y + 1)$$

Coordinate transformations

1. Camera x // World x // Robot x
2. World (yw, zw) = Robot (yr, zr) + ty
3. $y_{Pw} = -(z_0 - z_{Pc})\sin a + y_{Pc}\cos a = y_{Pr} + t_y$
4. $z_{Pw} = (z_0 - z_{Pc})\cos a + y_{Pc}\sin a = z_{Pr}$

[Figure: camera, world and robot frames with the angle a, the offset ty and the point P. Work plane = reference.]

A camera re-calibration for ToF cameras is easy and straightforward.

Pepper handling

First image = empty world plane. Next images = random pepper collections. Connected peppers can be distinguished by means of local gradients. Gradients can easily be derived from D/d ratios. Thickness is given in millimetres. Calculations are 'distance' driven; x, y and z aren't necessary. Fast calculations! YouTube: KdGiVL

Bin picking & 3D-OCR

Analyse 'blobs' one by one. Find the centre of gravity XYZ, the normal direction components Nx, Ny, Nz, the so-called 'Tool Centre Point' and the WPS coordinates.

Beer barrel inspection. Cameras: IDS uEye UI-1240SE-C and O3D2xx.

ToF - RGB correspondence

$$v_{c,P}/F - k_v\,v_t/f = t_x\,\sqrt{z_P^2 + y_P^2}; \qquad u_c/F = k_u\,u_t/F$$

MESA SR4000

RGBd DepthSense 311:
1. Find the z-discontinuity.
2. Look for vertical and forward-oriented regions.
3. Check the collinearity.
4. Use geometrical laws in order to find x, y, z and b.

ToF IFM O3D2xx:
1. Remove weakly defined pixels.
2. Find the z-discontinuity.
3. Look for vertical and forward-oriented regions.
4. Check the collinearity.
5. Use geometrical laws in order to find x, y, z and b.
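The D/d-based normal estimate from the 'fast calculations' slide earlier in this section can be sketched with NumPy as follows. This is a sketch under stated assumptions, not the project's implementation: u, v and f are taken in pixel units, pixels 3 and 4 are assumed to be the left and right neighbours, pixels 1 and 2 the upper and lower neighbours, and n_z is written with the D/d gradients so that it reduces to the slide's expression when f = 1. The function name is hypothetical.

```python
import numpy as np

def normals_from_distance(D, f, i0, j0):
    """Unit normals from a radial distance image D (rows = i, columns = j).

    Assumptions (not from the slides): f in pixel units; neighbours 3/4 are
    left/right and 1/2 are up/down; border pixels get zero gradients.
    """
    rows, cols = D.shape
    i, j = np.mgrid[0:rows, 0:cols]
    u = (j - j0).astype(float)                 # u = j - j0
    v = (i - i0).astype(float)                 # v = i - i0
    d = np.sqrt(u**2 + v**2 + f**2)            # d^2 = u^2 + v^2 + f^2
    q = D / d                                  # q = D/d = z/f

    gx = np.zeros_like(q)
    gy = np.zeros_like(q)
    # (D4/d4 - D3/d3) / (D4/d4 + D3/d3) along the columns
    gx[:, 1:-1] = (q[:, 2:] - q[:, :-2]) / (q[:, 2:] + q[:, :-2])
    # (D2/d2 - D1/d1) / (D2/d2 + D1/d1) along the rows
    gy[1:-1, :] = (q[2:, :] - q[:-2, :]) / (q[2:, :] + q[:-2, :])

    n = np.stack([f * gx, f * gy, -(u * gx + v * gy + 1.0)], axis=-1)
    return n / np.linalg.norm(n, axis=-1, keepdims=True)
```

Only ratios of neighbouring D/d values are needed, so no x, y, z coordinates have to be computed first, which is the point of the 'distance driven' approach.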
CamBoard Nano

Basic tasks of ToF cameras in order to support healthcare applications:
• Guide an autonomous wheelchair along the wall of a corridor.
• Avoid collisions between an AGV and unexpected objects.
• Give warnings about obstacles ('mind the step', 'kids', stairs ...).
• Take position in front of a table, a desk or a TV screen.
• Drive over a ramp at a front door or the door leading to the garden.
• Drive backwards based on a ToF camera.
• Automatic parking of a wheelchair (e.g. for battery charging).
• Command a wheelchair to reach a given position.
• Guide a patient in a semi-automatic bathroom.
• Support the supervision of elderly people.
• Fall detection of (elderly) people.

Imagine a wheelchair user who would like to reach 8 typical locations at home:
1. Sitting at the table.
2. Watching TV.
3. Looking through the window.
4. Working on a PC.
5. Reaching the corridor.
6. Command to park the wheelchair.
7. Finding some books on a shelf.
8. Reaching the kitchen and the garden.

ToF guided navigation for AGVs. Instant Eigen Motion: translation.

Distance correspondence during a pure translation + horizontal camera.

For a random world point P measured at (v1, u1) with distance D1:

$$d_1 = \sqrt{u_1^2 + v_1^2 + f^2}$$
$$x_1 = u_1\,D_1/d_1; \qquad y_1 = v_1\,D_1/d_1; \qquad z_1 = f\,D_1/d_1$$

After a translation δ:

$$D_2^2 = D_1^2 + \delta^2 - 2\,\delta\,D_1\cos A = D_1^2 + \delta^2 - 2\,\delta\,z_1$$
$$u_2/f = x_2/(z_1 - \delta) \quad (x_2 = x_1); \qquad v_2/f = y_1/(z_1 - \delta)$$

The measurement at (v2, u2) gives DP. Is D2 = DP? For every δ there is a D2. For one single δ the (RMS) error D2 - DP is minimal.

[Figure: random world point P seen from two sensor positions a distance δ apart; labels f, d1, (v1, u1), (v2, u2), D1, D2, A, y1.]

Every random point P can be used to estimate the value δ. More points make a statistical approach possible. Random points P! (In contrast, stereo vision must find edges, so texture is presupposed.)

ToF guided navigation of AGVs. Instant Eigen Motion: planar rotation.

Is D2 = DP? Image data: $\tan\beta_1 = u_1/f$; $\tan\beta_2 = u_2/f$.

[Figure: next sensor position and point P. Here: x < 0, R > 0.]
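The pure-translation search described earlier on this slide sequence (for every δ there is a D2, and one δ minimises the error D2 - DP over random points) could be sketched as below. This is an illustration under assumptions, not the authors' code: u, v and f are in pixel units, the measured DP is read at the nearest pixel, candidate δ values are assumed to stay well below the scene depth, and the names `estimate_translation` and `wall_image` are hypothetical. The synthetic wall only serves to exercise the search.

```python
import numpy as np

def estimate_translation(D_prev, D_next, f, i0, j0, deltas, n_points=100, seed=0):
    """Return (delta, rms) minimising the RMS of D2 - DP over random points."""
    rng = np.random.default_rng(seed)
    rows, cols = D_prev.shape
    i1 = rng.integers(0, rows, n_points)           # random points P in image 1
    j1 = rng.integers(0, cols, n_points)
    u1, v1 = j1 - j0, i1 - i0
    D1 = D_prev[i1, j1]
    d1 = np.sqrt(u1**2 + v1**2 + f**2)
    x1, y1, z1 = u1 * D1 / d1, v1 * D1 / d1, f * D1 / d1

    best_delta, best_rms = None, np.inf
    for delta in deltas:                           # assumes delta < z1 for all points
        D2 = np.sqrt(D1**2 + delta**2 - 2.0 * delta * z1)   # predicted distance
        u2 = f * x1 / (z1 - delta)                 # predicted image position
        v2 = f * y1 / (z1 - delta)
        j2 = np.rint(u2 + j0).astype(int)          # nearest pixel (assumption)
        i2 = np.rint(v2 + i0).astype(int)
        ok = (i2 >= 0) & (i2 < rows) & (j2 >= 0) & (j2 < cols)
        if ok.sum() < 10:                          # too few points left in view
            continue
        DP = D_next[i2[ok], j2[ok]]                # measured distance at (v2, u2)
        rms = np.sqrt(np.mean((D2[ok] - DP) ** 2))
        if rms < best_rms:
            best_delta, best_rms = delta, rms
    return best_delta, best_rms

def wall_image(shape, f, i0, j0, z_wall):
    """Synthetic distance image of a wall at depth z_wall, facing the camera."""
    i, j = np.mgrid[0:shape[0], 0:shape[1]]
    return z_wall * np.sqrt((j - j0) ** 2 + (i - i0) ** 2 + f**2) / f

prev_img = wall_image((50, 64), 80.0, 25, 32, 2000.0)   # wall at 2000 mm
next_img = wall_image((50, 64), 80.0, 25, 32, 1900.0)   # after moving 100 mm forward
delta, rms = estimate_translation(prev_img, next_img, 80.0, 25, 32, np.arange(0, 201, 10))
```

Because each candidate δ is evaluated independently, the loop over candidates could also be run in parallel; nearest-pixel lookup is the simplest choice here and could be replaced by interpolation.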
[Figure: previous sensor position with D1, z1 and β1; next sensor position with D2, z2, β2 and DP; rotation angle α, offset x and radius R.]

Task: find the correspondence between β1 and β2.

Procedure: search with 0 < |α| < α0 and |x| < x0.

Projection rules for a random point P: $x_{P2} = D_2\sin\beta_2$ and $z_{P2} = D_2\cos\beta_2$, both expressed through $D_1$, $\beta_1$, $x$ and $\alpha$, so that $\tan\beta_2 = x_{P2}/z_{P2}$ and

$$D_2^2 = x_{P2}^2 + z_{P2}^2 .$$

Parallel processing is possible!

ToF guided navigation of AGVs. Instant Eigen Motion: planar rotation.

[Figure: previous image and next image; white spots are randomly selected points.]

E.g. make use of 100 random points. Is D2,i = DP,i? Result = radius and angle.

[Plot: RMS error as a function of value x and angle A. Reported values: minimum RMS = 9.4211; minimum RMS = 6.4, R = 1152, alfa = 0.24, ratio = 1; CPU time = 0.11202.]

Research FTI Master Electromechanics

Research: ToF-driven quadrocopters. Combinations with IR/RGB. Security flights over industrial areas. Conrad: DJI Phantom RTF Quadrocopter.
Info: luc.mertens@uantwerpen.be ; gunther.steenackers@uantwerpen.be
TETRA project 2014-2016 'Smart Data Clouds'

Quadrocopter navigation based on ToF cameras

[Figure: BV-ToF camera (128 x 120 pixels) at height zc0 above the x_world / y_world plane, tilted by α; area seen by the camera, camera horizon, points P(x, y, z) at (vc, uc) and Q(x1, y1), and the translation t.]

The target is to 'hover' above the end point of a black line. If yaw is present, it should be compensated by an overall torque. Meanwhile the translation t can be evaluated. The global effect of the roll and pitch angles shows itself through the points P and Q. The actual copter speed v is in the direction PQ. In the end, P and Q need to come together without oscillations, while |t| becomes oriented in the y_world direction.

An efficient path can be followed by means of fuzzy logic principles.