Lec-03x

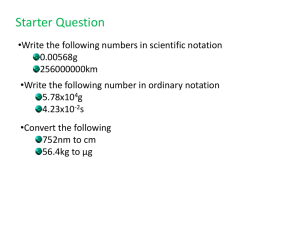

CS 253: Algorithms
Chapter 3: Growth of Functions
Credit: Dr. George Bebis

Analysis of Algorithms

Goal: to analyze and compare algorithms in terms of running time and memory requirements (i.e., time and space complexity). In other words, how do the running time and space requirements change as we increase the input size n? (Sometimes we are also interested in the coding complexity.)

Input size (number of elements in the input):
◦ size of an array or a matrix
◦ number of bits in the binary representation of the input
◦ vertices and/or edges in a graph, etc.

Types of Analysis

Worst case
◦ Provides an upper bound on running time
◦ An absolute guarantee that the algorithm will not run longer, no matter what the inputs are

Best case
◦ Provides a lower bound on running time
◦ Input is the one for which the algorithm runs the fastest

Average case
◦ Provides a prediction about the running time
◦ Assumes that the input is random

Lower Bound ≤ Running Time ≤ Upper Bound

Computing the Running Time

Measure the execution time? Not a good idea! It varies across microprocessors.

Count the number of statements executed? Yes, but you need to be very careful! High-level programming languages have statements that require a large number of low-level machine-language instructions to execute (a number that may be a function of the input size n). For example, a subroutine call cannot be counted as one statement; it needs to be analyzed separately.

Associate a "cost" with each statement. Find the "total cost" by multiplying each statement's cost by the number of times it is executed and summing over all statements.
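As a rough illustration of this cost accounting, the Python sketch below instruments a doubly nested summation loop and counts how often each statement runs. The function names `count_statement_executions` and `total_cost`, the statement labels, and the unit costs are assumptions made for illustration; they do not come from the slides. The loop tests are counted the way a C-style `for` evaluates them, so each test runs once more than the body it guards.

```python
def count_statement_executions(n):
    """Count how often each statement of a doubly nested summation loop runs."""
    counts = {"init": 0, "outer_test": 0, "inner_test": 0, "body": 0}
    matrix = [[1] * n for _ in range(n)]  # placeholder data: an n x n grid of ones

    total = 0
    counts["init"] += 1                # the single initialisation statement
    i = 0
    while True:
        counts["outer_test"] += 1      # evaluate i < n (also on the failing test)
        if not i < n:
            break
        j = 0
        while True:
            counts["inner_test"] += 1  # evaluate j < n (also on the failing test)
            if not j < n:
                break
            total += matrix[i][j]
            counts["body"] += 1
            j += 1
        i += 1
    return counts, total


def total_cost(counts, costs):
    """Total cost = sum over statements of (cost per execution * executions)."""
    return sum(costs[name] * counts[name] for name in counts)


counts, total = count_statement_executions(4)
print(counts)  # {'init': 1, 'outer_test': 5, 'inner_test': 20, 'body': 16}

# Hypothetical unit costs: every statement costs 1.
unit_costs = {"init": 1, "outer_test": 1, "inner_test": 1, "body": 1}
print(total_cost(counts, unit_costs))  # 42
```

For n = 4 the counts are 1, n + 1 = 5, n(n + 1) = 20, and n^2 = 16, so the total is dominated by the terms that grow like n^2.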
(We have seen examples of this kind of cost accounting before.)

Example

Algorithm X
    sum = 0;                         // cost c1
    for (i = 0; i < N; i++)          // cost c2
        for (j = 0; j < N; j++)      // cost c3
            sum += arrY[i][j];       // cost c4

Total Cost = c1 + c2 * (N+1) + c3 * N * (N+1) + c4 * N^2

Asymptotic Analysis

To compare two algorithms with running times f(n) and g(n), we need a rough measure that characterizes how fast each function grows with respect to n. In other words, we are interested in how they behave asymptotically, i.e., for large n (this is called the rate of growth).

◦ Big O notation: asymptotic "less than" or "at most": f(n) = O(g(n)) implies f(n) "≤" g(n)
◦ Ω notation: asymptotic "greater than" or "at least": f(n) = Ω(g(n)) implies f(n) "≥" g(n)
◦ Θ notation: asymptotic "equality" or "exactly": f(n) = Θ(g(n)) implies f(n) "=" g(n)

Big-O Notation

We say fA(n) = 7n + 18 is order n, or O(n). It is, at most, roughly proportional to n.
fB(n) = 3n^2 + 5n + 4 is order n^2, or O(n^2). It is, at most, roughly proportional to n^2.
In general, any function whose growth is proportional to n^2 eventually grows faster than any O(n) function.

[Figure: function value versus increasing n for fA(n) and fB(n)]

More Examples

◦ n^4 + 100n^2 + 10n + 50 is O(n^4)
◦ 10n^3 + 2n^2 is O(n^3)
◦ n^3 - n^2 is O(n^3)
◦ constants: 10 is O(1); 1273 is O(1)

What is the rate of growth of Algorithm X, studied earlier, in Big O notation?
Total Time = c1 + c2 * (N+1) + c3 * N * (N+1) + c4 * N^2
If c1, c2, c3, and c4 are constants, then Total Time = O(N^2).

Definition of Big O

O-notation: O(g(n)) is the set of functions f(n) for which there exist positive constants c and n0 such that 0 ≤ f(n) ≤ c g(n) for all n ≥ n0.

Big-O example, graphically

Note that 30n + 8 is O(n). Can you find a c and n0 that can be used in the formal definition of Big O? You can easily see that 30n + 8 is not less than n anywhere (n > 0). But it is less than 31n everywhere to the right of n = 8.
So, one possible (c, n0) pair that can be used in the formal definition is c = 31, n0 = 8.

[Figure: 30n + 8 and cn = 31n plotted as function value against n, with n0 = 8 marked; 30n + 8 is in O(n)]

Big-O Visualization

O(g(n)) is the set of functions with smaller or same order of growth as g(n).

No Uniqueness

There is no unique set of values for n0 and c in proving the asymptotic bounds.

Prove that 100n + 5 = O(n^2):
(i) 100n + 5 ≤ 100n + n = 101n ≤ 101n^2 for all n ≥ 5. You may pick n0 = 5 and c = 101 to complete the proof.
(ii) 100n + 5 ≤ 100n + 5n = 105n ≤ 105n^2 for all n ≥ 1. You may pick n0 = 1 and c = 105 to complete the proof.

Definition of Ω-notation

Ω(g(n)) is the set of functions with larger or same order of growth as g(n).

Examples
◦ 5n^2 = Ω(n): there exist c, n0 such that 0 ≤ cn ≤ 5n^2 for all n ≥ n0. cn ≤ 5n^2 holds with c = 1 and n0 = 1.
◦ 100n + 5 ≠ Ω(n^2): suppose there exist c, n0 such that 0 ≤ cn^2 ≤ 100n + 5 for all n ≥ n0. Since 100n + 5 ≤ 100n + 5n = 105n for all n ≥ 1, we get cn^2 ≤ 105n, so n(cn - 105) ≤ 0. Since n is positive, cn - 105 ≤ 0, so n ≤ 105/c. Contradiction: n cannot be bounded above by a constant.
◦ n = Ω(2n), n^3 = Ω(n^2), n = Ω(log n)

Definition of Θ-notation

Θ(g(n)) is the set of functions with the same order of growth as g(n).

Examples
◦ n^2/2 - n/2 = Θ(n^2):
  ½n^2 - ½n ≤ ½n^2 for all n ≥ 0, so c2 = ½
  ¼n^2 ≤ ½n^2 - ½n for all n ≥ 2, so c1 = ¼
◦ n ≠ Θ(n^2): c1 n^2 ≤ n ≤ c2 n^2 only holds for n ≤ 1/c1
◦ 6n^3 ≠ Θ(n^2): c1 n^2 ≤ 6n^3 ≤ c2 n^2 only holds for n ≤ c2/6
◦ n ≠ Θ(log n): c1 log n ≤ n ≤ c2 log n would require c2 ≥ n / log n for all n ≥ n0, which is impossible

Relations Between Different Sets

Subset relations between order-of-growth sets.

[Figure: the sets O(f), Ω(f), and Θ(f) drawn within the set of functions R → R, with f marked inside Θ(f)]

Common orders of magnitude

[Figure: common orders of magnitude (two slides)]

Logarithms and properties

In algorithm analysis we often use the notation "log n" without specifying the base.

◦ Binary logarithm: lg n = log_2 n
◦ Natural logarithm: ln n = log_e n
◦ lg^k n = (lg n)^k
◦ lg lg n = lg(lg n)
◦ log x^y = y log x
◦ log xy = log x + log y
◦ log (x/y) = log x - log y
◦ a^(log_b x) = x^(log_b a)
◦ log_b x = (log_a x) / (log_a b)

More Examples

For each of the following pairs of functions, either f(n) is O(g(n)), f(n) is Ω(g(n)), or f(n) = Θ(g(n)). Determine which relationship is correct.
◦ f(n) = log n^2; g(n) = log n + 5: f(n) = Θ(g(n))
◦ f(n) = n; g(n) = log n^2: f(n) = Ω(g(n))
◦ f(n) = log log n; g(n) = log n: f(n) = O(g(n))
◦ f(n) = n; g(n) = log^2 n: f(n) = Ω(g(n))
◦ f(n) = n log n + n; g(n) = log n: f(n) = Ω(g(n))
◦ f(n) = 10; g(n) = log 10: f(n) = Θ(g(n))
◦ f(n) = 2^n; g(n) = 10n^2: f(n) = Ω(g(n))
◦ f(n) = 2^n; g(n) = 3^n: f(n) = O(g(n))

Properties

Theorem: f(n) = Θ(g(n)) if and only if f(n) = O(g(n)) and f(n) = Ω(g(n))

Transitivity:
◦ f(n) = Θ(g(n)) and g(n) = Θ(h(n)) imply f(n) = Θ(h(n))
◦ Same for O and Ω

Reflexivity:
◦ f(n) = Θ(f(n))
◦ Same for O and Ω

Symmetry:
◦ f(n) = Θ(g(n)) if and only if g(n) = Θ(f(n))

Transpose symmetry:
◦ f(n) = O(g(n)) if and only if g(n) = Ω(f(n))
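The (c, n0) witness pairs and the function pairs discussed in these slides can be explored numerically. The Python sketch below is only an aid to intuition: the helper names `bound_holds` and `ratio_trend`, the scan limit `n_max`, and the sample points are assumptions chosen for illustration. A finite scan can refute a proposed witness pair, but passing it is evidence rather than a proof of an asymptotic bound.

```python
import math


def bound_holds(f, g, c, n0, n_max=10_000):
    """Check f(n) <= c * g(n) for every integer n with n0 <= n <= n_max."""
    return all(f(n) <= c * g(n) for n in range(n0, n_max + 1))


def ratio_trend(f, g, ns):
    """Return f(n) / g(n) at each sample point, to see how the ratio behaves."""
    return [f(n) / g(n) for n in ns]


# Witness pairs quoted in the slides.
print(bound_holds(lambda n: 30 * n + 8, lambda n: n, c=31, n0=8))        # True
print(bound_holds(lambda n: 100 * n + 5, lambda n: n * n, c=101, n0=5))  # True
print(bound_holds(lambda n: 100 * n + 5, lambda n: n * n, c=105, n0=1))  # True

# The same c = 31 fails if the threshold starts too early (n = 7: 218 > 217).
print(bound_holds(lambda n: 30 * n + 8, lambda n: n, c=31, n0=1))        # False

# 2^n versus 3^n: the ratio shrinks toward 0, consistent with 2^n = O(3^n).
print(ratio_trend(lambda n: 2 ** n, lambda n: 3 ** n, (10, 20, 40)))

# log n^2 versus log n + 5: the ratio stays between positive constants
# (it approaches 2), consistent with a Θ relationship.
print(ratio_trend(lambda n: math.log(n ** 2), lambda n: math.log(n) + 5,
                  (10, 10 ** 3, 10 ** 6)))
```

A ratio that tends to 0 suggests O but not Ω, a ratio that grows without bound suggests Ω but not O, and a ratio that settles between two positive constants suggests Θ.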