Document

advertisement

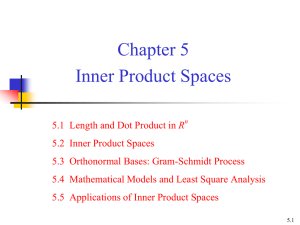

Chapter 5

Inner Product Spaces

5.1 Length and Dot Product in Rn

Notes: The length of a vector is also called its norm.

Notes:

(1) $\|v\| \ge 0$

(2) $\|v\| = 1 \Rightarrow v$ is called a unit vector.

(3) $\|v\| = 0$ iff $v = 0$


• Notes:

The process of finding the unit vector in the direction of v is

called normalizing the vector v.
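As a minimal sketch of normalization, assuming vectors are stored as plain Python lists and the length is the Euclidean norm, the unit vector in the direction of v can be computed by dividing each component by the length. The function names `norm` and `normalize` are illustrative, not taken from the slides.

```python
import math

def norm(v):
    """Euclidean length of a vector given as a sequence of numbers."""
    return math.sqrt(sum(x * x for x in v))

def normalize(v):
    """Return the unit vector in the direction of v (v must be nonzero)."""
    length = norm(v)
    if length == 0:
        raise ValueError("the zero vector cannot be normalized")
    return [x / length for x in v]

u = normalize([3.0, 4.0])
print(u)  # [0.6, 0.8]
```

The zero vector is rejected explicitly because it has no direction, so no unit vector can be associated with it.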

• A standard unit vector in Rn:

$\{e_1, e_2, \dots, e_n\} = \{(1, 0, \dots, 0), (0, 1, \dots, 0), \dots, (0, 0, \dots, 1)\}$

Ex:

the standard unit vectors in R2: $\{i, j\} = \{(1, 0), (0, 1)\}$

the standard unit vectors in R3: $\{i, j, k\} = \{(1, 0, 0), (0, 1, 0), (0, 0, 1)\}$


Notes: (Properties of distance)

(1) $d(u, v) \ge 0$

(2) $d(u, v) = 0$ if and only if $u = v$

(3) $d(u, v) = d(v, u)$


• Euclidean n-space:

Rn was defined to be the set of all ordered n-tuples of real numbers. When Rn is combined with the standard operations of vector addition, scalar multiplication, vector length, and the dot product, the resulting vector space is called Euclidean n-space.


Dot product and matrix multiplication:

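$$u = \begin{bmatrix} u_1 \\ u_2 \\ \vdots \\ u_n \end{bmatrix}, \quad v = \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix}$$

(A vector $u = (u_1, u_2, \dots, u_n)$ in Rn is represented as an n×1 column matrix.)

$$u \cdot v = u^T v = \begin{bmatrix} u_1 & u_2 & \cdots & u_n \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = [\, u_1 v_1 + u_2 v_2 + \cdots + u_n v_n \,]$$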
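The identity between the dot product and the matrix product of a row with a column can be checked numerically. The sketch below assumes matrices are stored as lists of rows; the helper names `column`, `transpose`, and `matmul` are illustrative only.

```python
def dot(u, v):
    """Dot product u1*v1 + u2*v2 + ... + un*vn."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of components")
    return sum(a * b for a, b in zip(u, v))

def column(v):
    """Represent a vector as an n x 1 column matrix (list of rows)."""
    return [[x] for x in v]

def transpose(A):
    """Transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*A)]

def matmul(A, B):
    """Matrix product of A (p x q) and B (q x r)."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

u = [1, 2, 3]
v = [4, -5, 6]
print(dot(u, v))                                  # 12
print(matmul(transpose(column(u)), column(v)))    # [[12]]
```

The matrix product yields a 1×1 matrix whose single entry equals the dot product.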


Note: The angle between the zero vector and another vector

is not defined.


Note: The vector 0 is said to be orthogonal to every vector.


Note:

Equality occurs in the triangle inequality if and only if

the vectors u and v have the same direction.


5.2 Inner Product Spaces

• Note:

$u \cdot v$ = dot product (Euclidean inner product for $R^n$)

$\langle u, v \rangle$ = general inner product for a vector space V


Note:

A vector space V with an inner product is called an inner

product space.

Vector space: $(V, +, \cdot)$

Inner product space: $(V, +, \cdot, \langle \cdot , \cdot \rangle)$


Note: $\|u\|^2 = \langle u, u \rangle$


Properties of norm:

(1) $\|u\| \ge 0$

(2) $\|u\| = 0$ if and only if $u = 0$

(3) $\|cu\| = |c| \, \|u\|$


Properties of distance:

(1) $d(u, v) \ge 0$

(2) $d(u, v) = 0$ if and only if $u = v$

(3) $d(u, v) = d(v, u)$


Note:

If v is a unit vector, then $\langle v, v \rangle = \|v\|^2 = 1$.

The formula for the orthogonal projection of u onto v then takes the following simpler form:

$\mathrm{proj}_v u = \langle u, v \rangle \, v$
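The simplification can be illustrated with a small sketch. It assumes the Euclidean dot product as the inner product and uses the standard general formula $\mathrm{proj}_v u = \frac{\langle u, v \rangle}{\langle v, v \rangle} v$ for comparison; the function names are illustrative.

```python
import math

def inner(u, v):
    """Euclidean inner product (assumed here; any inner product would do)."""
    return sum(a * b for a, b in zip(u, v))

def proj(u, v):
    """Orthogonal projection of u onto a nonzero vector v."""
    c = inner(u, v) / inner(v, v)
    return [c * x for x in v]

def proj_unit(u, v_hat):
    """Projection of u onto v_hat, valid only when v_hat is a unit vector."""
    c = inner(u, v_hat)
    return [c * x for x in v_hat]

u = [1.0, 2.0]
v = [3.0, 4.0]
length = math.sqrt(inner(v, v))
v_hat = [x / length for x in v]   # unit vector in the direction of v

print(proj(u, v))
print(proj_unit(u, v_hat))
```

Both calls describe the same vector, since projecting onto v or onto its normalization gives the same result; the second form simply skips the division by $\langle v, v \rangle$.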


5.3 Orthonormal Bases: Gram-Schmidt Process

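Orthogonal set: $S = \{v_1, v_2, \dots, v_n\} \subseteq V$ with

$\langle v_i, v_j \rangle = 0$ for $i \ne j$

Orthonormal set: $S = \{v_1, v_2, \dots, v_n\} \subseteq V$ with

$$\langle v_i, v_j \rangle = \begin{cases} 1 & i = j \\ 0 & i \ne j \end{cases}$$

Note:

If S is also a basis, then it is called an orthogonal basis or an orthonormal basis, respectively.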
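The two conditions above can be tested directly. The following is a minimal sketch, assuming the Euclidean dot product as the inner product and a small tolerance `tol` to absorb floating-point rounding; both the function names and the tolerance value are illustrative choices.

```python
import math

def inner(u, v):
    """Euclidean inner product (assumed for this sketch)."""
    return sum(a * b for a, b in zip(u, v))

def is_orthogonal_set(S, tol=1e-12):
    """True if <vi, vj> = 0 for every pair of distinct vectors in S."""
    return all(abs(inner(S[i], S[j])) <= tol
               for i in range(len(S)) for j in range(i + 1, len(S)))

def is_orthonormal_set(S, tol=1e-12):
    """True if S is orthogonal and every vector has <vi, vi> = 1."""
    return is_orthogonal_set(S, tol) and all(
        abs(inner(v, v) - 1.0) <= tol for v in S)

s = 1.0 / math.sqrt(2.0)
S = [[s, s, 0.0], [-s, s, 0.0], [0.0, 0.0, 1.0]]
print(is_orthogonal_set(S), is_orthonormal_set(S))   # True True

T = [[1.0, 1.0], [1.0, -1.0]]
print(is_orthogonal_set(T), is_orthonormal_set(T))   # True False
```

The set T is orthogonal but not orthonormal because its vectors have length $\sqrt{2}$; normalizing each vector would make it orthonormal.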


5.4 Mathematical Models and Least Squares Analysis


Orthogonal complement of W:

Let W be a subspace of an inner product space V.

(a) A vector u in V is said to be orthogonal to W if u is orthogonal to every vector in W.

(b) The set of all vectors in V that are orthogonal to W is called the orthogonal complement of W:

$W^{\perp} = \{ v \in V \mid \langle v, w \rangle = 0, \ \forall w \in W \}$ ($W^{\perp}$ is read “W perp”)

Notes:

(1) $\{0\}^{\perp} = V$

(2) $V^{\perp} = \{0\}$


• Notes:

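If W is a subspace of V, then

(1) $W^{\perp}$ is a subspace of V

(2) $W \cap W^{\perp} = \{0\}$

(3) $(W^{\perp})^{\perp} = W$

Ex:

If $V = R^2$ and W = x-axis, then

(1) $W^{\perp}$ = y-axis is a subspace of $R^2$

(2) $W \cap W^{\perp} = \{(0, 0)\}$

(3) $(W^{\perp})^{\perp} = W$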


• Notes:

(1) Among all the scalar multiples of a vector u, the

orthogonal projection of v onto u is the one that is

closest to v.

(2) Among all the vectors in the subspace W, the vector $\mathrm{proj}_W v$ is the closest vector to v.
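Note (1) can be spot-checked numerically. The sketch below assumes the Euclidean inner product and compares the distance from v to its projection onto u against the distances from v to a handful of other scalar multiples of u. The sample vectors and the grid of scalars are arbitrary illustrative choices, and a finite check like this is a sanity test, not a proof.

```python
import math

def inner(a, b):
    """Euclidean inner product (assumed for this sketch)."""
    return sum(x * y for x, y in zip(a, b))

def distance(a, b):
    """Euclidean distance d(a, b) = ||a - b||."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def proj_onto(u, v):
    """Orthogonal projection of v onto a nonzero vector u."""
    c = inner(v, u) / inner(u, u)
    return [c * x for x in u]

v = [3.0, 4.0]
u = [1.0, 1.0]
p = proj_onto(u, v)
best = distance(v, p)

# Scalars c = -5.0, -4.5, ..., 10.0; each gives a competing multiple c*u.
scalars = [k * 0.5 for k in range(-10, 21)]
closest = all(distance(v, [c * x for x in u]) >= best - 1e-12 for c in scalars)
print(p, closest)
```

The small slack of `1e-12` only guards against rounding when a sampled multiple coincides with the projection itself.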


• The four fundamental subspaces of the matrix A:

$N(A)$: nullspace of $A$

$N(A^T)$: nullspace of $A^T$

$R(A)$: column space of $A$

$R(A^T)$: column space of $A^T$


Least squares problem:

$A x = b$, where A is m×n, x is n×1, and b is m×1

(A system of linear equations)

(1) When the system is consistent, we can use Gaussian elimination with back-substitution to solve for x.

(2) When the system is inconsistent, we look for the “best possible” solution of the system, that is, the value of x for which the difference between Ax and b is as small as possible.

Least squares solution:

Given a system Ax = b of m linear equations in n unknowns,

the least squares problem is to find a vector x in Rn that minimizes $\|Ax - b\|$ with respect to the Euclidean inner product on Rm. Such a vector is called a least squares solution of Ax = b.


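$A \in M_{m \times n}$, $x \in R^n$ $\Rightarrow$ $Ax \in CS(A)$ ($CS(A)$ is a subspace of $R^m$)

Let $W = CS(A)$ and let $A\hat{x} = \mathrm{proj}_W b$

$\Rightarrow (b - A\hat{x}) \perp CS(A)$

$\Rightarrow b - A\hat{x} \in (CS(A))^{\perp} = NS(A^T)$

$\Rightarrow A^T (b - A\hat{x}) = 0$

i.e. $A^T A \hat{x} = A^T b$ (the normal equations of the least squares problem Ax = b)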


• Note:

The problem of finding a least squares solution of $Ax = b$ is equivalent to the problem of finding an exact solution of the associated normal system $A^T A \hat{x} = A^T b$.

Thm:

For any linear system $Ax = b$, the associated normal system

$A^T A \hat{x} = A^T b$

is consistent, and all solutions of the normal system are least squares solutions of Ax = b. Moreover, if W is the column space of A, and x is any least squares solution of Ax = b, then the orthogonal projection of b on W is

$\mathrm{proj}_W b = A x$


• Thm:

If A is an m×n matrix with linearly independent column vectors,

then for every m×1 matrix b, the linear system Ax = b has a

unique least squares solution. This solution is given by

$x = (A^T A)^{-1} A^T b$

Moreover, if W is the column space of A, then the orthogonal projection of b on W is

$\mathrm{proj}_W b = A x = A (A^T A)^{-1} A^T b$
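The theorem can be illustrated on a small inconsistent system. The sketch below is one possible way to do it, not the method of the slides: it forms the normal equations $A^T A x = A^T b$ and solves them by Gauss-Jordan elimination with exact `Fraction` arithmetic. The matrix A, the vector b, and all function names are hypothetical; A has linearly independent columns, so the solution is unique.

```python
from fractions import Fraction

def transpose(A):
    """Transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*A)]

def matmul(A, B):
    """Matrix product of A and B (lists of rows)."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def matvec(A, x):
    """Matrix-vector product A x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def solve(M, y):
    """Solve M z = y by Gauss-Jordan elimination (M square, invertible)."""
    n = len(M)
    aug = [list(M[i]) + [y[i]] for i in range(n)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [x - f * t for x, t in zip(aug[r], aug[col])]
    return [aug[i][n] for i in range(n)]

def least_squares(A, b):
    """Least squares solution via the normal equations A^T A x = A^T b."""
    At = transpose(A)
    return solve(matmul(At, A), matvec(At, b))

# Hypothetical data: three equations, two unknowns, no exact solution.
A = [[Fraction(1), Fraction(t)] for t in (1, 2, 3)]
b = [Fraction(1), Fraction(2), Fraction(4)]

x_hat = least_squares(A, b)
projection = matvec(A, x_hat)                      # proj_W b = A x
residual = [bi - pi for bi, pi in zip(b, projection)]

print(x_hat)                               # [Fraction(-2, 3), Fraction(3, 2)]
print(matvec(transpose(A), residual))      # [Fraction(0, 1), Fraction(0, 1)]
```

The second printed line shows that $A^T (b - A x)$ is the zero vector, which is the statement that the residual is orthogonal to the column space of A. Solving the normal equations by elimination avoids forming $(A^T A)^{-1}$ explicitly while producing the same x.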


5.5 Applications of Inner Product Spaces


• Note: C[a, b] is the inner product space of all continuous

functions on [a, b].
