Chapter 5 Orthogonality


5.1 Orthogonality

Definitions

A set of vectors is called an orthogonal set if all pairs of distinct vectors in the set are orthogonal.

An orthonormal set is an orthogonal set of unit vectors.

An orthogonal (orthonormal) basis for a subspace $W$ of $\mathbb{R}^n$ is a basis for $W$ that is an orthogonal (orthonormal) set.

An orthogonal matrix is a square matrix whose columns form an orthonormal set.
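The two set definitions translate directly into checks on pairwise dot products and lengths. This is a minimal Python sketch, assuming vectors are plain lists of numbers; the helper names `dot`, `is_orthogonal_set` and `is_orthonormal_set` and the tolerance `tol` are illustrative choices, not notation from these notes.

```python
import math
from itertools import combinations

def dot(u, v):
    """Euclidean dot product of two vectors of the same length."""
    return sum(a * b for a, b in zip(u, v))

def is_orthogonal_set(vectors, tol=1e-12):
    """True if every pair of distinct vectors has a (numerically) zero dot product."""
    return all(abs(dot(u, v)) <= tol for u, v in combinations(vectors, 2))

def is_orthonormal_set(vectors, tol=1e-12):
    """True if the set is orthogonal and every vector has length 1."""
    return is_orthogonal_set(vectors, tol) and all(
        abs(math.sqrt(dot(v, v)) - 1.0) <= tol for v in vectors
    )

# Standard basis of R^3: orthogonal and orthonormal.
e = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(is_orthogonal_set(e), is_orthonormal_set(e))        # True True

# Illustrative pair: orthogonal, but the vectors are not unit vectors.
pair = [[1, 1, 0], [-1, 1, 1]]
print(is_orthogonal_set(pair), is_orthonormal_set(pair))  # True False
```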

Examples

1) Is each of the following sets of vectors orthogonal? Orthonormal?

a) $\{[-3, 1, 2],\ [2, 4, 1],\ [1, -1, 2]\}$

b) $\{e_1, e_2, \ldots, e_n\}$

2) Find an orthogonal basis and an orthonormal basis for the subspace $W$ of $\mathbb{R}^3$:

$$W = \{[x, y, z] : x - y + 2z = 0\}$$

Theorems

Any orthogonal set of nonzero vectors is linearly independent.

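Let $\{v_1, v_2, \ldots, v_k\}$ be an orthogonal basis for a subspace $W$ of $\mathbb{R}^n$ and let $w$ be any vector in $W$. Then the unique scalars $c_1, c_2, \ldots, c_k$ such that $w = c_1v_1 + c_2v_2 + \cdots + c_kv_k$ are given by

$$c_i = \frac{w \cdot v_i}{v_i \cdot v_i}, \qquad i = 1, \ldots, k.$$

Proof: To find $c_i$, take the dot product of both sides with $v_i$:

$$w \cdot v_i = (c_1v_1 + c_2v_2 + \cdots + c_kv_k) \cdot v_i = c_i\,(v_i \cdot v_i),$$

since $v_j \cdot v_i = 0$ for $j \neq i$. Because $v_i \neq 0$, we can divide by $v_i \cdot v_i$.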
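The coordinate formula needs only dot products, with no linear system to solve. A small Python sketch, assuming integer entries so `fractions.Fraction` keeps the answer exact; the basis $[1, 1, 0], [-1, 1, 1]$ and the vector $w = [1, 3, 1]$ are illustrative inputs, and `coordinates` and `combine` are hypothetical helper names.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def coordinates(w, basis):
    """c_i = (w . v_i) / (v_i . v_i) for a nonzero, mutually orthogonal basis.

    Assumes w lies in the span of the basis and all entries are integers,
    so each Fraction is exact.
    """
    return [Fraction(dot(w, v), dot(v, v)) for v in basis]

def combine(coeffs, basis):
    """Rebuild c_1 v_1 + ... + c_k v_k to confirm the coordinates."""
    return [sum(c * v[j] for c, v in zip(coeffs, basis)) for j in range(len(basis[0]))]

basis = [[1, 1, 0], [-1, 1, 1]]   # illustrative orthogonal basis
w = [1, 3, 1]                     # lies in span(basis)
c = coordinates(w, basis)
print(c)                          # [Fraction(2, 1), Fraction(1, 1)]
print(combine(c, basis) == w)     # True
```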

Examples

3) An orthogonal basis for the subspace $W$ in the previous example is $\{[1, 1, 0],\ [-1, 1, 1]\}$. Pick a vector in $W$ and express it in terms of the vectors in this basis.

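4) Is each of the following matrices orthogonal?

$$A = \begin{bmatrix} -3 & 2 & 1 \\ 1 & 4 & -1 \\ 2 & 1 & 2 \end{bmatrix}, \qquad B = \begin{bmatrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{bmatrix}, \qquad C = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$$

If it is orthogonal, find its inverse and its transpose.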

Theorems on Orthogonal Matrices

The following statements are equivalent for an $n \times n$ matrix $A$:

1) $A$ is orthogonal.

2) $A^{-1} = A^T$.

3) $\|Av\| = \|v\|$ for every $v$ in $\mathbb{R}^n$.

4) $Av_1 \cdot Av_2 = v_1 \cdot v_2$ for every $v_1, v_2$ in $\mathbb{R}^n$.

Let $A$ be an orthogonal matrix. Then

1) its rows form an orthonormal set.

2) $A^{-1}$ is also orthogonal.

3) $|\det(A)| = 1$.

4) $|\lambda| = 1$ for every eigenvalue $\lambda$ of $A$.

5) If $B$ is also an orthogonal matrix of the same size, then so is $AB$.
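Statement 2 of the equivalences gives a practical test: a square matrix is orthogonal exactly when $A^TA = I$. A rough Python sketch, assuming matrices are lists of rows and using a floating-point tolerance `tol` (an arbitrary choice); the rotation angle and the test vector are illustrative values only.

```python
import math

def transpose(M):
    return [list(row) for row in zip(*M)]

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def is_orthogonal_matrix(Q, tol=1e-9):
    """Q is orthogonal iff it is square and Q^T Q = I (orthonormal columns)."""
    n = len(Q)
    if any(len(row) != n for row in Q):
        return False
    P = matmul(transpose(Q), Q)
    return all(abs(P[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))

def norm(v):
    return math.sqrt(sum(x * x for x in v))

theta = 0.7  # arbitrary illustrative angle
C = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]
print(is_orthogonal_matrix(C))                 # True

# ||Cv|| = ||v|| (statement 3 of the equivalences).
v = [3.0, 4.0]
Cv = [row[0] * v[0] + row[1] * v[1] for row in C]
print(math.isclose(norm(Cv), norm(v)))         # True

# C^{-1} = C^T is itself orthogonal.
print(is_orthogonal_matrix(transpose(C)))      # True

# Orthogonal columns that are not unit vectors are not enough.
print(is_orthogonal_matrix([[2, 0], [0, 1]]))  # False
```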

5.2 Orthogonal Complements and Orthogonal Projections

Orthogonal Complements

Recall: A normal vector $n$ to a plane is orthogonal to every vector in that plane. If the plane passes through the origin, then it is a subspace $W$ of $\mathbb{R}^3$, and $\operatorname{span}(n)$ is also a subspace of $\mathbb{R}^3$.

Every vector in $\operatorname{span}(n)$ is orthogonal to every vector in $W$, so $\operatorname{span}(n)$ is called the orthogonal complement of $W$.

Definition:

A vector $v$ is said to be orthogonal to a subspace $W$ of $\mathbb{R}^n$ if it is orthogonal to all vectors in $W$.

The set of all vectors that are orthogonal to $W$ is called the orthogonal complement of $W$, denoted $W^\perp$ (read "W perp"). That is,

$$W^\perp = \{ v \in \mathbb{R}^n : v \cdot w = 0 \text{ for all } w \in W \}.$$

http://www.math.tamu.edu/~yvorobet/MATH304-2011C/Lect3-02web.pdf

Example

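1) Find the orthogonal complement $W^\perp$ for each subspace $W$ of $\mathbb{R}^3$.

a) $W = \operatorname{span}([1, 2, 3])$

b) $W$ = the plane with direction vectors (the subspace spanned by) $[1, 1, 0]$ and $[0, 1, 1]$

c) $W = \{[x, y, z] : x - y + 2z = 0\}$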

Theorems

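Let $W$ be a subspace of $\mathbb{R}^n$.

1) $W^\perp$ is a subspace of $\mathbb{R}^n$.

2) $(W^\perp)^\perp = W$.

3) $W \cap W^\perp = \{0\}$.

4) If $W = \operatorname{span}(w_1, w_2, \ldots, w_k)$, then $v$ is in $W^\perp$ if and only if $v \cdot w_i = 0$ for all $i = 1, \ldots, k$.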

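Let $A$ be an $m \times n$ matrix. Then

$$(\operatorname{row}(A))^\perp = \operatorname{null}(A) \quad \text{and} \quad (\operatorname{col}(A))^\perp = \operatorname{null}(A^T).$$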

Proof?
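The identity $(\operatorname{row}(A))^\perp = \operatorname{null}(A)$ turns "find $W^\perp$" into "solve $Ax = 0$", where the rows of $A$ span $W$. A sketch in Python, assuming integer entries and using exact Gauss-Jordan elimination with `fractions.Fraction`; the function names `null_space` and `orthogonal_complement` are hypothetical, and the returned basis is just one of many valid bases.

```python
from fractions import Fraction

def null_space(A):
    """Basis of null(A), computed exactly by Gauss-Jordan elimination to RREF."""
    m, n = len(A), len(A[0])
    R = [[Fraction(x) for x in row] for row in A]
    pivots = []
    r = 0  # index of the next pivot row
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column: it is a free column
        R[r], R[p] = R[p], R[r]
        piv = R[r][c]
        R[r] = [x / piv for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                factor = R[i][c]
                R[i] = [a - factor * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    basis = []
    for fc in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[fc] = Fraction(1)        # set one free variable to 1, the others to 0
        for row, pc in enumerate(pivots):
            v[pc] = -R[row][fc]    # solve for each pivot variable
        basis.append(v)
    return basis

def orthogonal_complement(spanning_vectors):
    """W-perp for W = span(w_1, ..., w_k) equals null(A), where A has rows w_i."""
    return null_space(spanning_vectors)

# W = span([1, 2, 3]) in R^3: W-perp is the plane with basis [-2, 1, 0], [-3, 0, 1].
print(orthogonal_complement([[1, 2, 3]]))
```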

Example

2) Use the previous theorem to find the orthogonal complement of $W$.

a) $W$ = the plane in $\mathbb{R}^3$ with direction vectors (the subspace spanned by) $[1, 1, 0]$ and $[0, 1, 1]$

b) $W$ = the subspace of $\mathbb{R}^5$ spanned by the vectors $[3, 2, 0, 1, 4]$, $[1, 2, 2, 0, 1]$ and $[3, 2, 6, 2, 5]$

Orthogonal Projections

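[Figure: $u$ decomposed into $w_1$ along $v$ and $w_2$ orthogonal to $v$]

$$w_1 = \operatorname{proj}_v u = \frac{u \cdot v}{\|v\|^2}\, v = \frac{u \cdot v}{v \cdot v}\, v$$

$$w_2 = \operatorname{perp}_v u = u - w_1$$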

Let u and v be nonzero vectors.

$w_1$ is called the vector component of $u$ along $v$ (or the projection of $u$ onto $v$), and is denoted by $\operatorname{proj}_v u$.

$w_2$ is called the vector component of $u$ orthogonal to $v$.
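Both components come straight from the formula $\frac{u \cdot v}{v \cdot v} v$. A minimal Python sketch, assuming integer entries (so `fractions.Fraction` gives exact answers) and a nonzero $v$; the names `proj` and `perp` mirror the notation above but are otherwise illustrative, as are the sample vectors.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def proj(u, v):
    """Vector component of u along v: (u.v / v.v) v.

    Assumes integer (or Fraction) entries so the result is exact, and v != 0.
    """
    vv = dot(v, v)
    if vv == 0:
        raise ValueError("cannot project onto the zero vector")
    t = Fraction(dot(u, v), vv)
    return [t * x for x in v]

def perp(u, v):
    """Vector component of u orthogonal to v: u - proj_v(u)."""
    return [a - b for a, b in zip(u, proj(u, v))]

u, v = [1, -1, 2], [1, 2, 3]
w1, w2 = proj(u, v), perp(u, v)
print(w1)               # (5/14) * [1, 2, 3], shown as Fractions
print(dot(w2, v) == 0)  # True: w2 is orthogonal to v
```

The last check is the defining property of $w_2$, and $w_1 + w_2 = u$ holds by construction.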


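Let $W$ be a subspace of $\mathbb{R}^n$ with an orthogonal basis $\{u_1, u_2, \ldots, u_k\}$. The orthogonal projection of $v$ onto $W$ is defined as

$$\operatorname{proj}_W v = \operatorname{proj}_{u_1} v + \operatorname{proj}_{u_2} v + \cdots + \operatorname{proj}_{u_k} v.$$

The component of $v$ orthogonal to $W$ is the vector

$$\operatorname{perp}_W v = v - \operatorname{proj}_W v.$$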

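Let $W$ be a subspace of $\mathbb{R}^n$ and let $v$ be any vector in $\mathbb{R}^n$. Then there are unique vectors $w_1$ in $W$ and $w_2$ in $W^\perp$ such that $v = w_1 + w_2$; namely, $w_1 = \operatorname{proj}_W v$ and $w_2 = \operatorname{perp}_W v$.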
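The projection onto $W$ is a sum of one-vector projections, which is only valid when the basis is orthogonal. A Python sketch under that assumption (checked explicitly), with integer entries and exact `fractions.Fraction` arithmetic; `proj_W` and `perp_W` are hypothetical names, and the plane basis $[1, 1, 0], [-1, 1, 1]$ and the vector $[1, 2, 3]$ are illustrative.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def proj_W(v, orthogonal_basis):
    """Sum of the projections of v onto each vector of an orthogonal basis of W."""
    for i, a in enumerate(orthogonal_basis):
        for b in orthogonal_basis[i + 1:]:
            if dot(a, b) != 0:
                raise ValueError("basis vectors must be mutually orthogonal")
    result = [Fraction(0)] * len(v)
    for u in orthogonal_basis:
        t = Fraction(dot(v, u), dot(u, u))
        result = [r + t * x for r, x in zip(result, u)]
    return result

def perp_W(v, orthogonal_basis):
    """Component of v orthogonal to W: v - proj_W(v)."""
    return [a - b for a, b in zip(v, proj_W(v, orthogonal_basis))]

basis = [[1, 1, 0], [-1, 1, 1]]  # illustrative orthogonal basis of a plane in R^3
v = [1, 2, 3]
w1, w2 = proj_W(v, basis), perp_W(v, basis)
print(w1)  # [1/6, 17/6, 4/3] as Fractions
print(w2)  # [5/6, -5/6, 5/3] as Fractions, a multiple of [1, -1, 2]
print(all(dot(w2, u) == 0 for u in basis))  # True: w2 lies in W-perp
```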

Examples

3) Find the orthogonal projection of $v = [1, -1, 2]$ onto $W$ and the component of $v$ orthogonal to $W$.

a) $W = \operatorname{span}([1, 2, 3])$

b) $W$ = the subspace spanned by $[1, 1, 0]$ and $[-1, 1, 1]$

c) $W = \{[x, y, z] : x - y + 2z = 0\}$

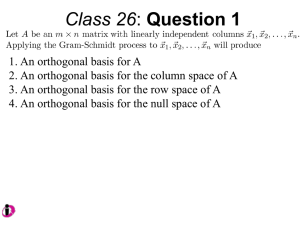

5.3 The Gram-Schmidt Process and the QR Factorization

The Gram-Schmidt Process

Goal: To construct an orthogonal (orthonormal) basis for any subspace of $\mathbb{R}^n$.

We start with any basis $\{x_1, x_2, \ldots, x_k\}$ and “orthogonalize” the vectors one at a time: each $x_i$ is replaced by its component orthogonal to $W_{i-1} = \operatorname{span}(x_1, x_2, \ldots, x_{i-1})$.

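Let $\{x_1, x_2, \ldots, x_k\}$ be a basis for a subspace $W$. Define the following vectors:

$$v_1 = x_1,$$

$$v_2 = x_2 - \operatorname{proj}_{v_1} x_2,$$

$$v_3 = x_3 - \operatorname{proj}_{v_1} x_3 - \operatorname{proj}_{v_2} x_3,$$

and so on; in general, $v_i = x_i - \sum_{j=1}^{i-1} \operatorname{proj}_{v_j} x_i$.

Then $\{v_1, v_2, \ldots, v_k\}$ is an orthogonal basis for $W$.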

We can normalize each vector in the basis to form an orthonormal basis.
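The recipe above can be sketched in Python as follows, assuming a linearly independent input with integer entries; `fractions.Fraction` keeps each $v_i$ exact, and normalization switches to floats because lengths may be irrational. The function names and the input basis $[1, 1, 0], [1, 0, 1], [0, 1, 1]$ are illustrative choices.

```python
import math
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(xs):
    """v_1 = x_1 and v_i = x_i - sum over j < i of proj_{v_j}(x_i).

    Assumes xs is linearly independent with integer (or Fraction) entries.
    """
    vs = []
    for x in xs:
        v = [Fraction(a) for a in x]
        for u in vs:
            t = dot(x, u) / dot(u, u)        # coefficient of proj_u(x)
            v = [a - t * b for a, b in zip(v, u)]
        if all(a == 0 for a in v):
            raise ValueError("input vectors are linearly dependent")
        vs.append(v)
    return vs

def normalize(vs):
    """Scale each vector to length 1 (floats, since lengths may be irrational)."""
    return [[float(a) / math.sqrt(dot(v, v)) for a in v] for v in vs]

xs = [[1, 1, 0], [1, 0, 1], [0, 1, 1]]  # illustrative basis of R^3
vs = gram_schmidt(xs)   # [1, 1, 0], [1/2, -1/2, 1], [-2/3, 2/3, 2/3]
qs = normalize(vs)      # orthonormal basis
```

Any nonzero multiple of each $v_i$ works equally well, so scaling $v_2$ to $[1, -1, 2]$ and $v_3$ to $[-1, 1, 1]$ before normalizing is a common way to avoid fractions by hand.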

Examples

1) Use the following basis to find an orthonormal basis for R

3 1

, ,

1 2

3

2) Find an orthogonal basis for R that contains the vector

1

1

1

2 ,

1

, 0

1

1

1

2

The QR Factorization

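If $A$ is an $m \times n$ matrix with linearly independent columns, then $A$ can be factored as $A = QR$, where $Q$ is an $m \times n$ matrix with orthonormal columns and $R$ is an invertible upper triangular $n \times n$ matrix. In fact, the columns of $Q$ form an orthonormal basis for $\operatorname{col}(A)$, which can be constructed from the columns of $A$ by using the Gram-Schmidt process.

Note: Since $Q$ has orthonormal columns, $Q^TQ = I_n$, so multiplying $A = QR$ on the left by $Q^T$ gives $R = Q^TA$. (When $m = n$, $Q$ is an orthogonal matrix and $Q^{-1} = Q^T$.)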
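Running Gram-Schmidt on the columns while recording the coefficients produces both factors at once: $R_{ij} = q_i \cdot a_j$ for $i < j$ and $R_{jj} = \|v_j\|$. A floating-point Python sketch, assuming matrices are lists of rows with linearly independent columns; the function `qr`, the tolerance `1e-12` and the $3 \times 2$ test matrix are illustrative, and classical Gram-Schmidt is used for clarity rather than numerical robustness.

```python
import math

def qr(A):
    """QR factorization of an m x n matrix A (list of rows) by Gram-Schmidt.

    Assumes the columns of A are linearly independent. Returns Q (m x n,
    orthonormal columns) and R (n x n, upper triangular, positive diagonal).
    """
    m, n = len(A), len(A[0])
    cols = [[A[i][j] for i in range(m)] for j in range(n)]
    q_cols = []
    R = [[0.0] * n for _ in range(n)]
    for j, a in enumerate(cols):
        v = [float(x) for x in a]
        for i, q in enumerate(q_cols):
            R[i][j] = sum(qk * ak for qk, ak in zip(q, a))   # entry (i, j) of Q^T A
            v = [vk - R[i][j] * qk for vk, qk in zip(v, q)]
        R[j][j] = math.sqrt(sum(vk * vk for vk in v))
        if R[j][j] < 1e-12:
            raise ValueError("columns are (numerically) linearly dependent")
        q_cols.append([vk / R[j][j] for vk in v])
    Q = [[q_cols[j][i] for j in range(n)] for i in range(m)]
    return Q, R

A = [[1, 1], [1, 0], [0, 1]]  # illustrative 3 x 2 matrix
Q, R = qr(A)
QR = [[sum(Q[i][k] * R[k][j] for k in range(2)) for j in range(2)] for i in range(3)]
print(all(math.isclose(QR[i][j], A[i][j], abs_tol=1e-12)
          for i in range(3) for j in range(2)))  # True
```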

Examples

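3) Find a QR factorization for each of the following matrices.

$$A = \begin{bmatrix} 3 & 1 \\ 1 & 2 \end{bmatrix}, \qquad A = \begin{bmatrix} 1 & -1 & -1 \\ 2 & 1 & 0 \\ 1 & -1 & 1 \end{bmatrix}$$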

5.4 Orthogonal Diagonalization of Symmetric Matrices

Example

1) Diagonalize the matrix

$$A = \begin{bmatrix} 3 & 2 \\ 2 & 6 \end{bmatrix}.$$

Recall:

A square matrix $A$ is symmetric if $A^T = A$.

A square matrix $A$ is diagonalizable if there exist an invertible matrix $P$ and a diagonal matrix $D$ such that $P^{-1}AP = D$.

Orthogonal Diagonalization

Definition:

A square matrix $A$ is orthogonally diagonalizable if there exist an orthogonal matrix $Q$ and a diagonal matrix $D$ such that $Q^{-1}AQ = D$.

Note that $Q^{-1} = Q^T$, so this condition can also be written as $Q^TAQ = D$.

Theorems

1. If $A$ is orthogonally diagonalizable, then $A$ is symmetric.

2. If $A$ is a real symmetric matrix, then the eigenvalues of $A$ are real.

3. If $A$ is a symmetric matrix, then any two eigenvectors corresponding to distinct eigenvalues of $A$ are orthogonal.

The Spectral Theorem: A real square matrix $A$ is orthogonally diagonalizable if and only if it is symmetric.
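For a $2 \times 2$ real symmetric matrix the theorems can be seen in closed form: the eigenvalues $\frac{a+c}{2} \pm \sqrt{\left(\frac{a-c}{2}\right)^2 + b^2}$ are always real, and the resulting eigenvectors are orthogonal. A Python sketch assuming that closed form; `orth_diag_2x2` is a hypothetical helper and the matrix `[[3, 2], [2, 6]]` is an illustrative input.

```python
import math

def orth_diag_2x2(a, b, c):
    """Orthogonally diagonalize the real symmetric matrix [[a, b], [b, c]].

    Eigenvalues are (a+c)/2 +/- sqrt(((a-c)/2)^2 + b^2). The square root is of
    a nonnegative number, so both are real. Returns (Q, [l1, l2]) with
    orthonormal eigenvectors as the columns of Q.
    """
    if b == 0:
        return [[1.0, 0.0], [0.0, 1.0]], [a, c]   # already diagonal
    mid = (a + c) / 2
    r = math.hypot((a - c) / 2, b)
    l1, l2 = mid + r, mid - r
    # First row of (A - l I)v = 0 is (a - l)x + b y = 0, solved by v = [b, l - a].
    v1, v2 = [b, l1 - a], [b, l2 - a]
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    Q = [[v1[0] / n1, v2[0] / n2],
         [v1[1] / n1, v2[1] / n2]]
    return Q, [l1, l2]

A = [[3, 2], [2, 6]]              # illustrative symmetric matrix
Q, lams = orth_diag_2x2(3, 2, 6)
QT = [list(row) for row in zip(*Q)]
# D = Q^T A Q, which should be diagonal up to rounding.
D = [[sum(QT[i][k] * A[k][p] * Q[p][j] for k in range(2) for p in range(2))
      for j in range(2)] for i in range(2)]
print(lams)  # approximately [7.0, 2.0]
```

The off-diagonal entries of `D` vanish because the two columns of `Q` are orthogonal, as theorem 3 predicts.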

Example

2) Orthogonally diagonalize the matrix

$$A = \begin{bmatrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \end{bmatrix}$$

and write $A$ in terms of the matrices $Q$ and $D$.

Theorem

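If $A$ is orthogonally diagonalizable and $Q^TAQ = D$, then $A$ can be written as

$$A = \lambda_1 q_1 q_1^T + \lambda_2 q_2 q_2^T + \cdots + \lambda_n q_n q_n^T,$$

where $q_i$ is the $i$th (orthonormal) column of $Q$ and $\lambda_i$ is the corresponding eigenvalue (the $i$th diagonal entry of $D$).

This fact helps us construct the matrix $A$ from given eigenvalues and mutually orthogonal eigenvectors, after normalizing the eigenvectors.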
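Because $q_i q_i^T = \frac{v_i v_i^T}{v_i \cdot v_i}$ for any nonzero eigenvector $v_i$, the sum can be formed without taking square roots. A Python sketch assuming integer data and mutually orthogonal eigenvectors; `matrix_from_eigenpairs` is a hypothetical name, and the eigenvalues 1 and 3 with eigenvectors $[1, 1]$ and $[1, -1]$ are illustrative values.

```python
from fractions import Fraction

def matrix_from_eigenpairs(eigenvalues, eigenvectors):
    """Build A = sum of lambda_i q_i q_i^T, where q_i = v_i / ||v_i||.

    Uses q_i q_i^T = v_i v_i^T / (v_i . v_i), so no square roots are needed and
    integer input gives an exact Fraction result. Assumes the eigenvectors are
    nonzero and mutually orthogonal.
    """
    n = len(eigenvectors[0])
    A = [[Fraction(0)] * n for _ in range(n)]
    for lam, v in zip(eigenvalues, eigenvectors):
        vv = sum(x * x for x in v)
        for i in range(n):
            for j in range(n):
                A[i][j] += Fraction(lam) * v[i] * v[j] / vv
    return A

# Illustrative data: eigenvalue 1 with eigenvector [1, 1], eigenvalue 3 with [1, -1].
A = matrix_from_eigenpairs([1, 3], [[1, 1], [1, -1]])
print(A == [[2, -1], [-1, 2]])  # True
```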

Example

3) Find a 2 x 2 matrix that has eigenvalues 2 and 7, with

corresponding eigenvectors

v1

1

2

v

2

1

2

5.5 Applications

Quadratic Forms

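A quadratic form in $x$ and $y$:

$$ax^2 + by^2 + cxy = \mathbf{x}^T \begin{bmatrix} a & \tfrac{1}{2}c \\ \tfrac{1}{2}c & b \end{bmatrix} \mathbf{x}$$

A quadratic form in $x$, $y$ and $z$:

$$ax^2 + by^2 + cz^2 + dxy + exz + fyz = \mathbf{x}^T \begin{bmatrix} a & \tfrac{1}{2}d & \tfrac{1}{2}e \\ \tfrac{1}{2}d & b & \tfrac{1}{2}f \\ \tfrac{1}{2}e & \tfrac{1}{2}f & c \end{bmatrix} \mathbf{x}$$

where $\mathbf{x}$ is the variable (column) matrix.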
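Building the matrix amounts to putting squared-term coefficients on the diagonal and splitting each cross-term coefficient in half between the two symmetric positions. A Python sketch that checks $\mathbf{x}^TA\mathbf{x}$ against the polynomial at one point; `quad_matrix_3` and `xT_A_x` are hypothetical helper names, and the coefficients and the evaluation point are illustrative.

```python
from fractions import Fraction

def quad_matrix_3(a, b, c, d, e, f):
    """Symmetric matrix of ax^2 + by^2 + cz^2 + dxy + exz + fyz.

    Each cross-product coefficient is split evenly between the two
    symmetric off-diagonal positions.
    """
    h = Fraction(1, 2)
    return [[a,     h * d, h * e],
            [h * d, b,     h * f],
            [h * e, h * f, c]]

def xT_A_x(A, x):
    """Evaluate x^T A x."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))

coeffs = (1, 2, 3, 4, 5, 6)            # illustrative coefficients a, ..., f
A = quad_matrix_3(*coeffs)
x, y, z = 1, -2, 3
direct = 1*x*x + 2*y*y + 3*z*z + 4*x*y + 5*x*z + 6*y*z
print(xT_A_x(A, [x, y, z]) == direct)  # True
```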


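A quadratic form in $n$ variables is a function $f : \mathbb{R}^n \to \mathbb{R}$ of the form

$$f(\mathbf{x}) = \mathbf{x}^T A \mathbf{x},$$

where $A$ is a symmetric $n \times n$ matrix and $\mathbf{x}$ is in $\mathbb{R}^n$. $A$ is called the matrix associated with $f$.

Examples: $z = f(x, y) = x^2 + y^2 + 8xy$ and $z = f(x, y) = 2x^2 + 5y^2$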

The Principal Axes Theorem

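Every quadratic form can be diagonalized. In fact, if $A$ is a symmetric $n \times n$ matrix and $Q$ is an orthogonal matrix such that $Q^TAQ = D$, then the change of variable $\mathbf{x} = Q\mathbf{y}$ transforms the quadratic form $\mathbf{x}^TA\mathbf{x}$ into

$$\mathbf{x}^TA\mathbf{x} = \mathbf{y}^TD\mathbf{y} = \lambda_1 y_1^2 + \lambda_2 y_2^2 + \cdots + \lambda_n y_n^2,$$

where $\lambda_1, \ldots, \lambda_n$ are the diagonal entries of $D$ (the eigenvalues of $A$).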
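A numerical check of the theorem: with orthonormal eigenvectors as the columns of $Q$, evaluating the original form at $\mathbf{x} = Q\mathbf{y}$ gives the same value as $\lambda_1 y_1^2 + \lambda_2 y_2^2$. A Python sketch assuming the illustrative form $5x^2 + 4xy + 2y^2$, whose eigenpairs were worked out by hand (and are re-checked in the code); the helper names and the sample $\mathbf{y}$ values are hypothetical.

```python
import math

# Illustrative form f(x, y) = 5x^2 + 4xy + 2y^2, whose symmetric matrix is A.
A = [[5, 2], [2, 2]]
# Eigenpairs worked out by hand: A[2, 1] = 6[2, 1] and A[1, -2] = 1[1, -2].
lams = [6, 1]
vecs = [[2, 1], [1, -2]]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def f(v):
    """Evaluate the quadratic form x^T A x."""
    return sum(a * b for a, b in zip(v, matvec(A, v)))

# Confirm the eigenpairs, then normalize the eigenvectors into the columns of Q.
assert all(matvec(A, v) == [lam * x for x in v] for lam, v in zip(lams, vecs))
s = math.sqrt(5)  # both eigenvectors have length sqrt(5)
Q = [[vecs[0][0] / s, vecs[1][0] / s],
     [vecs[0][1] / s, vecs[1][1] / s]]

def transformed(y):
    """The diagonalized form 6 y1^2 + 1 y2^2, with no cross-product term."""
    return lams[0] * y[0] ** 2 + lams[1] * y[1] ** 2

for y in ([1.0, 0.0], [0.3, -1.7], [-2.0, 5.0]):
    x = matvec(Q, y)  # change of variable x = Qy
    print(math.isclose(f(x), transformed(y)))  # True for each y
```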

Example: Find a change of variable that transforms each quadratic form into one with no cross-product terms.

$z = f(x, y) = x^2 + y^2 + 8xy$

$z = f(x, y) = 2x^2 + 5y^2$