
Chapter 5

Inner Product Spaces

5.1 Length and Dot Product in $R^n$

5.2 Inner Product Spaces

5.3 Orthonormal Bases: Gram-Schmidt Process

5.4 Mathematical Models and Least Squares Analysis

5.5 Applications of Inner Product Spaces

5.1

5.1 Length and Dot Product in $R^n$

Length (長度):

The length of a vector $\mathbf{v} = (v_1, v_2, \ldots, v_n)$ in $R^n$ is given by

$$\|\mathbf{v}\| = \sqrt{v_1^2 + v_2^2 + \cdots + v_n^2}$$

($\|\mathbf{v}\|$ is a real number)

Notes: The length of a vector is also called its norm (範數)

Properties of length (or norm)

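(1) $\|\mathbf{v}\| \ge 0$

(2) $\|\mathbf{v}\| = 1 \Rightarrow \mathbf{v}$ is called a unit vector (單位向量)

(3) $\|\mathbf{v}\| = 0$ if and only if $\mathbf{v} = \mathbf{0}$

(4) $\|c\mathbf{v}\| = |c|\,\|\mathbf{v}\|$ (proved in Theorem 5.1)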

5.2

Ex 1:

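(a) In $R^5$, the length of $\mathbf{v} = (0, -2, 1, 4, -2)$ is given by

$$\|\mathbf{v}\| = \sqrt{0^2 + (-2)^2 + 1^2 + 4^2 + (-2)^2} = \sqrt{25} = 5$$

(b) In $R^3$, the length of $\mathbf{v} = \left(\frac{2}{\sqrt{17}}, -\frac{2}{\sqrt{17}}, \frac{3}{\sqrt{17}}\right)$ is given by

$$\|\mathbf{v}\| = \sqrt{\left(\frac{2}{\sqrt{17}}\right)^2 + \left(-\frac{2}{\sqrt{17}}\right)^2 + \left(\frac{3}{\sqrt{17}}\right)^2} = \sqrt{\frac{17}{17}} = 1$$

(If the length of v is 1, then v is a unit vector)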
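The length formula can be checked numerically. The following is a minimal Python sketch, not part of the original slides; the function name `length` is an illustrative choice, and the vector is the one from Ex 1(a) with its signs taken as $(0, -2, 1, 4, -2)$.

```python
import math

def length(v):
    """Euclidean length (norm) of a vector given as a sequence of numbers."""
    return math.sqrt(sum(x * x for x in v))

# Vector from Ex 1(a); the squares sum to 25
v_a = (0, -2, 1, 4, -2)
print(length(v_a))  # 5.0
```

Because every component is squared, the signs of the components do not affect the length.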

5.3

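A standard unit vector (標準單位向量) in $R^n$: only one component of the vector is 1 and the others are 0 (thus the length of this vector must be 1)

$R^2$: $\{\mathbf{e}_1, \mathbf{e}_2\} = \{(1, 0), (0, 1)\}$

$R^3$: $\{\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3\} = \{(1, 0, 0), (0, 1, 0), (0, 0, 1)\}$

$R^n$: $\{\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n\} = \{(1, 0, \ldots, 0), (0, 1, \ldots, 0), \ldots, (0, 0, \ldots, 1)\}$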

Notes: Two nonzero vectors are parallel if $\mathbf{u} = c\mathbf{v}$

(1) $c > 0 \Rightarrow$ u and v have the same direction

(2) $c < 0 \Rightarrow$ u and v have opposite directions

5.4

Theorem 5.1: Length of a scalar multiple

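Let v be a vector in $R^n$ and c be a scalar. Then

$$\|c\mathbf{v}\| = |c|\,\|\mathbf{v}\|$$

Pf:

$\mathbf{v} = (v_1, v_2, \ldots, v_n) \Rightarrow c\mathbf{v} = (cv_1, cv_2, \ldots, cv_n)$

$$\begin{aligned}
\|c\mathbf{v}\| &= \|(cv_1, cv_2, \ldots, cv_n)\| \\
&= \sqrt{(cv_1)^2 + (cv_2)^2 + \cdots + (cv_n)^2} \\
&= \sqrt{c^2 (v_1^2 + v_2^2 + \cdots + v_n^2)} \\
&= |c| \sqrt{v_1^2 + v_2^2 + \cdots + v_n^2} \\
&= |c|\,\|\mathbf{v}\|
\end{aligned}$$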

5.5

Theorem 5.2: How to find the unit vector in the direction of v

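If v is a nonzero vector in $R^n$, then the vector $\mathbf{u} = \dfrac{\mathbf{v}}{\|\mathbf{v}\|}$ has length 1 and has the same direction as v. This vector u is called the unit vector in the direction of v

Pf:

v is nonzero $\Rightarrow \|\mathbf{v}\| \ne 0 \Rightarrow \dfrac{1}{\|\mathbf{v}\|} > 0$

If $\mathbf{u} = \dfrac{1}{\|\mathbf{v}\|}\mathbf{v}$, then u is a positive scalar multiple of v (u has the same direction as v)

$\|\mathbf{u}\| = \left\|\dfrac{\mathbf{v}}{\|\mathbf{v}\|}\right\| = \dfrac{1}{\|\mathbf{v}\|}\|\mathbf{v}\| = 1$ (u has length 1, by $\|c\mathbf{v}\| = |c|\,\|\mathbf{v}\|$)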

5.6

Notes:

(1) The vector $\dfrac{\mathbf{v}}{\|\mathbf{v}\|}$ is called the unit vector in the direction of v

(2) The process of finding the unit vector in the direction of v

is called normalizing the vector v
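Normalizing can be sketched in a few lines of Python. This is an illustrative sketch rather than part of the slides: the function name `normalize` and the choice to raise an error for the zero vector are assumptions made here, and the vector is the one from Ex 2.

```python
import math

def normalize(v):
    """Return v / ||v||, the unit vector in the direction of v."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        # The zero vector has no direction, so it cannot be normalized
        raise ValueError("cannot normalize the zero vector")
    return tuple(x / norm for x in v)

u = normalize((3, -1, 2))
# The length of the result should be 1 up to floating-point rounding
print(math.sqrt(sum(x * x for x in u)))
```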

5.7

Ex 2: Finding a unit vector

Find the unit vector in the direction of v = (3, –1, 2), and verify

that this vector has length 1

Sol:

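$\mathbf{v} = (3, -1, 2) \Rightarrow \|\mathbf{v}\| = \sqrt{3^2 + (-1)^2 + 2^2} = \sqrt{14}$

$$\frac{\mathbf{v}}{\|\mathbf{v}\|} = \frac{(3, -1, 2)}{\sqrt{3^2 + (-1)^2 + 2^2}} = \frac{1}{\sqrt{14}}(3, -1, 2) = \left(\frac{3}{\sqrt{14}}, \frac{-1}{\sqrt{14}}, \frac{2}{\sqrt{14}}\right)$$

$$\sqrt{\left(\frac{3}{\sqrt{14}}\right)^2 + \left(\frac{-1}{\sqrt{14}}\right)^2 + \left(\frac{2}{\sqrt{14}}\right)^2} = \sqrt{\frac{14}{14}} = 1$$

$\Rightarrow \dfrac{\mathbf{v}}{\|\mathbf{v}\|}$ is a unit vector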

5.8

Distance between two vectors:

The distance between two vectors u and v in $R^n$ is

$$d(\mathbf{u}, \mathbf{v}) = \|\mathbf{u} - \mathbf{v}\|$$

Properties of distance

(1) $d(\mathbf{u}, \mathbf{v}) \ge 0$

(2) $d(\mathbf{u}, \mathbf{v}) = 0$ if and only if u = v

(3) $d(\mathbf{u}, \mathbf{v}) = d(\mathbf{v}, \mathbf{u})$ (commutative property of the distance function)

5.9

Ex 3: Finding the distance between two vectors

The distance between u = (0, 2, 2) and v = (2, 0, 1) is

$$d(\mathbf{u}, \mathbf{v}) = \|\mathbf{u} - \mathbf{v}\| = \|(0 - 2, 2 - 0, 2 - 1)\| = \sqrt{(-2)^2 + 2^2 + 1^2} = 3$$

5.10

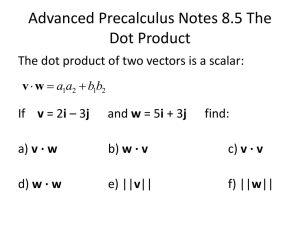

Dot product (點積) in $R^n$:

The dot product of $\mathbf{u} = (u_1, u_2, \ldots, u_n)$ and $\mathbf{v} = (v_1, v_2, \ldots, v_n)$ returns a scalar quantity

$$\mathbf{u} \cdot \mathbf{v} = u_1 v_1 + u_2 v_2 + \cdots + u_n v_n$$

($\mathbf{u} \cdot \mathbf{v}$ is a real number)

(The dot product is defined as the sum of component-by-component multiplications)

Ex 4: Finding the dot product of two vectors

The dot product of u = (1, 2, 0, –3) and v = (3, –2, 4, 2) is

$$\mathbf{u} \cdot \mathbf{v} = (1)(3) + (2)(-2) + (0)(4) + (-3)(2) = -7$$

Matrix Operations in Excel

SUMPRODUCT: calculate the dot product of two vectors
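The same sum of component-by-component products that SUMPRODUCT computes can be written in Python. This is a minimal sketch; the function name `dot` is an illustrative choice, and the vectors are those of Ex 4.

```python
def dot(u, v):
    """Dot product: the sum of component-by-component products."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of components")
    return sum(a * b for a, b in zip(u, v))

# Vectors from Ex 4: 3 - 4 + 0 - 6
print(dot((1, 2, 0, -3), (3, -2, 4, 2)))  # -7
```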

5.11

Theorem 5.3: Properties of the dot product

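If u, v, and w are vectors in $R^n$ and c is a scalar, then the following properties are true

(1) $\mathbf{u} \cdot \mathbf{v} = \mathbf{v} \cdot \mathbf{u}$ (commutative property of the dot product)

(2) $\mathbf{u} \cdot (\mathbf{v} + \mathbf{w}) = \mathbf{u} \cdot \mathbf{v} + \mathbf{u} \cdot \mathbf{w}$ (distributive property of the dot product over vector addition)

(3) $c(\mathbf{u} \cdot \mathbf{v}) = (c\mathbf{u}) \cdot \mathbf{v} = \mathbf{u} \cdot (c\mathbf{v})$ (associative property of the scalar multiplication and the dot product)

(4) $\mathbf{v} \cdot \mathbf{v} = \|\mathbf{v}\|^2$

(5) $\mathbf{v} \cdot \mathbf{v} \ge 0$, and $\mathbf{v} \cdot \mathbf{v} = 0$ if and only if $\mathbf{v} = \mathbf{0}$

※ The proofs of the above properties follow directly from the definition of the dot product in $R^n$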

5.12

Euclidean n-space:

– In Section 4.1, $R^n$ was defined to be the set of all ordered n-tuples of real numbers

– When $R^n$ is combined with the standard operations of vector addition, scalar multiplication, vector length, and dot product, the resulting vector space is called Euclidean n-space (歐幾里德 n維空間)

5.13

Ex 5: Find dot products

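$\mathbf{u} = (2, -2)$, $\mathbf{v} = (5, 8)$, $\mathbf{w} = (-4, 3)$

(a) $\mathbf{u} \cdot \mathbf{v}$ (b) $(\mathbf{u} \cdot \mathbf{v})\mathbf{w}$ (c) $\mathbf{u} \cdot (2\mathbf{v})$ (d) $\|\mathbf{w}\|^2$ (e) $\mathbf{u} \cdot (\mathbf{v} - 2\mathbf{w})$

Sol:

(a) $\mathbf{u} \cdot \mathbf{v} = (2)(5) + (-2)(8) = -6$

(b) $(\mathbf{u} \cdot \mathbf{v})\mathbf{w} = -6\mathbf{w} = -6(-4, 3) = (24, -18)$

(c) $\mathbf{u} \cdot (2\mathbf{v}) = 2(\mathbf{u} \cdot \mathbf{v}) = 2(-6) = -12$

(d) $\|\mathbf{w}\|^2 = \mathbf{w} \cdot \mathbf{w} = (-4)(-4) + (3)(3) = 25$

(e) $\mathbf{v} - 2\mathbf{w} = (5 - (-8), 8 - 6) = (13, 2)$

$\mathbf{u} \cdot (\mathbf{v} - 2\mathbf{w}) = (2)(13) + (-2)(2) = 26 - 4 = 22$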

5.14

Ex 6: Using the properties of the dot product

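Given $\mathbf{u} \cdot \mathbf{u} = 39$, $\mathbf{u} \cdot \mathbf{v} = -3$, $\mathbf{v} \cdot \mathbf{v} = 79$,

find $(\mathbf{u} + 2\mathbf{v}) \cdot (3\mathbf{u} + \mathbf{v})$

Sol:

$$\begin{aligned}
(\mathbf{u} + 2\mathbf{v}) \cdot (3\mathbf{u} + \mathbf{v}) &= \mathbf{u} \cdot (3\mathbf{u} + \mathbf{v}) + 2\mathbf{v} \cdot (3\mathbf{u} + \mathbf{v}) \\
&= \mathbf{u} \cdot (3\mathbf{u}) + \mathbf{u} \cdot \mathbf{v} + (2\mathbf{v}) \cdot (3\mathbf{u}) + (2\mathbf{v}) \cdot \mathbf{v} \\
&= 3(\mathbf{u} \cdot \mathbf{u}) + \mathbf{u} \cdot \mathbf{v} + 6(\mathbf{v} \cdot \mathbf{u}) + 2(\mathbf{v} \cdot \mathbf{v}) \\
&= 3(\mathbf{u} \cdot \mathbf{u}) + 7(\mathbf{u} \cdot \mathbf{v}) + 2(\mathbf{v} \cdot \mathbf{v}) \\
&= 3(39) + 7(-3) + 2(79) = 254
\end{aligned}$$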

5.15

Theorem 5.4: The Cauchy-Schwarz inequality (科西-舒瓦茲不等式)

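If u and v are vectors in $R^n$, then

$$|\mathbf{u} \cdot \mathbf{v}| \le \|\mathbf{u}\|\,\|\mathbf{v}\|$$

($|\mathbf{u} \cdot \mathbf{v}|$ denotes the absolute value of $\mathbf{u} \cdot \mathbf{v}$)

(The geometric interpretation for this inequality is shown on the next slide)

Ex 7: An example of the Cauchy-Schwarz inequality

Verify the Cauchy-Schwarz inequality for u = (1, –1, 3) and v = (2, 0, –1)

Sol:

$\mathbf{u} \cdot \mathbf{v} = -1$, $\mathbf{u} \cdot \mathbf{u} = 11$, $\mathbf{v} \cdot \mathbf{v} = 5$

$\Rightarrow |\mathbf{u} \cdot \mathbf{v}| = |-1| = 1$

$\|\mathbf{u}\|\,\|\mathbf{v}\| = \sqrt{\mathbf{u} \cdot \mathbf{u}}\,\sqrt{\mathbf{v} \cdot \mathbf{v}} = \sqrt{11}\,\sqrt{5} = \sqrt{55}$

$\therefore |\mathbf{u} \cdot \mathbf{v}| \le \|\mathbf{u}\|\,\|\mathbf{v}\|$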

5.16

Dot product and the angle between two vectors

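To find the angle $\theta$ ($0 \le \theta \le \pi$) between two nonzero vectors u = (u1, u2) and v = (v1, v2) in $R^2$, the Law of Cosines can be applied to the triangle with sides u, v, and v – u to obtain

$$\|\mathbf{v} - \mathbf{u}\|^2 = \|\mathbf{v}\|^2 + \|\mathbf{u}\|^2 - 2\|\mathbf{v}\|\,\|\mathbf{u}\| \cos\theta$$

(The length of the side opposite θ (對邊) can be expressed in terms of the lengths of the adjacent sides (鄰邊) and cos θ)

$\|\mathbf{v} - \mathbf{u}\|^2 = (u_1 - v_1)^2 + (u_2 - v_2)^2$

$\|\mathbf{v}\|^2 = v_1^2 + v_2^2$

$\|\mathbf{u}\|^2 = u_1^2 + u_2^2$

$$\Rightarrow \cos\theta = \frac{u_1 v_1 + u_2 v_2}{\|\mathbf{v}\|\,\|\mathbf{u}\|} = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{v}\|\,\|\mathbf{u}\|}$$

※ You can employ the fact that $|\cos\theta| \le 1$ to prove the Cauchy-Schwarz inequality in $R^2$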

5.17

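The angle between two nonzero vectors in $R^n$:

$$\cos\theta = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{u}\|\,\|\mathbf{v}\|}, \quad 0 \le \theta \le \pi$$

Opposite direction: $\theta = \pi$, $\cos\theta = -1$

$\mathbf{u} \cdot \mathbf{v} < 0$: $\frac{\pi}{2} < \theta < \pi$, $\cos\theta < 0$

$\mathbf{u} \cdot \mathbf{v} = 0$: $\theta = \frac{\pi}{2}$, $\cos\theta = 0$

$\mathbf{u} \cdot \mathbf{v} > 0$: $0 < \theta < \frac{\pi}{2}$, $\cos\theta > 0$

Same direction: $\theta = 0$, $\cos\theta = 1$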

Note:

The angle between the zero vector and another vector is

not defined (since the denominator cannot be zero)
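The angle formula translates directly into code. The sketch below is illustrative only: the function name `angle` is an assumption, and the clamping of the cosine to $[-1, 1]$ is a guard against floating-point rounding added here, not something the slides discuss.

```python
import math

def angle(u, v):
    """Angle in radians between two nonzero vectors, in [0, pi]."""
    dot_uv = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        # The denominator would be zero, so the angle is not defined
        raise ValueError("angle with the zero vector is not defined")
    cos_theta = dot_uv / (norm_u * norm_v)
    # Rounding can push the ratio slightly outside [-1, 1]
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.acos(cos_theta)

print(angle((1, 0), (0, 1)))  # pi/2 for orthogonal vectors
```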

5.18

Ex 8: Finding the angle between two vectors

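$\mathbf{u} = (-4, 0, 2, -2)$, $\mathbf{v} = (2, 0, -1, 1)$

Sol:

$\|\mathbf{u}\| = \sqrt{\mathbf{u} \cdot \mathbf{u}} = \sqrt{(-4)^2 + 0^2 + 2^2 + (-2)^2} = \sqrt{24}$

$\|\mathbf{v}\| = \sqrt{\mathbf{v} \cdot \mathbf{v}} = \sqrt{2^2 + 0^2 + (-1)^2 + 1^2} = \sqrt{6}$

$\mathbf{u} \cdot \mathbf{v} = (-4)(2) + (0)(0) + (2)(-1) + (-2)(1) = -12$

$$\Rightarrow \cos\theta = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{u}\|\,\|\mathbf{v}\|} = \frac{-12}{\sqrt{24}\,\sqrt{6}} = \frac{-12}{\sqrt{144}} = -1 \Rightarrow \theta = \pi$$

$\Rightarrow$ u and v have opposite directions

(In fact, u = –2v, and according to the arguments on Slide 5.4, u and v have opposite directions)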

5.19

Orthogonal (正交) vectors:

Two vectors u and v in $R^n$ are orthogonal (perpendicular) if

$$\mathbf{u} \cdot \mathbf{v} = 0$$

Note:

The vector 0 is said to be orthogonal to every vector

5.20

Ex 10: Finding orthogonal vectors

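Determine all vectors in $R^2$ that are orthogonal to u = (4, 2)

Sol:

$\mathbf{u} = (4, 2)$. Let $\mathbf{v} = (v_1, v_2)$

$\Rightarrow \mathbf{u} \cdot \mathbf{v} = (4, 2) \cdot (v_1, v_2) = 4v_1 + 2v_2 = 0$

$\Rightarrow v_1 = -\dfrac{t}{2}$, $v_2 = t$

$\therefore \mathbf{v} = \left(-\dfrac{t}{2}, t\right)$, $t \in R$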

5.21

Theorem 5.5: The triangle inequality (三角不等式)

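If u and v are vectors in $R^n$, then $\|\mathbf{u} + \mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$

Pf:

$$\begin{aligned}
\|\mathbf{u} + \mathbf{v}\|^2 &= (\mathbf{u} + \mathbf{v}) \cdot (\mathbf{u} + \mathbf{v}) \\
&= \mathbf{u} \cdot (\mathbf{u} + \mathbf{v}) + \mathbf{v} \cdot (\mathbf{u} + \mathbf{v}) = \mathbf{u} \cdot \mathbf{u} + 2(\mathbf{u} \cdot \mathbf{v}) + \mathbf{v} \cdot \mathbf{v} \\
&= \|\mathbf{u}\|^2 + 2(\mathbf{u} \cdot \mathbf{v}) + \|\mathbf{v}\|^2 \le \|\mathbf{u}\|^2 + 2|\mathbf{u} \cdot \mathbf{v}| + \|\mathbf{v}\|^2 \quad (c \le |c|) \\
&\le \|\mathbf{u}\|^2 + 2\|\mathbf{u}\|\,\|\mathbf{v}\| + \|\mathbf{v}\|^2 \quad \text{(Cauchy-Schwarz inequality)} \\
&= (\|\mathbf{u}\| + \|\mathbf{v}\|)^2
\end{aligned}$$

$\Rightarrow \|\mathbf{u} + \mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$

(The geometric representation of the triangle inequality: for any triangle, the sum of the lengths of any two sides is larger than the length of the third side (see the next slide))

Note:

Equality occurs in the triangle inequality if and only if the vectors u and v have the same direction (in this situation, cos θ = 1 and thus $\mathbf{u} \cdot \mathbf{v} = \|\mathbf{u}\|\,\|\mathbf{v}\| \ge 0$)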

5.22

Theorem 5.6: The Pythagorean (畢氏定理) theorem

If u and v are vectors in $R^n$, then u and v are orthogonal if and only if

$$\|\mathbf{u} + \mathbf{v}\|^2 = \|\mathbf{u}\|^2 + \|\mathbf{v}\|^2$$

(This is because u·v = 0 in the

proof for Theorem 5.5)

※ The geometric meaning: for any right triangle, the sum of the squares of the

lengths of two legs (兩股) equals the square of the length of the hypotenuse (斜邊).

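[Figure: a general triangle labeled $\|\mathbf{u} + \mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$ and a right triangle labeled $\|\mathbf{u} + \mathbf{v}\|^2 = \|\mathbf{u}\|^2 + \|\mathbf{v}\|^2$]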

5.23

Similarity between dot product and matrix multiplication:

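$$\mathbf{u} = \begin{bmatrix} u_1 \\ u_2 \\ \vdots \\ u_n \end{bmatrix}, \quad \mathbf{v} = \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix}$$

(A vector u = (u1, u2,…, un) in $R^n$ can be represented as an n×1 column matrix)

$$\mathbf{u} \cdot \mathbf{v} = \mathbf{u}^T \mathbf{v} = \begin{bmatrix} u_1 & u_2 & \cdots & u_n \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = [\,u_1 v_1 + u_2 v_2 + \cdots + u_n v_n\,]$$

(The result of the dot product of u and v is the same as the result of the matrix multiplication of $\mathbf{u}^T$ and v)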

5.24

Keywords in Section 5.1:

length: 長度

norm: 範數

unit vector: 單位向量

standard unit vector: 標準單位向量

distance: 距離

dot product: 點積

Euclidean n-space: 歐基里德n維空間

Cauchy-Schwarz inequality: 科西-舒瓦茲不等式

angle: 夾角

triangle inequality: 三角不等式

Pythagorean theorem: 畢氏定理

5.25

5.2 Inner Product Spaces

Inner product (內積): represented by angle brackets〈 u , v 〉

Let u, v, and w be vectors in a vector space V, and let c be

any scalar. An inner product on V is a function that associates

a real number〈 u , v 〉with each pair of vectors u and v and

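satisfies the following axioms (an abstraction of the properties of the dot product in Theorem 5.3 on Slide 5.12)

(1) $\langle \mathbf{u}, \mathbf{v} \rangle = \langle \mathbf{v}, \mathbf{u} \rangle$ (commutative property of the inner product)

(2) $\langle \mathbf{u}, \mathbf{v} + \mathbf{w} \rangle = \langle \mathbf{u}, \mathbf{v} \rangle + \langle \mathbf{u}, \mathbf{w} \rangle$ (distributive property of the inner product over vector addition)

(3) $c\langle \mathbf{u}, \mathbf{v} \rangle = \langle c\mathbf{u}, \mathbf{v} \rangle$ (associative property of the scalar multiplication and the inner product)

(4) $\langle \mathbf{v}, \mathbf{v} \rangle \ge 0$, and $\langle \mathbf{v}, \mathbf{v} \rangle = 0$ if and only if $\mathbf{v} = \mathbf{0}$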

5.26

Note:

$\mathbf{u} \cdot \mathbf{v}$ = dot product (Euclidean inner product for $R^n$)

$\langle \mathbf{u}, \mathbf{v} \rangle$ = general inner product for a vector space V

Note:

A vector space V with an inner product is called an inner product space (內積空間)

Vector space: $(V, +, \cdot)$

Inner product space: $(V, +, \cdot, \langle\,,\rangle)$

5.27

Ex 1: The Euclidean inner product for Rn

Show that the dot product in Rn satisfies the four axioms

of an inner product

Sol:

$\mathbf{u} = (u_1, u_2, \ldots, u_n)$, $\mathbf{v} = (v_1, v_2, \ldots, v_n)$

$\langle \mathbf{u}, \mathbf{v} \rangle = \mathbf{u} \cdot \mathbf{v} = u_1 v_1 + u_2 v_2 + \cdots + u_n v_n$

By Theorem 5.3, this dot product satisfies the required four axioms. Thus, the dot product is an inner product on $R^n$

5.28

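Ex 2: A different inner product for $R^2$

Show that the following function defines an inner product on $R^2$. Given $\mathbf{u} = (u_1, u_2)$ and $\mathbf{v} = (v_1, v_2)$,

$$\langle \mathbf{u}, \mathbf{v} \rangle = u_1 v_1 + 2u_2 v_2$$

Sol:

(1) $\langle \mathbf{u}, \mathbf{v} \rangle = u_1 v_1 + 2u_2 v_2 = v_1 u_1 + 2v_2 u_2 = \langle \mathbf{v}, \mathbf{u} \rangle$

(2) Let $\mathbf{w} = (w_1, w_2)$

$$\begin{aligned}
\langle \mathbf{u}, \mathbf{v} + \mathbf{w} \rangle &= u_1(v_1 + w_1) + 2u_2(v_2 + w_2) \\
&= u_1 v_1 + u_1 w_1 + 2u_2 v_2 + 2u_2 w_2 \\
&= (u_1 v_1 + 2u_2 v_2) + (u_1 w_1 + 2u_2 w_2) \\
&= \langle \mathbf{u}, \mathbf{v} \rangle + \langle \mathbf{u}, \mathbf{w} \rangle
\end{aligned}$$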

5.29

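(3) $c\langle \mathbf{u}, \mathbf{v} \rangle = c(u_1 v_1 + 2u_2 v_2) = (cu_1)v_1 + 2(cu_2)v_2 = \langle c\mathbf{u}, \mathbf{v} \rangle$

(4) $\langle \mathbf{v}, \mathbf{v} \rangle = v_1^2 + 2v_2^2 \ge 0$

$\langle \mathbf{v}, \mathbf{v} \rangle = 0 \Rightarrow v_1^2 + 2v_2^2 = 0 \Rightarrow v_1 = v_2 = 0$ ($\mathbf{v} = \mathbf{0}$)

Note: Example 2 can be generalized such that

$$\langle \mathbf{u}, \mathbf{v} \rangle = c_1 u_1 v_1 + c_2 u_2 v_2 + \cdots + c_n u_n v_n, \quad c_i > 0$$

can be an inner product on $R^n$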
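The weighted form can be sketched in Python as follows. The function name `weighted_inner` and the explicit check that every weight is positive are illustrative assumptions; the check mirrors the condition $c_i > 0$ on the weights, without which Axiom 4 can fail.

```python
def weighted_inner(u, v, weights):
    """Weighted inner product c1*u1*v1 + ... + cn*un*vn with all ci > 0."""
    if not (len(u) == len(v) == len(weights)):
        raise ValueError("u, v, and weights must have the same length")
    if any(c <= 0 for c in weights):
        # A zero or negative weight can violate Axiom 4
        raise ValueError("all weights must be positive")
    return sum(c * a * b for c, a, b in zip(weights, u, v))

# The inner product of Ex 2 uses weights (1, 2): 1*1*3 + 2*2*4
print(weighted_inner((1, 2), (3, 4), (1, 2)))  # 19
```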

5.30

Ex 3: A function that is not an inner product

Show that the following function is not an inner product on R3

$$\langle \mathbf{u}, \mathbf{v} \rangle = u_1 v_1 - 2u_2 v_2 + u_3 v_3$$

Sol:

Let $\mathbf{v} = (1, 2, 1)$

Then $\langle \mathbf{v}, \mathbf{v} \rangle = (1)(1) - 2(2)(2) + (1)(1) = -6 < 0$

Axiom 4 is not satisfied

Thus this function is not an inner product on $R^3$

5.31

Theorem 5.7: Properties of inner products

Let u, v, and w be vectors in an inner product space V, and

let c be any real number

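(1) $\langle \mathbf{0}, \mathbf{v} \rangle = \langle \mathbf{v}, \mathbf{0} \rangle = 0$

(2) $\langle \mathbf{u} + \mathbf{v}, \mathbf{w} \rangle = \langle \mathbf{u}, \mathbf{w} \rangle + \langle \mathbf{v}, \mathbf{w} \rangle$

(3) $\langle \mathbf{u}, c\mathbf{v} \rangle = c\langle \mathbf{u}, \mathbf{v} \rangle$

※ To prove these properties, you can use only the basic properties of vectors and the four axioms in the definition of inner product (see Slide 5.26)

Pf:

(1) $\langle \mathbf{0}, \mathbf{v} \rangle = \langle 0\mathbf{u}, \mathbf{v} \rangle = 0\langle \mathbf{u}, \mathbf{v} \rangle = 0$ (by Axiom 3)

(2) $\langle \mathbf{u} + \mathbf{v}, \mathbf{w} \rangle = \langle \mathbf{w}, \mathbf{u} + \mathbf{v} \rangle = \langle \mathbf{w}, \mathbf{u} \rangle + \langle \mathbf{w}, \mathbf{v} \rangle = \langle \mathbf{u}, \mathbf{w} \rangle + \langle \mathbf{v}, \mathbf{w} \rangle$ (by Axioms 1, 2, and 1 in turn)

(3) $\langle \mathbf{u}, c\mathbf{v} \rangle = \langle c\mathbf{v}, \mathbf{u} \rangle = c\langle \mathbf{v}, \mathbf{u} \rangle = c\langle \mathbf{u}, \mathbf{v} \rangle$ (by Axioms 1, 3, and 1 in turn)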

5.32

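※ The definitions of norm (or length), distance, angle, orthogonality, and normalizing for general inner product spaces closely parallel those based on the dot product in Euclidean n-space

Norm (length) of u:

$$\|\mathbf{u}\| = \sqrt{\langle \mathbf{u}, \mathbf{u} \rangle}$$

Distance between u and v:

$$d(\mathbf{u}, \mathbf{v}) = \|\mathbf{u} - \mathbf{v}\| = \sqrt{\langle \mathbf{u} - \mathbf{v}, \mathbf{u} - \mathbf{v} \rangle}$$

Angle between two nonzero vectors u and v:

$$\cos\theta = \frac{\langle \mathbf{u}, \mathbf{v} \rangle}{\|\mathbf{u}\|\,\|\mathbf{v}\|}, \quad 0 \le \theta \le \pi$$

Orthogonal: ($\mathbf{u} \perp \mathbf{v}$)

u and v are orthogonal if $\langle \mathbf{u}, \mathbf{v} \rangle = 0$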

5.33

Normalizing vectors

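(1) If $\|\mathbf{v}\| = 1$, then v is called a unit vector (Note that $\|\mathbf{v}\|$ is defined as $\sqrt{\langle \mathbf{v}, \mathbf{v} \rangle}$)

(2) $\mathbf{v} \ne \mathbf{0}$ (if v is not a zero vector) $\xrightarrow{\text{Normalizing}} \dfrac{\mathbf{v}}{\|\mathbf{v}\|}$ (the unit vector in the direction of v)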

5.34

Ex 6: An inner product in the polynomial space

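For $p = a_0 + a_1 x + \cdots + a_n x^n$ and $q = b_0 + b_1 x + \cdots + b_n x^n$,

$\langle p, q \rangle = a_0 b_0 + a_1 b_1 + \cdots + a_n b_n$ is an inner product

Let $p(x) = 1 - 2x^2$ and $q(x) = 4 - 2x + x^2$ be polynomials in $P_2$

(a) $\langle p, q \rangle = ?$ (b) $\|q\| = ?$ (c) $d(p, q) = ?$

Sol:

(a) $\langle p, q \rangle = (1)(4) + (0)(-2) + (-2)(1) = 2$

(b) $\|q\| = \sqrt{\langle q, q \rangle} = \sqrt{4^2 + (-2)^2 + 1^2} = \sqrt{21}$

(c) $p - q = -3 + 2x - 3x^2$

$d(p, q) = \|p - q\| = \sqrt{\langle p - q, p - q \rangle} = \sqrt{(-3)^2 + 2^2 + (-3)^2} = \sqrt{22}$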

5.35

Properties of norm: (the same as the properties for the dot product in $R^n$ on Slide 5.2)

(1) $\|\mathbf{u}\| \ge 0$

(2) $\|\mathbf{u}\| = 0$ if and only if $\mathbf{u} = \mathbf{0}$

(3) $\|c\mathbf{u}\| = |c|\,\|\mathbf{u}\|$

Properties of distance: (the same as the properties for the dot product in $R^n$ on Slide 5.9)

(1) $d(\mathbf{u}, \mathbf{v}) \ge 0$

(2) $d(\mathbf{u}, \mathbf{v}) = 0$ if and only if $\mathbf{u} = \mathbf{v}$

(3) $d(\mathbf{u}, \mathbf{v}) = d(\mathbf{v}, \mathbf{u})$

5.36

Theorem 5.8:

Let u and v be vectors in an inner product space V

(1) Cauchy-Schwarz inequality:

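$|\langle \mathbf{u}, \mathbf{v} \rangle| \le \|\mathbf{u}\|\,\|\mathbf{v}\|$ (compare Theorem 5.4)

(2) Triangle inequality:

$\|\mathbf{u} + \mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$ (compare Theorem 5.5)

(3) Pythagorean theorem:

u and v are orthogonal if and only if

$\|\mathbf{u} + \mathbf{v}\|^2 = \|\mathbf{u}\|^2 + \|\mathbf{v}\|^2$ (compare Theorem 5.6)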

5.37

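Orthogonal projections (正交投影): For the dot product function in $R^n$, we define the orthogonal projection of u onto v to be $\text{proj}_{\mathbf{v}}\mathbf{u} = a\mathbf{v}$ (a scalar multiple of v), and the coefficient a can be derived as follows

[Figure: vectors u and v, with $\text{proj}_{\mathbf{v}}\mathbf{u} = a\mathbf{v}$, $a > 0$, along v]

Consider $a > 0$,

$$\|a\mathbf{v}\| = a\|\mathbf{v}\| = \|\mathbf{u}\| \cos\theta = \frac{\|\mathbf{u}\|\,\|\mathbf{v}\| \cos\theta}{\|\mathbf{v}\|} = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{v}\|}$$

$$\Rightarrow a = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{v}\|^2} = \frac{\mathbf{u} \cdot \mathbf{v}}{\mathbf{v} \cdot \mathbf{v}} \Rightarrow \text{proj}_{\mathbf{v}}\mathbf{u} = \frac{\mathbf{u} \cdot \mathbf{v}}{\mathbf{v} \cdot \mathbf{v}}\mathbf{v}$$

For inner product spaces:

Let u and v be two vectors in an inner product space V. If $\mathbf{v} \ne \mathbf{0}$, then the orthogonal projection of u onto v is given by

$$\text{proj}_{\mathbf{v}}\mathbf{u} = \frac{\langle \mathbf{u}, \mathbf{v} \rangle}{\langle \mathbf{v}, \mathbf{v} \rangle}\mathbf{v}$$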

5.38

Ex 10: Finding an orthogonal projection in R3

Use the Euclidean inner product in R3 to find the

orthogonal projection of u = (6, 2, 4) onto v = (1, 2, 0)

Sol:

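$\langle \mathbf{u}, \mathbf{v} \rangle = (6)(1) + (2)(2) + (4)(0) = 10$

$\langle \mathbf{v}, \mathbf{v} \rangle = 1^2 + 2^2 + 0^2 = 5$

$$\text{proj}_{\mathbf{v}}\mathbf{u} = \frac{\langle \mathbf{u}, \mathbf{v} \rangle}{\langle \mathbf{v}, \mathbf{v} \rangle}\mathbf{v} = \frac{\mathbf{u} \cdot \mathbf{v}}{\mathbf{v} \cdot \mathbf{v}}\mathbf{v} = \frac{10}{5}(1, 2, 0) = (2, 4, 0)$$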
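The projection formula under the Euclidean inner product can be sketched in Python. The function name `proj` is an illustrative choice, and the example reuses the vectors of Ex 10; the second print checks that the residual u minus its projection is orthogonal to v.

```python
def proj(u, v):
    """Orthogonal projection of u onto a nonzero vector v (dot product)."""
    uv = sum(a * b for a, b in zip(u, v))
    vv = sum(b * b for b in v)
    if vv == 0:
        raise ValueError("cannot project onto the zero vector")
    a = uv / vv  # the scalar coefficient <u, v> / <v, v>
    return tuple(a * b for b in v)

u, v = (6, 2, 4), (1, 2, 0)
p = proj(u, v)
print(p)  # (2.0, 4.0, 0.0)

# The residual u - proj_v(u) is orthogonal to v
residual = tuple(a - b for a, b in zip(u, p))
print(sum(r * b for r, b in zip(residual, v)))  # 0.0
```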

5.39

Theorem 5.9: Orthogonal projection and distance

Let u and v be two vectors in an inner product space V,

and if v ≠ 0, then

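$$d(\mathbf{u}, \text{proj}_{\mathbf{v}}\mathbf{u}) < d(\mathbf{u}, c\mathbf{v}), \quad c \ne \frac{\langle \mathbf{u}, \mathbf{v} \rangle}{\langle \mathbf{v}, \mathbf{v} \rangle}$$

[Figure: two panels showing u and v, one with the distance $d(\mathbf{u}, \text{proj}_{\mathbf{v}}\mathbf{u})$ and one with the distance $d(\mathbf{u}, c\mathbf{v})$]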

※ Theorem 5.9 can be inferred straightforwardly by the Pythagorean Theorem,

i.e., in a right triangle, the hypotenuse (斜邊) is longer than both legs (兩股)

5.40

Keywords in Section 5.2:

inner product: 內積

inner product space: 內積空間

norm: 範數

distance: 距離

angle: 夾角

orthogonal: 正交

unit vector: 單位向量

normalizing: 單位化

Cauchy-Schwarz inequality: 科西-舒瓦茲不等式

triangle inequality: 三角不等式

Pythagorean theorem: 畢氏定理

orthogonal projection: 正交投影

5.41

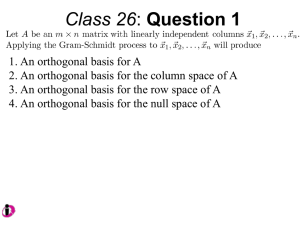

5.3 Orthonormal Bases: Gram-Schmidt Process

Orthogonal set (正交集合):

A set S of vectors in an inner product space V is called an

orthogonal set if every pair of vectors in the set is orthogonal

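$S = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\} \subseteq V$

$\langle \mathbf{v}_i, \mathbf{v}_j \rangle = 0$, for $i \ne j$

Orthonormal set (單位正交集合):

An orthogonal set in which each vector is a unit vector is called an orthonormal set

$S = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\} \subseteq V$

For $i = j$, $\langle \mathbf{v}_i, \mathbf{v}_j \rangle = \langle \mathbf{v}_i, \mathbf{v}_i \rangle = \|\mathbf{v}_i\|^2 = 1$

For $i \ne j$, $\langle \mathbf{v}_i, \mathbf{v}_j \rangle = 0$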

5.42

Note:

– If S is also a basis, then it is called an orthogonal basis (正交基底) or an orthonormal basis (單位正交基底)

– The standard basis for $R^n$ is orthonormal. For example,

$S = \{(1, 0, 0), (0, 1, 0), (0, 0, 1)\}$

is an orthonormal basis for $R^3$

This section identifies some advantages of orthonormal bases,

and develops a procedure for constructing such bases, known

as Gram-Schmidt orthonormalization process

5.43

Ex 1: A nonstandard orthonormal basis for R3

Show that the following set is an orthonormal basis

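$$S = \{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3\} = \left\{ \left(\frac{1}{\sqrt{2}}, \frac{1}{\sqrt{2}}, 0\right), \left(-\frac{\sqrt{2}}{6}, \frac{\sqrt{2}}{6}, \frac{2\sqrt{2}}{3}\right), \left(\frac{2}{3}, -\frac{2}{3}, \frac{1}{3}\right) \right\}$$

Sol:

First, show that the three vectors are mutually orthogonal

$\mathbf{v}_1 \cdot \mathbf{v}_2 = -\frac{1}{6} + \frac{1}{6} + 0 = 0$

$\mathbf{v}_1 \cdot \mathbf{v}_3 = \frac{2}{3\sqrt{2}} - \frac{2}{3\sqrt{2}} + 0 = 0$

$\mathbf{v}_2 \cdot \mathbf{v}_3 = -\frac{\sqrt{2}}{9} - \frac{\sqrt{2}}{9} + \frac{2\sqrt{2}}{9} = 0$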

5.44

Second, show that each vector is of length 1

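$\|\mathbf{v}_1\| = \sqrt{\mathbf{v}_1 \cdot \mathbf{v}_1} = \sqrt{\frac{1}{2} + \frac{1}{2} + 0} = 1$

$\|\mathbf{v}_2\| = \sqrt{\mathbf{v}_2 \cdot \mathbf{v}_2} = \sqrt{\frac{2}{36} + \frac{2}{36} + \frac{8}{9}} = 1$

$\|\mathbf{v}_3\| = \sqrt{\mathbf{v}_3 \cdot \mathbf{v}_3} = \sqrt{\frac{4}{9} + \frac{4}{9} + \frac{1}{9}} = 1$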

Thus S is an orthonormal set

Because these three vectors are linearly independent (you can

check by solving c1v1 + c2v2 + c3v3 = 0) in R3 (of dimension 3), by

Theorem 4.12 (given a vector space with dimension n, then n

linearly independent vectors can form a basis for this vector space),

these three linearly independent vectors form a basis for R3.

S is a (nonstandard) orthonormal basis for R3

5.45

Ex : An orthonormal basis for P2(x)

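In $P_2(x)$, with the inner product $\langle p, q \rangle = a_0 b_0 + a_1 b_1 + a_2 b_2$, the standard basis $B = \{1, x, x^2\}$ is orthonormal

Sol:

$\mathbf{v}_1 = 1 + 0x + 0x^2$, $\mathbf{v}_2 = 0 + x + 0x^2$, $\mathbf{v}_3 = 0 + 0x + x^2$

Then

$\langle \mathbf{v}_1, \mathbf{v}_2 \rangle = (1)(0) + (0)(1) + (0)(0) = 0$

$\langle \mathbf{v}_1, \mathbf{v}_3 \rangle = (1)(0) + (0)(0) + (0)(1) = 0$

$\langle \mathbf{v}_2, \mathbf{v}_3 \rangle = (0)(0) + (1)(0) + (0)(1) = 0$

$\|\mathbf{v}_1\| = \sqrt{\langle \mathbf{v}_1, \mathbf{v}_1 \rangle} = \sqrt{(1)(1) + (0)(0) + (0)(0)} = 1$

$\|\mathbf{v}_2\| = \sqrt{\langle \mathbf{v}_2, \mathbf{v}_2 \rangle} = \sqrt{(0)(0) + (1)(1) + (0)(0)} = 1$

$\|\mathbf{v}_3\| = \sqrt{\langle \mathbf{v}_3, \mathbf{v}_3 \rangle} = \sqrt{(0)(0) + (0)(0) + (1)(1)} = 1$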

5.46

Theorem 5.10: Orthogonal sets are linearly independent

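If $S = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ is an orthogonal set of nonzero vectors in an inner product space V, then S is linearly independent

Pf:

S is an orthogonal set of nonzero vectors, i.e., $\langle \mathbf{v}_i, \mathbf{v}_j \rangle = 0$ for $i \ne j$, and $\langle \mathbf{v}_i, \mathbf{v}_i \rangle \ne 0$

For $c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n = \mathbf{0}$ (if there is only the trivial solution for the $c_i$'s, i.e., all $c_i$'s are 0, then S is linearly independent)

$\Rightarrow \langle c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n, \mathbf{v}_i \rangle = \langle \mathbf{0}, \mathbf{v}_i \rangle = 0 \quad \forall i$

$\Rightarrow c_1\langle \mathbf{v}_1, \mathbf{v}_i \rangle + c_2\langle \mathbf{v}_2, \mathbf{v}_i \rangle + \cdots + c_i\langle \mathbf{v}_i, \mathbf{v}_i \rangle + \cdots + c_n\langle \mathbf{v}_n, \mathbf{v}_i \rangle = c_i\langle \mathbf{v}_i, \mathbf{v}_i \rangle = 0$ (because S is an orthogonal set of nonzero vectors)

$\langle \mathbf{v}_i, \mathbf{v}_i \rangle \ne 0 \Rightarrow c_i = 0 \quad \forall i$

$\therefore$ S is linearly independent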

5.47

Corollary to Theorem 5.10:

If V is an inner product space with dimension n, then any

orthogonal set of n nonzero vectors is a basis for V

1. By Theorem 5.10, if S = {v1, v2, …, vn} is an orthogonal set of n

vectors, then S is linearly independent

2. According to Theorem 4.12, if S = {v1, v2, …, vn} is a linearly

independent set of n vectors in V (with dimension n), then S is a

basis for V

※ Based on the above two arguments, it is straightforward to

derive the above corollary to Theorem 5.10

5.48

Ex 4: Using orthogonality to test for a basis

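Show that the following set is a basis for $R^4$

$$S = \{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \mathbf{v}_4\} = \{(2, 3, 2, -2), (1, 0, 0, 1), (-1, 0, 2, 1), (-1, 2, -1, 1)\}$$

Sol:

$\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \mathbf{v}_4$: nonzero vectors

$\mathbf{v}_1 \cdot \mathbf{v}_2 = 2 + 0 + 0 - 2 = 0$

$\mathbf{v}_1 \cdot \mathbf{v}_3 = -2 + 0 + 4 - 2 = 0$

$\mathbf{v}_1 \cdot \mathbf{v}_4 = -2 + 6 - 2 - 2 = 0$

$\mathbf{v}_2 \cdot \mathbf{v}_3 = -1 + 0 + 0 + 1 = 0$

$\mathbf{v}_2 \cdot \mathbf{v}_4 = -1 + 0 + 0 + 1 = 0$

$\mathbf{v}_3 \cdot \mathbf{v}_4 = 1 + 0 - 2 + 1 = 0$

$\Rightarrow$ S is orthogonal

$\Rightarrow$ S is a basis for $R^4$ (by Corollary to Theorem 5.10)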

※ The corollary to Thm. 5.10 shows an advantage of introducing the concept of

orthogonal vectors, i.e., it is not necessary to solve linear systems to test

whether S is a basis (e.g., Ex 1 on 5.44) if S is a set of orthogonal vectors

5.49

Theorem 5.11: Coordinates relative to an orthonormal basis

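If $B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ is an orthonormal basis for an inner product space V, then the unique coordinate representation of a vector w with respect to B is

$$\mathbf{w} = \langle \mathbf{w}, \mathbf{v}_1 \rangle \mathbf{v}_1 + \langle \mathbf{w}, \mathbf{v}_2 \rangle \mathbf{v}_2 + \cdots + \langle \mathbf{w}, \mathbf{v}_n \rangle \mathbf{v}_n$$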

※ The above theorem tells us that it is easy to derive the coordinate

representation of a vector relative to an orthonormal basis, which is

another advantage of using orthonormal bases

Pf:

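$B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ is an orthonormal basis for V

$\mathbf{w} = k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + \cdots + k_n\mathbf{v}_n \in V$ (unique representation from Thm. 4.9)

Since $\langle \mathbf{v}_i, \mathbf{v}_j \rangle = \begin{cases} 1 & i = j \\ 0 & i \ne j \end{cases}$, then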

5.50

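$$\begin{aligned}
\langle \mathbf{w}, \mathbf{v}_i \rangle &= \langle (k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + \cdots + k_n\mathbf{v}_n), \mathbf{v}_i \rangle \\
&= k_1\langle \mathbf{v}_1, \mathbf{v}_i \rangle + \cdots + k_i\langle \mathbf{v}_i, \mathbf{v}_i \rangle + \cdots + k_n\langle \mathbf{v}_n, \mathbf{v}_i \rangle \\
&= k_i \quad \text{for } i = 1 \text{ to } n
\end{aligned}$$

$\Rightarrow \mathbf{w} = \langle \mathbf{w}, \mathbf{v}_1 \rangle \mathbf{v}_1 + \langle \mathbf{w}, \mathbf{v}_2 \rangle \mathbf{v}_2 + \cdots + \langle \mathbf{w}, \mathbf{v}_n \rangle \mathbf{v}_n$

Note:

If $B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ is an orthonormal basis for V and $\mathbf{w} \in V$, then the corresponding coordinate matrix of w relative to B is

$$[\mathbf{w}]_B = \begin{bmatrix} \langle \mathbf{w}, \mathbf{v}_1 \rangle \\ \langle \mathbf{w}, \mathbf{v}_2 \rangle \\ \vdots \\ \langle \mathbf{w}, \mathbf{v}_n \rangle \end{bmatrix}$$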

5.51

Ex

For w = (5, –5, 2), find its coordinates relative to the standard

basis for R3

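$\langle \mathbf{w}, \mathbf{v}_1 \rangle = \mathbf{w} \cdot \mathbf{v}_1 = (5, -5, 2) \cdot (1, 0, 0) = 5$

$\langle \mathbf{w}, \mathbf{v}_2 \rangle = \mathbf{w} \cdot \mathbf{v}_2 = (5, -5, 2) \cdot (0, 1, 0) = -5$

$\langle \mathbf{w}, \mathbf{v}_3 \rangle = \mathbf{w} \cdot \mathbf{v}_3 = (5, -5, 2) \cdot (0, 0, 1) = 2$

$$\Rightarrow [\mathbf{w}]_B = \begin{bmatrix} 5 \\ -5 \\ 2 \end{bmatrix}$$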

※ In fact, it is not necessary to use Thm. 5.11 to find the coordinates relative

to the standard basis, because we know that the coordinates of a vector

relative to the standard basis are the same as the components of that vector

※ The advantage of the orthonormal basis emerges when we try to find the

coordinate matrix of a vector relative to a nonstandard orthonormal basis

(see the next slide)

5.52

Ex 5: Representing vectors relative to an orthonormal basis

Find the coordinates of w = (5, –5, 2) relative to the following

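orthonormal basis for $R^3$

$$B = \{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3\} = \left\{ \left(\tfrac{3}{5}, \tfrac{4}{5}, 0\right), \left(-\tfrac{4}{5}, \tfrac{3}{5}, 0\right), (0, 0, 1) \right\}$$

Sol:

$\langle \mathbf{w}, \mathbf{v}_1 \rangle = \mathbf{w} \cdot \mathbf{v}_1 = (5, -5, 2) \cdot \left(\tfrac{3}{5}, \tfrac{4}{5}, 0\right) = -1$

$\langle \mathbf{w}, \mathbf{v}_2 \rangle = \mathbf{w} \cdot \mathbf{v}_2 = (5, -5, 2) \cdot \left(-\tfrac{4}{5}, \tfrac{3}{5}, 0\right) = -7$

$\langle \mathbf{w}, \mathbf{v}_3 \rangle = \mathbf{w} \cdot \mathbf{v}_3 = (5, -5, 2) \cdot (0, 0, 1) = 2$

$$\Rightarrow [\mathbf{w}]_B = \begin{bmatrix} -1 \\ -7 \\ 2 \end{bmatrix}$$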
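Finding coordinates relative to an orthonormal basis needs only inner products and no linear system. The following Python sketch illustrates this with the Euclidean dot product; the function name `coordinates` is an illustrative choice, and the basis is taken as $\{(3/5, 4/5, 0), (-4/5, 3/5, 0), (0, 0, 1)\}$.

```python
def coordinates(w, orthonormal_basis):
    """Coordinates of w relative to an orthonormal basis: <w, v_i> for each v_i."""
    return [sum(a * b for a, b in zip(w, v)) for v in orthonormal_basis]

B = [(3 / 5, 4 / 5, 0), (-4 / 5, 3 / 5, 0), (0, 0, 1)]
w = (5, -5, 2)
coords = coordinates(w, B)
print(coords)  # approximately [-1, -7, 2]

# Rebuilding w from its coordinates recovers the original vector
rebuilt = [sum(k * v[i] for k, v in zip(coords, B)) for i in range(3)]
print(rebuilt)  # approximately [5, -5, 2]
```

The shortcut is valid only when the basis really is orthonormal; for a general basis the coordinates come from solving a linear system.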

5.53

The geometric intuition of the Gram-Schmidt process to find an

orthonormal basis in R2

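[Figure: $\mathbf{v}_1$ and $\mathbf{v}_2$ in the plane, with $\mathbf{w}_1 = \mathbf{v}_1$, $\text{proj}_{\mathbf{w}_1}\mathbf{v}_2$ along $\mathbf{w}_1$, and $\mathbf{w}_2$ perpendicular to $\mathbf{w}_1$]

$\{\mathbf{v}_1, \mathbf{v}_2\}$ is a basis for $R^2$

$\mathbf{w}_1 = \mathbf{v}_1$

$\mathbf{w}_2 = \mathbf{v}_2 - \text{proj}_{\mathbf{w}_1}\mathbf{v}_2$ is orthogonal to $\mathbf{w}_1 = \mathbf{v}_1$

$\left\{ \dfrac{\mathbf{w}_1}{\|\mathbf{w}_1\|}, \dfrac{\mathbf{w}_2}{\|\mathbf{w}_2\|} \right\}$ is an orthonormal basis for $R^2$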

5.54

Gram-Schmidt orthonormalization process:

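$B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ is a basis for an inner product space V

Let $S_1 = \text{span}(\{\mathbf{w}_1\})$ and $S_2 = \text{span}(\{\mathbf{w}_1, \mathbf{w}_2\})$, and so on

$\mathbf{w}_1 = \mathbf{v}_1$

$\mathbf{w}_2 = \mathbf{v}_2 - \text{proj}_{S_1}\mathbf{v}_2 = \mathbf{v}_2 - \dfrac{\langle \mathbf{v}_2, \mathbf{w}_1 \rangle}{\langle \mathbf{w}_1, \mathbf{w}_1 \rangle}\mathbf{w}_1$

$\mathbf{w}_3 = \mathbf{v}_3 - \text{proj}_{S_2}\mathbf{v}_3 = \mathbf{v}_3 - \dfrac{\langle \mathbf{v}_3, \mathbf{w}_1 \rangle}{\langle \mathbf{w}_1, \mathbf{w}_1 \rangle}\mathbf{w}_1 - \dfrac{\langle \mathbf{v}_3, \mathbf{w}_2 \rangle}{\langle \mathbf{w}_2, \mathbf{w}_2 \rangle}\mathbf{w}_2$

$\vdots$

$\mathbf{w}_n = \mathbf{v}_n - \text{proj}_{S_{n-1}}\mathbf{v}_n = \mathbf{v}_n - \displaystyle\sum_{i=1}^{n-1} \dfrac{\langle \mathbf{v}_n, \mathbf{w}_i \rangle}{\langle \mathbf{w}_i, \mathbf{w}_i \rangle}\mathbf{w}_i$

(The orthogonal projection onto a subspace is actually the sum of orthogonal projections onto the vectors in an orthogonal basis for that subspace (I will prove it on Slides 5.67 and 5.68))

$\Rightarrow B' = \{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_n\}$ is an orthogonal basis

$\Rightarrow B'' = \left\{ \dfrac{\mathbf{w}_1}{\|\mathbf{w}_1\|}, \dfrac{\mathbf{w}_2}{\|\mathbf{w}_2\|}, \ldots, \dfrac{\mathbf{w}_n}{\|\mathbf{w}_n\|} \right\}$ is an orthonormal basis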

5.55

Ex 7: Applying the Gram-Schmidt orthonormalization process

Apply the Gram-Schmidt process to the following basis for R3

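$$B = \{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3\} = \{(1, 1, 0), (1, 2, 0), (0, 1, 2)\}$$

Sol:

$\mathbf{w}_1 = \mathbf{v}_1 = (1, 1, 0)$

$\mathbf{w}_2 = \mathbf{v}_2 - \dfrac{\mathbf{v}_2 \cdot \mathbf{w}_1}{\mathbf{w}_1 \cdot \mathbf{w}_1}\mathbf{w}_1 = (1, 2, 0) - \dfrac{3}{2}(1, 1, 0) = \left(-\dfrac{1}{2}, \dfrac{1}{2}, 0\right)$

$\mathbf{w}_3 = \mathbf{v}_3 - \dfrac{\mathbf{v}_3 \cdot \mathbf{w}_1}{\mathbf{w}_1 \cdot \mathbf{w}_1}\mathbf{w}_1 - \dfrac{\mathbf{v}_3 \cdot \mathbf{w}_2}{\mathbf{w}_2 \cdot \mathbf{w}_2}\mathbf{w}_2 = (0, 1, 2) - \dfrac{1}{2}(1, 1, 0) - \dfrac{1/2}{1/2}\left(-\dfrac{1}{2}, \dfrac{1}{2}, 0\right) = (0, 0, 2)$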

5.56

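Orthogonal basis

$$B' = \{\mathbf{w}_1, \mathbf{w}_2, \mathbf{w}_3\} = \left\{ (1, 1, 0), \left(-\tfrac{1}{2}, \tfrac{1}{2}, 0\right), (0, 0, 2) \right\}$$

Orthonormal basis

$$B'' = \left\{ \frac{\mathbf{w}_1}{\|\mathbf{w}_1\|}, \frac{\mathbf{w}_2}{\|\mathbf{w}_2\|}, \frac{\mathbf{w}_3}{\|\mathbf{w}_3\|} \right\} = \left\{ \left(\tfrac{1}{\sqrt{2}}, \tfrac{1}{\sqrt{2}}, 0\right), \left(-\tfrac{1}{\sqrt{2}}, \tfrac{1}{\sqrt{2}}, 0\right), (0, 0, 1) \right\}$$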
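The Gram-Schmidt steps can be sketched in Python for the Euclidean dot product. This is an illustrative sketch, not the slides' own code: the names `dot` and `gram_schmidt` and the tolerance `1e-12` used to detect a linearly dependent input are assumptions made here. It builds the orthogonal vectors first and normalizes them afterwards.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(basis):
    """Return (orthogonal, orthonormal) lists built from a basis."""
    orthogonal = []
    for v in basis:
        w = list(v)
        # Subtract the projection of v onto each earlier orthogonal vector
        for q in orthogonal:
            coeff = dot(v, q) / dot(q, q)
            w = [wi - coeff * qi for wi, qi in zip(w, q)]
        if all(abs(x) < 1e-12 for x in w):
            raise ValueError("input vectors are linearly dependent")
        orthogonal.append(w)
    # Normalize each orthogonal vector to unit length
    orthonormal = [[x / math.sqrt(dot(w, w)) for x in w] for w in orthogonal]
    return orthogonal, orthonormal

# Basis from Ex 7
ortho, orthonormal = gram_schmidt([(1, 1, 0), (1, 2, 0), (0, 1, 2)])
print(ortho)  # [[1, 1, 0], [-0.5, 0.5, 0.0], [0.0, 0.0, 2.0]]
```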

5.57

Ex 10: Alternative form of Gram-Schmidt orthonormalization process

Find an orthonormal basis for the solution space of the

homogeneous system of linear equations

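$$\begin{aligned} x_1 + x_2 \phantom{{}+2x_3} + 7x_4 &= 0 \\ 2x_1 + x_2 + 2x_3 + 6x_4 &= 0 \end{aligned}$$

Sol:

$$\left[\begin{array}{cccc|c} 1 & 1 & 0 & 7 & 0 \\ 2 & 1 & 2 & 6 & 0 \end{array}\right] \xrightarrow{\text{G.-J. E.}} \left[\begin{array}{cccc|c} 1 & 0 & 2 & -1 & 0 \\ 0 & 1 & -2 & 8 & 0 \end{array}\right]$$

$$\Rightarrow \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} -2s + t \\ 2s - 8t \\ s \\ t \end{bmatrix} = s\begin{bmatrix} -2 \\ 2 \\ 1 \\ 0 \end{bmatrix} + t\begin{bmatrix} 1 \\ -8 \\ 0 \\ 1 \end{bmatrix}$$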

5.58

Thus one basis for the solution space is

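$B = \{\mathbf{v}_1, \mathbf{v}_2\} = \{(-2, 2, 1, 0), (1, -8, 0, 1)\}$

$\mathbf{w}_1 = \mathbf{v}_1$ and $\mathbf{u}_1 = \dfrac{\mathbf{w}_1}{\|\mathbf{w}_1\|} = \dfrac{1}{3}(-2, 2, 1, 0) = \left(-\dfrac{2}{3}, \dfrac{2}{3}, \dfrac{1}{3}, 0\right)$

$\mathbf{w}_2 = \mathbf{v}_2 - \langle \mathbf{v}_2, \mathbf{u}_1 \rangle \mathbf{u}_1$ (due to $\mathbf{w}_2 = \mathbf{v}_2 - \dfrac{\langle \mathbf{v}_2, \mathbf{u}_1 \rangle}{\langle \mathbf{u}_1, \mathbf{u}_1 \rangle}\mathbf{u}_1$ and $\langle \mathbf{u}_1, \mathbf{u}_1 \rangle = 1$)

$= (1, -8, 0, 1) - \left[ (1, -8, 0, 1) \cdot \left(-\dfrac{2}{3}, \dfrac{2}{3}, \dfrac{1}{3}, 0\right) \right] \left(-\dfrac{2}{3}, \dfrac{2}{3}, \dfrac{1}{3}, 0\right) = (-3, -4, 2, 1)$

$\mathbf{u}_2 = \dfrac{\mathbf{w}_2}{\|\mathbf{w}_2\|} = \dfrac{1}{\sqrt{30}}(-3, -4, 2, 1)$

$$\Rightarrow B'' = \left\{ \left(-\frac{2}{3}, \frac{2}{3}, \frac{1}{3}, 0\right), \left(-\frac{3}{\sqrt{30}}, -\frac{4}{\sqrt{30}}, \frac{2}{\sqrt{30}}, \frac{1}{\sqrt{30}}\right) \right\}$$

※ In this alternative form, we always normalize $\mathbf{w}_i$ to be $\mathbf{u}_i$ before processing $\mathbf{w}_{i+1}$

※ The advantage of this method is that it is easier to calculate the orthogonal projection of $\mathbf{v}_{i+1}$ onto $\mathbf{u}_1, \mathbf{u}_2, \ldots, \mathbf{u}_i$, since each $\langle \mathbf{u}_j, \mathbf{u}_j \rangle = 1$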

5.59

Alternative form of the Gram-Schmidt orthonormalization process:

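$B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ is a basis for an inner product space V

$\mathbf{u}_1 = \dfrac{\mathbf{w}_1}{\|\mathbf{w}_1\|} = \dfrac{\mathbf{v}_1}{\|\mathbf{v}_1\|}$

$\mathbf{u}_2 = \dfrac{\mathbf{w}_2}{\|\mathbf{w}_2\|}$, where $\mathbf{w}_2 = \mathbf{v}_2 - \langle \mathbf{v}_2, \mathbf{u}_1 \rangle \mathbf{u}_1$

$\mathbf{u}_3 = \dfrac{\mathbf{w}_3}{\|\mathbf{w}_3\|}$, where $\mathbf{w}_3 = \mathbf{v}_3 - \langle \mathbf{v}_3, \mathbf{u}_1 \rangle \mathbf{u}_1 - \langle \mathbf{v}_3, \mathbf{u}_2 \rangle \mathbf{u}_2$

$\vdots$

$\mathbf{u}_n = \dfrac{\mathbf{w}_n}{\|\mathbf{w}_n\|}$, where $\mathbf{w}_n = \mathbf{v}_n - \displaystyle\sum_{i=1}^{n-1} \langle \mathbf{v}_n, \mathbf{u}_i \rangle \mathbf{u}_i$

$\Rightarrow \{\mathbf{u}_1, \mathbf{u}_2, \ldots, \mathbf{u}_n\}$ is an orthonormal basis for V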

5.60

Keywords in Section 5.3:

orthogonal set: 正交集合

orthonormal set: 單範正交集合

orthogonal basis: 正交基底

orthonormal basis: 單位正交基底

linearly independent: 線性獨立

Gram-Schmidt Process: Gram-Schmidt過程

5.61

5.4 Mathematical Models and Least Squares Analysis

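Orthogonal complement (正交補集) of S:

Let S be a subspace of an inner product space V

(a) A vector v in V is said to be orthogonal to S if v is orthogonal to every vector in S, i.e., $\langle \mathbf{v}, \mathbf{w} \rangle = 0$, $\forall \mathbf{w} \in S$

(b) The set of all vectors in V that are orthogonal to S is called the orthogonal complement of S

$$S^\perp = \{\mathbf{v} \in V \mid \langle \mathbf{v}, \mathbf{w} \rangle = 0, \ \forall \mathbf{w} \in S\}$$

($S^\perp$ is read "S perp")

Notes:

(1) $\{\mathbf{0}\}^\perp = V$

(2) $V^\perp = \{\mathbf{0}\}$

(This is because $\langle \mathbf{0}, \mathbf{v} \rangle = 0$ for any vector v in V)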

5.62

Notes:

Given S to be a subspace of V,

(1) $S^\perp$ is a subspace of V

(2) $S \cap S^\perp = \{\mathbf{0}\}$

(3) $(S^\perp)^\perp = S$

Ex:

If $V = R^2$ and S = x-axis, then

(1) $S^\perp$ = y-axis is a subspace of $R^2$

(2) $S \cap S^\perp = \{(0, 0)\}$

(3) $(S^\perp)^\perp = S$

※ Any vector in the x-axis (or y-axis) can be represented as (t, 0) (or (0, s)). It is straightforward to see that any vector in the y-axis is orthogonal to every vector in the x-axis since (0, s) · (t, 0) = 0

※ On Slide 4.32, any subset consisting of all points on a line passing through the origin is a subspace of $R^2$. Therefore, both the x- and y-axes are subspaces of $R^2$

※ In the above example, it can be observed that any vector in $R^2$ can be uniquely written as a sum of a vector from S and a vector from $S^\perp$

5.63

Direct sum (直和):

Let $S_1$ and $S_2$ be two subspaces of V. If each vector $\mathbf{x} \in V$ can be uniquely written as a sum of a vector $\mathbf{v}_1$ from $S_1$ and a vector $\mathbf{v}_2$ from $S_2$, i.e., $\mathbf{x} = \mathbf{v}_1 + \mathbf{v}_2$, then V is the direct sum of $S_1$ and $S_2$, and we can write

$$V = S_1 \oplus S_2$$

5.64

Theorem 5.13: Properties of orthogonal subspaces

Let S be a subspace of V (with dimension n). Then the

following properties are true

(1) $\dim(S) + \dim(S^\perp) = n$

(2) $V = S \oplus S^\perp$

(3) $(S^\perp)^\perp = S$

※ You can memorize this theorem by understanding the example on Slide 5.63 or by considering V as the xyz-space (with dimension of 3), S as the xy-plane (with dimension of 2), and $S^\perp$ as the z-axis (with dimension of 1)

※ The theorem states that every inner product space V can be represented as the direct sum of a subspace S and its corresponding orthogonal complement $S^\perp$

5.65

※ In Section 5.2, we studied the orthogonal projection of

one vector onto another vector. That can be generalized to

the orthogonal projection of a vector onto a subspace S

1. The vector v1 is the projection of v onto the subspace S

and denoted as v1 = projSv

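2. $\mathbf{v}_2 = \mathbf{v} - \mathbf{v}_1 = \mathbf{v} - \text{proj}_S\mathbf{v}$, which implies that the vector $\mathbf{v}_2 = \mathbf{v} - \text{proj}_S\mathbf{v}$ is orthogonal to the subspace S, i.e., $\mathbf{v}_2 \in S^\perp$

※ For example, v = (1, 2, 3), S is the xy-plane with the standard basis {e1, e2} = {(1, 0, 0), (0, 1, 0)}, and $S^\perp$ is the z-axis with the standard basis {e3} = {(0, 0, 1)} $\Rightarrow \mathbf{v} = (1, 2, 0) + (0, 0, 3) = \mathbf{v}_1 + \mathbf{v}_2$

※ Geometrically speaking, $\mathbf{v}_1 = (1, 2, 0) = \text{proj}_S\mathbf{v}$, so $\mathbf{v}_2 = \mathbf{v} - \mathbf{v}_1 = \mathbf{v} - \text{proj}_S\mathbf{v} = (0, 0, 3)$. In addition, $\mathbf{v}_2 \perp \text{proj}_S\mathbf{v}$ since (0, 0, 3) · (1, 2, 0) = 0

※ Since any vector $\mathbf{v} \in V$ can be expressed as $\mathbf{v}_1 + \mathbf{v}_2$ with $\mathbf{v}_1 \in S$ and $\mathbf{v}_2 \in S^\perp$, we can obtain $V = S \oplus S^\perp$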

5.66

Theorem 5.14: Projection onto a subspace

(Here we will formally prove that the orthogonal projection onto a subspace is the sum

of orthogonal projections onto the vectors in an orthonormal basis for that subspace)

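If $\{\mathbf{u}_1, \mathbf{u}_2, \ldots, \mathbf{u}_t\}$ is an orthonormal basis for the subspace S of V, and $\mathbf{v} \in V$, then

$$\text{proj}_S\mathbf{v} = \langle \mathbf{v}, \mathbf{u}_1 \rangle \mathbf{u}_1 + \langle \mathbf{v}, \mathbf{u}_2 \rangle \mathbf{u}_2 + \cdots + \langle \mathbf{v}, \mathbf{u}_t \rangle \mathbf{u}_t$$

Pf:

Let $\mathbf{v} \in V$, $\mathbf{v} = \mathbf{v}_1 + \mathbf{v}_2$, $\mathbf{v}_1 \in S$, $\mathbf{v}_2 \in S^\perp$

(Since the vector space V can be expressed as the direct sum of S and $S^\perp$, according to the results on Slide 5.66, $\mathbf{v}_1$ is the orthogonal projection of v onto S, and $\mathbf{v}_2$ is orthogonal to any vector in S.)

$\because \{\mathbf{u}_1, \ldots, \mathbf{u}_t\}$ is an orthonormal basis for S

$\Rightarrow$ we can have $\mathbf{v}_1 = c_1\mathbf{u}_1 + \cdots + c_t\mathbf{u}_t$ and thus $\mathbf{v} = \mathbf{v}_1 + \mathbf{v}_2 = c_1\mathbf{u}_1 + \cdots + c_t\mathbf{u}_t + \mathbf{v}_2$

$\Rightarrow \langle \mathbf{v}, \mathbf{u}_i \rangle = \langle (c_1\mathbf{u}_1 + \cdots + c_t\mathbf{u}_t + \mathbf{v}_2), \mathbf{u}_i \rangle = c_i\langle \mathbf{u}_i, \mathbf{u}_i \rangle = c_i$, for i = 1 to t

$\Rightarrow \text{proj}_S\mathbf{v} = \mathbf{v}_1 = c_1\mathbf{u}_1 + \cdots + c_t\mathbf{u}_t = \langle \mathbf{v}, \mathbf{u}_1 \rangle \mathbf{u}_1 + \langle \mathbf{v}, \mathbf{u}_2 \rangle \mathbf{u}_2 + \cdots + \langle \mathbf{v}, \mathbf{u}_t \rangle \mathbf{u}_t$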

5.67

Corollary to Theorem 5.14: Consider an orthogonal basis

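If $\{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_t\}$ is an orthogonal basis for the subspace S of V, and $\mathbf{v} \in V$, then

$$\text{proj}_S\mathbf{v} = \frac{\langle \mathbf{v}, \mathbf{w}_1 \rangle}{\langle \mathbf{w}_1, \mathbf{w}_1 \rangle}\mathbf{w}_1 + \frac{\langle \mathbf{v}, \mathbf{w}_2 \rangle}{\langle \mathbf{w}_2, \mathbf{w}_2 \rangle}\mathbf{w}_2 + \cdots + \frac{\langle \mathbf{v}, \mathbf{w}_t \rangle}{\langle \mathbf{w}_t, \mathbf{w}_t \rangle}\mathbf{w}_t$$

Pf:

If $\mathbf{u}_1 = \dfrac{\mathbf{w}_1}{\|\mathbf{w}_1\|}$, $\mathbf{u}_2 = \dfrac{\mathbf{w}_2}{\|\mathbf{w}_2\|}$, …, and $\mathbf{u}_t = \dfrac{\mathbf{w}_t}{\|\mathbf{w}_t\|}$,

we can derive $\text{proj}_S\mathbf{v} = \langle \mathbf{v}, \mathbf{u}_1 \rangle \mathbf{u}_1 + \langle \mathbf{v}, \mathbf{u}_2 \rangle \mathbf{u}_2 + \cdots + \langle \mathbf{v}, \mathbf{u}_t \rangle \mathbf{u}_t$.

Replacing $\mathbf{u}_i$ with $\dfrac{\mathbf{w}_i}{\|\mathbf{w}_i\|}$,

$$\langle \mathbf{v}, \mathbf{u}_i \rangle \mathbf{u}_i = \left\langle \mathbf{v}, \frac{\mathbf{w}_i}{\|\mathbf{w}_i\|} \right\rangle \frac{\mathbf{w}_i}{\|\mathbf{w}_i\|} = \frac{\langle \mathbf{v}, \mathbf{w}_i \rangle}{\|\mathbf{w}_i\|^2}\mathbf{w}_i = \frac{\langle \mathbf{v}, \mathbf{w}_i \rangle}{\langle \mathbf{w}_i, \mathbf{w}_i \rangle}\mathbf{w}_i$$

$$\Rightarrow \text{proj}_S\mathbf{v} = \frac{\langle \mathbf{v}, \mathbf{w}_1 \rangle}{\langle \mathbf{w}_1, \mathbf{w}_1 \rangle}\mathbf{w}_1 + \frac{\langle \mathbf{v}, \mathbf{w}_2 \rangle}{\langle \mathbf{w}_2, \mathbf{w}_2 \rangle}\mathbf{w}_2 + \cdots + \frac{\langle \mathbf{v}, \mathbf{w}_t \rangle}{\langle \mathbf{w}_t, \mathbf{w}_t \rangle}\mathbf{w}_t$$

※ So, the orthogonal projection onto a subspace is the sum of orthogonal projections onto the vectors in an orthogonal (or orthonormal) basis for that subspace

※ This formula is what we use in the Gram-Schmidt orthonormalization process on Slide 5.55

5.68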

Ex 5: Projection onto a subspace (在子空間的投影)

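$\mathbf{w}_1 = (0, 3, 1)$, $\mathbf{w}_2 = (2, 0, 0)$, $\mathbf{v} = (1, 1, 3)$

Find the projection of v onto the subspace $W = \text{span}(\{\mathbf{w}_1, \mathbf{w}_2\})$

Sol:

$\{\mathbf{w}_1, \mathbf{w}_2\}$: an orthogonal basis for W

$\{\mathbf{u}_1, \mathbf{u}_2\} = \left\{ \dfrac{\mathbf{w}_1}{\|\mathbf{w}_1\|}, \dfrac{\mathbf{w}_2}{\|\mathbf{w}_2\|} \right\} = \left\{ \left(0, \dfrac{3}{\sqrt{10}}, \dfrac{1}{\sqrt{10}}\right), (1, 0, 0) \right\}$: an orthonormal basis for W

$$\text{proj}_W\mathbf{v} = \langle \mathbf{v}, \mathbf{u}_1 \rangle \mathbf{u}_1 + \langle \mathbf{v}, \mathbf{u}_2 \rangle \mathbf{u}_2 = \frac{6}{\sqrt{10}}\left(0, \frac{3}{\sqrt{10}}, \frac{1}{\sqrt{10}}\right) + (1, 0, 0) = \left(1, \frac{9}{5}, \frac{3}{5}\right)$$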
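The projection onto a subspace can be computed straight from an orthogonal basis, using the coefficients $\langle \mathbf{v}, \mathbf{w}_i \rangle / \langle \mathbf{w}_i, \mathbf{w}_i \rangle$ without normalizing first. The Python sketch below assumes the Euclidean dot product; the names `dot` and `proj_subspace` are illustrative, and the function does not itself verify that the supplied basis is orthogonal.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def proj_subspace(v, orthogonal_basis):
    """Projection of v onto span(orthogonal_basis); the basis must be orthogonal."""
    result = [0.0] * len(v)
    for w in orthogonal_basis:
        coeff = dot(v, w) / dot(w, w)
        result = [r + coeff * wi for r, wi in zip(result, w)]
    return result

# Data from Ex 5: w1 = (0, 3, 1), w2 = (2, 0, 0), v = (1, 1, 3)
v = (1, 1, 3)
basis = [(0, 3, 1), (2, 0, 0)]
p = proj_subspace(v, basis)
print(p)  # approximately [1, 1.8, 0.6], i.e. (1, 9/5, 3/5)

# v - proj_W(v) should be orthogonal to every basis vector of W
residual = [a - b for a, b in zip(v, p)]
print([dot(residual, w) for w in basis])  # approximately [0, 0]
```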

5.69

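[Figure, left panel: v, the scalar multiples cu of u, and $\text{proj}_{\mathbf{u}}\mathbf{v}$, with $\|\mathbf{v} - \text{proj}_{\mathbf{u}}\mathbf{v}\| < \|\mathbf{v} - c\mathbf{u}\|$. Right panel: v, a vector u in the subspace S, and $\text{proj}_S\mathbf{v}$, with $\|\mathbf{v} - \text{proj}_S\mathbf{v}\| < \|\mathbf{v} - \mathbf{u}\|$]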

※ Theorem 5.9 tells us that among all the scalar multiples of a vector

u, i.e., cu, the orthogonal projection of v onto u is the one closest

to v (see the left figure)

※ This property is also true for projections onto subspaces. That is,

among all the vectors in the subspace S, the vector projSv is the

vector closest to v (see the right figure and Theorem 5.15 on the

next slide)

5.70

Theorem 5.15: Orthogonal projection and distance

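Let S be a subspace of an inner product space V, and $\mathbf{v} \in V$. Then for all $\mathbf{u} \in S$, $\mathbf{u} \ne \text{proj}_S\mathbf{v}$,

$$\|\mathbf{v} - \text{proj}_S\mathbf{v}\| < \|\mathbf{v} - \mathbf{u}\|$$

or $\|\mathbf{v} - \text{proj}_S\mathbf{v}\| = \min\limits_{\mathbf{u} \in S} \|\mathbf{v} - \mathbf{u}\|$ (Among all the vectors in the subspace S, the vector $\text{proj}_S\mathbf{v}$ is closest to v)

Pf:

$\mathbf{v} - \mathbf{u} = (\mathbf{v} - \text{proj}_S\mathbf{v}) + (\text{proj}_S\mathbf{v} - \mathbf{u})$

$\because \text{proj}_S\mathbf{v} - \mathbf{u} \in S$ and $\mathbf{v} - \text{proj}_S\mathbf{v} \in S^\perp$

$\Rightarrow (\mathbf{v} - \text{proj}_S\mathbf{v}) \perp (\text{proj}_S\mathbf{v} - \mathbf{u})$

$\Rightarrow \langle \mathbf{v} - \text{proj}_S\mathbf{v}, \text{proj}_S\mathbf{v} - \mathbf{u} \rangle = 0$

Thus the Pythagorean theorem in Theorem 5.6 can be applied,

$$\|\mathbf{v} - \mathbf{u}\|^2 = \|\mathbf{v} - \text{proj}_S\mathbf{v}\|^2 + \|\text{proj}_S\mathbf{v} - \mathbf{u}\|^2.$$

Since $\mathbf{u} \ne \text{proj}_S\mathbf{v}$, the second term on the right-hand side is positive, and we can have

$$\|\mathbf{v} - \text{proj}_S\mathbf{v}\| < \|\mathbf{v} - \mathbf{u}\|$$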

5.71

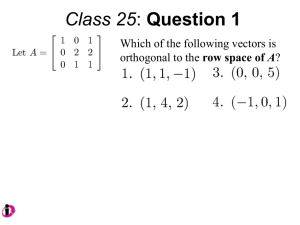

Theorem 5.16: Fundamental subspaces (基本子空間) of a matrix, including $CS(A)$, $CS(A^T)$, $NS(A)$, and $NS(A^T)$

If A is an m×n matrix, then

(1) $CS(A) \perp NS(A^T)$ (or expressed as $CS(A)^\perp = NS(A^T)$)

Pf: Consider any $\mathbf{v} \in CS(A)$ and any $\mathbf{u} \in NS(A^T)$, and the goal is to prove $\mathbf{v} \cdot \mathbf{u} = 0$. Write $A^{(i)}$ for the i-th column of A.

$$\mathbf{u} \in NS(A^T) \Rightarrow A^T\mathbf{u} = \begin{bmatrix} (A^{(1)})^T \\ \vdots \\ (A^{(n)})^T \end{bmatrix}\mathbf{u} = \begin{bmatrix} (A^{(1)})^T\mathbf{u} \\ \vdots \\ (A^{(n)})^T\mathbf{u} \end{bmatrix} = \begin{bmatrix} 0 \\ \vdots \\ 0 \end{bmatrix}$$

$$\Rightarrow \mathbf{v} \cdot \mathbf{u} = (c_1 A^{(1)} + \cdots + c_n A^{(n)}) \cdot \mathbf{u} = (c_1 A^{(1)} \cdot \mathbf{u} + \cdots + c_n A^{(n)} \cdot \mathbf{u}) = (0 + \cdots + 0) = 0$$

(2) $CS(A) \oplus NS(A^T) = R^m$ (because $CS(A) \oplus CS(A)^\perp = R^m$ in Thm. 5.13)

(3) $CS(A^T) \perp NS(A)$ (or expressed as $CS(A^T)^\perp = NS(A)$) (Proved by setting $B = A^T$, so that B satisfies the first property)

(4) $CS(A^T) \oplus NS(A) = R^n$ (because $CS(A^T) \oplus CS(A^T)^\perp = R^n$ in Thm. 5.13)

※ Note that according to Theorem 5.13, we know that every inner product space V can be represented as the direct sum of a subspace S and its corresponding $S^\perp$. Since $CS(A)$ ($CS(A^T)$) is a subspace of $R^m$ ($R^n$), we can derive $CS(A) \oplus CS(A)^\perp = R^m$ ($CS(A^T) \oplus CS(A^T)^\perp = R^n$)

※ Ex. 6 on the next slide verifies that these four properties are true

5.72

Ex 6: (Fundamental subspaces)

Find the four fundamental subspaces of the matrix

$$A = \begin{bmatrix} 1 & 2 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} \quad \text{(reduced row-echelon form)}$$

Sol:

$CS(A) = \text{span}(\{(1, 0, 0, 0), (0, 1, 0, 0)\})$ is a subspace of $R^4$

$CS(A^T) = RS(A) = \text{span}(\{(1, 2, 0), (0, 0, 1)\})$ is a subspace of $R^3$

$NS(A) = \text{span}(\{(-2, 1, 0)\})$ is a subspace of $R^3$

(The nullspace of A is the solution space of the homogeneous system Ax = 0, i.e., you need to solve Ax = 0 to derive (–2, 1, 0))

5.73

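$$[A^T \mid 0] = \left[\begin{array}{cccc|c} 1 & 0 & 0 & 0 & 0 \\ 2 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 \end{array}\right] \xrightarrow{\text{G.-J. E.}} \left[\begin{array}{cccc|c} 1 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\right]$$

(the third and fourth variables are free: $x_3 = s$, $x_4 = t$)

$NS(A^T) = \mathrm{span}(\{(0, 0, 1, 0), (0, 0, 0, 1)\})$ is a subspace of $R^4$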

Check:

$CS(A) \perp NS(A^T)$: (a(1, 0, 0, 0) + b(0, 1, 0, 0)) · (c(0, 0, 1, 0) + d(0, 0, 0, 1)) = 0

$CS(A) \oplus NS(A^T) = R^4$: v = v1 + v2 = (a(1, 0, 0, 0) + b(0, 1, 0, 0)) + (c(0, 0, 1, 0) + d(0, 0, 0, 1))

$CS(A^T) \perp NS(A)$: (e(1, 2, 0) + f(0, 0, 1)) · (g(–2, 1, 0)) = (e, 2e, f) · (–2g, g, 0) = 0

$CS(A^T) \oplus NS(A) = R^3$: w = w1 + w2 = (e(1, 2, 0) + f(0, 0, 1)) + (g(–2, 1, 0)). (Since (1, 2, 0), (0, 0, 1), (–2, 1, 0) are mutually orthogonal, these three vectors form a basis for $R^3$ according to the Corollary of Theorem 5.10)
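The check in Ex 6 can also be carried out numerically. The following is a minimal Python sketch, assuming the bases found above; the helper names `dot` and `orthogonal` are illustrative and not part of the slides. It relies on the fact that two subspaces are orthogonal when every basis vector of one is orthogonal to every basis vector of the other.

```python
from itertools import product

def dot(u, v):
    """Euclidean dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

# Matrix and bases taken from Ex 6.
A = [[1, 2, 0], [0, 0, 1], [0, 0, 0], [0, 0, 0]]
cs_a = [(1, 0, 0, 0), (0, 1, 0, 0)]    # basis of CS(A), in R^4
ns_at = [(0, 0, 1, 0), (0, 0, 0, 1)]   # basis of NS(A^T), in R^4
cs_at = [(1, 2, 0), (0, 0, 1)]         # basis of CS(A^T) = RS(A), in R^3
ns_a = [(-2, 1, 0)]                    # basis of NS(A), in R^3

def orthogonal(basis1, basis2):
    """True if every vector of basis1 is orthogonal to every vector of basis2."""
    return all(dot(u, v) == 0 for u, v in product(basis1, basis2))

# (-2, 1, 0) really solves Ax = 0: every row of A is orthogonal to it.
in_nullspace = all(dot(row, ns_a[0]) == 0 for row in A)

print(in_nullspace)
print(orthogonal(cs_a, ns_at), orthogonal(cs_at, ns_a))
# Dimension counts behind the two direct sums (m = 4, n = 3).
print(len(cs_a) + len(ns_at), len(cs_at) + len(ns_a))
```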

5.74

Ex 3:

Let W be a subspace of $R^4$ with $W = \mathrm{span}(\{w_1, w_2\})$, where $w_1 = (1, 2, 1, 0)$ and $w_2 = (0, 0, 0, 1)$.

(a) Find a basis for W

(b) Find a basis for the orthogonal complement of W

Sol:

$$A = [\, w_1 \ \ w_2 \,] = \begin{bmatrix} 1 & 0 \\ 2 & 0 \\ 1 & 0 \\ 0 & 1 \end{bmatrix} \xrightarrow{\text{G.-J. E.}} \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 0 & 0 \\ 0 & 0 \end{bmatrix} \quad \text{(reduced row-echelon form)}$$

(a) $W = CS(A)$, and since G.-J. E. will not affect the dependency among columns, we can conclude that $\{(1, 2, 1, 0), (0, 0, 0, 1)\}$ is linearly independent and is a basis for W

5.75

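(b) $W^{\perp} = CS(A)^{\perp} = NS(A^T)$

$$A^T = \begin{bmatrix} 1 & 2 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$

(The nullspace of $A^T$ is the solution space of the homogeneous system $A^T x = 0$)

$$x = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} -2s - t \\ s \\ t \\ 0 \end{bmatrix} = s \begin{bmatrix} -2 \\ 1 \\ 0 \\ 0 \end{bmatrix} + t \begin{bmatrix} -1 \\ 0 \\ 1 \\ 0 \end{bmatrix}$$

$\Rightarrow \{(-2, 1, 0, 0), (-1, 0, 1, 0)\}$ is a basis for $W^{\perp}$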

Check for Theorem 5.13:

(1) $\dim(W) + \dim(W^{\perp}) = \dim(R^4)$

(2) $W \oplus W^{\perp} = R^4$

v = v1 + v2 = (c1(1, 2, 1, 0) + c2(0, 0, 0, 1)) + (c3(–2, 1, 0, 0) + c4(–1, 0, 1, 0)). (Since these four vectors are linearly independent, they form a basis for $R^4$, i.e., for each v, there is a unique solution set of ci. In other words, $R^4$ is the direct sum of W and $W^{\perp}$)
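The two checks for Ex 3 can be reproduced with a short Python sketch. It assumes the bases found above and uses a hand-written cofactor-expansion determinant (the helper `det` is illustrative): a nonzero determinant of the 4×4 matrix whose rows are the four basis vectors confirms that they are linearly independent.

```python
def dot(u, v):
    """Euclidean dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def det(m):
    """Determinant by cofactor expansion along the first row (fine for a 4x4)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

w_basis = [[1, 2, 1, 0], [0, 0, 0, 1]]          # basis of W
w_perp_basis = [[-2, 1, 0, 0], [-1, 0, 1, 0]]   # basis of the orthogonal complement

# Every basis vector of W is orthogonal to every basis vector of the complement.
all_orthogonal = all(dot(w, p) == 0 for w in w_basis for p in w_perp_basis)

# Nonzero determinant: the four vectors are independent and span R^4.
d = det(w_basis + w_perp_basis)
print(all_orthogonal, d != 0)
```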

5.76

Least squares problem (最小平方問題):

$Ax = b$, where $A$ is $m \times n$, $x$ is $n \times 1$, and $b$ is $m \times 1$

(A system of linear equations)

(1) When the system is consistent, we can use Gaussian elimination with back substitution to solve for x

(2) When the system is inconsistent, only the “best possible” solution of the system can be found, i.e., a solution x for which the difference (or the error) between Ax and b is smallest

Note: Theorem 4.19 states that the system of linear equations Ax = b is

consistent if and only if b is in the column space of A

5.77

Least squares solution:

Given a system Ax = b of m linear equations in n unknowns, the least squares problem is to find a vector x in $R^n$ that minimizes the distance between Ax and b, i.e., $\|Ax - b\|$, with respect to the Euclidean inner product in $R^m$ (both Ax and b are vectors in $R^m$). Such a vector is called a least squares solution of Ax = b

※ The term least squares comes from the fact that minimizing $\|Ax - b\|$ is equivalent to minimizing $\|Ax - b\|^2$ = (Ax – b) · (Ax – b), which is a sum of squared errors

5.78

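$A \in M_{m \times n}$, $x \in R^n$

In Thm. 4.19 on Slides 4.97 and 4.98, Ax can be expressed as $x_1 A^{(1)} + x_2 A^{(2)} + \cdots + x_n A^{(n)}$, so $Ax \in CS(A)$

That is, find $\hat{x}_1 A^{(1)} + \hat{x}_2 A^{(2)} + \cdots + \hat{x}_n A^{(n)}$, which is closest to b

Define $W = CS(A)$. The problem of finding $\hat{x}$ such that $A\hat{x}$ is closest to b is equivalent to finding the vector in $CS(A)$ closest to b, that is, $\mathrm{proj}_W b$

(Figure: the vector b, its projection $A\hat{x} = \mathrm{proj}_W b$ in the subspace W, and the error vector $b - A\hat{x}$)

Thus $A\hat{x} = \mathrm{proj}_W b$ (the best solution $\hat{x}$ should satisfy this equation)

$\Rightarrow (b - \mathrm{proj}_W b) = (b - A\hat{x}) \perp W \Rightarrow (b - A\hat{x}) \perp CS(A)$

$\Rightarrow b - A\hat{x} \in CS(A)^{\perp} = NS(A^T)$

$\Rightarrow A^T (b - A\hat{x}) = 0$ (The nullspace of $A^T$ is the solution space of the homogeneous system $A^T x = 0$)

$\Rightarrow A^T A \hat{x} = A^T b$ (the n×n linear system of normal equations (一般方程式) associated with Ax = b)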

5.79

Note:

The problem of finding the least squares solution of $Ax = b$ is equivalent to the problem of finding an exact solution of the associated normal system $A^T A \hat{x} = A^T b$

Theorem associated with the least squares solution:

For any linear system $Ax = b$, the associated normal system

$$A^T A \hat{x} = A^T b$$

is consistent, and all solutions of the normal system are least squares solutions of Ax = b. In other words, if W is the column space of A, and $\hat{x}$ is a least squares solution of Ax = b, then the orthogonal projection of b on W is $A\hat{x}$, i.e.,

$$\mathrm{proj}_W b = A\hat{x}$$

5.80

Theorem for the normal equation:

If A is an m×n matrix with linearly independent column vectors, then for every m×1 vector b, the linear system Ax = b has a unique least squares solution. This solution is given by

$$\hat{x} = (A^T A)^{-1} A^T b$$

Meanwhile, if W denotes the column space of A, then the orthogonal projection of b onto W is

$$\mathrm{proj}_W b = A\hat{x} = A (A^T A)^{-1} A^T b$$

5.81

Ex 7: Solving the normal equations

Find the least squares solution of the following system

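$$Ax = b: \quad \begin{bmatrix} 1 & 1 \\ 1 & 2 \\ 1 & 3 \end{bmatrix} \begin{bmatrix} c_0 \\ c_1 \end{bmatrix} = \begin{bmatrix} 0 \\ 1 \\ 3 \end{bmatrix}$$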

and find the orthogonal projection of b onto the column space

of A

5.82

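Sol:

$$A^T A = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 2 & 3 \end{bmatrix} \begin{bmatrix} 1 & 1 \\ 1 & 2 \\ 1 & 3 \end{bmatrix} = \begin{bmatrix} 3 & 6 \\ 6 & 14 \end{bmatrix}$$

$$A^T b = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 2 & 3 \end{bmatrix} \begin{bmatrix} 0 \\ 1 \\ 3 \end{bmatrix} = \begin{bmatrix} 4 \\ 11 \end{bmatrix}$$

the corresponding normal system

$$A^T A \hat{x} = A^T b: \quad \begin{bmatrix} 3 & 6 \\ 6 & 14 \end{bmatrix} \begin{bmatrix} \hat{c}_0 \\ \hat{c}_1 \end{bmatrix} = \begin{bmatrix} 4 \\ 11 \end{bmatrix}$$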

5.83

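the least squares solution of Ax = b

$$\hat{x} = \begin{bmatrix} \hat{c}_0 \\ \hat{c}_1 \end{bmatrix} = \begin{bmatrix} -5/3 \\ 3/2 \end{bmatrix}$$

the orthogonal projection of b onto the column space of A

$$\mathrm{proj}_{CS(A)} b = A\hat{x} = \begin{bmatrix} 1 & 1 \\ 1 & 2 \\ 1 & 3 \end{bmatrix} \begin{bmatrix} -5/3 \\ 3/2 \end{bmatrix} = \begin{bmatrix} -1/6 \\ 8/6 \\ 17/6 \end{bmatrix}$$

※ If you find an orthogonal basis for CS(A) by performing the Gram-Schmidt process and then calculate $\mathrm{proj}_{CS(A)} b$ directly, you will derive the same result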
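The arithmetic of Ex 7 can be reproduced exactly with Python's `fractions` module. This is a minimal sketch rather than a general solver: it forms $A^T A$ and $A^T b$, then solves the 2×2 normal system with Cramer's rule. The helper names `transpose`, `matmul`, and `matvec` are illustrative.

```python
from fractions import Fraction as F

A = [[F(1), F(1)], [F(1), F(2)], [F(1), F(3)]]
b = [F(0), F(1), F(3)]

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(p, q):
    return [[sum(a * c for a, c in zip(row, col)) for col in zip(*q)] for row in p]

def matvec(m, v):
    return [sum(a * x for a, x in zip(row, v)) for row in m]

At = transpose(A)
AtA = matmul(At, A)   # left-hand side of the normal system
Atb = matvec(At, b)   # right-hand side of the normal system

# Solve the 2x2 normal system A^T A x = A^T b with Cramer's rule.
det = AtA[0][0] * AtA[1][1] - AtA[0][1] * AtA[1][0]
c0 = (Atb[0] * AtA[1][1] - AtA[0][1] * Atb[1]) / det
c1 = (AtA[0][0] * Atb[1] - Atb[0] * AtA[1][0]) / det
x_hat = [c0, c1]

# Orthogonal projection of b onto CS(A).
proj = matvec(A, x_hat)
print(x_hat, proj)
```

Cramer's rule is used only because the system is 2×2; for larger systems Gauss-Jordan elimination is the more practical choice.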

5.84

※ The above problem is equivalent to finding a line y = c0+c1x that “best fits” the three points (1, 0), (2, 1), and (3, 3) on the xy-plane.

※ This analysis is also called the least squares regression analysis, in

which we can find the “best fit” linear relationship between x and y, and

next we can estimate y given the different values of x.

※ The matrix representation for the three equations corresponding to (1, 0),

(2, 1), and (3, 3) is as follows

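$$\begin{aligned} 0 &= c_0 + c_1 \cdot 1 \\ 1 &= c_0 + c_1 \cdot 2 \\ 3 &= c_0 + c_1 \cdot 3 \end{aligned} \;\Rightarrow\; \begin{bmatrix} 0 \\ 1 \\ 3 \end{bmatrix} = \begin{bmatrix} 1 & 1 \\ 1 & 2 \\ 1 & 3 \end{bmatrix} \begin{bmatrix} c_0 \\ c_1 \end{bmatrix} \;\Rightarrow\; Y = XC$$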

※ Since the above system of linear equations is inconsistent, only the “best possible” solution of the system can be found, i.e., a solution C that minimizes the difference between XC and Y

※ According to the theorem on Slide 5.81, the solution of C should be $\hat{C} = (X^T X)^{-1} X^T Y$ ($Y \equiv b$, $X \equiv A$, and $C \equiv x$), which is exactly the same as the formula of the least squares regression in Section 2.5

5.85

※ The results imply the least squares regression line for (1, 0), (2, 1), and (3, 3)

is y = – (5/3) + (3/2)x

※ Since the “best fit” rather than the “exact” relationship is considered, an

error term e should be introduced to obtain the exact equations

corresponding to (1, 0), (2, 1), and (3, 3).

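$$\begin{aligned} 0 &= \hat{c}_0 + \hat{c}_1 \cdot 1 + e_1 \\ 1 &= \hat{c}_0 + \hat{c}_1 \cdot 2 + e_2 \\ 3 &= \hat{c}_0 + \hat{c}_1 \cdot 3 + e_3 \end{aligned} \;\Rightarrow\; \begin{bmatrix} 0 \\ 1 \\ 3 \end{bmatrix} = \begin{bmatrix} 1 & 1 \\ 1 & 2 \\ 1 & 3 \end{bmatrix} \begin{bmatrix} -5/3 \\ 3/2 \end{bmatrix} + \begin{bmatrix} 1/6 \\ -1/3 \\ 1/6 \end{bmatrix} \;\Rightarrow\; Y = X\hat{C} + E$$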

※ The minimized sum of squared errors is

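$$E^T E = \begin{bmatrix} 1/6 & -1/3 & 1/6 \end{bmatrix} \begin{bmatrix} 1/6 \\ -1/3 \\ 1/6 \end{bmatrix} = \frac{1}{6}$$

※ Note that the square root of $E^T E$ is the distance between Y and $X\hat{C}$, i.e.,

$$\sqrt{(0 - (-1/6))^2 + (1 - (8/6))^2 + (3 - (17/6))^2} = \sqrt{1/6}$$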
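The error vector and the minimized sum of squared errors can be verified with a few lines of Python. The sketch below takes $\hat{C} = (-5/3, 3/2)$ from the example as given and also confirms that the error vector is orthogonal to both columns of X, which is exactly the condition $X^T E = 0$ behind the normal equations. Variable names are illustrative.

```python
from fractions import Fraction as F
import math

X = [[1, 1], [1, 2], [1, 3]]
Y = [F(0), F(1), F(3)]
C_hat = [F(-5, 3), F(3, 2)]   # least squares solution from the example

# Fitted values X * C_hat and the error vector E = Y - X * C_hat.
fitted = [sum(x * c for x, c in zip(row, C_hat)) for row in X]
E = [y - f for y, f in zip(Y, fitted)]

# Sum of squared errors E^T E and the distance between Y and X * C_hat.
sse = sum(e * e for e in E)
distance = math.sqrt(sse)

# X^T E: the error vector is orthogonal to each column of X.
Xt_E = [sum(row[j] * e for row, e in zip(X, E)) for j in range(2)]
print(E, sse, distance, Xt_E)
```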

5.86

Ex: Prediction of world population

The following table shows the world population (in billions)

for six different years

Year:        1980  1985  1990  1995  2000  2005

Population:  4.5   4.8   5.3   5.7   6.1   6.5

Let x = 0 represent the year 1980. Find the least squares regression quadratic polynomial $y = c_0 + c_1 x + c_2 x^2$ for these data and use the model to estimate the population for the year 2010

※ The least squares regression analysis can be employed to solve not

only the univariate regression in the previous example but also

multivariate regressions, i.e., y = c0 + c1x1 + c2x2+…+ cnxn, where y is

the dependent variable and xi are explanatory variables

5.87

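By substituting the data points (0, 4.5), (5, 4.8), (10, 5.3), (15, 5.7), (20, 6.1), and (25, 6.5) into the quadratic polynomial $y = c_0 + c_1 x + c_2 x^2$, we can produce the least squares problem

$$Ax = b: \quad \begin{bmatrix} 1 & 0 & 0 \\ 1 & 5 & 25 \\ 1 & 10 & 100 \\ 1 & 15 & 225 \\ 1 & 20 & 400 \\ 1 & 25 & 625 \end{bmatrix} \begin{bmatrix} c_0 \\ c_1 \\ c_2 \end{bmatrix} = \begin{bmatrix} 4.5 \\ 4.8 \\ 5.3 \\ 5.7 \\ 6.1 \\ 6.5 \end{bmatrix}$$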

The normal equations are

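$$A^T A \hat{x} = A^T b: \quad \begin{bmatrix} 6 & 75 & 1375 \\ 75 & 1375 & 28125 \\ 1375 & 28125 & 611875 \end{bmatrix} \begin{bmatrix} \hat{c}_0 \\ \hat{c}_1 \\ \hat{c}_2 \end{bmatrix} = \begin{bmatrix} 32.9 \\ 447 \\ 8435 \end{bmatrix}$$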

5.88

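The solution is

$$\hat{x} = \begin{bmatrix} \hat{c}_0 \\ \hat{c}_1 \\ \hat{c}_2 \end{bmatrix} = \begin{bmatrix} 4.47 \\ 0.08 \\ 0.00007 \end{bmatrix} \approx \begin{bmatrix} 4.5 \\ 0.08 \\ 0 \end{bmatrix}$$

Evaluating this polynomial at x = 30 gives the estimate of the world population for the year 2010

$y \approx 4.5 + 0.08(30) = 6.9$ billion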
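The population example can be reproduced with a short Python sketch. It builds the normal equations from the six data points and solves them by Gauss-Jordan elimination in exact rational arithmetic. The `solve` helper is an illustrative implementation, not something taken from the slides.

```python
from fractions import Fraction as F

xs = [0, 5, 10, 15, 20, 25]   # years since 1980
ys = [F("4.5"), F("4.8"), F("5.3"), F("5.7"), F("6.1"), F("6.5")]   # billions

# Design matrix with columns 1, x, x^2, then the normal equations.
A = [[F(1), F(x), F(x) ** 2] for x in xs]
AtA = [[sum(row[i] * row[j] for row in A) for j in range(3)] for i in range(3)]
Atb = [sum(row[i] * y for row, y in zip(A, ys)) for i in range(3)]

def solve(m, rhs):
    """Gauss-Jordan elimination on the augmented matrix [m | rhs]."""
    n = len(m)
    aug = [list(row) + [r] for row, r in zip(m, rhs)]
    for col in range(n):
        # Find a row with a nonzero pivot and move it into place.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]

c0, c1, c2 = solve(AtA, Atb)
estimate_2010 = c0 + c1 * 30 + c2 * 30 ** 2
print(float(c0), float(c1), float(c2), float(estimate_2010))
```

The unrounded coefficients give an estimate slightly above the value obtained from the rounded polynomial 4.5 + 0.08x; both agree to one decimal place.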

5.89

In the field of management, the least squares regression is commonly used to find the linear relationship between the dependent variable (y) and explanatory variables (xi), for example,

– The relationship between the growth of the cost of purchasing material (y) and the growth of the oil price (x)

  – If the regression result is y = 0.01 + 3x, it means that if the oil price rises by 1%, then the firm’s cost on material on average increases by 3%

– The relationship between the excess returns of an individual stock i (y = ri – rf) and the excess returns of the market index (x = rM – rf) (single-factor model or CAPM)

  – If the regression result is y = 0.05 + 1.2x, it means that if the market excess return rises by 1%, then the excess return of the individual stock on average increases by 1.2%

  – The coefficient for x, which is 1.2 here, is called beta

Expected market excess return next year:      9%     10%   11%

Expected individual excess return next year:  15.8%  17%   18.2%
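As an illustration of how beta comes out of a regression, the following Python sketch fits a least squares line through the three (market, stock) pairs in the table above. These three points lie exactly on y = 0.05 + 1.2x, so the fit should recover that line; real return data would scatter around it. The function `fit_line` is an illustrative helper that uses the standard closed-form formulas for simple linear regression.

```python
from fractions import Fraction as F

def fit_line(xs, ys):
    """Least squares line y = alpha + beta * x (closed-form simple regression)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    beta = sxy / sxx
    alpha = mean_y - beta * mean_x
    return alpha, beta

# Expected excess returns from the table (market, individual stock).
market = [F(9, 100), F(10, 100), F(11, 100)]
stock = [F(158, 1000), F(17, 100), F(182, 1000)]

alpha, beta = fit_line(market, stock)
print(float(alpha), float(beta))
```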

5.90

Homework 1: Find betas for three firms

Solve the least squares regression problem as follows

$r_{i,t} - r_{f,t} = \alpha_i + \beta_i (r_{M,t} - r_{f,t}) + e_t$

– ri,t and rM,t are total returns of the asset i and the market index on each trading day t, which include both capital gains and dividend income

– The risk free rate rf,t is approximated by 1-month Treasury yields

– It is inappropriate to calculate returns according to closing prices, and you need to find adjusted closing prices to compute the adjusted (total) return

S&P 500 total return index can be downloaded from the course website

Adjusted closing prices of S&P 500 components can be downloaded from the finance page of U.S. Yahoo

Since ri,t and rM,t are daily returns, convert them to annualized returns

U.S. Department of the Treasury: http://www.treasury.gov/resourcecenter/data-chart-center/interest-rates/Pages/TextView.aspx?data=yield

Today is assumed to be Sept. 1, 2014 and it is required to employ the prior two-year historical daily returns to solve αi and βi for each firm

5.91

Bonus: construct a portfolio rp = w1r1 + w2r2 + w3r3 to replicate

the expected return and beta of the market index in the prior two

years, i.e., solving w1, w2, and w3 in the following system

$$\begin{aligned} w_1 E(r_1) + w_2 E(r_2) + w_3 E(r_3) &= E(r_M) \\ w_1 \beta_1 + w_2 \beta_2 + w_3 \beta_3 &= \beta_M = 1 \\ w_1 + w_2 + w_3 &= 1 \end{aligned}$$

(Note that the expectations are approximated by the sample averages)
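The 3×3 system for the weights can be solved directly once the sample averages and betas are known. The sketch below uses Cramer's rule with exact fractions. All numbers in it (expected returns, betas, and the market expected return) are hypothetical values chosen only for illustration and are not data from the homework.

```python
from fractions import Fraction as F

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def cramer3(m, rhs):
    """Solve a 3x3 linear system with Cramer's rule."""
    base = det3(m)
    if base == 0:
        raise ValueError("singular system: the weights are not uniquely determined")
    sols = []
    for col in range(3):
        replaced = [row[:col] + [r] + row[col + 1:] for row, r in zip(m, rhs)]
        sols.append(det3(replaced) / base)
    return sols

# Hypothetical inputs (assumptions for illustration only).
exp_ret = [F("0.06"), F("0.14"), F("0.09")]   # E(r1), E(r2), E(r3)
betas = [F("0.7"), F("1.4"), F("0.9")]        # beta1, beta2, beta3
exp_ret_mkt = F("0.095")                      # E(rM)

system = [exp_ret, betas, [F(1), F(1), F(1)]]
rhs = [exp_ret_mkt, F(1), F(1)]               # beta of the market index is 1
w1, w2, w3 = cramer3(system, rhs)
print(float(w1), float(w2), float(w3))
```

With these made-up inputs all three weights are positive; with real data some weights may turn out negative, which corresponds to a short position.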

– In-sample test: verify that rp indeed has the same average return and beta as the market portfolio for the prior two years, i.e., verify that the expected return and beta of a portfolio are the weighted averages of the expected returns and betas of the component stocks

– Out-of-sample test: compare the average return and beta of this

portfolio and the market index for the next two months and tell me

what you observe

Total points for this homework is 10. The basic requirement is 7

points, and the bonus is 3 points

5.92

Keywords in Section 5.4:

orthogonal to W: 正交於W

orthogonal complement: 正交補集

direct sum: 直和

projection onto a subspace: 在子空間的投影

fundamental subspaces: 基本子空間

least squares problem: 最小平方問題

normal equations: 一般方程式

5.93

5.5 Applications of Inner Product Spaces

Least Squares Approximations for a function

– An important application: use a polynomial function to

approximate the probability density function (pdf) for the

standard normal distribution, and then you can derive the

approximation for the cumulative distribution function

(cdf) of the standard normal distribution

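$$g(x) = a_0 + a_1 x + a_2 x^2 + a_3 x^3 + a_4 x^4 \approx n(x) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2}$$

$$N(c) = \int_{-\infty}^{c} n(x)\,dx = \int_{-\infty}^{c} \frac{1}{\sqrt{2\pi}} e^{-x^2/2}\,dx \approx \int^{c} (a_0 + a_1 x + a_2 x^2 + a_3 x^3 + a_4 x^4)\,dx$$

$$= a_0 c + \tfrac{1}{2} a_1 c^2 + \tfrac{1}{3} a_2 c^3 + \tfrac{1}{4} a_3 c^4 + \tfrac{1}{5} a_4 c^5 + k$$

(k can be solved by requiring that $a_0 x + \tfrac{1}{2} a_1 x^2 + \tfrac{1}{3} a_2 x^3 + \tfrac{1}{4} a_3 x^4 + \tfrac{1}{5} a_4 x^5 + k$ equals 1 at the upper limit of x)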

5.94

Calculate N(c) by the Monte Carlo Simulation

– Monte Carlo Simulation: generate some random scenarios and

conduct further analysis based on these scenarios

– By definition, N(c) = prob(x ≤ c) for x ~ N(0,1)

– Draw, for example, 1000 random samples from a standard normal distribution x ~ N(0,1)

– Then N(c) can be estimated with #(x ≤ c) / 1000
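The counting estimator above is straightforward to sketch in Python instead of Excel. The code below draws standard normal samples with `random.gauss`, estimates N(c) as the fraction of samples not exceeding c, and compares it with the exact value written in terms of the error function. The sample size, seed, and function names are illustrative choices.

```python
import math
import random

def normal_cdf(c):
    """Exact standard normal cdf N(c), written with the error function."""
    return 0.5 * (1.0 + math.erf(c / math.sqrt(2.0)))

def mc_normal_cdf(c, n_samples, seed=0):
    """Estimate N(c) as the fraction of N(0,1) draws that are <= c."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_samples) if rng.gauss(0.0, 1.0) <= c)
    return hits / n_samples

c = 1.0
estimate = mc_normal_cdf(c, n_samples=20000)
exact = normal_cdf(c)
print(estimate, exact)
```

The standard error of the estimate shrinks like the inverse square root of the number of samples, so 1000 draws typically give roughly two correct decimal places.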

Draw random samples from N(0,1) in Excel

– Rand(): draw uniformly distributed random samples from [0,1]

(The probabilities for x equal to any

values between 0 and 1 are the same)

5.95

– Normsinv(): inverse of the cumulative distribution function of the standard normal distribution

※ Normsinv(Rand()) can draw random samples from the standard normal distribution

(Figure: the cumulative distribution function N(x) plotted against x, with values between 0 and 1 on the vertical axis)

The function Rand() draws a random sample from [0,1]. Then we use the inverse function $N^{-1}$(Rand()) to derive a simulated value of x from the standard normal distribution, where N(x) is the cumulative distribution function of the standard normal distribution.
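The same inverse-transform idea can be sketched in Python, where `random.random()` plays the role of Rand() and `statistics.NormalDist().inv_cdf` plays the role of Normsinv(). The helper name, seed, and sample size below are illustrative.

```python
import random
from statistics import NormalDist, fmean, pstdev

def draw_standard_normal(rng):
    """Inverse-transform sampling: push a uniform draw through N^{-1}."""
    u = rng.random()
    while u == 0.0:   # inv_cdf needs 0 < u < 1, and random() can return 0.0
        u = rng.random()
    return NormalDist().inv_cdf(u)

rng = random.Random(42)
samples = [draw_standard_normal(rng) for _ in range(20000)]

# The sample mean and standard deviation should be close to 0 and 1.
sample_mean = fmean(samples)
sample_sd = pstdev(samples)
print(sample_mean, sample_sd)
```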

5.96

Analyze a project with expanding and aborting options by the

Monte Carlo Simulation

– Time 0: initial investment $V_0$

– Time $t_1$: the value of the project is $V_1 \sim N(V_0 (1+k)^{t_1}, \sigma_1)$, and the firm can double the investment if $V_1 \geq u$ (i.e., $V_1 \to 2V_1$), abort the investment if $V_1 \leq d$ (i.e., $V_1 \to 0$), or otherwise maintain the same investment amount such that $V_1 \to V_1$, where k is the expected growth rate of the investment

– Time $t_2$: the value of the project is $V_2 \sim N(V_1 (1+k)^{(t_2 - t_1)}, \sigma_2)$

※ Through the Monte Carlo Simulation, you can generate

thousands of scenarios for the final payoff of the project, and

further analyze the associated mean and s.d. of the payoff of

the project
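A rough Python sketch of this simulation follows. Several details are assumptions made for illustration and are not stated in the slides: $\sigma_1$ and $\sigma_2$ are treated as standard deviations, an aborted project is assumed to pay exactly 0 at $t_2$, and all numerical parameter values are hypothetical.

```python
import random
from statistics import fmean, pstdev

def adjust(v1, u, d):
    """Option rule at time t1: double if v1 >= u, abort if v1 <= d, else keep."""
    if v1 >= u:
        return 2.0 * v1
    if v1 <= d:
        return 0.0
    return v1

def simulate_payoffs(v0, k, t1, t2, sigma1, sigma2, u, d, n_paths, seed=0):
    """Generate n_paths scenarios of the time-t2 project value."""
    rng = random.Random(seed)
    payoffs = []
    for _ in range(n_paths):
        v1 = rng.gauss(v0 * (1 + k) ** t1, sigma1)
        v1_adj = adjust(v1, u, d)
        if v1_adj == 0.0:
            payoffs.append(0.0)   # assumption: an aborted project pays nothing
            continue
        payoffs.append(rng.gauss(v1_adj * (1 + k) ** (t2 - t1), sigma2))
    return payoffs

# Hypothetical parameters, chosen only to make the sketch runnable.
payoffs = simulate_payoffs(v0=100.0, k=0.1, t1=1, t2=2, sigma1=20.0,
                           sigma2=30.0, u=130.0, d=90.0, n_paths=5000)
mean_payoff = fmean(payoffs)
sd_payoff = pstdev(payoffs)
print(mean_payoff, sd_payoff)
```

Changing the thresholds u and d and rerunning the simulation shows how the expanding and aborting options shift the mean and s.d. of the payoff.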

5.97