
Dirac Notation and Spectral Decomposition

Michele Mosca

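“Review”: Dirac notation

For any vector $|\psi\rangle$, we let $\langle\psi|$ denote $|\psi\rangle^\dagger$, the conjugate transpose of $|\psi\rangle$.

We denote by $\langle\phi|\psi\rangle = \langle\phi|\,|\psi\rangle$ the inner product between two vectors $|\phi\rangle$ and $|\psi\rangle$.

$\langle\psi|$ defines a linear function that maps
$$|\phi\rangle \mapsto \langle\psi|\phi\rangle$$
(i.e. $\langle\psi|\,\big(|\phi\rangle\big) = \langle\psi|\phi\rangle$: it maps any state $|\phi\rangle$ to the coefficient of its $|\psi\rangle$ component).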
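A minimal NumPy sketch of these conventions, assuming kets are stored as complex column vectors. The states `psi` and `phi` and the helper `bra` are hypothetical illustrations, not part of the slides. `np.vdot` conjugates its first argument, so it computes the same inner product.

```python
import numpy as np

# Kets as complex column vectors of shape (2, 1); the example states are hypothetical.
psi = np.array([[1], [1j]]) / np.sqrt(2)    # |psi> = (|0> + i|1>)/sqrt(2)
phi = np.array([[1], [0]], dtype=complex)   # |phi> = |0>


def bra(ket):
    """Return <ket| as the conjugate transpose of |ket> (hypothetical helper)."""
    return ket.conj().T


# <psi|phi> is the (1x1) matrix product of the bra <psi| with the ket |phi>.
inner = (bra(psi) @ phi).item()

# np.vdot conjugates its first argument, so it gives the same number.
inner_vdot = np.vdot(psi, phi)

print(inner, inner_vdot)
```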

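More Dirac notation

$|\psi\rangle\langle\psi|$ defines a linear operator that maps
$$|\psi\rangle\langle\psi|\,|\phi\rangle = |\psi\rangle\langle\psi|\phi\rangle = \big(\langle\psi|\phi\rangle\big)\,|\psi\rangle.$$
Since $\langle\psi|\phi\rangle$ is a scalar, we can move it to the front.

(I.e. for a unit vector $|\psi\rangle$, $|\psi\rangle\langle\psi|$ projects a state to its $|\psi\rangle$ component. Recall: this projection operator also corresponds to the “density matrix” for $|\psi\rangle$.)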
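A short numerical check of the projector, assuming a hypothetical unit vector $|\psi\rangle = (|0\rangle + |1\rangle)/\sqrt2$ and an arbitrary state $|\phi\rangle$. It shows that $|\psi\rangle\langle\psi|\,|\phi\rangle$ equals $\langle\psi|\phi\rangle\,|\psi\rangle$ and that applying the projector twice changes nothing.

```python
import numpy as np

# Hypothetical unit vector |psi> and an arbitrary normalized state |phi>.
psi = np.array([[1], [1]], dtype=complex) / np.sqrt(2)
phi = np.array([[0.6], [0.8j]])

# |psi><psi| is the outer product of the ket with its conjugate transpose.
proj = psi @ psi.conj().T

# Applying it to |phi> gives (<psi|phi>) |psi>: the |psi> component of |phi>.
component = proj @ phi
coeff = (psi.conj().T @ phi).item()

print(np.allclose(component, coeff * psi))  # the scalar moves to the front
print(np.allclose(proj @ proj, proj))       # projecting twice changes nothing
```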

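More Dirac notation

More generally, we can also have operators like $|\theta\rangle\langle\psi|$:
$$|\theta\rangle\langle\psi|\,|\phi\rangle = |\theta\rangle\langle\psi|\phi\rangle = \big(\langle\psi|\phi\rangle\big)\,|\theta\rangle$$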
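The same check for a general outer product, under the hypothetical choice $|\theta\rangle = |1\rangle$ and $|\psi\rangle = |0\rangle$, so the operator is $|1\rangle\langle 0|$. The result should be the scalar $\langle\psi|\phi\rangle$ times $|\theta\rangle$.

```python
import numpy as np

ket0 = np.array([[1], [0]], dtype=complex)
ket1 = np.array([[0], [1]], dtype=complex)

# Hypothetical choice: |theta> = |1>, |psi> = |0>, so the operator is |1><0|.
theta, psi = ket1, ket0
op = theta @ psi.conj().T

phi = 0.6 * ket0 + 0.8 * ket1                # an arbitrary state
lhs = op @ phi                               # (|theta><psi|) |phi>
rhs = (psi.conj().T @ phi).item() * theta    # (<psi|phi>) |theta>
print(np.allclose(lhs, rhs))
```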

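Example of this Dirac notation

For example, the one-qubit NOT gate corresponds to the operator $|0\rangle\langle 1| + |1\rangle\langle 0|$, e.g.
$$\mathrm{NOT}\,|0\rangle = \big(|0\rangle\langle 1| + |1\rangle\langle 0|\big)|0\rangle = |0\rangle\langle 1|0\rangle + |1\rangle\langle 0|0\rangle = 0\cdot|0\rangle + 1\cdot|1\rangle = |1\rangle.$$

The same calculation as a sum of matrices applied to a ket vector:
$$\left[\begin{pmatrix}0&1\\0&0\end{pmatrix} + \begin{pmatrix}0&0\\1&0\end{pmatrix}\right]\begin{pmatrix}1\\0\end{pmatrix} = \begin{pmatrix}0\\0\end{pmatrix} + \begin{pmatrix}0\\1\end{pmatrix} = \begin{pmatrix}0\\1\end{pmatrix} = |1\rangle$$

This is one more notation for calculating a state from a state and an operator.

The NOT gate is a 1-qubit unitary operation.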
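A sketch that builds NOT directly from the two outer products, applies it to the basis kets, and checks unitarity. The helper `outer` is a hypothetical name for $|a\rangle\langle b|$.

```python
import numpy as np

ket0 = np.array([[1], [0]], dtype=complex)
ket1 = np.array([[0], [1]], dtype=complex)


def outer(a, b):
    """|a><b| as a matrix (hypothetical helper)."""
    return a @ b.conj().T


# NOT = |0><1| + |1><0|, built as a sum of two outer-product matrices.
NOT = outer(ket0, ket1) + outer(ket1, ket0)

print(np.allclose(NOT @ ket0, ket1), np.allclose(NOT @ ket1, ket0))
# Unitarity check: NOT^dagger NOT = I
print(np.allclose(NOT.conj().T @ NOT, np.eye(2)))
```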

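Special unitaries:

Pauli Matrices in new notation

The NOT operation is often called the $X$ or $\sigma_X$ operation.
$$X \equiv \sigma_X \equiv \mathrm{NOT} = \begin{pmatrix}0&1\\1&0\end{pmatrix} = |0\rangle\langle 1| + |1\rangle\langle 0|$$
$$Z \equiv \sigma_Z \equiv \text{signflip} = \begin{pmatrix}1&0\\0&-1\end{pmatrix} = |0\rangle\langle 0| - |1\rangle\langle 1|$$
$$Y \equiv \sigma_Y = \begin{pmatrix}0&-i\\i&0\end{pmatrix} = -i\,|0\rangle\langle 1| + i\,|1\rangle\langle 0|$$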
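A quick consistency check that each Dirac-notation sum equals the written-out Pauli matrix, and that each one is unitary and Hermitian. The helper `outer` is hypothetical.

```python
import numpy as np

ket0 = np.array([[1], [0]], dtype=complex)
ket1 = np.array([[0], [1]], dtype=complex)


def outer(a, b):
    """|a><b| as a matrix (hypothetical helper)."""
    return a @ b.conj().T


# Pauli matrices written as sums of outer products (Dirac notation).
X = outer(ket0, ket1) + outer(ket1, ket0)
Z = outer(ket0, ket0) - outer(ket1, ket1)
Y = -1j * outer(ket0, ket1) + 1j * outer(ket1, ket0)

# Each one is unitary (S^dagger S = I) and Hermitian (S = S^dagger).
for name, S in [("X", X), ("Y", Y), ("Z", Z)]:
    print(name, np.allclose(S.conj().T @ S, np.eye(2)), np.allclose(S, S.conj().T))
```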

Recall: Special unitaries: Pauli Matrices in new representation

These expressions are representations of unitary operators. What is $e^{-iHt}$?

It helps to start with the spectral decomposition theorem.

Spectral decomposition

Definition: an operator (or matrix) $M$ is “normal” if $MM^\dagger = M^\dagger M$.

E.g. unitary matrices $U$ satisfy $UU^\dagger = U^\dagger U = I$.

E.g. density matrices are also normal, since they satisfy $\rho = \rho^\dagger$ (i.e. they are “Hermitian”).

Remember: unitary operators and density matrices are normal, so they can be decomposed.
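A minimal normality test, assuming a hypothetical helper `is_normal` and three illustrative matrices: the NOT gate (unitary), a hand-picked density matrix (Hermitian, trace 1, positive eigenvalues), and the shear matrix $\begin{pmatrix}1&1\\0&1\end{pmatrix}$, which is a standard non-normal example.

```python
import numpy as np


def is_normal(M, tol=1e-12):
    """Return True if M M^dagger == M^dagger M up to tol (hypothetical helper)."""
    Md = M.conj().T
    return np.allclose(M @ Md, Md @ M, atol=tol)


U = np.array([[0, 1], [1, 0]], dtype=complex)      # unitary (NOT gate)
rho = np.array([[0.75, 0.25j], [-0.25j, 0.25]])    # hypothetical density matrix
N = np.array([[1, 1], [0, 1]], dtype=complex)      # non-normal shear matrix

print(is_normal(U), is_normal(rho), is_normal(N))  # True True False
```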

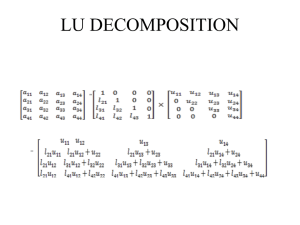

Spectral decomposition Theorem

Theorem: For any normal matrix $M$, there is a unitary matrix $P$ so that $M = P\Lambda P^\dagger$, where $\Lambda$ is a diagonal matrix.

The diagonal entries of $\Lambda$ are the eigenvalues.

The columns of $P$ encode the eigenvectors.
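A numerical illustration of the theorem for a Hermitian (hence normal) matrix chosen for illustration. `np.linalg.eigh` handles Hermitian matrices only; for a general normal matrix (e.g. a non-Hermitian unitary) a different routine would be needed, for instance a complex Schur decomposition (`scipy.linalg.schur(..., output='complex')`), whose triangular factor is diagonal when the input is normal.

```python
import numpy as np

# A Hermitian (hence normal) matrix chosen for illustration.
M = np.array([[2, 1 - 1j], [1 + 1j, 3]])

# eigh returns real eigenvalues in ascending order and a unitary matrix
# whose columns are orthonormal eigenvectors.
eigvals, P = np.linalg.eigh(M)
Lam = np.diag(eigvals)

print(eigvals)
print(np.allclose(P.conj().T @ P, np.eye(2)))     # P is unitary
print(np.allclose(P @ Lam @ P.conj().T, M))       # M = P Lambda P^dagger
```

For this matrix the trace is 5 and the determinant is $6 - |1-i|^2 = 4$, so the eigenvalues are 1 and 4.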

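Example: Spectral decomposition of the NOT gate

$X|0\rangle = |1\rangle$ and $X|1\rangle = |0\rangle$, so in the basis $\{|0\rangle, |1\rangle\}$
$$X = \begin{pmatrix}0&1\\1&0\end{pmatrix} = \begin{pmatrix}\frac{1}{\sqrt2}&\frac{1}{\sqrt2}\\ \frac{1}{\sqrt2}&-\frac{1}{\sqrt2}\end{pmatrix}\begin{pmatrix}1&0\\0&-1\end{pmatrix}\begin{pmatrix}\frac{1}{\sqrt2}&\frac{1}{\sqrt2}\\ \frac{1}{\sqrt2}&-\frac{1}{\sqrt2}\end{pmatrix}$$
(here $P^\dagger = P$).

The columns of $P$ are the eigenvectors:
$$X\,\frac{|0\rangle+|1\rangle}{\sqrt2} = \frac{|0\rangle+|1\rangle}{\sqrt2}, \qquad X\,\frac{|0\rangle-|1\rangle}{\sqrt2} = -\frac{|0\rangle-|1\rangle}{\sqrt2}.$$

So in the eigenbasis $\{|+\rangle, |-\rangle\}$, with $|\pm\rangle = (|0\rangle \pm |1\rangle)/\sqrt2$,
$$X = \begin{pmatrix}1&0\\0&-1\end{pmatrix}.$$
This is the middle matrix $\Lambda$ in the above decomposition.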
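A numerical check of this example: the columns of $P$ are $|+\rangle$ and $|-\rangle$, they have eigenvalues $+1$ and $-1$, and changing to that basis recovers the middle matrix.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)

# Columns of P are |+> = (|0>+|1>)/sqrt(2) and |-> = (|0>-|1>)/sqrt(2).
P = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
Lam = np.diag([1, -1])  # X written in the {|+>, |->} basis

plus, minus = P[:, [0]], P[:, [1]]
print(np.allclose(X @ plus, plus))            # eigenvalue +1
print(np.allclose(X @ minus, -minus))         # eigenvalue -1
print(np.allclose(P @ Lam @ P.conj().T, X))   # X = P Lambda P^dagger
print(np.allclose(P.conj().T @ X @ P, Lam))   # middle matrix recovered
```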

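Spectral decomposition: matrix from column vectors

$$P = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n}\\ a_{21} & a_{22} & \cdots & a_{2n}\\ \vdots & \vdots & \ddots & \vdots\\ a_{n1} & a_{n2} & \cdots & a_{nn}\end{pmatrix} = \begin{pmatrix} |\psi_1\rangle & |\psi_2\rangle & \cdots & |\psi_n\rangle \end{pmatrix}$$

Column vectors: the $i$-th column of $P$ is the eigenvector $|\psi_i\rangle$.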

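Spectral decomposition: eigenvalues on diagonal

$$\Lambda = \begin{pmatrix}\lambda_1 & & & \\ & \lambda_2 & & \\ & & \ddots & \\ & & & \lambda_n\end{pmatrix}$$

Eigenvalues on the diagonal (all off-diagonal entries are 0).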

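Spectral decomposition: matrix as row vectors

$$P^\dagger = \begin{pmatrix} a_{11}^* & a_{21}^* & \cdots & a_{n1}^*\\ a_{12}^* & a_{22}^* & \cdots & a_{n2}^*\\ \vdots & \vdots & \ddots & \vdots\\ a_{1n}^* & a_{2n}^* & \cdots & a_{nn}^*\end{pmatrix} = \begin{pmatrix}\langle\psi_1|\\ \langle\psi_2|\\ \vdots\\ \langle\psi_n|\end{pmatrix}$$

Adjoint matrix = row vectors: the $i$-th row of $P^\dagger$ is $\langle\psi_i|$.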

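Spectral decomposition: using row and column vectors

From the theorem,
$$M = P\Lambda P^\dagger = \begin{pmatrix}|\psi_1\rangle & |\psi_2\rangle & \cdots & |\psi_n\rangle\end{pmatrix} \begin{pmatrix}\lambda_1 & & \\ & \ddots & \\ & & \lambda_n\end{pmatrix} \begin{pmatrix}\langle\psi_1|\\ \langle\psi_2|\\ \vdots\\ \langle\psi_n|\end{pmatrix} = \sum_i \lambda_i\,|\psi_i\rangle\langle\psi_i|.$$

To see the last step, write $\Lambda = \sum_i \lambda_i E_{ii}$, where $E_{ii}$ has a 1 in the $i$-th row and $i$-th column and 0 everywhere else. Then $P E_{ii} P^\dagger = |\psi_i\rangle\langle\psi_i|$, since $E_{ii}$ picks out the $i$-th column of $P$ and the $i$-th row of $P^\dagger$.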
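A sketch that rebuilds a matrix from its eigenvalue-weighted projectors $\lambda_i|\psi_i\rangle\langle\psi_i|$, using the same illustrative Hermitian matrix as an assumption. Note that `np.outer` does not conjugate, so the bra side is conjugated explicitly.

```python
import numpy as np

M = np.array([[2, 1 - 1j], [1 + 1j, 3]])   # Hermitian example
eigvals, P = np.linalg.eigh(M)

# Sum of lambda_i |psi_i><psi_i|, where |psi_i> is the i-th column of P.
M_rebuilt = sum(
    lam * np.outer(P[:, i], P[:, i].conj())
    for i, lam in enumerate(eigvals)
)
print(np.allclose(M_rebuilt, M))
```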

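Verifying eigenvectors and eigenvalues

Multiply $P\Lambda P^\dagger$ on the right by the state vector $|\psi_2\rangle$:
$$P\Lambda P^\dagger|\psi_2\rangle = \begin{pmatrix}|\psi_1\rangle & \cdots & |\psi_n\rangle\end{pmatrix}\begin{pmatrix}\lambda_1 & & \\ & \ddots & \\ & & \lambda_n\end{pmatrix}\begin{pmatrix}\langle\psi_1|\psi_2\rangle\\ \langle\psi_2|\psi_2\rangle\\ \vdots\\ \langle\psi_n|\psi_2\rangle\end{pmatrix}$$

Because the eigenvectors are orthonormal, the last vector is $(0, 1, 0, \ldots, 0)^T$, so
$$P\Lambda P^\dagger|\psi_2\rangle = \begin{pmatrix}|\psi_1\rangle & \cdots & |\psi_n\rangle\end{pmatrix}\begin{pmatrix}0\\ \lambda_2\\ \vdots\\ 0\end{pmatrix} = \lambda_2\,|\psi_2\rangle.$$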
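The two steps of this verification, checked numerically on the same illustrative Hermitian matrix (an assumption): $P^\dagger|\psi_2\rangle$ is the second standard basis vector, and $M|\psi_2\rangle = \lambda_2|\psi_2\rangle$. Python indexes from 0, so $|\psi_2\rangle$ is column 1.

```python
import numpy as np

M = np.array([[2, 1 - 1j], [1 + 1j, 3]])
eigvals, P = np.linalg.eigh(M)

i = 1                 # |psi_2> is column index 1 (0-based)
psi_i = P[:, i]

# P^dagger |psi_i> lists the inner products <psi_j|psi_i> = delta_ij,
# i.e. the i-th standard basis vector.
e_i = P.conj().T @ psi_i
print(np.round(e_i, 12))

# Hence P Lambda P^dagger |psi_i> = lambda_i |psi_i>.
print(np.allclose(M @ psi_i, eigvals[i] * psi_i))
```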

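Why is spectral decomposition useful?

Because we can calculate $f(M)$.

m-th power: note that $\big(|\psi_i\rangle\langle\psi_i|\big)^m = |\psi_i\rangle\langle\psi_i|$ and, recall, $\langle\psi_i|\psi_j\rangle = \delta_{ij}$, so all cross terms vanish. So
$$\Big(\sum_i \lambda_i\,|\psi_i\rangle\langle\psi_i|\Big)^m = \sum_i \lambda_i^m\,|\psi_i\rangle\langle\psi_i|.$$

Consider
$$f(x) = \sum_m a_m x^m, \qquad \text{e.g. } e^x = \sum_{m=0}^{\infty}\frac{x^m}{m!}.$$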
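A check of the $m$-th power identity, assuming the same illustrative Hermitian matrix and a hypothetical exponent $m = 5$: raising only the eigenvalues to the power $m$ gives the same matrix as repeated multiplication.

```python
import numpy as np

M = np.array([[2, 1 - 1j], [1 + 1j, 3]])
eigvals, P = np.linalg.eigh(M)
m = 5  # hypothetical exponent

# sum_i lambda_i^m |psi_i><psi_i|
power_spectral = sum(
    lam**m * np.outer(P[:, i], P[:, i].conj())
    for i, lam in enumerate(eigvals)
)
power_direct = np.linalg.matrix_power(M, m)
print(np.allclose(power_spectral, power_direct))
```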

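Why is spectral decomposition useful? (continued)

$$f(M) = \sum_m a_m M^m = \sum_m a_m\Big(\sum_i \lambda_i\,|\psi_i\rangle\langle\psi_i|\Big)^m = \sum_m a_m \sum_i \lambda_i^m\,|\psi_i\rangle\langle\psi_i| = \sum_i\Big(\sum_m a_m\lambda_i^m\Big)|\psi_i\rangle\langle\psi_i| = \sum_i f(\lambda_i)\,|\psi_i\rangle\langle\psi_i|,$$
since $\sum_m a_m \lambda_i^m = f(\lambda_i)$.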
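This formula answers the earlier question about $e^{-iHt}$. As a sketch, take the hypothetical Hamiltonian $H = X$ and time $t = 0.3$, and apply $f(x) = e^{-ixt}$ to the eigenvalues. Because $X^2 = I$, the power series also gives the closed form $e^{-iXt} = \cos(t)\,I - i\sin(t)\,X$, which serves as an independent check. The helper `apply_function` is hypothetical and, since it uses `eigh`, assumes a Hermitian input.

```python
import numpy as np


def apply_function(M, f):
    """f(M) = sum_i f(lambda_i) |psi_i><psi_i| for Hermitian M (hypothetical helper)."""
    eigvals, P = np.linalg.eigh(M)
    return sum(
        f(lam) * np.outer(P[:, i], P[:, i].conj())
        for i, lam in enumerate(eigvals)
    )


X = np.array([[0, 1], [1, 0]], dtype=complex)
t = 0.3  # hypothetical evolution time

U = apply_function(X, lambda x: np.exp(-1j * x * t))   # e^{-iXt}

# Since X^2 = I, the power series gives e^{-iXt} = cos(t) I - i sin(t) X.
expected = np.cos(t) * np.eye(2) - 1j * np.sin(t) * X
print(np.allclose(U, expected))
print(np.allclose(U.conj().T @ U, np.eye(2)))  # the result is unitary
```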

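Now f(M) in matrix notation

$$f(M) = \sum_m a_m M^m$$
$$f(P\Lambda P^\dagger) = \sum_m a_m \big(P\Lambda P^\dagger\big)^m = \sum_m a_m\, P\Lambda^m P^\dagger = P\Big(\sum_m a_m\Lambda^m\Big)P^\dagger = P\begin{pmatrix}\sum_m a_m\lambda_1^m & & \\ & \ddots & \\ & & \sum_m a_m\lambda_n^m\end{pmatrix}P^\dagger,$$
where $(P\Lambda P^\dagger)^m = P\Lambda^m P^\dagger$ because $P^\dagger P = I$.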
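The matrix form $P\,f(\Lambda)\,P^\dagger$ can be compared against the power series directly. This sketch assumes a hypothetical polynomial $f(x) = 1 + 3x + 0.5x^2$ and the same illustrative Hermitian matrix; `np.polyval` expects coefficients from the highest degree down, hence the reversal.

```python
import numpy as np

# Hypothetical polynomial f(x) = sum_m a_m x^m with a = [a_0, a_1, a_2].
a = [1.0, 3.0, 0.5]
M = np.array([[2, 1 - 1j], [1 + 1j, 3]])
eigvals, P = np.linalg.eigh(M)

# Direct power series: sum_m a_m M^m (matrix_power(M, 0) is the identity).
f_direct = sum(a_m * np.linalg.matrix_power(M, m) for m, a_m in enumerate(a))

# Matrix form: P diag(f(lambda_1), ..., f(lambda_n)) P^dagger
f_eig = np.polyval(a[::-1], eigvals)
f_matrix = P @ np.diag(f_eig) @ P.conj().T

print(np.allclose(f_direct, f_matrix))
```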

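Same thing in matrix notation

$$f(P\Lambda P^\dagger) = P\begin{pmatrix}f(\lambda_1) & & \\ & \ddots & \\ & & f(\lambda_n)\end{pmatrix}P^\dagger = \begin{pmatrix}|\psi_1\rangle & |\psi_2\rangle & \cdots & |\psi_n\rangle\end{pmatrix}\begin{pmatrix}f(\lambda_1) & & \\ & \ddots & \\ & & f(\lambda_n)\end{pmatrix}\begin{pmatrix}\langle\psi_1|\\ \langle\psi_2|\\ \vdots\\ \langle\psi_n|\end{pmatrix} = \sum_i f(\lambda_i)\,|\psi_i\rangle\langle\psi_i|$$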

Important formula in matrix notation

$$f\big(P\Lambda P^\dagger\big) = \sum_i f(\lambda_i)\,|\psi_i\rangle\langle\psi_i|$$

“Von Neumann measurement in the computational basis”

Suppose we have a universal set of quantum gates, and the ability to measure each qubit in the basis $\{|0\rangle, |1\rangle\}$.

If we measure $|\Phi\rangle = \alpha_0|0\rangle + \alpha_1|1\rangle$, we get $|b\rangle$ with probability $|\alpha_b|^2$.

We knew this from the beginning, but now we can generalize it.

Using the new notation, this can be described as follows.

We have the projection operators $P_0 = |0\rangle\langle 0|$ and $P_1 = |1\rangle\langle 1|$, satisfying $P_0 + P_1 = I$.

We consider the projection operator or “observable” $M = 0\cdot P_0 + 1\cdot P_1 = P_1$.

Note that 0 and 1 are the eigenvalues of $M$.

When we measure this observable $M$, the probability of getting the eigenvalue $b$ is
$$\Pr(b) = \langle\Phi|P_b|\Phi\rangle = |\alpha_b|^2,$$
and in that case we are left with the state
$$\frac{P_b|\Phi\rangle}{\sqrt{\Pr(b)}} = \frac{\alpha_b}{|\alpha_b|}\,|b\rangle.$$
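A simulation sketch of this measurement, assuming a hypothetical state with $\alpha_0 = 0.6$, $\alpha_1 = 0.8i$ and a seeded NumPy random generator for sampling. It computes $\Pr(b) = \langle\Phi|P_b|\Phi\rangle$, samples an outcome, and forms the normalized post-measurement state.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical state |Phi> = alpha_0 |0> + alpha_1 |1>.
alpha = np.array([0.6, 0.8j])
Phi = alpha.reshape(2, 1)

ket = [np.array([[1], [0]], dtype=complex), np.array([[0], [1]], dtype=complex)]
proj = [k @ k.conj().T for k in ket]          # P_0 = |0><0|, P_1 = |1><1|

# Pr(b) = <Phi|P_b|Phi> = |alpha_b|^2
probs = [(Phi.conj().T @ P_b @ Phi).real.item() for P_b in proj]
print(probs)

# Sample an outcome b and form P_b|Phi> / sqrt(Pr(b)).
b = rng.choice(2, p=probs)
post = proj[b] @ Phi / np.sqrt(probs[b])
print(b, np.allclose(post, alpha[b] / abs(alpha[b]) * ket[b]))
```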

Polar Decomposition

Left polar decomposition

Right polar decomposition

This is for square matrices.
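A sketch of both decompositions for a square matrix, assuming the common convention that the left polar decomposition is $A = UJ$ with $J = \sqrt{A^\dagger A}$ and the right one is $A = KU$ with $K = \sqrt{AA^\dagger}$, where $U$ is unitary and $J$, $K$ are positive. Here they are computed from the SVD $A = W\Sigma V^\dagger$ via $U = WV^\dagger$, $J = V\Sigma V^\dagger$, $K = W\Sigma W^\dagger$; the helper `polar` and the matrix `A` are hypothetical. The last lines also compute $J$ with the spectral formula $f(M) = \sum_i f(\lambda_i)|\psi_i\rangle\langle\psi_i|$ for $f = \sqrt{\cdot}$.

```python
import numpy as np


def polar(A):
    """Return (U, J, K) with A = U J = K U for square A, via the SVD (hypothetical helper)."""
    W, s, Vh = np.linalg.svd(A)                  # A = W diag(s) Vh
    U = W @ Vh                                   # unitary factor
    J = Vh.conj().T @ np.diag(s) @ Vh            # sqrt(A^dagger A)
    K = W @ np.diag(s) @ W.conj().T              # sqrt(A A^dagger)
    return U, J, K


A = np.array([[1, 2], [0, 1j]])  # arbitrary square matrix
U, J, K = polar(A)
print(np.allclose(U @ J, A), np.allclose(K @ U, A))
print(np.allclose(U.conj().T @ U, np.eye(2)))

# J agrees with sqrt(A^dagger A) computed from its spectral decomposition.
lam, Q = np.linalg.eigh(A.conj().T @ A)
J_spec = Q @ np.diag(np.sqrt(np.clip(lam, 0, None))) @ Q.conj().T
print(np.allclose(J, J_spec))
```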

Gram-Schmidt Orthogonalization

Hilbert Space:

Orthogonality:

Norm:

Orthonormal basis:
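A minimal Gram-Schmidt sketch that turns a list of linearly independent complex vectors into an orthonormal basis, using the inner product $\langle e|w\rangle$ (`np.vdot`) and the norm $\sqrt{\langle w|w\rangle}$. It is written in the modified variant, which subtracts each projection from the running vector; this gives the same result as classical Gram-Schmidt in exact arithmetic and is usually more stable numerically. The helper `gram_schmidt` and the input vectors are hypothetical.

```python
import numpy as np


def gram_schmidt(vectors, tol=1e-12):
    """Orthonormalize complex vectors (modified Gram-Schmidt; hypothetical helper).

    Vectors that are (numerically) linearly dependent on earlier ones are dropped.
    """
    basis = []
    for v in vectors:
        w = np.asarray(v, dtype=complex)
        for e in basis:
            w = w - np.vdot(e, w) * e      # remove the <e|w> |e> component
        norm = np.linalg.norm(w)           # sqrt(<w|w>)
        if norm > tol:
            basis.append(w / norm)
    return basis


vecs = [[1, 1, 0], [1, 0, 1], [0, 1, 1j]]
E = np.column_stack(gram_schmidt(vecs))
print(np.allclose(E.conj().T @ E, np.eye(E.shape[1])))  # orthonormal columns
```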