18_performance

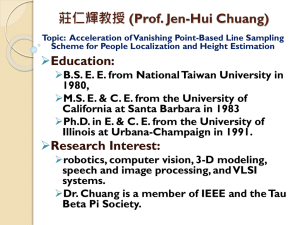

System Performance & Scalability
i206 Fall 2010
John Chuang

http://bits.blogs.nytimes.com/2007/11/26/yahoos-cybermonday-meltdown/index.html

Computing Trends
- Multi-core CPUs
- Data centers
- Cloud computing
- What are the drivers?
  - scalability, availability, cost-effectiveness

[Figure: clients and servers providing a service]

Lecture Outline
- Performance Metrics
- Availability
- Queuing theory
  - M/M/1 queue
- Scalability
  - M/M/m queue

What is Performance?
- Users want fast response time and high availability
- Managers want happy users, and many of them, while minimizing cost
- What are standard measures of system performance?

Performance Metrics
- Response time (seconds)
- Throughput (MIPS, Mbps, TPS, ...)
- Resource utilization (%)
- Availability (%)

Availability
$\text{Availability} = \dfrac{\text{MTTF}}{\text{MTTF} + \text{MTTR}}$
- Mean-time-to-failure (MTTF)
- Mean-time-to-recover (MTTR)

| Availability | Down-time per year | One hour of down-time per |
|---|---|---|
| 90% | 36 days | 9 hours |
| 99% | 3.7 days | 4.1 days |
| 99.9% | 9 hours | 41.6 days |
| 99.99% | 53 minutes | 1.14 years |
| 99.999% | 5 minutes | 11.41 years |

Response Time
(Adapted from: David Messerschmitt)
1. Client: formulate request
2. Network: message latency
3. Server: queuing time, then processing time
4. Network: message latency
5. Client: interpret response

[Figure: M/M/1 queue ($\mu = 100$), response time (s) vs. utilization]

Queuing Theory
(Source: Raj Jain)
1. Arrival Process
2. Service Time Distribution
3. Number of Servers
4. System Capacity
5. Customer Population
6. Service Discipline

Kendall's Notation (1953)
A/B/c/k/N/D
- A: arrival process
- B: service time distribution
- c: number of servers
- k: system capacity
- N: population size
- D: service discipline

Distribution codes (for A and B):
- M: Markov (exponential, memoryless, random, Poisson)
- D: deterministic
- E: Erlang
- H: hyper-exponential
- G: general

Service disciplines (for D):
- FCFS: first come first served
- FCLS: first come last served
- RR: round-robin
- etc.

Example Systems
M/M/1/∞/∞/FCFS (simplified as M/M/1)
- Markovian (Poisson, memoryless) arrival
- Markovian service time
- 1 server
- Infinite system capacity
- Infinite arrival stream
- First-come-first-served discipline

Other examples:
- M/M/1/k (finite capacity)
- M/M/m (m servers)
- G/D/1 (arbitrary arrival, deterministic service time)

M/M/1 Queue
- Poisson arrivals, with average arrival rate of $\lambda$ jobs/sec
- Exponentially distributed service times, with average service rate of $\mu$ jobs/sec
- Single server with infinite queue
- System utilization (hopefully < 1): $\rho = \lambda/\mu$
- Average number of jobs in system: $N = \sum_n n\,p_n = \rho/(1 - \rho)$
- System throughput (if $\rho < 1$): $X = \lambda$
- Average response time (from Little's Law): $R = N/X = 1/(\mu - \lambda)$

Example: Web Server
- Web server receives 40 requests/second
- Web server can process 100 requests/second
- What is server utilization?
- At any given time, how many requests are at server (waiting plus being processed)?
- What is the mean total delay at server (waiting plus processing)?
- What happens when traffic rate doubles?

Example: Web Server
- $\lambda$ = 40 requests/second
- $\mu$ = 100 requests/second
- Utilization: $\rho = \lambda/\mu = 40/100 = 40\%$
- Number of requests: $N = \rho/(1 - \rho) = 0.67$
- Average time spent at server: $R = N/X = 0.67/40 \approx 17$ ms

Example: Traffic Doubled
- $\lambda$ = 80 requests/second
- $\mu$ = 100 requests/second
- Utilization: $\rho = \lambda/\mu = 80/100 = 80\%$
- Number of requests: $N = \rho/(1 - \rho) = 4$
- Average time spent at server: $R = N/X = 4/80 = 50$ ms (more than doubled!)
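
The two web server examples above can be reproduced with a few lines of Python. This is a minimal sketch that applies the M/M/1 formulas from the slides directly; the function name `mm1_metrics` and its parameter names are illustrative choices, not part of the lecture.

```python
def mm1_metrics(arrival_rate, service_rate):
    """Return (utilization, mean jobs in system, mean response time in seconds)
    for an M/M/1 queue, using rho = lambda/mu, N = rho/(1 - rho), R = N/X."""
    if arrival_rate >= service_rate:
        # The steady-state formulas only hold when rho < 1.
        raise ValueError("unstable queue: arrival rate must be below service rate")
    rho = arrival_rate / service_rate
    n = rho / (1 - rho)
    r = n / arrival_rate  # Little's Law with throughput X = lambda
    return rho, n, r


if __name__ == "__main__":
    # Service rate of 100 requests/second, arrival rates from the two examples.
    for lam in (40, 80):
        rho, n, r = mm1_metrics(lam, 100)
        print(f"lambda={lam}: rho={rho:.0%}, N={n:.2f}, R={r * 1000:.1f} ms")
```

Doubling the arrival rate from 40 to 80 requests/second raises the response time from about 16.7 ms to 50 ms, which matches the rounded figures on the slides.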

Approaching Congestion
- $\lambda$ = 99 requests/second
- $\mu$ = 100 requests/second
- Utilization: $\rho = \lambda/\mu = 99/100 = 99\%$
- Number of requests: $N = \rho/(1 - \rho) = 99$
- Average time spent at server: $R = N/X = 99/99 = 1$ second!

Utilization Affects Performance
[Figure: M/M/1 queue ($\mu = 100$), response time (s) vs. utilization]

M/M/1/k Queue (Finite Capacity)
- $\rho = \lambda/\mu$
- $N = \dfrac{\rho}{1-\rho} - \dfrac{(k+1)\,\rho^{k+1}}{1-\rho^{k+1}}$
- $R = N/X = N/\lambda_{\text{eff}}$
  - where $\lambda_{\text{eff}} = \lambda(1 - P_k)$ = effective arrival rate
  - and $P_k = \dfrac{\rho^{k}(1-\rho)}{1-\rho^{k+1}}$ = probability of a full queue
- Loss rate $= \lambda - \lambda_{\text{eff}}$

M/M/1/k Response Time
[Figure: M/M/1 and M/M/1/k queues ($\mu = 100$), response time (s) vs. utilization, with curves for M/M/1, M/M/1/1, M/M/1/2, M/M/1/10, and M/M/1/100]

M/M/1/k Throughput
[Figure: throughput (jobs/sec) vs. utilization given service rate $\mu = 100$ jobs/sec, with curves for M/M/1, M/M/1/1, M/M/1/2, M/M/1/10, and M/M/1/100]

Lecture Outline
- Performance Metrics
- Availability
- Queuing theory
  - M/M/1 queue
- Scalability
  - M/M/m queue

Scalability
The capability of a system to increase total throughput under an increased load when resources (typically hardware) are added
- Cost of additional resource
- Performance degradation under increased load

Scalability Example
- Original web server: can process $\mu$ requests/sec; accepts requests at $\lambda$/sec
- Now request rate increases to $10\lambda$/sec and web server is swamped ($\rho = 10\lambda/\mu$)!
- Need to add new hardware!

Which is better?
- Option 1: One big web server that can process $10\mu$ requests/sec
- Option 2: Ten web servers, each can process $\mu$ requests/sec; each accepts 10% of requests ($\lambda$/sec per server)
- Option 3: Ten web servers, each can process $\mu$ requests/sec; share single queue (load balancer) that accepts requests at $10\lambda$/sec

[Figure: queue diagrams for the three options. Option 1: M/M/1 queue with big server (arrival rate $10\lambda$, service rate $10\mu$). Option 2: ten M/M/1 queues (each with arrival rate $\lambda$ and service rate $\mu$). Option 3: M/M/10 queue (arrival rate $10\lambda$, ten servers of rate $\mu$ each).]

M/M/m Queue (m Servers)
- $\rho = \lambda/(m\mu)$
- $N = m\rho + \dfrac{\rho\,\varphi}{1-\rho}$

[The definitions of $\varphi$ and its supporting term were images on the original slide and are not recoverable from the text.]

Which is Better?
$m = 10$; $\mu = 100$; $\lambda = 50$

| | Option 1 (M/M/1 big) | Option 2 (ten M/M/1) | Option 3 (M/M/10) |
|---|---|---|---|
| Utilization ($\rho$) | 0.5 | 0.5 | 0.5 |
| Number of requests ($N$) | 1 | 1 × 10 | 5.036 |
| Response time ($R$) | 2 ms | 20 ms | 10.07 ms |

Remember: Scalability is not just about performance!
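
The comparison of the three options can be checked numerically. The sketch below assumes that $\varphi$ in the M/M/m formula is the standard Erlang C probability that an arriving job has to wait, $\varphi = \frac{(m\rho)^m}{m!\,(1-\rho)}\,p_0$ with $p_0 = \left[\sum_{n=0}^{m-1} \frac{(m\rho)^n}{n!} + \frac{(m\rho)^m}{m!\,(1-\rho)}\right]^{-1}$. That definition is not visible in the extracted slide text, so treat it as an assumption; it does reproduce the slide's values for Option 3. The function name `mmm_metrics` is illustrative.

```python
from math import factorial


def mmm_metrics(arrival_rate, service_rate, servers):
    """Return (utilization, mean jobs in system, mean response time in seconds)
    for an M/M/m queue. With servers=1 this reduces to the M/M/1 formulas."""
    rho = arrival_rate / (servers * service_rate)
    if rho >= 1:
        raise ValueError("unstable queue: utilization must be below 1")
    a = servers * rho  # offered load, m * rho
    tail = a**servers / (factorial(servers) * (1 - rho))
    # p0: probability that the system is empty (assumed standard form).
    p0 = 1 / (sum(a**n / factorial(n) for n in range(servers)) + tail)
    phi = tail * p0  # assumed Erlang C probability that an arrival must queue
    n = a + rho * phi / (1 - rho)  # N = m*rho + rho*phi/(1 - rho)
    return rho, n, n / arrival_rate  # Little's Law: R = N / X


if __name__ == "__main__":
    lam, mu, m = 50, 100, 10
    # Option 1: one big server (10*mu) receiving all traffic (10*lambda).
    _, n1, r1 = mmm_metrics(m * lam, m * mu, 1)
    # Option 2: ten independent M/M/1 queues; N is summed over the ten servers.
    _, n2, r2 = mmm_metrics(lam, mu, 1)
    # Option 3: one shared queue feeding ten servers.
    _, n3, r3 = mmm_metrics(m * lam, mu, m)
    print(f"Option 1: N={n1:.3f}, R={r1 * 1000:.2f} ms")
    print(f"Option 2: N={m * n2:.3f}, R={r2 * 1000:.2f} ms")
    print(f"Option 3: N={n3:.3f}, R={r3 * 1000:.2f} ms")
```

Under these assumptions the shared-queue option holds about 5.036 requests with a response time near 10.07 ms, between the single big server (2 ms) and the ten separate queues (20 ms).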