ANOVA - The Joy of Stats


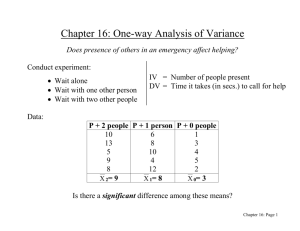

ANOVA: Analysis of Variance

The Titanic Disaster

Were there differences in survival rates by gender/age group (men, women, children) or by economic group (1st-, 2nd-, and 3rd-class tickets, and crew)? IF SO, WHY? Survival rate = the proportion of the group that survived.

Durkheim: Suicide

Were there differences in the suicide rates of Protestants, Catholics, and Jews? If so, why? The quantitative analysis sparks the theoretical and qualitative analysis. (Remember, this is a low absolute risk.)

What Does ANOVA Do?

It compares the means of an interval-ratio dependent variable (DV) for the groups defined by the categories of an independent variable (IV) measured at the nominal or ordinal level. Example: Are mean weights different for fans of action movies, horror movies, and romantic comedies?

The Null Hypothesis

The null hypothesis is that all of the group means are equal to each other, that is, that there are no differences: μ1 = μ2 = μ3 = μ4, and so on for however many groups there are. Rejecting the null hypothesis implies that at least one pair of group means differs.

Remember the Variance?

Subtract the mean from each score to find its deviation. Square each deviation. Sum the squared deviations. Find the mean squared deviation by dividing by the number of cases (for a sample, divide by n – 1). Can you write the formula?

So What Does the Variance Have to Do with It?

Remember: variances are measures of deviations from means. We are going to use variances to compare means. See Garner (2010), from page 203.

3 Variances in Play!

These are (1) the grand or total variance, based on the deviations between each score and the overall mean; (2) the between-groups variance, based on the deviations between the group means and the overall mean; and (3) the within-groups variance, based on the deviations between each score and ITS group mean.

How Does ANOVA Do It?
Between-groups variance is “good”: it suggests that there are differences among the groups’ DV means that are related to the grouping variable (the IV). Within-groups variance is “bad,” or error: it is variation among individual DV scores that the IV does not account for, so the more of it there is, the less well the IV predicts the DV. This variation remains unexplained. Is there more variability between the groups than within the groups? We will compute the ratio of between-groups variance to within-groups variance.

Example

Are there significant differences among the GPA means of 4 groups defined by “movie faves”: action, horror, drama, and comedy? We will use j to designate the number of groups, in this case 4.

The Data: GPAs of Movie Fans

| Group | Movie fave | GPAs | Mean |
|---|---|---|---|
| 1 | action | 0, 1, 2, 3, 4 | 2 |
| 2 | drama | 0, 0, 4, 4 | 2 |
| 3 | horror | 3, 3, 3, 3, 3 | 3 |
| 4 | comedy | 4, 4, 4, 4 | 4 |

Question: Is there a significant difference among these means? Null hypothesis: μ1 = μ2 = μ3 = μ4.

The F-ratio

The test statistic is called the F-ratio and is computed by a division. The numerator (top) is the between-groups variation: the deviations of the group means from the overall (grand) mean, with each squared deviation weighted by the number of cases in its group, then summed and averaged over the groups (dividing by j – 1). Denominator: next slide, please!

The Denominator of the F-ratio

The denominator (bottom) is the within-groups variation: the squared deviation of the value for each case from the mean of its own group, with all the squared deviations then summed and averaged over the cases (dividing by n – j).

F-ratio (continued)

HEY, LET’S TAKE THOSE CALCULATIONS ONE STEP AT A TIME! OK, but first, let’s see what we are going to do with the F-ratio (the test statistic) once it is computed.

Significance?

The null hypothesis is that all the group means are equal to each other. If F is significant (exceeds the critical value), it has a low p-value (p < .05), reported as “Sig.” in SPSS/PASW.
We can reject the null hypothesis of equal group means.

Steps in Calculating F

Our first step is to calculate the within-groups variance (a mean sum of squares). Our second step is to calculate the between-groups variance (a mean sum of squares). Our third step is to calculate the ratio: the between-groups mean sum of squares divided by the within-groups mean sum of squares. Ugh.

The Within-Groups Sum of Squares

Find the difference between each score and its own group mean by subtracting the group mean from the score. Square this difference. Sum the squares for the entire data set. There will be a square for each score (case) in the data set.

Mean Square Within

Divide the within-groups sum of squares by the total number of cases minus the number of groups (n – j): SSwithin / (n – j). This is the denominator (bottom) of the F-ratio and is “bad”: variability we cannot predict from our independent variable.

Between-Groups Sum of Squares

Subtract the grand mean from each group mean. Square the difference. Multiply this square by the number of cases in the group to weight the squared difference. Sum these weighted squares. (There will be a weighted square for each group of the IV.)

Mean Square Between

Divide the between-groups sum of squares obtained at the previous step by its degrees of freedom, (j – 1), where j is the number of categories of the IV. This is “good” variability, based on differences between (or among) the groups.

The F-ratio

F = meansqbetween / meansqwithin. The “between” term is good variability (predicted by the IV). The “within” term is bad, or error, variability (not predicted by the IV).

Degrees of Freedom

The F-ratio has a separate df calculation for the numerator and for the denominator. The df for the numerator (df between) is (j – 1); look across the top row of the chart for the critical values of F. The df for the denominator (df within) is (n – j); look down the left column of the chart.
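The three steps above can be traced by hand in a short sketch. This is a minimal illustration in plain Python (standard library only), not SPSS/PASW output; the function name `one_way_anova` and the dictionary layout are hypothetical choices for this example, and the data are the movie-fan GPAs from the example.

```python
from statistics import mean

# GPA data from the "movie faves" example; the four keys are the groups (j = 4).
groups = {
    "action": [0, 1, 2, 3, 4],
    "drama": [0, 0, 4, 4],
    "horror": [3, 3, 3, 3, 3],
    "comedy": [4, 4, 4, 4],
}

def one_way_anova(groups):
    """Hypothetical helper: return (ms_between, ms_within, F, df_between, df_within)."""
    scores = [x for g in groups.values() for x in g]
    n = len(scores)            # total number of cases
    j = len(groups)            # number of groups
    grand_mean = mean(scores)

    # Step 1: within-groups sum of squares (each score vs. its own group mean).
    ss_within = sum((x - mean(g)) ** 2 for g in groups.values() for x in g)
    df_within = n - j
    ms_within = ss_within / df_within

    # Step 2: between-groups sum of squares (group mean vs. grand mean,
    # weighted by the number of cases in the group).
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())
    df_between = j - 1
    ms_between = ss_between / df_between

    # Step 3: the F-ratio.
    f_ratio = ms_between / ms_within
    return ms_between, ms_within, f_ratio, df_between, df_within

ms_b, ms_w, f, df_b, df_w = one_way_anova(groups)
print(f"MS between = {ms_b:.3f}, MS within = {ms_w:.3f}")
print(f"F({df_b}, {df_w}) = {f:.3f}")
```

On the GPA data it should print MS between = 3.870, MS within = 1.857, and F(3, 14) = 2.084. A standard F table gives a critical value of about 3.34 for df (3, 14) at the .05 level, so on these numbers F would not reach significance; check that value against the chart in Garner (2010) before relying on it.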
What’s the Next Step?

First, we do the division: meansqbetween-groups / meansqwithin-groups. This gives us the value of a test statistic called F.

Is F “Big Enough” to Have a Low p-Value?

We look up F in the chart. See Garner (2010, pp. 326–29). If F is BIG relative to its degrees of freedom (and there are two of these in play; see the previous slides), it is significant, i.e., there is a lot of between-groups variability relative to within-groups variability. (The p-value is low.)

Drat, Not Yet Done! Post-Hoc Multiple Comparison Procedures

The problem with finding that F is significant is that we still need to figure out which group means are significantly different from each other. (A significant F just tells us that SOME of them are, but not which ones.) For this, we need to look at the post-hoc results: Bonferroni is one I like to look at, but it assumes equal group variances; others prefer Tukey’s HSD.

Be Sure to Get the Descriptives!

When you run ANOVA in SPSS/PASW, be sure to check “Descriptives” in the “Options” dialog box of “One-Way ANOVA” to see what the group means actually are.

ANOVA as Part of Regression Analysis

In addition to being a data analysis technique in its own right, ANOVA is part of linear regression analysis, so we need to be able to interpret it in that context. It is used to figure out what proportion of the variation in the dependent variable can be predicted from the independent variable, a value called the coefficient of determination, R². ANOVA is actually easier to understand and calculate as part of a regression analysis than as a compare-means procedure.

ANOVA and Independent-Samples t-Tests [1]

When there are only TWO groups (randomly selected rather than paired, matched, or retested), an independent-samples t-test is often used instead of ANOVA. The independent-samples t-test is the one usually reported in research, but when equal variances are assumed its result (p-value) is IDENTICAL to the ANOVA result, because F = t². t-Test results are a little harder to set up and read in SPSS/PASW.
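To see why the two-group results match, here is a minimal sketch (standard library only) that computes the pooled-variance (“equal variances assumed”) t statistic and the one-way ANOVA F for the same two groups. The helper names `pooled_t` and `anova_f` are hypothetical, and the two groups (action and horror fans from the GPA example) were picked only for illustration. With pooled variances, F equals t² exactly, which is why the p-values agree; the unequal-variances (Welch) version of the t-test generally will not match ANOVA exactly.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t(a, b):
    """Hypothetical helper: independent-samples t with a pooled variance estimate."""
    na, nb = len(a), len(b)
    # Pooled variance: the two sample variances (n - 1 denominators) weighted by their df.
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(sp2 * (1 / na + 1 / nb))

def anova_f(*groups):
    """Hypothetical helper: one-way ANOVA F-ratio for any number of groups."""
    scores = [x for g in groups for x in g]
    n, j = len(scores), len(groups)
    grand = mean(scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (j - 1)) / (ss_within / (n - j))

action = [0, 1, 2, 3, 4]
horror = [3, 3, 3, 3, 3]
t = pooled_t(action, horror)
f = anova_f(action, horror)
print(f"t^2 = {t ** 2:.4f}, F = {f:.4f}")
```

For these two groups it should print t^2 = 2.0000, F = 2.0000: the sign of t (which group is listed first) disappears when it is squared.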
Independent-Samples t-Tests [2]

Just like ANOVA, independent-samples t-tests are set up under the SPSS/PASW commands Analyze–Compare Means. Specify the test variable (interval-ratio DV) and the grouping variable (categorical IV). Enter the groups’ values for the grouping variable. The output first shows Levene’s test for equality of variances and then the result of the t-test.

ANOVA Summary

Remember the logic of ANOVA: it involves the F test statistic. F is computed as the ratio of two kinds of variation (between- and within-groups variation).

ANOVA Summary (continued)

When there is a lot of between-groups variance and relatively little within-groups variance, the result is significant (we can safely reject the null hypothesis of no differences among the means). In SPSS/PASW and other software, ANOVA is often located under Analyze–Compare Means in the menu.
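The summary’s logic can be checked by holding the group means fixed and changing only the spread within groups. In this minimal sketch (standard library only), the `tighter` data set is hypothetical: it keeps the group sizes and means of the GPA example but pulls the action and drama scores closer to their group means. The critical value 3.34 is an assumption taken from a standard F table for df (3, 14) at the .05 level. R² here is the between-groups sum of squares divided by the total sum of squares, i.e., the proportion of variation in the DV predicted by group membership.

```python
from math import isclose
from statistics import mean

F_CRITICAL_3_14 = 3.34  # assumed tabled critical F for df (3, 14), alpha = .05

def anova_summary(groups):
    """Hypothetical helper: return (F, R^2) for a one-way ANOVA on a list of groups."""
    scores = [x for g in groups for x in g]
    n, j = len(scores), len(groups)
    grand = mean(scores)
    ss_total = sum((x - grand) ** 2 for x in scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    # The total sum of squares splits exactly into between + within.
    assert isclose(ss_total, ss_between + ss_within)
    f_ratio = (ss_between / (j - 1)) / (ss_within / (n - j))
    r_squared = ss_between / ss_total
    return f_ratio, r_squared

gpa_example = [[0, 1, 2, 3, 4], [0, 0, 4, 4], [3, 3, 3, 3, 3], [4, 4, 4, 4]]
# Hypothetical variant: same group sizes and means, less spread within groups.
tighter = [[1, 2, 2, 2, 3], [1, 1, 3, 3], [3, 3, 3, 3, 3], [4, 4, 4, 4]]

for label, data in [("GPA example", gpa_example), ("tighter", tighter)]:
    f, r2 = anova_summary(data)
    verdict = "reject H0" if f > F_CRITICAL_3_14 else "retain H0"
    print(f"{label}: F = {f:.2f}, R^2 = {r2:.2f} -> {verdict}")
```

It should print F = 2.08 (retain H0) for the GPA example and F = 9.03 (reject H0) for the tighter version, with R² rising from about 0.31 to about 0.66. The between-groups sum of squares is the same in both cases; only the within-groups “error” shrank.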