Chapter 11

Basic Data Analysis for Quantitative Research

McGraw-Hill/Irwin

Copyright © 2013 by The McGraw-Hill Companies, Inc. All rights reserved.

Statistical Analysis - Overview

• Every set of data collected needs some summary information that describes the numbers it contains:

– Central tendency and dispersion

– Relationships of the sample data

– Hypothesis testing

11-2

Measures of Central Tendency

Mean

• The arithmetic average of the sample

• All values of a distribution of responses are summed and divided by the number of valid responses

Median

• The middle value of a rank-ordered distribution

• Exactly half of the responses are above and half are below the median value (with an even number of responses, the median is the average of the two middle values)

Mode

• The most common value in the set of responses to a question

• The response most often given to a question
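As a rough illustration outside SPSS, Python's standard `statistics` module computes all three measures. This is a minimal sketch; the ratings, and the use of `None` to mark a skipped question, are hypothetical.

```python
import statistics

# Hypothetical 7-point ratings; None marks a respondent who skipped the question
raw = [4, 5, 5, None, 6, 3, 5, 7, 4, 6, 5]
responses = [r for r in raw if r is not None]  # keep only valid responses

mean = statistics.mean(responses)      # sum of valid responses / number of valid responses
median = statistics.median(responses)  # middle of the sorted values (average of the two middle ones here)
mode = statistics.mode(responses)      # most frequently given response

print(f"mean={mean}, median={median}, mode={mode}")
```

With ten valid responses, the median is the average of the fifth and sixth sorted values.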

11-3

Dialog Boxes for Calculating the Mean, Median, and Mode (in ‘Frequencies’ function)

11-4

Measures of Dispersion

Range

• The distance between the smallest and largest values in a set of responses

Standard deviation

• The square root of the variance; roughly, the typical distance of the distribution values from the mean, expressed in the original units of measurement

Variance

• The average squared deviation about the mean of a distribution of values (for sample data, the sum of squared deviations is divided by n − 1)
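The three dispersion measures can be sketched the same way with the standard `statistics` module. The ratings are hypothetical; `statistics.variance` and `statistics.stdev` use the sample (n − 1) formula that statistical packages typically report for sample data.

```python
import statistics

# Hypothetical 7-point ratings from ten respondents
responses = [4, 5, 5, 6, 3, 5, 7, 4, 6, 5]

value_range = max(responses) - min(responses)  # largest value minus smallest value
variance = statistics.variance(responses)      # sum of squared deviations / (n - 1)
std_dev = statistics.stdev(responses)          # square root of the sample variance

print(f"range={value_range}, variance={variance:.3f}, std dev={std_dev:.3f}")
```

If the data cover an entire population rather than a sample, `statistics.pvariance` and `statistics.pstdev` divide by n instead.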

11-5

SPSS Output for Measures of Dispersion

11-6

Type of Scale and Appropriate Statistic

11-7

Univariate Statistical Tests

• Used when the researcher wishes to test a proposition about a sample characteristic against a known or given standard

• Appropriate for interval or ratio data

• Test: “Is a mean significantly different from some number?”

– Example: “Is the ‘Reasonable Prices’ average different from 4.0?”
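A minimal sketch of this univariate test, assuming the usual one-sample t statistic: the sample mean minus the test value, divided by the standard error of the mean. The `one_sample_t` helper and the ratings are hypothetical stand-ins for the ‘Reasonable Prices’ variable; only the test value of 4.0 comes from the example.

```python
import math
import statistics

def one_sample_t(sample, test_value):
    """Return (t, degrees of freedom) for H0: population mean == test_value."""
    n = len(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)  # standard error of the mean
    t = (statistics.mean(sample) - test_value) / std_err
    return t, n - 1

# Hypothetical 'Reasonable Prices' ratings (not the chapter's data)
ratings = [4, 5, 5, 6, 3, 5, 7, 4, 6, 5]
t, df = one_sample_t(ratings, 4.0)
print(f"t = {t:.3f}, df = {df}")
```

For a two-tailed test at the 0.05 level with 9 degrees of freedom, the critical t value is about 2.262, so this hypothetical mean would be judged significantly different from 4.0. Statistical software reports an exact p-value instead (for example, `scipy.stats.ttest_1samp` in SciPy).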

11-8

Univariate Hypothesis Test Using X-16 – Reasonable Prices

11-9

Bivariate Statistical Tests

• Test hypotheses that compare the characteristics of two groups or two variables

• Three types of bivariate hypothesis tests

– Chi-square

– t-test

– Analysis of variance (ANOVA)

11-10

Cross-Tabulation (“Cross-tabs”)

• Used to examine relationships and report findings for two categorical (e.g., nominal) variables

• Purpose is to determine:

– whether differences exist between subgroups of the total sample on a key measure

– whether there is an association between two categorical variables

• A frequency distribution of responses on two or more sets of variables
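A cross-tabulation is a count of respondents in each combination of categories. The sketch below builds one with `collections.Counter`; the `crosstab` helper and the gender/ad-recall records are hypothetical.

```python
from collections import Counter

# Hypothetical respondent records: (gender, recalled the ad?)
records = [
    ("Male", "Yes"), ("Male", "No"), ("Male", "Yes"), ("Female", "Yes"),
    ("Female", "No"), ("Female", "No"), ("Male", "Yes"), ("Female", "Yes"),
]

def crosstab(pairs):
    """Return row labels, column labels, and a table of cell counts."""
    counts = Counter(pairs)  # missing combinations count as 0
    rows = sorted({r for r, _ in pairs})
    cols = sorted({c for _, c in pairs})
    return rows, cols, [[counts[(r, c)] for c in cols] for r in rows]

rows, cols, table = crosstab(records)
print("", *cols, sep="\t")
for label, row in zip(rows, table):
    print(label, *row, sep="\t")
```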

11-11

Cross-Tabulation: Ad Recall vs. Gender

11-12

Chi-Square Analysis

• Assesses how closely the observed frequencies fit the pattern of the expected frequencies

– Referred to as a “goodness-of-fit” test

• Tests the frequency distributions of two or more nominally scaled (i.e., categorical) variables in a cross-tabulation table for statistical significance, to determine whether there is any kind of association between the variables
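The chi-square statistic compares each observed cell count with the count expected if the two variables were unrelated (row total × column total ÷ grand total), then sums (observed − expected)² ÷ expected over all cells. A minimal sketch; the `chi_square` helper and the 2 × 2 counts are hypothetical.

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under no association
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df

# Hypothetical counts: rows = male, female; columns = recalled ad, did not recall
counts = [[30, 20],
          [15, 35]]
chi2, df = chi_square(counts)
print(f"chi-square = {chi2:.3f}, df = {df}")
```

With 1 degree of freedom, the 0.05 critical value is about 3.841, so these hypothetical counts would indicate an association between gender and ad recall.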

11-13

SPSS Chi-Square Crosstab Example

Do males and females recall the ads differently?

Comparing Means: Independent Versus Related Samples

• Independent samples: Two or more groups of responses that supposedly come from different populations

• Related samples: Two or more groups of responses that supposedly originated from the same population

– Also called “Matched” or “Dependent” samples

– SPSS calls them “Paired” samples

• Practical tip: Ask yourself, “Were the subjects reused to obtain the data?” If yes, the samples are related (“Paired”); if no, they are “Independent.”

11-15

Using the t-Test to Compare Two Means

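• t-test: A hypothesis test that utilizes the t distribution

– Used when the population standard deviation is unknown; this matters most for small samples (the traditional cutoff is fewer than 30), because for larger samples the t distribution closely approximates the normal distribution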

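• Test statistic for comparing two means:

$$t = \frac{\bar{X}_1 - \bar{X}_2}{S_{\bar{X}_1 - \bar{X}_2}}$$

• Where,

– $\bar{X}_1$ = mean of sample 1

– $\bar{X}_2$ = mean of sample 2

– $S_{\bar{X}_1 - \bar{X}_2}$ = standard error of the difference between the two means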
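For independent samples, one common way to compute the standard error of the difference between the two means is to pool the two sample variances, which assumes the two populations have equal variances. A minimal sketch under that assumption; the `independent_t` helper and the satisfaction scores are hypothetical.

```python
import math
import statistics

def independent_t(sample1, sample2):
    """Pooled-variance t: (mean1 - mean2) / standard error of the difference."""
    n1, n2 = len(sample1), len(sample2)
    # Pooled variance assumes both populations have equal variances
    pooled_var = ((n1 - 1) * statistics.variance(sample1)
                  + (n2 - 1) * statistics.variance(sample2)) / (n1 + n2 - 2)
    std_err_diff = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (statistics.mean(sample1) - statistics.mean(sample2)) / std_err_diff
    return t, n1 + n2 - 2

# Hypothetical satisfaction scores for two independent groups
males = [5, 6, 7, 6, 5, 7]
females = [4, 5, 5, 6, 4, 6]
t, df = independent_t(males, females)
print(f"t = {t:.3f}, df = {df}")
```

Statistical packages typically also report a version that does not assume equal variances (Welch's test), which uses a different standard error and degrees of freedom.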

11-16

Comparing Two Means with Paired Samples t-Test

Do average scores on variables X-18 and X-20 differ from each other?
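In a paired test, each respondent contributes one difference score (here X-18 minus X-20), and the test asks whether the mean difference is zero. A minimal sketch; the `paired_t` helper and the scores are hypothetical.

```python
import math
import statistics

def paired_t(first, second):
    """Return (t, degrees of freedom) for H0: mean of (first - second) == 0."""
    if len(first) != len(second):
        raise ValueError("paired samples need one score per respondent in each list")
    diffs = [a - b for a, b in zip(first, second)]  # one difference per respondent
    n = len(diffs)
    std_err = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / std_err, n - 1

# Hypothetical scores for the same six respondents on two variables
x18 = [5, 6, 4, 7, 5, 6]
x20 = [4, 5, 4, 5, 4, 5]
t, df = paired_t(x18, x20)
print(f"t = {t:.3f}, df = {df}")
```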

11-17

Comparing Two Means with Independent Samples t-Test

Do males and females differ with respect to their satisfaction?

11-18

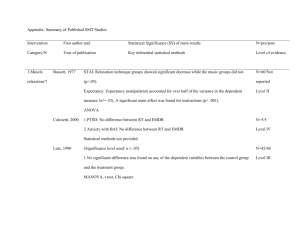

Analysis of Variance (ANOVA)

• ANOVA determines whether three or more means are statistically different from each other

• The dependent variable must be either interval or ratio data

• The independent variable(s) must be categorical (i.e., nominal or ordinal)

• “One-way ANOVA” means that there is only one independent variable

• “n-way ANOVA” means that there is more than one independent variable (i.e., n IVs)

11-19

Analysis of Variance (ANOVA)

• F-test: The test used to statistically evaluate the differences between the group means in ANOVA
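The F-test divides the between-group mean square (how far the group means sit from the grand mean) by the within-group mean square (how much scores vary inside each group). A minimal sketch; the `one_way_anova` helper and the three distance groups are hypothetical.

```python
import statistics

def one_way_anova(*groups):
    """Return (F, (df_between, df_within)) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_values)
    k, n = len(groups), len(all_values)
    means = [statistics.mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    ms_between = ss_between / (k - 1)  # between-group mean square
    ms_within = ss_within / (n - k)    # within-group (error) mean square
    return ms_between / ms_within, (k - 1, n - k)

# Hypothetical likelihood-of-returning scores by distance driven
near, moderate, far = [6, 7, 6, 7], [5, 6, 5, 6], [3, 4, 4, 5]
f, dfs = one_way_anova(near, moderate, far)
print(f"F = {f:.2f}, df = {dfs}")
```

The 0.05 critical F value for (2, 9) degrees of freedom is about 4.26, so these hypothetical group means would differ significantly.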

11-20

Example of One-Way ANOVA

Does distance driven affect customers’ likelihood of returning?

11-21

Analysis of Variance (ANOVA)

• ANOVA does not tell us where the significant differences lie, just that a difference exists

• Follow-up (post-hoc) tests: Analyses that flag the specific means that are statistically different from each other

– Performed after an ANOVA determines there is an “omnibus” difference between means

• Some pairwise comparison tests (there are others):

– Tukey

– Duncan

– Scheffé
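Tukey's test compares every pair of means using the studentized range statistic q. The sketch below computes q for each pair with the Tukey-Kramer form of the standard error, which also handles unequal group sizes; the `tukey_q` helper and the data are hypothetical, and each q is meant to be compared with a critical value read from a studentized range table.

```python
import itertools
import math
import statistics

def tukey_q(groups):
    """Studentized range statistic q for every pair of groups (Tukey-Kramer form)."""
    means = {name: statistics.mean(g) for name, g in groups.items()}
    n_total = sum(len(g) for g in groups.values())
    ss_within = sum((x - means[name]) ** 2 for name, g in groups.items() for x in g)
    ms_within = ss_within / (n_total - len(groups))  # same error term as the ANOVA
    q = {}
    for a, b in itertools.combinations(groups, 2):
        std_err = math.sqrt(ms_within / 2 * (1 / len(groups[a]) + 1 / len(groups[b])))
        q[(a, b)] = abs(means[a] - means[b]) / std_err
    return q

# Hypothetical likelihood-of-returning scores by distance driven
groups = {"near": [6, 7, 6, 7], "moderate": [5, 6, 5, 6], "far": [3, 4, 4, 5]}
for pair, value in tukey_q(groups).items():
    print(pair, round(value, 2))
```

With 3 groups and 9 within-group degrees of freedom, the 0.05 critical q is about 3.95, so in this made-up data “near” and “moderate” would not differ significantly, while both differ from “far.”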

11-22

Results for Post-hoc Mean Comparisons

11-23

n-Way ANOVA

• ANOVA that analyzes several independent variables at the same time

– Also called “Factorial Design”

• Multiple independent variables in an ANOVA can act together to affect the dependent variable; this is called an interaction effect

11-24

n-way ANOVA: Example

• Men and women are shown humorous and non-humorous ads, and then their attitudes toward the brand are measured.

• IVs (factors) = (1) gender (male vs. female) and (2) ad type (humorous vs. non-humorous)

• DV = attitude toward brand

• A 2-way ANOVA design (also called a “factorial design”) is needed here because there are two factors

– 2 x 2 design (2 levels of gender x 2 levels of ad type)
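For a balanced factorial design (the same number of respondents in every cell), the total sum of squares splits into a part for each factor, a part for their interaction, and a within-cell error part. A minimal sketch of the 2 x 2 gender by ad-type example; the `two_way_anova` helper and the attitude scores are hypothetical, and unbalanced designs need a different calculation.

```python
import statistics

def two_way_anova(cells):
    """F ratios for factor A, factor B, and the A x B interaction.

    cells[i][j] holds the scores for level i of A and level j of B.
    Assumes a balanced design: every cell has the same number of scores.
    """
    a, b, n = len(cells), len(cells[0]), len(cells[0][0])
    if any(len(cell) != n for row in cells for cell in row):
        raise ValueError("this sketch only handles balanced designs")
    cell_means = [[statistics.mean(cell) for cell in row] for row in cells]
    grand = statistics.mean([x for row in cells for cell in row for x in cell])
    a_means = [statistics.mean(row) for row in cell_means]        # marginal means of A
    b_means = [statistics.mean(col) for col in zip(*cell_means)]  # marginal means of B
    ss_a = b * n * sum((m - grand) ** 2 for m in a_means)
    ss_b = a * n * sum((m - grand) ** 2 for m in b_means)
    ss_ab = n * sum((cell_means[i][j] - a_means[i] - b_means[j] + grand) ** 2
                    for i in range(a) for j in range(b))
    ss_within = sum((x - cell_means[i][j]) ** 2
                    for i in range(a) for j in range(b) for x in cells[i][j])
    ms_within = ss_within / (a * b * (n - 1))
    return {
        "A": ss_a / (a - 1) / ms_within,
        "B": ss_b / (b - 1) / ms_within,
        "A x B": ss_ab / ((a - 1) * (b - 1)) / ms_within,
    }

# Hypothetical attitude-toward-brand scores, two respondents per cell
# rows: male, female; columns: humorous ad, non-humorous ad
cells = [[[5, 7], [3, 5]],
         [[4, 6], [5, 7]]]
print(two_way_anova(cells))
```

Each F ratio here has (1, 4) degrees of freedom, and the 0.05 critical value for (1, 4) is about 7.71, so none of these hypothetical effects, including the interaction, would be significant.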

11-25

n-Way ANOVA Example

Do distance driven and gender affect customers’ likelihood of recommending Santa Fe Grill?

11-26

n-Way ANOVA Post-hoc Comparisons

11-27