Ch 3 Notes


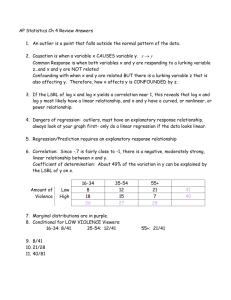

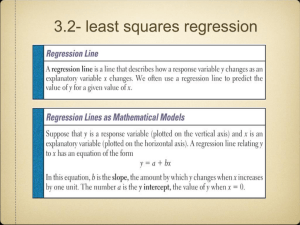

Ch 3 – Examining Relationships

YMS – 3.1 Scatterplots

Some Vocabulary
- Response variable – measures an outcome of a study (AKA dependent variable)
- Explanatory variable – attempts to explain the observed outcomes (AKA independent variable)
- Scatterplot – shows the relationship between two quantitative variables measured on the same individuals

Scatterplots
Examining
- Look for the overall pattern and any deviations from it
- Describe the pattern by its form, strength, and direction
Drawing
- Scale the vertical and horizontal axes uniformly
- Label both axes
- Adopt a scale that uses the entire available grid
Categorical Variables
- Use a different color or symbol for each category to distinguish the groups

Classwork: p125 #3.7, 3.10–3.11
Homework: #3.16, 3.22, and 3.2 Blueprint

YMS – 3.2 Correlation

Correlation
- Measures the direction and strength of the linear relationship between two quantitative variables

Facts About Correlation
- Makes no distinction between explanatory and response variables
- Requires both variables to be quantitative
- Does not change when we change the units of measurement (r has no units)
- The sign of r indicates a positive or negative association
- r always falls between −1 and 1, inclusive
- Measures the strength of linear relationships only
- Is not resistant (strongly affected by outliers)

In-Class Exercises: p146 #3.28, 3.34, and 3.37
Correlation Guessing Game
Homework: 3.3 Blueprint

YMS – 3.3 Least-Squares Regression

Regression
- Regression line – describes how a response variable y changes as an explanatory variable x changes
- LSRL of y on x – the line that makes the sum of the squares of the vertical distances of the data points from the line as small as possible
- The line should be as close as possible to the points in the vertical direction
- Error = observed (actual) − predicted

LSRL
- Equation of the LSRL: $\hat{y} = a + bx$
- Slope: $b = r\dfrac{s_y}{s_x}$
- Intercept: $a = \bar{y} - b\bar{x}$

Coefficient of determination – $r^2$
- The fraction of the variation in the values of y that is explained by the least-squares regression of y on x
- Measures the contribution of x in predicting y
- If x is a poor predictor of y, then the sum of the squares of the deviations about the mean (SST) and the sum of the squares of the deviations about the regression line (SSE) will be approximately the same, so $r^2 = \dfrac{\text{SST} - \text{SSE}}{\text{SST}}$ will be close to 0

Understanding r-squared: A single point simplification (Al Coons, Buckingham Browne & Nichols School, Cambridge, MA, al_coons@bbns.org)
[Figure: for a single data point, the error w.r.t. the mean model, $y - \bar{y}$, and the part of that error eliminated by the $\hat{y}$ model, $\hat{y} - \bar{y}$]
- Proportion of error eliminated by the $\hat{y}$ model = (error eliminated by the $\hat{y}$ model) / (error w.r.t. the mean model)
- $r^2$ = proportion of variability accounted for by the given model (w.r.t. the mean model)

Facts about Least-Squares Regression
- The distinction between explanatory and response variables is essential
- A change of one standard deviation in x corresponds to a change of r standard deviations in y
- The LSRL always passes through the point $(\bar{x}, \bar{y})$
- The square of the correlation, $r^2$, is the fraction of the variation in the values of y that is explained by the least-squares regression of y on x

Classwork: Transformations and LSRL WS
Homework: #3.39 and ABS Matching to Plots Extension Question (we'll finish the others in class)

Residuals
- Residual = observed y − predicted y = $y - \hat{y}$
- Positive values show that the data point lies above the LSRL
- The mean of the residuals is always zero

Residual Plots
- A scatterplot of the regression residuals against the explanatory variable
- Helps us assess the fit of a regression line
- Want a random pattern (no curve or other systematic pattern)
- Watch for individual points with large residuals or that are extreme in the x direction

Outliers vs. Influential Observations
- Outlier – an observation that lies outside the overall pattern of the other observations
- Influential observation – removing this point would markedly change the result of the calculation

Classwork: Residual Plots WS
Homework: p177 #3.52 and 3.61
Doctors for the Poor: this will be graded for accuracy!

Ch 3 Review: p176 #3.50–3.51, 3.56, 3.59, 3.69, 3.76–3.77
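Several of the Facts About Correlation in 3.2 can be checked numerically. The sketch below is a minimal illustration, assuming Python 3 and its standard `statistics` module; the helper `correlation` and the hours/score data are hypothetical, made up for this example. It computes r as the sum of products of z-scores divided by n − 1, then shows that r is unchanged when x and y are swapped or when hours are converted to minutes, flips sign when the direction is reversed, and is essentially 0 for a perfect but curved relationship, because r measures only linear association.

```python
from statistics import mean, stdev


def correlation(xs, ys):
    """Pearson r = (1/(n-1)) * sum(z_x * z_y), using sample standard deviations."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    x_bar, y_bar = mean(xs), mean(ys)
    s_x, s_y = stdev(xs), stdev(ys)
    return sum(((x - x_bar) / s_x) * ((y - y_bar) / s_y)
               for x, y in zip(xs, ys)) / (n - 1)


# Hypothetical toy data, made up for illustration: hours studied vs. quiz score.
hours = [1, 2, 3, 4, 5, 6]
score = [55, 61, 60, 72, 75, 83]

r = correlation(hours, score)
r_swapped = correlation(score, hours)                    # swap explanatory/response
r_minutes = correlation([60 * h for h in hours], score)  # change units: hours -> minutes
r_flipped = correlation(hours, [-s for s in score])      # reverse the direction

# A perfect but curved (non-linear) relationship: y = x^2 on symmetric x values.
curve_x = [-2, -1, 0, 1, 2]
curve_y = [x ** 2 for x in curve_x]
r_curve = correlation(curve_x, curve_y)

print(f"r = {r:.4f}, swapped = {r_swapped:.4f}, minutes = {r_minutes:.4f}")
print(f"flipped = {r_flipped:.4f}, curved = {r_curve:.4f}")
```

Because r is built from z-scores, multiplying a variable by a positive constant or adding a constant to it leaves r unchanged, which is why the change from hours to minutes has no effect.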