
Outline

• Time series prediction

• Find k-nearest neighbors

• Lag selection

• Weighted LS-SVM

Time series prediction

• Suppose we have a univariate time series x(t) for t = 1, 2, …, N, and we want to predict the value of x(N + p).

• If p = 1, this is called one-step prediction.

• If p > 1, it is called multi-step prediction.
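A minimal sketch of how one-step and multi-step prediction differ only in how far ahead the target sits. It assumes the input at time t is a window of the m most recent values; the window length `m` and the helper name `make_pairs` are illustrative, not taken from the slides.

```python
import numpy as np

def make_pairs(x, m, p):
    """Build (input, target) pairs for p-step-ahead prediction.

    Each input row holds m consecutive values x(t-m+1), ..., x(t);
    the matching target is x(t+p). Indices here are 0-based.
    """
    x = np.asarray(x, dtype=float)
    inputs, targets = [], []
    for t in range(m - 1, len(x) - p):
        inputs.append(x[t - m + 1 : t + 1])
        targets.append(x[t + p])
    return np.array(inputs), np.array(targets)

series = np.arange(1, 11)               # x(1), ..., x(10)
X1, y1 = make_pairs(series, m=3, p=1)   # one-step prediction
X3, y3 = make_pairs(series, m=3, p=3)   # multi-step prediction
print(X1[0], y1[0])  # [1. 2. 3.] 4.0
print(X3[0], y3[0])  # [1. 2. 3.] 6.0
```

The same window [x(1), x(2), x(3)] is paired with x(4) for p = 1 and with x(6) for p = 3.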

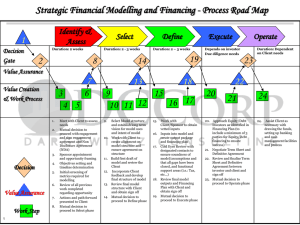

Flowchart

Find k-nearest neighbors

• Assume the current time index is 20.

• First we reconstruct the query

$$q = [x_{20-1},\ y_{20-1},\ x_{20},\ y_{20}]$$

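• Then the distance between the query and the historical data at time index 2 combines a trend distance and an original-value distance:

$$D_{\text{Trend}}(q, t_2) = \Big\{\big[(x_{20} - x_{20-1}) - (x_2 - x_{2-1})\big]^2 + \big[(y_{20} - y_{20-1}) - (y_2 - y_{2-1})\big]^2\Big\}$$

$$D_{\text{Original}}(q, t_2) = \big[(x_{20-1} - x_{2-1})^2 + (y_{20-1} - y_{2-1})^2 + (x_{20} - x_2)^2 + (y_{20} - y_2)^2\big]^{0.5}$$

$$D(q, t_2) = \frac{N(D_{\text{Trend}}) + N(D_{\text{Original}})}{2}$$

where N(·) denotes a normalization of each distance, which puts the two distances on a common scale before they are averaged.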
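The distance computation can be sketched as follows. This is an illustration under stated assumptions: N(·) is taken to be min-max scaling over all candidate time indices, D_Trend is used without a square root, candidates are the indices t ≥ 2 whose next value y(t+1) is already known, and the helper names `minmax` and `knn_indices` are hypothetical.

```python
import numpy as np

def minmax(v):
    # Assumed form of N(.): min-max scaling to [0, 1] over all candidates.
    span = v.max() - v.min()
    return np.zeros_like(v) if span == 0 else (v - v.min()) / span

def knn_indices(x, y, now, k):
    """Return the k (1-based) time indices whose pattern
    (x(t-1), y(t-1), x(t), y(t)) is closest to the query at time `now`."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    q = now - 1                           # 0-based position of the query time
    cand = np.arange(1, q)                # 0-based t with t - 1 >= 0 and y(t + 1) known
    # Trend distance: compare the one-step changes of the query and each candidate.
    d_trend = (((x[q] - x[q - 1]) - (x[cand] - x[cand - 1])) ** 2
               + ((y[q] - y[q - 1]) - (y[cand] - y[cand - 1])) ** 2)
    # Original distance: Euclidean distance between the raw 4-value patterns.
    d_orig = np.sqrt((x[q - 1] - x[cand - 1]) ** 2 + (y[q - 1] - y[cand - 1]) ** 2
                     + (x[q] - x[cand]) ** 2 + (y[q] - y[cand]) ** 2)
    d = (minmax(d_trend) + minmax(d_orig)) / 2.0
    nearest = np.argsort(d, kind="stable")[:k]
    return (cand[nearest] + 1).tolist()   # back to 1-based indices

# Hypothetical linear series: every trend matches, so only D_Original decides.
x = np.arange(20.0)
y = 2.0 * np.arange(20.0)
print(knn_indices(x, y, now=20, k=3))     # [19, 18, 17]
```

Min-max scaling is only one possible choice for N(·); any normalization that maps both distances to comparable ranges fits the formula.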

Find k-nearest neighbors

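• If k = 3 and the k closest neighbors are t14, t15 and t16, we can construct the smaller data set

$$D = \begin{bmatrix} x_{13} & y_{13} & x_{14} & y_{14} & y_{15} \\ x_{14} & y_{14} & x_{15} & y_{15} & y_{16} \\ x_{15} & y_{15} & x_{16} & y_{16} & y_{17} \end{bmatrix}$$

Each row holds one neighbor's pattern (x_{t−1}, y_{t−1}, x_t, y_t) followed by the value y_{t+1} that came next.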
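Assembling this smaller data set from the neighbor indices can be sketched as below. `local_dataset` is a hypothetical helper, and the last column is taken to be the training target y(t+1), following the matrix D.

```python
import numpy as np

def local_dataset(x, y, neighbors):
    """Stack one row [x(t-1), y(t-1), x(t), y(t), y(t+1)] per neighbor t.

    `neighbors` holds 1-based time indices, as in the text (t14, t15, t16).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    rows = []
    for t in neighbors:
        i = t - 1                    # convert to a 0-based position
        rows.append([x[i - 1], y[i - 1], x[i], y[i], y[i + 1]])
    return np.array(rows)

# Hypothetical series with x(t) = t and y(t) = 10 t, so entries are easy to read.
x = np.arange(1, 21)
y = 10 * np.arange(1, 21)
D = local_dataset(x, y, [14, 15, 16])
inputs, targets = D[:, :4], D[:, 4]   # k = 3 training records for the local model
print(targets)
```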


Lag selection

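• Lag selection is the process of selecting a subset of relevant lagged values (features) for use in model construction.

• Why do we need lag selection?

• Lag selection is a form of feature selection, not feature extraction: it keeps a subset of the original lags instead of building new features from them.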

Lag selection

• Lag selection methods can usually be divided into two broad classes: filter methods and wrapper methods.

• The lag subset is chosen by an evaluation criterion, which measures the relationship between each subset of lags and the target (output).

Wrapper method

• The best lag subset is selected according to the prediction performance of the model.

• Lag selection is part of the learning process.

Filter method

• This method needs a criterion that measures the correlation or dependence between the lags and the target.

• For example, correlation, mutual information, …
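A minimal filter-method sketch, using the absolute autocorrelation between x(t − l) and x(t) as the criterion (correlation is one of the criteria listed; mutual information could be swapped in). The helper name and the AR(1) test series are hypothetical.

```python
import numpy as np

def rank_lags_by_correlation(x, max_lag):
    """Filter-style lag ranking: score each lag l by |corr(x(t-l), x(t))|."""
    x = np.asarray(x, dtype=float)
    scores = {}
    for lag in range(1, max_lag + 1):
        past, present = x[:-lag], x[lag:]
        scores[lag] = abs(np.corrcoef(past, present)[0, 1])
    # Highest-scoring lags first; a fixed number of them would then be kept.
    return sorted(scores, key=scores.get, reverse=True), scores

# Hypothetical AR(1) series x(t) = 0.9 x(t-1) + noise, so lag 1 should rank first.
rng = np.random.default_rng(0)
x = np.zeros(2000)
for t in range(1, len(x)):
    x[t] = 0.9 * x[t - 1] + rng.normal()
ranking, scores = rank_lags_by_correlation(x, max_lag=5)
print(ranking)
```

No prediction model is trained while scoring, which is what makes this a filter method rather than a wrapper method.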

Lag selection

• Which is better?

• The wrapper method optimizes the actual prediction task, but it needs much more computation time.

• The filter method is faster, but the lag subset it provides may give worse prediction results.

• We use the filter method because of the architecture of the proposed method.

Entropy

• Entropy is a measure of the uncertainty of a random variable.

• The entropy of a discrete random variable X is defined by

$$H(X) = -\sum_{x \in X} p(x) \log p(x)$$

• By convention, 0 log 0 = 0. With base-2 logarithms, entropy is measured in bits.
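The definition translates directly into a few lines of code. This is a sketch using base-2 logarithms (bits); the function name `entropy` is illustrative. It reproduces the two examples that follow.

```python
import math

def entropy(probs):
    """H(X) = -sum p(x) log2 p(x), in bits, with the convention 0 log 0 = 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

print(entropy([0.5, 0.5]))                 # 1.0 (Bernoulli example with p = 1/2)
print(entropy([0.5, 0.25, 0.125, 0.125]))  # 1.75 (the four-outcome example, 7/4 bits)
```

Skipping terms with p = 0 is how the convention 0 log 0 = 0 is applied.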

Entropy

• Example: let

$$X = \begin{cases} 1 & \text{with probability } p \\ 0 & \text{with probability } 1-p \end{cases}$$

• Then

$$H(X) = -p \log p - (1-p) \log(1-p)$$


Entropy

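• Example: let

$$X = \begin{cases} a & \text{with probability } 1/2 \\ b & \text{with probability } 1/4 \\ c & \text{with probability } 1/8 \\ d & \text{with probability } 1/8 \end{cases}$$

• Then

$$H(X) = -\frac{1}{2}\log\frac{1}{2} - \frac{1}{4}\log\frac{1}{4} - \frac{1}{8}\log\frac{1}{8} - \frac{1}{8}\log\frac{1}{8} = \frac{7}{4} \text{ bits}$$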

Joint entropy

• Definition: The joint entropy of a pair of discrete random variables (X, Y) is defined as

$$H(X, Y) = -\sum_{x \in X} \sum_{y \in Y} p(x, y) \log p(x, y)$$

Conditional entropy

• Definition: The conditional entropy is defined as

$$H(Y \mid X) = \sum_{x \in X} p(x)\, H(Y \mid X = x)$$

• And

$$H(X, Y) = H(X) + H(Y \mid X)$$

Proof

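$$
\begin{aligned}
H(X, Y) &= -\sum_{x \in X} \sum_{y \in Y} p(x, y) \log p(x, y) \\
&= -\sum_{x \in X} \sum_{y \in Y} p(x, y) \log \big(p(x)\, p(y \mid x)\big) \\
&= -\sum_{x \in X} \sum_{y \in Y} p(x, y) \log p(x) - \sum_{x \in X} \sum_{y \in Y} p(x, y) \log p(y \mid x) \\
&= -\sum_{x \in X} p(x) \log p(x) - \sum_{x \in X} \sum_{y \in Y} p(x, y) \log p(y \mid x) \\
&= H(X) + H(Y \mid X)
\end{aligned}
$$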
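The chain rule can be checked numerically on a small joint distribution. This sketch uses a hypothetical 4 × 4 joint pmf (rows index x, columns index y) and computes H(Y|X) exactly as in its definition, as a p(x)-weighted average of row entropies.

```python
import numpy as np

def H(p):
    """Entropy in bits of a probability array of any shape, with 0 log 0 = 0."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

# Hypothetical joint pmf p(x, y): rows index x, columns index y.
pxy = np.array([[1/8,  1/16, 1/32, 1/32],
                [1/16, 1/8,  1/32, 1/32],
                [1/16, 1/16, 1/16, 1/16],
                [1/4,  0.0,  0.0,  0.0]])
px = pxy.sum(axis=1)

# H(Y|X) = sum_x p(x) H(Y | X = x); each row is renormalized to a conditional pmf.
h_y_given_x = sum(px[i] * H(pxy[i] / px[i]) for i in range(len(px)))

print(H(pxy), H(px) + h_y_given_x)   # both sides of the chain rule: 3.375 bits
```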

Mutual information

• Mutual information is a measure of the amount of information one random variable contains about another.

• It extends the notion of entropy: it is the reduction in the uncertainty of one variable due to knowledge of the other.

• Definition: The mutual information of two discrete random variables X and Y is

$$I(X, Y) = \sum_{x \in X} \sum_{y \in Y} p(x, y) \log \frac{p(x, y)}{p(x)\, p(y)} = H(X) - H(X \mid Y)$$

Proof

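$$
\begin{aligned}
I(X, Y) &= \sum_{x, y} p(x, y) \log \frac{p(x, y)}{p(x)\, p(y)} \\
&= \sum_{x, y} p(x, y) \log \frac{p(x \mid y)}{p(x)} \\
&= -\sum_{x, y} p(x, y) \log p(x) + \sum_{x, y} p(x, y) \log p(x \mid y) \\
&= -\sum_{x} p(x) \log p(x) - \Big(-\sum_{x, y} p(x, y) \log p(x \mid y)\Big) \\
&= H(X) - H(X \mid Y)
\end{aligned}
$$

By symmetry, and using the chain rule H(X | Y) = H(X, Y) − H(Y),

$$I(X, Y) = H(Y) - H(Y \mid X) = H(X) + H(Y) - H(X, Y)$$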
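A quick numerical check that the definition of mutual information agrees with the entropy identity I(X, Y) = H(X) + H(Y) − H(X, Y). The 2 × 2 joint pmf is hypothetical and logarithms are base 2.

```python
import numpy as np

def H(p):
    """Entropy in bits, with 0 log 0 = 0."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

def mutual_information(pxy):
    """I(X, Y) = sum p(x, y) log2[p(x, y) / (p(x) p(y))] over cells with p(x, y) > 0."""
    pxy = np.asarray(pxy, dtype=float)
    px = pxy.sum(axis=1, keepdims=True)   # column vector of p(x)
    py = pxy.sum(axis=0, keepdims=True)   # row vector of p(y)
    mask = pxy > 0
    return float((pxy[mask] * np.log2(pxy[mask] / (px * py)[mask])).sum())

# Hypothetical joint pmf (rows: x, columns: y).
pxy = np.array([[0.3, 0.1],
                [0.1, 0.5]])
px, py = pxy.sum(axis=1), pxy.sum(axis=0)
mi = mutual_information(pxy)
print(mi, H(px) + H(py) - H(pxy))   # the same value computed two ways
```

For an independent pair, p(x, y) = p(x) p(y) makes every log term zero, so the mutual information is 0.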

[Figure: The relationship between entropy and mutual information]

Mutual information

• Definition: The mutual information of two continuous random variables X and Y is

$$I(X, Y) = \iint dx\, dy\, p(x, y) \log \frac{p(x, y)}{p(x)\, p(y)}$$

• The problem is that the joint probability density function of X and Y is hard to estimate from a finite sample.

Binned Mutual information

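• The most straightforward and widespread approach for estimating MI consists in partitioning the supports of X and Y into bins of finite size:

$$I(X, Y) \approx I_{\text{binned}}(X, Y) = \sum_{i, j} p(i, j) \log \frac{p(i, j)}{p(i)\, p(j)}$$

where

$$p(i) = \frac{n_x(i)}{N}, \qquad p(j) = \frac{n_y(j)}{N}, \qquad p(i, j) = \frac{n(i, j)}{N}$$

and n_x(i), n_y(j) and n(i, j) are the numbers of points falling in the i-th bin of X, the j-th bin of Y, and the cell (i, j), respectively.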

Binned Mutual information

• For example, consider a set of 5 bivariate measurements, z_i = (x_i, y_i), where i = 1, 2, …, 5. The values of these points are

| Index | Feature 1 (x) | Feature 2 (y) |
|-------|---------------|---------------|
| 1     | 0             | 1             |
| 2     | 0.5           | 5             |
| 3     | 1             | 3             |
| 4     | 3             | 4             |
| 5     | 4             | 1             |


Binned Mutual information

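• With X partitioned into 2 bins and Y into 3 bins, the estimated probabilities are

$$p_x(1) = \frac{3}{5}, \qquad p_x(2) = \frac{2}{5}$$

$$p_y(1) = \frac{2}{5}, \qquad p_y(2) = \frac{1}{5}, \qquad p_y(3) = \frac{2}{5}$$

$$p_{xy}(1,1) = \frac{1}{5}, \quad p_{xy}(1,2) = \frac{1}{5}, \quad p_{xy}(1,3) = \frac{1}{5}, \quad p_{xy}(2,1) = \frac{1}{5}, \quad p_{xy}(2,3) = \frac{1}{5}$$

and p_xy(2, 2) = 0.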

Binned Mutual information

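$$
\begin{aligned}
\hat{I}(X, Y) &= \sum_{i=1}^{2} \sum_{j=1}^{3} p_{xy}(i, j) \log \frac{p_{xy}(i, j)}{p_x(i)\, p_y(j)} \\
&= \frac{1}{5}\log\frac{1/5}{\frac{3}{5}\cdot\frac{2}{5}} + \frac{1}{5}\log\frac{1/5}{\frac{3}{5}\cdot\frac{2}{5}} + \frac{1}{5}\log\frac{1/5}{\frac{3}{5}\cdot\frac{1}{5}} + \frac{1}{5}\log\frac{1/5}{\frac{2}{5}\cdot\frac{2}{5}} + \frac{1}{5}\log\frac{1/5}{\frac{2}{5}\cdot\frac{2}{5}} \\
&\approx 0.1185
\end{aligned}
$$

using the natural logarithm (about 0.1710 bits). Empty cells contribute nothing to the sum.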
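The binned estimate can be reproduced with `numpy.histogram2d`. This sketch assumes equal-width bins over each variable's range (2 bins for X, 3 for Y), which yields exactly the counts used above; the helper name `binned_mi` is illustrative and the result is in nats.

```python
import numpy as np

def binned_mi(x, y, bins):
    """Histogram (binned) estimate of I(X, Y), in nats."""
    counts, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = counts / counts.sum()              # p(i, j) = n(i, j) / N
    px = pxy.sum(axis=1, keepdims=True)      # p(i) = n_x(i) / N
    py = pxy.sum(axis=0, keepdims=True)      # p(j) = n_y(j) / N
    nz = pxy > 0                             # empty cells contribute 0
    return float((pxy[nz] * np.log(pxy[nz] / (px * py)[nz])).sum())

x = np.array([0, 0.5, 1, 3, 4])
y = np.array([1, 5, 3, 4, 1])
mi = binned_mi(x, y, bins=[2, 3])
print(round(mi, 4))               # 0.1185
print(round(mi / np.log(2), 4))   # 0.171 (bits)
```

The estimate depends on the bin layout: changing `bins` changes the counts and therefore the value, which is a known weakness of the binned approach.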

Estimating Mutual information

• Another approach for estimating mutual information uses k-nearest-neighbor distances. Consider the case of two variables: the two-dimensional space Z is spanned by X and Y, and we compute the distance between each pair of points with the maximum norm

$$\|z_i - z_j\| = \max\{\|x_i - x_j\|,\ \|y_i - y_j\|\}, \qquad i, j = 1, 2, \ldots, N, \quad i \neq j$$

Estimating Mutual information

• Let ε(i)/2 denote the distance from z_i to its k-th nearest neighbor, and ε_x(i)/2 and ε_y(i)/2 the distances between the same points projected into the X and Y subspaces.

• Then we count the number n_x(i) of points x_j whose distance from x_i is strictly less than ε(i)/2, and similarly n_y(i) for y instead of x.


Estimating Mutual information

• The estimate for MI is then

$$\hat{I}^{(1)}(X, Y) = \psi(k) - \frac{1}{N} \sum_{i=1}^{N} \big[\psi(n_x(i) + 1) + \psi(n_y(i) + 1)\big] + \psi(N)$$

where ψ is the digamma function.

• Alternatively, in the second algorithm, we replace n_x(i) and n_y(i) by the numbers of points with

$$\|x_i - x_j\| \le \epsilon_x(i)/2, \qquad \|y_i - y_j\| \le \epsilon_y(i)/2$$


Estimating Mutual information

• Then

$$\hat{I}^{(2)}(X, Y) = \psi(k) - \frac{1}{k} - \frac{1}{N} \sum_{i=1}^{N} \big[\psi(n_x(i)) + \psi(n_y(i))\big] + \psi(N)$$
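Both estimators can be sketched with brute-force distance matrices. This is an illustration for two scalar variables under stated assumptions: there are no duplicate points (so ε(i)/2 > 0), ties among equally distant neighbors are broken by index order, and a small helper `digamma_int` (hypothetical, exact only for positive integers) stands in for a library digamma, since every argument here is a positive integer.

```python
import numpy as np

EULER_GAMMA = 0.5772156649015329

def digamma_int(n):
    """Digamma at a positive integer: psi(n) = -gamma + sum_{m=1}^{n-1} 1/m."""
    return -EULER_GAMMA + sum(1.0 / m for m in range(1, int(n)))

def ksg_mi(x, y, k=2, algorithm=1):
    """k-nearest-neighbor MI estimate (nats) for two scalar variables, O(N^2)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    dx = np.abs(x[:, None] - x[None, :])    # ||x_i - x_j||
    dy = np.abs(y[:, None] - y[None, :])    # ||y_i - y_j||
    dz = np.maximum(dx, dy)                 # max-norm distance in Z
    np.fill_diagonal(dz, np.inf)            # a point is not its own neighbor
    total = 0.0
    for i in range(n):
        nearest = np.argsort(dz[i], kind="stable")[:k]
        if algorithm == 1:
            half_eps = dz[i, nearest[-1]]                   # eps(i)/2
            nx = np.count_nonzero(dx[i] < half_eps) - 1     # strict; drop j == i
            ny = np.count_nonzero(dy[i] < half_eps) - 1
            total += digamma_int(nx + 1) + digamma_int(ny + 1)
        else:
            half_eps_x = dx[i, nearest].max()               # eps_x(i)/2
            half_eps_y = dy[i, nearest].max()               # eps_y(i)/2
            nx = np.count_nonzero(dx[i] <= half_eps_x) - 1  # non-strict; drop j == i
            ny = np.count_nonzero(dy[i] <= half_eps_y) - 1
            total += digamma_int(nx) + digamma_int(ny)
    mi = digamma_int(k) - total / n + digamma_int(n)
    return mi - 1.0 / k if algorithm == 2 else mi

# The five points from the binned example.
x = [0, 0.5, 1, 3, 4]
y = [1, 5, 3, 4, 1]
print(round(ksg_mi(x, y, k=2, algorithm=1), 4))   # 0.2833
```

For the second algorithm the counts can depend on how ties among equally distant neighbors are broken, so small data sets with repeated distances may give tie-dependent values.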

Estimating Mutual information

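• For the same example, take k = 2.

• For the point p1(0, 1):

– D12 = max{0.5, 4} = 4, D13 = max{1, 2} = 2, D14 = max{3, 3} = 3, D15 = max{4, 0} = 4

– n_x(1) = 2, n_y(1) = 2

Here ε(1)/2 = 3 is the second-smallest distance, so n_x(1) counts the x_j with |x_j − 0| < 3 (namely 0.5 and 1) and n_y(1) counts the y_j with |y_j − 1| < 3 (namely 3 and 1).

• For the point p2(0.5, 5):

– D21 = max{0.5, 4} = 4, D23 = max{0.5, 2} = 2, D24 = max{2.5, 1} = 2.5, D25 = max{3.5, 4} = 4

– n_x(2) = 2, n_y(2) = 2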

Estimating Mutual information

• For the point p3(1, 3):

– D31 = max{1, 2} = 2, D32 = max{0.5, 2} = 2, D34 = max{2, 1} = 2, D35 = max{3, 2} = 3

– n_x(3) = 2, n_y(3) = 1

• For the point p4(3, 4):

– D41 = max{3, 3} = 3, D42 = max{2.5, 1} = 2.5, D43 = max{2, 1} = 2, D45 = max{1, 3} = 3

– n_x(4) = 2, n_y(4) = 2

Estimating Mutual information

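• For the point p5(4, 1):

– D51 = max{4, 0} = 4, D52 = max{3.5, 4} = 4, D53 = max{3, 2} = 3, D54 = max{1, 3} = 3

– n_x(5) = 1, n_y(5) = 2

• Then

$$
\begin{aligned}
\hat{I}^{(1)}(X, Y) &= \psi(k) - \frac{1}{N} \sum_{i=1}^{N} \big[\psi(n_x(i) + 1) + \psi(n_y(i) + 1)\big] + \psi(N) \\
&= \psi(2) - \frac{1}{5} \sum_{i=1}^{5} \big[\psi(n_x(i) + 1) + \psi(n_y(i) + 1)\big] + \psi(5) \\
&\approx 0.2833
\end{aligned}
$$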
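The arithmetic behind 0.2833 can be made explicit. For positive integers the digamma function is ψ(n) = −γ + Σ_{m=1}^{n−1} 1/m, where γ is the Euler–Mascheroni constant, so ψ(2) = 1 − γ, ψ(3) = 3/2 − γ and ψ(5) = 25/12 − γ. The pairs (n_x(i) + 1, n_y(i) + 1) are (3, 3), (3, 3), (3, 2), (3, 3), (2, 3), giving eight ψ(3) terms and two ψ(2) terms:

$$
\begin{aligned}
\hat{I}^{(1)}(X, Y) &= \psi(2) + \psi(5) - \frac{1}{5}\big[8\,\psi(3) + 2\,\psi(2)\big] \\
&= \frac{3}{5}\psi(2) + \psi(5) - \frac{8}{5}\psi(3) \\
&= \frac{3}{5}(1 - \gamma) + \left(\frac{25}{12} - \gamma\right) - \frac{8}{5}\left(\frac{3}{2} - \gamma\right) \\
&= \frac{3}{5} + \frac{25}{12} - \frac{12}{5} = \frac{17}{60} \approx 0.2833
\end{aligned}
$$

The γ terms cancel because their coefficients sum to −3/5 − 1 + 8/5 = 0.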

Estimating Mutual information

• Example

– a=rand(1,100)

– b=rand(1,100)

– c=a*2

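• Then

I(a, b) = 0.0427

I(a, c) = 2.7274

Since a and b are independent, I(a, b) is close to 0; since c is a deterministic function of a, I(a, c) is large.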

Estimating Mutual information

• Example

– a=rand(1,100)

– b=rand(1,100)

– d=2*a + 3*b

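• Then

I(a, d) = 0.2218

I(b, d) = 0.6384

I((a, b), d) = 1.4183

Since d depends on both a and b, the pair (a, b) carries much more information about d than either variable alone.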


Model

• Now we have a training data set that contains k records, and we need a model to make the prediction.

Instance-based learning

• The points that are close to the query have

large weights, and the points far from the

query have small weights.

• Locally weighted regression

• General Regression Neural Network (GRNN)
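A GRNN prediction is a Gaussian-kernel weighted average of the training targets (the Nadaraya–Watson form), which makes the "near points get large weights" idea concrete. A minimal sketch; the function name and the smoothing parameter `sigma` are illustrative choices.

```python
import numpy as np

def grnn_predict(X_train, y_train, query, sigma=1.0):
    """GRNN-style prediction: Gaussian-kernel weighted average of the targets.

    Training points close to the query get weights near 1; distant points get
    weights near 0. `sigma` controls how fast the weights decay with distance.
    """
    X_train = np.atleast_2d(np.asarray(X_train, dtype=float))
    y_train = np.asarray(y_train, dtype=float)
    sq_dist = np.sum((X_train - np.asarray(query, dtype=float)) ** 2, axis=1)
    weights = np.exp(-sq_dist / (2.0 * sigma ** 2))
    return float(weights @ y_train / weights.sum())

X_train = [[0.0], [1.0], [2.0]]
y_train = [0.0, 10.0, 20.0]
print(grnn_predict(X_train, y_train, [1.0]))               # about 10 (symmetric weights)
print(grnn_predict(X_train, y_train, [1.9], sigma=0.05))   # about 20 (nearest point dominates)
```

A small `sigma` makes the prediction approach the nearest training target; a large `sigma` pushes it toward the plain average of all targets.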

Property of the local frame


Weighted LS-SVM

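• The goal of the standard LS-SVM is to minimize the risk function

$$\min_{\omega, b, e} J(\omega, e) = \frac{1}{2}\omega^T \omega + \gamma\, \frac{1}{2} \sum_{j=1}^{k} e_j^2$$

subject to y_j = ω^T φ(x_j) + b + e_j for j = 1, 2, …, k, where φ(·) maps the inputs to a feature space and e_j is the error on the j-th training record.

• Here γ is the regularization parameter.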

Weighted LS-SVM

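• The modified risk function of the weighted LS-SVM is

$$\min_{\omega, b, e} J(\omega, e) = \frac{1}{2}\omega^T \omega + \frac{1}{2} \sum_{j=1}^{k} \gamma_j e_j^2$$

• And

$$\gamma_j = \gamma\, w_j, \qquad j = 1, 2, \ldots, k$$

so each squared error is penalized in proportion to the weight w_j of its training record.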
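A minimal sketch of fitting the weighted LS-SVM through its dual linear system. It assumes an RBF kernel (the slides do not name a kernel) and the standard LS-SVM dual, in which weighting only changes the diagonal term from 1/γ to 1/(γ w_j). All function names here are hypothetical.

```python
import numpy as np

def _rows(A):
    # Treat a 1-D array as samples with one feature each.
    A = np.asarray(A, dtype=float)
    return A[:, None] if A.ndim == 1 else A

def rbf_kernel(A, B, sigma):
    """K(a, b) = exp(-||a - b||^2 / (2 sigma^2)) for every row pair of A and B."""
    sq = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * sigma ** 2))

def weighted_lssvm_fit(X, y, w, gamma=10.0, sigma=1.0):
    """Solve [[0, 1^T], [1, Omega + diag(1 / (gamma * w))]] [b; alpha] = [0; y]."""
    X, y, w = _rows(X), np.asarray(y, dtype=float), np.asarray(w, dtype=float)
    k = len(y)
    A = np.zeros((k + 1, k + 1))
    A[0, 1:] = 1.0
    A[1:, 0] = 1.0
    A[1:, 1:] = rbf_kernel(X, X, sigma) + np.diag(1.0 / (gamma * w))  # gamma_j = gamma * w_j
    sol = np.linalg.solve(A, np.concatenate(([0.0], y)))
    return sol[0], sol[1:]                 # bias b, dual coefficients alpha

def weighted_lssvm_predict(X_train, b, alpha, X_new, sigma=1.0):
    """f(x) = sum_j alpha_j K(x, x_j) + b."""
    return rbf_kernel(_rows(X_new), _rows(X_train), sigma) @ alpha + b

# Hypothetical local training set of k = 5 records with one input feature.
X = np.arange(5.0)
y = np.sin(X)
b, alpha = weighted_lssvm_fit(X, y, w=np.ones(5), gamma=1e6)
fit_err = np.max(np.abs(weighted_lssvm_predict(X, b, alpha, X) - y))
print(fit_err)   # tiny: with a very large gamma the model nearly interpolates
```

Since the training error on record j equals alpha_j / (γ w_j), a small w_j lets the model tolerate a larger error on that record.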

Weighted LS-SVM

• The weights are designed as

$$w_{1j} = D_{Nj}$$

$$w_{2j} = \left| u_j - \operatorname{median}(U) \right|$$

$$w_j = \exp\left(-\frac{N(w_{1j}) + N(w_{2j})}{2}\right)$$
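The weight formula can be sketched as follows. The slides do not define N(·) or the values u_j here, so this sketch assumes N(·) is min-max scaling to [0, 1] and treats the distances D_Nj and the values u_j as given arrays; the helper names are hypothetical.

```python
import numpy as np

def minmax(v):
    # Assumed form of N(.): min-max scaling to [0, 1].
    v = np.asarray(v, dtype=float)
    span = v.max() - v.min()
    return np.zeros_like(v) if span == 0 else (v - v.min()) / span

def sample_weights(dist, u):
    """w_j = exp(-(N(w1_j) + N(w2_j)) / 2), with w1_j = D_Nj and w2_j = |u_j - median(U)|."""
    w1 = np.asarray(dist, dtype=float)
    u = np.asarray(u, dtype=float)
    w2 = np.abs(u - np.median(u))
    return np.exp(-(minmax(w1) + minmax(w2)) / 2.0)

# Hypothetical neighbor distances and u values for k = 3 records.
w = sample_weights(dist=[0.1, 0.5, 0.9], u=[2.0, 2.1, 5.0])
print(w)   # decreasing: the far, atypical third record gets weight exp(-1)
```

With min-max scaling every weight lies in [exp(−1), 1], so no training record is discarded entirely.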