pptx

advertisement

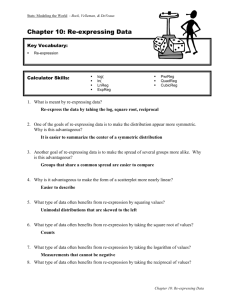

STA291 Statistical Methods, Lecture 28

Extrapolation and Prediction

Extrapolating: predicting a y-value by extending the regression model to regions outside the range of the x-values of the data.

Why is extrapolation dangerous? It introduces the questionable and untested assumption that the relationship between x and y does not change outside the range of the data.

Cautionary Example: Oil Prices in Constant Dollars

Model (fit to 1971-1982 data): Predicted Price = -0.85 + 7.39 Time

Model prediction (extrapolation): on average, the price of a barrel of oil will increase by $7.39 per year from 1983 to 1998.

Actual price behavior: extrapolating the 1971-1982 model to the '80s and '90s led to grossly erroneous forecasts.

Remember: linear models should not be trusted beyond the span of the x-values of the data. If you extrapolate far into the future, be prepared for the actual values to be (possibly quite) different from your predictions.

Unusual and Extraordinary Observations: Outliers, Leverage, and Influence

In regression, an outlier can stand out in two ways. It can have:
1) a large residual, or
2) an x-value far from the mean of the x-values, x̄. Such a point is called a "high-leverage point."

A high-leverage point is influential if omitting it gives a regression model with a very different slope.

For each of the following example plots, tell whether the marked point is a high-leverage point, whether it has a large residual, and whether it is influential.

Example 1: not high-leverage; large residual; not very influential.
Example 2: high-leverage; small residual; not very influential.

Example 3: high-leverage; medium (large?) residual; very influential (omitting the red point would change the slope dramatically).

What should you do with a high-leverage point? Sometimes these points are important: they can indicate that the underlying relationship is in fact nonlinear. Other times, they simply do not belong with the rest of the data and ought to be omitted. When in doubt, fit and report two models: one with the outlier and one without.

Example: Hard Drive Prices

Prices for external hard drives are linearly associated with Capacity (in GB). Without a 200 GB drive that sold for $299.00, the least squares regression line was found to be

Predicted Price = 18.64 + 0.104 Capacity

With the original data (including that drive), the regression equation is

Predicted Price = 66.57 + 0.088 Capacity

How are the two equations different? The intercepts are very different, but the slopes are similar.

Does the new point have a large residual? Explain. Yes. The drive's price does not fit the pattern: it pulled the line up substantially but did not decrease the slope very much.

Working with Summary Values

Scatterplots of summarized (averaged) data tend to show less variability than the unsummarized data.

Example: wind speeds at two locations, collected at 6 AM, noon, 6 PM, and midnight.
Raw data: R² = 0.736
Daily-averaged data: R² = 0.844
Monthly-averaged data: R² = 0.942

WARNING: Be suspicious of conclusions based on regressions of summary data. Regressions based on summary data may look better than they really are! In particular, the apparent strength of the correlation will be misleading, typically overstated.
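The extrapolation warning from the oil-price example can be built directly into prediction code. The sketch below is a minimal illustration, assuming a hypothetical helper `fit_line` (ordinary least squares in plain Python) and a hypothetical `predict` that refuses to predict outside the observed x-range unless explicitly told to. The data are made up for illustration; they are not the oil prices from the slides.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y on x; returns (intercept, slope)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def predict(model, x, x_range, allow_extrapolation=False):
    """Predict y at x; refuse to extrapolate outside x_range unless explicitly allowed."""
    intercept, slope = model
    lo, hi = x_range
    if not (lo <= x <= hi) and not allow_extrapolation:
        raise ValueError(f"x = {x} is outside the observed range [{lo}, {hi}]")
    return intercept + slope * x


# Hypothetical data, observed only for x between 1 and 5.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
model = fit_line(xs, ys)
x_range = (min(xs), max(xs))

print(predict(model, 3.5, x_range))   # inside the data range: interpolation
try:
    predict(model, 20, x_range)       # far outside the data range
except ValueError as err:
    print("refused:", err)
```

Making extrapolation an explicit opt-in (`allow_extrapolation=True`) does not make it safer; it only forces the analyst to notice that the prediction rests on the untested assumption that the relationship continues unchanged.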
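To make the leverage and influence examples concrete, the sketch below computes each point's leverage with the standard simple-regression formula h_i = 1/n + (x_i - x̄)² / Σ(x_j - x̄)², then refits the line without the highest-leverage point to see how much the slope moves. The data and the helper names (`fit_slope`, `leverages`) are hypothetical, chosen to mimic the third example: a point far from x̄ that also sits well off the pattern of the others.

```python
def fit_slope(xs, ys):
    """Least squares slope of y on x."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return sxy / sxx


def leverages(xs):
    """Leverage h_i = 1/n + (x_i - x_bar)^2 / Sxx for simple linear regression."""
    n = len(xs)
    x_bar = sum(xs) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return [1 / n + (x - x_bar) ** 2 / sxx for x in xs]


# Hypothetical data: five points exactly on y = 2x, plus one far-out point at x = 20.
xs = [1, 2, 3, 4, 5, 20]
ys = [2, 4, 6, 8, 10, 5]

h = leverages(xs)
far = h.index(max(h))   # index of the highest-leverage point

slope_all = fit_slope(xs, ys)
slope_without = fit_slope(xs[:far] + xs[far + 1:], ys[:far] + ys[far + 1:])

print(f"leverage of x = {xs[far]}: {h[far]:.3f}")
print(f"slope with the point:    {slope_all:.3f}")
print(f"slope without the point: {slope_without:.3f}")
```

Here the far-out point has leverage close to 1, and removing it changes the slope from nearly 0 to exactly 2, so it is both high-leverage and highly influential. In simple regression the leverages always sum to 2 (the number of fitted coefficients), which is a handy check on the formula.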
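The claim that the 200 GB drive has a large residual can be checked with the two equations from the hard drive example. Using the line fitted without the drive, the predicted price at 200 GB is 18.64 + 0.104(200) = $39.44, so the drive's residual relative to that line is 299.00 - 39.44 = $259.56. The short sketch below repeats that arithmetic, along with the prediction from the full-data line; the function name `predicted_price` is illustrative only.

```python
def predicted_price(intercept, slope, capacity_gb):
    """Predicted price from a fitted line: intercept + slope * capacity."""
    return intercept + slope * capacity_gb


actual = 299.00   # observed price of the 200 GB drive, in dollars
capacity = 200    # capacity in GB

pred_without = predicted_price(18.64, 0.104, capacity)   # line fitted without the drive
pred_with = predicted_price(66.57, 0.088, capacity)      # line fitted to all the data

print(f"without the drive: predicted ${pred_without:.2f}, residual ${actual - pred_without:.2f}")
print(f"with the drive:    predicted ${pred_with:.2f}, residual ${actual - pred_with:.2f}")
# prints:
# without the drive: predicted $39.44, residual $259.56
# with the drive:    predicted $84.17, residual $214.83
```

Even after the drive has pulled the line up, its residual under the full-data line ($214.83) is still more than twice the price that line predicts for it.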
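The wind-speed R² values cannot be reproduced without the original data, but the mechanism behind the summary-values warning is easy to demonstrate. The sketch below uses made-up, deterministic data: four raw readings per group (think of each group as one day) whose within-group noise sums to zero, so averaging removes it entirely and the group means fall exactly on a line. The helper `r_squared` (the squared Pearson correlation) is hypothetical, not from the lecture.

```python
def r_squared(xs, ys):
    """Squared Pearson correlation between xs and ys."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return sxy ** 2 / (sxx * syy)


# Hypothetical raw data: 5 groups, 4 readings per group.
# Within each group the noise terms sum to zero, so they vanish when averaged.
x_noise = [0.5, -0.5, 0.25, -0.25]
y_noise = [-1.0, 0.5, 1.0, -0.5]

raw_x, raw_y, mean_x, mean_y = [], [], [], []
for g in range(1, 6):
    gx = [g + d for d in x_noise]
    gy = [g + e for e in y_noise]
    raw_x += gx
    raw_y += gy
    mean_x.append(sum(gx) / len(gx))
    mean_y.append(sum(gy) / len(gy))

r2_raw = r_squared(raw_x, raw_y)
r2_avg = r_squared(mean_x, mean_y)
print(f"raw data R^2:      {r2_raw:.3f}")
print(f"averaged data R^2: {r2_avg:.3f}")
```

The averaged data look like a perfect linear relationship even though individual readings scatter considerably, which is exactly why regressions on summaries can overstate the strength of an association. Real data rarely cancel this neatly, but the direction of the effect is the same.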
Autocorrelation

Time-series data are sometimes autocorrelated, meaning that points near each other in time are related.
First-order autocorrelation: adjacent measurements are related.
Second-order autocorrelation: measurements two time steps apart (every other measurement) are related.
And so on.

Autocorrelation violates the independence condition. Regression analysis of autocorrelated data can produce misleading results.

Transforming (Re-expressing) Data: Linearity

Some data show departures from linearity. Example: auto weight vs. fuel efficiency. Here the linearity condition is not satisfied.

In cases involving upward bends in negatively correlated data, try analyzing -1/y (the negative reciprocal of y) vs. x instead. For the auto data, the linearity condition now appears satisfied.

The auto weight vs. fuel efficiency example illustrates the principle of transforming data. There is nothing sacred about the way x-values or y-values are measured. From the standpoint of measurement, all of the following may be equally reasonable:
x vs. y
x vs. -1/y
x² vs. y
x vs. log(y)

One or more of these transformations may be useful for making data more linear, more nearly normal, etc.

Transforming (Re-expressing) Data: Goals of Re-expression

Goal 1: Make the distribution of a variable more symmetric. Example: replace y vs. x with y vs. log x.

Goal 2: Make the spread of several groups more alike. Example: replace y vs. x with log y vs. x. We'll see methods later in the book that can be applied only to groups with a common standard deviation.

Looking back

Make sure the relationship is straight enough to fit a regression model.
Beware of extrapolating.
Treat unusual points honestly. You must not eliminate points simply to "get a good fit."
Watch out when dealing with data that are summaries.
Re-express your data when necessary.
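The effect of the negative reciprocal re-expression can be seen on a small made-up example. The sketch below assumes fuel efficiency that is exactly inversely proportional to weight (mpg = 100000 / weight), a simplification chosen so the transformed data are perfectly straight; these are not the auto data from the slides. It compares R² for y vs. x with R² for -1/y vs. x, using a hypothetical helper `r_squared`. The minus sign preserves the direction of the relationship: as weight rises, mpg falls, 1/mpg rises, and -1/mpg falls again.

```python
def r_squared(xs, ys):
    """Squared Pearson correlation between xs and ys."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return sxy ** 2 / (sxx * syy)


# Hypothetical cars: weight in pounds, fuel efficiency in mpg = 100000 / weight.
weights = [2000, 2500, 3000, 3500, 4000, 4500]
mpg = [100000 / w for w in weights]   # negatively correlated, bends upward
neg_recip = [-1 / y for y in mpg]     # the -1/y re-expression

r2_raw = r_squared(weights, mpg)
r2_t = r_squared(weights, neg_recip)
print(f"R^2, mpg vs. weight:    {r2_raw:.3f}")
print(f"R^2, -1/mpg vs. weight: {r2_t:.3f}")
```

With real cars the improvement will not be this clean, but checking a scatterplot and the residuals after re-expressing is how you judge whether the linearity condition now appears satisfied.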
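For Goal 1, one way to measure whether a re-expression made a variable more symmetric is the sample skewness; the sketch below uses the simple moment version, the mean cubed deviation divided by the cubed standard deviation. It applies log10 to a made-up, right-skewed variable whose values double at each step; after taking logs the values are evenly spaced, so the skewness drops to (essentially) zero. The data and the function name `skewness` are hypothetical.

```python
import math


def skewness(values):
    """Moment-based sample skewness: mean cubed deviation / SD**3."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


x = [1, 2, 4, 8, 16, 32, 64]         # hypothetical right-skewed values
log_x = [math.log10(v) for v in x]   # re-expressed as log x

print(f"skewness of x:     {skewness(x):.3f}")
print(f"skewness of log x: {skewness(log_x):.3f}")
```

Positive skewness indicates a long right tail; the log re-expression pulls in the large values much more than the small ones, which is why it is a common first choice for right-skewed variables.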
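For Goal 2, the sketch below uses three made-up groups whose spread grows with their level (each group is ten times the previous one). On the original scale their standard deviations differ by factors of ten, but after taking log10 of y the three standard deviations are the same, which is the kind of common spread those later methods require. `statistics.stdev` and `math.log10` are from Python's standard library; the group data are hypothetical.

```python
import math
import statistics

# Hypothetical groups whose spread is proportional to their level.
groups = {
    "A": [1, 2, 4],
    "B": [10, 20, 40],
    "C": [100, 200, 400],
}

for name, ys in groups.items():
    log_ys = [math.log10(y) for y in ys]   # the log y re-expression
    print(f"group {name}: SD of y = {statistics.stdev(ys):8.3f}, "
          f"SD of log y = {statistics.stdev(log_ys):.3f}")
```

Multiplying a group by 10 adds exactly 1 to every log10 value, which shifts the group without changing its spread; that is why the log works well when spread grows in proportion to the level.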