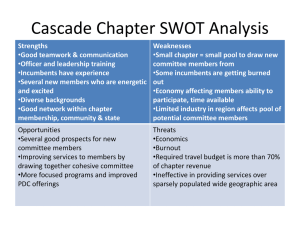

Resource Management in the Virtual World

Singapore, Q1 2013


Topic

How Resource Management works in vSphere 5

• Server Pool

• Storage Pool

• Network Pool

Architecting pools of resources in a large environment

• Server Pool

• Storage Pool

Monitoring pools of resources in a large environment

• Performance monitoring

• Compliance monitoring


Confidential

Resource Pool: CPU and RAM

The “Resource Pool” that most of us know.


Server Resource Pool: Quick Intro


Server Resource Pool

A cluster means you no longer need to think about individual ESXi hosts

• No longer need to map 1000 VMs to 100 ESX hosts

What it is

• A grouping of ESX CPU/RAM in a cluster, as if the hosts were 1 giant computer.

• They are not, obviously, as a VM can’t span 2 hosts at a given time.

• A few apps might be ESXi-aware and do their own coordination. An example is vFabric EM4J (Elastic Memory for Java), but that is a separate topic altogether.

• A logical grouping of CPU and RAM only

• No Disk and Network

• The cluster must be DRS-enabled to create resource pools

What it is not

• A way to organise VMs. Use folders for this.

• A way to segregate admin access for VMs. Use folders for this.

Example: a cluster has 8 ESX hosts, each with 2 cores at 3.0 GHz.

So the total is 48 GHz.

VI-3 Cluster

• [CPU] 8 * (3.0 GHz * 2)

• [RAM] 8 * 16,384 MB

Root Resource Pool

• [CPU] 49,152 MHz

• [RAM] 131,072 MB
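The root resource pool figures can be checked with a few lines of arithmetic. This is a minimal sketch: the function name is hypothetical, and the factor of 1024 MHz per GHz is an assumption, chosen only because it reproduces the 49,152 MHz shown on the slide.

```python
# Sketch of the root resource pool arithmetic from the example cluster.
# MHZ_PER_GHZ = 1024 is an assumption that matches the slide's 49,152 MHz.
MHZ_PER_GHZ = 1024


def cluster_capacity(hosts=8, cores_per_host=2, core_ghz=3.0, ram_per_host_mb=16_384):
    """Return (total GHz, total MHz, total RAM in MB) for the cluster."""
    cpu_ghz = hosts * cores_per_host * core_ghz
    cpu_mhz = int(cpu_ghz * MHZ_PER_GHZ)
    ram_mb = hosts * ram_per_host_mb
    return cpu_ghz, cpu_mhz, ram_mb


print(cluster_capacity())
```

With the defaults, the result is 48 GHz of CPU (49,152 MHz) and 131,072 MB of RAM, which is what the root resource pool exposes.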

Child Resource Pools

A slice of the parent RP

Child RP can exceed the capacity of the root resource pool

Used to allocate capacity to different consumers and to enable delegated administration

VI-3 Cluster: [CPU] 8 * (3.0 GHz * 2), [RAM] 8 * 16,384 MB

Root Resource Pool: [CPU] 49,152 MHz, [RAM] 131,072 MB

• RP1 – Limits: [CPU] 24,576 MHz, [RAM] 65,536 MB

  • RP1-1 – Limits: [CPU] 14,745 MHz, [RAM] 39,320 MB

  • RP1-2 – Limits: [CPU] 9,831 MHz, [RAM] 26,216 MB

• RP2 – Limits: [CPU] 8,096 MHz, [RAM] 24,576 MB

• RP3 – Limits: [CPU] 16,192 MHz, [RAM] 40,960 MB

RP Settings

Can control CPU and RAM only

• Disk is set at the per-VM level.

• Network is set at the per-vDS port group level.

Shares are mandatory

• Can’t be left blank

Shares are always relative

• Relative to other VMs in the same Resource Pool or Cluster

Reservation

• Impacts the cluster Slot Size. Use sparingly.

• Can’t overcommit. Notice the triangle

Take note of “MHz”

• Not aware of CPU generation

• A 2 GHz Xeon 5600 is considered the same speed as a 2 GHz Xeon 5100.

No such thing as “unlimited” in Limit

• A VM can’t go beyond its Configured value.

• A VM with 2 GB RAM won’t run as if it has 128 GB (assuming ESXi has 128 GB)

Configuration, Reservation, Limit

Configured

• The amount presented to the BIOS of the VM.

• Hence a VM will never exceed its configured amount, as it can’t see beyond it. ESX RAM is irrelevant.

• Example: a Windows VM is configured with 8 GB. Windows will start swapping to its own swap file on its NTFS drive if it reaches 8 GB.

Limit

• A virtual property. Does not exist in a physical server.

• Not visible to the VM.

• Can be used to force a VM to slow down. ESXi does not clock down the CPU. It just gives the VM fewer CPU cycles.

Reservation

• Defines the minimum amount of a resource that a consumer is guaranteed to receive, if asked for

• Reserved capacity that is not used is available to other consumers to use, but not to reserve

• If a consumer asks for reserved capacity that has been “loaned” to another consumer, it is reclaimed and given to satisfy the reservation

How the three settings divide a VM’s resources

• “Configured” = amount configured for the VM

• Above “Limit”: you will never gain access

• Between “Reservation” and “Limit”: if you ask for it, you get it if it’s available. It’s available for someone else’s reserved utilization (it can be “stolen” from you)

• Below “Reservation”: resource usage here is guaranteed. If you ask for it, you get it. If you don’t use it, it’s available for someone else’s unreserved utilization (it can be “loaned out”, but is reclaimed on request)
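The three zones described above can be sketched as a small function. This is an illustration only, not how ESXi implements it: the function name and the simplification that demand is a single number are assumptions, and it assumes the reservation is not larger than the limit.

```python
# Illustrative sketch only: splits a VM's demand into the zones from the slide.
# Assumes reservation <= limit; limit=None stands for "unlimited".
def classify_demand(demand, reservation, limit, configured):
    """Return (guaranteed, best_effort, unreachable) for a given demand."""
    # "Unlimited" still cannot exceed the configured size.
    effective_limit = configured if limit is None else min(limit, configured)
    # The guest cannot ask for more than it can see.
    visible = min(demand, configured)
    guaranteed = min(visible, reservation)
    best_effort = max(0, min(visible, effective_limit) - reservation)
    unreachable = max(0, visible - effective_limit)
    return guaranteed, best_effort, unreachable


# 8 GB VM, 2 GB reserved, 6 GB limit, guest demands 7 GB
print(classify_demand(demand=7, reservation=2, limit=6, configured=8))
```

In the sample call, 2 GB is guaranteed, 4 GB is granted only if available, and 1 GB sits above the Limit and is never granted.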

VM-level Reservation

CPU reservation:

• Guarantees a certain level of resources to a VM

• Influences admission control (PowerOn)

• CPU reservation isn’t as bad as often referenced:

• CPU reservation doesn’t claim the CPU when the VM is idle (it is refundable)

• CPU reservation caveats: CPU reservation does not always equal priority

  • If another VM is using the processors and the “Reserved VM” claims those CPUs, the reserved VM has to wait until the threads / tasks are finished

  • Active threads can’t be “de-scheduled”. If you do so = Blue Screen / Kernel Panic

Memory reservation

• Guarantees a certain level of resources to a VM

• Influences admission control (PowerOn)

• Memory reservation is as bad as often referenced. “Non-Refundable” once allocated.

• Windows zeroes out every bit of memory during startup…

Memory reservation caveats:

• Will drop the consolidation ratio

• May waste resources (idle memory can’t be reclaimed)

• Introduces higher complexity (capacity planning)

Resource Pool shares are not “cascaded” down to each VM.

The more VMs you put into a Resource Pool, the less each gets.

• The shares are not per VM. They are for the entire pool.

• The only way to give a VM a guarantee is to configure it per VM. This has admin overhead as it’s not easily visible.

[Figure: VM1 to VM6 distributed across resource pools (Pool 1, Pool 2).]

Resource Pool: A common mistake…

A sys admin creates 3 resource pools called Tier 1, Tier 2, Tier 3.

• They follow the relative High, Normal, Low shares.

• So Tier 1 gets 4x the shares of Tier 3.

Place 10 VMs on each Tier.

• 30 total in the cluster.

• Everything is fine for now.

• Tier 1 does get 4x the share.

Since Tier 1 performs better, place 10 more VMs on Tier 1.

• So Tier 1 now has 20 VMs

Result: Tier 1 performance drops.

• The 20 VMs are fighting over the same shares.

The above problem will only happen if there is contention. If the physical ESXi hosts have enough resources to satisfy all 40 VMs, then Shares do not kick in.
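The dilution can be worked through numerically. This is a minimal sketch under stated assumptions: the pools use a 4:2:1 High/Normal/Low ratio, every VM is contending, and VMs inside a pool split the pool's slice equally. The function name is hypothetical.

```python
from fractions import Fraction

# Assumption: High : Normal : Low = 4 : 2 : 1, as in "Tier 1 gets 4x Tier 3".
POOL_SHARES = {"Tier 1": 4, "Tier 2": 2, "Tier 3": 1}


def per_vm_fraction(vm_counts, pool_shares=POOL_SHARES):
    """Fraction of the cluster each VM gets under full contention."""
    total = sum(pool_shares.values())
    return {
        pool: Fraction(pool_shares[pool], total) / vm_counts[pool]
        for pool in pool_shares
    }


before = per_vm_fraction({"Tier 1": 10, "Tier 2": 10, "Tier 3": 10})
after = per_vm_fraction({"Tier 1": 20, "Tier 2": 10, "Tier 3": 10})

for pool in POOL_SHARES:
    print(pool, float(before[pool]), float(after[pool]))
```

Under these assumptions, each Tier 1 VM starts with 4x the slice of a Tier 3 VM. After 10 more VMs are added to Tier 1, each Tier 1 VM gets the same slice as a Tier 2 VM.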


Implication of a poorly designed resource pool

The cluster has 2 resource pools and a few VMs outside these 2 resource pools.

“Test 1” resource pool is given 4x the shares. But it has 8 VMs. So 26% / 8 = ~3% per VM.

Per VM settings

Screen is based on vSphere 5 and VM hardware version 8

Shares Value and Shares

The Shares setting can be “Normal” but the Shares value can differ from VM to VM.

Use a script to set all the values to an identical amount.
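One reason the value differs is that the named levels scale with VM size. The sketch below assumes the commonly documented vSphere defaults (CPU shares per vCPU, memory shares per MB of configured RAM). Verify the constants against your vSphere version. The function name is hypothetical and no vSphere API is called.

```python
# Assumed vSphere defaults; verify against your version's documentation.
CPU_SHARES_PER_VCPU = {"low": 500, "normal": 1000, "high": 2000}
MEM_SHARES_PER_MB = {"low": 5, "normal": 10, "high": 20}


def default_shares(level, vcpus, memory_mb):
    """Return (cpu_shares, memory_shares) implied by a named share level."""
    return CPU_SHARES_PER_VCPU[level] * vcpus, MEM_SHARES_PER_MB[level] * memory_mb


# Two VMs, both "Normal", end up with different share values.
print(default_shares("normal", vcpus=1, memory_mb=2048))
print(default_shares("normal", vcpus=4, memory_mb=8192))
```

A 4 vCPU VM at “Normal” therefore carries 4x the CPU shares of a 1 vCPU VM at “Normal”, which is why identical custom values may be preferable.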


Example

3 VMs run on one ESXi hypervisor with 6 GB pRAM.

| Setting | VM 1 | VM 2 | VM 3 |
|---|---|---|---|
| Memory size | 4 GB | 4 GB | 2 GB |
| Reservation | 0 | 0 | 2 GB |
| Limit | unlimited | unlimited | unlimited |
| Shares | 3000 | 1000 | 1000 |
| Idle memory | 0 | 0 | 0 |
| Entitlement | 3 GB | 1 GB | 2 GB |

Total for 3 VMs = 10 GB.

But ESX only has 6 GB.

VM 3 will get 2 GB, as it has a reservation.

ESX has 4 GB left.

VM 3 is already at its configured 2 GB, so only VM 1 and VM 2 compete for the rest (4000 shares in total).

VM 1 will get 3000/4000 shares, which is 3/4 * 4 GB = 3 GB

VM 2 will get 1000/4000, which is 1/4 * 4 GB = 1 GB.

VM 2 performance drops.

VM 3 performance is not affected at all
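The same calculation can be sketched in code. This is a simplified model for illustration, not the ESXi scheduler: it honours reservations first, then splits what is left by shares among VMs still below their configured size. The function name and data layout are assumptions.

```python
# Simplified model: reservations first, then share-proportional split,
# capped at each VM's configured size. Not the actual ESXi algorithm.
def memory_entitlements(vms, host_gb):
    """vms maps name -> (size_gb, reservation_gb, shares)."""
    alloc = {name: float(res) for name, (_size, res, _shares) in vms.items()}
    remaining = host_gb - sum(alloc.values())
    # Only VMs still below their configured size compete for the rest.
    active = {name for name, (size, _r, _s) in vms.items() if alloc[name] < size}
    while remaining > 0 and active:
        total_shares = sum(vms[name][2] for name in active)
        offers = {name: remaining * vms[name][2] / total_shares for name in active}
        full = [name for name in active if offers[name] >= vms[name][0] - alloc[name]]
        if not full:
            for name in active:
                alloc[name] += offers[name]
            break
        # VMs that would exceed their configured size are capped, then we re-split.
        for name in full:
            room = vms[name][0] - alloc[name]
            alloc[name] += room
            remaining -= room
            active.remove(name)
    return alloc


vms = {"VM 1": (4, 0, 3000), "VM 2": (4, 0, 1000), "VM 3": (2, 2, 1000)}
print(memory_entitlements(vms, host_gb=6))
```

With the values from the example, the sketch gives VM 1 3 GB, VM 2 1 GB and VM 3 2 GB, matching the entitlements on the slide.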


Resource Pool: Best Practices

For a Tier 1 cluster, where all the VMs are critical to the business

• Architect for Availability first, Performance second.

• Translation: Do not over-commit.

• So resource pools, reservations, etc. are immaterial as there is enough for everyone.

• But size each VM accordingly. Do not oversize, as an oversized VM might slow down.

For a Tier 3 cluster, use carefully, or don’t use at all.

• Tier 3 = overcommit.

• So use Reservation sparingly, even at VM level.

• A reservation guarantees resources, so it impacts the cluster slot size.

• Naturally, you can’t boot additional VMs if your guarantee is fully used

• Take note of the extra complexity in performance troubleshooting.

• Use as a mechanism to reserve at “group of VMs” level.

• If Department A pays for half the cluster, then creating an RP with 50% of the cluster resources will guarantee them the resources in the event of contention. They can then put in as many VMs as they need.

• But as a result, you cannot overcommit at cluster level, as you have guaranteed at RP level.

Do not configure high CPU or RAM, then use Limit

• E.g. configure with 4 vCPU, then use Limit to make it “2” vCPU

• It can result in unpredictable performance as the Guest OS does not know.

• High CPU or high RAM has higher overhead.

• Limit is used when you need to force a VM to slow down. Using Shares won’t achieve the same result

Don’t put a VM and an RP as “siblings” at the same level

Resource Pool: Disk and Network

The “Resource Pool” that most of us don’t give enough attention.


Disk is set at individual VM, not Resource Pool

Default Shares Value is 1000.

This is at Datastore level, which may span across clusters.

You can set Limit, but not Reservation.

An NFS Datastore can even span across vCenters (use case: read-only templates and ISO images)

Reviewing Disk Resource Pool

Shares are at Datastore level. Just like the “Server” Resource Pool, the more VMs you put in, the less each VM gets.

You can view at Cluster level (which gives a view across datastores from this single cluster). This does not tell the whole picture, as the datastores may span across clusters.

You cannot view at individual ESXi level if the host is part of a cluster.

Viewing at Datastore level

Do not span a datastore across “data centers”, as you can only see 1 data center at a time.

Pre-requisite: Storage IO Control

As a Datastore is just a logical construct, it has no physical limit by itself. The limit is on the underlying LUN or path. To enable sharing, enable Storage I/O Control.

Enabling Storage I/O Control

Not enabled by default


Storage DRS

Finally, a “cluster” for storage

• Differences

  • VM disks won’t move to another datastore in the event of datastore or LUN failure

  • Has a concept of storage tiering.

• Similarities

  • No need to specify an individual datastore

  • Affinity and Anti-Affinity rules

  • Load balancing among datastores, although over hours/days and not 5 minutes.

New feature in vSphere 5

Network Resource Pool

[Figure: Network resource pools on a vSphere Distributed Switch. Server Admin traffic (vMotion, Mgmt, FT, NFS, iSCSI, VR) and Tenant 1 / Tenant 2 VM traffic enter through vSphere Distributed Portgroups. The teaming policy is Load Based Teaming. The shaper enforces Limits per team. The scheduler enforces Shares per uplink.]

Shares per traffic type in the figure (original table columns: Traffic, Shares, Limit (Mbps), 802.1p):

• vMotion: 5 (Limit 150 Mbps, 802.1p tag 1)

• Mgmt: 30

• NFS: 10

• iSCSI: 10

• FT: 60

• VR: 10

• VM: 20, split into Tenant 1: 5 and Tenant 2: 15


Network Resource Pool

New feature in vSphere 5.

Can set Shares and Limit, but not Reservation.

Unlike CPU/RAM, there is no reservation for Disk and Network

• Network and Disk are not completely controlled by ESX.

• The array serves multiple ESX hosts or clusters, and even non-ESX hosts.

• The network has switches, routers, firewalls, etc., which will impact performance.
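Because there is no reservation, what a traffic type gets under contention depends on which other types are active on the same uplink. The sketch below uses the share values from the Network Resource Pool figure. The 10 Gbps uplink, the function name, and the choice to ignore Limits are assumptions made for illustration.

```python
# Share values taken from the Network Resource Pool figure in this deck.
SHARES = {"vMotion": 5, "Mgmt": 30, "NFS": 10, "iSCSI": 10, "FT": 60, "VR": 10, "VM": 20}


def uplink_split(active, uplink_mbps=10_000, shares=SHARES):
    """Split one uplink among the traffic types that are currently active.

    Assumptions: a 10 Gbps uplink, every active type wants more than it
    gets, and Limits are not modelled.
    """
    total = sum(shares[t] for t in active)
    return {t: uplink_mbps * shares[t] / total for t in active}


print(uplink_split(["vMotion", "VM"]))
print(uplink_split(list(SHARES)))
```

With only vMotion and VM traffic contending, the split is 2,000 Mbps and 8,000 Mbps. With all seven types active, VM traffic drops to 20/145 of the uplink.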


Sample Architecture

This shows an example of a Cloud for ~2000 VMs. It also uses Active/Passive data centers.

[Figure: sample architecture of the Primary Data Center (Active). Labels in the diagram: vCenter 1, vCenter 2, vCenter 3 (with Linked Mode, with SRM integration, standalone); Confidential Cluster, Tier 1 Clusters, Tier 2 Clusters, Tier 3 Clusters, Special Clusters, IT Cluster (8 ESXi), Desktop Cluster 1 to Desktop Cluster N; SAN Fabric, NFS LAN, FC Storage, NFS Storage, Tier 1 / Tier 2 / Tier 3 Storage, tape backup; Confidential VM. Management VMs for Desktops reside in the IT Cluster.]

The need for IT Cluster

Special purpose cluster (Large Cloud)

• Runs all the IT VMs used to manage the virtual DC or provide core services

• The Central Management will reside here too

• Separated for ease of management & security

This separation keeps the Business Cluster clean, “strictly for business”.

VMs in the IT Cluster, by category:

• VMware: vCenter (for Server Cloud), vCenter Heartbeat, vCenter Update Manager, Symantec AppHA Server, vCloud Director

• Storage: Storage Mgmt tool (may need physical RDM to get fabric info)

• Network: Network Management Tool, Nexus 1000V Manager (VSM)

• Core Infra: MS AD 1, MS AD 2, Syslog server, File Server (FTP Server)

• Advanced vDC Services: Site Recovery Manager + DB, Chargeback + DB, Agentless AV, Object-based Firewall

• Security: Security Management Server, vShield Manager

• Admin: Admin client (1 per Sys Admin), VMware Converter, vMA, vCenter Orchestrator

• Application Mgmt: App Dependency Manager

• Management: vCenter Ops + DB, Help Desk

• Desktop: View Managers + DB, ThinApp Update Server, vCenter (for Desktop Cloud)

3 Tier Server resource pool

Create 3 clusters

• The hosts can be identical.

| Tier | # Host | Node Spec? | Failure Tolerance | MSCS | # VM | Monitoring | Remarks |
|---|---|---|---|---|---|---|---|
| Tier 1 | 5 (always) | Always identical | 2 hosts | Yes | Max 18 per cluster | Application level. Extensive alerts. | Only for critical apps. No resource overcommit. |
| Tier 2 | 4–8 (likely 8) | 2 variations | 1 host | Limited | Max 70 VM. 10 per (N-1) | | App can be vMotioned to Tier 1 during critical run |
| Tier 3 | 6–8 (likely 8) | 3 variations | 1 host | No | Max 105 VM. 15 per (N-1) | Infrastructure level. Minimal alerts. | Resource overcommit |

Each project then “leases” vCPU and GB

• Not GHz, as speed may vary.

• Not using Resource Pool, as we can’t control the # of VMs in the pool
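The “Max VM” figures in the tier table follow from the host count, the failure tolerance and a per-host density. A minimal sketch with a hypothetical function name follows. The Tier 2 and Tier 3 densities (10 and 15 per host) come from the table. The Tier 1 density of 6 per host is inferred from 18 VMs on 5 hosts with 2 hosts of tolerance, and is not stated in the source.

```python
def max_vms(hosts, failure_tolerance, vms_per_host):
    """VM ceiling for a cluster sized to survive `failure_tolerance` host failures."""
    usable_hosts = hosts - failure_tolerance
    if usable_hosts <= 0:
        raise ValueError("failure tolerance must be smaller than the host count")
    return usable_hosts * vms_per_host


print(max_vms(hosts=5, failure_tolerance=2, vms_per_host=6))   # Tier 1 (density inferred)
print(max_vms(hosts=8, failure_tolerance=1, vms_per_host=10))  # Tier 2
print(max_vms(hosts=8, failure_tolerance=1, vms_per_host=15))  # Tier 3
```

Sizing by “per (N-1)” means the ceiling already assumes one host is lost, so the cluster can absorb a host failure without exceeding the target density.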

3 Tier pools of storage

Create 3 Tiers of Storage.

• This becomes the type of Storage Pool provided to VMs

• Paves the way for standardisation

• Choose 1 size for each Tier. Keep it consistent.

• Keep 20% free capacity for VM swap files, snapshots, logs, thin volume growth, and Storage vMotion (inter-tier).

• Use Thin Provisioning at array level, not ESX level.

• Separate Production and Non-Production

• A VMDK larger than 1 TB will be provisioned as an RDM, in virtual-compatibility mode.

Example

| Tier | Interface | IOPS | Latency | RAID | RPO | RTO | Size | Limit | Snapshot | # VM |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | FC | >4000 | 10 ms | 10 | 1 hour | 1 hour | 1 TB | 70% | Yes | ~10 VM. EagerZeroedThick |
| 2 | FC | >2000 | 15 ms | 5 | 4 hour | 4 hour | 2 TB | 80% | No | ~20 VM. Normal Thick |
| 3 | iSCSI | >1000 | 20 ms | 5 | 8 hour | 8 hour | 3 TB | 80% | No | ~30 VM. Normal Thick |

Mapping: Cluster - Datastore

Always know which cluster mounts which datastores

• Keep the diagram simple, without too much info. The idea is to have a mental picture that you can remember.

• If your diagram has too many lines, too many datastores, or too many clusters, then it may be too complex. Create a Pod when that happens. Modularisation can be good.

Performance counters: CPU

The same counters are shown for other periods, because there are no real-time counters.

It does not make sense to see real time.

Performance counters: RAM

Counters not shown: Memory Capacity Usage

Memory: Consumed vs Active

Consumed = how much physical RAM a VM has allocated to it (mapped to pRAM)

• It does not mean the VM is actively using it. It can be idle pages.

Two types of memory overcommitment

• “Configured” memory overcommitment

  • (Sum of VMs’ configured memory size) / host’s mem.capacity.usable*

  • This is what is usually meant by “memory overcommitment”

• “Active” memory overcommitment

  • (Sum of VMs’ mem.capacity.usage*) / host’s mem.capacity.usable*

Impact of overcommitment

• “Configured” memory overcommitment > 1

  • zero to negligible VM performance degradation

• “Active” memory overcommitment ≈ 1

  • very high likelihood of VM performance degradation!

*Only available in vSphere 5.0. But net effect is the same.
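The two ratios can be computed side by side. This is a minimal sketch. The function name and the sample numbers are hypothetical, and the inputs stand in for the configured sizes, the mem.capacity.usage values and the host's mem.capacity.usable value.

```python
def overcommit_ratios(configured_gb, active_gb, host_usable_gb):
    """Return (configured overcommitment, active overcommitment) for one host."""
    configured_ratio = sum(configured_gb) / host_usable_gb
    active_ratio = sum(active_gb) / host_usable_gb
    return configured_ratio, active_ratio


# Hypothetical host: 8 GB usable, three VMs configured with 8, 4 and 4 GB,
# actively using 2, 1 and 1 GB.
configured, active = overcommit_ratios([8, 4, 4], [2, 1, 1], host_usable_gb=8)
print(configured, active)
```

In this made-up case the host is 2x overcommitted on configured memory, yet active memory is only half of usable RAM, so little or no degradation is expected.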


Configured Memory Overcommitment

[Figure: VM 1, VM 2 and VM 3 on the hypervisor, each with active, idle and free memory. The consumed portion is in physical RAM. Parts of idle and free memory are not in physical RAM due to reclamation.]

All VMs’ active memory stays resident in physical RAM, allowing for maximum VM performance

Entitlement >= demand for all VMs [good]

Active Memory Overcommitment

[Figure: VM 1, VM 2 and VM 3 on the hypervisor, showing only active memory. No idle and free memory is in physical RAM.]

Some VM active memory is not in physical RAM, which will lead to VM performance degradation!

Entitlement < demand for one or more VMs [bad]

Example

Notice that Active is lower than Consumed and Limit.

• VM was doing fine.

[Figure: chart of the Active, Limit and Consumed counters, with the callout “VM is fighting with ESX for memory”.]

vSphere and RAM

Below is a typical picture.

Most VMware admins will conclude that ESX is running out of RAM.

• Time to buy new RAM

• This is misleading. It is showing the memory.consumed counter, not the memory.active counter.

vCenter Operations and RAM

Same ESX. vCenter Ops shows 26%.

vCenter Ops is showing the right data

Performance Monitoring


Global view


Thank You

And have fun in the pool!


© 2009 VMware Inc. All rights reserved