Safety Management Systems & Reliability

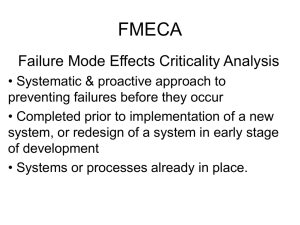


Chris W. Hayes, MD
CPSOC
April 12, 2011

Overview
How safe is healthcare?
What is a Safety Management System?
System defences
“Swiss Cheese” model
Reliability
– Group exercise
Summary

How Safe is Healthcare?
Canadian Adverse Events Study
– 7.5% of admissions suffer an adverse event (AE)
– 9250 to 23750 preventable deaths/yr
– Death from AE in 1/165 admissions
Baker R. The Canadian Adverse Events Study. CMAJ 2004.

How Safe is Healthcare?
[Figure; label: Health Care]

Why Is This So?
“Medicine used to be simple, ineffective and relatively safe. Now it is complex, effective and potentially dangerous.”
Sir Cyril Chantler, Chairman, King’s Fund

Why Is This So?
Clinical medicine has become extremely complex:
– Increased patient volume, acuity
– Growing therapeutic options
– Expanding knowledge, evidence
– Surprises, uncertainty
– Many sources of (incomplete) information
– Interruptions and multitasking

Why Is This So?
AND…
Safe and quality outcomes are (for the most part) dependent on healthcare providers [humans]
Is that a problem?

Why Is This So?
Strengths
– Large memory capacity
– Large repertory of responses
– Flexibility in applying responses to information
– Ability to react creatively to the unexpected
– Compassionate / caring
Limitations
– Difficulty in multitasking
– Difficulty in recalling detailed information quickly
– Poor computational ability
– Limited short term memory
– Perception
Gets worse with:
– fatigue
– stress
– lack of knowledge
– lack of confidence
– lack of supportive work environment

Where Should We Be?
[Figure; labels: Health Care, Blood Transfusion, Anesthesia]

How Do We Get There?
Healthcare needs to become more like an ultra-safe industry
– Learn from other ultra-safe industries
– Learn from components of medicine that have achieved a high degree of safety
– Develop a strong Culture of Safety

Safety Management Systems?
Safety Management System, SMS
– Taken from ultra-safe, high-reliability organizations (HROs)
– An organizational approach to safety
– Focuses on the system, not the person
A systematic, explicit and comprehensive process for managing safety risks

Safety Management Systems?
SMS origins from aviation industry
– In response to major airline disasters in the 1960s
– Initial focus on “safety system”
  • Made department / individuals responsible for safety
– Realization that achieving full-scale safety goals needs a whole-organization approach

Safety Management Systems?
Main objectives:
– Detecting and understanding the hazards and risks in your environment
– Proactively making changes to minimize risks
– Learning from errors that occur in order to prevent their recurrence

Safety Management Systems?
With the understanding that:
– Safety is everyone’s job
– Embedded at all levels
– Humans are fallible
– System defences need to be designed / redesigned to protect patients
[Callout: Culture of Safety]

System Defences
Redundancy and Diversity
– Need for multiple layers
– Need for multiple approaches
2 Types of defences
– Hard defences: engineered features, forcing functions, constraints
– Soft defences: rules, policies, double-checks, signoffs, auditing, reminders

System Defences
Hazardous domains (nuclear power)
– activities are stable and predictable
– heavy reliance on engineered safety features
Healthcare defences
– most of the defences are human skills
– sharp-enders (nurses, junior MDs) are the ‘glue’ that holds these defences together

System Defences
Disaster happens when:
– There are initiating disturbances, AND
– The defences fail to detect and/or protect
– It is often necessary for several defences to fail at the same time
Incidence of error (losses) depends on:
– The frequency of initiating disturbance (hazards)
– The reliability of the system defences

Reason’s “Swiss Cheese” Model
Defences are only as strong as their weakest link!
[Figure: A System Model of Accident Causation. Labels: Hazards; Losses; Some holes due to active failures; Other holes due to latent conditions]

An Example
SMH ICU
– Patient with CVA has seizure in ICU
– MD orders 1g Dilantin over 20 minutes
– MD called to reassess patient for severe hypertension and ST changes
– Metoprolol given with bradycardia but little BP effect
– Pt suffers large MI and CHF

An Example
Reason’s “Swiss Cheese” Model
[Figure labels: Hazards: Sound-alike look-alike drug; Manufacturer; Purchasing; Medication organization; RN/MD Double-check; Losses: CHF/MI]

Making Your System Safer
Accept that errors will be made
Incorporate features of Ultra-safe SMS
– Actively seek hazards (FMEA, Walk-Rounds) and learn from errors that have occurred (RCA)
– Create multiple defence layers to prevent error (hard and soft as appropriate)
– Make safety everyone’s job

Making Your System Safer
“We cannot change the human condition
But… we can change the conditions under which humans work”
James Reason

Making Healthcare Reliable
How do you close the holes in the Swiss Cheese?
– Design strong defences
  • Engineer problem away
– Include human factors design (later)
– Build in reliable processes

Reliability
Measured as the inverse of the system’s failure rate
Failure free operation over time
– Chaotic: failure in greater than 20% of events
– 10^-1: 1 or 2 failures out of 10
– 10^-2: <5 failures per 100
– 10^-3: <5 failures per 1000
– 10^-4: <5 failures per 10000

Reliability
Reliability principles, used to design systems that compensate for the limits of human ability, can improve safety and the rate at which a system consistently produces desired outcomes.

Reliability
Three-step model for applying principles of reliability to health care systems:
1. Prevent failure
2. Identify and mitigate failure
3. Redesign the process based on the critical failures identified

Table Exercises – The case
As your organization’s PSO you are made aware of several patients who received cardiopulmonary resuscitation following Code Blue calls despite known advance directives stating the patients’ wishes were to be DNR
In both cases the DNR order was in the chart but was not easily located, nor were the assigned nurses aware of the order
You were aware that a No Resuscitation Policy that contained a standardized order form was created, approved by senior management and MAC, and was available for use
[Figure labels: POLICY, PRACTICE]

Group Exercise
Identify a process to make more reliable
Describe the current process (flow chart)
Identify where the defects occur in the current system
Set a reliability goal for the segment

Roll Out - The Usual Way
[Figure labels: BOARDROOM; REAL WORLD; IDEA; Initial Plan; Discuss & Revise (three times)]

Roll Out - The Better Way
[Figure labels: BOARDROOM; REAL WORLD; IDEA; Initial Plan]

Applying Reliability
Understand the process
Find the defects, bottlenecks and workarounds
Plan process improvements
Test them… small scale, front-line involvement… until they work
Look for failures and… redesign

Summary
Healthcare has a high error rate
Understanding hazards and learning from errors is vital
Defences that rely on more than human vigilance need to be in place
Need a strong culture of safety
Need to build reliable processes
Start small… involve frontline
Safety improvement is everyone’s job

Thank You!
Questions?
chayes@cpsi-icsp.ca
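
Worked illustration (not part of the original slides)
The deck states that the incidence of losses depends on the frequency of hazards and the reliability of the defences, and it lists reliability levels from "chaotic" to 10^-4. A minimal Python sketch of both ideas follows. The function names are hypothetical, the multiplication of layer failure probabilities assumes the defence layers fail independently (a simplification the slides do not state), and the handling of failure rates between 5% and 10% is an assumption, because the deck's levels leave that range undefined.

```python
import math

# Hypothetical helpers for illustration only; the slides give no formulas or code.


def expected_losses(hazards_per_year, layer_failure_probs):
    """Expected losses per year when harm requires every defence layer to fail.

    Assumption: layers fail independently, so the chance that a hazard
    passes through all of them is the product of the per-layer probabilities.
    """
    return hazards_per_year * math.prod(layer_failure_probs)


def reliability_level(failures, opportunities):
    """Map an observed failure rate onto the reliability levels listed in the deck."""
    rate = failures / opportunities
    if rate > 0.20:
        return "chaotic"   # deck: failure in greater than 20% of events
    if rate >= 0.05:
        return "10^-1"     # deck: 1 or 2 failures out of 10 (5-10% placed here by assumption)
    if rate >= 0.005:
        return "10^-2"     # deck: <5 failures per 100
    if rate >= 0.0005:
        return "10^-3"     # deck: <5 failures per 1000
    return "10^-4"         # deck: <5 failures per 10000
```

For example, reliability_level(3, 100) returns "10^-2". Under the independence assumption, adding one more layer that fails 1 time in 10 cuts expected_losses tenfold. Latent conditions can make several layers fail together, so real systems may do worse than this estimate.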