Research evaluation at CWTS


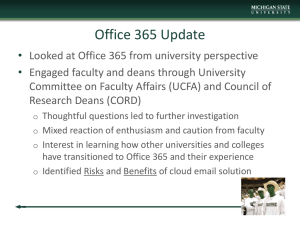

Research evaluation at CWTS: Meaningful metrics, evaluation in context
Ed Noyons, Centre for Science and Technology Studies, Leiden University
RAS Moscow, 10 October 2013

Outline
• Centre for Science and Technology Studies (CWTS, Leiden University): history in short;
• CWTS research programme;
• Recent advances.

25 years CWTS: history in short

25 years CWTS history in short (1985-2010)
• Started around 1985 by Anthony van Raan and Henk Moed; one and a half positions funded by the university;
• Context is science policy and research management;
• Mainly contract research and services (research evaluation);
• Staff stable at around 15 people (10 researchers);
• Main focus on publication and citation data (in particular Web of Science).

25 years CWTS history in short (2010 - …)
• Block funding since 2008;
• Since 2010, moving from mainly services with some research to:
  – A research institute with services;
  – New director Paul Wouters;
• New recruitments: now ~35 people.

Research and services: CWTS research programme

Bibliometrics (in the context of science policy) is ...
Opportunities
• Research accountability => evaluation
• Need for standardization, objectivity
• More data available
Vision
• Quantitative analyses
• Beyond the 'lamppost'
  – Other data
  – Other outputs
• Research 360º
  – Input
  – Societal impact/quality
  – Researchers themselves

Background of the CWTS research programme
• Already existing questions
• New questions:
  1. How do scientific and scholarly practices interact with the "social technology" of research evaluation and monitoring knowledge systems?
  2. What are the characteristics, possibilities and limitations of advanced metrics and indicators of science, technology and innovation?
Current CWTS research organization
• Chairs
  – Scientometrics
  – Science policy
  – Science, technology & innovation
• Working groups
  – Advanced bibliometrics
  – Evaluation Practices in Context (EPIC)
  – Social sciences & humanities
  – Society Using Research Evaluation (SURE)
  – Career studies

A look under the lamp post: back to bibliometrics

Recent advances at CWTS
• Platform: Leiden Ranking
• Indicators: new normalization to address:
  1. Multidisciplinary journals
  2. (Journal-based) classification
• Structuring and mapping
  – Advanced network analyses
  – Publication-based classification
  – Visualization: VOSviewer

The Leiden Ranking

Platform: Leiden Ranking (http://www.leidenranking.com)
• Based on Web of Science (2008-2011);
• Only universities (~500);
• Only dimension is scientific research;
• Indicators (state of the art):
  – Production
  – Impact (normalized and 'absolute')
  – Collaboration.

Leiden Ranking: world top 3 (PPtop10%)
• PPtop10%: normalized impact
• Stability: intervals to enhance certainty

Russian universities (impact)
Russian universities (collaboration)

Dealing with field differences: impact normalization (MNCS)

Background and approach
• Impact is measured by the number of citations received;
• Self-citations are excluded;
• Fields differ in their citing behavior;
• One citation in one field is worth more than one citation in another;
• Normalization
  – By journal category
  – By citing context.

Issues related to the journal category-based approach
• Scope of category;
• Scope of journal.

Journal classification 'challenge' (scope of category), e.g. cardio research

Approach: source-normalized MNCS
• Source normalization (a.k.a. citing-side normalization):
  – No field classification system;
  – Citations are weighted differently depending on the number of references in the citing publication;
  – Hence, each publication has its own environment to be normalized by.
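The journal category-based normalization summarized above (citations excluding self-citations, compared with the average of the publication's category) can be illustrated with a minimal Python sketch. It assumes each publication belongs to exactly one journal subject category and that the expected value is the mean citation count of all publications in the same category and year; the `Publication` record and function names are hypothetical, and details a production indicator would likely need (document types, publications in several categories) are left out.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Publication:
    # Hypothetical record layout: one journal subject category per publication.
    pub_id: str
    category: str
    year: int
    citations: int  # citations received, self-citations already excluded


def expected_citations(world):
    """Mean citation count per (category, year) over the whole reference set."""
    groups = defaultdict(list)
    for p in world:
        groups[(p.category, p.year)].append(p.citations)
    return {key: mean(vals) for key, vals in groups.items()}


def mncs(unit, world):
    """Mean normalized citation score of a unit's publications (journal-category based)."""
    expected = expected_citations(world)
    scores = []
    for p in unit:
        e = expected[(p.category, p.year)]
        if e > 0:  # skip groups where nothing is cited; the ratio is undefined there
            scores.append(p.citations / e)
    return mean(scores) if scores else float("nan")
```

With two Cardiology papers cited 10 and 2 times and two Mathematics papers cited 2 and 0 times, the expected values are 6 and 1. A unit holding the 10-citation cardiology paper and the 2-citation mathematics paper gets normalized scores of about 1.67 and 2.0, so an MNCS of about 1.83: the less-cited mathematics paper scores higher because its field cites less.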
Source-normalized MNCS (cont'd)
• Normalization based on citing context;
• Normalization at the level of individual papers (e.g., X):
  – Average number of references in the papers citing X;
• Only active references are considered:
  – References to the period between the publication of X and its being cited;
  – References covered by WoS.

Collaboration, connectedness, similarity, ...: networks and visualization

VOSviewer: collaboration of Lomonosov Moscow State University (MSU)
• WoS (1993-2012)
• Top 50 most collaborative partners
• Co-published papers

Other networks
• Structure of science output (maps of science);
• Oeuvres of actors;
• Similarity of actors (benchmarks based on profile);
• …

Structure of science independent from journal classification: publication-based classification

Publication-based classification (WoS 1993-2012)
• Publication-based clustering (each publication in one cluster);
• Independent from journals;
• Clusters based on citing relations between publications;
• Three levels:
  – Top (21)
  – Intermediate (~800)
  – Bottom (~22,000)
• Challenges:
  – Labeling
  – Dynamics.

Map of all sciences (784 fields, WoS 1993-2012)
[Figure: each circle represents a cluster of publications; circle surface represents volume; distance represents relatedness (citation traffic); colors indicate clusters of fields (disciplines): social and health sciences; cognitive sciences; biomedical sciences; earth, environmental and agricultural sciences; mathematics and computer sciences; physical sciences.]

Positioning of an actor in the map
• Overall activity (world and, e.g., Lomonosov Moscow State University, MSU)
  – Proportion of MSU output relative to world output;
• Activity per 'field' (world and MSU)
  – Proportion of MSU in the field;
• Relative activity of MSU per 'field' (proportion in the field divided by the overall proportion);
• Scores between 0 (blue) and 2 (red);
• A score of '1' if the proportion in the field equals the overall proportion (green).
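One possible reading of the source-normalized (citing-side) scoring described above is sketched below in Python. It assumes that every citation to a publication X is weighted by the inverse of the number of active references in the citing paper. Following the slide, a reference counts as active if it points to an item covered by WoS and published between the year of X and the year of the citing paper. The inverse-count weighting is an assumption that resembles fractional citation counting. The CWTS formula may differ, for example by dividing by the average number of active references of the citing papers instead. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Reference:
    year: int
    in_wos: bool  # whether the referenced item is covered by Web of Science


@dataclass
class CitingPaper:
    year: int
    references: list[Reference] = field(default_factory=list)


def active_reference_count(citing, cited_year):
    """Count references covered by WoS and published between cited_year and the citing year."""
    return sum(
        1
        for r in citing.references
        if r.in_wos and cited_year <= r.year <= citing.year
    )


def source_normalized_score(cited_year, citing_papers):
    """Sum of citations to X, each weighted by 1 / (active references of the citing paper)."""
    score = 0.0
    for c in citing_papers:
        n_active = active_reference_count(c, cited_year)
        # The citing paper's own reference to X is normally active, so n_active >= 1.
        if n_active > 0:
            score += 1.0 / n_active
    return score
```

For a paper X from 2008 cited by a 2010 paper with two active references and by a 2011 paper with ten, the score is 0.5 + 0.1 = 0.6. A citation from a paper with a long list of recent, WoS-covered references counts for less, which is how this kind of normalization absorbs field differences without using a journal classification.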
Positioning Lomonosov MSU
Positioning Lomonosov MSU
Positioning Russian Academy of Sciences (RAS)
Alternative view Lomonosov (density)

Using the map: benchmarks
• Benchmarking on the basis of research profile
  – Distribution of output over 784 fields;
• Profile of each university in the Leiden Ranking;
  – Distribution of output over 784 fields;
• Compare to the MSU profile;
• Identify the most similar.

Universities in the Leiden Ranking (LR) most similar to MSU
• FR - University of Paris-Sud 11
• RU - Saint Petersburg State University
• JP - Nagoya University
• FR - Joseph Fourier University
• CN - Peking University
• JP - University of Tokyo

Density view MSU
Density view St. Petersburg State University

VOSviewer (Visualization of Similarities) http://www.vosviewer.com
• Open source application;
• Software to create maps;
• Input: publication data;
• Output: similarities among publication elements:
  – Co-authors
  – Co-occurring terms
  – Co-cited articles
  – …

More information on CWTS and methods
• www.cwts.nl
• www.journalindicators.com
• www.vosviewer.com
• noyons@cwts.leidenuniv.nl

THANK YOU

Basic model in which we operate (research evaluation)
• Research in context

Example: 49 research communities of a FI university
[Figure: scatter plot of MNCS (new) against MNCS (traditional), both axes 0.00-5.00, with regions marked 'Positive' effect and 'Negative' effect; markers distinguish communities with high Int-cov and large P from those with low Int-cov and small P.]

RC with a 'positive' effect
[Figure: two panels of output per field, split into MNCS high / average / low; horizontal axes 0-140.]
• Most prominent field
• Impact increases
• ASTRONOMY & ASTROPHYSICS (0.8 -> 1.3)
• METEOROLOGY & ATMOSPHERIC SCIENCES (0.8 -> 1.2)
• GEOSCIENCES, MULTIDISCIPLINARY (0.8 -> 1.1)
• GEOCHEMISTRY & GEOPHYSICS (0.8 -> 1.3)
• PHYSICS, NUCLEAR (1.9 -> 1.5)
• PHYSICS, MULTIDISCIPLINARY (7.1 -> 7.7)
• PHYSICS, PARTICLES & FIELDS (3.2 -> 4.6)
• MULTIDISCIPLINARY SCIENCES (0.5 -> 0.6)

RC with a 'negative' effect
[Figure: two panels of output per field, split into MNCS high / average / low; horizontal axes 0-35.]
• Most prominent field: impact same
• Less prominent field: impact decreases
• ENDOCRINOLOGY & METABOLISM (1.0 -> 1.1)
• NUTRITION & DIETETICS (0.6 -> 0.5)
• PEDIATRICS (1.3 -> 0.8)
• IMMUNOLOGY (2.0 -> 1.3)
• RHEUMATOLOGY (1.0 -> 1.1)
• PUBLIC, ENVIRONMENTAL & OCCUPATIONAL HEALTH (0.9 -> 0.9)
• MEDICINE, GENERAL & INTERNAL (3.8 -> 3.5)
• ALLERGY (3.4 -> 1.8)

Wrap up: normalization
• Normalization based on journal classification has its flaws;
• We have recently developed an alternative;
• Test sets in recent projects show small (but relevant) differences;
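The benchmarking procedure from the 'Using the map: benchmarks' slide compares each Leiden Ranking university's distribution of output over the 784 fields with the MSU profile and keeps the most similar universities. A rough Python sketch follows. The slides do not say which similarity measure is used, so cosine similarity of the field-share vectors is an assumption here. The function names and the input format (a dict from field label to publication count) are also hypothetical.

```python
import math


def profile(field_counts):
    """Turn raw publication counts per field into shares that sum to 1.

    Assumes the university has at least one publication.
    """
    total = sum(field_counts.values())
    return {f: n / total for f, n in field_counts.items()}


def cosine(p, q):
    """Cosine similarity between two sparse field profiles (dicts field -> share)."""
    dot = sum(share * q.get(f, 0.0) for f, share in p.items())
    norm_p = math.sqrt(sum(v * v for v in p.values()))
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    return dot / (norm_p * norm_q)


def most_similar(target, candidates, k=6):
    """Rank candidate universities by similarity of their field profile to the target's."""
    t = profile(target)
    ranked = sorted(
        ((name, cosine(t, profile(counts))) for name, counts in candidates.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    return ranked[:k]
```

Working with shares rather than raw counts makes the comparison independent of university size. A large university and a small one with the same mix of fields come out as identical (similarity 1.0), which matches the idea of benchmarking on research profile rather than volume.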