Why Parallel

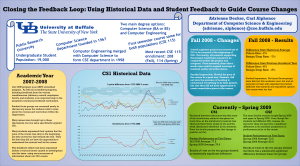

- Number of transistors per chip continues to follow Moore’s Law; but the transistors are going into multiple cores, bigger cache, bridge technology, etc.
- But in 2003, clock speed hit a wall
- Where are the 32GHz processors?

- Parallel & Distributed Computing (PD) is now its own Knowledge Area (KA).
- Architecture and Organization (AR) is 16 Tier 2 core hours
- PD is 5 Tier 1 hours, and 10 Tier 2 hours.
- PD is seen as critical to many other KA’s, and can properly be placed there; Strawman seems to indicate a standalone course is fastest way to get up and running now.

- http://www.cs.gsu.edu/~tcpp/curriculum/
- Using a Bloom’s taxonomy of Know-Comprehend-Apply this group proposes some 90 hours of instruction in PD
- Only about 15 hours at the “Apply” level
- They see 5 core courses involved, and a larger list of upper division electives
  › CS1, CS2, Architecture, DataStruc/Algor, Discrete

- ACM SIGCSE 2009 (Chattanooga)
- Dr. Michael Wrinn (Intel Senior Course Architect):
  › Intel is not going to be making any single core processors. The world has already gone parallel and you better start teaching parallel computing to your students.
- The Free Lunch is over

- Professional societies have served notice that our curricula must include PD.
- The world in which our students will live their careers will be a parallel world. It is already a multicore world.
- It must begin as early as CS1/CS2
- Intel microgrant program to develop PD curricular material

- ACM SIGCSE “Nifty Programming Assignments”
  › Nick Parlante at nifty.stanford.edu
- If we want to target CS1/CS2, why not begin with those assignments the SIGCSE community has already endorsed as nifty?
- My thanks to the 2011 Nifty Authors for their gracious permission to use their work
  › DO NOT MAKE SOURCE CODE AVAILABLE

- Take students’ serial solution to a problem and add parallelism by “grabbing the low-hanging fruit”.
- We use C++ in the MS Visual Studio.NET IDE
- The Intel Parallel Studio, especially the Parallel Advisor, is a natural choice.
  › Parallel Studio is also available for Unix as a stand-alone product, both as command line & GUI

- Trapezoidal Numerical Integration
  › Not a “nifty”, but is embarrassingly parallel
- BMP puzzles: who done it?
  › David Malan, Harvard University
- Book Recommendations: look out Netflix
  › Michele Craig, University of Toronto
- Hamming Codes: old school error correction
  › Stuart Hansen, University of Wisconsin-Parkside
- Evil Hangman: cheating is a strategy!
  › Keith Schwarz, Stanford University

- A classic Numerical Methods technique
- Improves upon Riemann sums by adding area of trapezoids rather than simple rectangles.

$$\int_a^b f(x)\,dx \approx \frac{b-a}{2N}\left[ f(x_1) + 2f(x_2) + 2f(x_3) + \dots + 2f(x_N) + f(x_{N+1}) \right]$$

- N = number of subintervals
- Obviously a big for-loop to add the f(x)’s

- Mr. Body has met foul play. But he left a “Clue” to his murderer’s identity by coding the green & blue pixel values in a harmless-looking BMP image. The red pixels merely obscure the message
- So process the 2D array of pixels in the BMP, suppressing red and enhancing green/blue to find the killer.
- 2D arrays & parallel for loops are a natural fit

- Given a list of books, have a set of readers give their rating of each book [-5 to +5].
- Then take a new reader and, comparing her ratings to your total population, make the appropriate book recommendations to her.
- Computing dot products (actually just about any array processing) in an O(n²) environment is a parallel dream

- Prof Hansen uses this assignment to teach:
  › Error correcting codes
  › Matrix multiplication
  › Binary representations of data
  › Binary I/O (optional)
- Use a generator matrix and a parity check matrix to manipulate Hamming(7,4)
- Matrix multiplication (even tiny matrices) is bull's-eye parallel fodder.

- Find a big list of words. Challenge a human to solve Hangman.
- The wrinkle is that the computer never actually picks a word till it is forced to do so.
- For every human guess, find the largest subset of words possible
- For example: HEAR_ (can be HEARD, HEART, HEARS)
- Essentially impossible to beat in 10 guesses.
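
The "largest subset" step of Evil Hangman can be sketched in C++ as below. This is a simplified illustration, not the assignment's reference solution: the helper names `patternFor` and `largestFamily` are hypothetical, and the pattern only tracks the current guess rather than merging with letters revealed earlier. Each word's pattern is computed independently of the others, which is what makes that loop a candidate for parallelism.

```cpp
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Pattern a word would show for a single guess: the guessed letter stays
// visible at its positions, every other position becomes '_'.
// (Hypothetical helper; simplified to one guess at a time.)
std::string patternFor(const std::string& word, char guess) {
    std::string pattern(word.size(), '_');
    for (std::size_t i = 0; i < word.size(); ++i) {
        if (word[i] == guess) pattern[i] = guess;
    }
    return pattern;
}

// Partition the remaining candidate words by pattern and return the
// largest family, so the computer commits to as little as possible.
// Ties go to the pattern that sorts first in the map.
std::vector<std::string> largestFamily(const std::vector<std::string>& candidates,
                                       char guess) {
    std::map<std::string, std::vector<std::string>> families;
    for (const std::string& word : candidates) {
        families[patternFor(word, guess)].push_back(word);
    }
    std::vector<std::string> best;
    for (const auto& entry : families) {
        if (entry.second.size() > best.size()) best = entry.second;
    }
    return best;
}
```

Calling `largestFamily` once per human guess, and feeding its result back in as the next candidate list, keeps the computer's options open for as long as possible.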