Presenting, Analyzing, and Using Data for Improvement of Student Learning

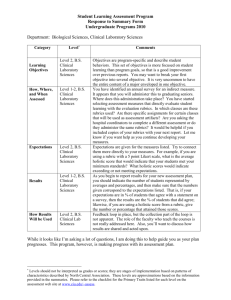

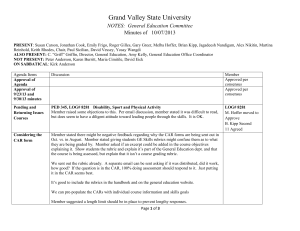

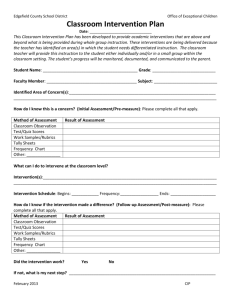

Essential Practices for Effective Rubric Implementation: Tips on Training and Using Data for Improvement

Ashley Finley, Ph.D., Senior Dir. of Assessment & Research, AAC&U; National Evaluator, Bringing Theory to Practice
Linda Siefert, Ed.D., Director of Assessment, University of North Carolina-Wilmington
General Education & Assessment Conference, Portland, OR, February 27, 2014

Capturing What Matters: VALUE Rubrics Initiative

Rubric Development
16 rubrics
Created primarily by teams of faculty
Inter-disciplinary, inter-institutional
Three rounds of testing and revision on campuses with samples of student work
Intended to be modified at the campus level

Utility
Assessment of students’ demonstrated performance and capacity for improvement
Faculty-owned and institutionally shared
Used for students’ self-assessment of learning
Increases transparency about what matters to institutions for student learning

VALUE Rubrics (www.aacu.org/value)
Knowledge of Human Cultures & the Physical & Natural Worlds: Content Areas (no rubrics)
Intellectual and Practical Skills: Inquiry & Analysis; Critical Thinking; Creative Thinking; Written Communication; Oral Communication; Reading; Quantitative Literacy; Information Literacy; Teamwork; Problem Solving
Personal & Social Responsibility: Civic Knowledge & Engagement; Intercultural Knowledge & Competence; Ethical Reasoning; Foundations & Skills for Lifelong Learning; Global Learning
Integrative & Applied Learning: Integrative & Applied Learning

The Anatomy of a VALUE Rubric
Criteria
Levels
Performance Descriptors

The Role of Calibration
Essential training through collaboration and discussion
Transparency of standards (without standardization)
Building shared, inter-disciplinary knowledge around skills assessment and application of the rubric
Shared stake in data collection and discussion for improvement
Building intentionality in assignment development

The Calibration Training Process
Scoring Steps:
Review the rubric to familiarize yourself with its structure, language, and performance levels.
Ask questions about the rubric for clarification or to get input from others regarding interpretation.
Read the student work sample.
Connect specific points of evidence in the work sample with each criterion at the appropriate performance level (if applicable).
Calibration Steps:
Review scores.
Discuss scoring rationale.
Take the opportunity to change scores.

The Ground Rules
We are not changing the rubric (today).
This is not grading.
Think globally about student work and about the learning skill.
Think beyond specific disciplinary lenses or content.
Start with 4 and work backwards.
Connect evidence in the work sample with language in the performance cell.
Pick one performance benchmark per criterion. Do not use “.5”.
Zero and NA do exist but are distinct. Assign “0” if the work does not meet the benchmark (cell one) performance level. Assign “not applicable” if the student work is not intended to meet a particular criterion.

How Have Campuses Used Rubrics to Improve Learning?
Using the VALUE Rubrics for Improvement of Learning and Authentic Assessment
12 Case Studies
Frequently asked questions
http://www.aacu.org/value/casestudies/

When presenting assessment results…

Consider the Type of Raw Data
Rubrics describe categories. These categories are generally ordered (4 reflects higher-quality performance than 3), but they are likely not equally spaced on a number line (1 is not the same distance from 2 as 2 is from 3, and 4 is not twice as “good” as 2). Therefore, summary presentations and statistical procedures should be appropriate for ordinal data: for example, the percentage of work products at each level, the percentage at or above a benchmark, or the median level, rather than the mean.

Consider the Readers
Disciplines have commonly used styles of presenting data, and individuals have personal learning styles. Consider presenting the data in multiple forms that will resonate with different styles:
Charts
Graphs
Verbally, qualitatively (rubrics provide quality descriptions for each level)

Chart
The percent of students meeting the adopted benchmark for lower-division courses.
Dimension                                  % of Work Products Scored Two or Higher
CT1 Explanation of Issues                  83.4%
CT2 Evidence                               77.0%
CT3 Influence of Context and Assumptions   58.6%
CT4 Student’s Position                     70.1%
CT5 Conclusions and Related Outcomes       56.7%

Example Combination Chart and Graph
Percent of work products at each score level:
Dimension  4       3       2       1       0
CT1        10.3%   41.6%   31.5%   14.9%   1.7%
CT2        9.1%    37.2%   30.7%   21.1%   1.9%
CT3        4.6%    27.5%   26.5%   32.1%   9.3%
CT4        7.5%    33.9%   28.7%   26.2%   3.7%
CT5        3.1%    26.3%   27.3%   33.2%   10.1%

Example Verbal Description
CT4 Student’s Position
Less than one in twenty work products contained no statement of student position (scores of 0). One fourth of the work products provided a simplistic or obvious position (scores of 1). Three in ten work products provided a specific position that acknowledged different sides of an issue (scores of 2). One third of the work products took into account the complexities of the issue and acknowledged the points of view of others (scores of 3). And less than one in ten work products provided an imaginative position that took into account the complexities of the issue, synthesized others’ viewpoints into the position, and acknowledged the limits of the position taken (scores of 4).

Scenario
You will work in table groups to analyze and then discuss recent assessment findings and how they might be used to improve student learning.

Share Out
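As a starting point for the table-group analysis, the ordinal-appropriate summaries recommended under “Consider the Type of Raw Data” can be computed directly from the per-level score distributions for CT1 through CT5. The following is a minimal Python sketch, not part of the original presentation: the names LEVELS, DISTRIBUTIONS, percent_at_or_above, and median_level are hypothetical, the benchmark of 2 comes from the “Scored Two or Higher” table, and the median is assumed to be the lowest level at which the cumulative percentage, counted upward from 0, reaches 50%.

```python
# Minimal sketch (hypothetical names): ordinal-appropriate summaries of
# rubric scores, using the per-level percentages for CT1-CT5.

# Score levels in the order the chart lists them (highest first).
LEVELS = (4, 3, 2, 1, 0)

# Percent of work products scored at levels 4, 3, 2, 1, 0 for each dimension.
DISTRIBUTIONS = {
    "CT1": (10.3, 41.6, 31.5, 14.9, 1.7),
    "CT2": (9.1, 37.2, 30.7, 21.1, 1.9),
    "CT3": (4.6, 27.5, 26.5, 32.1, 9.3),
    "CT4": (7.5, 33.9, 28.7, 26.2, 3.7),
    "CT5": (3.1, 26.3, 27.3, 33.2, 10.1),
}


def percent_at_or_above(dist, benchmark=2):
    """Total percent of work products scored at or above the benchmark level."""
    total = sum(pct for level, pct in zip(LEVELS, dist) if level >= benchmark)
    return round(total, 1)  # round away floating-point noise from the sum


def median_level(dist):
    """Lowest level at which the cumulative percent, counted upward from 0, reaches 50%."""
    cumulative = 0.0
    # sorted() orders the (level, pct) pairs by level, ascending: 0, 1, 2, 3, 4.
    for level, pct in sorted(zip(LEVELS, dist)):
        cumulative += pct
        if cumulative >= 50.0:
            return level
    raise ValueError("percentages must sum to at least 50")


if __name__ == "__main__":
    for name, dist in DISTRIBUTIONS.items():
        print(f"{name}: {percent_at_or_above(dist):.1f}% scored 2 or higher, "
              f"median level {median_level(dist)}")
```

Run as a script, it prints one line per dimension. The percent-at-or-above values reproduce the 83.4%, 77.0%, 58.6%, 70.1%, and 56.7% figures in the benchmark table, and the median level is 3 for CT1 and 2 for CT2 through CT5. Both summaries depend only on the ordering of the levels, so neither assumes the levels are equally spaced.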