PowerPoint slides


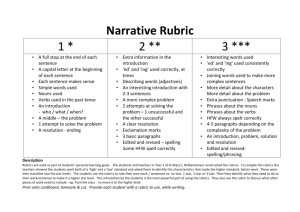

Frequently asked questions about development, interpretation and use of rubrics on campuses

Terry Rhodes
  ◦ Vice President, Office of Quality, Curriculum and Assessment – AAC&U
Ashley Finley
  ◦ Senior Director of Assessment and Research – AAC&U
  ◦ finley@aacu.org

• Created with funding from FIPSE & State Farm
• In response to Spellings Commission report on need for greater accountability in higher educ.
• AAC&U guided development of 16 rubric teams
  ◦ Consisting of faculty (primarily), student affairs, institutional researchers, national scholars
  ◦ Inter-disciplinary, inter-institutional
• Each rubric underwent 3 rounds of testing on campuses with samples of student work, feedback went back to teams for revision
• Intended to be modified

What Types of Institutions are Accessing the Rubrics?

Representation also includes:
• All US states and territories
• Higher education consortia
• International institutions
• K-12 schools and systems

Also: The Voluntary System of Accountability (VSA) approved use of rubrics for campus reporting in 2011

• Assessment of students’ demonstrated performance & capacity for improvement
• Faculty-owned & institutionally shared
• Used for students’ self-assessment of learning
• Increase transparency of what matters to institutions for student learning

Knowledge of Human Cultures & the Physical & Natural Worlds
  ◦ Content Areas: No Rubrics

Intellectual and Practical Skills
  ◦ Inquiry & Analysis
  ◦ Critical Thinking
  ◦ Creative Thinking
  ◦ Written Communication
  ◦ Oral Communication
  ◦ Reading
  ◦ Quantitative Literacy
  ◦ Information Literacy
  ◦ Teamwork
  ◦ Problem-solving

Personal & Social Responsibility
  ◦ Civic Knowledge & Engagement
  ◦ Intercultural Knowledge & Competence
  ◦ Ethical Reasoning
  ◦ Foundations & Skills for Lifelong Learning
  ◦ Global Learning

Integrative & Applied Learning
  ◦ Integrative & Applied Learning

The Anatomy of a VALUE Rubric

• Criteria
• Levels
• Performance Descriptors

• Do the performance levels correspond to year in school? (no)
• What about to letter grades? (no)
• Can an assignment be scored by someone whose area of expertise is not the same as the content covered in the assignment? (yes)
  ◦ “I’m a philosophy professor so how can I score a paper from a biology class?”
• Can the rubrics be used by two year institutions? (yes)
• Can the rubrics be used for graduate programs? (yes)
• Can VALUE rubrics be used for course-level assessment? (yes, with modification or adaptation to include content areas)

Most people consider baking a skill. If a novice baker is given a recipe and a picture, can he/she recreate the dish? Chances are what is created will look more like this…but over time will get better with practice.

• Calibration (norming) sessions
• Assignment design workshops
• Rubric modification workshops, specifically for adaptation of rubrics for program-level or course-level assessment
• Data-centered events framed around interpretation of institutional data, celebration of areas of success and opportunity to gather recommendations for improvement

From: UNC-Wilmington, Critical Thinking Rubric

| Dimension | % of students who scored 2 or higher | % of students who scored 3 or higher |
|---|---|---|
| Explanation of Issues | 68.3 | 35.5 |
| Interpreting & Analysis | 65.0 | 28.2 |
| Influence of Context and Assumptions | 48.8 | 21.2 |
| Student’s position | 54.5 | 24.0 |
| Conclusions and related outcomes | 47.7 | 17.0 |

[Chart: Critical Thinking: Issues, Analysis, and Conclusions (Percent of Ratings)]

Inter-rater reliability = >.8

[Chart: Critical Thinking: Evaluation of Sources and Evidence (Percent of Ratings)]

“VALUE added” for 4 years - writing

[Bar chart: Lower Credit vs. Higher Credit scores for Critical Literacy, Rsrch & Info Lit, Oral Comm, and Quant Lit]

• Crit. Lit. (CT, Rdg, Writing): 1,072 samples = gain of 0.88 bet. lower & higher credit students.
• Research & Info. Literacy: 318 samples = gain of 1.49. The interdisc. scoring team found that programmatic definitions & practices around citation of researched info. varied widely, difficult to consistently score for plagiarism.
• Oral Comm: 875 samples = gain of 0.14. 39% of samples not related to rubric. Samples had wide range of quality & other tech. limitations.
• Quant. Reas.: 322 samples = gain of 0.97. Scoring team found 30% of samples were not related to rubric…

Using the VALUE Rubrics for Improvement of Learning and Authentic Assessment

• 12 Case Studies
• Frequently asked questions

http://www.aacu.org/value/casestudies/

Additional MSC Webinars

• Value Rubrics: June 17, 3-4pm; June 18, 4-5pm (eastern)
• Coding and Uploading Artifacts Using Taskstream: Date and Time TBD

Webinars will be recorded and posted to: http://www.sheeo.org/msc

Webinars already posted:
• Welcome to the MSC
• Pilot Study Overview
• Assignment Design Webinar
• Sampling for the Pilot Study
• IRB & Student Consent

Questions?