Jonas PowerPoint slides - CCSSO State Consortium on Educator


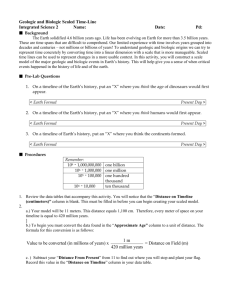

Implementing Virginia's Growth Measure: A Practical Perspective
Deborah L. Jonas, Ph.D.
Executive Director, Research and Strategic Planning
Virginia Department of Education
May 2012

Potential Uses for Student Growth Percentiles
• School improvement (including accountability) and program evaluation
• One component of comprehensive performance evaluation
  ▫ Consistent with the Code of Virginia requirement to incorporate measures of student academic progress in evaluations (§ 22.1-295).
  ▫ Growth percentiles may form the basis of one of multiple measures of student progress, when available and appropriate.
• Planning for differentiated teacher professional development
• Communications with students and parents

Current Policy and Program Initiatives
• Implementing the 2011 Virginia Board of Education Guidelines for Uniform Performance Standards and Evaluation Criteria for Teachers statewide, which:
  ▫ include a comprehensive model for teacher performance evaluation;
  ▫ recommend that evaluations include multiple measures of student academic progress; and
  ▫ recommend that one measure of student academic progress be the state-provided student growth percentiles, when available and appropriate.
• Incorporating growth measures into the Elementary and Secondary Education Act (ESEA) waiver application.
• Providing student growth percentile training for administrators.

Overview of Our Work
• Began working with nationally recognized experts in calculating student growth percentiles in spring 2010.
• Discovered that a standard development/modeling approach would not work in Virginia.
• Developed policy solutions, some of which may be informed by growth data; many can be informed by additional research.
Today's Topics
• Varied test progressions in statistical models
• Imperfect model fit: ceiling effects
• Changing tests in 2011-2012
• Other considerations

Test Progressions
• Not all students progress through Virginia's tests along the same pathway, e.g.:
  ▫ Grade 6, Grade 7, Algebra I
  ▫ Grade 6, Grade 7, Grade 8, Algebra I
• This affects statistical model building and the "peer" or comparison group.
• States using or moving to end-of-course tests may need to consider how to model students' distinct progressions through courses and tests; these progressions do have an impact.

Model Fit: Impact of Floor and Ceiling Effects
• Floor and ceiling effects compromise the validity of some statistical estimates.*
• Important issues:
  ▫ Impact on individual student data.
  ▫ Impact on grouped data (teachers, grade levels, schools, districts).
• Policy should be developed to account for these issues.
*See Koedel, C., & Betts, J. (2009). Value-added to what? How a ceiling in the testing instrument influences value-added estimation. http://economics.missouri.edu/working-papers/2008/WP0807_koedel.pdf

Concrete Example of Ceiling Effect Impact
Scaled score range: 200 to 600

Student     Grade 3 Scaled Score   Grade 4 Scaled Score   Grade 5 Scaled Score   Grade 5 SGP
Student A   600                    600                    600                    67
Student B   554                    600                    600                    80
Student C   522                    600                    600                    88

Student A's SGP of 67 is the maximum possible growth score for students with a perfect score for three consecutive years. Growth is still considered high (in Virginia), but how high is not clear.
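The test-progression point can be made concrete with a small sketch. It assumes that students are compared only with peers who share the exact same sequence of prior tests. The student IDs are hypothetical, and the function `group_by_progression` is an illustration rather than Virginia's modeling code. The two pathways are the ones listed on the slide. The sketch shows that a single end-of-course test (Algebra I) splits into two separate comparison groups, each smaller than the full Algebra I population.

```python
from collections import defaultdict

# Hypothetical student records: (student_id, tests taken in order).
# The two pathways come from the slide; the IDs are invented for illustration.
students = [
    ("s1", ("Grade 6", "Grade 7", "Algebra I")),
    ("s2", ("Grade 6", "Grade 7", "Grade 8", "Algebra I")),
    ("s3", ("Grade 6", "Grade 7", "Algebra I")),
    ("s4", ("Grade 6", "Grade 7", "Grade 8", "Algebra I")),
    ("s5", ("Grade 6", "Grade 7", "Grade 8", "Algebra I")),
]


def group_by_progression(records):
    """Return {(prior tests, current test): [student_ids]}.

    Assumption for this sketch: peers must match on every prior test,
    so each distinct progression becomes its own comparison group.
    """
    groups = defaultdict(list)
    for student_id, tests in records:
        *priors, current = tests  # last test is the one growth is measured on
        groups[(tuple(priors), current)].append(student_id)
    return dict(groups)


groups = group_by_progression(students)
for (priors, current), ids in groups.items():
    print(current, "after", " -> ".join(priors), ":", ids)
```

Under this assumption, every additional pathway a state allows produces another, smaller peer group. That is one reason the slide says progressions "do have an impact" on model building.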
Concrete Example of Ceiling Effect Impact: A More Extreme Case

Student     Grade 3 Scaled Score   Grade 4 Scaled Score   Grade 5 Scaled Score   Grade 5 SGP
Student A   600                    600                    600                    24
Student B   568                    600                    600                    50
Student C   522                    600                    600                    59

Student A's SGP of 24 is the maximum possible growth score for students with a perfect score for three consecutive years, and it may not be a valid measure of student growth. This occurs because the majority of students who earn perfect scores for two consecutive years also earn a perfect score in the third year.
NOTE: The impact of significant floor effects would be similar.

What If You Have Floor or Ceiling Effects in Your Tests?
• Statistical adjustments may be possible, depending on the magnitude of the ceiling effects (Koedel & Betts, 2009).
  ▫ Statistical adjustments that may be acceptable for research purposes are not always acceptable for accountability purposes.
  ▫ More statistical work is needed in this area.
• Policy for growth data
  ▫ Virginia established a cut-off that determines which students will and will not receive growth data.
  ▫ This resulted in the state providing no growth data for a substantial percentage of students.
• Policies related to growth data cut-offs will be re-evaluated when new tests are in place.

Changing Tests and Growth Data
• Virginia has not adopted the Common Core State Standards.
  ▫ New mathematics assessments were implemented this year (2011-2012).
  ▫ New English assessments will be implemented in 2012-2013.
• New tests complicate growth model development, training, interpretation, and the timing of various use cases.
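The explanation for the capped SGP (most students with two consecutive perfect scores score perfectly again) can be illustrated with a simplified percentile calculation. This is a hypothetical sketch, not Virginia's method. Operational SGPs are typically estimated with quantile regression on prior scores. Here, `simple_growth_percentile` counts the percent of academic peers whose current score is strictly below the student's. The peer groups are invented. The share of peers reaching 600 in each group (33% and 76%) is an assumed value, picked only so the outputs line up with the 67 and 24 on the slides.

```python
CEILING = 600  # maximum scaled score on the 200-600 scale shown on the slides


def simple_growth_percentile(current, peer_currents):
    """Percent of academic peers whose current score is strictly below `current`.

    Simplified stand-in for an SGP (assumption for illustration only).
    """
    below = sum(1 for score in peer_currents if score < current)
    return round(100 * below / len(peer_currents))


# Hypothetical peer groups: 100 students, each with perfect grade 3 and grade 4 scores.
# Moderate case: 33 of them score 600 again in grade 5.
moderate = [CEILING] * 33 + [570] * 67
# Extreme case: 76 of them score 600 again in grade 5.
extreme = [CEILING] * 76 + [580] * 14 + [560] * 10

# A student at the ceiling cannot score higher, so only peers below 600
# count toward the percentile; this value is the most any peer in the group can earn.
print(simple_growth_percentile(CEILING, moderate))  # 67
print(simple_growth_percentile(CEILING, extreme))   # 24
```

In this sketch, the more often perfect-score students stay at the ceiling, the lower the best attainable value becomes, regardless of how much any one of them learned. This mirrors the validity concern raised on the slide.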
Considerations When Tests Change
• Growth-to-standard calculations
  ▫ It is not clear how many years of data are needed to measure growth-to-standard reliably.
  ▫ The amount of data needed depends at least in part on the magnitude of changes in student outcomes each year.
• Use of growth data in school and educator accountability
  ▫ Virginia has not included growth-to-standard data in proposed school or educator accountability because the tests are changing.
  ▫ Statewide training focuses on data interpretation and the use of multiple measures to support teachers' work to ensure students can meet new and higher expectations.
  ▫ The state is regularly analyzing data and considering policy and programmatic implications.
• Public information becomes an even greater challenge after tests change.
Stay tuned... there's more to learn!

A Few Other Considerations
• Lack of growth data for students assessed with alternative tests, and the impact on use in evaluation and accountability.
• Impact of floor/ceiling effects on other data derived from state assessments (e.g., Lexile® and Quantile® data).
• Public information requests for data, and Freedom of Information Act/privacy laws and regulations.
• Interpreting growth data when more than one year separates the prior and most recent tests.
Lexile® and Quantile® are trademarks of MetaMetrics, Inc., and are registered in the United States and abroad. Copyright © 2012 MetaMetrics, Inc.

Contact Information
Deborah L. Jonas, Ph.D.
Executive Director for Research and Strategic Planning
Virginia Department of Education
Deborah.Jonas@doe.virginia.gov