Text Complexity


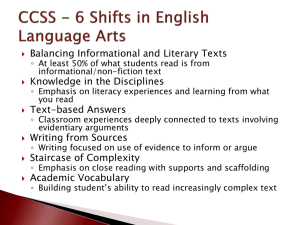

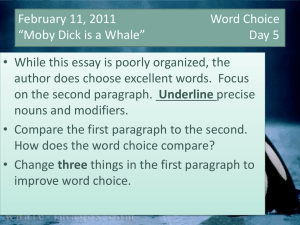

Instruction in Reading
USING DATA AND RESEARCH INTO PRACTICE FOR GROWTH AND INSTRUCTION

What is Reading?

“Reading is an active and complex process that involves:
• Understanding written text;
• Developing and interpreting meaning; and
• Using meaning as appropriate to type of text, purpose, and situation” (NAEP Framework, 2009)

Reading is the single most important educational skill students will learn. As students move up in grade levels, text demand increases significantly.

Two important goals for improvement:
1. Increase the percentage of students reading “at grade level” each year at each grade level from kindergarten through tenth grade.
2. Decrease the percentage of students with serious reading difficulties each year at each grade level.

Our most important measure of success in accomplishing these goals is assessing student performance in reading comprehension using an initial screening, a mid-year assessment, and an outcome measure at the end of each grade level.

Raising Achievement and Closing Gaps Between Students

Text complexity is the key to accelerating student achievement in reading.

Text Complexity - ACT Study

Purpose: Determine what distinguished the reading performance of students likely to succeed in college from that of students who are not.

Process:
• Set a benchmark score on the reading test shown to be predictive of success in college (“21” on ACT composite score).
• Looked at results from a half million students.
• Divided texts into three levels of complexity: uncomplicated, more challenging, and complex.

[Figure: Performance on the ACT Reading Test by Comprehension Level (Averaged across Seven Forms)]

[Figure: Performance on the ACT Reading Test by Textual Element (Averaged across Seven Forms)]

Text Complexity Matters

Performance on complex texts is the clearest differentiator in reading between students who are more likely to be ready for college and those who are less likely to be ready.

Texts used in the ACT Reading Test reflect three degrees of complexity: uncomplicated, more challenging, and complex.

[Figure: Performance on the ACT Reading Test by Degree of Text Complexity (Averaged across Seven Forms)]

In this figure, performance on questions associated with uncomplicated and more challenging texts both above and below the ACT College Readiness Benchmark for Reading follows a pattern similar to those in the previous analyses. Improvement on each of the two kinds of questions is gradual and fairly uniform.

Recap of ACT Findings

• Question type and level (main idea, word meanings, details) is NOT the chief differentiator between students scoring above and below the benchmark.
• The degree of text complexity in the passages acted as the “sorter” within ACT.
• The findings held true for both males and females and for all racial groups, and were steady regardless of family income level.
• What students could read, in terms of its complexity, rather than what they could do with what they read, is the greatest predictor of success.
• The FCAT has complex passages and highly cognitively demanding questions.

STUDENTS WHO ARRIVE BEHIND IN READING OR CLOSE TO GRADE LEVEL ARE OFTEN TAUGHT THROUGH COURSES THAT DON’T DEMAND MUCH READING.

Many students are engaged in shallow reading: skimming text for answers, focusing only on details, and failing to make inferences in order to integrate different parts of the text. Years of reading in this superficial way will cause a student’s reading ability to deteriorate. For many students, the decline of text demands in the courses that they take has both an immediate and a long-term impact on student achievement.
The Percent Of Students Who Have Previously Scored A Level 3 Or Higher On FCAT Reading

2011 FCAT Results

| Grade | Of students scoring Level 1 on FCAT Reading, the percent who have previously scored Level 3 or higher in Reading | Of students scoring Level 2 on FCAT Reading, the percent who have previously scored Level 3 or higher in Reading |
|---|---|---|
| 4 | 21 | 53 |
| 5 | 29 | 67 |
| 6 | 36 | 76 |
| 7 | 31 | 72 |
| 8 | 43 | 85 |
| 9 | 46 | 87 |
| 10 | 58 | 90 |

What is FAIR?

• A K-2 assessment system administered to individual students 3 times a year, with electronic scoring, Adobe AIR version, and PMRN reports linked to instructional resources.
• A 3-12 computer-based system where students take the assessments 3 times a year. Several tasks are adaptive. PMRN reports are available, linked to instructional resources. Printed toolkit available.

© 2011 Florida Department of Education

The K-2 “Big Picture” Map

Broad Screen/Progress Monitoring Tool (BS/PMT): “All” students
• Letter Naming & Sounds
• Phonemic Awareness
• Word Reading

Broad Diagnostic Inventory (BDI): “All” students; “Some” students for vocabulary
• Listening Comprehension
• Reading Comprehension
• Vocabulary
• Spelling (2nd grade only)

Targeted Diagnostic Inventory (TDI): “Some” students; some tasks
• K = 9 tasks
• 1st = 8 tasks
• 2nd = 6 tasks

Ongoing Progress Monitoring (OPM): “Some” students
• K – 2 = TDI tasks
• 1 – 2 = ORF

K-2 Targeted Diagnostic Inventory (TDI) Map

Kindergarten
• Print Awareness
• Letter Name and Sound Knowledge
• Phoneme Blending
• Phoneme Deletion Word Parts/Initial
• Letter Sound Connection Initial
• Letter Sound Connection Final
• Word Building: Initial Consonants
• Word Building: Final Consonants
• Word Building: Medial Vowels

First Grade
• Letter Sound Knowledge
• Phoneme Blending
• Phoneme Deletion Initial
• Phoneme Deletion Final
• Word Building: Consonants
• Word Building: Vowels
• Word Building: CVC/CVCe
• Word Building: Blends

Second Grade
• Phoneme Deletion Initial
• Phoneme Deletion Final
• Word Building: Consonants
• Word Building: CVC/CVCe
• Word Building: Blends & Vowels
• Multisyllabic Word Reading

The K – 2 “Score” Map

BS/PMT
• PRS = Probability of Reading Success

BDI
• LC = Listening Comprehension: total questions correct (implicit/explicit)
• RC = Reading Comprehension: total questions correct (implicit/explicit), Fluency, Percent Accuracy, Target Passage
• VOC = Vocabulary: Percentile Rank
• SPL = Spelling: Percentile Rank

TDI
• ME = Meets Expectations
• BE = Below Expectations

OPM
• ORF = Adjusted Fluency
• OPM TDI Tasks = ME or BE and Raw Score

Target RC Passages for Grades 1 and 2 (BDI)

Florida Center for Reading Research

Grade 1 PRS Chart (2010-2011)

| Score | PRS at AP1 | PRS at AP2 | PRS at AP3 |
|---|---|---|---|
| 0 | 0.11 | 0.01 | 0.01 |
| 1 | 0.14 | 0.02 | 0.02 |
| 2 | 0.20 | 0.03 | 0.03 |
| 3 | 0.27 | 0.05 | 0.06 |
| 4 | 0.36 | 0.09 | 0.12 |
| 5 | 0.46 | 0.17 | 0.20 |
| 6 | 0.56 | 0.28 | 0.33 |
| 7 | 0.66 | 0.44 | 0.49 |
| 8 | 0.75 | 0.61 | 0.65 |
| 9 | 0.82 | 0.76 | 0.79 |
| 10 | 0.86 | 0.86 | 0.88 |

Student Score Detail Box (K-2)

Excellent report to include in a student’s cumulative folder.

©2011 Florida Center for Reading Research

Just Read, Florida! along with staff from the Florida Center for Reading Research reviewed FAIR data for 2nd graders from school year 2009-10 who had a Probability of Reading Success (PRS) of .85+. We followed this cohort into grade 3 to see how they performed on FCAT Reading last school year, 2010-11.

Results
• 74% of students with a .85 PRS at the end of 2nd grade in SY 09-10 scored FCAT Reading Level 3 or above in 3rd grade SY 10-11.
• 15% of students with a .85 PRS at the end of 2nd grade in SY 09-10 scored FCAT Reading Level 1 in 3rd grade SY 10-11.
• 11% of students who had a .85 PRS at the end of 2nd grade in SY 09-10 scored FCAT Reading Level 2 in 3rd grade SY 10-11.

As we move into FCAT 2.0, the best way to reduce failures on Grade 3 FCAT is to target instruction earlier, in grades K-2. Waiting to address reading difficulties in grade 3 is too late.
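For readers who keep FAIR data in a spreadsheet or script, the Grade 1 PRS chart can be treated as a simple lookup table. The sketch below is only an illustration: the PRS values are copied from the 2010-2011 chart, but the function names are hypothetical, the source does not say what the 0-10 score row measures, and using .85 as a cutoff simply mirrors the cohort criterion in the 2nd grade follow-up study rather than any official rule.

```python
# Grade 1 PRS values copied from the 2010-2011 chart.
# List index = the 0-10 score shown on the chart. The source does not
# name that score, so calling it "score" is an assumption.
GRADE1_PRS = {
    "AP1": [0.11, 0.14, 0.20, 0.27, 0.36, 0.46, 0.56, 0.66, 0.75, 0.82, 0.86],
    "AP2": [0.01, 0.02, 0.03, 0.05, 0.09, 0.17, 0.28, 0.44, 0.61, 0.76, 0.86],
    "AP3": [0.01, 0.02, 0.03, 0.06, 0.12, 0.20, 0.33, 0.49, 0.65, 0.79, 0.88],
}

# Assumed cutoff, taken from the ".85+" cohort described in the study.
PRS_TARGET = 0.85


def grade1_prs(assessment_period: str, score: int) -> float:
    """Return the charted PRS for a Grade 1 score in a given assessment period."""
    if assessment_period not in GRADE1_PRS:
        raise ValueError(f"unknown assessment period: {assessment_period}")
    if not 0 <= score <= 10:
        raise ValueError("score must be between 0 and 10")
    return GRADE1_PRS[assessment_period][score]


def meets_prs_target(assessment_period: str, score: int) -> bool:
    """True when the charted PRS is at or above the assumed .85 cutoff."""
    return grade1_prs(assessment_period, score) >= PRS_TARGET


if __name__ == "__main__":
    for ap in ("AP1", "AP2", "AP3"):
        print(ap, grade1_prs(ap, 9), meets_prs_target(ap, 9))
```

Note how the same score maps to a different PRS in each assessment period, which is why the chart has to be read by period rather than by score alone.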
Grades 3-12 Assessments Model

• Broad Screen/Progress Monitoring Tool: Reading Comprehension Task (3 Times a Year)
• If necessary, Targeted Diagnostic Inventory: Maze & Word Analysis Tasks
• Diagnostic Toolkit (As Needed)
• Ongoing Progress Monitoring (As Needed)

Purpose of Each 3-12 Assessment

RC Screen
Helps us identify students who may not be able to meet the grade level literacy standards at the end of the year, as assessed by the FCAT, without additional targeted literacy instruction.

Mazes
Helps us determine whether a student has more fundamental problems in the area of text reading efficiency and low-level reading comprehension. Relevant for students below a 6th grade reading level.

Word Analysis
Helps us learn more about a student's fundamental literacy skills, particularly those required to decode unfamiliar words and to read and write accurately.

How is the student placed into the first passage/item?

Task Placement Rules

Reading Comprehension (adaptive)
• The first passage the student receives is determined by:
  • Grade level

Maze (not adaptive)
• Two predetermined passages based on grade level and assessment period (AP).

Word Analysis (WA) (adaptive)
• AP 1-3 starts with a predetermined set of 5 words based on grade level. Student performance on this first set of 5 words determines the next words the student receives.
• 5-30 words are given at each assessment period, based on ability.

How is the student placed into subsequent passages?

Based on the difficulty of the questions the student answers correctly on the first passage, the student will then be given a harder or easier passage for their next passage. Difficulty of an item is determined using Item Response Theory (IRT). Because of this feature, a raw score of 7/9 for Student A and 7/9 for Student B on the same passage does not mean they will have the same converted score.
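To make the last point concrete, here is a minimal sketch of how an IRT-style score can differ for two students with the same raw score. It is not the FAIR scoring algorithm, which the slides do not describe. It assumes a two-parameter logistic (2PL) model and invented item parameters, so every number in `ITEMS` is hypothetical. Under that assumption the ability estimate depends on which items were answered correctly, not only on how many.

```python
import math

# Hypothetical (discrimination, difficulty) pairs for the 9 questions on one
# passage, ordered from easiest to hardest. These values are invented for
# illustration and are not FAIR item parameters.
ITEMS = [
    (0.6, -2.0), (0.7, -1.5), (0.8, -1.0),
    (0.9, -0.5), (1.0, 0.0), (1.2, 0.5),
    (1.4, 1.0), (1.6, 1.5), (1.8, 2.0),
]


def p_correct(theta: float, a: float, b: float) -> float:
    """2PL probability that a reader of ability theta answers an item correctly."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def log_likelihood(theta: float, responses: list[int]) -> float:
    """Log-likelihood of a 0/1 response pattern at ability theta."""
    total = 0.0
    for (a, b), correct in zip(ITEMS, responses):
        p = p_correct(theta, a, b)
        total += math.log(p) if correct else math.log(1.0 - p)
    return total


def estimate_ability(responses: list[int]) -> float:
    """Maximum-likelihood ability estimate via a simple grid search on [-4, 4]."""
    grid = [step / 100.0 for step in range(-400, 401)]
    return max(grid, key=lambda theta: log_likelihood(theta, responses))


# Both students answer 7 of 9 questions correctly.
student_a = [1, 1, 1, 1, 1, 1, 1, 0, 0]  # misses the two hardest items
student_b = [0, 0, 1, 1, 1, 1, 1, 1, 1]  # misses the two easiest items

ability_a = estimate_ability(student_a)
ability_b = estimate_ability(student_b)

if __name__ == "__main__":
    print("raw scores:", sum(student_a), sum(student_b))
    print("ability estimates:", ability_a, ability_b)
```

With these invented parameters, Student B's estimate comes out higher than Student A's even though both raw scores are 7/9. In a simpler one-parameter (Rasch) model, the raw score alone would determine the estimate for students who saw the same items, so the effect shown here depends on the 2PL assumption.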
The 3-12 “Big Picture” Map

| Type of Assessment | Name of Assessment |
|---|---|
| Broad Screen/Progress Monitoring Tool (BS/PMT): appropriate for “All” students | Reading Comprehension (RC) |
| Targeted Diagnostic Inventory (TDI): “Some” students | Maze; Word Analysis (WA) |
| Ongoing Progress Monitoring (OPM): “Some” students | Maze; ORF; RC |
| Informal Diagnostic Toolkit (Toolkit): “Some” students | Phonics Inventory; Academic Word Inventory; Lexiled Passages; Scaffolded Discussion Templates |

The 3-12 “Score” Map

Reading Comprehension (BS/PMT)
• FCAT Success Probability (FSP), color-coded
• Percentile
• Standard Score
• Lexile®
• Ability Score and Ability Range
• FCAT Reporting Categories

Maze (TDI)
• Percentile
• Standard Score
• Adjusted Maze Score

Word Analysis (TDI)
• Percentile
• Standard Score
• Ability Score (WAAS)

OPM
• RC: Ability Score, Ability Range, Reporting Categories
• Maze: Adjusted Maze Score
• ORF (3rd – 5th): Adjusted Fluency Score

Lexile® Measure

Two types of Lexile measures:
• Lexile reader measure: Represents a person’s reading ability on the Lexile scale (this is what you will see on your reports). It has nothing to do with the Lexile of the passage.
• Lexile text measure: Indicates the reading demand of the text in terms of word frequency and sentence length.
Range of uncapped Lexile measures on FAIR: 225-2105

Range around the Lexile measure: 100L below to 50L above (e.g., for 600L, 500L – 650L)

Student Score Detail Box - 3-12

Table 1: Correlations between the FCAT and both RC Screen and FSP

| Grade | Fall RC Screen | Fall FSP | Winter RC Screen | Winter FSP | Spring RC Screen | Spring FSP |
|---|---|---|---|---|---|---|
| 3 | .64 | .62 | .75 | .73 | .78 | .76 |
| 4 | .66 | .75 | .73 | .76 | .76 | .77 |
| 5 | .69 | .78 | .75 | .78 | .76 | .79 |
| 6 | .70 | .75 | .72 | .75 | .74 | .75 |
| 7 | .71 | .75 | .72 | .75 | .73 | .75 |
| 8 | .70 | .75 | .71 | .75 | .72 | .75 |
| 9 | .69 | .73 | .69 | .73 | .70 | .73 |
| 10 | .67 | .74 | .67 | .74 | .67 | .74 |

Table 2: Screening Accuracy of the FAIR predicting FCAT success

| Grade | Fall, FSP = 0.85 | Fall, FSP = 0.70 | Winter, FSP = 0.85 | Winter, FSP = 0.70 | Spring, FSP = 0.85 | Spring, FSP = 0.70 | % < Level 3 FCAT |
|---|---|---|---|---|---|---|---|
| 3 | .99 | .99 | .99 | .99 | .99 | .99 | 28 |
| 4 | .95 | .97 | .98 | .97 | .98 | .96 | 31 |
| 5 | .98 | .95 | .98 | .94 | .98 | .94 | 33 |
| 6 | .98 | .95 | .98 | .96 | .98 | .95 | 40 |
| 7 | .97 | .92 | .97 | .92 | .97 | .92 | 38 |
| 8 | .92 | .82 | .92 | .82 | .91 | .81 | 51 |
| 9 | .95 | .88 | .95 | .87 | .95 | .87 | 59 |
| 10 | .90 | .80 | .91 | .81 | .91 | .80 | 69 |

The Common Core State Standards
Text Complexity

The Common Core State Standards place a strong emphasis on the role of text complexity in evaluating student readiness for college and careers.

“The Common Core State Standards hinge on students encountering appropriately complex texts at each grade level in order to develop the mature language skills and the conceptual knowledge they need for success in school and life.” (p. 3)

Advantages to Common Core Standards

• A focus on college and career readiness
• Inclusion of the four strands of English Language Arts:
  • Reading
  • Writing
  • Listening and speaking
  • Language
• The benefits of an integrated literacy approach: all educators have a shared responsibility for literacy instruction, regardless of discipline or content area.
• A focus on results rather than means: “the Standards leave room for teachers, curriculum developers, and states to determine how those goals should be reached and what additional topics should be addressed.” (p. 4)
• Efficiencies of scale: common standards allow for greater collaboration among states in the areas of:
  • Professional development
  • Resource development
  • Teaching tools

Text Complexity

Included within the Standards is an enhanced focus on text complexity. Specifically, within reading standard #10:

Anchor Standard: R.CCR.10 Read and comprehend complex literary and informational texts independently and proficiently.

Example Grade-level Standard (6th grade): RI.6.10 By the end of the year, read and comprehend literary nonfiction in the grades 6-8 text complexity band proficiently, with scaffolding as needed at the high end of the range.

Guiding Questions

• What do the Common Core Learning Standards mean by text complexity?
• What is a text complexity band?
• How do we ensure the texts our students are reading are in the appropriate text complexity band?

Overview of Text Complexity

Text complexity is defined by:
• Qualitative measures: levels of meaning, structure, language conventionality and clarity, and knowledge demands, often best measured by an attentive human reader.
• Quantitative measures: readability and other scores of text complexity, often best measured by computer software.
• Reader and Task considerations: background knowledge of the reader, motivation, interests, and complexity generated by tasks assigned, often best made by educators employing their professional judgment.

Common Core State Standards Quantitative Measures Ranges for Text Complexity Grade Bands

| Text Complexity Grade Bands | Suggested Lexile Range | Suggested ATOS Book Level Range** |
|---|---|---|
| K-1 | | |
| 2-3 | 450L – 790L | 2.0 – 4.0 |
| 4-5 | 770L – 980L | 3.0 – 5.7 |
| 6-8 | 955L – 1155L | 4.0 – 8.0 |
| 9-10 | 1080L – 1305L | 4.6 – 10.0 |
| 11-CCR | 1215L – 1355L | 4.8 – 12.0 |

Where do we find texts in the appropriate text complexity band?

We could…
• Choose an excerpt of text from Appendix B as a starting place,
or…
• Use available resources to determine the text complexity of other materials on our own.

Determining Text Complexity

A Four-step Process:
1. Determine the quantitative measures of the text.
2. Analyze the qualitative measures of the text.
3. Reflect upon the reader and task considerations.
4. Recommend placement in the appropriate text complexity band.

Step 1: Quantitative Measures

Measures such as:
• Word length
• Word frequency
• Word difficulty
• Sentence length
• Text length
• Text cohesion

Step 1: Quantitative Measures

The Quantitative Measures Ranges for Text Complexity: This document outlines the suggested ranges for each of the text complexity bands using:
1. Lexile Text Measures
---or---
2. ATOS Book Levels (Accelerated Reader)

Step 1: Quantitative Measures

Let’s imagine we want to see where a text falls on the quantitative measures “leg” of the text complexity triangle, using either the Lexile text measures or the ATOS book level (or both). For illustrative purposes, let’s choose the text Narrative of the Life of Frederick Douglass.

Step 1: Quantitative Measures

Lexile Text Measure: 1080L
ATOS Book Level: 7.9

In which of the text complexity bands would this text fall?

Common Core Learning Standards Quantitative Measures Ranges for Text Complexity Grade Bands

| Text Complexity Grade Bands | Suggested Lexile Range | Suggested ATOS Book Level Range** |
|---|---|---|
| K-1 | 100L – 500L* | 1.0 – 2.5 |
| 2-3 | 450L – 790L | 2.0 – 4.0 |
| 4-5 | 770L – 980L | 3.0 – 5.7 |
| 6-8 | 955L – 1155L | 4.0 – 8.0 |
| 9-10 | 1080L – 1305L | 4.6 – 10.0 |
| 11-CCR | 1215L – 1355L | 4.8 – 12.0 |

* The K-1 suggested Lexile range was not identified by the Common Core State Standards and was added by Kansas.
** Taken from Accelerated Reader and the Common Core State Standards, available at the following URL: http://doc.renlearn.com/KMNet/R004572117GKC46B.pdf

Step 1: Quantitative Measures

Remember, however, that the quantitative measures are only the first of three “legs” of the text complexity triangle. Our final recommendation may be validated, influenced, or even over-ruled by our examination of qualitative measures and the reader and task considerations.

Step 1: Quantitative Measures

Additional Resources
• Lexile Measures and the Common Core State Standards: http://www.lexile.com/using-lexile/lexile-measures-and-the-ccssi/
• Accelerated Reader and the Common Core State Standards: http://doc.renlearn.com/KMNet/R004572117GKC46B.pdf
• Coh-Metrix: http://cohmetrix.memphis.edu/cohmetrixpr/index.html
  Coh-Metrix calculates the coherence of texts on a wide range of measures. It replaces common readability formulas by applying the latest in computational linguistics and linking this to the latest research in psycholinguistics.
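Returning to the Step 1 question about which bands a text falls in, the band table can be checked mechanically. The sketch below copies the suggested ranges from the grade band table (including the K-1 Lexile range added by Kansas). The function names are hypothetical, and treating both endpoints of each range as inclusive is an assumption, since the slides do not say how a boundary value such as 1080L should be handled.

```python
# (band, Lexile low, Lexile high, ATOS low, ATOS high), copied from the
# Quantitative Measures Ranges table. The K-1 Lexile range was added by
# Kansas and is not part of the Common Core State Standards.
BANDS = [
    ("K-1", 100, 500, 1.0, 2.5),
    ("2-3", 450, 790, 2.0, 4.0),
    ("4-5", 770, 980, 3.0, 5.7),
    ("6-8", 955, 1155, 4.0, 8.0),
    ("9-10", 1080, 1305, 4.6, 10.0),
    ("11-CCR", 1215, 1355, 4.8, 12.0),
]


def bands_for_lexile(lexile: int) -> list[str]:
    """Bands whose suggested Lexile range contains the text measure.

    Endpoints are treated as inclusive, which is an assumption.
    """
    return [band for band, lo, hi, _, _ in BANDS if lo <= lexile <= hi]


def bands_for_atos(level: float) -> list[str]:
    """Bands whose suggested ATOS book level range contains the level."""
    return [band for band, _, _, lo, hi in BANDS if lo <= level <= hi]


def bands_for_both(lexile: int, atos: float) -> list[str]:
    """Bands that contain the text on both quantitative measures."""
    atos_bands = set(bands_for_atos(atos))
    return [band for band in bands_for_lexile(lexile) if band in atos_bands]


if __name__ == "__main__":
    # Narrative of the Life of Frederick Douglass: 1080L, ATOS 7.9
    print("Lexile:", bands_for_lexile(1080))
    print("ATOS:", bands_for_atos(7.9))
    print("Both:", bands_for_both(1080, 7.9))
```

Because the suggested ranges overlap, a single text can sit in more than one band on a given measure. The lookup therefore narrows the candidates rather than settling the placement, which is left to the qualitative and reader-and-task steps.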
Step 2: Qualitative Measures

Measures such as:
• Structure
• Language Demands and Conventions
• Knowledge Demands
• Levels of Meaning/Purpose

Common Core Standards Qualitative Features of Text Complexity

Structure (could be story structure and/or form of piece)

| Less complex | More complex |
|---|---|
| Simple | Complex |
| Explicit | Implicit |
| Conventional | Unconventional |
| Events related in chronological order | Events related out of chronological order (chiefly literary texts) |
| Traits of a common genre or subgenre | Traits specific to a particular discipline (chiefly informational texts) |
| Simple graphics | Sophisticated graphics |
| Graphics unnecessary or merely supplemental to understanding the text | Graphics essential to understanding the text and may provide information not elsewhere provided |

Language Demands: Conventionality and Clarity

| Less complex | More complex |
|---|---|
| Literal | Figurative or ironic |
| Clear | Ambiguous or purposefully misleading |
| Contemporary, familiar | Archaic or otherwise unfamiliar |
| Conversational | General academic and domain-specific |
| Light vocabulary load: few unfamiliar or academic words | Many words unfamiliar and high academic vocabulary present |
| Sentence structure straightforward | Complex and varied sentence structures |

• Though vocabulary can be measured by quantifiable means, it is still a feature for careful consideration when selecting texts.
• Though sentence length is measured by quantifiable means, sentence complexity is still a feature for careful consideration when selecting texts.

Knowledge Demands: Life Experience (literary texts)

| Less complex | More complex |
|---|---|
| Simple theme | Complex or sophisticated themes |
| Single theme | Multiple themes |
| Common everyday experiences or clearly fantastical situations | Experiences distinctly different from one’s own |
| Single perspective | Multiple perspectives |
| Perspective(s) like one’s own | Perspective(s) unlike or in opposition to one’s own |

Knowledge Demands: Cultural/Literary Knowledge (chiefly literary texts)

| Less complex | More complex |
|---|---|
| Everyday knowledge and familiarity with genre conventions required | Cultural and literary knowledge useful |
| Low intertextuality (few if any references/allusions to other texts) | High intertextuality (many references/allusions to other texts) |

Levels of Meaning (chiefly literary texts) or Purpose (chiefly informational texts)

| Less complex | More complex |
|---|---|
| Single level of meaning | Multiple levels of meaning |
| Explicitly stated purpose | Implicit purpose, may be hidden or obscure |

Step 2: Qualitative Measures

The Qualitative Measures Rubrics for Literary and Informational Text: The rubric for literary text and the rubric for informational text allow educators to evaluate the important elements of text that are often missed by computer software, which tends to focus on more easily measured factors.

Step 2: Qualitative Measures

Because the factors for literary texts are different from those for informational texts, these two rubrics contain different content. However, the formatting of each document is exactly the same. And because these factors represent continua rather than discrete stages or levels, numeric values are not associated with these rubrics. Instead, six points along each continuum are identified: not suited to the band, early-mid grade level, mid-end grade level, early-mid grade level, mid-end grade level, not suited to band.

Step 2: Qualitative Measures

How is the rubric used? And how would Narrative of the Life of Frederick Douglass fare when analyzed through the lens of the Text Rubric?

Step 3: Reader and Task

Considerations such as:
• Motivation
• Knowledge and experience
• Purpose for reading
• Complexity of task assigned regarding text
• Complexity of questions asked regarding text

Step 3: Reader and Task
Ten Guiding Principles

1. Make close reading and rereading of texts central to lessons.
2. Provide scaffolding that does not preempt or replace text.
3. Ask text-dependent questions from a range of question types.
4. Emphasize students supporting answers based upon evidence from the text.
5. Provide extensive research and writing opportunities (claims and evidence).
6. Offer regular opportunities for students to share ideas, evidence, and research.
7. Offer systematic instruction in vocabulary.
8. Ensure wide reading from complex text that varies in length.
9. Provide explicit instruction in grammar and conventions.
10. Cultivate students’ independence.

Text Complexity: Key to Student Reading Success

Text complexity matters because…
“making textbooks easier ultimately denies students the very language, information, and modes of thought they need most to move up and on.”
-Marilyn Jager Adams

Text Requirements in Middle and High School

Many students are engaged in shallow reading: skimming text for answers, focusing only on details, and failing to make inferences in order to integrate different parts of the text. Years of reading in this superficial way will cause a student’s reading ability to deteriorate. For many students, the decline of text demands in the courses that they take has both an immediate and a long-term impact on student achievement.

What Are We Doing To Accelerate Success?

Just Read, Florida! New Professional Development

The Comprehension Instructional Sequence
• An instructional model based upon research evidence, introduced this year to Florida’s teachers.
• The model assists teachers of students in grades 6-12 in implementing whole-class examination of difficult texts and building students’ specialized knowledge.
• This sequence helps students grasp textual nuances they would not understand on their own.
• It is a “text-dependent” approach that ensures the close examination of key text details and uses complex text.
Teaching Students to Think as They Read
New: Next Generation Content Area Reading Professional Development

• Facilitates the type of instruction needed to yield high outcomes in literacy for all students.
• Uses close reading, text-based questions, text-based discussions, and writing in response to reading to focus students on reading text closely to draw evidence from the text.
• Emphasizes reading deeply in multiple disciplines.
• Comprehension strategies are taught in an integrated fashion with instructional coherence and direct application.
• Fosters respect for the discipline and content while providing the necessary scaffolds for students to extract the meaning with deep understanding of the content being taught.

Additional Resources

• Appendix A - Qualitative Rubric for Text Complexity
• Appendix B - Common Core State Standards Text Exemplars