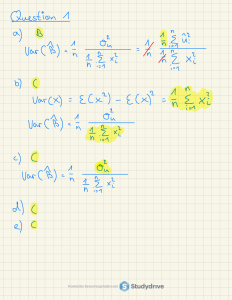

| | Discrete: pmf $p(\cdot)$ | Continuous: pdf $f(\cdot)$ |
|---|---|---|
| Support Set | Countable set of values | Uncountable set of values |
| Probabilities | $p(x) = P(X = x)$ | $f(x) \neq P(X = x) = 0$ for all $x$ |
| | | $P(a \leq X \leq b) = \int_a^b f(x)\,dx = F(b) - F(a)$ |
| Joint | $p_{XY}(x, y) = P(X = x, Y = y)$ | $P(X \in [a, b], Y \in [c, d]) = \int_c^d \int_a^b f_{XY}(x, y)\,dx\,dy$ |
| Marginal | $p_X(x) = \sum_y p_{XY}(x, y)$ | $f_X(x) = \int_{-\infty}^{\infty} f_{XY}(x, y)\,dy$ |
| Conditional | $p_{X \mid Y}(x \mid y) = p_{XY}(x, y)/p_Y(y)$ | $f_{X \mid Y}(x \mid y) = f_{XY}(x, y)/f_Y(y)$ |
| Independence | $p_{XY}(x, y) = p_X(x)\,p_Y(y)$ | $f_{XY}(x, y) = f_X(x)\,f_Y(y)$ |
| Expected Value | $\mu_X = E[X] = \sum_x x\,p(x)$ | $\mu_X = E[X] = \int_{-\infty}^{\infty} x f(x)\,dx$ |
| | $E[g(X)] = \sum_x g(x)\,p(x)$ | $E[g(X)] = \int_{-\infty}^{\infty} g(x) f(x)\,dx$ |
| | $E[g(X, Y)] = \sum_x \sum_y g(x, y)\,p(x, y)$ | $E[g(X, Y)] = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} g(x, y) f_{XY}(x, y)\,dx\,dy$ |

Table 1: Differences between Discrete and Continuous Random Variables

| Probability Mass Function $p(x)$ (Discrete Random Variables) | Probability Density Function $f(x)$ (Continuous Random Variables) |
|---|---|
| $p(x) = P(X = x)$ | $f(x) \neq P(X = x) = 0$ |
| $p(x) \geq 0$ | $f(x) \geq 0$ |
| $p(x) \leq 1$ | $f(x)$ can be greater than one! |
| $\sum_x p(x) = 1$ | $\int_{-\infty}^{\infty} f(x)\,dx = 1$ |
| $F(x_0) = \sum_{x \leq x_0} p(x)$ | $F(x_0) = \int_{-\infty}^{x_0} f(t)\,dt$ |

Table 2: Probability mass function (pmf) versus probability density function (pdf).

| Property | Statement |
|---|---|
| Definition of R.V. | $X : S \to \mathbb{R}$ (RV is a fixed function from sample space to reals) |
| Support Set | Collection of all possible realizations of a RV |
| CDF | $F(x_0) = P(X \leq x_0)$ |
| Expectation of a Function | In general, $E[g(X)] \neq g(E[X])$ |
| Linearity of Expectation | $E[a + X] = a + E[X]$, $E[bX] = bE[X]$, $E[X_1 + \cdots + X_k] = E[X_1] + \cdots + E[X_k]$ |
| Variance | $\sigma_X^2 \equiv \mathrm{Var}(X) \equiv E\left[(X - E[X])^2\right] = E[X^2] - (E[X])^2 = E[X(X - \mu_X)]$ |
| Standard Deviation | $\sigma_X = \sqrt{\sigma_X^2}$ |
| Var. of Linear Combination | $\mathrm{Var}(a + X) = \mathrm{Var}(X)$, $\mathrm{Var}(bX) = b^2\,\mathrm{Var}(X)$ |
| | $\mathrm{Var}(aX + bY + c) = a^2\,\mathrm{Var}(X) + b^2\,\mathrm{Var}(Y) + 2ab\,\mathrm{Cov}(X, Y)$ |
| | $X_1, \ldots, X_k$ are uncorrelated $\Rightarrow \mathrm{Var}(X_1 + \cdots + X_k) = \mathrm{Var}(X_1) + \cdots + \mathrm{Var}(X_k)$ |
| Covariance | $\sigma_{XY} \equiv \mathrm{Cov}(X, Y) \equiv E[(X - E[X])(Y - E[Y])] = E[XY] - E[X]E[Y] = E[X(Y - \mu_Y)] = E[(X - \mu_X)Y]$ |
| Correlation | $\rho_{XY} = \mathrm{Corr}(X, Y) = \sigma_{XY}/(\sigma_X \sigma_Y)$ |
| Covariance and Independence | $X, Y$ independent $\Rightarrow \mathrm{Cov}(X, Y) = 0$, but $\mathrm{Cov}(X, Y) = 0 \not\Rightarrow X, Y$ independent |
| Functions and Independence | $X, Y$ independent $\Rightarrow g(X), h(Y)$ independent |
| Bilinearity of Covariance | $\mathrm{Cov}(a + X, Y) = \mathrm{Cov}(X, a + Y) = \mathrm{Cov}(X, Y)$, $\mathrm{Cov}(bX, Y) = \mathrm{Cov}(X, bY) = b\,\mathrm{Cov}(X, Y)$ |
| | $\mathrm{Cov}(X, Y + Z) = \mathrm{Cov}(X, Y) + \mathrm{Cov}(X, Z)$ and $\mathrm{Cov}(X + Z, Y) = \mathrm{Cov}(X, Y) + \mathrm{Cov}(Z, Y)$ |
| Linearity of Conditional Expectation | $E[a + Y \mid X] = a + E[Y \mid X]$, $E[bY \mid X] = bE[Y \mid X]$, $E[X_1 + \cdots + X_k \mid Z] = E[X_1 \mid Z] + \cdots + E[X_k \mid Z]$ |
| Taking Out What is Known | $E[g(X)Y \mid X] = g(X)\,E[Y \mid X]$ |
| Law of Iterated Expectations | $E[Y] = E\left[E(Y \mid X)\right]$ |
| Conditional Variance | $\mathrm{Var}(Y \mid X) \equiv E\left[(Y - E[Y \mid X])^2 \mid X\right] = E[Y^2 \mid X] - (E[Y \mid X])^2$ |
| Law of Total Variance | $\mathrm{Var}(Y) = E[\mathrm{Var}(Y \mid X)] + \mathrm{Var}(E[Y \mid X])$ |

Table 3: Essential facts that hold for all random variables, continuous or discrete: $X, Y, Z$ and $X_1, \ldots, X_k$ are random variables; $a, b, c, d$ are constants; $\mu, \sigma, \rho$ are parameters; and $g(\cdot), h(\cdot)$ are functions.

| | Sample Statistic | Population Parameter | Population Parameter |
|---|---|---|---|
| Setup | Sample of size $n < N$ from a population | Population viewed as list of $N$ objects | Population viewed as a RV $X$ |
| Mean | $\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i$ | $\mu_X = \frac{1}{N} \sum_{i=1}^{N} x_i$ | Discrete: $\mu_X = \sum_x x\,p(x)$; Continuous: $\mu_X = \int_{-\infty}^{\infty} x f(x)\,dx$ |
| Variance | $s_X^2 = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})^2$ | $\sigma_X^2 = \frac{1}{N} \sum_{i=1}^{N} (x_i - \mu_X)^2$ | $\sigma_X^2 = E\left[(X - E[X])^2\right]$ |
| Std. Dev. | $s_X = \sqrt{s_X^2}$ | $\sigma_X = \sqrt{\sigma_X^2}$ | $\sigma_X = \sqrt{\sigma_X^2}$ |
| Covariance | $s_{XY} = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})$ | $\sigma_{XY} = \frac{1}{N} \sum_{i=1}^{N} (x_i - \mu_X)(y_i - \mu_Y)$ | $\sigma_{XY} = E[(X - \mu_X)(Y - \mu_Y)]$ |
| Correlation | $r_{XY} = s_{XY}/(s_X s_Y)$ | $\rho_{XY} = \sigma_{XY}/(\sigma_X \sigma_Y)$ | $\rho_{XY} = \sigma_{XY}/(\sigma_X \sigma_Y)$ |
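Several of the identities above can be checked by hand on a small example. The following is a minimal sketch, not part of the original sheet: the joint pmf `joint`, the data list `data`, and the helper names `expect` and `cond_expect_y` are all hypothetical and chosen only for illustration. It uses exact fractions to confirm the shortcut formula for covariance, the law of iterated expectations, and the law of total variance, then contrasts the $1/N$ population variance with the $1/(n-1)$ sample variance.

```python
from fractions import Fraction as F
from statistics import pvariance, variance

# Hypothetical joint pmf p_XY(x, y); the numbers are illustrative only.
joint = {
    (0, 0): F(1, 8), (0, 1): F(1, 4), (0, 2): F(1, 8),
    (1, 0): F(1, 4), (1, 1): F(1, 8), (1, 2): F(1, 8),
}
assert sum(joint.values()) == 1  # a pmf must sum to one

def expect(g):
    """E[g(X, Y)] = sum over x and y of g(x, y) * p_XY(x, y)."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

# Marginal pmf of X: p_X(x) = sum over y of p_XY(x, y).
p_x = {}
for (x, _), p in joint.items():
    p_x[x] = p_x.get(x, 0) + p

def cond_expect_y(x, g=lambda y: y):
    """E[g(Y) | X = x], using p_{Y|X}(y|x) = p_XY(x, y) / p_X(x)."""
    return sum(g(y) * p / p_x[x] for (xx, y), p in joint.items() if xx == x)

mu_x = expect(lambda x, y: x)
mu_y = expect(lambda x, y: y)
var_y = expect(lambda x, y: y**2) - mu_y**2         # E[Y^2] - (E[Y])^2
cov_xy = expect(lambda x, y: x * y) - mu_x * mu_y   # E[XY] - E[X]E[Y]

# Law of iterated expectations: E[Y] = E[E(Y|X)].
lie = sum(cond_expect_y(x) * p for x, p in p_x.items())

# Law of total variance: Var(Y) = E[Var(Y|X)] + Var(E[Y|X]).
cond_var = {x: cond_expect_y(x, lambda y: y**2) - cond_expect_y(x)**2
            for x in p_x}
e_cond_var = sum(cond_var[x] * p for x, p in p_x.items())
var_cond_mean = sum((cond_expect_y(x) - mu_y)**2 * p for x, p in p_x.items())

print("E[Y] =", mu_y, "and E[E(Y|X)] =", lie)
print("Var(Y) =", var_y, "and E[Var(Y|X)] + Var(E[Y|X]) =",
      e_cond_var + var_cond_mean)
print("Cov(X, Y) =", cov_xy)

# Hypothetical data: dividing by N (population) versus n - 1 (sample).
data = [2, 4, 4, 4, 5, 5, 7, 9]
print("population variance:", pvariance(data))
print("sample variance:", variance(data))
```

Exact `Fraction` arithmetic is used so that both sides of each identity compare equal without floating-point tolerance. Treating the same list once as a whole population and once as a sample shows that the two variance formulas give different numbers for identical data.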