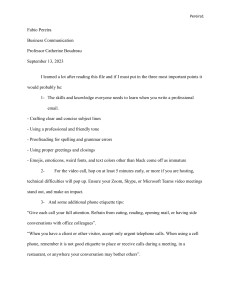

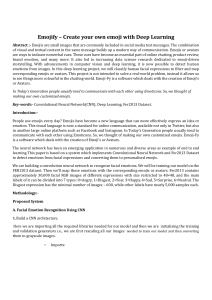

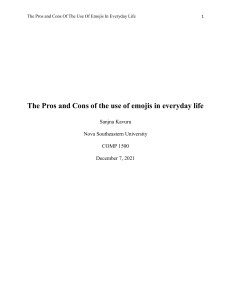

Computers in Human Behavior Reports 9 (2023) 100251

Contents lists available at ScienceDirect

Computers in Human Behavior Reports

journal homepage: www.sciencedirect.com/journal/computers-in-human-behavior-reports

Emojis that work! Incorporating visual cues from facial expressions in emojis can reduce ambiguous interpretations

Isabelle Boutet 1,*, Joëlle Guay, Justin Chamberland, Denis Cousineau, Charles Collin

School of Psychology, University of Ottawa, Ottawa, Ontario, Canada

ARTICLE INFO

Keywords: Emojis; Emotion communication; Facial action units; Social interactions; Digital technology

ABSTRACT

Emojis are included in more than half of all text-based messages shared on digital platforms. Evidence is emerging to suggest that many emojis are ambiguous, which can lead to miscommunication and put a strain on social relations. We hypothesized that emojis that incorporate visual cues that distinguish facial expressions of emotions, known as facial action units (AUs), might offer a solution to this problem. We compared interpretation of novel emojis that incorporate AUs with interpretations of existing emojis and face photographs. Stimuli conveyed either sadness, happiness, anger, surprise, fear, or neutrality (i.e., no emotion). Participants (N = 237) labelled the emotion(s) conveyed by these stimuli using open-ended questions. They also rated the extent to which each stimulus conveyed the target emotions using rating scales. Seven out of ten emojis with action units were interpreted more accurately or as accurately when compared to existing emojis and face photographs. A critical difference between these novel emojis and existing emojis is that the emojis with action units were never seen before by the participants - their meaning was entirely derived from the presence of AUs. We conclude that depicting visual cues from real-world facial expressions in emojis has the potential to facilitate emotion communication in text-based digital interactions.

1. Introduction

Text-based applications such as text messages (SMS), Snapchat, emails, Twitter, etc. are becoming ubiquitous platforms for social interactions (Hsieh & Tseng, 2017). However, text messaging is fraught with miscommunication, especially with regards to the emotional tone of text messages, which can produce conflicts and hinder relationships (Edwards et al., 2017; Kelly et al., 2012). Many users attribute texting miscommunication to the paucity of non-verbal cues in this medium of communication (Kelly et al., 2012; Kelly & Miller-Ott, 2018). In contrast, face-to-face (FtF) interactions involve the exchange of a rich array of non-verbal cues conveyed by the face, body, and voice, which provide contextual information that facilitates processing and understanding of verbal messages (Hall et al., 2019). Non-verbal cues also provide social information about others’ emotional state and personality traits (e.g., Hall et al., 2019).

Thousands of different emojis are now available across various platforms, with emojis that mimic facial expressions of emotion being most popular (worldemojiday.com). This likely reflects the dominant role that facial expressions of emotion play in non-verbal communication (Gawne & McCulloch, 2019; Jack & Schyns, 2017; Kralj Novak et al., 2015).

A growing body of literature suggests that facial emojis play similar communicative functions as facial expressions of emotion. For example, there is evidence that emojis can facilitate processing and understanding of the emotional tone and meaning of verbal messages (Boutet et al., 2021; Daniel & Camp, 2020; Gesselman et al., 2019; Herring & Dainas, 2020; Kaye et al., 2021; Kralj Novak et al., 2015; Rodrigues et al., 2017). Hence, emojis have the potential to reduce miscommunication in digital text-based interactions. However, the meaning of many emojis is unknown or ambiguous, which limits their utility as tools for emotion communication.
For example, only half of the iOS emojis studied in Jaeger et al. (2019) produced consistent interpretations in at least 50% of participants. Miller et al. (2016) measured interpretations of 125 emojis taken from five different platforms and observed that participants disagreed on whether a given emoji conveyed a positive, neutral, or negative emotion about 25% of the time. Disagreements increased when comparing emojis meant to convey the same message but whose appearance changed across platforms. Moreover, emoji interpretation can vary across users depending on culture, contexts, and psychosocial variables (Herring & Dainas, 2020; Jaeger & Ares, 2017; Jaeger et al., 2018; Jaeger et al., 2019; Langlois, 2019; Miller et al., 2016; Rawlings, 2018; Völker & Mannheim, 2021). This is problematic because ambiguous or inconsistent emoji interpretations can lead to miscommunication, elicit feelings of anxiety, and put a strain on social relationships (Bai et al., 2019; Kingsbury & Coplan, 2016).

Abbreviations: FtF, face-to-face; AUs, Action Units.

* Corresponding author. 136 Jean-Jacques Lussier, Ottawa, Ontario, K1N 6N5, Canada. E-mail address: iboutet@uottawa.ca (I. Boutet).

1 This work was supported by a Social Sciences and Humanities Research Council of Canada Insight Development Grant (430-2020-00188) to IB.

https://doi.org/10.1016/j.chbr.2022.100251

Received 21 April 2022; Received in revised form 24 November 2022; Accepted 25 November 2022; Available online 19 December 2022

2451-9588/© 2022 The Authors. Published by Elsevier Ltd. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/).

Several factors likely contribute to ambiguous and inconsistent emoji interpretations.
Most emojis appear to have been developed for their aesthetic quality (‘cuteness’) and, as a result, many emojis offer poor portrayals of real facial expression (Bai et al., 2019; Franco & Fugate, 2020). Even emojis that convey symbolic emotional signals can be ambiguous (Langlois, 2019). For example, the iOS emoji which conveys the symbolic ‘tear’ can be interpreted as either crying or sleepy (Jaeger et al., 2019). To further complicate matters, some emojis with the same intended meaning look slightly different across devices (e.g., iOS or Android) and social media platforms (e.g., Snapchat vs. Facebook). Finally, users do not typically consult lexicons produced by developers or those available online (e.g., emojipedia.com) to learn the intended meaning of emojis. Instead, emoji meaning is typically derived implicitly from common usage or cultural references (Fischer & Herbert, 2021; Herring & Dainas, 2020). This makes it difficult for less experienced users or users from another culture to interpret emojis and to confidently incorporate them in digital social interactions.

In FtF communication, facial expressions of emotion are recognized on the basis of ‘diagnostic’ features that allow observers to discriminate and recognize basic emotions such as happiness, surprise, anger, disgust, sadness, and fear. Different emotions can be classified according to groups of facial muscles that are activated when a given emotion is expressed, which are called action units (AUs; e.g., Ekman & Friesen, 1982; Ekman & Keltner, 1997; Gosselin et al., 1995). Humans can decode AUs quickly and effortlessly from a very young age, and brain networks specialized for decoding AUs have been identified (Ekman, 1992b; Ekman & Rosenberg, 2005; Matsumoto & Ekman, 1989; Matsumoto et al., 2000; Zebrowitz, 2017). Because AUs are relatively universal in their communication of emotion (Ekman, 1992a; Ekman, 1989; but see Ortony & Turner, 1990), we hypothesized that incorporating pictorial depictions of facial AUs in novel emojis might reduce ambiguous and inconsistent interpretations (see Fig. 1).

Fig. 1. Action units (AUs) associated with fear in human faces and in a novel fear emoji created for this study.

1.1. The present study

Our overarching goal was to test the hypothesis that incorporating facial AUs in emojis might improve consistency and accuracy in emotional communication. To test this hypothesis, we developed novel emojis that incorporate facial AUs associated with sadness, happiness, anger, surprise, fear, or neutral (i.e., no emotion). There is a paucity of empirical data on interpretations of existing emojis, and few studies have examined specific emotional interpretations beyond just positive/negative valence and arousal (Herring & Dainas, 2020; Jaeger & Ares, 2017; Jaeger et al., 2018; Jaeger et al., 2019; Langlois, 2019; Miller et al., 2016; Völker & Mannheim, 2021). We therefore measured interpretation of emojis using basic emotion labels as well as open-ended questions. In a qualitative block, participants were shown emojis with action units, existing emojis, and human faces and asked to label the mood(s) and/or feeling(s) conveyed by each stimulus using their own word(s). For each stimulus, we report the proportion of participants who endorsed the seven most commonly used labels, which reflects interpretation consistency across participants. A qualitative approach is important in emotion research because only asking participants to choose among pre-defined emotional categories may not adequately capture the experience produced by emotional signals (e.g., Russell, 1993; Scherer & Grandjean, 2008). In a quantitative block, participants were again shown emojis with action units, human faces and existing emojis, one after the other, and asked to indicate the degree to which different emotions are portrayed in each stimulus. This served to measure interpretation accuracy.

Many models of emotion communication stipulate that, in addition to emotional information, humans also infer cognitive information when decoding facial expressions of emotions (reviewed by Scherer & Moors, 2018). For example, upon observing a sad facial expression, one might infer that the person has lost someone close to them (Scherer & Grandjean, 2008). Cognitive interpretations are a critical component of emotion communication because understanding underlying cognitive states can facilitate accurate identification of ambiguous emotional expressions and guide interpersonal behaviours (Scherer & Moors, 2018). Research on interpretations of visual and auditory expressions of emotions supports the notion that cognitive interpretations play a fundamental role in emotion communication (reviewed by Scherer & Moors, 2018). Given that emojis may act as “proxies” for non-verbal signals of emotions (Walther & D’Addario, 2001), we also measured participants’ cognitive interpretations of emojis to examine whether some emojis yield more consistent cognitive interpretations than others.

The following hypotheses were tested:

H1: Consistency in interpretation for verbal labels will be superior or equivalent for emojis with action units compared to existing emojis and human faces.

H2: Accuracy with identifying the targeted emotion will be superior or equivalent for emojis with action units compared to existing emojis and human faces. The same will apply to cognitive interpretations.

H3: Confusion between the targeted emotion and other emotions will be inferior or equivalent for emojis with action units compared to existing emojis and human faces. The same will apply to cognitive interpretations.

H4: Ratings of intensity for the targeted emotion will be superior or equivalent for emojis with action units compared to existing emojis and human faces. The same will apply to cognitive interpretations.

2. Methods

2.1. Participants

A total of 301 undergraduate students were recruited from the University of Ottawa research participation pool. Participants received course credits for their participation. Data from 39 participants were excluded because they did not complete the study or had incomplete data. An additional three participants were excluded from analysis because they had been diagnosed with dyslexia.² We also excluded seven participants flagged as outliers based on study completion time (i.e., cases where completion time was more than two standard deviations from the mean study duration). Data from 15 participants were also excluded because they obtained less than 11/12 on the study ‘engagement’ trials (see 2.2.3 for details).

Our final sample retained for analyses consisted of 237 participants (Male = 61; Female = 175; Other = 1; mean age = 19.39, SD = 2.97; range = 18–53 years of age). All participants were native English speakers. Details regarding participants’ self-identified ethnicity can be found in Supplementary materials (SM-Table 1). The study was approved by the University of Ottawa Social Sciences and Humanities Research Ethics Board.

An estimation of the total required sample size was computed for F-test repeated measures using G*Power (Faul et al., 2007). For 3 and 6 measurements (smallest and largest number of stimuli compared), a power of 0.80, a medium effect size f² of 0.25 and a correlation across measurements of 0.20 (as found in a review of cognitive tasks, Goulet & Cousineau, 2019), the required sample was 29 (6 measurements) and 43 (3 measurements). Hence, our sample of 252 participants was sufficiently large to detect a medium effect size.

² Recruitment of participants for this study was part of a larger project where some of the stimuli involved reading text messages. For the larger project, participants with dyslexia were removed because it could affect reading times.

2.2. Materials

Table 1. Novel emoji stimuli and AUs portrayed.

| Emotion | Novel emoji name | Action unit codes |
|---|---|---|
| Fear | “fear with teeth” | 1-2-4-5-20-25-26 |
| Fear | “fear with no teeth” | 1-2-4-5-25-26 |
| Neutral | “neutral” | |
| Happiness | “happy open eyes” | 6-12-25-26 |
| Happiness | “happy closed eyes” | 6-12-25-26 |
| Sadness | “sad lower lid raiser” | 1-4-7-15-17 |
| Sadness | “sad neutral eyes” | 1-4-15-17 |
| Surprise | “surprise” | 1-2-5-25-26 |
| Anger | “anger with teeth” | 4-5-10-25 |
| Anger | “anger closed mouth” | 4-5-23-24 |

2.2.1. Stimuli

A total of 33 stimuli were used in this study: 10 novel emojis with action units, 9 existing emojis, and 14 face photographs. Emojis with action units were created to portray key AUs for sadness, happiness, anger, surprise, fear, or neutral (Gosselin et al., 1995; Paul Ekman Group, n.d.; see Table 1). Emojis with action units were created by the research team using an iPhone compatible application, Moji Maker, as well as a photo editing software, Adobe Photoshop. The final emojis with action units retained for the present study are the result of several phases of creation, modification, and team-based discussion where the presence, visibility, and similarity to facial action units were evaluated. Overall, our aim was to (i) include as many target action units as possible in an emoji for a given target emotion, and (ii) include emojis that were comparable to the face stimuli we used as controls in this study. As a result, for some emotions, multiple emojis were created and retained for the study because Moji Maker offered sufficient flexibility to explore different options with regards to AU depictions, intensity, and configuration.

Because of a paucity of empirical data on interpretation of emojis, and because the popularity of given emojis fluctuates with time, we chose existing emojis based on results from Jaeger et al. (2019). Jaeger et al. (2019) derived meanings for several iOS emojis from semantic analysis of internet sites. They also recorded participants’ interpretation of these emojis using a qualitative approach. They report the percentage of participants whose interpretation matched the meaning derived from the semantic analysis. Nine emojis (see Table 4 in Jaeger et al., 2019) were selected as follows: the emoji (1) had to have a content category that matched one of our six target emotions; and (2) had to have at least 65% of respondents who used a label that matched our target emotions to describe that emoji. Existing emojis are shown in the Results and can also be found in Supplementary Materials (SM-Table 2).

Photographs of male and female human faces were taken from the Japanese and Caucasian Facial Expressions of Emotions (JACFEE) database (Biehl et al., 1997; Matsumoto & Ekman, 1988). The AUs associated with each target emotion have been validated (Biehl et al., 1997). One female and one male face were selected for each emotion category based on correspondence with the novel emojis with regards to specific AUs, the number of AUs expressed, and their intensity. For fear, we created two novel emojis, one that portrayed AU20 (fear with teeth), and the other without. Finding female face photographs with the exact same combination of AUs was impossible. As a result, we utilized two male and two female faces for this condition. JACFEE stimuli used in this study are shown in Supplementary Materials (SM-Table 3).

2.2.2. Procedure

The study was administered online using a Qualtrics-based survey (https://www.qualtrics.com). Median time of completion was 49 min. After answering demographic questions (age, gender, education, etc.), participants were taken through the three main experimental blocks
(see Fig. 2). The first experimental block was a qualitative block where participants labelled the stimuli. The second and third blocks were quantitative blocks where participants rated either emotional interpretations (one block) or cognitive interpretations (the other block) of the stimuli. Order of presentation of the emotional interpretation and cognitive interpretation blocks was counterbalanced across participants. To maximize standard viewing conditions, participants were instructed to complete the study on a laptop computer located approximately 50 cm from their eyes. To illustrate the visual appearance of a given trial, examples of test windows captured while the survey was run from different platforms are provided in Appendix D. The size of the stimuli for these examples is also provided.

In all the blocks, stimuli were presented sequentially in a random order. In the qualitative block, each stimulus was accompanied by the following instructions: “Please look at this expression and describe all of the moods and/or feelings you think it conveys”. Participants typed their answers in a response box shown below the question. There was no limit to the number of characters that could be entered in the response box. Following the qualitative block, participants were given a 2-min break.

In the second part of the experiment, participants were taken through the emotional interpretation and cognitive interpretation blocks. For each block, stimuli were presented sequentially in a random order. In the emotion interpretation block, participants were asked to “Please use the following response options to indicate how intense the emotion or emotions are portrayed in the current expression” for each stimulus presented.
Six 6-point scales ranging from 0 (absent emotion) to 5 (very strong emotion) were shown, one for each of the 5 basic emotions (happiness, anger, sadness, fear, and surprise; see Gosselin et al., 1995) plus a filler item (hunger; Scherer & Grandjean, 2008; see Table 2). Neutral was not included as an option as the absence of expression would be rated as 0 for all emotions. In the cognitive interpretation block, participants were shown again all stimuli in random order, one after the other. Participants were asked to “Please use the following response options to indicate how likely the current expression is to be portrayed in each of the following situations” for each stimulus presented. Six 6-point rating scales ranging from 0 (expression unlikely) to 5 (expression very likely) were shown, one for each of the six cognitive interpretations plus a filler item (see Table 2). Cognitive interpretations were taken from Scherer and Grandjean (2008).

In addition, 12 ‘engagement’ trials were included across the three blocks to measure participants’ attentiveness to the task at hand. For each block, four engagement trials were embedded semi-randomly among the regular trials. Out of those four engagement trials, two followed a trial where an emoji had been shown and two followed a trial where face photographs had been shown. Each engagement trial consisted of three images, including one from the immediately preceding trial and two similar distractors never shown throughout the study. Participants had to identify the stimulus that was presented in the previous trial.

Table 2. Situational appraisals and emotion pairings.

| Emotion | Situational appraisal |
|---|---|
| Fear | I am in a dangerous situation and I don’t know how to get out of it. |
| Neutral | I am not thinking about anything in particular at this moment. |
| Happiness | I have just received a gift. |
| Sadness | I just lost someone very close to me. |
| Hunger\* | I think I need to eat something\* |
| Surprise | Something unexpected has happened. |
| Anger | This ruins my plans but I will fight to get what I want. |

Notes: \*Filler items used by Yik and Russell (1999) (as cited in Scherer & Grandjean, 2008). Wording of appraisals for Neutral and Anger have been modified for this study (see Scherer & Grandjean, 2008, for original wording).

2.3. Dependent variables

For the qualitative block, the proportion of participants (word count/number of participants × 100) who provided the seven most frequently used labels was extracted from the answers provided using InVivo software.

For the quantitative block, three dependent variables were extracted from the data: (1) accuracy, (2) confusability and (3) intensity.

(1) For accuracy, a score of 1 was given if the participant gave the targeted emotion category the highest intensity rating, or else a score of 0 was assigned (see Gosselin et al., 1995). For example, a participant would get a score of 1 if the fear expression was shown and the participant provided the highest rating for the fear response category. Hence, accuracy reflects how accurately participants interpreted emojis according to the targeted emotion category.

(2) Confusability was operationalized as the mean intensity of the non-targeted emotions. For example, a participant would get a score of 4 if a fear expression was shown and the participant gave a rating of 2 for two other emotions than fear. Confusability reflects how likely a stimulus is to be confused with unintended emotions. These two dependent variables were measured for both emotion and cognitive interpretations.

(3) Intensity was operationalized as the intensity rating for the targeted emotion. For example, a participant would get an intensity score of 5 if the stimulus portrayed fear and the fear emotion was rated at 5 (maximum rating: very strong emotion) on the scale. For the neutral emoji, it would be expected that a stimulus that conveys no emotion would receive a 0 on all the rating scales.
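As a rough illustration of the three scoring rules described in Section 2.3, the sketch below scores one participant's ratings for a single stimulus. It is not the authors' analysis code: the function names, the dictionary layout, the inclusion of the filler scale among the non-targeted scales, and the strict tie rule (a tie for the highest rating is scored 0) are all assumptions made for illustration. The text defines confusability as a mean, whereas its worked example (two non-target ratings of 2 giving a score of 4) corresponds to a sum, so both variants are shown.

```python
def accuracy(ratings, target):
    """Return 1 if the target scale alone has the highest rating, else 0.

    Assumption: a tie for the highest rating is scored 0; the source
    does not say how ties were handled.
    """
    top = max(ratings.values())
    winners = [scale for scale, value in ratings.items() if value == top]
    return 1 if winners == [target] else 0


def non_target_ratings(ratings, target):
    """Ratings on every scale other than the target one.

    Assumption: the filler scale counts as a non-targeted scale.
    """
    return [value for scale, value in ratings.items() if scale != target]


def confusability_sum(ratings, target):
    """Sum of non-target ratings (matches the worked example in the text)."""
    return sum(non_target_ratings(ratings, target))


def confusability_mean(ratings, target):
    """Mean of non-target ratings (matches the stated definition)."""
    others = non_target_ratings(ratings, target)
    return sum(others) / len(others)


def intensity(ratings, target):
    """Rating given to the targeted emotion."""
    return ratings[target]


# Hypothetical ratings (0-5) for a fear stimulus, including the filler scale.
example = {"happiness": 0, "anger": 2, "sadness": 2,
           "fear": 5, "surprise": 0, "hunger": 0}

print(accuracy(example, "fear"))
print(confusability_sum(example, "fear"))
print(confusability_mean(example, "fear"))
print(intensity(example, "fear"))
```

Keeping the sum and the mean as separate functions makes it easy to check which operationalization reproduces a reported value before committing to one.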
We therefore computed the sum of the ratings across participants for all six scales. The lower the sum, the more ‘neutral’ the interpretation.

Fig. 2. Flowchart of procedure for data collection.

2.4. Data analysis

Given the very large number of pairwise comparisons that would be required to compare all stimuli across all dependent variables, we opted for visual representation of the data. Differences across stimulus categories can be ascertained using difference- and correlation-adjusted 95% confidence interval (CI) error bars (Cousineau et al., 2021). With such CIs, mean results can be compared across stimuli using the golden rule: the mean result for stimulus A is considered different from the mean result for stimulus B if the mean value for stimulus A falls outside the CI for stimulus B (Cousineau, 2017). Effect sizes can also be evaluated visually using an indicator of the magnitude of a Cohen’s d of 0.25, which corresponds to a small-to-medium effect (Cohen, 1988). For accuracy, which is a binary variable, mean proportion and CIs were calculated using a technique derived from the two-proportions test and the arcsin transform (Cousineau, 2021; Laurencelle & Cousineau, 2022). For simplicity, and because emotion and cognitive interpretation results were generally consistent, results for the dependent variable Intensity are only reported for the emotion interpretation data.

3. Results

3.1. H1: consistency in interpretation for verbal labels will be superior or equivalent for emojis with action units compared to existing emojis and human faces

Results of the qualitative analysis are shown in Fig. 3. For anger, the novel emoji ‘mouth open’ and the existing angry emoji were interpreted with a high level of agreement with over 80% of participants using the label ‘angry’ to describe the emotion conveyed. The next most frequent label was ‘frustrated’, with over 25% of participants using this label to describe the novel ‘closed mouth’ angry emoji and the existing emoji. Face photographs yielded comparable results. For fear, results were less consistent across participants. The most frequent label was ‘scared’, followed by ‘worry’ and ‘nervous’. Agreement among participants was low for these labels, between 15% and 30%. Overall, results were comparable across the three stimuli tested. For surprise, the novel emoji was labelled as ‘amazed’ by almost 70% of participants, and as ‘shocked’ by 55% of participants. Only the existing ‘screaming’ emoji produced similar consistency in interpretation, with almost 75% of participants labelling it as ‘shocked’. Face photographs produced comparable results as the novel emoji for the label ‘amazed’ and as the existing ‘screaming’ emoji for ‘shocked’. For happiness, the existing emojis and face photographs produced the most consistent interpretation with over 85% of participants labelling these stimuli as ‘happy’. While significantly fewer participants labelled the novel emojis as ‘happy’ (65% and 75% of participants), over 35% of participants labelled the happy ‘with eyes closed’ novel emoji as ‘laughter’, which is consistent with the emotion targeted by the emoji. Hence, labelling consistency was comparable across all stimuli tested. For sadness, the ‘tear drop’ existing emoji produced the most consistent interpretations with over 85% of participants labelling it as ‘sad’. Similar results were obtained for face photographs. Fewer participants (55%–60% of participants) labelled the novel emojis as ‘sad’. However, all stimuli produced comparably consistent interpretations for the second most frequent label ‘depressed’. Finally, for neutral, face photographs were labelled as ‘neutral’ by almost 35% of participants, a higher rate of agreement than the novel and existing emojis, which were labelled as ‘neutral’ by just over 20% of participants.

Fig. 3. Results showing the proportion of participants who endorsed specific labels for novel emojis (*), existing emojis and face photographs. Note. Error bars represent difference- and correlation-adjusted 95% CI. The mean result for stimulus A is considered different from the mean result for stimulus B if the mean value for stimulus A falls outside the CI for stimulus B (Cousineau, 2017). The purple bar illustrates the magnitude of a small-to-medium effect size.

3.2. H2: accuracy with identifying the targeted emotion will be superior or equivalent for emojis with action units compared to existing emojis and human faces

Fig. 4. Results for angry novel emojis (*), existing emojis, and face photographs. Accuracy, confusability and intensity results are shown for emotion interpretations (left) and cognitive interpretations (right). Error bars represent 95% CI. The mean result for stimulus A is considered different from the mean result for stimulus B if the mean value for stimulus A falls outside the CI for stimulus B (e.g., Cousineau, 2017). The purple bar illustrates the magnitude of a small-to-medium effect size.

Accuracy results are shown in the first row of Figs. 4–9. Accuracy reflects how accurately participants interpreted emojis according to the targeted emotion category. Because results were consistent, the following description encompasses both emotion interpretations and cognitive interpretations. For anger, novel emojis were interpreted as accurately as existing emojis and faces. For fear, novel emojis were interpreted as accurately as faces (no existing fear emoji met the inclusion criteria described in Stimuli and hence no fear emoji was included in our study). For surprise, our novel emojis were interpreted as accurately as faces and more accurately than existing emojis. For sadness, novel emojis performed poorly: they were interpreted less accurately than existing emojis and faces.
Finally, for happiness, the ‘eyes closed’ novel emoji was interpreted as accurately as existing emojis and faces. In contrast, the ‘eyes open’ novel emoji was interpreted less accurately than the other stimuli tested.

3.3. H3: confusion between the targeted emotion and other emotions will be inferior or equivalent for emojis with action units compared to existing emojis and human faces

Confusability results are shown in the second row of Figs. 4–9. Confusability reflects how likely a stimulus is to be confused with emotions not targeted by the stimulus. Again, descriptions encompass both types of interpretations because results were consistent across emotional and cognitive interpretations. For anger, the existing emoji produced less confusion than the other stimuli tested. The novel emojis produced as much confusion as faces. For fear, all stimuli tested produced the same level of confusion. For surprise, both novel emojis produced as little confusion as faces. Existing emojis produced the most confusion. For sadness, novel emojis produced more confusion than existing emojis and faces. Finally, for happiness, the ‘eyes closed’ novel emoji produced as little confusion as existing emojis and faces. The ‘eyes open’ novel emoji produced the most confusion.

Fig. 5. Results for fear novel emojis (*), existing emojis, and face photographs. Accuracy, confusability and intensity results are shown for emotion interpretations (left) and cognitive interpretations (right). Error bars represent 95% CI. The mean result for stimulus A is considered different from the mean result for stimulus B if the mean value for stimulus A falls outside the CI for stimulus B (e.g., Cousineau, 2017). The purple bar illustrates the magnitude of a small-to-medium effect size.

3.4. H4: ratings of intensity for the targeted emotion will be superior or equivalent for emojis with action units compared to existing emojis and human faces

Intensity results are shown in the last row of Figs. 4–9. Intensity reflects the strength of the emotional signal, with stronger signals being associated with more accurate emotion identification (Hess et al., 1997). For this analysis, we focused on emotion interpretations. For anger, ratings for both novel emojis were more intense than for the existing emoji and as intense as for faces. For fear, both novel emojis elicited slightly higher ratings than face photographs but the difference was negligible. For surprise, the novel emoji produced the lowest ratings. For sadness, both novel emojis produced the highest ratings. For happiness, the ‘eyes open’ novel emoji produced the most intense ratings.

Fig. 6. Results for surprised novel emojis (*), existing emojis, and face photographs. Accuracy, confusability and intensity results are shown for emotion interpretations (left) and cognitive interpretations (right). Error bars represent 95% CI. The mean result for stimulus A is considered different from the mean result for stimulus B if the mean value for stimulus A falls outside the CI for stimulus B (e.g., Cousineau, 2017). The purple bar illustrates the magnitude of a small-to-medium effect size.

4. Discussion

Emojis have the potential to enrich digital text-based interactions by facilitating processing and understanding of the emotional tone and meaning of text messages (Boutet et al., 2021; Daniel & Camp, 2020; Gesselman et al., 2019; Herring & Dainas, 2020; Kaye et al., 2021; Kralj Novak et al., 2015; Rodrigues et al., 2017). However, many existing emojis are ambiguous (Herring & Dainas, 2020; Jaeger & Ares, 2017; Jaeger et al., 2018; Jaeger et al., 2019; Miller et al., 2016; Völker & Mannheim, 2021), which can lead to miscommunication, elicit feelings of anxiety, and put a strain on social relationships (Bai et al., 2019; Kingsbury & Coplan, 2016). We hypothesized that incorporating facial action units (AUs), which are the basic elements of facial expressions of emotions (Ekman & Friesen, 1982), might reduce ambiguous and inconsistent interpretations. To test this hypothesis, novel emojis that incorporate pictorial depictions of facial AUs were developed. We hereby introduce these novel emojis and report data on how their interpretation compares with that of existing emojis and face photographs. We first review results as they relate to the hypotheses outlined in the Introduction, followed by a discussion of implications and limitations.

Fig. 7. Results for happy novel emojis (*), existing emojis, and face photographs. Accuracy, confusability and intensity results are shown for emotion interpretations (left) and cognitive interpretations (right). Error bars represent 95% CI. The mean result for stimulus A is considered different from the mean result for stimulus B if the mean value for stimulus A falls outside the CI for stimulus B (e.g., Cousineau, 2017). The purple bar illustrates the magnitude of a small-to-medium effect size.

4.1. Qualitative labels (H1)

Overall, the results support H1 with consistency in interpretation being superior or equivalent for emojis with action units compared to existing emojis and face photographs. However, the proportion of participants who interpreted emojis using the same label varied across emotions. Existing emojis were chosen based on the following criterion: emojis that were labelled with one of our target emotions by at least 65% of participants in Jaeger et al. (2019) were included. If one were to use the same threshold for the emojis with AUs, the novel angry, surprised, and happy emojis would meet this criterion. The highest proportion observed was for the existing ‘tear drop’ sad emoji, with over 90% of participants providing the ‘sad’ label for this stimulus. In contrast, consistency in interpretation was very low for fear and neutral emojis. Finally, face photographs did not yield more consistent interpretations than emojis overall.

Fig. 8. Results for sad novel emojis (*), existing emojis, and face photographs. Accuracy, confusability and intensity results are shown for emotion interpretations (left) and cognitive interpretations (right). Error bars represent 95% CI. The mean result for stimulus A is considered different from the mean result for stimulus B if the mean value for stimulus A falls outside the CI for stimulus B (e.g., Cousineau, 2017). The purple bar illustrates the magnitude of a small-to-medium effect size.

The proportions of participants who endorsed targeted labels were generally higher in Jaeger et al. (2019) than in the results reported here. For example, the existing neutral emoji was labelled as ‘neutral’ by 76% of participants in Jaeger et al. (2019), in contrast with only 50% of participants in our study. Reasons for this difference are unclear. One possibility is that a much larger sample size was used in Jaeger et al. (2019) (1000+) than in the present study (250+). Another possibility is that data was collected at two different time points, and that emoji interpretations evolve with time. It is important to note that we intentionally chose existing emojis with high consistency in interpretation to use the best possible benchmark for comparison with the emojis with action units we created.
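As an illustration of the quantities discussed above (the proportion of participants using a target label, the 65% inclusion criterion, and the comparison rule stated in the figure captions), the following is a minimal Python sketch. It is not the analysis code used in this study: the function names and the response data are hypothetical and were invented for this example, and the confidence interval is passed in as given rather than computed.

```python
from collections import Counter


def label_proportion(labels, target):
    """Share of open-ended responses that match the target emotion label."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(label.strip().lower() for label in labels)
    return counts[target.strip().lower()] / len(labels)


def meets_inclusion_threshold(labels, target, threshold=0.65):
    """True if at least `threshold` of responses use the target label."""
    return label_proportion(labels, target) >= threshold


def differs_from(mean_a, ci_b):
    """Caption rule: A differs from B if A's mean lies outside B's CI."""
    lower, upper = ci_b
    return mean_a < lower or mean_a > upper


# Hypothetical responses, invented for illustration only.
sad_labels = ["sad"] * 19 + ["tired"]
neutral_labels = ["neutral"] * 10 + ["bored"] * 6 + ["annoyed"] * 4

print(label_proportion(sad_labels, "sad"))                   # 0.95
print(meets_inclusion_threshold(neutral_labels, "neutral"))  # False
print(differs_from(0.80, (0.55, 0.70)))                      # True
```

Under these assumed inputs, a stimulus labelled consistently by 19 of 20 respondents clears the 65% criterion, whereas one labelled consistently by half of respondents does not.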
Interpretation of those emojis is therefore not representative of the ambiguity typically found in emoji interpretation. Past research shows that only 25%–50% of participants label emoji meanings consistently (Jaeger et al., 2019; Miller et al., 2016). In that regard, the novel angry ‘mouth open’, happy ‘eyes closed’, and surprised novel emojis are faring very well in terms of addressing the ambiguity problem, with 90%, 75% and 55% of participants using the targeted emotion label to describe these stimuli.

Fig. 9. Results for neutral novel emojis (*), existing emojis, and face photographs. Results represent the sum of intensity ratings for the five emotion categories measured. The lower the value, the more ‘neutral’ is the interpretation. Error bars represent 95% CI. The mean result for stimulus A is considered different from the mean result for stimulus B if the mean value for stimulus A falls outside the CI for stimulus B (e.g., Cousineau, 2017). The purple bar illustrates the magnitude of a small-to-medium effect size.

4.2. Emotion and cognitive interpretations (H2, H3, H4)

As compared to existing emojis and faces, participants were more accurate or as accurate at identifying the target emotion conveyed by 7 out of 10 novel emojis with action units. The only emojis that did not perform as well were the two emojis for sadness and the ‘eyes open’ happy emoji. With regard to confusion, results were very similar, with the same 7 emojis with action units being less likely or as likely to be confused with non-intended emotions as existing emojis. One notable exception is the ‘mouth open’ angry novel emoji, which produced more confusion than the existing angry emoji but just as much confusion as faces. Comparable results were found for cognitive interpretations. With regard to intensity, 9 out of 10 emojis with action units produced more intense ratings than existing emojis and face photographs. As a whole, these results support H2, H3 and H4 for the majority of the emojis with action units developed. Hence, the quantitative results, together with the qualitative results, support the notion that emojis that incorporate signals from facial expressions offer a promising solution to the ambiguity problem.

Three of the emojis with action units conveyed a more ambiguous signal than existing emojis: the two emojis for sadness and the ‘eyes open’ happy emoji. Difficulties inherent to depicting certain facial AUs may have contributed in part to these results. For example, we did not incorporate any AUs associated with the face contour (e.g., chin raiser, AU17, was not incorporated in the sad emojis) because we wanted to maintain the circular outline that is a signature of the vast majority of emojis currently available. It is possible that participants had difficulty interpreting depicted AUs in the absence of other AUs that would typically be provided in facial expressions of emotions. Indeed, groups of AUs are coactivated when a given emotion is expressed (Ekman, 1992b) and it has been suggested that specific AUs and AU-configurations offer critical, diagnostic information for signalling basic emotions (Smith et al., 2005; Wegrzyn et al., 2017).

The existing ‘tear drop’ sad emoji produced the most consistent interpretations across all emojis tested: 95% of participants used the targeted ‘sad’ label to describe the emotion conveyed by this stimulus. Moreover, accuracy of interpretation was at 95% for this emoji. Similarly, 98% of participants in Jaeger et al. (2019) labelled this emoji as ‘sad’. These results suggest that the tear drop offers a powerful symbol of sadness by representing the act of crying. We conclude that expressing sadness using the tear drop symbol is likely more effective than using AUs and hence encourage its use to communicate sadness.

It is important to note that confusions between certain emotional expressions such as fear and surprise are well documented in the FtF literature (e.g., Gosselin et al., 1995; Jack et al., 2009; Palermo & Coltheart, 2004; Tong et al., 2007; Zhang & Ji, 2005). Similar results were found for fearful stimuli, although the effect was stronger for face photographs than for emojis. With regard to stimuli that conveyed surprise, only the existing ‘screaming’ emoji produced confusions with fear. A similar finding was reported by Jaeger et al. (2019). It therefore appears that certain emojis convey emotional signals less ambiguously than face stimuli. As discussed in more detail below, one means of reducing miscommunication is to make those emojis readily available in emoji menus.

4.3. Implications

Overall, our results provide a ‘proof-of-concept’ for the hypothesis that incorporating signals from facial expressions of emotions in emojis offers a promising avenue for reducing miscommunication in digital interactions. Furthermore, findings of consistent and accurate interpretation of specific emojis with action units and existing emojis imply that these stimuli have the potential to enhance emotion communication on digital platforms. The data reported here and elsewhere (Jaeger et al., 2019; Miller et al., 2016) point to specific emojis that are less ambiguous and that should be favored by users. Ultimately, we hope that the novel emojis that were consistently and accurately interpreted will also be made available on popular platforms to enhance emotional communication.

A critical difference between our novel emojis and existing emojis is that the novel emojis were never seen before by the participants and hence their meaning was entirely derived from the presence of visual cues derived from facial expressions of emotions. This contrasts with many existing emojis whose meanings are learned implicitly from common usage or cultural references (Fischer & Herbert, 2021; Herring & Dainas, 2020; Langlois, 2019). It is unclear how the meaning of existing emojis is derived from repeated use in different contexts and communities. For example, for some existing emojis, visual cues offer a dominant cue for interpretation, while for other emojis, implicitly learned meanings may be the dominant cue. Either way, what our and others’ research suggests is that these routes can lead to inconsistent or ambiguous interpretations (Herring & Dainas, 2020; Jaeger & Ares, 2017; Jaeger et al., 2018; Jaeger et al., 2019; Langlois, 2019; Miller et al., 2016; Völker & Mannheim, 2021). Whether repeated use of the novel emojis would produce the same effect over time remains to be determined.

However, we prefer to adopt a more optimistic perspective. We predict that one important advantage of incorporating AUs in emojis is that users with limited digital literacy, such as seniors (Hauk et al., 2018; Statistics Canada, 2018), might decode these emojis more accurately than existing emojis. This is critical given that older adults appear to experience more confusion when using emojis than younger adults (Weiß et al., 2020). We predict that emojis depicting AUs offer a digital equivalent of a FtF system of emotion communication, and hence should facilitate interpretation and usage of emojis across a wider range of users.

4.4. Limitations

One limitation of the current study is that emojis were large and presented alone in the center of the screen. This presentation mode lacks ecological validity as it does not match the typical format in which emojis are shown in text-based communication. It is possible that when used on smaller devices like cell phones, the depictions of facial AUs would not be visible to users and the emotions conveyed may be more ambiguous. It will therefore be important for future research to examine interpretation of these novel emojis in more ecologically valid presentation formats. Another limitation is the fact that our sample came from only one country, which does not reflect world-wide diversity in emoji use and meanings. Additional research with other samples of participants is needed given that emoji interpretations can vary across cultures, and that emoji use is constantly evolving (Herring & Dainas, 2020; Jaeger & Ares, 2017; Jaeger et al., 2018; Jaeger et al., 2019; Langlois, 2019; Miller et al., 2016; Völker & Mannheim, 2021).

The median completion time for the study was 50 min, which might be considered long for an online study. Long testing times can produce fatigue effects or lead to attrition. Order of presentation of the 33 stimuli was randomized, such that fatigue would not systematically affect performance on a given set of stimuli. Moreover, participants did not perform at chance level in the second block, when fatigue effects would most likely be at play. We also did not find evidence that participants who took longer to complete the study were more likely to quit before finishing. Finally, participants’ engagement in the task at hand was evaluated throughout the study and data from participants who made more than one error on engagement trials was excluded from the analysis (see Methods). We therefore feel that completion time likely had little impact on interpretation of our results.

The current study focused on decoding basic emotions from emojis (e.g., Ekman, 1992a). It has been argued that multi-dimensional models more adequately capture affective states (e.g., Fernández-Dols & Russell, 2017; Palermo & Coltheart, 2004). One example is the circumplex model that conceptualizes emotions along two dimensions: valence (positive/negative) and arousal (calm/excited).
In line with previous studies on interpretation of existing emojis (e.g., Ferré et al., 2022; Jaeger et al., 2019; Rodrigues et al., 2018), it will be important for future research to investigate how valence and arousal interpretations of emojis with action units compare with interpretations of existing emojis.

5. Conclusion

Many emojis are ambiguous, which limits the utility of this potentially rich form of emotion communication. Humans recognize and discriminate facial expressions of emotions on the basis of emotional signals called facial AUs (Ekman, 1992b; Ekman & Rosenberg, 2005; Matsumoto & Ekman, 1989; Matsumoto et al., 2000; Zebrowitz, 2017). We hypothesized that incorporating facial AUs in novel emojis may offer a solution to the ambiguous emoji problem. This hypothesis was supported for 7 out of 10 novel emojis created using this approach. Since most humans already have the expertise for decoding facial AUs, knowledge of emoji lexicons (e.g., emojipedia.com) and learning of implied meanings are not necessary for accurate interpretation of these novel emojis. Our hope is that developers of digital technology will take into consideration the empirical data reviewed here to design emoji menus that facilitate use of unambiguous emojis, whether they be existing emojis or the novel emojis developed here. Using unambiguous emojis to convey emotions could reduce miscommunication and improve digital social interactions.

CRediT authorship contribution statement

Isabelle Boutet: Conceptualization, Stimuli development, Methodology, Formal analysis, Project administration, Supervision, Writing – original draft, Writing – review & editing. Joëlle Guay: Stimuli development, Formal analysis, Writing – original draft, Writing – review & editing. Justin Chamberland: Methodology, Software, Formal analysis, Writing – review & editing. Denis Cousineau: Formal analysis, Writing – review & editing. Charles Collin: Conceptualization, Writing – review & editing.

Declaration of competing interest

None.

Data availability

The data are shared on OSF: https://osf.io/4xpjc/?view_only=d385b1a71c144174ad7b13e544698ff9.

Appendix A. Supplementary data

Supplementary data to this article can be found online at https://doi.org/10.1016/j.chbr.2022.100251.

References

Bai, Q., Dan, Q., Mu, Z., & Yang, M. (2019). A systematic review of emoji: Current research and future perspectives. Frontiers in Psychology, 10. https://doi.org/10.3389/fpsyg.2019.02221

Biehl, M., Matsumoto, D., Ekman, P., Hearn, V., Heider, K., Kudoh, T., & Ton, V. (1997). Matsumoto and Ekman’s Japanese and Caucasian facial expressions of emotion (JACFEE): Reliability data and cross-national differences. Journal of Nonverbal Behavior, 21(1), 3–21. https://doi.org/10.1023/A:1024902500935

Boutet, I., LeBlanc, M., Chamberland, J. A., & Collin, C. A. (2021). Emojis influence emotional communication, social attributions, and information processing. Computers in Human Behavior, 119, Article 106722. https://doi.org/10.1016/j.chb.2021.106722

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Routledge. https://doi.org/10.4324/9780203771587

Cousineau, D. (2017). Varieties of confidence intervals. Advances in Cognitive Psychology, 13(2), 140. https://doi.org/10.5709/acp-0214-z

Cousineau, D. (2021). Plotting proportions with superb. https://dcousin3.github.io/superb/articles/VignetteC.html

Cousineau, D., Goulet, M.-A., & Harding, B. (2021). Summary plots with adjusted error bars: The superb framework with an implementation in R. Advances in Methods and Practices in Psychological Science, 1–46. https://doi.org/10.1177/25152459211035109

Daniel, T. A., & Camp, A. L. (2020). Emojis affect processing fluency on social media. Psychology of Popular Media, 9(2), 208. https://doi.org/10.1037/ppm0000219

Edwards, R., Bybee, B. T., Frost, J. K., Harvey, A. J., & Navarro, M. (2017). That’s not what I meant: How misunderstanding is related to channel and perspective-taking. Journal of Language and Social Psychology, 36(2), 188–210. https://doi.org/10.1177/0261927X16662968

Ekman, P. (1989). The argument and evidence about universals in facial expressions. Handbook of Social Psychophysiology, 143–164.

Ekman, P. (1992a). An argument for basic emotions. Cognition & Emotion, 169–200. https://doi.org/10.1080/02699939208411068

Ekman, P. (1992b). Facial expressions of emotion: New findings, new questions. Psychological Science, 3(1), 34–38. https://doi.org/10.1111/j.1467-9280.1992.tb00253.x

Ekman, P., & Friesen, W. V. (1982). Felt, false, and miserable smiles. Journal of Nonverbal Behavior, 6(4), 238–252. https://doi.org/10.1007/BF00987191

Ekman, P., & Keltner, D. (1997). Universal facial expressions of emotion: An old controversy and new findings.

Ekman, P., & Rosenberg, E. L. (2005). What the face reveals: Basic and applied studies of spontaneous expression using the Facial Action Coding System (FACS). Oxford University Press. https://doi.org/10.1093/acprof:oso/9780195179644.001.0001

Emojipedia. (n.d.). World emoji day. https://worldemojiday.com/

Emojipedia. (n.d.). Emojipedia. https://emojipedia.org/

Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/BF03193146

Fernández-Dols, J.-M., & Russell, J. A. (Eds.). (2017). The science of facial expression. Oxford University Press.

Fischer, B., & Herbert, C. (2021). Emoji as affective symbols: Affective judgments of emoji, emoticons and human faces varying in emotional content. Frontiers in Psychology, 12, 1019. https://doi.org/10.3389/fpsyg.2021.645173

Franco, C. L., & Fugate, J. M. B. (2020). Emoji face renderings: Exploring the role emoji platform differences have on emotional interpretation. Journal of Nonverbal Behavior, 44(2), 301–328. https://doi.org/10.1007/s10919-019-00330-1

Gawne, L., & McCulloch, G. (2019). Emoji as digital gestures. language@internet, 17(2).

Gesselman, A. N., Ta, V. P., & Garcia, J. R. (2019). Worth a thousand interpersonal words: Emoji as affective signals for relationship-oriented digital communication. PLoS One, 14(8), Article e0221297. https://doi.org/10.1371/journal.pone.0221297

Gosselin, P., Kirouac, G., & Doré, F. Y. (1995). Components and recognition of facial expression in the communication of emotion by actors. Journal of Personality and Social Psychology, 68(1), 83–96. https://doi.org/10.1037/0022-3514.68.1.83

Goulet, M. A., & Cousineau, D. (2019). The power of replicated measures to increase statistical power. Advances in Methods and Practices in Psychological Science, 2(3), 199–213.

Hall, J. A., Horgan, T. G., & Murphy, N. A. (2019). Nonverbal communication. Annual Review of Psychology, 70, 271–294. https://doi.org/10.1146/annurev-psych-010418103145

Hauk, N., Hüffmeier, J., & Krumm, S. (2018). Ready to be a silver surfer? A meta-analysis on the relationship between chronological age and technology acceptance. Computers in Human Behavior, 84, 304–319. https://doi.org/10.1016/j.chb.2018.01.020

Herring, S. C., & Dainas, A. R. (2020). Gender and age influences on interpretation of emoji functions. ACM Transactions on Social Computing, 3(2), 1–26. https://doi.org/10.1145/3375629

Hess, U., Blairy, S., & Kleck, R. E. (1997). The intensity of emotional facial expressions and decoding accuracy. Journal of Nonverbal Behavior, 21(4), 241–257.

Hsieh, S. H., & Tseng, T. H. (2017). Playfulness in mobile instant messaging: Examining the influence of emoticons and text messaging on social interaction. Computers in Human Behavior, 69, 405–414. https://doi.org/10.1016/j.chb.2016.12.052

Jack, R. E., Blais, C., Scheepers, C., Schyns, P. G., & Caldara, R. (2009). Cultural confusions show that facial expressions are not universal. Current Biology, 19, 1543–1548. https://doi.org/10.1016/j.cub.2009.07.051

Jack, R. E., & Schyns, P. G. (2017). Toward a social psychophysics of face communication. Annual Review of Psychology, 68, 269–297. https://doi.org/10.1146/annurev-psych-010416-044242

Jaeger, S. R., & Ares, G. (2017). Dominant meanings of facial emoji: Insights from Chinese consumers and comparison with meanings from internet resources. Food Quality and Preference, 62, 275–283. https://doi.org/10.1016/j.foodqual.2017.04.009

Jaeger, S. R., Roigard, C. M., Jin, D., Vidal, L., & Ares, G. (2019). Valence, arousal and sentiment meanings of 33 facial emoji: Insights for the use of emoji in consumer research. Food Research International, 119, 895–907. https://doi.org/10.1016/j.foodres.2018.10.074

Jaeger, S. R., Xia, Y., Lee, P. Y., Hunter, D. C., Beresford, M. K., & Ares, G. (2018). Emoji questionnaires can be used with a range of population segments: Findings relating to age, gender and frequency of emoji/emoticon use. Food Quality and Preference, 68, 397–410. https://doi.org/10.1016/j.foodqual.2017.12.011

Kaye, L. K., Rodriguez-Cuadrado, S., Malone, S. A., Wall, H. J., Gaunt, E., Mulvey, A. L., & Graham, C. (2021). How emotional are emoji?: Exploring the effect of emotional valence on the processing of emoji stimuli. Computers in Human Behavior, 116, Article 106648. https://doi.org/10.1016/j.chb.2020.106648

Kelly, L., Keaten, J. A., Becker, B., Cole, J., Littleford, L., & Rothe, B. (2012). ap. Qualitative Research Reports in Communication, 13(1), 1–9. https://doi.org/10.1080/17459435.2012.719203

Kelly, L., & Miller-Ott, A. E. (2018). Perceived miscommunication in friends’ and romantic partners’ texted conversations. Southern Communication Journal, 83(4), 267–280. https://doi.org/10.1080/1041794X.2018.1488271

Kingsbury, M., & Coplan, R. J. (2016). RU mad@ me? Social anxiety and interpretation of ambiguous text messages. Computers in Human Behavior, 54, 368–379. https://doi.org/10.1016/j.chb.2015.08.032

Kralj Novak, P., Smailović, J., Sluban, B., & Mozetič, I. (2015). Sentiment of emojis. PLoS One, 10(12), Article e0144296. https://doi.org/10.1371/journal.pone.0144296

Langlois, O. (2019). L’impact des émojis sur la perception affective des messages texte [Doctoral dissertation, Université d’Ottawa/University of Ottawa]. https://doi.org/10.20381/ruor-23871

Laurencelle, L., & Cousineau, D. (2022). Analyses of proportions using arcsine transform with any experimental design. Manuscript submitted for publication.

Matsumoto, D., & Ekman, P. (1988). Japanese and Caucasian facial expressions of emotion and neutral faces (JACFEE and JACNeuF). (Available from the Human Interaction Laboratory, University of California, San Francisco, 401 Parnassus Avenue, San Francisco, CA 94143).

Matsumoto, D., & Ekman, P. (1989). American-Japanese cultural differences in intensity ratings of facial expressions of emotion. Motivation and Emotion, 13(2), 143–157.

Matsumoto, D., LeRoux, J., Wilson-Cohn, C., Raroque, J., Kooken, K., Ekman, P., Yrizarry, N., Loewinger, S., Uchida, H., Yee, A., Amo, L., & Goh, A. (2000). A new test to measure emotion recognition ability: Matsumoto and Ekman’s Japanese and Caucasian brief affect recognition test (JACBART). Journal of Nonverbal Behavior, 24(3), 179–209.

Miller, H., Thebault-Spieker, J., Chang, S., Johnson, I., Terveen, L., & Hecht, B. (2016, March). “Blissfully happy” or “ready to fight”: Varying interpretations of emoji. Proceedings of the International AAAI Conference on Web and Social Media, 10(1).

Ortony, A., & Turner, T. J. (1990). What’s basic about basic emotions? Psychological Review, 97(3), 315–331. https://doi.org/10.1037/0033-295X.97.3.315

Palermo, R., & Coltheart, M. (2004). Photographs of facial expression: Accuracy, response times, and ratings of intensity. Behavior Research Methods, Instruments, & Computers, 36(4), 634–638. https://doi.org/10.3758/bf03206544

Paul Ekman Group. (n.d.). Universal emotions. Retrieved September 19, 2020, from https://www.paulekman.com/universal-emotions/

Rawlings, A. (2018, December 11). Why emoji mean different things in different cultures. BBC News. https://www.bbc.com/future/article/20181211-why-emoji-mean-different-things-in-different-cultures

Rodrigues, D., Lopes, D., Prada, M., Thompson, D., & Garrido, M. V. (2017). A frown emoji can be worth a thousand words: Perceptions of emoji use in text messages exchanged between romantic partners. Telematics and Informatics, 34(8), 1532–1543. https://doi.org/10.1016/j.tele.2017.07.001

Rodrigues, D., Prada, M., Gaspar, R., et al. (2018). Lisbon Emoji and Emoticon Database (LEED): Norms for emoji and emoticons in seven evaluative dimensions. Behavior Research Methods, 50, 392–405. https://doi.org/10.3758/s13428-017-0878-6

Russell, J. A. (1993). Forced-choice response format in the study of facial expression. Motivation and Emotion, 17(1), 41–51. https://doi.org/10.1007/BF00995206

Scherer, K. R., & Grandjean, D. (2008). Facial expressions allow inference of both emotions and their components. Cognition & Emotion, 22(5), 789–801. https://doi.org/10.1080/02699930701516791

Scherer, K. R., & Moors, A. (2018). The emotion process: Event appraisal and component differentiation (Vol. 31). https://doi.org/10.1146/annurev-psych-122216-011854

Smith, M. L., Cottrell, G. W., Gosselin, F., & Schyns, P. G. (2005). Transmitting and decoding facial expressions. Psychological Science, 16(3), 184–189. https://doi.org/10.1111/j.0956-7976.2005.00801.x

Statistics Canada. (2018). Smartphone use and smartphone habits by gender and age group, inactive (Table 22-10-0115-01). https://doi.org/10.25318/2210011501-eng

Tong, Y., Liao, W., & Ji, Q. (2007). Facial action unit recognition by exploiting their dynamic and semantic relationships. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(10), 1683–1699. https://doi.org/10.1109/TPAMI.2007.1094

Völker, J., & Mannheim, C. (2021). Tuned in on senders’ self-revelation: Emojis and emotional intelligence influence interpretation of WhatsApp messages. Computers in Human Behavior Reports, 3, Article 100062. https://doi.org/10.1016/j.chbr.2021.100062

Walther, J. B., & D’Addario, K. P. (2001). The impacts of emoticons on message interpretation in computer-mediated communication. Social Science Computer Review, 19(3), 324–347. https://doi.org/10.1177/089443930101900307

Wegrzyn, M., Vogt, M., Kireclioglu, B., Schneider, J., & Kissler, J. (2017). Mapping the emotional face. How individual face parts contribute to successful emotion recognition. PLoS One, 12(5), Article e0177239. https://doi.org/10.1371/journal.pone.0177239

Weiß, M., Bille, D., Rodrigues, J., & Hewig, J. (2020). Age-related differences in emoji evaluation. Experimental Aging Research, 46(5), 416–432. https://doi.org/10.1080/0361073X.2020.1790087

Yik, M. S. M., & Russell, J. A. (1999). Interpretation of faces: A cross-cultural study of a prediction from Fridlund’s theory. Cognition & Emotion, 13(1), 93–104. https://doi.org/10.1080/026999399379384

Zebrowitz, L. A. (2017). First impressions from faces. Current Directions in Psychological Science, 26(3), 237–242. https://doi.org/10.1177/0963721416683996

Zhang, Y., & Ji, Q. (2005). Active and dynamic information fusion for facial expression understanding from image sequences. IEEE Transactions on Pattern Analysis and Machine Intelligence, 27(5), 699–714. https://doi.org/10.1109/TPAMI.2005.93