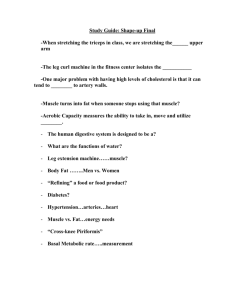

How To Read Research: A Biolayne Guide

Table of Contents

Article 1: Overview of Research
- The Scientific Method
- Variables
- Types of Research
- Study Designs
- Types of Publications

Article 2: Reading and Interpreting Research
- Abstract
- Introduction
- Materials & Methods
- Results
- Discussion
- Conclusion

Article 3: Statistical Concepts
- Overview of Statistics
- Data Representation

Article 4: Challenges for Researchers
- Funding
- Trusting Research

Article 5: Common Methods for Measuring Variables
- Body Water
- Body Composition
- Protein Metabolism
- Hypertrophy Measurements
- Energy Expenditure
- Hormones
- Muscle Excitation
- Strength Testing
- Psychometrics
- Closing Remarks
- References

Article 01: Overview of Research

Science is a branch of knowledge, or body of truths and facts, and it is built on research. Oxford University Press defines research as “the systematic investigation into and study of materials and sources in order to establish facts and reach new conclusions” 1. The term research can have a variety of meanings depending on the context, and there are many branches of research with diverse focuses. This guide provides a general understanding of research from a broad perspective and then narrows it down to the details that are of specific importance to exercise and nutritional science.

It’s important to understand that many of the topics and concepts we discuss have multiple definitions and lack clear boundaries. Throughout this guide, we provide our best definition and interpretation as we understand it. Our goal is to give you the knowledge you need to critically read and interpret scientific publications and their findings. Remember, one study doesn’t prove anything.
Individual studies are pieces of a much larger puzzle.

Tuckman (2012) characterized the research process by these five properties 2:

1. Systematic: researchers follow certain rules and parameters when investigating a specific question and designing a research study. This involves identifying variables of interest, designing a study to test the relationships between those variables, and collecting data to evaluate the problem and prediction.
2. Logical: examining the procedures used to test a theory allows the conclusions that are drawn to be evaluated.
3. Empirical: the process relies on collecting data.
4. Reductive: data are evaluated to establish generalizations that explain relationships.
5. Replicable: the research process is recorded and described in detail so that future studies can test the findings and build on them.

The Scientific Method

You may not remember learning about the scientific method in grade school, so let’s do a quick recap. The scientific method is a formal set of steps that researchers follow to conduct research. It can be broken up in a variety of ways, but for the sake of simplicity we will divide it into these four steps 3:

1. Identifying and developing a problem: all research starts with identifying a problem or topic of interest and defining the study’s purpose.
2. Formulating the hypotheses: a hypothesis is a testable statement of the anticipated results of a study. It is a formal prediction of what will occur when the study is carried out, based on prior results or theory.
3. Gathering data: researchers use established processes and validated methods to measure and collect data during the study or experiment.
4. Analyzing and interpreting results: once data are collected, they are analyzed using statistical methods to determine whether they support the hypothesis.
Researchers aim to understand what was found and how it fits within the context of other evidence.

Variables

Variables are factors that can be measured or manipulated during research. Once the problem is identified, researchers determine the variables of interest and design a study around them so they can be tested and measured. There are many types of variables; here we cover the primary ones you should know to understand the research process.

Independent Variables

Independent variables are what the researcher manipulates to determine the relationship or effect they have on another variable. Independent variables are also known as the experimental or treatment variable, input, cause or stimulus. For example, an independent variable could be the type of diet subjects are following (e.g., high carb, high fat, low carb). Independent variables can also have different levels. For example, if a training study is evaluating how high, moderate and low training volumes affect muscle hypertrophy, training volume would be the independent variable and high, moderate and low would be its levels.

Dependent Variables

Dependent variables are measured following a treatment or stimulus. They are also known as the output or response variable, and they are observed or measured to determine the effect of the independent variable 2. The dependent variable is expected to change as a result of the manipulation of the independent variable. Examples of dependent variables are body composition, strength, resting metabolic rate and blood hormone levels. If a study is investigating a high fat vs. low fat diet and weight loss, weight loss would be considered the dependent variable, while the type of diet would be considered the independent variable.

Control Variables

Control variables are factors that could influence the results but are not the focus of the study 3.
Instead of being studied, control variables are held constant by the researcher to cancel out or neutralize any potential effects they may have on the relationship between the independent and dependent variables 2. For example, caloric intake could be treated as a control variable in a diet study comparing two different types of diets.

Extraneous Variables

Extraneous variables are factors that can influence the relationship between the independent and dependent variables but are not identified or controlled in the study 3. They can cause spurious associations: an apparent association between the independent and dependent variables may actually be due to both being affected by a third, unknown or uncontrolled (extraneous) variable. For example, suppose a study examines differences in weight loss between a high carb/low fat diet and a high fat/low carb diet, but does not equate calories. Without any control over caloric intake, calories become an extraneous variable, because they can affect weight loss in each group irrespective of the type of diet.

Extraneous variables are usually identified after an experiment, when associations between variables are examined further. Researchers can also identify them while designing a study, but a lack of resources may leave them unable to control or account for a specific variable. Confounding variables and covariates are similar to extraneous variables, and the terms are often used synonymously, although they differ slightly. For our purposes, just know that extraneous variables, confounding variables and covariates are additional variables that weren’t fully identified or controlled in the study and that can affect the relationship between the independent and dependent variables.

Types of Research

There are many different types of research designed to answer different kinds of questions and problems.
The types and categories of research are nearly limitless, so we will discuss the common types generally used in exercise and sports science research.

Basic vs. Applied

Research in exercise and nutrition science can be placed somewhere on a spectrum between basic and applied research 3. Basic research is commonly referred to as “bench science.” It is difficult and is generally done in a laboratory under tightly controlled conditions. Basic research operates under scientific theories and often involves animals, but its relevance or direct value to practitioners is limited 3. You can think of this type of research as a scientist in a lab with pipettes and cell cultures, studying underlying molecular mechanisms. In contrast, applied research offers less control, but it’s much more practical and has high ecological validity, meaning its findings apply to real-world settings and conditions. This type of research involves human subjects and is based on common practice and experience. Comparing different diet and training programs while measuring fat loss or muscle growth would be considered applied research, because such studies are performed in real-world settings with limited control over the environment.

Quantitative Research

Quantitative research is the most common type of research you will find in exercise and nutrition science. It is concerned with numbers and groups, and its aim is to determine the relationships between variables 4. These relationships are expressed through statistical analysis (covered later). This type of research aims to be objective, closely follows the scientific method and often seeks to determine cause and effect. Quantitative studies can be further classified into two study types: experimental and descriptive (observational).
Experimental - Experimental research involves the manipulation of treatments or interventions. Its aim is to establish cause-and-effect relationships, and it commonly uses some form of randomization (discussed below) 3. Experimental studies require diligent control over variables and other factors that may impact their outcomes. They are also known as longitudinal or repeated-measures studies 4, and they measure subjects before and after treatments or interventions. This type of research aims to explain phenomena through controlled manipulation of variables and is commonly viewed as the “gold standard” for research.

Descriptive - Descriptive research, also known as observational research, measures things as they are without intervening 4. There is no attempt to change or modify behaviors. This type of research doesn’t attempt to determine cause and effect (although many media outlets, and even researchers, are guilty of inferring causation from these results); instead, it characterizes phenomena as they exist. It is less controlled and uses questionnaires, interviews and observation.

Qualitative Research

Qualitative research is concerned with words and individuals. It is more subjective, seeks to understand multiple realities or truths, and requires constant comparison and revision. Qualitative research rarely develops hypotheses before the study and instead uses more general questions to guide it 3. Qualitative research has attracted growing interest in exercise science and is now included more frequently, although it has historically been used in social sciences like psychology, sociology and anthropology 5. It is concerned with constructs such as attitudes, beliefs, motivation and perception, all of which are becoming popular topics in exercise science and sports medicine.
Qualitative research is frequently used to evaluate community and school physical activity programs, to understand less tangible outcomes such as participants’ attitudes toward and experiences of a program of interest 5. Qualitative methods of data collection can include open-ended questionnaires, interviews or market research focus groups 5.

Study Designs

Animal Models

Animal model research commonly uses rats or mice as subjects to perform more intensive and controlled experiments. Other species are used in various types of research, and many debate the ethical considerations associated with this design. Nevertheless, humans share many anatomical and physiological similarities with different animals, which allows investigation into underlying mechanisms. Animal models allow novel therapies to be tested before they are applied to humans, although not all results translate directly to humans 6.

Controlled Trials

Controlled trials include a group that does not receive a specific treatment or intervention. This is called the control group, and it receives either nothing at all or a placebo.

Placebo-Controlled - A placebo is an inactive treatment that does not produce any effect on any of the variables. Placebo-controlled trials can be single- or double-blind. In single-blind trials, the subjects are blinded to the type of treatment they’re receiving; in other words, they don’t know if they’re getting the active or inactive treatment. This is done to control for the placebo effect: if subjects believe one treatment is more or less effective than the other, that belief alone can cause a psychosomatic change, irrespective of the treatment itself. In double-blind trials, both the subjects and the researchers are blinded to the treatments, and when done properly, researchers are blinded during the statistical analysis as well.
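Blinding is usually paired with random allocation. The sketch below is a hypothetical illustration, not a procedure described in this guide: it randomly splits participants into an active arm and a placebo arm, then hides which arm is which behind neutral codes ("A" and "B"), so that subjects and researchers see only the codes. The function name `blinded_allocation`, the two-arm setup and the use of Python's `random` module are our own assumptions for illustration.

```python
import random


def blinded_allocation(participant_ids, seed=None):
    """Hypothetical helper: randomly assign participants to two arms and
    label each arm with a neutral code so the treatment stays hidden.

    Returns (schedule, key):
    - schedule maps each participant to a code ("A" or "B"); this is all
      that subjects and researchers see during the trial.
    - key maps each code to the real treatment; in a double-blind trial it
      would be held by someone outside the research team until analysis.
    """
    rng = random.Random(seed)      # seeded so the allocation can be audited
    ids = list(participant_ids)
    rng.shuffle(ids)               # random order removes researcher choice
    half = len(ids) // 2
    arms = {"active": ids[:half], "placebo": ids[half:]}

    codes = ["A", "B"]
    rng.shuffle(codes)             # which code means "active" is also random
    key = {codes[0]: "active", codes[1]: "placebo"}
    schedule = {pid: code for code, arm in key.items() for pid in arms[arm]}
    return schedule, key


if __name__ == "__main__":
    schedule, key = blinded_allocation(range(1, 11), seed=42)
    print(schedule)  # only the neutral codes are visible here
```

With an odd number of participants, this sketch puts the extra person in the placebo arm; real trials often use block or stratified randomization instead of a single shuffle.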
Randomized Controlled Trials - Randomly assigning participants to groups reduces the risk of researcher bias influencing the outcomes of interest and makes it reasonable to assume the groups are similar at baseline. This type of study design is considered to be of the highest quality because it tightly controls for factors and variables that could influence the results, regardless of the effectiveness of the treatments or interventions.

Crossover Designs

In this type of study design, both groups receive both treatments at different times. For example, group 1 may receive treatment 2 while group 2 receives treatment 1; after a specified time period, the treatments are switched. These studies are unique in that each subject can serve as their own control, since every subject receives each treatment.

Case Studies

Case studies observe and report data on one participant (n = 1). They provide an in-depth and detailed analysis that can assist in developing theories, evaluating programs and developing interventions 7. Case studies lack a specific intervention or treatment and instead observe the participant while controlling the testing procedures. This type of study design is generally categorized as a quantitative, descriptive study, but it can be used in qualitative research as well 7. Case studies have been widely used in fields such as medicine, psychology, counseling and sociology 3, and they have gained popularity in physique athlete research because it’s hard to recruit this population and to implement an intervention they’re willing to follow.

Cohort Studies

This is a type of longitudinal study that follows a sample of people who share defining characteristics. This design can be experimental or observational depending on how it is applied.

Types of Publications

After a scientific study is conducted, analyzed and written up, it is submitted to a journal for peer review.
Peer review involves one or more experts within the same field critically evaluating the submitted manuscript. After reading it, reviewers can recommend rejecting the paper outright or suggest revisions the authors must complete before it can be accepted. The peer review process is not perfect by any means, but it provides a form of regulation that helps maintain the quality and integrity of the scientific literature and ensures the study is suitable for publication. Journals differ slightly in their rules and regulations, and in how their publications are formatted, though they follow a general template.

Many scientific journals have what’s called an impact factor, which is calculated from the number of citations received by the articles published in that journal. A higher impact factor is generally taken as a sign of a more influential and selective journal, but it does not guarantee the quality of any individual study published there.

There are a number of different types of scientific publications, but here we briefly describe the primary types you’ll encounter.

Original Research

Original research is a standard peer-reviewed publication: what you would consider to be a published scientific study. This type of publication follows a general format including an introduction, methods, results, discussion and conclusions. Original research is considered a primary source and includes data and results that have not been published previously.

Narrative (Literature) Review

Narrative reviews are considered secondary sources and provide a review and general consensus on a specific topic. Authors collect relevant primary source articles on a topic and summarize the most current and relevant evidence. Narrative reviews differ from systematic reviews in that they are based on the opinion of the authors and lack strict control over which studies are included. You can think of them as opinion-based articles containing a collection and summary of original research. They can be helpful when trying to understand concepts, theories or a body of evidence on a specific topic, but be careful accepting them as truth, since they reflect only the opinions of the researchers who wrote them. These reviews can be subject to confirmation bias and to cherry-picking studies that fit the authors’ narrative.

Systematic Reviews

The main purpose of systematic reviews is to create generalizations by integrating empirical research 8. Systematic reviews attempt to answer a specific research question and use a systematic process to collect relevant data sources and synthesize the empirical findings. They address relevant theories, critically analyze the data of the included studies, attempt to resolve conflicting evidence on a topic and identify central issues for future research 8. Systematic reviews are a superior form of literature review because they use a systematic process to collect, evaluate and synthesize the data on a particular subject. Although commonly thought to be the same thing as a meta-analysis, systematic reviews differ in that they don’t use formal statistical methods to analyze the combined data of studies; they simply summarize the empirical evidence.

Meta-Analysis

Meta-analyses include the results of two or more studies. Meta-analyses were first introduced in 1976 by Gene Glass and defined as “a technique of literature review that contains a definitive methodology and quantifies the results of various studies to a standard metric that allows the use of statistical techniques as a means of analysis” 3.
Meta-analyses can be distinguished from literature reviews because they include a definitive methodology for selecting the studies to include, and the results of the various studies are quantified to a standard metric called effect size (covered later) 3. Unlike systematic reviews, meta-analyses use various statistical methods to combine and analyze the data of a number of studies. Meta-regressions are an extension of meta-analyses that use a more advanced statistical approach to assess the relationships between variables, accounting for covariates or other study characteristics of interest. When carried out properly, meta-analyses are considered the highest quality of scientific study.

Article 02: Reading and Interpreting Research

Reading research can be challenging for those without experience or training in reading scientific publications. Before you can interpret results and findings, you need to understand how a study is laid out and how to read it. Most peer-reviewed journal publications follow a similar general format, with minor differences. Understanding this layout will make it easier to identify the key details of a study and understand its findings and takeaways.

This section of the guide focuses on how to read scientific studies and interpret their findings. After we cover the general layout and briefly describe each section of a published study, we will cover basic statistics and dig into challenges faced by researchers in exercise and nutritional science. We will finish this section with how to trust studies and how to evaluate studies reporting conflicting findings.

General Format

The author line of a publication follows a specific order. The first author is the one who coordinated and had the largest role or responsibility in the study.
Generally, if this is a graduate student’s project or thesis, their mentor or supervisor will be listed last. The remaining authors are ordered by their level of contribution. The general format for peer-reviewed, academic publications includes five sections: the introduction, methods, results, discussion and conclusion. The abstract is another section, but it is separate from the main body of the publication.

Abstract

After the study title and author line you will find the abstract. The abstract is a one-paragraph summary of the study and includes one to two sentences from each of the sections of the publication. Don’t be an abstract warrior who only reads the abstract to report what the study found. The details are important, and findings are accompanied by caveats.

Introduction

The introduction is the first section of all publications. It discusses recent and previous studies that relate to the current study. Intros start with more general background information and progress into the key details and publications that apply to the current study. The intro also discusses any controversies between theories or hypotheses and highlights the importance of the current study. The intro includes two key pieces of the study known as the purpose and the hypothesis:

Purpose - The purpose of the study is a one- to two-sentence statement that describes the aim of the study, or the reason it is being carried out.

Hypothesis - Based on previous research and understanding, researchers develop what’s known as a hypothesis, a short explanation of the predicted results. Hypotheses cannot be proven, but when the data back up the hypothesis it is “supported,” and when they don’t it is “rejected” 10.

Materials & Methods

The Materials & Methods (methods) section is where the study design is explained, detailing the procedures for each measurement during experimentation. The methods provide specific details of how the experiment was carried out so that future research can attempt to replicate and build on previous results. Key details you want to focus on are:

- Variables of interest: what did the researchers manipulate and have control over (independent), and which variables were tested or measured (dependent)?
- Participants: how many people were studied, and what were their characteristics? Were they male or female? What was their training status? Were they overweight?
- Duration of the study: how long did the experiment last, and how often were changes observed and measured?
- Instrumentation: which devices and methods were used to collect data? How was body fat percentage (BF%) tested? Did they use appropriate equipment for what they were attempting to test? Were their measurements valid and reliable?
- Level of control: were the participants in a tightly controlled environment (metabolic ward), or was this a free-living experiment? Resistance training studies that include supervision are more tightly controlled than studies that allow subjects to train on their own. Diet studies that provide food to subjects have more control than studies that rely on self-reported nutritional intake.

Ethical and diligent researchers will specify their study’s strengths and limitations in the discussion, but paying close attention to the details in the methods will allow you to identify the level of control in a particular study.

Results

This is the section of publications that most people skip over or shy away from, because most people find math and numbers confusing. Later we provide a brief, general overview of statistics to help with your confidence and ability to interpret results. In the results section, researchers report the outcomes of the statistical tests that describe the relationships within the data collected during experimentation 2. The results section also includes most of the figures and tables, which represent the data in a different way than the text. The text is written so that readers can interpret the data from the text alone, and the figures are designed so that the data can be interpreted without reading the text. The results section does not include any of the researchers’ interpretation or explanation of the data; that occurs in the discussion section.

There are three types of effects generally (not always) reported in the results section that you should focus on. For the following sections we will use this table as an example:

Changes in Bodyweight between a high carb and high fat diet.

| Diet Group | Baseline | Post-Testing |
|---|---|---|
| High Carb | 200 lbs | 180 lbs |
| High Fat | 190 lbs | 175 lbs |

Main Time Effect - This simply tells us if there was a significant change in the dependent variable from baseline to post-testing for all subjects. Referring to the table above, this tells us if there was a change in body weight from baseline to post-testing for both groups (high carb & high fat) combined.

Group Effect - This tells us if there was a significant change within a group (sometimes reported as a within-group or simple effect of time). It does not compare groups, but rather tells us if a group made a real change. For example, this would tell us if the high carb group experienced a significant change from baseline to post-testing.

Interaction (group x time) Effect - An interaction effect is what you want to focus on if you wish to compare groups. It compares the change in body weight from baseline to post-testing between the diet groups. In other words, did the high carb group lose more body weight from baseline to post-testing, or did the high fat group? In the table above, the high carb group lost 20 lbs and the high fat group lost 15 lbs; the interaction effect tests whether that 5 lb difference in change is statistically significant.

Discussion

Like the intro, the discussion is a heavier section in which researchers provide their interpretation and explanation of the results they found. There is no general format for this section, but it includes an in-depth summary of the results of the study. Most of the discussion focuses on comparing and contrasting the results with what has been previously reported by similar studies. The discussion and intro are good places to learn about other studies you might not have known about. Towards the end of the discussion you’ll generally find a disclosure of the strengths and limitations of the study. Every study has limitations, and if a study doesn’t explicitly mention its primary limitations, that could be a red flag. Some publications include a conclusion within the discussion section, while some journals include a separate section for conclusions or practical recommendations.

Conclusion

Everyone knows what a conclusion is, but in this short section the authors give a final summary of the main takeaways and practical recommendations. It is a more concise version of the discussion: short and practical.

Article 03: Statistical Concepts

Overview of Statistics

Most people cringe at the word statistics, and we understand why. Math and statistics can be complex and difficult to understand, and the word statistics has various meanings, which adds to the confusion. With a mixture of math and logic, statistics is a branch of mathematics concerned with the collection, analysis and interpretation of data 3. Data are the scores and values we obtain from measuring the outcomes (dependent variables) of interest in a study. Collecting data is only one piece of the puzzle; if researchers don’t know what to do with the data and how to describe it properly, the findings may seem underwhelming.
Statistics are a way of describing the characteristics of data and examining the relationships between variables, which allows for greater objectivity when interpreting research and drawing conclusions.

This section provides a simple overview of some common, basic statistical concepts that you will encounter throughout exercise and nutrition research. Again, this is a brief section and doesn’t even scratch the surface of the broader and more complex statistical methods that exist. Statistical methods operate under a number of assumptions and rules, and if these are violated, the results can misrepresent the data. Statistics is not our area of expertise, and it’s important to realize that if you don’t fully understand statistics, they can be misused to convince you that the data are more appealing than they actually are.

Percent Change

Very simply, this is the change between two values expressed as a percentage. You have to be careful with percent change because it can sometimes make a change appear larger than it actually is. That’s why you also want the raw values. For example, if a study looking at leptin reports a baseline value of 0.3 ng/mL and a post-test value of 1.0 ng/mL, the absolute change is 0.7 ng/mL, but the percent change is about 233%: $\frac{1.0 - 0.3}{0.3} \times 100 \approx 233\%$. While this change is small and may not be meaningful, the percent change can make it appear as if it’s a big deal.

Central Tendency

The mean is probably one of the most commonly understood mathematical terms; it describes the average value of a group of numbers. In statistics, the mean is a measure of central tendency, which represents a central or balance point within a set of data 10. The mode and median are similar to the mean because they also represent centrality, but technically they’re slightly different. The mode is the most frequent value in a data set, which may or may not be close to the mean.
The median is the middle point of a data set; in other words, 50% of the scores fall below the median. For example, let’s assume the following 10 scores were collected during an experiment:

6, 6, 6, 10, 11, 12, 14, 14, 16, 17

- Mean = 11.2: the average of all scores, $(6 + 6 + 6 + 10 + 11 + 12 + 14 + 14 + 16 + 17) / 10 = 112 / 10$
- Median = 11.5: the middle value, with 5 scores below and 5 scores above it. With an even number of scores, the median is the average of the two middle scores (11 and 12).
- Mode = 6: the most frequent score

If the data set had an odd number of values, the middle value would simply be the median (e.g., for 1, 2, 3, the median is 2). There are slightly different ways to describe central tendency, but most often you’ll hear about the mean, since the mode and median are reported only in certain instances.

When evaluating data based on calculated means, it’s important to identify any outliers or extreme values. Outliers and highly variable data can produce inflated or misleading results because the mean is sensitive to outliers and extreme values. In contrast, the median is much less sensitive to them: replacing the highest score above (17) with 170 would raise the mean from 11.2 to 26.5 but leave the median unchanged at 11.5. If the mean is being reported, it’s important to also take note of the standard deviation to account for this.

Standard Deviation

The standard deviation is concerned with the variability, or spread, of a data set. As previously mentioned, the mean is the central point of a data set, and the standard deviation is an estimate of the variability around that central point. In other words, the standard deviation represents the typical amount that a score deviates from the mean. A low standard deviation means the spread or dispersion of scores is small and the scores are tightly grouped around the mean. A large standard deviation signifies a wide spread or high variability of scores, and when this occurs, the mean may not be a good representation of the data.
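The percent-change, central-tendency and spread calculations described above can be checked with a few lines of Python's standard `statistics` module. This is a minimal sketch: `percent_change` is our own illustrative helper, and we assume the sample standard deviation (`statistics.stdev`, which divides by n − 1), since studies may report either the sample or the population version.

```python
from statistics import mean, median, mode, stdev


def percent_change(baseline, post):
    """Change from baseline expressed as a percentage of the baseline value."""
    return (post - baseline) / baseline * 100


# Leptin example from the text: 0.3 ng/mL -> 1.0 ng/mL
absolute = 1.0 - 0.3
relative = percent_change(0.3, 1.0)
print(f"absolute change: {absolute:.1f} ng/mL, percent change: {relative:.0f}%")

# The ten scores from the central tendency example
scores = [6, 6, 6, 10, 11, 12, 14, 14, 16, 17]
print("mean:", mean(scores))        # 11.2
print("median:", median(scores))    # 11.5 (average of 11 and 12)
print("mode:", mode(scores))        # 6
print("SD:", round(stdev(scores), 2))

# Swap the highest score for an outlier: the mean jumps, the median does not
with_outlier = scores[:-1] + [170]
print("mean with outlier:", mean(with_outlier))      # 26.5
print("median with outlier:", median(with_outlier))  # 11.5
```

The same 0.7 ng/mL absolute change prints as a 233% percent change, which is exactly why the raw values should be read alongside the percentage.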
The mean and standard deviation are forms of descriptive statistics, which are useful for summarizing the data of a specific group. They can only describe the data we have collected; they cannot tell us whether the results we obtained would happen again. Other statistical tests fall under another form known as inferential statistics, which can (but don’t always) allow conclusions drawn from a sample to be generalized to the larger population.

P-value

Probability, the likelihood that something will occur, is the underlying concept of p-values. P-values reflect the level of significance, that is, how compatible the findings are with chance alone, and a p-value can never be exactly zero 3. In exercise science a result is generally considered ‘significant’ at p < 0.05. Informally, researchers read this as meaning the odds that their findings occurred by chance are less than 5 in 100; strictly speaking, it means that if there were truly no difference, results at least as extreme as those observed would be expected less than 5% of the time. In the results section, when changes in a specific variable are reported, a p-value is reported after them (e.g., 103.5 ± 15.1 ng/dL (p = 0.02)).

In exercise and nutritional science, if the p-value is greater than 0.05 the result isn’t deemed to be significant. This is often described as ‘supporting the null hypothesis’, although strictly speaking the researchers have failed to reject it. The null hypothesis states there isn’t a relationship or difference and that any observed differences are due to sampling error or random chance. Statistical tests are performed to either reject or fail to reject the null hypothesis, and a p-value below 0.05 rejects the null hypothesis in favor of the research hypothesis. Statistical significance is what you should identify when interpreting results, but significant differences aren’t the only thing you want to focus on. A study might show that subjects on one diet lost significantly more weight than subjects on another diet, but what if the difference was only 0.5 lbs? That doesn’t mean much, so how do you determine whether significant results are meaningful?
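To make the p-value idea concrete, here is a minimal sketch of a permutation test in Python. This is a swapped-in technique chosen only because it shows “how often would chance alone produce a difference this large?” directly; it is not the test used by any study discussed here. The function name `permutation_p_value` and the weight-loss numbers are hypothetical.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=1):
    """Two-sided permutation test for a difference between two group means.

    If the null hypothesis is true, the group labels are arbitrary, so we
    shuffle them many times and count how often a difference at least as
    large as the observed one turns up by chance alone.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign subjects to the two groups
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for floating-point rounding
            at_least_as_extreme += 1
    # Adding 1 to both counts keeps this estimate from ever being exactly 0
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical weight loss (kg) for two diet groups of six subjects each
diet_a = [3.1, 4.2, 2.8, 5.0, 3.9, 4.4]
diet_b = [2.0, 2.9, 1.8, 3.1, 2.5, 2.2]

p = permutation_p_value(diet_a, diet_b)
print(f"Difference in means: {mean(diet_a) - mean(diet_b):.2f} kg")
print(f"Permutation p-value: {p:.3f}")
print("Significant at p < 0.05" if p < 0.05 else "Not significant at p < 0.05")
```

With these invented numbers the difference is about 1.5 kg and p falls well below 0.05; whether 1.5 kg is a meaningful difference is a separate question, which is what effect sizes address.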
Effect Size

The effect size reflects the meaningfulness of the changes that occurred during an experiment. While the p-value tells us whether there was a statistically significant change, the effect size tells us the magnitude of that change, allowing researchers to communicate the practical significance of their results 11. In other words, effect sizes tell us the magnitude of a relationship between two variables 8. Effect size is an absolute value that represents the standardized difference between two means 3. Effect sizes are frequently used in meta-analyses to compare results between different studies. Effect sizes have been considered the most important result of empirical studies because they provide the magnitude of effects in a standardized metric despite differences in measurement techniques, they allow for meta-analytic conclusions, and they are commonly used to plan future studies 11. Effect sizes can be interpreted based on recommendations by Cohen (1988), which classify effect sizes as small (d = 0.2), medium (d = 0.5) and large (d = 0.8) 12. Larger effect sizes indicate larger, and potentially more meaningful, effects. When planning future studies, effect sizes are used to predict the sample size needed to detect a difference; this type of test is known as a power analysis.

Power Analysis

Statistical power relies on the effect size, the significance criterion (generally p < 0.05) and the number of subjects (sample) in a study 11. When researchers are planning a study, they want to know how many subjects they will need to detect a significant difference between treatments or groups.
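The pieces named above (effect size, significance criterion, power and sample size) can be tied together in a short Python sketch. It assumes Cohen’s d computed with a pooled standard deviation, and it estimates sample size with a normal approximation rather than the exact t-based calculation that dedicated power software uses. The function names are hypothetical, and the three-score groups are toy data.

```python
import math
from statistics import NormalDist, mean, variance


def cohens_d(group_1, group_2):
    """Standardized mean difference: (mean 1 - mean 2) / pooled standard deviation."""
    n1, n2 = len(group_1), len(group_2)
    pooled_var = ((n1 - 1) * variance(group_1) + (n2 - 1) * variance(group_2)) / (n1 + n2 - 2)
    return (mean(group_1) - mean(group_2)) / math.sqrt(pooled_var)


def cohen_label(d):
    """Label |d| using Cohen's (1988) benchmarks of 0.2, 0.5 and 0.8."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate subjects needed per group for a two-sided, two-group comparison.

    Normal approximation: n = 2 * ((z[1 - alpha/2] + z[power]) / d) ** 2, rounded up.
    Exact t-test based calculations give slightly larger numbers.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / abs(d)) ** 2)


# Toy data: the means differ by 1 and the pooled SD is 2, so d = 0.5
d = cohens_d([2, 4, 6], [1, 3, 5])
print(d, cohen_label(d))  # 0.5 medium

for effect in (0.2, 0.5, 0.8):
    print(f"d = {effect}: about {n_per_group(effect)} subjects per group")
```

With the defaults (p < 0.05, power of 0.80), the approximation gives 63 subjects per group for a medium effect, close to the 64 usually quoted from exact calculations, while a small effect needs several hundred per group. That gap is one reason small effects are hard to detect in the modest samples typical of exercise science.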
To estimate the number of subjects needed, researchers perform what’s called an a priori power analysis, which uses effect size estimates from similar research, the significance criterion of p < 0.05 and a generally accepted minimum level of power (0.80) to calculate the minimum sample size needed to observe an effect of a specific size 11, 12. Researchers could also use the sample size, significance criterion and power to calculate the minimal detectable effect size.

Correlation Coefficient (r)

Most of you have probably heard the saying, “correlation does not equal causation”. Correlation is an association, and in research we often want to know the degree of association between two variables across a group of subjects. In other words, an increase or decrease in one variable may occur with an increase or decrease in another variable, but the changes in one variable are associated with (not caused by) changes in the other variable. There are different types of correlations used in statistics, but here we discuss the r-value, also known as the ‘Pearson product moment coefficient of correlation’. The correlation coefficient is a statistic used to describe the relationship between two variables (independent & dependent). The r-value can range from -1 to +1. A negative r-value represents an inverse relationship between two variables and a positive r-value indicates a direct relationship (we’ll show you this visually in the ‘data representation’ section). For example, a decrease in body weight is commonly associated with a decrease in leptin, which would be an example of a direct relationship (+r), whereas a decrease in body weight is commonly associated with an increase in ghrelin, which would be considered an inverse relationship (-r).
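The leptin and ghrelin examples can be illustrated with a minimal Python sketch of the Pearson r formula (covariance divided by the product of the two spreads). The five “dieters” and their values are invented purely to show the sign of r; `pearson_r` is a hypothetical helper name.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y need the same length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical changes for five dieters
weight_change = [-1, -2, -3, -4, -5]            # kg
leptin_change = [-0.5, -1.1, -1.2, -1.9, -2.4]  # falls as weight falls
ghrelin_change = [5, 12, 14, 22, 23]            # rises as weight falls

print(round(pearson_r(weight_change, leptin_change), 2))   # positive: direct relationship
print(round(pearson_r(weight_change, ghrelin_change), 2))  # negative: inverse relationship
```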
An r-value of 0 indicates no relationship and an r-value of +1 or -1 indicates a perfect correlation; however, values of exactly 0 or ±1 are practically impossible to obtain because of the variability in subject responses and other influences related to physical characteristics, traits or abilities 10. In the scatterplot section below, we will provide a visual explanation for the strength and direction of correlations. It is not uncommon to evaluate the strength of a correlation on a spectrum (0.1 – 0.3 = weak, 0.3 – 0.5 = moderate, 0.5 – 1 = strong) 2. However, some statisticians advise against this practice because the strength of a correlation is context specific 10. For example, in biological in vitro experiments a correlation of 0.9 might commonly be considered strong and correlations close to 0.5 much weaker, whereas in free-living experiments a correlation of 0.6 could be considered strong. Regardless, it’s important to remember that the closer the r-value is to +1 or -1, the stronger the correlation.

Coefficient of Determination (r²)

You will also encounter a statistic known as the coefficient of determination (r²). This is commonly used with regression analysis and can be conceptualized as a ‘correlational effect size’. It provides the percentage of variance in one variable (the dependent or outcome variable we want to predict) that can be accounted for by the variance in the other variable (the independent or predictor variable) 3. Squaring the r-value gives r², which can be expressed as a percentage. For example, if we wanted to predict how much leptin (dependent) would decrease as fat mass (independent) decreased in a group of people dieting, we would use the coefficient of determination. Let’s assume we obtained an r-value of 0.76. Squaring it gives r² = 0.76² ≈ 0.58, or 58%, which signifies that 58% of the changes in leptin are predicted or explained by changes in fat mass.
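The link between r² and a regression line can be sketched in Python: fit an ordinary least-squares line, then compare the variance left over around the line with the total variance in the outcome. The five x/y pairs are toy numbers and `fit_line` is a hypothetical helper. For a simple regression with one predictor, this r² equals the squared Pearson r (here r = 6/√60, so r² = 0.6).

```python
def fit_line(x, y):
    """Ordinary least-squares line y = intercept + slope * x, plus r squared."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    predicted = [intercept + slope * a for a in x]
    ss_total = sum((b - mean_y) ** 2 for b in y)                   # total variance in y
    ss_residual = sum((b - p) ** 2 for b, p in zip(y, predicted))  # variance the line leaves unexplained
    r_squared = 1 - ss_residual / ss_total
    return slope, intercept, r_squared


x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
slope, intercept, r2 = fit_line(x, y)
print(f"y = {intercept:.1f} + {slope:.1f}x, r^2 = {r2:.2f}")  # y = 2.2 + 0.6x, r^2 = 0.60

# The leptin example from the text: r = 0.76
print(round(0.76 ** 2, 2))  # 0.58, i.e. 58% of the variance explained
```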
If a regression line were calculated and drawn on a scatterplot with leptin (y-axis) and fat mass (x-axis), the line would account for 58% of the variance in leptin; the remaining 42% shows up as scatter of the data points around the line.

T-test

The statistical test used to compare the difference between two means is known as a t-test. The larger the t-value, the greater the difference between means relative to the variability in the data, and larger t-values are likely to produce lower p-values. There are two types of t-tests we want to focus on.

Independent - This type of t-test determines whether two sample means are significantly different when the two groups being compared are unrelated. For example, if a study randomized 20 subjects to a high carb diet and 20 subjects to a high fat diet and you wanted to know the extent to which the mean weight loss differed between groups, you would perform an independent or unpaired t-test. This type of t-test could also be used to determine how different the two dieting groups were in terms of body fat percentage at baseline, since baseline differences can pose problems.

Dependent - Dependent or paired samples t-tests are used when comparing two groups that are related in some way, or one group at two points in time (baseline and post-test: repeated measures). For example, if a study measured muscle thickness in 10 males before and after a training program, it would use a dependent t-test to evaluate the difference between mean muscle thickness at baseline and post-testing. If there are more than two groups, we use a different statistical test.

Analysis of Variance

If there are more than two means/groups we wish to compare, we need to perform an extension of the t-test known as Analysis of Variance (ANOVA) 10. The score generated from running an ANOVA is known as the F-value (similar to a t-value) and indicates the size of the differences between group means relative to the variability within groups.
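The t-value and F-value described above can be computed by hand in a short Python sketch, assuming Student’s pooled-variance t-test for independent groups. Turning these statistics into p-values requires the t and F distributions, which libraries such as SciPy provide (`scipy.stats.ttest_ind`, `scipy.stats.ttest_rel` and `scipy.stats.f_oneway`); the function names below are hypothetical and the data are toy numbers.

```python
import math
from statistics import mean, stdev, variance


def independent_t(group_1, group_2):
    """Student's t for two unrelated groups (pooled variance)."""
    n1, n2 = len(group_1), len(group_2)
    pooled_var = ((n1 - 1) * variance(group_1) + (n2 - 1) * variance(group_2)) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(group_1) - mean(group_2)) / standard_error


def paired_t(before, after):
    """Paired t: works on each subject's own change from before to after."""
    changes = [b - a for a, b in zip(before, after)]
    return mean(changes) / (stdev(changes) / math.sqrt(len(changes)))


def one_way_f(*groups):
    """One-way ANOVA F: between-group variance divided by within-group variance."""
    all_scores = [s for g in groups for s in g]
    grand_mean = mean(all_scores)
    k, n = len(groups), len(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((s - mean(g)) ** 2 for g in groups for s in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


print(round(independent_t([2, 4, 6], [1, 3, 5]), 3))   # 0.612
print(round(paired_t([10, 12, 14], [11, 14, 17]), 3))  # 3.464
print(one_way_f([1, 2, 3], [4, 5, 6], [7, 8, 9]))      # 27.0
```

With only two groups, the F-value from `one_way_f` equals the square of the independent t-value, which is one way to see ANOVA as an extension of the t-test.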
One-Way - A one-way ANOVA is used to determine whether statistically significant differences exist among 3 or more means/groups. For example, let’s assume a study is comparing training volume with three groups (low, medium, high) and the dependent variable of interest is muscle growth. The ANOVA would tell us whether muscle growth differs somewhere among the three groups. However, a one-way ANOVA doesn’t tell us where the significant difference lies (low vs. medium, low vs. high, or medium vs. high). You could evaluate this unofficially by examining group means, but to statistically test where the difference is, you will have to perform a post-hoc test.

Repeated Measures - You will frequently encounter repeated measures ANOVA in the statistical analysis section of many exercise science studies. This statistical test is used to compare the same individuals across time points (repeated measures). For example, let’s assume a study is comparing muscle growth at the beginning, middle and end of a training program. You would run a repeated measures ANOVA to determine if there were significant changes between time points.

Post-Hoc - If an ANOVA detects a statistical difference between means, we then want to determine where this significance lies. Is it occurring within a group over time (baseline to post-test), or did one group exhibit a greater difference compared to the others (interaction)? There are a number of different post-hoc tests, which we won’t cover here, but just know that they give a more specific idea of the differences between means/groups.

Data Representation

Figures, graphs and tables are used to represent data visually, which can provide a unique perspective and greater understanding of the results. There are tons of different types of figures available, but we’ll talk about a few common types you’ll often see. Here are a couple of key points regarding most figures and graphs.
Different journals will have varying formatting requirements, but you can expect some components to be the same. Underneath the actual figure there will be a title and a description of what the figure is displaying. Any special symbols (e.g., *) are also defined here; generally, these symbols represent statistical significance or depict a relationship between variables. It’s important to take notice of the axis titles, units of measurement and the scale that is used. There are instances when the scale of a figure doesn’t start at 0, and this can lead to misunderstanding of the actual data. If a graph or figure scale doesn’t start at 0, there should be some type of break, expressed with two slashes (//), to represent a nonzero baseline. Generally, graphs are better for providing an overview or “big picture” view of a set of data, whereas tables are better for exact values and individual raw data.

Histogram

Histograms are a common figure and generally the easiest to understand. Histograms are great for comparing groups or showing the distribution of a set of scores for a particular group. Most people would also refer to these figures as “bar charts”. However, there’s a slight difference. Bar charts are used for qualitative data that are separated into categories (e.g., gender, race, other specific groups), and the bars are separated and not touching each other. Histograms have vertical bars that are directly adjacent to one another with no space (unless there’s an interval with no scores), signifying continuity 10.

Scatter Plot

Scatter plots are another type of graph that most people are familiar with. This type of figure commonly reports data points for individual scores for two variables but could also be used to display baseline and post-test scores for an individual 13.
You’ll find this type of figure is used most for correlational analyses, and while the data points are not connected by lines, a non-vertical line of fit can be generated to summarize or predict the relationship between variables or data points, known as simple regression 13. Simply by looking at scatter plots we can get a pretty good idea of the type of correlation and its strength.

Line Graph

Line graphs depict related data points that are connected with a line; sometimes they include symbols 13. Line graphs are great for comparing time trials where there are multiple testing points over a period of time, for example, comparing the response to two different supplement treatments over a predefined period of time.

Box and Whisker Plots

Box and whisker plots (box plots) are used to depict the distribution of a data set. Once you understand each component of a box plot, you’ll realize how simple and effective they can be at summarizing a set of scores. Usually box plots are vertical, but we have provided an example below that is not to scale. There are 5 elements in all box plots that you want to know to understand this type of visual depiction:

1. Q1: This is the left side of the rectangle and signifies the 25th percentile of the data set, meaning 25 percent of the scores fall below this line.
2. Median: The median (as described previously) is the middle value, and 50% of the scores fall below this value.
3. Q3: This is the right side of the rectangle and represents the 75th percentile, meaning 75% of the scores fall below this value.
4. Whiskers: The whiskers can be found on either side of the rectangle and depict the minimum and maximum values within a set of scores. However, these do not include any outliers or extreme values.
5. Outliers & extreme values: Outliers and extreme values are any scores or values that are widely different from the rest of the data set and “stick out”.
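The five box-plot elements can be computed in a few lines of Python. Quartile methods and outlier rules vary between software packages and publications; this sketch assumes the standard library’s “inclusive” quartile method and Tukey’s fences, treating values more than 1.5 × IQR beyond the box as outliers (“O”) and more than 3 × IQR as extreme values (“E”). The helper name and the two high scores added to the earlier example are hypothetical.

```python
from statistics import quantiles


def box_plot_summary(scores):
    """Box-plot elements, flagging outliers with Tukey's fences (an assumed convention)."""
    q1, median, q3 = quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1  # interquartile range: the length of the box
    inner_low, inner_high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outer_low, outer_high = q1 - 3 * iqr, q3 + 3 * iqr
    extremes = [s for s in scores if s < outer_low or s > outer_high]
    outliers = [s for s in scores if (s < inner_low or s > inner_high) and s not in extremes]
    typical = [s for s in scores if inner_low <= s <= inner_high]
    return {
        "Q1": q1,
        "median": median,
        "Q3": q3,
        "whiskers": (min(typical), max(typical)),  # most extreme values inside the fences
        "outliers (O)": outliers,
        "extremes (E)": extremes,
    }


# Hypothetical scores: the earlier example data plus two unusually high values
scores = [6, 6, 6, 10, 11, 12, 14, 14, 16, 17, 30, 45]
for element, value in box_plot_summary(scores).items():
    print(f"{element}: {value}")
```

Here 30 lands beyond the inner fence and would be drawn as an outlier, while 45 lands beyond the outer fence and would be drawn as an extreme value; the whiskers stop at 6 and 17.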
There’s actually a mathematical way to identify outliers and extreme values on a box and whisker plot, but we’ll spare you the details. Just know they are represented by the O and E below and can be expressed as other special symbols in different publications.

Forest Plots

You will mostly see forest plots in Joe Rogan podcasts with James Wilks… just kidding. You typically see forest plots in meta-analyses because they depict the individual results as well as the pooled results of the meta-analysis. Forest plots indicate the strength of the treatment effect, with the y-axis containing a list of the studies included in the analysis and the x-axis showing which condition the results favor (control vs. treatment) 13. Each study has its mean symbolized as a data marker and its confidence interval (which we cover next, generally 95%) represented as a horizontal line 13. The size of the data marker generally represents the sample size, or the weight carried by that particular study in the meta-analysis. Diamond markers are generally used to represent the overall or pooled result 13. In the example below, adapted from Morton et al. (2017), you will find three different diamonds 14. The first two unfilled diamonds represent the pooled results for the trained and untrained samples, and the filled or dark diamond represents the overall or total result of the meta-analysis (including trained and untrained subjects). Oftentimes forest plots will contain a clear description of what each marker symbolizes underneath the actual figure.

Error bars

Error bars are elements that you will commonly see on most figures. They are lines that represent the variability of the data being reported. There are different types of error bars, and if the legend or description under the figure doesn’t explicitly state what kind they are, they can be rather meaningless 25.
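The three common kinds of error bar summarize different things, which a short Python sketch makes visible: the SD describes the spread of individual scores, the SE (SD divided by the square root of n) describes the precision of the mean, and a 95% CI extends roughly 1.96 SEs either side of the mean. For simplicity the sketch assumes a normal (z) multiplier; with small samples a slightly larger t multiplier is the more usual choice. The helper name is hypothetical, and the scores are the ten example scores used earlier.

```python
import math
from statistics import NormalDist, mean, stdev


def error_bar_limits(scores, confidence=0.95):
    """Return (mean, SD, SE, CI) for one group of scores, using a z multiplier for the CI."""
    m = mean(scores)
    sd = stdev(scores)                # spread of individual scores around the mean
    se = sd / math.sqrt(len(scores))  # precision of the mean; shrinks as n grows
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return m, sd, se, (m - z * se, m + z * se)


scores = [6, 6, 6, 10, 11, 12, 14, 14, 16, 17]
m, sd, se, (low, high) = error_bar_limits(scores)
print(f"mean {m:.1f}, SD bar ±{sd:.2f}, SE bar ±{se:.2f}, 95% CI {low:.2f} to {high:.2f}")

# Same SD but four times as many subjects: the SE (and the CI) is halved
print(f"SE if n were 40 with the same SD: {sd / math.sqrt(40):.2f}")
```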
Standard deviation (SD) bars represent the typical difference between the data points and their mean, whereas standard error (SE) bars indicate how variable the mean would be if you repeated the study over and over; more subjects or samples decrease the SE 15. You’ll notice that the forest plot above includes 95% CI error bars; if a study were repeated many times, 95% of such intervals would capture the true mean 15. For SE and CI bars, wider bars indicate larger error and shorter bars indicate higher precision, and as sample sizes increase the bars become shorter 15. Error bars are helpful in visually depicting the significance of changes between groups. As a rough guide for independent groups, overlapping SE bars suggest the difference isn’t significant, whereas 95% CI bars can overlap slightly even when p < 0.05; in general, the larger the gap between error bars, the smaller the p-value will be. Error bars can be valuable in justifying the authors’ conclusions, but like any statistic they are only a guide, and you should rely upon your own logic and understanding to determine the meaningfulness of the results being reported 15.

Tables

Tables are generally self-explanatory, with the different symbols described in the legend/description below the table. This table is from Layne’s PhD thesis, which examined the time course of plasma amino acid levels in response to ingestion of various protein sources 63. What’s important to notice here is how the statistics are portrayed. The first number is the mean for the particular group under the designated time category, and the second number is the standard error associated with the mean. The letters after the standard error are used to statistically differentiate the means from each other, while means with an * are different from baseline levels. For example, let’s compare the 30-minute whey group leucine (Leu) levels to the 30-minute wheat group leucine levels.
The whey group has an ‘a*’ whereas the wheat group has a ‘b*’. This indicates that these values are statistically different from each other (different letters) and both are significantly different from baseline (because they both have an *). Now let’s look at threonine (Thr) levels in the Whey, Wheat, and Wheat + Leu groups at 90 minutes. The Whey group at 90 minutes has an ‘a*’, while the Wheat group has a ‘b’, and the Wheat + Leu group has an ‘ab’. So what does this mean? It means that the Whey group is statistically different from the Wheat group and from baseline. Also, the Whey group was not statistically different from the Wheat + Leu group, since they both share an ‘a’. The Wheat + Leu group was also not different from Wheat, since they both share the letter ‘b’, and neither was significantly different from baseline.

Post-prandial changes for plasma amino acids 1-3

| Amino acid | Baseline | Whey 30 min | Whey 90 min | Whey 135 min | Wheat 30 min | Wheat 90 min | Wheat 135 min | Wheat + Leu 30 min | Wheat + Leu 90 min | Wheat + Leu 135 min |
|---|---|---|---|---|---|---|---|---|---|---|
| Leu | 86 ± 4 | 226 ± 17 a* | 164 ± 26 a* | 173 ± 22 a* | 151 ± 8 b* | 86 ± 6 b | 99 ± 5 b | 211 ± 8 a* | 137 ± 8 | 148 ± 3 a* |
| Ile | 69 ± 2 | 116 ± 11 a* | 104 ± 4 a* | 134 ± 16 a* | 110 ± 6 b* | 66 ± 3 b | 86 ± 6 b | 98 ± 6 b* | 60 ± 4 b* | 67 ± 1 c |
| Val | 117 ± 5 | 234 ± 17 a* | 161 ± 5 a* | 186 ± 19 a* | 154 ± 8 b* | 91 ± 3 b | 104 ± 7 b | 131 ± 18 b | 77 ± 6 b* | 77 ± 2 c* |
| Lys | 608 ± 24 | 1083 ± 78 a* | 593 ± 34 | 688 ± 62 | 930 ± 64* | 553 ± 28 | 698 ± 14 | 933 ± 67 | 597 ± 55 | 726 ± 48 |
| Met | 49 ± 2 | 102 ± 6 a* | 62 ± 2 a* | 80 ± 5 a* | 72 ± 3 b* | 42 ± 1 b | 52 ± 3 b | 71 ± 3 b* | 44 ± 4 b | 46 ± 2 b |
| Thr | 309 ± 9 | 594 ± 73 * | 567 ± 18 a* | 554 ± 38 a | 383 ± 21 | 330 ± 18 b | 314 ± 22 b | 387 ± 12 | 382 ± 20 ab | 308 ± 13 b |

1 Plasma amino acids expressed as µmol/L.
2 Data are mean ± SE; n = 5-6. Means without a common letter differ between treatments within time-points, P < 0.05. * Indicates different from fasted (P < 0.05).
3 12 h food-deprived controls.
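The letter rule in the table footnote (“means without a common letter differ”) is mechanical enough to sketch in Python. The helper names are hypothetical; the sketch assumes that only cells carrying letters are compared, since a cell without letters gives no between-treatment comparison. The labels are the threonine values at 90 minutes from the table.

```python
def differs_between_treatments(label_1, label_2):
    """True if two means share no letter (footnote rule: they differ, P < 0.05)."""
    letters_1 = set(label_1.replace("*", ""))
    letters_2 = set(label_2.replace("*", ""))
    if not letters_1 or not letters_2:
        raise ValueError("a cell without letters gives no between-treatment comparison")
    return letters_1.isdisjoint(letters_2)


def differs_from_baseline(label):
    """An asterisk marks a mean that differs from the fasted baseline."""
    return "*" in label


# Threonine at 90 minutes, labels as printed in the table
thr_90 = {"Whey": "a*", "Wheat": "b", "Wheat + Leu": "ab"}
pairs = [("Whey", "Wheat"), ("Whey", "Wheat + Leu"), ("Wheat", "Wheat + Leu")]
for first, second in pairs:
    verdict = "differ" if differs_between_treatments(thr_90[first], thr_90[second]) else "do not differ"
    print(f"{first} vs {second}: {verdict}")
for group, label in thr_90.items():
    print(f"{group} vs baseline: {'different' if differs_from_baseline(label) else 'not different'}")
```

The output reproduces the reading in the text: Whey differs from Wheat, Wheat + Leu differs from neither, and only Whey differs from baseline at this time point.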
Article 04 Challenges for Researchers

Research critics will often complain about studies not performing a specific measurement or failing to account for some variable. Oftentimes these criticisms are invalid or unwarranted because of the limits imposed on researchers. Armchair scientists who unfairly criticize studies oftentimes fail to recognize the challenges that researchers in nutrition and exercise science face. Depending on the academic institution, labs and universities vary widely in the equipment and funding they have available for research. Obviously, larger labs with graduate and postdoctoral programs are able to attract larger grants and more funding for projects, which leads to more sophisticated testing instruments and a higher level of control over testing conditions. While growing interest in exercise and nutritional sciences is leading to more funding sources, there are still studies that can’t be conducted due to lack of resources.

Funding

The primary challenge for researchers in exercise and nutritional science is funding. There are various funding sources available, such as government agencies like the NIH, university grants, industry funding from food or supplement companies, organizations such as ACSM and NSCA, and other private foundations and non-profit organizations. The unfortunate reality is that even when studies receive funding, it generally isn’t enough to support the level of control needed to be considered a high-quality study. To give you an idea of how quickly the costs for a study can add up, here in Florida the cost of performing a blood hormone test like leptin is roughly $70 per blood draw. So, let’s assume you wanted to test 10 subjects before and after a diet: that’s two leptin tests per subject, which adds up to $1,400 for only 10 subjects.
That’s a small sample size, and if you wanted to make it a stronger study you would likely need more like 40 people, which could cost upwards of $5,600, and that’s just to test one hormone. That’s not considering other lab supplies you might need, and the researcher wouldn’t be able to pay their staff anything, which means they would need to find students who are willing to volunteer their time on top of their academic responsibilities. If you’re looking at studies that test protein metabolism in rats, the cost of carrying out an experiment could be upwards of $50,000. Many studies need to pay subjects to recruit the necessary sample size, and if it’s a dieting study that includes supplying food, the cost of food can be astronomical. Nowadays many supplement companies are becoming more interested in having scientifically validated research to support the efficacy of their products for improved marketing. Some studies sponsored by supplement companies can cost tens of thousands of dollars and can even reach hundreds of thousands of dollars when subjects are paid to participate. We haven’t even discussed the costs associated with the instrumentation necessary to test certain variables in a lab. Generally, departments receive funding from their universities for lab-related costs to maintain, repair or replace testing equipment. The yearly department budget is generally only enough to maintain current equipment and replace regularly used supplies; departments can’t afford to buy new equipment or replace machines every year. Most exercise science programs have what’s called a metabolic cart (which we’ll discuss later), which costs upwards of $20,000, not including the costs to maintain normal functioning or replace supplies needed for regular use. That is why labs are limited by funding and the equipment they have available.
Available lab equipment

It should now be no surprise why most exercise science programs can’t afford sophisticated testing equipment. The type of equipment in a researcher’s lab will determine the type of studies they can conduct. Some labs are focused on more mechanistic studies that involve molecular biology experimentation using cells and microscopes, whereas other labs are focused on more practical and applied research that investigates the effectiveness of a type of training modality. Researchers will focus on a specific area of interest and build their labs around that focus. The majority of exercise science programs will have a metabolic cart, treadmills, cycle ergometers, various types of body composition testing instruments, heart rate and blood pressure monitors, and some other performance-based testing equipment, but again this will depend on the university, the region and the faculty’s research interests. We will cover some common measurement techniques later, but it’s important to understand that, due to funding, very few labs have the most sophisticated testing capabilities, like a metabolic ward, MRI or muscle biopsy testing. Aside from the major challenges of funding and lab equipment, researchers are governed by their institution to ensure responsible research conduct.

IRB/ethics boards

Academic institutions have ethics boards or governing bodies that oversee experimental research. At many universities the governing body is known as the Institutional Review Board (IRB) for human research and the Institutional Animal Care and Use Committee (IACUC) for animal research. The purpose of these bodies is to ensure safe and ethical standards are being followed according to laws and regulations. Before a study can begin recruiting and testing subjects, it must go through a formal review process to obtain study approval.
This is one of the most annoying processes involved in research because it’s time consuming and tedious. It’s comparable to filing your taxes, but more detail oriented and time consuming. While necessary, this approval process can take time away from conducting the experiment, because most academic institutions operate on semester timelines that may include breaks or holidays that interfere with the study timeline. So, if it takes 8 weeks to approve a study and then another 3 weeks to recruit enough subjects, that’s the majority of the semester and only leaves a few weeks to conduct an experiment. This is why you will often see studies that aren’t much longer than 12 weeks in duration. The IRB process includes an informed consent form for subjects and a very formal written study protocol explaining in detail every aspect of the study, including how you intend to recruit subjects.

Subject Recruitment

Subject recruitment is the other annoying process in conducting human research. Recruitment can be difficult and time consuming for exercise science and nutrition researchers. As mentioned previously, many labs don’t have the necessary funding to pay subjects to participate in their studies. Free protein powder and supervised training in the lab can be an appealing incentive to some, but many others don’t want to follow a standardized program for fear of less than optimal results. This is why you generally see sample sizes of less than 50 in training studies. Even if a researcher is lucky enough to recruit 50 people, some subjects generally drop out for various reasons, and depending on testing or intervention requirements a study can sometimes lose 20 subjects or more. People have a hard time following specific instructions, especially if it means changing their usual lifestyle to accommodate study procedures when there is no incentive to comply.
Think about asking college students to follow a specific diet with no alcohol on the weekends, or asking them to come to the lab early before classes for testing or training. Or how about asking them if it’s ok to stick a needle as large as a pencil in their leg for a muscle biopsy? Obviously, studies that use animal models don’t have to ‘recruit’ subjects, but they have to pay more for their ‘subjects’.

Scheduling and Testing

As mentioned earlier, scheduling and experimental time frames can be a major issue in conducting experiments, especially when operating under university semester timelines. Even if studies have the opportunity to occur over multiple semesters or with no time restrictions, scheduling can be a logistical nightmare for research staff. For example, let’s assume a study is investigating muscle growth over 12 weeks in 50 subjects and the training program consists of 3 full body days per week supervised in the lab by research staff. Not only will you have to create a schedule for the research staff to supervise each training day, but you’ll also need to schedule each participant for each training session each week. Not to mention, you’ll have to schedule your baseline testing, mid-point testing (if there is one) and post-testing. Depending on which measurements will be taken, testing could take an hour for each participant, which means 50 hours per testing session; multiplied by three testing points, that’s 150 hours for the measurement sessions alone. That doesn’t account for the hour each subject is training in the lab 3 days per week over 12 weeks. The time requirement researchers ask of their subjects can be a lot. This is a good example of why you don’t see many training studies over 12 weeks: it takes a lot of time and money!

Trusting Research

How can you trust research, and how do you evaluate studies that show conflicting findings?
Individuals without research experience are at a severe disadvantage when it comes to teasing out the nuances of, and extrapolating from, results presented in publications.

Bias

We all have our own biases towards certain ideas or topics; unfortunately, most people either fail to admit or don’t realize they have a bias towards a particular topic. Good scientists recognize and acknowledge their biases in an effort to tightly control for them in their experimental design. Being biased means having an unbalanced opinion or belief regarding a certain topic or idea. This often leads to being close-minded and failing to recognize conflicting or contrary evidence, beliefs or ideas. Scientifically speaking, bias is a systematic deviation between an estimated value and its true value 3. In other words, bias represents systematic error. There are a few types of biases that are important to understand to become more critical of research.

Confirmation Bias - This is essentially when people cite evidence or report data that fits their bias or belief, while ignoring or failing to provide evidence that says otherwise. You’ll oftentimes see unethical individuals cite one study that supports their argument while failing to acknowledge five other studies that refute it. There could also be a scenario where someone misinterprets very weak evidence or glorifies it to make it seem stronger than it really is. Politics is a good example: you will oftentimes see certain media or news outlets reporting a story that is misleading or simply untrue. They may use a weak study or twist the narrative of a particular topic to support their side of the story. Sometimes you’ll see a news report showing only a piece of an interview or press conference that falsely portrays an individual’s beliefs to make them look bad and push the outlet’s own political agenda.
In research, you may come across a discussion where the authors compare their findings to other studies but fail to acknowledge the studies that refute their findings.

Publication Bias - Publication bias is an unfortunately common practice in the scientific community. This type of bias refers to publishing only the studies that report significant results. In 2007, studies that supported their hypothesis made up 85.9% of published studies 16. Let’s face it, studies with stronger findings or significant results are more appealing to readers, and especially to editors and publishers, because they’re more likely to be cited in other research, which leads to higher journal impact factors and more revenue for journals 16. Completing a study with non-significant findings poses challenges for researchers, and leaving it unpublished poses a few issues as well. While the majority of responsibility for publication bias lies with journal editors and publishers, researchers can be guilty too. Researchers are busy, and they usually have a research agenda planned out so that once a study is completed they can begin the next project; often they have multiple projects running at the same time. Earlier we briefly described what goes into designing and carrying out a research study, and it’s clear that studies are a serious undertaking that require substantial time, money and effort to complete. When the results turn out to be non-significant, it can be crushing for the researcher, and the time and headache needed to get the study published may not seem worth it, so they store it in a file and forget about it (the “file drawer effect”) 17.
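To see why the file drawer effect matters, here is a minimal Python simulation under stated assumptions: every study estimates the same hypothetical true effect (0.2 standardized units) with the same standard error, and only studies reaching p < 0.05 in the expected direction get published. With equal standard errors, a fixed-effect (inverse-variance) pooled estimate reduces to a plain average. All numbers are invented for illustration and do not come from any real literature.

```python
import random
import statistics

TRUE_EFFECT = 0.2   # hypothetical true effect (standardized units)
SE = 0.3            # hypothetical standard error shared by every study
N_STUDIES = 2000
Z_CRIT = 1.96       # threshold for p < 0.05 (two-sided test)

def simulate_studies(seed=1):
    """Return the observed effect estimate of each simulated study."""
    rng = random.Random(seed)
    return [rng.gauss(TRUE_EFFECT, SE) for _ in range(N_STUDIES)]

def is_published(estimate):
    """Assumption: only significant results in the expected direction get published."""
    return estimate / SE > Z_CRIT

estimates = simulate_studies()
published = [e for e in estimates if is_published(e)]

# With equal standard errors, the fixed-effect pooled estimate is the plain mean.
pooled_all = statistics.mean(estimates)
pooled_published = statistics.mean(published)

print(f"True effect:                     {TRUE_EFFECT:.2f}")
print(f"Pooled estimate, all studies:    {pooled_all:.2f}")
print(f"Pooled estimate, published only: {pooled_published:.2f}")
print(f"Studies published: {len(published)} of {N_STUDIES}")
```

The published-only average lands well above the true effect even though no individual study was faked; selective publication alone is enough to distort what a meta-analysis of the published studies would conclude.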
There are also scenarios where graduate students are responsible for writing up and submitting the manuscript after completing the research project, and instead they graduate or move on to another program without completing the publication process. Other times, researchers still put in the effort to get their study published, but because of journals’ publication bias it may be difficult or impossible to get it accepted. Common reasons researchers themselves contribute to publication bias include lack of time, a low-quality or incomplete study, fear of rejection, and non-significant findings 16. Beyond the resources, time and effort that are wasted when studies aren’t published, failing to publish studies with negative results has other consequences. Before researchers invest time in designing a study, they search the literature for publications similar to their research question or hypothesis and evaluate the findings. If a study isn’t published because of negative results and another researcher wants to test the same hypothesis, that researcher may waste valuable time and resources on a study likely to produce the same negative results. Therefore, even when a study produces negative results, it should still be published to inform future research. Additionally, unpublished data can distort meta-analysis findings and conclusions. If a meta-analysis pools only the published studies with significant findings while conflicting studies sit unpublished, it can produce false positives and misguided recommendations 16. Appropriately performed meta-analyses of clinical trials are considered the highest quality of scientific publication and are commonly used for healthcare decisions and therapies 18. One of the more serious consequences of unpublished negative data is the potential harm to individuals from pharmaceutical drugs or even supplements.
Publishing these negative results could improve the safety and standards of drugs before they’re released [16, 18]. Maybe a supplement study is carried out and finds no positive effect of the treatment, but some subjects report adverse symptoms or side effects. If that study goes unpublished, the missing information could be detrimental to someone’s health.

Inflation Bias - Commonly referred to as “p-hacking,” this is when unethical researchers try a wide variety of statistical tests and then selectively report the significant results 17. In essence, the researchers torture their data until they obtain a significant finding. It’s important to understand that statistical analyses should be predetermined as part of the study design process. P-hacking commonly occurs when researchers conduct a study and, after collecting data, decide to perform additional or different statistical tests based on what the data look like. Another common occurrence is when they simply eliminate “outlier” data from subjects who didn’t respond or who responded much more than the rest of the group. Researchers are also guilty of p-hacking when they manipulate or change the groups they established at the beginning of the study to make one group appear to have experienced greater change. Lastly, p-hacking can occur when researchers analyze data partway through the study and stop the study based on those results, or simply stop performing statistical tests once they find significance [17]. Ethical researchers do their best to address and acknowledge their biases, which can sometimes be unintentional. Unethical researchers make choices with ill intent, so bias is beside the point in those situations. Science and peer-reviewed research do a pretty good job of weeding out the bad apples, and part of this involves addressing conflicts of interest.
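Why running many tests inflates false positives can be shown with a short calculation. If a treatment truly has no effect and each test uses a significance threshold of α = 0.05, the chance that at least one of k tests comes out “significant” is 1 − (1 − α)^k. This sketch assumes the tests are independent, which is a simplification: tests run on the same data are usually correlated, which changes the exact number, but the inflation remains.

```python
def familywise_error_rate(k_tests, alpha=0.05):
    """Probability of at least one false positive across k independent tests
    when every null hypothesis is true (i.e. the treatment does nothing)."""
    return 1 - (1 - alpha) ** k_tests

for k in (1, 5, 10, 20):
    print(f"{k:>2} tests -> {familywise_error_rate(k):.0%} chance of at least one false positive")
```

With 10 independent tests the chance of at least one false positive is about 40%, and with 20 tests it is about 64%, which is why analyses should be fixed before the data are collected.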
Funding Sources / Conflicts of Interest - Any time a conflict of interest is listed at the bottom of a publication, the study should be evaluated more critically. However, this doesn’t mean you should immediately discredit or dismiss the study or its findings. Ethical researchers list their conflicts of interest to be transparent and to acknowledge any potential personal benefit or gain for the researchers or parties involved. This should not be read as a sign that they are trying to “hide” something or be dishonest; it indicates the opposite. Dishonest researchers attempting to conceal a relationship or personal benefit would simply take the risk of not listing a conflict of interest. Earlier we mentioned various sources of funding, including food and supplement companies, governmental organizations, private companies, etc. When you come across a supplement company funding a diet study or a study investigating the effectiveness of a particular supplement, it should raise a red flag, as with any company funding a study that investigates its own product. But again, it just means you should evaluate the findings more critically. Before even evaluating the results, check the study design. Was it a randomized, placebo-controlled design? If not, you should be very skeptical of the findings and results. Randomization and a placebo control are essential when comparing treatments.

Evaluating Conflicting Evidence

Let’s assume only two studies have been published on a certain topic, and they report contrasting findings. How do you determine which study is better or which one to trust? This is a difficult question that involves many considerations, but we will highlight certain aspects and key details you’ll want to focus on.
Results - The level of significance of the results is important, and it is one of the first things you should notice, but as mentioned previously (see Article 3: Statistical Concepts), how meaningful are the results? Remember, we want to see a p-value < 0.05, and the larger the effect size, the more meaningful the result. After evaluating the statistics, check whether there is any missing data and whether the authors also published raw data within the text, an appendix or supplementary material. For example, if a study is comparing two different diets, it should include a table showing the respective diet compositions, or at least some type of food records or nutrition data. If a diet study doesn’t provide any nutrition data, we would be VERY cautious of its findings and the conclusions that are drawn. Publishing raw data is not required, but it’s good practice; if raw data are available, look them over yourself to see whether there are any glaring issues or numbers that don’t add up. Within the results section, the authors will report the results of the statistical analysis for the primary variables of interest, but they should also provide some type of figure or table to represent the data visually. Lastly, do the results agree with previous studies? It’s ok if they don’t, but in the discussion the authors should explain the conflicting results and any reason why they don’t agree.

Study Design / Level of control - How much control did the researchers have over the independent variables? Did they provide food to participants if it was a diet study? Did they supervise the resistance training program prescribed to participants? How did they control free-living conditions? Obviously, there are no mandatory requirements researchers must follow for their study design; it will be limited by the laboratory techniques and equipment they have available.
But there are some things you should ask yourself when reading through the methods section: how did they test and control for X, Y and Z? If a study housed subjects in a metabolic ward, that’s far more valuable data than any free-living study. Similarly, if a training study doesn’t mention anything about supervised training, it’s going to carry more confounding variables and limitations than a study that included supervised training in the lab for the duration of the study. The level of control will significantly affect the sample size and the study duration. Increasing the level of control comes at a cost: higher control means higher cost, and it generally leads to smaller sample sizes and shorter study durations to maintain that level of control. Unlike human designs, rodent models offer a high level of control, longer study durations and larger sample sizes at a smaller cost. But the results aren’t always transferable to humans.

Sample size - How many subjects were included in the study? Generally, fewer than 10 subjects is a poor sample size, and such studies are less likely to detect significant changes in the outcomes. If a study has fewer than 10 subjects, it carries a lot less weight than studies with larger cohorts, but it can still be valuable and contribute to the body of literature. Case studies are at the bottom of the totem pole for study designs, but for investigating certain novel topics they can be the only appropriate design available. These types of studies should be interpreted with caution, with the understanding that their ability to support strong conclusions is severely limited. The caveat is studies that are extremely well controlled but have a small number of subjects. Metabolic ward nutrition studies are an example: every piece of food is provided to the subjects, and they are housed in a ward that measures their energy expenditure. These studies do not need a high subject number to be impactful because of their high degree of control. They are also incredibly expensive, which is why they typically don’t have a high subject number.

Study Duration - You will generally encounter training studies in exercise science with durations of around 12 weeks. This isn’t a bad thing, but the strength of evidence is going to be less than that of a 24-week study, all else being equal. Longer study durations provide a bigger picture of what could happen. It’s like a drag race between two cars: one car may have greater acceleration and pull ahead for the first ¼ mile, but the other has a higher top speed and ends up winning the race. With longer durations, we get a more dependable and reliable idea of the changes that could occur. The difficulty with longer studies is that they are more expensive and less likely to have a high degree of control, as they become more intrusive in the subjects’ lives. In general, it’s important to understand the limitations that exist in all scientific studies. If you want to conduct a long-term study in humans, it will have a low subject number, not be well controlled, or both. If you want to conduct a tightly controlled study in humans, it will likely be short in duration, low in subject number, or both. If you want to conduct a long-term, tightly controlled study with a high subject number, it will likely be in animals. Below is a Venn diagram providing a conceptual framework for the give and take between these variables in study design.

Treatment/Intervention - In any study that involves groups with different treatments or interventions, it’s important to note the dosage or amount of the treatment or intervention. If a study is investigating a specific supplement, is the dosage clearly stated, and is it an appropriate dosage to elicit a response?
If you’re comparing two studies on the effects of caffeine on heart rate, it should be obvious that the study using the higher dose is likely to see a greater increase in heart rate. If two training studies are comparing muscle growth in a specific muscle, the training volume and intensity are going to have a major impact on their outcomes. Supplement studies should be randomized and placebo-controlled to account for the various confounding variables and limitations.

Limitations - Every study carries limitations; you can’t account and control for everything, at least not in free-living studies. It’s ok for studies to have limitations, and generally they’re outside of the researchers’ control, but major limitations should be clearly stated and explained toward the end of the discussion. That being said, researchers aren’t going to state every little thing that’s wrong with their study, so don’t expect that. Any major methodological limitations should be explained. Examples of limitations include small sample sizes, short study durations, lack of control over a specific measurement due to limited laboratory resources, limited generalizability of the findings, differences in treatments, characteristics of the subjects, issues with measurement devices, etc.

Measures - It’s important to evaluate the methods section for the types of measurements used to test the dependent variables. There is no perfect measurement available: it’s impossible to measure someone’s true or exact score on any measure, and every device used in research has some level of error associated with it. We may have “gold standards,” or measures that we use to validate other measures, but this is done through correlations, and the criterion measures we use to validate other measures have their own error rates.
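The idea that every measurement carries error, together with the earlier definition of bias as a systematic deviation from the true value, can be illustrated with a small simulation. It assumes a hypothetical device whose readings equal the true value plus a fixed offset (systematic error, or bias) plus random noise; the offset and noise values are made up. Averaging many readings shrinks the random part but leaves the bias untouched.

```python
import random
import statistics

TRUE_VALUE = 20.0   # hypothetical true body fat percentage
BIAS = 1.5          # hypothetical systematic offset of the device (percentage points)
NOISE_SD = 2.0      # hypothetical random error of a single reading

def measure(rng):
    """One reading from the hypothetical device: truth + bias + random noise."""
    return TRUE_VALUE + BIAS + rng.gauss(0.0, NOISE_SD)

rng = random.Random(42)
readings = [measure(rng) for _ in range(5000)]

mean_reading = statistics.mean(readings)
estimated_bias = mean_reading - TRUE_VALUE    # systematic error survives averaging
spread = statistics.stdev(readings)           # random error of a single reading

print(f"Average reading:   {mean_reading:.2f}")
print(f"Estimated bias:    {estimated_bias:.2f}")
print(f"Single-reading SD: {spread:.2f}")
```

In real studies the true value is unknown, which is why bias can only be estimated by comparing a device against a criterion method.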
Underwater weighing used to be the “gold standard” for measuring body composition; now we use the 4-compartment model because it’s been shown to be more reliable [19]. This doesn’t mean any study that uses hydrostatic weighing is useless; we just need to be critical of its error rates. There are endless types of instrumentation available to measure certain variables, and we’ll cover some common measurements in the next section. When evaluating measurements, we are concerned with the validity and reliability of that measurement.

Validity - Validity is arguably the most important consideration for a measurement technique and indicates the degree to which a device measures what it’s supposed to measure 3. This is concerned with how accurate and “true” the measurement technique is. There are a number of types of validity and ways to establish it. Frequently in research, validity is established by comparing one type of measurement to a criterion method. For example, a 4-compartment model that used the Bod Pod for body volume estimates was used as the criterion to determine whether Dual-Energy X-ray Absorptiometry (DXA) would be an acceptable method to measure body volume 20. The validity of a measurement is more difficult to establish than its reliability.

Reliability - Reliability is concerned with the consistency of the measurement technique: it is the degree to which a device produces stable or consistent results. If a measurement technique is not consistent, then you cannot trust the test. In other words, “a test cannot be valid if it’s not reliable” 3. Before performing an experiment, it’s important to test the laboratory equipment that will measure the dependent variables to ensure consistent and accurate results. This doesn’t have to be done before every experiment, but equipment used in research should be tested to ensure reliability. The test-retest method is a common technique for estimating the reliability of testing devices: perform one test, then test again after a specified time interval 3. We can then run some statistics to obtain values that tell us how reliable our instruments are. Not all studies do this, and some test reliability in other ways, but it’s good science to report some measure of reliability for testing devices to show that they are dependable. You will generally find these values in the methods section after a brief explanation of the testing procedures for a specific device.

Intraclass correlation - The intraclass correlation coefficient (ICC) is calculated by running an ANOVA to produce a reliability coefficient (similar to the correlation coefficient described in Article 3: Statistical Concepts) that reflects how much of the variability in scores comes from true differences between subjects rather than from measurement error. This is a good indicator of the stability of the measurement. As with other correlation coefficients, values closer to 1 indicate scores that are highly similar, while values closer to 0 indicate scores that are less similar. In other words, scores closer to 1 mean the measurement has less error and better reliability.

Standard error of measurement - The standard error of measurement (SEM) is calculated using the ICC and the standard deviation of scores, which means it accounts for both the variability and the reliability of the test. This value tells us the level of error and precision of a measurement. SEM values can be viewed as a range, plus or minus, around the predicted or measured value. For example, if you measure someone at 15% body fat and the SEM is 3%, their true body fat is likely somewhere between 12% and 18%.

Minimal detectable difference - The minimal detectable difference (MDD) is calculated using the SEM. This tells us how sensitive the measurement is. It provides a value in the unit associated with the testing device and tells us the minimum amount of change needed to exceed measurement error and be considered a ‘real’ change. For example, if the MDD of an RMR machine is 100 kcal, the person you’re testing would need their RMR to rise or fall by more than 100 kcal between testing points for it to be considered a real change.

You may see different terms used for these three statistics. SEM can also be called the standard error of estimate (SEE), and MDD can also be called the minimal detectable change (MDC); just know that different names may carry essentially the same meaning. There are also many other statistics available to test the validity and reliability of measurement techniques. These are just a few common ones you might come across, and hopefully they give you a better idea of the error rates associated with testing different variables. We want to reiterate that people often overlook the error rates associated with some measures and assume they are accurate and/or exact scores. With in-vivo studies it’s impossible to know the true and exact score of certain variables; testing gives us a good estimate or prediction of the score, and we have to accept that there is always some level of error associated with the device and/or technician. So long as testing is done by the same technician, with the same device, under the same conditions, we can use the measurements to compare changes over time.

Article 05 Common Methods for Measuring Variables

Body Water

Deuterium Dilution

Deuterium is a stable isotope of hydrogen, and deuterium dilution serves as the “gold standard” or criterion method for total body water assessment. Researchers use labeled water that contains a large quantity of deuterium (“heavy water”) and measure its concentration in the urine, blood or saliva to determine total body water. Other isotopes can be used in a similar manner to the deuterium dilution method, but deuterium is the most commonly used tracer.
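Whatever the method, body water techniques included, its precision is usually reported with the ICC, SEM and MDD defined at the end of Article 4. The sketch below shows one common way to compute them from test-retest data, under stated assumptions: it uses the two-way random-effects, absolute-agreement, single-measurement ICC (often labelled ICC(2,1)); SEM = SD × √(1 − ICC), taking the SD of all scores pooled; and the 95% minimal detectable difference, MDD = SEM × 1.96 × √2. Studies may use other ICC forms or formulas, and the test-retest values are invented.

```python
import math
import statistics

def icc_2_1(trials):
    """ICC(2,1) from a list of subjects, each a list of k repeated scores.

    Uses two-way ANOVA mean squares for subjects (rows),
    trials (columns) and residual error.
    """
    n = len(trials)          # number of subjects
    k = len(trials[0])       # repeated trials per subject
    grand = statistics.mean(x for row in trials for x in row)
    row_means = [statistics.mean(row) for row in trials]
    col_means = [statistics.mean(row[j] for row in trials) for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in trials for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )

def sem(scores_sd, icc):
    """Standard error of measurement: SD * sqrt(1 - ICC)."""
    return scores_sd * math.sqrt(1 - icc)

def mdd95(sem_value):
    """Minimal detectable difference at 95% confidence: SEM * 1.96 * sqrt(2)."""
    return sem_value * 1.96 * math.sqrt(2)

# Hypothetical test-retest body fat percentages: [trial 1, trial 2] per subject.
data = [[12.1, 12.6], [18.4, 17.9], [22.0, 22.8], [15.5, 15.1], [27.3, 26.5], [9.8, 10.4]]

icc = icc_2_1(data)
sd = statistics.stdev(x for row in data for x in row)   # SD of all scores pooled
sem_value = sem(sd, icc)
print(f"ICC(2,1) = {icc:.3f}, SEM = {sem_value:.2f}%, MDD = {mdd95(sem_value):.2f}%")
```

A change smaller than the MDD between two test points would not be distinguishable from measurement error for this hypothetical device.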
Using this method, subjects void their bladders, then drink water labeled with the isotope, and after it has equilibrated in the body for a period of time, researchers most commonly collect a urine sample. The urine is then analyzed using a mass spectrometer to determine total body water. This method is expensive, time consuming and requires sophisticated laboratory expertise 26. For this reason, other measures have been developed to measure total body water (TBW) more conveniently.

Bioelectrical Impedance Analysis (BIA)

BIA technology transmits a small electrical current through the body’s extremities and between voltage-detecting electrodes (contacting the hands and/or feet). Water conducts electricity, while tissues like fat mass and bone contain very little water, which increases the resistance (impedance) to the electrical current and impedes its flow. Because the impedance depends on body composition, it is determined using Ohm’s law (resistance = voltage / current) and can then be applied in an equation to quantify water volume, body fat percentage and fat-free mass (FFM) 21. There are many different types of BIA devices available; they vary in frequencies, cost and complexity, which affects the validity and reliability of the specific device being used. Nowadays you will commonly see BIA technology integrated into at-home bathroom scales. For body composition assessment, research indicates that BIA is comparable to DXA when estimating BF%, fat mass or FFM 27. However, other research indicates that single assessments using DXA or BIA are questionable because of their accuracy at the individual level [28]. When compared to deuterium dilution for measuring TBW, BIA is close in accuracy but still slightly underestimates TBW 29.
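The Ohm’s law step can be made concrete with a short sketch. It treats the measured impedance as pure resistance (a simplification, since real devices also measure reactance), and the voltage, current and height values are hypothetical. Many BIA prediction equations use height squared divided by resistance (often called the impedance index) as their main predictor of TBW, but the regression coefficients are device- and population-specific, so none are shown here.

```python
def resistance_ohms(voltage_v, current_a):
    """Ohm's law: resistance = voltage / current."""
    return voltage_v / current_a

def impedance_index(height_cm, resistance):
    """Height^2 / R in cm^2 per ohm, a common predictor in BIA equations."""
    return height_cm ** 2 / resistance

# Hypothetical reading: 0.4 V measured across the electrodes at 800 microamps.
r = resistance_ohms(0.4, 800e-6)
print(f"Resistance: {r:.0f} ohms")   # less body water -> higher resistance
print(f"Impedance index (hypothetical 180 cm subject): {impedance_index(180, r):.1f}")
```

For the same height, a higher resistance gives a lower impedance index, which prediction equations translate into less estimated body water.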
BIA shows promise in accurately estimating TBW; however, accuracy can vary based on the population being studied, and with little research comparing BIA to deuterium dilution, its validity for estimating TBW remains questionable 30. Nonetheless, evidence suggests BIA is acceptable for assessing TBW and displays acceptable accuracy for body composition when incorporated into a multi-compartment model 28. A related tool known as bioelectrical impedance spectroscopy (BIS) seems to exhibit greater validity and reliability than BIA when assessing TBW 26.

Bioelectrical Impedance Spectroscopy (BIS)

BIS uses the same underlying technology as BIA to estimate body composition and water: an electrical current travels through the body between electrodes, and the impedance to that current is measured. BIS devices differ from BIA devices by using a ‘spectrum’ of frequencies, which is where the term spectroscopy comes from 30. There are single- and multi-frequency BIA devices on the market, and it’s unclear at what point a multi-frequency BIA could be considered BIS; however, BIS uses Cole modelling to predict body fluids, which has been suggested to be superior for assessing body composition with impedance-based methods 30, 32, 33. BIS is also useful for differentiating between intracellular and extracellular water. The underlying principles of BIA and BIS are the same, and either device can acceptably be used for body water estimation, although BIS appears to be more widely accepted 26, 28, 30, 33. Keep in mind that these impedance-based devices were developed primarily for body water assessment; although they can predict body fat percentage (BF%), other body composition methods are more appropriate.

Body Composition

There are a number of techniques and methods available for measuring body composition, specifically fat mass and/or fat-free mass (FFM). The only direct measurement of body composition would involve performing an autopsy on a human cadaver to dissect and weigh various tissues and organs, which is obviously impossible for free-living experiments. Therefore, we estimate body composition based on what we know about the weight and composition of various tissues in the body. It’s important to understand that there is no perfect estimate and all techniques and methods have error rates associated with them. For this reason, we cannot place a high level of importance on a specific body fat percentage. Rather, we use it as an objective measure to quantify and track changes to determine the effectiveness of specific interventions.

Skinfold

The most common and cost-effective method for estimating body composition is the skinfold technique. This technique assumes a 2-compartment (2C) model (more on multi-compartment models later), splitting body weight into fat mass and FFM. It requires firmly grasping the subject’s skin and subcutaneous fat between the thumb and forefinger and measuring the thickness (in mm) with a caliper. Measurements can use as few as three sites or as many as seven, including the triceps, subscapular, suprailiac, abdominal, upper thigh, chest and midaxillary. Measuring seven sites gives a more accurate estimate of BF% because it can account for body fat distribution; some people hold more fat in their lower body than in their upper body. The site measurements are added together and plugged into a prediction equation to estimate body density, which is then plugged into the Siri equation to estimate BF% 34.
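The last step of the skinfold calculation can be written out. The Siri equation converts body density (in g/cm³) to body fat percentage as BF% = 495 / density − 450. The skinfold-to-density prediction equation is deliberately left out of this sketch because it must be population specific; the density value below is a hypothetical output of such an equation.

```python
def siri_body_fat_percent(body_density):
    """Siri (2-compartment) equation: body density in g/cm^3 -> body fat %."""
    return 495.0 / body_density - 450.0

# Hypothetical body density from a population-specific skinfold equation.
density = 1.06
print(f"Body density {density} g/cm^3 -> {siri_body_fat_percent(density):.1f}% body fat")
```

Because density sits in the denominator, small density errors matter: a density of 1.06 gives about 17.0% body fat, while 1.05 gives about 21.4%, a shift of roughly 4 to 5 percentage points.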
There are a number of body density prediction equations available, and it’s important to use a population-specific equation because the coefficients used in the calculations can produce inaccurate estimates for individuals with different body fat levels. When an appropriate population-specific equation is used, skinfolds predict BF% fairly accurately (± 3-4%) 35. The great thing about skinfolds is not only the low cost, but also that you can track site-specific changes to gauge the rate and location of fat loss. Additionally, this is one of the few measurements that actually assesses fat thickness; most other measures use X-ray beams and imaging techniques or electrical currents to assess fat mass. This technique is only as accurate and reliable as the technician performing the test. The technician must have a lot of experience to precisely identify anatomical sites and measure fat thickness accurately and consistently. When compared to computed tomography (CT scan), skinfolds show a strong correlation for measurements in the abdominal region 36. However, studies comparing skinfolds to the gold standard 4C model indicate large individual error rates but acceptable group average values 37, 39. Meaning, when you test one person the error can be much higher than when you measure and average the BF% of a group of people. For example, you could compare skinfolds to another method and see individual over- or underestimations in BF% of 6%, while the error in the group average BF% could be only 2%. These are arbitrary numbers and don’t reflect the true error rates of skinfolds, which will vary depending on the equation, population and criterion method used for comparison. Nonetheless, skinfolds are the most cost-effective method, and with a skilled technician and the correct equations they can provide an accurate estimate of body composition.
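The gap between individual and group error can be shown with a few hypothetical numbers, in the same spirit as the arbitrary example above. This sketch compares made-up skinfold estimates with made-up criterion (4C) values and reports the mean error (group bias) and the mean absolute error (typical individual error). Over- and underestimates cancel in the group average but not for the individuals being tested.

```python
import statistics

# Hypothetical BF% values for six people: (skinfold estimate, 4C criterion).
pairs = [(18.0, 14.0), (22.0, 27.0), (15.0, 11.0), (30.0, 34.0), (12.0, 9.0), (25.0, 29.0)]

errors = [est - crit for est, crit in pairs]

group_bias = statistics.mean(errors)                          # signed errors cancel out
typical_individual_error = statistics.mean(abs(e) for e in errors)

print(f"Individual errors: {errors}")
print(f"Group mean error: {group_bias:+.1f} percentage points")
print(f"Mean absolute individual error: {typical_individual_error:.1f} percentage points")
```

Here the group average is off by only about a third of a percentage point, yet each individual estimate misses by 3 to 5 points, which is why a method can look acceptable in group comparisons and still be unreliable for one person.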
A-mode Ultrasound

A-mode ultrasound uses ultrasonography, which transmits a signal through the skin and tissues; the signal reflects at tissue boundaries and returns as an “echo.” There is also another type of ultrasound known as “B-mode” (covered later), but here we’re specifically referring to A-mode ultrasound. Bodymetrix has developed a handheld portable device that is used much like skinfold calipers. The device can measure as few or as many sites as desired: simply select the equation and number of sites from a drop-down menu in the software. This technique also relies on the skill of the technician. One of its primary benefits is being less invasive, since it doesn’t involve “pinching” the subject, and while it is much less expensive than other sophisticated laboratory equipment, it is still more expensive than skinfolds. A unique aspect of this device is that it can also produce an image of the muscle and fat layers. The device has not been validated to measure muscle thickness, but some researchers suggest it could be a useful tool for measuring acute changes in muscle thickness 40. For body composition, it hasn’t been validated to the same degree as other measures, but studies show strong agreement with skinfolds and air displacement plethysmography (ADP) 41, 42.

Body Volume Measurement

Underwater weighing (UWW) and air displacement plethysmography (ADP), performed with the Bod Pod, are used to measure body volume by applying Archimedes’ principle, which allows body density to be calculated. Body density can then be used in an equation (generally the Siri equation) to calculate BF%. Underwater weighing is conducted by having the subject sit on a flimsy seat connected to a scale (it’s like a human produce scale) that lowers them into a pool of water.
The subject’s nose is pinched closed, and they are instructed to blow out all of their air as they are slowly submerged in a fetal-like position. The testing procedure for this technique is probably the most unpleasant of all the methods. Imagine exhaling all of your air while hunched over and remaining as still as possible as you’re lowered into a pool while researchers attempt to record your weight. Before the subject is submerged, researchers measure residual lung volume to account for the air still trapped in the lungs after full exhalation. The Bod Pod is very similar to underwater weighing, except it uses air, and it involves a much more comfortable testing procedure, although those who are claustrophobic may not agree. Subjects are placed in a large plastic “pod”-like device with a small window. While the subject sits on a small seat wearing a swim cap, body volume is measured within a few minutes by subtracting the air volume of the chamber with the person inside from the air volume of the empty chamber. This method estimates body composition very closely to hydrostatic (underwater) weighing, since both use similar underlying principles. Underwater weighing was previously considered the gold standard and criterion method to validate other methods; now we have less invasive techniques available that can provide greater BF% accuracy.

Multi-compartment Models

Advances in technology and in our understanding of body composition have led to more accurate and precise assessment techniques. Multi-compartment models are considered the criterion for validating other methods of estimating body composition 43. By measuring more compartments directly, we reduce the assumptions made about the weights and volumes of various tissues, leading to a more precise estimate.
It would be reasonable to assume that introducing more measurements would also introduce more measurement error and reduce accuracy, but research shows these added errors are negligible 44. Multi-compartment models range from the traditional two-compartment (2C) model all the way up to six compartments (6C). The four-compartment (4C) model is viewed as the gold standard for body composition assessment 45. How the 4C model is carried out depends on the instrumentation a lab has available, but it is generally accomplished using DXA and a measurement of body water (generally BIA). It has not been established whether the potential benefits of more complex models justify their added complexity 46. The more complex and sophisticated these models become, the more testing costs rise because of the instrumentation needed to measure the various tissues, putting them out of reach for some research labs.

[Figure: 2-, 3-, 4-, 5- and 6-compartment models]

Dual-Energy X-Ray Absorptiometry (DXA)

Dual-energy X-ray absorptiometry (DXA) is a common and popular method of testing body composition. DXA machines were originally developed for assessing bone mass. They have since become a common method of body composition testing in labs fortunate enough to have the funding to support their high cost. DXA is a practical and noninvasive way to measure body fat percentage. Subjects lie comfortably supine on a table for the 10-15 minute test while two low-energy X-ray beams (with minimal radiation exposure) slowly pass across the body. The computer software generates an image of the underlying tissues and quantifies bone mineral content (BMC), total fat mass, and FFM 21. Additionally, DXA can perform regional analysis to determine whether specific areas of the body have lower or higher body fat or BMC. Many believe DXA scans are a superior method for testing BF%.
However, like any measure, DXA scans can produce high error rates if certain variables are not accounted for or if the scans are not performed correctly. DXA operates under a three-compartment (3C) model, splitting body weight into body fat, fat-free mass (FFM), and BMC. Body water fluctuates throughout the day with fluid and glycogen stores. Because DXA does not account for body water, these fluctuations can lead to large errors. Previous research supports this: a 3C model that includes a body water measurement produces smaller errors than DXA when both are compared with a 4C model 28. At the group level, DXA appears to have fairly good accuracy compared with the gold-standard 4C model 28. For individual comparisons or individual changes, however, errors can be much larger, especially when individuals differ in characteristics such as sex, size, fatness, or nutritional status 28, 47. DXA error rates vary from study to study depending on methodological differences in study design. Even so, research has shown they can be as high as 8-10%, which is similar to the error rates of hydrostatic weighing 48. While DXA correlates strongly with CT scans, DXA still underestimated fat mass by 5 kg 49. The accuracy of DXA for tracking weight changes has also been questioned based on a study that simulated weight gain by wrapping lard around subjects before performing a DXA scan. The results showed that DXA quantified the lard as bone mineral content rather than fat 50. For these reasons, results from studies that rely exclusively on DXA to evaluate changes in body composition should be interpreted with caution. Instead, researchers should incorporate DXA scans into a 4C model that also accounts for body water to estimate body composition changes more accurately.
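The gap between group-level and individual-level accuracy described above is commonly quantified with a Bland-Altman analysis. We swap that technique in here as an illustration; the guide itself does not name a specific method. The mean difference between two methods (the bias) describes group-level accuracy. The 95% limits of agreement (bias ± 1.96 SD of the differences) describe how far any one individual’s result may fall from the reference. The values below are hypothetical, chosen only to show that a near-zero bias can coexist with wide individual errors.

```python
from statistics import mean, stdev


def bland_altman(method, reference):
    """Return (bias, lower_limit, upper_limit) for paired measurements."""
    if len(method) != len(reference) or len(method) < 2:
        raise ValueError("need at least two paired measurements")
    # Per-subject difference: method minus reference (e.g., DXA minus 4C).
    diffs = [m - r for m, r in zip(method, reference)]
    bias = mean(diffs)   # average error across the group
    sd = stdev(diffs)    # spread of individual errors (sample SD)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical BF% values for five subjects, not data from any study.
dxa = [20.0, 25.0, 18.0, 30.0, 22.0]
four_c = [21.0, 23.0, 20.0, 27.0, 24.0]

bias, lower, upper = bland_altman(dxa, four_c)
print(f"bias {bias:+.1f} points; limits of agreement {lower:.1f} to {upper:+.1f}")
```

Here the group bias is zero. Even so, any single subject’s DXA value could plausibly differ from the 4C value by about 4.6 percentage points in either direction.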
As you can see, all of these techniques carry some limitations. While some methods may be more accurate or precise than others, they are all acceptable methods for estimating fat and fat-free mass. Since a large portion of FFM is muscle, you may see some of these techniques used to infer muscle growth (hypertrophy) from increases in FFM 51. However, there are more direct and appropriate methods available for assessing hypertrophy.

Protein Metabolism

In the body, protein is in a continuous state of breakdown and synthesis; this simultaneous process is known as protein turnover. In a typical 70 kg male, about 0.3 kg of protein is degraded and replaced each day, which keeps the body’s protein stores from being depleted 22. Protein metabolism is a complex and intricate process, and measuring it requires sophisticated laboratory equipment and testing techniques. Here we describe a few methods commonly used to assess protein turnover.

Isotopic Tracer Method

Muscle protein synthesis (MPS) is one of the more complicated measures to explain. To assess the rate of MPS, scientists often use a ‘tracer’, which is a molecule they can track to ‘see’ which tissues it ends up in. For MPS, either a radioactive (less common) or a stable isotope form of an amino acid is used. You may remember from general chemistry that an isotope is an atom with a different number of neutrons than normal; a heavy isotope carries extra neutrons, which increases its mass. Because the labeled molecule is heavier than a normal molecule, gas chromatography-mass spectrometry (GC-MS) can be used to distinguish it from the ‘normal’ molecules. A common tracer used to assess MPS is D-5 phenylalanine (ring-²H₅-phenylalanine). “D-5” means that five hydrogen atoms on phenylalanine’s aromatic ring have been replaced with deuterium, a hydrogen isotope that contains an extra neutron. This makes the molecule heavier than normal phenylalanine.
D-5 phenylalanine is often chosen as a tracer because it is not metabolized by muscle (although various other amino acids are used as well). It can therefore be assumed that any D-5 phenylalanine that winds up in muscle protein got there through MPS. To assess MPS, the tracer is typically infused or injected into the bloodstream of a subject undergoing whatever treatment is being studied. The muscle then takes up the tracer, where it either remains as an intracellular free amino acid or is incorporated into protein via MPS. The ratio of protein-bound tracer to intracellular tracer forms the basis for determining the ‘rate’ of MPS. In more practical terms, suppose the tracer is found in greater concentrations in muscle protein in one treatment group than in another. The first group then likely has higher rates of MPS, since more of the tracer wound up in its muscle protein. The actual MPS equation is a bit more complicated than this. For bolus injections of isotopes (usually done in rodents), the equation is:

$$\text{MPS}\ (\%/\text{h}) = \frac{E_b \times 100}{E_a \times t}$$

Here $t$ is the time interval in hours between isotope injection and snap-freezing of the muscle. $E_b$ and $E_a$ are the enrichments of ²H₅-phenylalanine in hydrolyzed tissue protein and in muscle free amino acids, respectively. For a tracer infusion, the equation is:

$$\text{MPS}\ (\%/\text{h}) = \frac{E_{p2} - E_{p1}}{E_{ic} \times t} \times 100$$

Here $E_{p2}$ and $E_{p1}$ are the protein-bound enrichments from the muscle biopsy at time 2 and the previous biopsy at time 1. $E_{ic}$ is the mean intracellular phenylalanine enrichment from the biopsies, and $t$ is the tracer incorporation time in hours. We realize these equations probably look quite daunting, but you only need to understand one thing. We are comparing tracer incorporation at one time point with another to see how much has been incorporated into muscle tissue, and over what timeframe.
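The two MPS equations above translate directly into code. This is a minimal sketch: the function and argument names are hypothetical. Enrichments can be in any consistent unit (for example, mole percent excess or tracer-to-tracee ratio) because the units cancel. The example enrichments are made up for illustration.

```python
def fsr_bolus(e_bound, e_free, hours):
    """MPS (%/h) after a bolus injection: (Eb x 100) / (Ea x t).

    e_bound: enrichment in hydrolyzed tissue protein (Eb)
    e_free:  enrichment in muscle free amino acids (Ea)
    hours:   time from injection to snap-freezing (t)
    """
    return (e_bound * 100.0) / (e_free * hours)


def fsr_infusion(e_p2, e_p1, e_ic, hours):
    """MPS (%/h) during a tracer infusion: (Ep2 - Ep1) / (Eic x t) x 100.

    e_p2, e_p1: protein-bound enrichment at the second and first biopsy
    e_ic:       mean intracellular enrichment (the precursor pool)
    hours:      tracer incorporation time between the two biopsies
    """
    return (e_p2 - e_p1) / (e_ic * hours) * 100.0


# Hypothetical enrichments: protein-bound enrichment rises from 0.0030 to
# 0.0050 over 3 h while the intracellular pool averages 0.08.
print(f"{fsr_infusion(0.0050, 0.0030, 0.08, 3.0):.3f} %/h")
```

With these made-up numbers the result is about 0.83 %/h. That is, roughly 0.83% of the muscle protein pool would be newly synthesized each hour.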
With that information and the intracellular concentration of the tracer, we can determine the rate of MPS. Once a biopsy (human studies) or sacrifice (animal studies) is performed and the muscle tissue is collected, it is immediately frozen in liquid nitrogen. This halts all metabolic processes and preserves a ‘snapshot’ of muscle metabolism. The tissue is later ‘powdered’ (a fancy word for grinding it into a powder with a mortar and pestle) and homogenized. It is then taken through various chemical reactions that separate the protein-bound amino acids from the intracellular amino acids, usually by adding perchloric acid to the sample. The intracellular and protein-bound amino acids then go through several further reactions to prepare them for the GC-MS, and are then run through it. The gas chromatograph separates the amino acids, and the mass spectrometer distinguishes the tracer from the normal amino acid by its greater mass. This allows scientists to determine the tracer concentrations in the muscle protein and the intracellular fluid. The tracer concentration in each sample is determined by comparing it with standard samples of known concentration, which are also run through the GC-MS. Once we have the concentrations of these samples, we can plug them into our equation to determine MPS. Easy, right? We doubt anyone is saying that, and we can assure you that it’s not. The entire process is extremely sensitive to error: analyzing ~100 samples takes around 2 weeks, and every step is a potential minefield. Scientists have to be borderline obsessive about handling their samples and executing reactions to ensure good data.

Nitrogen Balance

Nitrogen balance is the difference between nitrogen intake and nitrogen excretion. A negative nitrogen balance occurs when nitrogen excretion is greater than nitrogen intake. A positive nitrogen balance occurs when intake is greater than excretion.
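Using the approximation that protein is roughly 16% nitrogen (equivalently, grams of protein divided by 6.25), nitrogen balance reduces to simple bookkeeping. In this minimal sketch, the function names, the ‘neutral’ tolerance band, and the example values are hypothetical. The ‘other losses’ term stands in for sweat and skin losses, which are hard to collect and are a major reason excretion tends to be underestimated.

```python
NITROGEN_FRACTION_OF_PROTEIN = 0.16  # protein is roughly 16% nitrogen by mass


def nitrogen_balance_g(protein_intake_g, urine_n_g, fecal_n_g, other_losses_n_g=0.0):
    """Nitrogen intake minus nitrogen excretion, in grams per day."""
    intake_n_g = protein_intake_g * NITROGEN_FRACTION_OF_PROTEIN
    excreted_n_g = urine_n_g + fecal_n_g + other_losses_n_g
    return intake_n_g - excreted_n_g


def classify_balance(balance_g, tolerance_g=0.5):
    """Label a balance; tolerance_g is a hypothetical band treated as neutral."""
    if balance_g > tolerance_g:
        return "positive"
    if balance_g < -tolerance_g:
        return "negative"
    return "neutral"


# Hypothetical day: 120 g protein eaten; 15 g urinary N, 2 g fecal N and an
# assumed 0.5 g sweat/skin N lost.
balance = nitrogen_balance_g(120, 15.0, 2.0, 0.5)
print(f"{balance:.1f} g N/day -> {classify_balance(balance)}")
```

Leaving out the 0.5 g of sweat and skin losses would make the balance look 0.5 g more positive than it is. This is the underestimation problem with nitrogen excretion.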
A neutral nitrogen balance occurs when nitrogen intake equals nitrogen excretion. Protein contains roughly 16% nitrogen on average, so nitrogen intake can be calculated from protein intake 22. Nitrogen excretion, on the other hand, is more complicated to measure and control for, since nitrogen is excreted through urine, feces, sweat, and skin 22. One of the primary drawbacks of this method is the difficulty of quantifying all of these losses, which often leads to an underestimation of total nitrogen excretion. Another drawback is the effect of dietary intake. During caloric restriction, nitrogen excretion can increase even when protein intake is high, and when protein intake increases, nitrogen excretion generally increases as well. These drawbacks can lead researchers to overestimate nitrogen intake and underestimate nitrogen excretion, resulting in inaccurate estimates of nitrogen balance 22. The majority of the body’s nitrogen resides in skeletal muscle, so nitrogen balance is often used to assess muscle protein metabolism. However, this method reflects whole-body protein turnover and does not isolate tissue-specific protein metabolism. This matters because skeletal muscle turns over very slowly, at only ~1% per day, even though it is the largest reservoir of nitrogen in the body. Liver and gut tissues, by contrast, turn over at 30-80% per day. As a result, changes in nitrogen balance often reflect what is occurring in those tissues rather than in muscle.

3-Methylhistidine

3-Methylhistidine is an amino acid present in actin and myosin, the contractile proteins of muscle fibers. It can be measured from a muscle biopsy or in the blood, but it is most commonly measured in the urine. Unlike nitrogen balance, 3-methylhistidine can serve as a urinary marker of muscle protein breakdown specifically, since roughly 90% of the body’s 3-methylhistidine is located in skeletal muscle 22, 23.
When skeletal muscle protein is broken down, 3-methylhistidine is excreted in the urine, because once it is released from degraded contractile proteins it cannot be recycled 22. However, as much as 25% of urinary 3-methylhistidine may come from non-muscle sources 24. Another limitation of this method is that 3-methylhistidine is present in dietary meat. Large or increasing intakes of meat therefore raise urinary excretion and give researchers inaccurate data. As long as these limitations are accounted for and controlled, it can be a viable method for assessing skeletal muscle breakdown.

The Arteriovenous Net Balance Technique

Unlike the nitrogen balance method, this technique can measure rates of protein synthesis and breakdown that occur within the muscle tissue. This is accomplished by using an amino acid that cannot be metabolized or produced in muscle tissue and monitoring how much goes into and out of the muscle 22, 25. Phenylalanine, tyrosine, and lysine are not metabolized in muscle, but phenylalanine is the one you’ll see used most often 22. Arteries carry blood and nutrients to skeletal muscle, and veins carry waste products and nutrients away from it. Researchers insert catheters into an artery and a vein of the leg or arm, then measure the concentration of phenylalanine in the arterial and venous blood at those locations. The disappearance of phenylalanine from arterial blood signifies phenylalanine being deposited in muscle protein and is used to determine muscle protein synthesis. The appearance of phenylalanine in venous blood signifies muscle protein breakdown 22. The obvious downside is that catheters can’t be left in subjects indefinitely. This technique is therefore employed only for short durations, usually a few hours after a specific treatment. It gives only a small snapshot of what could occur, yet these observations are often generalized or extrapolated to long-term changes. Because each technique has its own limitations, some studies combine several of these methods to build a more reliable picture of protein metabolism. Since protein synthesis leads to more muscle mass and protein breakdown leads to less, a better approach for investigating long-term changes in protein metabolism may be to measure muscle growth directly.

Hypertrophy Measurements

B-mode Ultrasound

The most common device you will encounter for assessing changes in muscle thickness is B-mode ultrasound. This is the same type of ultrasound used to monitor fetal development during pregnancy. Similar to A-mode ultrasound, the probe converts electrical energy into high-frequency sound waves that pass through the skin and underlying tissues. These waves reflect off tissue boundaries, such as the bone surface, to produce an echo 21. Compared with A-mode, B-mode is more expensive and technically demanding, but it also produces a higher-resolution image that provides more detail and tissue differentiation 21. This method of assessing muscle thickness is non-invasive, can be done quickly, and is less expensive than most other measures of muscle growth. Like the skinfold technique, this assessment is skill dependent, so its error depends on the technician. Hypertrophy can vary across different regions of the same muscle. B-mode measurements represent only the specific site measured and are not indicative of hypertrophy of the entire muscle 51, 52.

Advanced Imaging Techniques

The two advanced imaging techniques we will briefly discuss are computed tomography (CT) and magnetic resonance imaging (MRI). These are highly complex and expensive pieces of equipment, which is why you’ll rarely see them used in body composition or muscle growth research.
These two methods are as close as we can get to cadaver analysis in living subjects. Their advanced imaging allows organs and tissues such as muscle and fat to be visualized and quantified 54. CT scans pass ionizing X-ray beams through tissues of differing densities, generating cross-sectional, two-dimensional radiographic images of body segments 21. Using these images, researchers can determine total tissue area, tissue thickness, and the volume of tissues within an organ 21. CT scans have been shown to be reliable and valid for assessing changes in muscle cross-sectional area (CSA) 55. MRI is the large, tunnel-like machine you see in most medical TV shows. These machines use magnetic fields and radio waves to generate detailed images of the organs and tissues within the body. Unlike CT scans, which involve a small amount of radiation exposure, MRI does not use ionizing radiation. MRI can be used for a variety of measurements, including total and subcutaneous adipose tissue, the lean and fat components of muscle, muscle thickness, and muscle volume 21. MRI is viewed as a reference standard for regional muscle mass analysis and is the most accurate method for assessing changes in gross muscle size 51, 56. Aside from its high cost, MRI has only a few downsides. It cannot assess the molecular adaptations that occur within muscle fibers, and it does not evaluate the metabolic and underlying mechanisms of muscle tissue 51, 57.

Muscle Biopsy

Muscle biopsies are a safe procedure. An anesthetic is used to numb the site, and then a large, pencil-sized needle is inserted through the skin, subcutaneous tissue, and fascia to reach the skeletal muscle. A tissue sample is then clipped and removed. Muscle biopsy samples can be used to assess microscopic and molecular changes in skeletal muscle.
To evaluate microscopic changes, the sample is frozen, thinly sliced, mounted on a slide, and stained (depending on the method used). This allows researchers to determine fiber cross-sectional area (fCSA) or fiber type-specific cross-sectional area 51. Molecular assessment of muscle growth goes a step deeper than microscopic assessment. It analyzes changes in protein subfractions (the components that make up muscle fibers), such as concentrations of myofibrillar proteins (e.g., actin and myosin) or sarcoplasmic proteins 51. A variety of methods and protocols are available for evaluating molecular changes. The limitations of muscle biopsies share some similarities with those of B-mode ultrasound. A biopsy measures only the site where the sample was extracted, and any observed changes are assumed to occur in the surrounding fibers as well. As previously mentioned, however, muscle growth can vary throughout the muscle. Additionally, differences in tissue processing methods between labs and a lack of standardization make it difficult to compare findings between studies 51. Lastly, it’s impossible to perform a biopsy in exactly the same location twice, so biopsy samples within the same study may be comparing changes in different regions of the measured muscle. For a more comprehensive and in-depth review of measurements relating to muscle hypertrophy, we strongly suggest the review by Haun et al. (2018) 51.

Energy Expenditure

Energy expenditure is essentially a measure of heat production. Cellular metabolism produces heat, so measuring the body’s rate of heat production gives a direct assessment of metabolic rate 21. Heat production can be measured directly, or indirectly by measuring the exchange of gases (carbon dioxide and oxygen).

Indirect Calorimetry

Resting metabolic rate, or resting energy expenditure (REE), is an estimate of the amount of energy an individual expends at rest (lying on a bed) over a 24-hour period.
This estimate is derived from the volume of air breathed during a specified period of time and the composition of the expired air 21. The most accepted way to determine REE is indirect calorimetry, using a device known as a metabolic cart, which analyzes the volume and composition of the air the participant breathes. To accomplish this, the metabolic cart includes three main components 21:

- a flow-measuring device that records the volume of air breathed
- a small gas chamber that analyzes the oxygen and carbon dioxide content of the continuously sampled expired air
- a computer interface that displays the recorded data

The subject lies supine on a table with a face mask or plastic canopy that collects the breathed air, which travels through a long tube to the metabolic cart. The device then estimates the number of calories the participant uses per day at rest. It bases this on the volume of air breathed and the composition of expired air, accounting for ambient air temperature and composition. Substrate utilization is determined within the same test. It is calculated as the volume of carbon dioxide produced divided by the volume of oxygen consumed, known as the respiratory quotient (RQ). The RQ value indicates whether a greater percentage of the calories burned comes from fat or from carbohydrates. This method of measuring energy expenditure is non-invasive and takes approximately 20 minutes to complete. The first 5 minutes are discarded to allow the subject to reach a steady state, and the remaining 15 minutes are extrapolated to a 24-hour period. The obvious drawbacks of this method are the high cost of the device and the requirement that the subject stay at rest (but not asleep) for 20 minutes. This method also requires careful calibration between tests and control of variables, such as testing subjects fasted, before any food or drink consumption.
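The guide does not say which equation a metabolic cart uses to turn gas exchange into calories. Many use a form of the Weir equation, and we assume its abbreviated form here: kcal/min = 3.941 × VO2 + 1.106 × VCO2, with gas volumes in L/min. This sketch mirrors the protocol described above. It takes per-minute readings, discards the first 5 minutes, and extrapolates the average of the rest to 24 hours. As a rule of thumb, an RQ near 0.7 indicates mostly fat oxidation and an RQ near 1.0 mostly carbohydrate. Function names and readings are hypothetical.

```python
from statistics import mean

MINUTES_PER_DAY = 1440


def weir_kcal_per_min(vo2_l_min, vco2_l_min):
    """Abbreviated Weir equation (assumed; ignores urinary nitrogen)."""
    return 3.941 * vo2_l_min + 1.106 * vco2_l_min


def summarize_ree(vo2_by_minute, vco2_by_minute, discard_minutes=5):
    """Return (REE in kcal/day, RQ) from per-minute gas exchange readings."""
    # Discard the first minutes, as in the protocol described above.
    vo2 = mean(vo2_by_minute[discard_minutes:])
    vco2 = mean(vco2_by_minute[discard_minutes:])
    ree = weir_kcal_per_min(vo2, vco2) * MINUTES_PER_DAY  # extrapolate to 24 h
    rq = vco2 / vo2  # CO2 produced divided by O2 consumed
    return ree, rq


# Hypothetical 20-minute test in L/min; readings settle after minute 5.
vo2_readings = [0.30] * 5 + [0.25] * 15
vco2_readings = [0.27] * 5 + [0.20] * 15
ree, rq = summarize_ree(vo2_readings, vco2_readings)
print(f"REE ~{ree:.0f} kcal/day, RQ {rq:.2f}")
```

Including the unsettled first 5 minutes would inflate both VO2 and VCO2 and overstate REE. This shows why those minutes are dropped.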
Doubly Labeled Water Technique

The doubly labeled water technique involves drinking a quantity of water with known concentrations of non-radioactive, stable isotopes of hydrogen and oxygen (deuterium and oxygen-18) 21. This method estimates average daily energy expenditure under free-living conditions once the isotopes have distributed throughout all bodily fluids (roughly 5 hours) 21. The labeled isotopes serve as tracers that can be measured as they leave the body. Hydrogen leaves only as water (sweat, urine, and pulmonary vapor), while oxygen leaves both as water and as carbon dioxide (CO2). The difference between the elimination rates of the two isotopes, determined with an isotope ratio mass spectrometer, therefore allows total CO2 production to be estimated 21. During the observation period (several days to weeks), researchers collect urine or saliva samples and measure the concentrations of the enriched isotopes to estimate the CO2 production rate. They then use this estimated CO2 production rate and the subject’s RQ to calculate energy expenditure. This technique is expensive, which results in small sample sizes, and it doesn’t allow day-to-day variations in energy expenditure to be evaluated. However, it allows prolonged assessment periods that don’t interfere with everyday life or physical activity. It also serves as a criterion for validating other methods, since its error averages 3-5% when compared with direct measurements of energy expenditure in controlled settings 21. This technique has two drawbacks. It does not show what is driving changes in energy expenditure (BMR vs. NEAT vs. TEF vs. exercise), and it has been shown to possibly overestimate energy expenditure on low-carbohydrate diets 64.

Direct Calorimetry

Direct calorimetry is the most controlled and accurate measure available for estimating energy expenditure. It is accomplished using a metabolic ward or metabolic chamber that houses subjects in a room-sized chamber.
The chamber has an inlet for oxygen to flow in and an outlet for CO2 to exit. A layer of water surrounds the chamber, and as the subject’s body heat dissipates, it warms that water. From the volume of water and its temperature change, researchers can calculate heat production and, from that, energy expenditure. This type of measurement is very expensive, which is why few labs have it available, and why energy expenditure is more often measured using indirect methods. When direct calorimetry is used, studies tend to have small sample sizes because of the high cost. Even so, because heat production is measured directly, these studies provide a more direct measure of energy expenditure than studies that rely on indirect methods.

Hormones

Hormones are chemical messengers synthesized in specific glands and transported in the blood to target cells or receptors, where they elicit a physiological response. Hormone secretion rarely occurs at a constant rate; it adjusts rapidly to meet the body’s demands 21. A hormone’s secretion rate depends on the magnitude of the stimulatory or inhibitory chemical input it receives from various sources 21. The amount of a hormone secreted is reflected in its blood plasma concentration 21. Hormones are most commonly tested from blood draws and analyzed based on their serum concentrations. Some hormones can be tested through saliva, which is a less invasive and more cost-effective method. Cortisol is commonly assessed from saliva and has been shown to correlate linearly with blood concentrations 58. However, the correlation was low, and blood concentrations could not be inferred from salivary cortisol concentrations 58.
Also, hormone concentrations in saliva are much lower than those in blood. This suggests that salivary measures are more indirect and reflect passive diffusion rather than active secretion 58. A number of factors can affect the results of salivary hormone testing. Still, when procedures are standardized and key variables are accounted for, salivary testing can be a good and acceptable method, especially if blood testing is not an option. However, blood testing gives a better indication of secreted hormone concentrations.

We have frequently noticed that many individuals in the fitness industry put hormones on a pedestal and place unreasonable emphasis on hormone data. Hormone results are objective and excellent physiological outcomes, but their importance shouldn’t be exaggerated. There are a few things to consider when evaluating hormone results. Most hormones are secreted in response to a variety of stimuli, while others follow specific daily (diurnal) secretory cycles or cycles lasting several weeks 21. A single blood draw that is not timed properly will fail to account for the secretory pattern of certain hormones. It also won’t tell you anything about the changes that occurred following a treatment. Even with multiple testing points, the secretory pattern must be acknowledged, or flaws in the analysis and interpretation of the results can occur. Acute changes in hormones don’t necessarily lead to long-term adaptations. A great example is the hormone hypothesis of muscle growth. It was commonly believed that acute increases in anabolic hormones like testosterone and growth hormone following resistance training lead to greater muscle growth. However, this has been discredited by a comprehensive review explaining that acute post-exercise increases in systemic hormones are not a proxy measure for increased muscle growth 59.
Rather, these transient increases in hormone concentrations are more likely due to increased fuel demand and fuel mobilization to support exercise. Hormone data give us an objective measure of the underlying physiological responses to certain treatments. However, they provide only a snapshot of physiological changes at the specific measurement points. Therefore, it’s imperative to have a comprehensive understanding of specific hormones and their underlying physiology when evaluating hormone data.

Muscle Excitation

Electromyography (EMG)

Electromyography is a measure of how the neuromuscular system is behaving 60. In exercise science, EMG is commonly used to investigate variables such as muscle activation, force production, muscle recruitment, muscle strength, and hypertrophy (which is problematic, as we’ll discuss). Surface EMG (sEMG) is the most frequently used form, and it is highly sensitive to increases and decreases in voltage across the muscle fiber membrane 60. Small electrodes are placed on the surface of the skin over the muscle group(s) of interest. They transmit the detected electrical impulses to a computer that displays a graphical representation of the voltage amplitude readings. Great caution is needed when reading studies that use EMG as a primary outcome, due to the complicated nature of sEMG and the lack of longitudinal work 60. Amplitudes measured with sEMG are the most frequently reported metric in EMG experiments. They are a measure of excitation, not a direct measure of activation, and sEMG amplitudes by themselves cannot be used to infer motor unit recruitment or rate coding 60. In other words, sEMG cannot tell us whether a certain exercise is recruiting more muscle fibers or whether the activated fibers are firing at a faster rate.
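sEMG amplitude is commonly summarized as a root-mean-square (RMS) value. For comparisons, it is often expressed as a percentage of the amplitude recorded during a maximal voluntary contraction (%MVC) of the same muscle. The sketch below assumes raw samples that have already been filtered. Real pipelines also involve filtering, windowing, and careful electrode placement, all of which are omitted here. Function names and sample values are hypothetical. Note that even a normalized amplitude is still a measure of excitation, not of force, recruitment, or future hypertrophy.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a window of sEMG samples (e.g., mV)."""
    if not samples:
        raise ValueError("empty window")
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def percent_mvc(task_samples, mvc_samples):
    """Express task amplitude relative to the MVC amplitude of the same muscle."""
    return 100.0 * rms(task_samples) / rms(mvc_samples)


# Hypothetical windows from the same electrode site in the same session.
task = [0.10, -0.12, 0.09, -0.11, 0.10, -0.08]
mvc = [0.40, -0.45, 0.38, -0.42, 0.44, -0.39]
print(f"{percent_mvc(task, mvc):.1f} %MVC")
```

Normalizing to the same muscle and session is what makes within-subject, within-muscle comparisons between exercises more meaningful than comparing raw amplitudes.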
Additionally, the passive properties of muscle allow force to be produced with a corresponding sEMG amplitude reading of zero. This indicates that sEMG amplitudes cannot reliably predict muscle force during dynamic tasks 60, 61. It has been assumed that greater sEMG amplitudes during certain exercises predict long-term adaptations in strength and muscle hypertrophy, but this is currently unknown. Such conclusions should be interpreted with caution, because sEMG cannot account for muscle properties or the many other variables that affect hypertrophy and strength adaptations 60. Factors other than muscular effort can also influence sEMG amplitudes, such as muscle length, contraction type, contraction speed, tissue conductivity, and electrode placement. If variables like these are not accounted for, comparisons between different exercises can be inappropriate 60. The take-home message is that EMG data can be very messy and can be misconstrued to support inappropriate conclusions. That’s not to say that all EMG studies or data are useless. The confounding variables mentioned above need to be controlled when EMG is used as a primary outcome. Exercises can be compared with a within-subject, within-muscle design, comparing results from the same person and the same muscle within the same testing session. Such a design may provide more reliable data on muscle excitation and force production, provided amplitude signals are appropriately normalized and other variables are controlled 60. While EMG data can be useful for understanding the neuromuscular system, the conclusions and recommendations drawn from them are currently limited by the lack of longitudinal studies 60.

Strength Testing

Strength is a skill and is highly specific, not only to the type of exercise but also to the intensity and rep range you consistently train at.
This can be problematic when attempting to measure and compare strength adaptations between groups exposed to different training programs.

Repetition Maximum (RM)

The repetition maximum (RM) is the most commonly used strength test you’ll encounter in the literature. An RM test can be used with any number of repetitions to assess the maximum amount of weight a subject can lift for that number of repetitions. Most often, a 1RM test is used. This type of test involves a certain level of subjectivity, because load selection depends on the research staff supervising it. If researchers over- or underestimate the load increases, a subject may fail to reach a true maximum, falling short because of fatigue from repeated maximal attempts. As long as proper standardization is applied with an established protocol, this subjectivity can be minimized. Most protocols involve a few warm-up sets with progressively heavier weight in each set. After a few minutes of rest between sets, the subject attempts a near-maximal lift. After each successful attempt, the weight is increased at the researcher’s discretion. A skilled researcher should find a subject’s 1RM within three to five attempts. Some researchers suggest that when a 1RM is the only measure of strength in a study, some strength adaptations may be overlooked. This is because the 1RM is a skill that improves most when training closely reflects the 1RM test 62. For example, a group that trains closer to its 1RM during a training period would theoretically perform better on a 1RM test than a group that trains further from its 1RM. That makes it difficult to compare adaptations between the groups. For this reason, it may be more appropriate to include a test that both groups are inexperienced at performing. Tests using dynamometers can accomplish this and provide a more objective measure.

Dynamometry

There are various types of dynamometers used in research.
They range from spring- or hydraulic-loaded dynamometers to highly sophisticated computerized dynamometers that can isolate various types of contractions and force outputs. Spring and hydraulic dynamometers are usually used to measure forearm isometric strength in the form of a handgrip dynamometer. These are simply a handle that you squeeze and hold for a few seconds to measure the pounds or kilograms of force you generate. The more sophisticated computerized devices offer more functions and provide a more comprehensive evaluation of muscle function and strength. These range from simple handgrip dynamometers to leg extension and mechanized squat devices. Computerized devices, like the knee extension dynamometer, can tightly control the range of motion, the duration of each rep, and the force applied throughout different ranges of motion. These devices can measure a number of variables, including maximal voluntary isometric contraction, rate of isometric force development, power, torque, and velocity. Generally, you'll see rate of force development, maximal voluntary contraction, and peak power reported for various contraction types (eccentric, concentric, isometric, etc.).

Psychometrics

Psychometrics measure psychological constructs such as moods, behaviors, and personality traits. These are measured using various types of questionnaires, surveys, and interviews. These types of measures fall under descriptive research and are widely used in education and the behavioral sciences 3. Questionnaires and surveys can use open-ended questions, closed questions, or a combination of the two. Open-ended questions give subjects more opportunity to elaborate or provide detailed information about their feelings or ideas, for example, "Why did you struggle to adhere to your diet?"
While these types of questions can gather a lot of detailed information, they take considerable time, and the answers are difficult to score or compare between subjects or groups. Closed questions require a specific response and are commonly yes-or-no questions. These questionnaires are faster to administer and score than open-ended questionnaires, and with an appropriate scoring system, closed-question surveys can be used to compare answers between subjects or groups of subjects. Closed questions also come in other formats, such as scaled, ranking, or categorical questions. A very common type of scaled question is the visual analog scale (VAS), a line anchored at each end by opposing answer choices, on which the subject marks the point that reflects the strength of their agreement or disagreement with a statement 3.

The problem with some questionnaires and surveys is how the questions are worded. Some questions may be worded so that subjects feel there is a "right" or "wrong" answer and change their true response to try to satisfy the questionnaire. So it's important that questions are worded in a way that doesn't bias the subject toward a certain answer; even the order in which the questions are placed can play a role. Also, the more often a subject repeats a specific survey or questionnaire, the more likely their answers are to be biased, because they become familiar with it. For this reason, it's important to allow a proper amount of time between testing points for questionnaires and surveys. Questionnaires are not required to be validated before they are used in an experiment, but results from a validated questionnaire carry greater weight.
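The scoring of closed questions described above can be sketched in a few lines of Python. This is a minimal illustration under assumptions that do not come from the text: a hypothetical four-item questionnaire on a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree), one item (index 2) worded in the opposite direction and therefore reverse-scored, each subject's score taken as their mean item score, and a VAS mark converted to a 0 to 100 score from its distance to the left anchor on a 100 mm line. Validated questionnaires come with their own scoring instructions, which should be followed instead.

```python
from statistics import mean

# Hypothetical 5-point Likert scale: 1 = strongly disagree ... 5 = strongly agree.
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_score(response: int) -> int:
    """Flip a reverse-keyed item so a higher score always means more of the construct."""
    return SCALE_MIN + SCALE_MAX - response


def score_likert(responses: list[int], reverse_items: set[int]) -> float:
    """Mean item score for one subject; reverse_items holds 0-based item indices."""
    for r in responses:
        if not SCALE_MIN <= r <= SCALE_MAX:
            raise ValueError(f"response {r} is outside {SCALE_MIN}-{SCALE_MAX}")
    adjusted = [reverse_score(r) if i in reverse_items else r
                for i, r in enumerate(responses)]
    return mean(adjusted)


def score_vas(mark_mm: float, line_mm: float = 100.0) -> float:
    """Convert the distance of a VAS mark from the left anchor into a 0-100 score."""
    if not 0.0 <= mark_mm <= line_mm:
        raise ValueError("the mark must lie on the line")
    return 100.0 * mark_mm / line_mm


# Hypothetical data: each inner list is one subject's answers to the four items.
group_a = [[4, 5, 2, 4], [3, 4, 1, 5]]
group_b = [[2, 3, 4, 2], [3, 2, 5, 3]]
reverse = {2}  # item 3 (index 2) is assumed to be reverse-worded

print("Group A mean score:", mean(score_likert(s, reverse) for s in group_a))
print("Group B mean score:", mean(score_likert(s, reverse) for s in group_b))
print("VAS score for a mark at 63 mm:", score_vas(63.0))
```

Reverse-scoring before averaging is what makes the group means comparable: without it, a subject who strongly agrees with every positively worded item would have that agreement partly cancelled by the single negatively worded item.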
Generally, many questionnaires used in exercise and nutrition science have been validated in psychology or other medical fields, which indicates they are acceptable for use, but ideally these questionnaires should be developed specifically for the sample being studied. There are many different psychometric questionnaires and surveys available. You'll most often see a number of different Likert or VAS scales, along with others like the Profile of Mood States questionnaire, the Three-Factor Eating Questionnaire, or the Pittsburgh Sleep Quality Index. Psychometrics can be a very useful, cost-effective tool and can easily be combined with more objective measures to provide an in-depth evaluation.

Once again, we'd like to reiterate that this is not a complete list of all the measurements available in exercise and nutrition research. There are many others, but these are the ones you will most frequently encounter when reading nutrition and exercise science publications. When measuring any outcome, it's best to use a combination of measures whenever possible. This provides a more comprehensive evaluation of the outcomes of interest and helps control for more variables. Obviously, that's not always possible, and some labs have to work with the equipment they have available. This means that, as consumers of research, we need to critically evaluate the methods used in experiments and take them for what they're worth. Again, just because a study uses a less valid or reliable method doesn't mean we should throw it out. Instead, we can treat it as a small piece of evidence and compare it with other studies.

Closing Remarks

There is no substitute for spending years in a lab actually working on studies, but hopefully this handbook has provided insight into the research process.
Participating in research is really the only way to appreciate and understand how much goes into conducting and publishing a study. Unfortunately, not everyone has the opportunity to pursue higher education, which was the primary motive for this handbook: to share our knowledge and understanding of research based on our experience conducting studies in nutrition and exercise science. Keep in mind that this is a relatively short, non-comprehensive guide to what goes into conducting and publishing a study. Please take a look at our references, which include some excellent books worth investing in if you want to learn more about the research process. If you want to be more involved in research but don't have the opportunity to attend a university, many labs in your area would be grateful for another helping hand. Simply reaching out to a professor in your area who conducts research that interests you can help guide you in the right direction.

We hope you'll consider subscribing to our research review as part of the [Biolayne.com](http://Biolayne.com) membership. Each month we review 5 scientific studies related to training, nutrition, supplements, muscle growth, fat loss, and other health and fitness topics. Our goal is to provide our own opinions and criticisms and dig into the important nuances, while also summarizing the studies in a digestible format for the non-scientist. Our mission for this review is to establish a resource that allows individuals to stay up to date on current research and aware of the general consensus on specific topics without a major time commitment.

References

1. Oxford University Press. (n.d.). Research English definition and meaning. Lexico Dictionaries | English.
2. Tuckman, B. W., & Harper, B. E. (2012). Conducting educational research. Rowman & Littlefield Publishers.
3. Thomas, J. R., Nelson, J. K., & Silverman, S. J. (2015). Research methods in physical activity. Human Kinetics.
4. Hopkins, W. G. (2000). Quantitative research design. Sportscience, 4(1), 1-8.
5. Draper, C. E. (2009). Role of qualitative research in exercise science and sports medicine. South African Journal of Sports Medicine, 21(1), 27-28.
6. Barré-Sinoussi, F., & Montagutelli, X. (2015). Animal models are essential to biological research: issues and perspectives. Future Science OA, 1(4).
7. Baxter, P., & Jack, S. (2008). Qualitative Case Study Methodology: Study Design and Implementation for Novice Researchers. The Qualitative Report, 13(4), 544-559.
8. Cooper, H., Hedges, L. V., & Valentine, J. C. (Eds.). (2019). The handbook of research synthesis and meta-analysis. Russell Sage Foundation.
9. Glass, G. V. (1976). Primary, secondary, and meta-analysis of research. Educational Researcher, 5(10), 3-8.
10. Ware, W. B., Ferron, J. M., & Miller, B. M. (2013). Introductory statistics: A conceptual approach using R. Routledge.
11. Lakens, D. (2013). Calculating and reporting effect sizes to facilitate cumulative science: a practical primer for t-tests and ANOVAs. Frontiers in Psychology, 4, 863.
12. Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Routledge.
13. King, L. (2018). Preparing better graphs. Journal of Public Health and Emergency, 2(1).
14. Morton, R. W., Murphy, K. T., McKellar, S. R., Schoenfeld, B. J., Henselmans, M., Helms, E., Aragon, A. A., Devries, M. C., Banfield, L., Krieger, J. W., & Phillips, S. M. (2018). A systematic review, meta-analysis and meta-regression of the effect of protein supplementation on resistance training-induced gains in muscle mass and strength in healthy adults. British Journal of Sports Medicine, 52(6), 376–384.
15. Cumming, G., Fidler, F., & Vaux, D. L. (2007). Error bars in experimental biology. The Journal of Cell Biology, 177(1), 7–11.
16. Mlinarić, A., Horvat, M., & Šupak Smolčić, V. (2017). Dealing with the positive publication bias: Why you should really publish your negative results. Biochemia Medica, 27(3), 030201.
17. Head, M. L., Holman, L., Lanfear, R., Kahn, A. T., & Jennions, M. D. (2015). The extent and consequences of p-hacking in science. PLoS Biology, 13(3), e1002106.
18. Krleža-Jerić, K. (2014). Sharing of clinical trial data and research integrity. Periodicum Biologorum, 116(4), 337-339.
19. Moon, J. R., Eckerson, J. M., Tobkin, S. E., Smith, A. E., Lockwood, C. M., Walter, A. A., Cramer, J. T., Beck, T. W., & Stout, J. R. (2009). Estimating body fat in NCAA Division I female athletes: a five-compartment model validation of laboratory methods. European Journal of Applied Physiology, 105(1), 119–130.
20. Smith-Ryan, A. E., Mock, M. G., Ryan, E. D., Gerstner, G. R., Trexler, E. T., & Hirsch, K. R. (2017). Validity and reliability of a 4-compartment body composition model using dual energy x-ray absorptiometry-derived body volume. Clinical Nutrition (Edinburgh, Scotland), 36(3), 825–830.
21. McArdle, W. D., Katch, F. I., & Katch, V. L. (2015). Exercise Physiology: Nutrition, Energy, and Human Performance. United Kingdom: Wolters Kluwer Health/Lippincott Williams & Wilkins.
22. Campbell, B. (Ed.). (2013). Sports nutrition: Enhancing athletic performance. CRC Press.
23. Rooyackers, O. E., & Nair, K. S. (1997). Hormonal regulation of human muscle protein metabolism. Annual Review of Nutrition, 17, 457–485.
24. Afting, E. G., Bernhardt, W., Janzen, R. W., & Röthig, H. J. (1981). Quantitative importance of non-skeletal-muscle N tau-methylhistidine and creatine in human urine. The Biochemical Journal, 200(2), 449–452.
25. Katsanos, C. S., Chinkes, D. L., Sheffield-Moore, M., Aarsland, A., Kobayashi, H., & Wolfe, R. R. (2005). Method for the determination of the arteriovenous muscle protein balance during non-steady-state blood and muscle amino acid concentrations. American Journal of Physiology. Endocrinology and Metabolism, 289(6), E1064–E1070.
26. Kerr, A., Slater, G., Byrne, N., & Chaseling, J. (2015). Validation of Bioelectrical Impedance Spectroscopy to Measure Total Body Water in Resistance-Trained Males. International Journal of Sport Nutrition and Exercise Metabolism, 25(5), 494–503.
27. Schoenfeld, B. J., Nickerson, B. S., Wilborn, C. D., Urbina, S. L., Hayward, S. B., Krieger, J., Aragon, A. A., & Tinsley, G. M. (2020). Comparison of Multifrequency Bioelectrical Impedance vs. Dual-Energy X-ray Absorptiometry for Assessing Body Composition Changes After Participation in a 10-Week Resistance Training Program. Journal of Strength and Conditioning Research, 34(3), 678–688.
28. Graybeal, A. J., Moore, M. L., Cruz, M. R., & Tinsley, G. M. (2020). Body Composition Assessment in Male and Female Bodybuilders: A 4-Compartment Model Comparison of Dual-Energy X-Ray Absorptiometry and Impedance-Based Devices. Journal of Strength and Conditioning Research, 34(6), 1676–1689.
29. Haas, V., Schütz, T., Engeli, S., Schröder, C., Westerterp, K., & Boschmann, M. (2012). Comparing single-frequency bioelectrical impedance analysis against deuterium dilution to assess total body water. European Journal of Clinical Nutrition, 66(9), 994–997.
30. Moon, J. R. (2013). Body composition in athletes and sports nutrition: an examination of the bioimpedance analysis technique. European Journal of Clinical Nutrition, 67 Suppl 1, S54–S59.
31. Matias, C. N., Santos, D. A., Gonçalves, E. M., Fields, D. A., Sardinha, L. B., & Silva, A. M. (2013). Is bioelectrical impedance spectroscopy accurate in estimating total body water and its compartments in elite athletes? Annals of Human Biology, 40(2), 152–156.
32. Cole, K. S. (1940). Permeability and impermeability of cell membranes for ions. In Cold Spring Harbor Symposia on Quantitative Biology. Cold Spring Harbor Laboratory Press.
33. Matthie, J. R. (2008). Bioimpedance measurements of human body composition: critical analysis and outlook. Expert Review of Medical Devices, 5(2), 239-261.
34. Siri, W. E., Brozek, J., & Henschel, A. (1961). Techniques for measuring body composition. Washington, DC: National Academy of Sciences, 223-224.
35. Withers, R. T., Craig, N. P., Bourdon, P. C., & Norton, K. I. (1987). Relative body fat and anthropometric prediction of body density of male athletes. European Journal of Applied Physiology and Occupational Physiology, 56(2), 191–200.
36. Orphanidou, C., McCargar, L., Birmingham, C. L., Mathieson, J., & Goldner, E. (1994). Accuracy of subcutaneous fat measurement: comparison of skinfold calipers, ultrasound, and computed tomography. Journal of the American Dietetic Association, 94(8), 855–858.
37. van Marken Lichtenbelt, W. D., Hartgens, F., Vollaard, N. B., Ebbing, S., & Kuipers, H. (2004). Body composition changes in bodybuilders: a method comparison. Medicine and Science in Sports and Exercise, 36(3), 490–497.
38. Evans, E. M., Saunders, M. J., Spano, M. A., Arngrimsson, S. A., Lewis, R. D., & Cureton, K. J. (1999). Body-composition changes with diet and exercise in obese women: a comparison of estimates from clinical methods and a 4-component model. The American Journal of Clinical Nutrition, 70(1), 5–12.
39. Peterson, M. J., Czerwinski, S. A., & Siervogel, R. M. (2003). Development and validation of skinfold-thickness prediction equations with a 4-compartment model. The American Journal of Clinical Nutrition, 77(5), 1186–1191.
40. Kuehne, T. E., Yitzchaki, N., Jessee, M. B., Graves, B. S., & Buckner, S. L. (2019). A comparison of acute changes in muscle thickness between A-mode and B-mode ultrasound. Physiological Measurement, 40(11), 115004.
41. Wagner, D. R. (2013). Ultrasound as a tool to assess body fat. Journal of Obesity, 2013, 280713.
42. Schoenfeld, B. J., Aragon, A. A., Moon, J., Krieger, J. W., & Tiryaki-Sonmez, G. (2017). Comparison of amplitude-mode ultrasound versus air displacement plethysmography for assessing body composition changes following participation in a structured weight-loss programme in women. Clinical Physiology and Functional Imaging, 37(6), 663–668.
43. Wang, Z., Pi-Sunyer, F. X., Kotler, D. P., Wielopolski, L., Withers, R. T., Pierson, R. N., Jr, & Heymsfield, S. B. (2002). Multicomponent methods: evaluation of new and traditional soft tissue mineral models by in vivo neutron activation analysis. The American Journal of Clinical Nutrition, 76(5), 968–974.
44. Friedl, K. E., DeLuca, J. P., Marchitelli, L. J., & Vogel, J. A. (1992). Reliability of body-fat estimations from a four-compartment model by using density, body water, and bone mineral measurements. The American Journal of Clinical Nutrition, 55(4), 764–770.
45. Wilson, J. P., Strauss, B. J., Fan, B., Duewer, F. W., & Shepherd, J. A. (2013). Improved 4-compartment body-composition model for a clinically accessible measure of total body protein. The American Journal of Clinical Nutrition, 97(3), 497–504.
46. Nickerson, B. S., & Tinsley, G. M. (2018). Utilization of BIA-Derived Bone Mineral Estimates Exerts Minimal Impact on Body Fat Estimates via Multicompartment Models in Physically Active Adults. Journal of Clinical Densitometry: The Official Journal of the International Society for Clinical Densitometry, 21(4), 541–549.
47. Williams, J. E., Wells, J. C., Wilson, C. M., Haroun, D., Lucas, A., & Fewtrell, M. S. (2006). Evaluation of Lunar Prodigy dual-energy X-ray absorptiometry for assessing body composition in healthy persons and patients by comparison with the criterion 4-component model. The American Journal of Clinical Nutrition, 83(5), 1047–1054.
48. Clasey, J. L., Kanaley, J. A., Wideman, L., Heymsfield, S. B., Teates, C. D., Gutgesell, M. E., Thorner, M. O., Hartman, M. L., & Weltman, A. (1999). Validity of methods of body composition assessment in young and older men and women. Journal of Applied Physiology (Bethesda, Md.: 1985), 86(5), 1728–1738.
49. Kullberg, J., Brandberg, J., Angelhed, J. E., Frimmel, H., Bergelin, E., Strid, L., Ahlström, H., Johansson, L., & Lönn, L. (2009). Whole-body adipose tissue analysis: comparison of MRI, CT and dual energy X-ray absorptiometry. The British Journal of Radiology, 82(974), 123–130.
50. Tothill, P., & Hannan, W. J. (2000). Comparisons between Hologic QDR 1000W, QDR 4500A, and Lunar Expert dual-energy X-ray absorptiometry scanners used for measuring total body bone and soft tissue. Annals of the New York Academy of Sciences, 904, 63–71.
51. Haun, C. T., Vann, C. G., Roberts, B. M., Vigotsky, A. D., Schoenfeld, B. J., & Roberts, M. D. (2019). A Critical Evaluation of the Biological Construct Skeletal Muscle Hypertrophy: Size Matters but So Does the Measurement. Frontiers in Physiology, 10, 247.
52. Vigotsky, A. D., Schoenfeld, B. J., Than, C., & Brown, J. M. (2018). Methods matter: the relationship between strength and hypertrophy depends on methods of measurement and analysis. PeerJ, 6, e5071.
53. Haun, C. T., Vann, C. G., Mobley, C. B., Roberson, P. A., Osburn, S. C., Holmes, H. M., Mumford, P. M., Romero, M. A., Young, K. C., Moon, J. R., Gladden, L. B., Arnold, R. D., Israetel, M. A., Kirby, A. N., & Roberts, M. D. (2018). Effects of Graded Whey Supplementation During Extreme-Volume Resistance Training. Frontiers in Nutrition, 5, 84.
54. Ward, L. C. (2018). Human body composition: yesterday, today, and tomorrow. European Journal of Clinical Nutrition, 72(9), 1201–1207.
55. Verdijk, L. B., Gleeson, B. G., Jonkers, R. A., Meijer, K., Savelberg, H. H., Dendale, P., & van Loon, L. J. (2009). Skeletal muscle hypertrophy following resistance training is accompanied by a fiber type-specific increase in satellite cell content in elderly men. The Journals of Gerontology. Series A, Biological Sciences and Medical Sciences, 64(3), 332–339.
56. Smeulders, M. J., van den Berg, S., Oudeman, J., Nederveen, A. J., Kreulen, M., & Maas, M. (2010). Reliability of in vivo determination of forearm muscle volume using 3.0 T magnetic resonance imaging. Journal of Magnetic Resonance Imaging: JMRI, 31(5), 1252–1255.
57. Hellerstein, M., & Evans, W. (2017). Recent advances for measurement of protein synthesis rates, use of the 'Virtual Biopsy' approach, and measurement of muscle mass. Current Opinion in Clinical Nutrition and Metabolic Care, 20(3), 191–200.
58. Rantonen, P. J., Penttilä, I., Meurman, J. H., Savolainen, K., Närvänen, S., & Helenius, T. (2000). Growth hormone and cortisol in serum and saliva. Acta Odontologica Scandinavica, 58(6), 299–303.
59. West, D. W., Burd, N. A., Staples, A. W., & Phillips, S. M. (2010). Human exercise-mediated skeletal muscle hypertrophy is an intrinsic process. The International Journal of Biochemistry & Cell Biology, 42(9), 1371–1375.
60. Vigotsky, A. D., Halperin, I., Lehman, G. J., Trajano, G. S., & Vieira, T. M. (2018). Interpreting Signal Amplitudes in Surface Electromyography Studies in Sport and Rehabilitation Sciences. Frontiers in Physiology, 8, 985.
61. Roberts, T. J., & Gabaldón, A. M. (2008). Interpreting muscle function from EMG: lessons learned from direct measurements of muscle force. Integrative and Comparative Biology, 48(2), 312–320.
62. Buckner, S. L., Jessee, M. B., Mattocks, K. T., Mouser, J. G., Counts, B. R., Dankel, S. J., & Loenneke, J. P. (2017). Determining Strength: A Case for Multiple Methods of Measurement. Sports Medicine (Auckland, N.Z.), 47(2), 193–195.
63. Norton, L. E., Wilson, G. J., Layman, D. K., Moulton, C. J., & Garlick, P. J. (2012). Leucine content of dietary proteins is a determinant of postprandial skeletal muscle protein synthesis in adult rats. Nutrition & Metabolism, 9(1), 67.
64. Hall, K. D., Guo, J., Chen, K. Y., Leibel, R. L., Reitman, M. L., Rosenbaum, M., Smith, S. R., & Ravussin, E. (2019). Methodologic considerations for measuring energy expenditure differences between diets varying in carbohydrate using the doubly labeled water method. The American Journal of Clinical Nutrition, 109(5), 1328–1334.

© Copyright 2022 Biolayne Technologies LLC