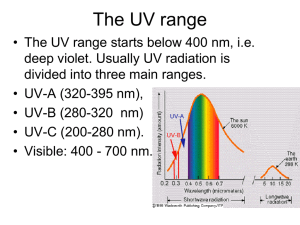

Examples of Instruments

• RADAR: short for Radio Detection and Ranging. The name comes from WWII, when radar actually used radio waves; modern-day radars use microwaves. The waves are scattered and reflected when they come into contact with something. Microwaves can pass through water droplets, and radar is generally used in active remote sensing systems. Radar is good for locating objects and measuring elevation.
• LIDAR: short for Light Detection and Ranging. It is similar to RADAR but uses laser pulses and continuous-wave lasers instead of radio waves.
• TM: stands for Thematic Mapper. It was introduced in the Landsat program and records seven bands of image data while scanning along a ground track.
• ETM+: stands for Enhanced Thematic Mapper Plus. It replaced the TM sensor on Landsat 7. Unlike TM, it has eight bands.
• MSS: stands for Multispectral Scanner. It was also introduced in the Landsat program, and each band responds to a different type of radiation, hence the name "multispectral".
• MODIS: stands for Moderate-resolution Imaging Spectroradiometer. It is on the Terra and Aqua satellites. It measures cloud properties and radiative energy flux.
• CERES: stands for Clouds and the Earth's Radiant Energy System. It is on the Terra and Aqua satellites. It measures broadband radiative energy flux.
• SeaWiFS (Sea-viewing Wide Field-of-View Sensor): has eight spectral bands of very narrow wavelength ranges. It monitors ocean primary production and phytoplankton processes, ocean influences on climate processes (heat storage and aerosol formation), and the cycles of carbon, sulfur, and nitrogen.
Other Instruments

• ALI: Advanced Land Imager
• ASTER: Advanced Spaceborne Thermal Emission and Reflection Radiometer
• ATSR: Along Track Scanning Radiometer
• AVHRR: Advanced Very High Resolution Radiometer (used with NOAA)
• AVIRIS: Airborne Visible/Infrared Imaging Spectrometer
• CCD: Charge Coupled Devices
• CZCS: Coastal Zone Color Scanner
• GPS: Global Positioning System
• HRV: High Resolution Visible sensor
• LISS-III: Linear Imaging Self-Scanning Sensor
• MESSR: Multispectral Electronic Self-Scanning Radiometer
• MISR: Multi-angle Imaging SpectroRadiometer
• MSR: Microwave Scanning Radiometer
• RAR: Real Aperture Radar
• VTIR: Visible and Thermal Infrared Radiometer
• WiFS: Wide Field Sensor

Active Sensing vs. Passive Sensing

Active sensing occurs when the satellite produces radiation on its own and then senses the backscatter. This is useful since it does not depend on outside radiation, but it uses up energy more quickly. Examples of active sensors are a laser fluorosensor and synthetic aperture radar (SAR). These instruments operate even at night, because they do not rely on reflected radiation (which is usually solar in origin).

Passive sensing, on the other hand, senses naturally available radiation to create a picture. It does not need to use energy to produce radiation, but it depends on outside radiation being present. If there is little or no outside radiation, the satellite cannot function well. One exception is thermal infrared (TIR) sensors, which actually obtain better information at night because of the natural emission of thermal energy.

EOS

Satellites especially likely to appear on tests are those that come from the Earth Observing System (EOS). The EOS is a series of NASA satellites designed to observe the Earth's land, atmosphere, biosphere, and hydrosphere. The first EOS satellite was launched in 1997.

A-Train, or EOS-PM: an EOS satellite constellation planned to have seven satellites working together in Sun-synchronous (SS) orbit.
Their compiled images have resulted in high-resolution images of the Earth's surface. The A-Train (also known as the Afternoon Constellation because of its south-to-north equatorial crossing time of 1:30 PM) was planned to have seven satellites. Four satellites currently fly in the constellation.

• Active:
  o OCO-2: studies global atmospheric carbon dioxide. It is a replacement for the failed OCO.
  o GCOM-W1 "SHIZUKU": studies the water cycle.
  o Aqua: studies the water cycle, such as precipitation and evaporation.
  o Aura: studies Earth's ozone layer, air quality, and climate.
• Past:
  o PARASOL: studied radiative and microphysical properties of clouds and air particles; moved to lower orbit and deactivated in 2013.
  o CloudSat: studies the altitude and other properties of clouds; moved to lower orbit following partial mechanical failure in February 2018.
  o CALIPSO: studies clouds and air particles, and their effect on Earth's climate; moved to CloudSat's orbit in September 2018. CloudSat and CALIPSO now make up the C-Train.
• Failed to achieve orbit:
  o OCO (Orbiting Carbon Observatory): was intended to study atmospheric carbon dioxide.
  o Glory: was to study radiative and microphysical properties of air particles.
  Both failures occurred because of launch vehicle failure.

Morning Constellation, or EOS-AM, is a second constellation in the EOS. It is so named because of its 10:30 AM north-to-south equatorial crossing. Currently there are three satellites in this constellation - the fourth, EO-1, was decommissioned in March 2017.

Landsat: A series of 9 satellites using multiple spectral bands. Three are operational today: Landsat 7, Landsat 8 (launched in February 2013), and Landsat 9 (launched in September 2021). Data from Landsat 9 will be available through USGS in early 2022. These are some of the most commonly tested satellites. The name Landsat is a mixture of the two words "land" and "satellite".

Terra: Provides global data on the atmosphere, land, and water.
Its scientific focus includes atmospheric composition, biogeochemistry, climate change and variability, and the water and energy cycle.

Other Satellites

There are other notable satellites that may appear on exams and are not affiliated with the EOS.

• GOES (Geostationary Operational Environmental Satellite) System: 2 weather satellites in geostationary orbit at about 36,000 km. It is partially organized by NASA, in cooperation with NOAA.
• MOS: Marine Observation Satellite
• SeaSat: SEA SATellite. This satellite is especially significant as the first satellite focused on the oceans, as well as the first satellite to carry synthetic aperture radar (SAR).
• SPOT: Système Pour l'Observation de la Terre. This is a series of 7 CNES satellites similar to the Landsat program, with a more commercial focus.

Image Processing

Satellite data is sent from the satellite to the ground in a raw digital format. The smallest unit of data is represented by a binary number. This data is strung together into a digital stream and applied to a single dot, or pixel (short for "picture element"), which gets a value known as a Digital Number (DN). Each DN corresponds to a particular shade of gray that was detected by the satellite. These pixels, when arranged together in the correct order, form an image of the target where the varying shades of gray represent the varying energy levels detected on the target. The pixels are arranged into a matrix. This data is then either stored in the remote sensing platform temporarily or transmitted to the ground. The data can then be manipulated mathematically for various reasons, which will be discussed below.

The human eye can only distinguish between about 16 shades of gray in an image, but it is able to distinguish between millions of colors. Thus, a common image enhancement technique is to assign specific DN values to specific colors, increasing the contrast.
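As a rough illustration of assigning DN values to colors, the sketch below maps ranges of 8-bit DNs to fixed RGB colors. The thresholds, the colors, and the names `COLOR_TABLE`, `dn_to_color`, and `colorize` are assumptions made up for this example; real color tables depend on the sensor and the feature being highlighted.

```python
# Hypothetical color table: each entry is (highest DN in the range, RGB color).
# The thresholds and colors are illustrative assumptions, not a standard.
COLOR_TABLE = [
    (63, (0, 0, 128)),     # DN 0-63    -> dark blue
    (127, (0, 160, 0)),    # DN 64-127  -> green
    (191, (230, 200, 0)),  # DN 128-191 -> yellow
    (255, (200, 0, 0)),    # DN 192-255 -> red
]


def dn_to_color(dn):
    """Return the RGB color assigned to a single 8-bit Digital Number."""
    if not 0 <= dn <= 255:
        raise ValueError("expected an 8-bit DN between 0 and 255")
    for upper_bound, color in COLOR_TABLE:
        if dn <= upper_bound:
            return color


def colorize(dn_matrix):
    """Map every pixel DN in a row-major matrix to its assigned color."""
    return [[dn_to_color(dn) for dn in row] for row in dn_matrix]


# A tiny 2x2 grayscale "image" of DNs.
gray = [[10, 70], [130, 250]]
colored = colorize(gray)
```

Neighboring gray levels that the eye would struggle to separate (for example DN 120 and DN 135) land in different color classes here, which is the contrast gain the text describes.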
A true color image is one for which the colors have been assigned to DN values that represent the actual spectral range of the colors used in the image. A photograph is an example of a true color image. False color (FC) is a technique by which colors are assigned to spectral bands that do not equate to the spectral range of the selected color. This allows an analyst to highlight particular features of interest using a color scheme that makes the features stand out. Composites are images where multiple individual satellite images have been combined to produce a new image. This process is used to create more detailed images that take multiple factors into account, as well as to find patterns that would not have been revealed in a single image. It also helps to create larger images than the satellite itself can make. This is because each satellite covers a specific swath, or area imaged by a satellite with a fixed width. When these swaths are put together into a composite, a larger area is imaged. Traditionally, composites were made by merging colors. Three black and white transparencies of the same image are first made. Each represents a different spectral band - blue, green, and red. Shine white light through each one onto a screen. Then, each band is projected through a filter of the same color - blue band through a blue filter, green through a green, red through a red. Because the blue images are clear on the blue spectral band image, they'll appear blue on the composite. When the three images are aligned, the resulting image will have the natural color (or very close) of the original. This process is called "color additive viewing", and red, green, and blue are often known as additive colors because they add together to create new colors. The earliest color films would record multispectral scenes in multicolored films, which were then developed and merged into one colored film image. Not all composites are true colored. 
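The digital counterpart of color additive viewing is to stack three single-band DN matrices into one image of (R, G, B) pixels. The sketch below assumes each band is a small row-major list of 8-bit DNs; the function name `composite` and the sample values are hypothetical.

```python
def composite(red_channel, green_channel, blue_channel):
    """Stack three equally sized DN matrices into a matrix of (R, G, B) pixels.

    Whatever band is passed as red_channel is displayed in red, and so on,
    so the same function builds true color and other band assignments.
    """
    if not (len(red_channel) == len(green_channel) == len(blue_channel)):
        raise ValueError("bands must have the same number of rows")
    image = []
    for r_row, g_row, b_row in zip(red_channel, green_channel, blue_channel):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("bands must have the same number of columns")
        image.append(list(zip(r_row, g_row, b_row)))
    return image


# Made-up DNs for a 1x2 scene: left pixel is vegetation, right pixel is bare soil.
red = [[30, 120]]
green = [[60, 110]]
blue = [[25, 90]]
nir = [[220, 140]]

true_color = composite(red, green, blue)  # each band shown in its own color
nir_as_red = composite(nir, red, green)   # a different band routed to the red channel
```

In `true_color` the vegetation pixel is dominated by green, while in `nir_as_red` the same pixel is dominated by the red channel, because the band assignment rather than the scene has changed.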
A False Color Composite (FCC) results when a band is displayed in a color other than its own, for example if the blue band DNs were set to correspond to shades of red. One extremely popular FCC combination assigns red colors to the NIR image. Since healthy vegetation reflects strongly in the NIR region of the EM spectrum, an FCC using this combination displays areas with healthy vegetation as red. This also differentiates natural features from artificial ones: a football field made up of healthy grass has a strong red color, but a football field composed of Astroturf or other artificial substances will show up as a duller red, or even dark brown.

Common composites:

• True-color composite - useful for interpreting man-made objects. Simply assign the red, green, and blue bands to their respective colors in the image.
• Blue-near IR-mid IR, where the blue channel uses visible blue, the green channel uses near-infrared (so vegetation stays green), and mid-infrared is shown as red. Such images show water depth, vegetation coverage, soil moisture content, and the presence of fires, all in a single image.
  o Near-IR is usually assigned to red on the image; thus, vegetation often appears bright red in false color images, rather than green, because healthy vegetation reflects a lot of near-IR radiation. This can also be used in identifying urban/artificial areas.

Contrast refers to the difference in relative brightness between an item and its surroundings as seen in the image. A particular feature is easily detected in an image when the contrast between it and its background is high. However, when the contrast is low, an item might go undetected in an image.

Resolution is a property of an image that describes the level of detail that can be discerned from it. This is important, as images with higher resolution show more detail. There are several types of resolution that are important to remote sensing.
One of these is spatial resolution, which is the smallest detail a sensor can detect. Since the smallest element in a satellite image is a pixel, spatial resolution describes the area on the Earth's surface represented by each pixel. For example, in a weather satellite image that has a resolution of 1 km, each pixel represents the brightness of an area that is 1 km by 1 km. Other types of resolution include spectral resolution, or the ability of a sensor to distinguish between fine wavelength intervals; radiometric resolution, which is the ability of a sensor to discriminate very small differences in energy; and temporal resolution, or the time between two successive views of the same area.

Snell's law describes how light refracts when it enters a new medium. Light travels slower through every non-vacuum medium because it is absorbed, re-emitted, and scattered by particles as it travels through. The index of refraction of a medium can be calculated by dividing the speed of light in a vacuum by the speed of light in the medium. These indices are used in Snell's law:

$$n_1 \sin(x_1) = n_2 \sin(x_2)$$

Here x1 is the angle of the incident radiation and n1 is the refractive index of the medium the light is coming from; x2 is the angle at which the light travels once it enters the new medium and n2 is the refractive index of the new medium. The critical angle is the angle of incidence at which the refracted angle is 90°; it exists only when light passes into a medium with a lower refractive index. Light striking the boundary at any angle greater than the critical angle is reflected rather than refracted. Common refractive indices include water (1.33), glass (1.52), and diamond (2.42). Air is rounded to 1.

Electromagnetic radiation (EMR) is the most common energy source for remote sensing. It consists of an electric field and a magnetic field that are perpendicular to each other and to the direction of travel, and it travels at the speed of light. This is important to remote sensing because it is how sensors detect data about the objects a satellite is studying.
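Going back to Snell's law and the critical angle, a short numerical sketch may help. It uses the refractive indices quoted above (air 1, water 1.33); the function names `refraction_angle` and `critical_angle` are made up for this example, and angles are measured from the normal in degrees.

```python
import math


def refraction_angle(n1, x1_deg, n2):
    """Solve n1*sin(x1) = n2*sin(x2) for x2, in degrees.

    Returns None when no refracted ray exists (total internal reflection).
    """
    sin_x2 = n1 * math.sin(math.radians(x1_deg)) / n2
    if sin_x2 > 1.0:
        return None
    return math.degrees(math.asin(sin_x2))


def critical_angle(n1, n2):
    """Angle of incidence (degrees) at which the refracted angle is 90 degrees.

    Only defined when light goes from a higher index to a lower one.
    """
    if n1 <= n2:
        return None
    return math.degrees(math.asin(n2 / n1))


air_to_water = refraction_angle(1.0, 30.0, 1.33)   # roughly 22 degrees
water_to_air_limit = critical_angle(1.33, 1.0)     # roughly 48.8 degrees
beyond_limit = refraction_angle(1.33, 60.0, 1.0)   # None: the light is reflected
```

Light entering the denser medium bends toward the normal (30° becomes about 22°), while light leaving water at 60° exceeds the critical angle and is not transmitted at all.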
Radiation is an important part of remote sensing: different materials respond to radiation in different ways, and this can be used to identify objects. One example of this is scattering (or atmospheric scattering), where particles in the atmosphere redirect radiation. There are three types: Rayleigh, Mie, and non-selective. This scattering is used to identify the presence and quantity of certain gases in the atmosphere. Transmission, by contrast, is when radiation passes through a target, indicating that the target does not affect that particular wavelength.

There are several types of electromagnetic energy that can be emitted, distinguished by their wavelength. All of them are found in the electromagnetic spectrum (EMS). Gamma rays and x-rays cannot be used for remote sensing because they are absorbed by the Earth's atmosphere: in general, the shorter the wavelength (and the greater the frequency), the more absorption occurs. Ultraviolet radiation is not useful either because it is blocked by the ozone layer. Visible light allows satellites to detect colors a human eye would see. Some of these satellites are panchromatic, meaning they are sensitive to all wavelengths of visible light. Near Infrared (NIR), the region just past the visible portion of the spectrum, is useful for monitoring vegetation, as healthy vegetation reflects much NIR. Short Wave Infrared (SWIR), a region beyond NIR, is useful for determining the spectral signature of objects. A spectral signature is the characteristic reflectance of radiation by a material across the spectrum. Each object has a unique spectral signature. Thermal Infrared (TIR) is the kind of IR we perceive as "heat". The Earth naturally emits TIR, so TIR remote sensing usually involves passively detecting radiation in this region of the spectrum. This is useful for determining temperatures of objects. Microwaves are used in radar.
Different radars utilize different wavelengths, which range from relatively shortwave regions, such as the W-band, to longwave regions such as the L-band. Radars are very good at penetrating foliage and, in the case of longwave radars, the ground to a certain extent. Microwaves also reveal a lot about the properties of a surface, such as its dielectric constant and moisture.

In physics, a blackbody is an ideal object which absorbs and re-emits 100% of all incident radiation. The spectral signature of a blackbody is modeled by a blackbody curve, determined by the Planck function. The blackbody curve depends on temperature. In practice, blackbodies do not exist; instead, most objects are graybodies, which emit only a certain percentage of what a blackbody would emit. This percentage is known as emissivity. Integrating a Planck function blackbody curve gives the total radiant exitance, or power emitted per unit area, of an object. Radiant exitance is specified by the Stefan-Boltzmann Law.

The peak wavelength of an object is the wavelength at which the greatest energy is emitted from the object. This is the peak of the blackbody curve. The peak wavelength of an object can be determined by Wien's Displacement Law. The dominant wavelength of an object is the wavelength which matches the perceived hue of the object. The sun appears yellow (when affected by Earth's atmosphere; it is actually white) because its dominant wavelength is in the yellow portion of the visible light region of the EM spectrum.

Albedo is, simply put, the percentage of radiation that is reflected off of an object. Albedo and emissivity are important concepts to understand and differentiate. Whereas albedo concerns incident light that is reflected from a surface, emissivity concerns blackbody radiation that is emitted from an object. In real life applications, white objects, which reflect more wavelengths, have higher albedo.
This reduces the amount of radiation that is absorbed, which in turn reduces the amount of radiation emitted.

According to the Canada Centre for Remote Sensing, whose tutorial you can find in the external links section, there are several things to look for to assist in image interpretation. These are tone, shape, size, pattern, texture, shadow, and association.

• Tone is the brightness or color of an object. It's the main way to distinguish targets from backgrounds.
• Shape is the shape of an object. Note that a straight-edged shape is usually man-made, such as agricultural or urban structures. Irregular-edged shapes are usually formed naturally.
• Size, relative or absolute, can be determined by finding common objects in images, such as trees or roads. (see Finding Area section, below)
• Pattern refers to the arrangement of objects in an image, such as the spacing between buildings in an urban setting.
• Texture is the arrangement of tone variation throughout the image.
• Shadow can help determine size and distinguish objects.
• Association refers to things that are associated with one another in photographs, which can assist interpretation, e.g. boats on a lake.

During the competition, you may be asked to analyze a picture's NDVI values. NDVI stands for "Normalized Difference Vegetation Index" and is used to describe various land types, usually to determine whether or not the image contains vegetation. The equation provided by USGS for NDVI is as follows:

NDVI = (Channel 2 - Channel 1) / (Channel 2 + Channel 1)

Channel 1 is in the red light part of the electromagnetic spectrum. In this region, chlorophyll absorbs much of the incoming sunlight. Channel 2 is in the Near Infrared part of the spectrum, where the plant's mesophyll leaf structure causes strong reflectance. You may also see the equation given like so:

$$NDVI = \frac{NIR - VIS}{NIR + VIS}$$

Generally, areas rich in vegetation will have higher positive values.
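To make the NDVI formula concrete, here is a minimal sketch that applies it to a few surface types. The reflectance values are invented for illustration and are not measurements; the function name `ndvi` and the choice to return 0.0 when both channels are zero are assumptions of this example.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - red) / (NIR + red)."""
    total = nir + red
    if total == 0:
        # Assumption for this sketch: no signal in either channel counts as 0.
        return 0.0
    return (nir - red) / total


# Hypothetical (NIR, red) reflectances for three surface types.
samples = {
    "vegetation": (0.50, 0.08),
    "bare soil": (0.30, 0.25),
    "water": (0.02, 0.05),
}

ndvi_values = {name: ndvi(nir, red) for name, (nir, red) in samples.items()}
```

With these made-up inputs the vegetation sample scores highest, bare soil gives a small positive value, and water comes out negative. Because of the normalization, the result always lies between -1 and 1 for non-negative reflectances.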
Soil tends to produce NDVI values somewhat lower than vegetation, typically small positive amounts. Bodies of water, such as lakes or oceans, will have even lower positive (or, in some cases, strongly negative) values. There are some factors that may affect NDVI values. Atmospheric conditions can have an effect on NDVI, as can the water content of soil. Clouds sometimes produce NDVI values of their own, but if they aren't thick enough to do so, they may throw off measurements considerably.

EVI, or the Enhanced Vegetation Index, was created to improve on NDVI and eliminate some of its errors. It has improved sensitivity to regions high in biomass, and it reduces the influence of the canopy background. The equation for EVI is as follows:

$$EVI = G \times \frac{NIR - RED}{NIR + C_1 \times RED - C_2 \times Blue + L}$$

Here NIR is again Near Infrared, and Red and Blue are those colors' bands. All three of these are at least partially atmospherically-corrected surface reflectances. The equation filters out canopy noise through L. C1 and C2 are the coefficients of aerosol resistance and G is the gain factor. The coefficients used by the MODIS-EVI program are the following: L = 1, C1 = 6, C2 = 7.5, and G = 2.5. It has a valid range from -10,000 to 10,000. EVI has been adopted as a standard product for the MODIS instruments on two NASA satellites, Terra and Aqua. As it factors out background noise, it is often preferred over NDVI.

Human Interaction

Human Interaction with the Earth is a large part of Remote Sensing. It emphasizes how humans affect the Earth on scales detectable by remote sensing. This interaction has previously been represented in tests as deforestation, ozone layer changes, changes in land use, retreat of glaciers, and loss of sea ice, among others. It is important to remember, however, that not all climate change processes are anthropogenic, and other climatology events may be tested as well.
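Returning briefly to the EVI equation given earlier, the following sketch evaluates it with the MODIS coefficients quoted in the text (L = 1, C1 = 6, C2 = 7.5, G = 2.5). The reflectance inputs are invented for illustration, and the function name `evi` is an assumption of this example.

```python
def evi(nir, red, blue, G=2.5, C1=6.0, C2=7.5, L=1.0):
    """Enhanced Vegetation Index with the MODIS coefficients as defaults.

    Inputs are surface reflectances for the NIR, red, and blue bands.
    """
    denominator = nir + C1 * red - C2 * blue + L
    if denominator == 0:
        raise ZeroDivisionError("EVI is undefined when the denominator is zero")
    return G * (nir - red) / denominator


# Hypothetical reflectances for a densely vegetated pixel.
dense_canopy = evi(nir=0.50, red=0.08, blue=0.04)

# Hypothetical reflectances for a sparsely vegetated pixel.
sparse_cover = evi(nir=0.30, red=0.20, blue=0.10)
```

For the first pixel the denominator is 0.50 + 0.48 - 0.30 + 1 = 1.68 and the numerator is 2.5 × 0.42 = 1.05, giving an EVI of 0.625; the sparse pixel scores much lower.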
Global Warming

When human impact on the environment is mentioned, one of the main ideas it entails is global warming. Global warming is defined as “the increase in the average temperature of Earth's near-surface air and oceans since the mid-20th century and its projected continuation”. The causes of global warming are debated, but the consensus is that the main cause is the increase in concentrations of greenhouse gases. Possible results of global warming include a rise in sea levels, a change in weather patterns, the retreat of glaciers and sea ice, species extinctions, and an increased frequency of extreme weather.

The greenhouse effect is caused by certain greenhouse gases that trap heat in the Earth’s atmosphere. The main gases, along with their percent contribution to the greenhouse effect, are water vapor (36-70%), carbon dioxide (9-26%), methane (4-9%), and ozone (3-7%). Of these, carbon dioxide is perhaps the gas most focused on as a potential human impact on the environment; thus, it is the most likely to appear on tests. Humans have increased the amounts of these and other greenhouse gases in the atmosphere during industrialization periods such as the Industrial Revolution. CFCs and nitrous oxide are among the greenhouse gases now present in the atmosphere that were not before. Different greenhouse gases are more or less powerful in forcing the greenhouse effect. Their ability to force the greenhouse effect is known as radiative forcing and is generally given as a value or a percent.

Global dimming refers to a natural process that cools the Earth. Generally speaking, global dimming is caused not by gases but by aerosols, such as sulfates and chlorates. These aerosols occur naturally as well as artificially. For example, large-scale volcanic eruptions emit a lot of sulfate into the atmosphere. The 1991 eruption of Mt. Pinatubo dropped the average global temperature by 0.5 degrees C due to the global dimming effect.
This is because these aerosols reflect incoming solar radiation very effectively. Furthermore, sulfate and chlorate aerosols in the atmosphere serve as cloud condensation nuclei, or CCNs. CCNs are particles upon which water vapor can more favorably condense, forming clouds. These clouds reflect significantly more incoming radiation than normal clouds due to the Twomey effect. This results in cooling. Chlorates, and to some degree sulfates, deplete ozone. In addition, sulfates react with water to form sulfuric acid, which precipitates as acid rain.

Carbon Cycle

The carbon cycle is the process through which carbon atoms are cycled through the environment. Carbon cycles through the atmosphere as carbon dioxide, and some carbon is dissolved into the hydrosphere. It is also taken in by plants during photosynthesis and released when the plants die. When animals feed on plants, they also take in carbon. However, the burning of fossil fuels, which come from biomatter, releases excess carbon into the atmosphere, increasing the concentration of carbon dioxide. Carbon can be stored for long periods of time in trees and soil in forest biomes, so altering this balance affects the cycle of carbon and can contribute to global warming and climate change. The resulting global warming can then affect plant growth, since slight changes in temperature or other environmental factors can kill off certain species of plants. Since there would be fewer plants remaining alive, more carbon dioxide stays in the atmosphere rather than being taken in by plants.

The carbon cycle involves what are known as carbon sources and sinks. A source emits carbon into the atmosphere or the biosphere, and a sink absorbs it from the atmosphere, storing that carbon somewhere inaccessible. Oceans, for example, are carbon sinks. In addition, phytoplankton in the oceans photosynthesize and utilize dissolved carbon dioxide to produce energy.
Plants also absorb carbon; tropical rainforests, and other biomes containing a very rich and diverse population of flora, are also carbon sinks. However, deciduous plants shed leaves and suspend photosynthesis in the winter, leading to the seasonal fluctuations observed in the Keeling Curve. Natural sources of carbon include wildfires and volcanic eruptions. However, the sources of carbon that are of most interest to scientists are usually anthropogenic - the burning of fossil fuels such as coal or petroleum, for example.

Hydrological Cycle

The hydrologic cycle, more commonly known as the water cycle, describes the cycle through which water travels. Its base is the more commonly known cycle of evaporation, condensation, and precipitation. Among smaller parts of the water cycle, water is stored as ice and snow in cold climates. It also enters the ground through infiltration, although some simply flows over it as surface runoff. The groundwater flow then takes this water to the oceans where it reenters the main cycle. Finally, some evaporation occurs as evapotranspiration in plants. Fewer plants would result in less carbon taken in, and thus more carbon dioxide in the atmosphere contributing to the greenhouse effect. Water vapor is the most prevalent greenhouse gas, and so monitoring all aspects of the water cycle also provides valuable insight and data concerning climate change and the validity of the greenhouse effect.

The ozone layer is a naturally occurring layer of ozone in the stratosphere. Ozone blocks harmful UV from reaching the surface. Ozone can interact with various gases in the atmosphere, some natural, others artificial. These gases may destroy ozone, leading to ozone depletion. Ozone depletion may be seasonal or anthropogenic. Ozone is formed from the reaction of a free oxygen atom with a molecule of oxygen. The resulting molecule is relatively unstable.
When a photon carrying sufficient energy, such as that of UV, hits the molecule, the molecule will absorb the energy and break back into its reactants. In this way, ozone shields the surface of the Earth from ionizing radiation, which would otherwise be very detrimental to life.

Ozone Depletion

Ozone depletion has been greatly accelerated by pollutants in the atmosphere. Before the "ozone hole" was discovered, many propellants and refrigerants used chlorofluorocarbons (CFCs). In the atmosphere, chlorine atoms break off from the CFC molecule. Chlorine is a very efficient catalyst in the breakdown of ozone: a single chlorine atom can degrade up to ten thousand molecules of ozone. CFCs have been phased out since the Montreal Protocol went into effect in 1989. It is estimated that recovery may take until the mid-21st century. CFCs have been replaced with hydrofluorocarbons (HFCs), which do not contain chlorine and therefore do not deplete ozone.

Ocean Acidification

Previously, it was mentioned that oceans dissolve carbon dioxide. This is not the entire explanation. In reality, carbon dioxide reacts with water to form carbonic acid, which can dissociate into carbonate and bicarbonate anions. Carbon dioxide, carbonic acid, carbonate, and bicarbonate exist in a temperature-dependent equilibrium. The higher the temperature, the more the equilibrium shifts towards the production of carbonate and bicarbonate. The H+ cations released by this dissociation accumulate, lowering the pH of the water. This process is known as ocean acidification.

Ocean acidification is detrimental to marine life. Organisms particularly sensitive to pH changes include coral and phytoplankton. Corals support the most diverse marine ecosystems in the ocean, and phytoplankton form the staple of many food chains. Coral is made of a calcium carbonate (aragonite/calcite) skeleton, which dissolves in acidic water.
In addition, corals produce a natural sunscreen that protects them from ionizing radiation, a process that is slowed in warmer environments. The biodiversity of many coral reefs, including the Great Barrier Reef off Australia, is being threatened by what is known as coral bleaching (dead coral appears bleached). Scientists measure these processes either by directly measuring the sea surface temperature (SST) using thermal infrared remote sensing, or by measuring chlorophyll concentrations and phytoplankton presence/health by proxy. MODIS, for example, has bands dedicated to measuring chlorophyll concentrations.