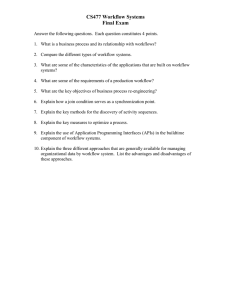

Analytics / Alteryx training for TD practitioners
Almaty

Course objectives

1 Discuss how TD engagements are changing with more data and more tools available for us to do the work
2 Recognize how and when Alteryx should be applied on TD engagements
3 Become proficient in Alteryx basics and know how to build your knowledge further
4 Understand why we need to be thoughtful about how we work with data if we want to benefit from analytics

Course agenda

| Session | Duration |
| --- | --- |
| Analytics in TD | 13:30 – 13:45 |
| Introduction to Alteryx | 13:45 – 14:15 |
| Case Study 1 – Part 1: Alteryx basics (hands on) | 14:15 – 15:00 |
| Break | 15:15 – 15:30 |
| Case Study 1 – Part 1: Alteryx basics – introduction | 15:30 – 16:30 |
| Case Study 1 – Part 2: Alteryx macros – introduction | 16:30 – 17:00 |
| Unguided case study | 17:00 – 17:45 |

TD Analytics

TD Future Practitioner Capabilities

TD practitioners will need to be familiar with various tools which will be critical to handle and prepare data.

[Figure: capability framework with three areas: 1 Data mindset, 2 Data transformation, 3 Data visualization / output. Other labels in the figure: Discovery, Insights.]

1 Data mindset: unlock the value in data
► Trust: Provide confidence that data will be secure and protected
► Describe: What is the right data to request in order to address the client questions
► Translate: Be the conduit between the client requirements and the analytics required
► Discover: What value exists in both client and external data
► Analyse & Understand: What insights does the data provide?
► Deliver: The most appropriate way of delivering our insights to the client
► Empower: Ensure that these insights are being delivered to the client and internal team

2 Data transformation
► System reconciliation
► Deal adjustments
► Carve out adjustments
► Accounting adjustments
► Due diligence database

3 Data visualization / output
► Databook(s)
► Extracts to client
► Carve out statements
► Dashboards
► Due diligence report
► Analytics as a Service – access to clients & bidders
► CARVEx

Actions
► Practitioners need to be familiar with more efficient ways of handling and preparing data.
► Practitioners will have the ability to deliver the insights to clients in the most effective way.

Tools, capabilities, and client value-add

Data processing

What
► Repeatable data cleansing workflows
► Partial automation of previously manual tasks
► 10x efficiency gains on large datasets
► A must whenever large but imperfectly clean datasets are received

Sales pitch to clients
► We can handle all the data you throw at us very efficiently (with seamless updates, raw formats, direct connections to your systems)
► We can be the “data stewards” for all involved stakeholders, so you do not need to provide data twice; we will transparently handle any adjustments made to the data

Data visualizations

What
► Interactive data visualizations (desktop & hosted environments)
► Faster deep-dives, more flexibility in analysis
► Advisable for making data exploration, Q&A sessions and reporting more effective

Sales pitch to clients
► We can get to insights faster and thus address critical issues early in the process
► We can easily perform deep-dives and connect financial, commercial and operational KPI developments into one equity story
► We have solutions that allow sharing data with bidders in a controlled fashion (they can see the data, but they cannot take it)

Success factors in using analytics on engagements

1 Instead of (or in combination with) standard TD work, not on top of it
2 Planned and integrated into scope and DD approach, reflected in the information requests issued
3 Differentiation between exploratory analysis and ground work vs. deliverables that convey specific insights or results
4 Storyboarding with the senior part of the team is crucial; pen & paper first, software second
5 Early delivery to clients, iterations based on their feedback
6 Patience and resilience when execution does not go smoothly the first time it is tried

A data-minded team involves everyone

“Successfully applying analytics requires all team members to be involved.
It is not just hands-on super users”

Consultants and Seniors
► Take the time to get proficient with the tools. Practice, practice, practice.
► Follow the latest developments, learn best practices. Share lessons learned!

Managers and Senior Managers
► Embed analytics: in scoping, information requests, storyboarding.
► Give your teams space to try out new approaches and occasionally fail.

Partners and Directors
► Understand the possibilities. Challenge teams to adopt analytics on projects.
► Market our capabilities aggressively. Today, we can still surprise our clients!
► Allow for some learning curve. Innovation means failing from time to time.

What are those latest analytics tools and approaches?

1 Visualization tools: Spotfire / Tableau / PowerBI
2 Data processing: Alteryx / PowerQuery
3 Dynamic databooks
4 DAS solutions: CARVEx, DAS Suite (Capital Edge)
5 Deliverables: EY Synapse, Digital Direct
6 Social Media Analytics: Crimson Hexagon

Examples from the field

Introduction – why Alteryx?

We need to stop being “databook-centric” and start being “data-centric”

[Figure: the databook approach and the data-centric approach, each shown with a starting point and an ending point. The databook approach leads to a databook version, illustrated by a sample support services schedule (currency: KD000; FY15A, FY16A, YTD17A). The data-centric approach leads to data visualization and machine learning.]

Databook approach: minimizing the time to get to nicely formatted schedules

Issues with the databook approach
► Human errors
► Version control issues
► No reusability

Data-centric approach: maximizing flexibility and modern technology that saves time
► Efficiency in repetitive processes
► Faster, deeper insights
► Platform for innovation

Diligence then… vs. diligence now

Databook approach
► This went up… This went down…
► This went round and round…
► Conclusion / Point of view: We recommend that you consider this in the context of your valuation… and seek appropriate SPA protection…

Data-centric approach
► Conclusion / Point of view: Using a data-centric approach allows detailed analytics, which enables much more focused advice.

5 tenets of being data-centric

1 Obtain as much data as you can, not as little as you need
2 Differentiate between presentation of data and storage of data
3 Avoid manual changes of source data, have an audit trail
4 Store data in a computer-friendly way, not a human-friendly way
5 Leverage technology to do the heavy lifting for you

Core idea of being data-centric: flat files and hierarchies

Flat file – one observation per row. Columns: Date (Calendar), Legal Entity, Account Number, Account Name, Amount.

Data type legend: ABC = String, 123 = Double.

Enrichment of dataset through hierarchies

| Hierarchy | Key field | Mapped fields |
| --- | --- | --- |
| Time (date definitions) | Date (Calendar) | Fiscal Year, Fiscal Month, YTD vs. YTG |
| Entity information | Legal Entity | Business Unit, Country, Region |
| Accounts | Account Number | IS / BS / CF, Roll-ups, Net Debt / NWC |
| Accounts | Account Number | Statutory / Conso., Reported / Adjusted |

Note: This is an illustrative selection of mappings.

Alteryx will be your best friend in getting your data flattened.

Alteryx graphical user interface

►1 Tool palette
►2 Workflow canvas
►3 Configuration window
►4 Results window

Workflow basics

► Each tool has input and/or output “ports”.
► A workflow always needs to start with an input of data. The selected file name is displayed.
► You may or may not need to connect to all ports of a tool.
► Connect tools by clicking on a port and dragging it to the port of the next tool.

Selecting the data source file (Input Data)

1 Drag an INPUT DATA tool onto the canvas.
2 Select the arrow next to the “Connect a File or Database” drop down.
► Select File: browse and navigate to the source data file and select Open. Notice the other default input options Alteryx provides.
► You must close the source file (e.g. in Excel) before you can input it to a workflow.

Data field types and column selection (Select Tool)

► It is important to define the “type” of each field accurately, as it affects which functions or actions can be performed on that field.
► Most common types include:
  Double – used for numbers with decimals
  V_String – used for text / non-number content
  Date – recognizes several formats such as xxmmyyyy
► It is good practice to use a SELECT TOOL immediately after importing data (and after performing certain workflow steps) to check the data type for each field.
► Columns can be removed from the dataset with this tool by unchecking the box on the left.

Data field types (1 of 2) (Select Tool)

| TYPE | DESCRIPTION | EXAMPLE |
| --- | --- | --- |
| Bool | Boolean: The type of an expression with two possible values: True or False | 0=False; -1=True. Note: any value other than 0 would indicate the value is True. |
| Byte | Number: A byte field is a whole number that falls within the range 0 through 255 | 0, 1, 2, 3....253, 254, 255 |
| Int16 | Number: 2 bytes, i.e. 2^16 possible values | –32,768 to 32,767 |
| Int32 | Number: 4 bytes, i.e. 2^32 possible values | –2,147,483,648 to 2,147,483,647 |
| Int64 | Number: 8 bytes, i.e. –2^63 to +2^63 – 1 | –9,223,372,036,854,775,808 to 9,223,372,036,854,775,807 |
| Fixed Decimal | Number: Specified as the width of the field and the decimal precision. The first number is the total width of the number, the second number is the decimal level of precision. The decimal point is included in the character width. | "7.2" => 1234.56; "8.2" => -1234.56 |
| Float | Number: A single-precision floating point number is a 32-bit approximation of a real number. | +/- 3.4E +/- 38 (7 digits), where 38 is the exponent and 7 digits refers to seven digits of accuracy. |
| Double | Number: A double-precision floating point number is a 64-bit approximation of a real number. | +/- 1.7E +/- 308 (15 digits), where 308 is the exponent and 15 digits refers to fifteen digits of accuracy. |
| String | Character: Fixed Length String. The length must be at least as large as the longest value contained in the field. Limited to 8192 characters. | Any string whose length does not vary much from value to value. |

Data field types (2 of 2) (Select Tool)

| TYPE | DESCRIPTION | EXAMPLE |
| --- | --- | --- |
| V_String | Character: Variable Length. The length of the field will adjust to accommodate the entire string within the field. | If the string is greater than 16 characters and varies in length from value to value. |
| WString | Character: Wide String will accept unicode characters. Limited to 8192 characters. | Any string whose length does not vary much from value to value and that contains unicode characters (Æ, ç, ß, Ð, Ñ...). |
| V_WString | Character: Variable Length Wide String | If the string contains unicode, is longer than 16 characters and varies in length from value to value, use V_WString, such as a "Notes" or "Address" field. |
| Date | Character: A 10 character String in "yyyy-mm-dd" format | December 2, 2005 = 2005-12-02 |
| Time | Character: An 8 character String in "hh:mm:ss" format | 2:47 and 53 seconds, pm = 14:47:53 |
| DateTime | Character: A 19 character String in "yyyy-mm-dd hh:mm:ss" format | 2005-12-02 14:47:53 |
| Blob | Binary Large Object: A large block of data stored in a database. A BLOB has no structure which can be interpreted by the database management system but is known only by its size and location. | An image or sound file |
| SpatialObj | Blob: The spatial object associated with a data record. There can be multiple spatial object fields contained within a table. | A spatial object can consist of a point, line, polyline, or polygon. |

Filtering and selecting certain rows from the dataset (Select Records, Filter Tool)

You can select which rows of data move forward in your workflow by defining the row numbers you want using the SELECT RECORDS tool (1) or by creating a filter on the contents of a column using the FILTER tool (2).

When you create a filter, you can use the true results or the false results (or both, 3) as your output to continue with in the workflow.

Audit & Input/Output Anchors

► Selecting the green input and output anchors after running a workflow helps a user audit the changes caused by a particular tool.
► Selecting an input or output anchor populates the results window with the data for the corresponding point in the workflow.
► Highlighting the workflow link between icons shows the errors, warnings and messages caused by each icon in the workflow.

Formula (Formula Tool)

Create or update fields using one or more expressions to perform a broad variety of calculations and/or operations.

1 Output Field
► This is the field the formula will be applied to. Either choose a field listed in the drop down to edit or add a new field. To add a new field, type the new field name in the box.

2 Type
► Select the appropriate field type of the new field. If an existing field was picked above, the Type is for reference only.

3 Expression
► The expression box is for reference only. It will populate with the expression built in the Expression Box below.

Un-pivoting data (Transpose)

Data that has multiple columns of values, such as a trial balance with a column for each reporting entity or month, is already partially summarized. The types of analyses and visualizations you can do with this data are limited. Often, data is most useful to us if we have a single value per record (row).

1 From the Key Fields section, select the field(s) to pivot the table around. This field name will remain on the horizontal axis, with its value replicated vertically for each data field selected (step 2). These columns typically contain categorical or descriptive contents.
2 From the Data Fields section, select all the fields to carry through the analysis. These are the columns with values in them.

Combining Multiple Datasets

A key feature of Alteryx is the ability to “join” different datasets into one larger combined dataset. There are different tools depending on what you need to achieve. The key ones are:
► Join: similar to a VLOOKUP / INDEX MATCH in Excel. The Join tool is used where you have 2 tables with one or two fields in common, and you want to see all fields in one table.
► Union: when you have the same structure of data across multiple tables, you can use the Union tool to combine them into 1 large table to see all rows in one table, e.g. combining 12 monthly reports into 1.
► Append: when you want to add (or append) values or additional data to each row of your data.

Example Join Inputs

"Left" Data

| Year | Value 1 |
| --- | --- |
| 2001 | X1 |
| 2002 | X2 |
| 2003 | X3 |
| 2004 | X4 |
| 2005 | X5 |

"Right" Data

| Year | Value 2 |
| --- | --- |
| 2003 | Y1 |
| 2004 | Y2 |
| 2005 | Y3 |
| 2006 | Y4 |
| 2007 | Y5 |

Example Join Outputs

L: all values in the Left table not in the Right table

| Year | Value 1 | Value 2 |
| --- | --- | --- |
| 2001 | X1 | |
| 2002 | X2 | |

J: all values in both the Left table and the Right table. Note: rows will duplicate if there are multiple potential joins (i.e. more than one row per year).

| Year | Value 1 | Value 2 |
| --- | --- | --- |
| 2003 | X3 | Y1 |
| 2004 | X4 | Y2 |
| 2005 | X5 | Y3 |

R: all values in the Right table but not in the Left table

| Year | Value 1 | Value 2 |
| --- | --- | --- |
| 2006 | | Y4 |
| 2007 | | Y5 |

Combining Multiple Datasets

Example Union Inputs

Dataset 1

| Month | Value1 | Value2 |
| --- | --- | --- |
| Jan | 100 | 35 |
| Feb | 200 | 40 |
| Mar | 300 | 45 |
| Apr | 400 | 50 |
| May | 500 | 55 |
| Jun | 600 | 60 |

Dataset 2

| Month | Value1 | Value2 |
| --- | --- | --- |
| Jul | 700 | 65 |
| Aug | 800 | 70 |
| Sep | 900 | 75 |
| Oct | 1000 | 80 |
| Nov | 1100 | 85 |
| Dec | 1200 | 90 |

Example Union Output: "Unioned" Dataset (all data from Dataset 1 followed by all data from Dataset 2)

| Month | Value1 | Value2 |
| --- | --- | --- |
| Jan | 100 | 35 |
| Feb | 200 | 40 |
| Mar | 300 | 45 |
| Apr | 400 | 50 |
| May | 500 | 55 |
| Jun | 600 | 60 |
| Jul | 700 | 65 |
| Aug | 800 | 70 |
| Sep | 900 | 75 |
| Oct | 1000 | 80 |
| Nov | 1100 | 85 |
| Dec | 1200 | 90 |

Note: Dataset 1 and Dataset 2 need to have the same structure (field names and data types) as each other.

Example: Append – all combinations generated

Case Study 1 – Trial Balance Data Transformation

Preview of case study data

► What information does this file contain?
► What is it missing?
► How does its current layout inhibit you from analyzing it?
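For readers who know Python, the Join, Union and Append examples from the combining-datasets slides can be approximated with pandas. This is only a sketch for comparison, not how Alteryx works internally. The `Period` column and its value are hypothetical, added to illustrate Append.

```python
import pandas as pd

# Join inputs, copied from the slide example.
left = pd.DataFrame({"Year": [2001, 2002, 2003, 2004, 2005],
                     "Value 1": ["X1", "X2", "X3", "X4", "X5"]})
right = pd.DataFrame({"Year": [2003, 2004, 2005, 2006, 2007],
                      "Value 2": ["Y1", "Y2", "Y3", "Y4", "Y5"]})

# One outer merge with an indicator column yields the three Join outputs.
merged = left.merge(right, on="Year", how="outer", indicator=True)
l_out = merged[merged["_merge"] == "left_only"]    # L: only in the left table
j_out = merged[merged["_merge"] == "both"]         # J: rows that joined
r_out = merged[merged["_merge"] == "right_only"]   # R: only in the right table

# Union: stack two tables that share the same columns.
dataset_1 = pd.DataFrame({"Month": ["Jan", "Feb", "Mar", "Apr", "May", "Jun"],
                          "Value1": list(range(100, 700, 100)),
                          "Value2": list(range(35, 65, 5))})
dataset_2 = pd.DataFrame({"Month": ["Jul", "Aug", "Sep", "Oct", "Nov", "Dec"],
                          "Value1": list(range(700, 1300, 100)),
                          "Value2": list(range(65, 95, 5))})
unioned = pd.concat([dataset_1, dataset_2], ignore_index=True)

# Append: every target row receives the fields of every source row (cross join).
source = pd.DataFrame({"Period": ["FY14"]})  # hypothetical one-row source
appended = unioned.merge(source, how="cross")
```

The `indicator=True` option makes the unmatched rows visible instead of silently dropping them, which mirrors the reason the Join tool exposes separate L and R outputs.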
Preview of intended results

► One type of data in a given field (column)
► One numerical value per record (row)
► A separate field for each categorical, mapping, or otherwise descriptive element

Case study 1: Trial balance preparation

► Objective: Transform a transaction dataset into a flattened file by building a workflow in Alteryx to enable use in databooks, other analytic and visualization tools and the TS diligence dashboards.
► Three-step approach:
  1. Use Alteryx to transform data from one tab into a flat file (1 hour)
  2. Leverage the workflow from step 1 to process an entire dataset (45 min)
  3. Add meaningful hierarchies (45 min)
► Instructions: Individually complete this exercise by following the steps detailed in the following slides. Your table facilitators will be there to help you along the way.

Case study 1 – step 1 (of 15): loading data

Purpose: Load data from 1 tab (Apr14) into Alteryx for further processing.

► Open Alteryx and select File > New Workflow.
► Drag the Input Data tool onto the workflow canvas.
► In the configuration window, select the dropdown in the Connect a File or Database bar, and navigate to the source file “Alteryx Raw Data – ProjectTraining – V1.xlsx”.
► In the Choose Table or Specific Query window, select the “Apr 14” tab.
► Select the option “First row contains data”. This forces Alteryx to assign standard column names instead of relying on what it finds in the first row of data. Consider this a best practice unless you are sure that the labels in the first row of data are static across the dataset.
► Run the workflow to see a current preview of the data.
► You should see the data from tab Apr14 in the results panel.

Note: You cannot have the file open in Excel, as Alteryx needs exclusive access to read the data. Save a copy of the file for Excel viewing instead.
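The effect of “First row contains data” can be sketched in pandas for comparison. This is an illustration under assumptions, not the Alteryx implementation. The in-memory CSV below is a hypothetical stand-in for the raw Excel tab, and the `F1`, `F2`, ... names are assigned by hand to mimic generic column names.

```python
import io
import pandas as pd

# Hypothetical stand-in for the raw tab: title rows first, column labels further down.
raw_tab = io.StringIO(
    "Project Training Inc,,\n"
    "Trial Balance,,\n"
    "Apr 2014,,\n"
    "Financial Row,Entity01,Entity02\n"
    "1000 - Cash,10,20\n"
)

# header=None treats every row as data, so no row is consumed as column labels.
df = pd.read_csv(raw_tab, header=None)

# Assign generic, position-based names so later steps do not depend on
# whatever text happens to sit in the first row of a given tab.
df.columns = [f"F{i + 1}" for i in range(df.shape[1])]
```

Because the names depend only on column position, the same downstream steps can be reused on tabs whose first rows differ.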
Case study 1 – step 2 (of 15): excluding irrelevant headers and setting the right headers

Purpose: We will exclude irrelevant header data and ensure that the column headers of the dataset are appropriate.

Specify the data range to pull from your input.
► Select the Preparation palette and choose the Select Records tool, used to select and deselect rows. Enter the desired row range for your data.
► In the case of Project Training, the trial balance information, including the header row, begins on row 7. Enter the range 7+ to ensure no data is missed on future tabs.
► Run the workflow to check your work.
► With only one tab of data to input, it may not take long to run the workflow. But later, when we add multiple tabs, using an unlimited range may slow down the workflow. There are several ways to speed up workflows, but the easiest is to limit the number of rows as shown in the range at right.

Set the column labels from the dataset.
► Select the Developer palette, choose the Dynamic Rename tool and connect the workflow to its L input.
► Select “Take Field Names from First Row of Data” in the Rename Mode menu. This will make Row 1 our new column headers.

Case study 1 – step 3 (of 15): creating account name and account number columns

Purpose: We will create separate columns for account name and account number. This will be helpful later and is generally good practice.

► Reviewing your dataset, you should note that your account column has both names and numbers. To split these into separate columns, select the Parse palette and choose the Text to Columns tool. This tool works much like its Excel counterpart.
► In the Field to Split menu, select Financial Row. Since “-” separates the account number from the account name in your dataset, use “-” as the delimiter.
► Input 2 as the number of columns to split. Run the workflow and look at the Results pane in the bottom right of the workflow.
You should see two new columns on the right containing the account number and account name separately. Notice that the column headers are derived from the original column name or the “Output Root Name” of the tool configuration. We will rename columns later.

Case study 1 – step 4 (of 15): removing subtotals and other irrelevant rows

Purpose: The dataset includes subtotals, grand totals and other rows that are not necessary in the flat file. We will remove them from the dataset based on a rule we infer from the data.

Clean up the non-data rows in the dataset.
► We can see that the first three rows do not contain TB account data, and that later rows are empty, have subtotals, etc. We need to filter out these rows.
► Reviewing your data, notice that all TB accounts have a “0” in the number. Therefore, if we filter out rows that do not contain a 0, we will remove all irrelevant rows.
► Select the Filter tool under the Preparation palette. It will query records based on an expression to split data into two streams, True (records that satisfy the expression) and False (those that do not).
► Use a Custom Filter and express the condition that we want to achieve (i.e. field “Financial Row1” should contain a “0”). The condition leverages a function called Contains(). There are many other functions that you will learn over time that are helpful in similar situations.
► The tool has two outputs, “True” and “False”.
► Be sure to connect the next step in the workflow to the “True” output, as that is the data we want to continue working on.
► Note that the False output can be just as useful as the True output, for example when you want to split your dataset and perform two different workflows on two different types of data.

Note: You do not always want to simply filter subtotals out of your data.
In practice, you may want to keep them in your flat file to be able to validate that line items actually add up to subtotals (i.e. to check the internal integrity of the dataset).

Case study 1 – step 5 (of 15): cleaning up and relabelling data

Purpose: Clean up the data for empty values and for whitespace in front of or at the end of account names. Relabel columns with appropriate names.

Rename columns:
► Use a Select tool to (i) deselect “Financial Row” (it is no longer required), (ii) rename “Financial Row1” and “Financial Row2” as “Account number” and “Account name”, respectively, and (iii) highlight and move Account number and Account name to the top of the list.

Note: There are several fields without headers, indicating that they do not contain data (e.g. Field 26). While we could deselect them at this stage, we would risk our workflow not being reusable for other tabs (in case they have a varying number of columns). We will instead filter out these fields later, after the “flattening” step.

Clean up your data:
► Next, go to the Preparation palette and select the Data Cleansing tool, which can automatically perform common data cleansing tasks with a simple check of a box, such as removing nulls, eliminating extra whitespace and clearing numbers from a string entry.
► Ensure all desired fields under the “Select Fields to Cleanse” menu are selected. Here we can select all fields.
► All “String” fields with [null] will be made blank and all Double fields with [null] will be set to 0.

Case study 1 – step 6 (of 15): flattening the dataset

Purpose: Reshape the file from a one-row / multiple-column form (a “wide” form) to a flattened format that is more flexible.

► Each row of data contains multiple datapoints, one amount for each entity.
Analyzing the data later will be more powerful if each row contains only one datapoint, so we must “unpivot” the data by transposing those columns into rows.
► Select the Transpose tool under the Transform palette.
► The “Key Fields” are the columns of data you do not want transformed from a horizontal to a vertical axis. In our case, select Account number and Account name.
► The “Data Fields” are the columns that contain data for which we want a single row each. This will create a column for the entity and a column for the values.
► Run the workflow to see the results.

Case study 1 – step 7 (of 15): adding the month and year to the dataset

Purpose: Add a column indicating which month the data refers to. We will pick the month from cell A3 of the dataset.

► Return to your data input file (‘Alteryx Raw DataProject Training V1’) at the beginning of the workflow. Create a new branch by adding another Select Records tool. See the illustration at upper right.
► Enter 4 into the Range in the configuration panel to pick up the third row of data only. In the results output you should see Apr 2014 in the first column.
► Use Text to Columns to separate month and year.
► Select field F1 as your column, and use a space as the delimiter. The result should give the month and year in separate columns. You can also choose a specific output root name to identify new columns easily.
► Use the Select tool to (i) rename the two output columns as “Month” and “Year” (Training Inc2 becomes “Year”) and (ii) deselect all other fields.
► Click run and your results should look like this.
► Now that we’ve identified and extracted the month and year information from the source file, we need to add it to the TB information itself.
► Select the Join palette and choose the Append Fields tool. This tool will append the fields from a source input to every record of a target input.
The source (S) should always contain fewer records than the target (T).
► Connect the TB account work stream to the T input and the dates work stream to the S input.
► Run the workflow and notice in the results that every row now has the month and year columns.

Case study 1 – step 8 (of 15): final clean up of the dataset

Purpose: Final clean up of the data: making sure we do not have irrelevant entries and adjusting data types.

► It is very important to check that our data types are correct in the final dataset. Use a Select tool and observe that the Value field is currently of V_String type. This is not what we want, so change it to Double type instead. Note that the Value field was created automatically in step 6, and Alteryx chose its data type based on the values that were found.
► Additionally, let’s rename the Name field to Entity.
► Finally, run another Data Cleansing tool on the dataset, as this will ensure that any empty values become zeros in the Value field.
► If we run the Alteryx workflow and review the results, you’ll see rows containing “Fields” and “N/A” in the Entity column. This is due to the extra columns without data that were imported from the tabs of the original source file.
► As the dataset is now in a flat format, we no longer need to worry about identifying such “columns” manually. Instead, we can filter them out based on the Entity (formerly Name) field. Observe that we are interested only in rows where this field is in the format “EntityXX”.
► Use a Filter tool and a Contains() or StartsWith() function to keep the relevant entries.

Congratulations! Take a break, and we will move on to learn how to apply your workflow across multiple tabs of a data file at once.

Note: In this step, we are again filtering out subtotals. If you wanted to check whether the “Total” column actually is the sum of all entities, can you figure out a way to do it in Alteryx?
(See optional step 8b for a solution.)

Case study 1 – optional step 8b (of 15): checking that subtotals are right

► In some cases, you will want to check that your dataset’s line items add up to subtotals. The general idea is as follows:
  1 Separate your dataset into two tables: line items and subtotals. In our example, you can do this by using the Filter tool from step 8 and further filtering its “False” output to include only rows that have the entity name “Total”.
  2 Aggregate both datasets to the same level of information using a Summarize tool. In this case, we want to group data by Account Number, Month and Year, and sum by Value.
  3 Then, join both datasets so that the “calculated” total value (from the line-items dataset) and the original total value (from the totals dataset) sit side by side on each row. Use a Join tool for that, and join on the common dimensions: Account Number, Month and Year.
  4 Finally, use a Filter tool to find any rows where the calculated total value is not equal to the original total value.

Case Study 1 Part 2: Batch Macros

Introduction to Alteryx Macros

► Alteryx allows packaging your workflows into macros, which make a workflow accessible as a new “tool” in another workflow.
► This generally allows you to save commonly recurring workflows, so you avoid designing them over and over.
► In order to convert your workflow to a macro you need to specify inputs/outputs (check out the Interface palette), change the workflow type to macro under Workflow Configuration and save it.

[Figure: the repetitive steps of a full workflow are converted to a macro, which is then used as a tool in a new workflow]

There are three types of macros:
► Standard macros: a simple packaged-up workflow.
► Batch macros: provided a list of values, run a workflow with each of the values as an input, and provide an output as a union of individual iterations.
► Iterative macros: run a workflow until a specified condition is met.
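The batch macro pattern described above (run one workflow once per value in a list, then union the outputs) can be sketched in Python for comparison. This is a minimal illustration under assumptions, not the Alteryx mechanism: `process_tab` and `batch_run` are hypothetical names, the sample tabs are made up, and the one-tab workflow is reduced to the flattening step.

```python
import pandas as pd

def process_tab(tabs, tab_name):
    """Hypothetical one-tab workflow: flatten a wide tab and label it with its tab name."""
    wide = tabs[tab_name]
    # Unpivot: keep the account columns, turn every entity column into rows.
    flat = wide.melt(id_vars=["Account number", "Account name"],
                     var_name="Entity", value_name="Value")
    # Fix the output types explicitly so every iteration returns the same schema.
    flat["Entity"] = flat["Entity"].astype(str)
    flat["Value"] = flat["Value"].astype(float)
    flat["Tab"] = tab_name
    return flat

def batch_run(tabs, tab_names):
    """Run the one-tab workflow for each tab name and union the results."""
    return pd.concat([process_tab(tabs, name) for name in tab_names],
                     ignore_index=True)

# Made-up sample data standing in for two worksheet tabs.
tabs = {
    "Apr 2014": pd.DataFrame({"Account number": ["1010", "4010"],
                              "Account name": ["Cash", "Revenue"],
                              "Entity01": [10, -50],
                              "Entity02": [20, -70]}),
    "Jun 2014": pd.DataFrame({"Account number": ["1010", "4010"],
                              "Account name": ["Cash", "Revenue"],
                              "Entity01": [12, -55],
                              "Entity02": [22, -75]}),
}

result = batch_run(tabs, ["Apr 2014", "Jun 2014"])
```

Setting the column types inside `process_tab` reflects the same design concern a batch macro has: each iteration must produce an identically structured output for the union to work.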
Applying a macro solution to process data across multiple tabs

► We can leverage the batch macro functionality so that our one-tab workflow processes multiple tabs at once.
► The steps are as follows:
  ► Modify the one-tab workflow to become a macro by (i) adding an explicit Macro Output tool and (ii) adding a Control Parameter tool, which allows us to dynamically change the parameters of the Input Data tool. We will configure it so that the Input Data tool does not refer to a fixed worksheet, but rather uses the worksheet name we provide to the macro.
  ► Save the workflow as a macro.
  ► Design another workflow that uses the macro as a tool and passes it a list of tab names.

[Figure: a workflow that processes one tab is converted to a macro that accepts a tab name and produces output; a new workflow uses the macro: pass tab names, get output for all of them at once]

Case study 1 – step 9 (of 15): converting the workflow to a batch macro

Copy and paste our final workflow (as seen in the picture at right) into a new workflow and create a batch macro.
► Search for the Control Parameter tool and connect it to your Input tool. Alteryx will automatically connect an Action tool.
► Go into the configuration of the Action tool (left side of your canvas) and select “File value” and “Replace a specific string”. We want to replace just the “default” tab name, so let’s enter “Apr 2014” as the value.
► Search for a Macro Output tool and connect it to your Append tool at the end of the workflow.
► Now save your workflow. You will notice it is saved with the extension “yxmc”, not the usual “yxmd”. This workflow is your batch macro, and it can now be used as a new tool in your workflows.

Case study 1 – step 10 (of 15): testing the batch macro

Let’s now test the macro by providing tab names manually.
► Open a new workflow and right click on your canvas. Choose “Insert – Macro” and look up your previously saved batch macro.
► Create a Text Input tool, manually give it the field name “SheetNames” and enter the value “Apr 2014”.
► Connect the Text Input tool and adjust the macro configuration to use the SheetNames field.
► Run the workflow and you should see results for Apr 2014.
► Repeat the same for Jun 2014.
► Now try passing two rows of data: Apr 2014 and Jun 2014. What happens?
► When you run the macro with both Apr 2014 and Jun 2014 as inputs, you should get the error “The Field schema for the output changed between iterations”.
► This error means that the macro did not produce identically structured datasets for the two inputs. In this case, the column names are the same, but the issue is the data types!
► To test it, add a Select tool after the macro and run it with “Apr 2014” and with “Jun 2014” as input separately. You will see that the size parameter of the Entity field changes from 10 to 11 characters. This is because it is an automatically generated field (step 6) and Alteryx tries to optimize its size.
► To fix it, go back to the original workflow, add a Select tool just before the Macro Output tool, and set the Entity field type to V_String and its size to 255 characters. Don’t forget to save the workflow after you make the changes.
► Now you should be able to run the workflow with both “Apr 2014” and “Jun 2014” passed simultaneously.

Case study 1 – step 11 (of 15): running the batch macro across all tabs in a dataset

Providing tab names manually is not the best solution. Let’s use a better way to do it.
► Instead of relying on the Text Input tool, let’s leverage functionality in the Input Data tool.
► Add an Input tool and look up our source data. However, instead of choosing a tab like we did before, choose “list of sheet names”.
► Clean up the list of sheet names by applying a Select tool to define the data type (V_String) and a Filter tool to filter out the one unnecessary data point (Sheet1). Now connect these three tools to your batch macro.
► Click on your batch macro and choose “Sheet Names (V-Strings)”. Now run the workflow and check the result (use a Browse tool to see the entire result). Less than 10 seconds to process the entire file into an organized flat format! Woohoo!

Case Study 1 Part 3: Adding Hierarchies

Case study 1 – step 12 (of 15): adding a fiscal year rollup and EY-formatted month labels
Purpose: The original data has calendar years in it. However, we want to present data on a fiscal-year basis, with the fiscal year starting in July. Additionally, we want EY-formatted month labels in the dataset. To add a fiscal year rollup and EY-formatted month labels, we need a “hierarchy” file (also known as a “mapping table”). Such a hierarchy file is provided to you in the file Date Mapping.xlsx.
► Use the Input Data tool to add the Date Mapping.xlsx file from your training materials.
► Add a Select tool and observe that the Year field has the Double data type. Convert it to V_String, as we don’t want to treat it as a number.
► Add the Join tool to join the two datasets. The TB accounts should be the left input and the date mapping data should be the right input. Under “Join by specific fields”, select “Month” and “Year”. In the field list, you can deselect “Right Month” and “Right Year”.
► When we add a connector on the output side of the Join tool, we will connect to the “J” output. Click the icon to read more about the types of join options.

Case study 1 – step 13 (of 15): adding P&L hierarchies
Purpose: The original data has only account-level information in it. We want aggregation levels that allow us to get total revenue, total EBITDA, etc. Use the separately created mapping file to add the financial statement hierarchy mapping.
► Next, use the Input tool to add in the Alteryx trainingMapping file. Use the Select tool to ensure the data types are set properly (set all fields to V_String for this exercise).
► Choose the Join tool to combine the trial balance workstream with the mapping file. Choose “Join by specific fields”. The left and right inputs should be joined on the columns labeled “account number”.
► Within the field list of the Join tool, you can deselect Right Account Number, as otherwise it will appear in the data twice.

Case study 1 – step 14 (of 15): checking if we dropped any data
Purpose: When we use mapping tables, it is important to make sure we do not lose any data due to “missed joins”.
Remember that the Join tool has three outputs:
► L output: rows from the L input that did not join
► J output: joined rows
► R output: rows from the R input that did not join
Check the L and R outputs of the Join tool from the previous step. What do you see? What does it mean? Is the workflow correct?
Answer: in this case, the workflow is almost correct. The L output mainly contains accounts ending in “00”, which are subtotal accounts and should be excluded. However, a few accounts are missing from the mapping file and are therefore dropped as well. Can you think of a way to identify them? The R output represents unused accounts.
Such “dropped” data in Join tools is one of the most common gotchas for beginner Alteryx users. Always check whether you have L or R outputs, and make sure you are comfortable with what they contain!

Case study 1 – step 15 (of 15): reordering columns and exporting the data to Excel
Purpose: Final cleanup of the data and export back to Excel.
► Use a Select tool to rename columns as you see fit and reorder them accordingly.
► Then, export the data using the Output tool from the In/Out palette and define where you want to save the output file.
Be sure to save it in the file format you intend to use in Spotfire (or another tool), such as Excel or CSV.
► Note that the “Output Options” let you define how Alteryx behaves if the specified output file or sheet already exists.

Case study 1: Debrief
► Discuss:
  ► What were some benefits gained by processing this dataset in Alteryx instead of Excel?
  ► What in this workflow could have been done in a different way?
  ► Are there any hidden assumptions in the workflows that one needs to be aware of?
  ► Give some additional examples where you think data transformation in Alteryx will be helpful going forward.

Quality and Risk Management considerations for Alteryx
► As you work with more detailed data, understanding its basis (i.e. Quality of Financial Information work) becomes increasingly important. Do that upfront!
► Alteryx workflows should be reviewed in the team:
  ► Make sure workflows are clearly documented, using Comment tools to help the reader.
  ► Any potential issues (e.g. “unjoined” rows) should be flagged in the workflows using Message tools that raise errors when unexpected outputs are generated.
  ► If you are a reviewer who is not technically strong in Alteryx, make sure you understand the workflow’s purpose and any assumptions made, and check the inputs and outputs.
  ► It’s always possible to take the output of Alteryx and reconcile it back to “ground truth” using the usual TD methods.
  ► Always consult your Engagement Partner and Quality Leader before sharing Alteryx workflows. Sharing represents an additional revenue opportunity and requires special considerations for Channel 1 clients.

Unguided case study

Unguided case study: from raw data to a databook
► Objective:
  ► Transform a transaction dataset into a flattened file by building a workflow in Alteryx, to enable use in databooks, other analytic and visualization tools, and the TS diligence dashboards.
  ► Build a dynamic databook showing Lead PL with the ability to slice by legal entity / business unit.
► Datasets:
  ► Data delivered in Excel spreadsheets: management accounts per year
  ► Multiple business units (A, B, C, …) that each have several legal entities (1010, 1011, 1012, …)
  ► Mapping files provided
► Time: 1 hour
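
The dropped-data check from step 14 is worth repeating whenever the mapping files are applied in the unguided case study. As a rough illustration outside Alteryx, the sketch below mimics the L, J and R outputs of a join in plain Python and then separates subtotal accounts from accounts that are genuinely missing from the mapping. The function name `three_way_join`, the field names (`account`, `amount`, `pl_line`) and all sample rows are hypothetical and are not taken from the training data; the “ends with 00” rule simply follows the step 14 answer.

```python
from typing import Dict, List, Tuple

Row = Dict[str, str]


def three_way_join(
    left: List[Row], right: List[Row], key: str
) -> Tuple[List[Row], List[Row], List[Row]]:
    """Return (L, J, R): unmatched left rows, joined rows, unmatched right rows."""
    # Index the right-hand rows by key so each left row can find its matches.
    right_by_key: Dict[str, List[Row]] = {}
    for r in right:
        right_by_key.setdefault(r[key], []).append(r)
    left_keys = {row[key] for row in left}

    l_out = [row for row in left if row[key] not in right_by_key]
    j_out = [
        {**r, **row}  # merge the mapping columns into the left row
        for row in left
        for r in right_by_key.get(row[key], [])
    ]
    r_out = [r for r in right if r[key] not in left_keys]
    return l_out, j_out, r_out


# Hypothetical trial balance rows (left input).
trial_balance = [
    {"account": "4000", "amount": "300"},  # subtotal account (ends with "00")
    {"account": "4010", "amount": "100"},
    {"account": "4020", "amount": "200"},
    {"account": "5015", "amount": "50"},   # not present in the mapping
]

# Hypothetical mapping rows (right input).
mapping = [
    {"account": "4010", "pl_line": "Revenue"},
    {"account": "4020", "pl_line": "Revenue"},
    {"account": "9999", "pl_line": "Other"},  # unused account
]

l_out, j_out, r_out = three_way_join(trial_balance, mapping, "account")

# Among the unjoined left rows, anything that is not a subtotal account
# is an account the mapping file is missing.
missing_from_mapping = [
    row for row in l_out if not row["account"].endswith("00")
]
```

Here `missing_from_mapping` holds the rows that would otherwise be lost silently, which is the same condition a Message tool could be set up to flag inside the workflow.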