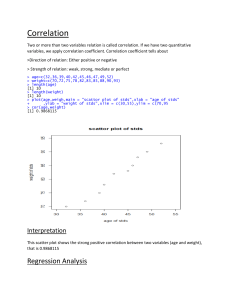

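- Regression residuals: $\hat{u}_i = y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i$
- Minimize the sum of squared regression residuals: $\min_{\hat{\beta}_0,\,\hat{\beta}_1} \sum_{i=1}^{n} \hat{u}_i^2 = \sum_{i=1}^{n} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i)^2$
- Ordinary Least Squares (OLS) estimates: $\hat{\beta}_1 = \dfrac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n}(x_i - \bar{x})^2}$, $\quad \hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$
- Fitted values and residuals: $\hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i$, $\quad \hat{u}_i = y_i - \hat{y}_i$
- Algebraic properties of OLS regression: $\sum_{i=1}^{n} \hat{u}_i = 0$, $\quad \sum_{i=1}^{n} x_i \hat{u}_i = 0$, $\quad \bar{y} = \hat{\beta}_0 + \hat{\beta}_1 \bar{x}$
- Measures of variation:
  1) Total sum of squares (total variation in Y): $SST = \sum_{i=1}^{n} (y_i - \bar{y})^2$
  2) Explained sum of squares (variation explained by the regression): $SSE = \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2$
  3) Residual sum of squares (variation not explained by the regression): $SSR = \sum_{i=1}^{n} \hat{u}_i^2$
- Decomposition of total variation: $SST = SSE + SSR$
- Goodness-of-fit measure: $R^2 = \dfrac{SSE}{SST} = 1 - \dfrac{SSR}{SST}$, with $0 \le R^2 \le 1$
- R-squared interpretation: the fraction of the total variation in Y that is explained by the regression
- Interpreting $\beta_1$ by functional form:
  - Lin-Lin ($y$ on $x$): a one-unit increase in X changes Y by $\beta_1$ units of Y (e.g. $\beta_1$ dollars if Y is measured in dollars)
  - Log-Lin ($\log y$ on $x$): a one-unit increase in X changes Y by about $100\beta_1$ percent
  - Log-Log ($\log y$ on $\log x$): a 1% increase in X changes Y by about $\beta_1$ percent (elasticity)
- Change the scale of Y by a constant k: all coefficient estimates (intercept and slope) are multiplied by k
- Multiply X by a constant k, leave Y alone: the slope coefficient is multiplied by $1/k$; the intercept is unchanged
- Homoskedasticity: $\operatorname{Var}(u \mid x) = \sigma^2$; the value of X must contain no information about the variability of the error term
- Variance of the OLS estimators (under homoskedasticity): $\operatorname{Var}(\hat{\beta}_1) = \dfrac{\sigma^2}{SST_x}$, $\quad \operatorname{Var}(\hat{\beta}_0) = \dfrac{\sigma^2\, n^{-1} \sum_{i=1}^{n} x_i^2}{SST_x}$, where $SST_x = \sum_{i=1}^{n} (x_i - \bar{x})^2$
- Estimating the error variance: $\hat{\sigma}^2 = \dfrac{1}{n-2} \sum_{i=1}^{n} \hat{u}_i^2 = \dfrac{SSR}{n-2}$
- Standard errors of the regression coefficients (how precisely they are estimated): $se(\hat{\beta}_1) = \dfrac{\hat{\sigma}}{\sqrt{SST_x}}$, $\quad se(\hat{\beta}_0) = \hat{\sigma}\sqrt{\dfrac{n^{-1}\sum_{i=1}^{n} x_i^2}{SST_x}}$
- OLS estimation of the multiple regression model $y = \beta_0 + \beta_1 x_1 + \dots + \beta_k x_k + u$:
  1) Regression residuals: $\hat{u}_i = y_i - \hat{\beta}_0 - \hat{\beta}_1 x_{i1} - \dots - \hat{\beta}_k x_{ik}$
  2) Minimize the sum of squared residuals: $\min_{\hat{\beta}_0, \dots, \hat{\beta}_k} \sum_{i=1}^{n} \hat{u}_i^2$
- MLR – Algebraic properties of OLS regression: $\sum_{i=1}^{n} \hat{u}_i = 0$, $\quad \sum_{i=1}^{n} x_{ij} \hat{u}_i = 0$ for $j = 1, \dots, k$, $\quad \bar{y} = \hat{\beta}_0 + \hat{\beta}_1 \bar{x}_1 + \dots + \hat{\beta}_k \bar{x}_k$
- MLR – Decomposition of total variation: $SST = SSE + SSR$
  1) R-squared: $R^2 = \dfrac{SSE}{SST} = 1 - \dfrac{SSR}{SST}$
  2) Alternative expression for R-squared (squared correlation between $y_i$ and $\hat{y}_i$): $R^2 = \dfrac{\left(\sum_{i=1}^{n} (y_i - \bar{y})(\hat{y}_i - \bar{\hat{y}})\right)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2 \sum_{i=1}^{n} (\hat{y}_i - \bar{\hat{y}})^2}$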
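The formulas above can be checked numerically with a small sketch in plain Python (standard library only). It computes the simple-regression OLS estimates, residuals, SST/SSE/SSR, $R^2$, $\hat{\sigma}^2$ and standard errors. It also shows the effect of rescaling Y or X and fits a multiple regression by solving the normal equations $(X'X)\hat{\beta} = X'y$. The helper names (`ols_simple`, `simple_fit_summary`, `ols_multiple`, `corr`) and the toy data are hypothetical, made up for illustration. The Gaussian-elimination solver is a teaching device for tiny data sets; statistical packages typically use more numerically stable factorizations.

```python
import math


def mean(v):
    return sum(v) / len(v)


def ols_simple(x, y):
    """OLS estimates (b0, b1) for the simple regression y = b0 + b1*x + u."""
    xbar, ybar = mean(x), mean(y)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    sst_x = sum((xi - xbar) ** 2 for xi in x)
    b1 = sxy / sst_x
    return ybar - b1 * xbar, b1


def simple_fit_summary(x, y):
    """Fitted values, residuals, SST/SSE/SSR, R^2, sigma^2-hat and standard errors."""
    n = len(y)
    b0, b1 = ols_simple(x, y)
    yhat = [b0 + b1 * xi for xi in x]
    uhat = [yi - fi for yi, fi in zip(y, yhat)]
    xbar, ybar = mean(x), mean(y)
    sst = sum((yi - ybar) ** 2 for yi in y)     # total variation in y
    sse = sum((fi - ybar) ** 2 for fi in yhat)  # variation explained by the regression
    ssr = sum(u ** 2 for u in uhat)             # variation left in the residuals
    sst_x = sum((xi - xbar) ** 2 for xi in x)
    sigma2 = ssr / (n - 2)                      # error-variance estimate, df = n - 2
    return {
        "b0": b0, "b1": b1, "yhat": yhat, "uhat": uhat,
        "sst": sst, "sse": sse, "ssr": ssr, "r2": sse / sst, "sigma2": sigma2,
        "se_b1": math.sqrt(sigma2 / sst_x),
        "se_b0": math.sqrt(sigma2 * sum(xi ** 2 for xi in x) / (n * sst_x)),
    }


def ols_multiple(X, y):
    """OLS for y = X b + u by solving the normal equations (X'X) b = X'y.
    Each row of X must start with 1.0 for the intercept."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for c in range(k):  # Gaussian elimination with partial pivoting
        p = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(c + 1, k):
            f = A[r][c] / A[c][c]
            for j in range(c, k + 1):
                A[r][j] -= f * A[c][j]
    b = [0.0] * k
    for i in reversed(range(k)):  # back substitution
        b[i] = (A[i][k] - sum(A[i][j] * b[j] for j in range(i + 1, k))) / A[i][i]
    return b


def corr(a, b):
    """Sample correlation coefficient between two sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b))
    return cov / math.sqrt(sum((ai - ma) ** 2 for ai in a) * sum((bi - mb) ** 2 for bi in b))


# Simple regression on illustrative toy data (made up for this example).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
s = simple_fit_summary(x, y)
print(round(s["b0"], 6), round(s["b1"], 6), round(s["r2"], 6))  # 2.2 0.6 0.6

# Scaling: Y*10 multiplies both estimates by 10; X*10 divides only the slope by 10.
print(tuple(round(v, 6) for v in ols_simple(x, [10 * yi for yi in y])))  # (22.0, 6.0)
print(tuple(round(v, 6) for v in ols_simple([10 * xi for xi in x], y)))  # (2.2, 0.06)

# Multiple regression with two regressors (toy data, made up for this example).
x1, x2 = [1, 2, 3, 4, 5], [2, 1, 4, 3, 6]
y2 = [9.5, 7.6, 19.2, 17.9, 29.3]
X = [[1.0, a, c] for a, c in zip(x1, x2)]
b = ols_multiple(X, y2)
yhat2 = [sum(bj * xj for bj, xj in zip(b, row)) for row in X]
u2 = [yi - fi for yi, fi in zip(y2, yhat2)]
ybar2 = mean(y2)
r2_mlr = 1 - sum(u ** 2 for u in u2) / sum((yi - ybar2) ** 2 for yi in y2)
print(r2_mlr, corr(y2, yhat2) ** 2)  # the two R-squared expressions should agree
```

Running `ols_multiple` with only an intercept column and `x` reproduces the simple-regression estimates, and the MLR residuals in `u2` sum to (numerically) zero and are orthogonal to each regressor, matching the algebraic properties listed above.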