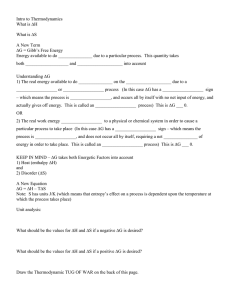

All Shook Up: Fluctuations, Maxwell's Demon and the
