
Design Automation for Streaming Systems

Eylon Caspi

University of California, Berkeley

12/2/05

Outline

♦ Streaming for Hardware

♦ From Programming Model to Hardware Model

♦ Synthesis Methodology for FPGA

• Streams, Queues, SFSM Logic

♦ Characterization of 7 Multimedia Apps

♦ Optimizations

• Pipelining, Placement, Queue Sizing, Decomposition


Large System Design Challenges

♦ Devices growing with Moore’s Law

• AMD Opteron dual core CPU: ~230M transistors

• Xilinx Virtex 4 / Altera Stratix-II FPGAs: ~200K LUTs

♦ Problems of DSM (deep submicron), large systems

• Growing interconnect delay, timing closure

- “Routing delays typically account for 45% to 65% of the total path delays” (Xilinx Constraints Guide)

• Slow place-and-route

• Design complexity

• Designs do not scale well on next gen. device; must redesign

♦ Same problems in FPGAs


Limitations of RTL

♦ RTL = Register Transfer Level

♦ Fully exposed timing behavior

• always @(posedge clk) ...

♦ Laborious, error prone

♦ Unpredictable interconnect delay

• How deep to pipeline?

• Redesign on next-gen device

♦ Undermines reuse

♦ Existing solutions

• Modular design

• Floorplanning

• Physical synthesis

• Hierarchical CAD

• Latency insensitive communication


Streams

♦ A better communication abstraction

♦ Streams connect modules

[Figure: modules (compute) and memory connected by streams]

• FIFO buffered channel (queue)

• Blocking read

• Timing independent (deterministic)

♦ Robust to communication delay

• Pipeline across long distances

• Robust to unknown delay

- Post-placement pipelining

- Alternate transport (packet switched NOC)

♦ Flexibly timed module interfaces

• Robust to module optimization (pipeline, reschedule, etc.)

♦ Enhances modular design + reuse


Streaming Applications

♦ Persistent compute structure (infrequent changes)

♦ Large data sets, mostly sequential access

♦ Limited feedback

♦ Implement with deep, system level pipelining

♦ E.g. DSP, multimedia

♦ JPEG Encoder:


Ad Hoc Streaming

♦ Every module needs streaming flow control

• Block if inputs not available, output not ready to receive

♦ Every stream needs queueing

• Pipeline to match interconnect delay

• Queue to absorb delay mismatch, dynamic rates

♦ Manual implementation, in HDL

• Laborious (flow control, queues)

• Error prone (deadlock if protocol is violated or a queue is too small)

• No automation (pipeline depth, queue choice / width / depth)

♦ Interconnect / queue IP (e.g. OCP / Sonics Bus)

• Still no automation


Systematic Streaming

♦ Strong stream semantics: Process Networks

• Stream = FIFO channel with (flavor of) blocking read

• E.g. Kahn Process Networks, Dataflow Process Networks (E.A.Lee)

♦ Streams as programming primitive

• Language support hides flow control

♦ Compiler support

• Compiler generated flow control

• Compiler controlled pipelining, queue depth, queue impl.

• Compiler optimizations (e.g. module merging, partitioning)

♦ Benefits

• Easy, correct, high performance

• Portable

• Paging / Virtualization is a logical extension (Automatic page partitioning)


Outline

♦ Streaming for Hardware

♦ From Programming Model to Hardware Model

♦ Synthesis Methodology for FPGA

• Streams, Queues, SFSM Logic

♦ Characterization of 7 Multimedia Apps

♦ Optimizations

• Pipelining, Placement, Queue Sizing, Decomposition


SCORE Model

Stream Computations Organized for Reconfigurable Execution

♦ Application = Graph of stream-connected operators

♦ Operator = Process with local state

♦ Stream = FIFO channel, unbounded capacity, blocking read

♦ Segment = Memory, accessed via streams

♦ Dynamics:

• Dynamic I/O rates

• Dynamic graph construction (omitted in this work)

[Figure: graph of operators (SFSMs) and a memory segment connected by streams]

SCORE Programming Model: TDF

♦ TDF = behavioral language for

• SFSM Operators (Streaming Extended Finite State Machine)

• Static operator graphs

♦ State machine for

• Sequencing, branching

• Firing control

♦ Firing semantics

• In state X, wait for X’s inputs, then evaluate X’s action

    state foo (i, j):
        o = i + j;
        goto bar;
    }

[Figure: operator with input streams i, j and output stream o]
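The firing rule above (in state X, wait for X's inputs, then evaluate X's action) can be sketched as a small interpreter. This is only an illustrative model, not tdfc output: the `run_sfsm` helper, the dictionary encoding of states, and the second state `bar` are assumptions made for the example.

```python
from collections import deque


def run_sfsm(states, start, inputs, max_firings=100):
    """Fire an SFSM until its current state cannot fire.

    states maps a state name to (input_names, action); action takes the
    consumed tokens (a dict) and returns (output_tokens, next_state).
    """
    state, outputs = start, []
    for _ in range(max_firings):
        needed, action = states[state]
        # Blocking read: a state fires only when every input it names has a token.
        if any(not inputs[name] for name in needed):
            break
        tokens = {name: inputs[name].popleft() for name in needed}
        emitted, state = action(tokens)
        outputs.extend(emitted)
    return state, outputs


# Hypothetical two-state operator modeled on the slide's `foo`, plus an assumed `bar`.
states = {
    "foo": (("i", "j"), lambda t: ([t["i"] + t["j"]], "bar")),
    "bar": (("i",), lambda t: ([t["i"]], "foo")),
}
inputs = {"i": deque([1, 2, 3]), "j": deque([10, 20])}
final_state, outputs = run_sfsm(states, "foo", inputs)
print(final_state, outputs)
```

The operator stalls in `bar` once stream `i` runs dry, which is the blocking-read behavior that keeps results independent of token arrival timing.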

SCORE / TDF Process Networks

♦ A process from M inputs to N outputs, unified stream type S (i.e. $S^M \to S^N$)

♦ SFSM = $(\Sigma, \sigma_0, \sigma, R, f_{NS}, f_O)$

• $\Sigma$ = Set of states

• $\sigma_0 \in \Sigma$ = Initial state

• $\sigma \in \Sigma$ = Present state

• $R \subseteq (\Sigma \times S^M)$ = Set of firing rules

• $f_{NS} : R \to \Sigma$ = Next state function

• $f_O : R \to S^N$ = Output function

♦ Similar to dataflow process networks [Lee+Parks, IEEE May ‘95], but with stateful actors

Related Streaming Models

♦ Streaming Models

• Kahn PN, DFPN, BDF, SDF, CSDF, HDF, StreamsC, YAPI, Catapult C, SHIM

♦ Streaming Platforms

• Pleiades, Philips VSP, Imagine, TRIPS

♦ How do we differ?

• Stateful processes

• Deterministic

• Dynamic dataflow rates (FSM nodes)

• Direct synthesis to hardware

• Bounded Buffers


Streaming Platforms

♦ FPGA (this work)

♦ Paged FPGA

• Page = fixed size partition, connected by streams

• Stylized page-to-page interconnect

• Hierarchical PAR

♦ Paged, Virtual FPGA (SCORE)

• Time shared pages

• Area abstraction (virtually large)

♦ Multiprocessor on Chip

♦ Heterogeneous


The Compilation Problem

Programming Model: TDF

• Communicating SFSMs

- unrestricted size, # IOs, timing

• Unbounded stream buffering

Execution Model: FPGA

• Single circuit / configuration

- one or more clocks

• Fixed size queues

Big semantic gap

[Figure: TDF graph (operators, streams, memory segments) → Compile → FPGA]

The Semantic Gap

♦ Semantic gap between TDF, HW

♦ Need to bind:

• Stream protocol

• Stream pipelining

• Queue implementation

• Queue depths

• SFSM synthesis style (behavioral synthesis)

• Memory allocation

• Primary I/O

♦ SCORE device binds some implementation decisions (custom hardware), raw FPGA does not

♦ Want to characterize cost of implementation decisions

Outline

♦ Streaming for Hardware

♦ From Programming Model to Hardware Model

♦ Synthesis Methodology for FPGA

• Streams, Queues, SFSM Logic

♦ Characterization of 7 Multimedia Apps

♦ Optimizations

• Pipelining, Placement, Queue Sizing, Decomposition


Compilation Tool Flow

Application (TDF)

→ tdfc

• Local optimization

• System optimization

• Queue sizing

• Pipeline extraction

• SFSM partitioning / merging

• Pipelining

• Generate flow ctl, streams, queues

→ Verilog

→ Synplify

• Behavioral Synthesis

• Retiming

→ EDIF (Unplaced LUTs, etc.)

→ Xilinx ISE

• Slice packing

• Place and route

→ Device Configuration Bits

Wire Protocol for Streams

♦ D = Data, V = Valid, B = Backpressure

♦ Synchronous transaction protocol

• Producer asserts V when D ready, Consumer deasserts B when ready

• Transaction commits if (¬B ∧ V) at clock edge

• Encode EOS E as extra D bit (out of band, easy to enqueue)

[Figure: Producer drives D (Data), E (EOS), V (Valid) to Consumer; Consumer drives B (Backpressure) back to Producer; timing diagram of Clk, D, V, B]
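The commit rule (¬B ∧ V at the clock edge) can be illustrated with a cycle-by-cycle sketch. The `commits` and `transfer` helpers and the readiness pattern are hypothetical; the producer is assumed to hold V high whenever it still has data.

```python
def commits(valid, backpressure):
    """A transaction commits at a clock edge iff V is asserted and B is not."""
    return valid and not backpressure


def transfer(producer_data, consumer_ready):
    """Cycle-by-cycle model of one stream.

    consumer_ready[k] says whether the consumer deasserts B on cycle k.
    Returns the tokens received and the tokens still held by the producer.
    """
    pending = list(producer_data)
    received = []
    for ready in consumer_ready:
        v = bool(pending)   # V: producer has data ready
        b = not ready       # B: consumer cannot accept this cycle
        if commits(v, b):
            received.append(pending.pop(0))
    return received, pending


received, pending = transfer([7, 8, 9], [True, False, False, True, True, True])
print(received, pending)
```

Tokens are neither dropped nor duplicated while B is high: the producer simply holds D and V until the edge where the transaction commits.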

Operator Firing

♦ In state X, fire if

• Inputs desired by X are ready (Valid, EOS)

• Outputs emitted by X are ready (Backpressure)

♦ Firing guard / control flow

• if (iv && !ie && !ob) begin ib=0; ov=1; ... end

[Figure: operator Op with input stream signals id,e / iv / ib and output stream signals od,e / ov / ob]

♦ Subtlety: master, slave

• Operator is slave

- To synchronize streams, (1) wait for flow control in, (2) fire / emit out

• Connecting two slaves would deadlock

• Need master (queue) between every pair of operators

SFSM Synthesis

♦ Implemented as Behavioral Verilog, using state ‘case’ in FSM and DP

♦ FSM handles firing control, branching

♦ FSM sends state to DP

♦ DP sends bool. flags to FSM

[Figure: SFSM with stream I/O (B, V, E, D), an FSM (control) and a datapath (data registers, datapath for State 1, datapath for State 2)]

FSM Module, Firing Control

TDF:

    foo (input  unsigned[16] x,
         input  unsigned[16] y,
         output unsigned[16] o)
    {
      state one (x, eos(y)) : o=x+1;
      ...
    }

Verilog FSM Module:

    module foo_fsm (clock, reset, x_e, x_v, x_b, y_e, y_v, y_b,
                    o_e, o_v, o_b, state, statecase);
      ...
      always @* begin
        // Default is stall
        x_b_=1; y_b_=1; o_e_=0; o_v_=0;
        state_reg_ = state_reg;
        statecase_ = statecase_stall;
        did_goto_ = 0;
        case (state_reg)
          state_one: begin
            // Firing condition(s) for state one
            if (x_v && !x_e && y_v && y_e && !o_b) begin
              statecase_ = statecase_1;
              // Stream flow ctl for state one
              x_b_=0; y_b_=0; o_v_=1; o_e_=0;
            end
            ...
      end // always @*
    endmodule // foo_fsm

Data-Path Module

TDF:

    foo (input  unsigned[16] x,
         input  unsigned[16] y,
         output unsigned[16] o)
    {
      state one (x, eos(y)) : o=x+1;
      ...
    }

Verilog Data-path Module:

    module foo_dp (clock, reset, x_d, y_d, o_d, state, statecase);
      ...
      always @* begin
        // Default is stall
        o_d_=16'bx;
        did_goto_ = 0;
        case (state)
          state_one: begin
            // Firing condition(s) for state one
            if (statecase_ == statecase_1) begin
              // Data-path for state one
              o_d_ = (x_d + 1'd1);
            end
            ...
      end // always @*
    endmodule // foo_dp

Stream Buffers (Queues)

♦ Systolic

• Cascade of depth-1 stages (or depth-N)

♦ Shift register

• Put: shift all entries

• Get: tail pointer

♦ Circular buffer

• Memory with head / tail pointers

Enabled Register Queue

♦ Systolic, depth-1 stage

♦ 1 state bit (empty/full) = V

♦ Shift in data unless:

• Full and downstream not ready to consume queued element

♦ Area: 1 FF per data bit

• On FPGA, 1 LUT cell per data bit

• Depth-1 (single stage) nearly free, since FFs pack with logic

♦ Speed: as fast as FF

• But combinationally connects producer + consumer via B

[Figure: enabled register stage with inputs iD, iV, oB, outputs oD, oV, iB, and register enable en]
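A behavioral sketch of one enabled-register stage may make the "shift in unless full and downstream not ready" rule concrete. The class and method names are illustrative assumptions, not part of the generated Verilog.

```python
class EnabledRegisterStage:
    """Depth-1 systolic queue stage: one valid bit plus one data register."""

    def __init__(self):
        self.valid = False   # the single state bit (empty/full) doubles as oV
        self.data = None     # oD

    def step(self, i_valid, i_data, o_backpressure):
        """Advance one clock edge; return the iB seen by the producer this cycle."""
        stalled = self.valid and o_backpressure   # full and downstream not ready
        if not stalled:                           # en: shift in (possibly a bubble)
            self.valid, self.data = i_valid, i_data
        return stalled                            # iB depends combinationally on oB


stage = EnabledRegisterStage()
ib0 = stage.step(True, 5, o_backpressure=True)    # empty: accepts 5 despite downstream stall
ib1 = stage.step(True, 6, o_backpressure=True)    # full and stalled: holds 5, back-pressures
held = stage.data
ib2 = stage.step(True, 6, o_backpressure=False)   # downstream takes 5, stage shifts in 6
print(ib0, ib1, held, ib2, stage.data)
```

Because `iB` is computed directly from `oB`, a cascade of such stages forms one combinational back-pressure chain, which is the speed caveat noted in the last bullet.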

Xilinx SRL16

♦ SRL16 = Shift register of depth 16 in one 4-LUT cell

• Shift register of arbitrary width: parallel SRL16, arbitrary depth: cascade SRL16

♦ Improve queue density by 16x

[Figure: LUT cell in 4-LUT mode vs. SRL16 mode]

Shift Register Queue

♦ State: empty bit + capacity counter

♦ Data stored in shift register

• In at position 0

• Out at position Address

♦ Address = number of stored elements minus 1

♦ Synplify infers SRL16E from Verilog array

• Parameterized depth, width

♦ Flow control

• ov = (State==Non-Empty)

• ib = (Address==Depth-1)

♦ Performance improvements

• Registered data out

• Registered flow control

• Specialized, pre-computed fullness

[Figure: shift register queue with inputs iB, iV, iD,E and outputs oB, oV, oD,E; FSM with states Empty / NonEmpty; Address counter (0, +1, -1) with comparators "=Depth-1" (full) and "=0" (zero); shift register enable en]
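The addressing scheme (write at position 0, read at position Address) can be checked with a small behavioral model. This is a sketch under assumptions: the class and field names are invented, and back-pressure is taken to be asserted when the queue is full, i.e. non-empty with Address equal to Depth-1.

```python
class ShiftRegisterQueue:
    """Behavioral model of a shift-register queue with an output address."""

    def __init__(self, depth):
        self.depth = depth
        self.regs = [None] * depth   # position 0 holds the newest element
        self.empty = True
        self.address = 0             # stored elements minus 1 (when non-empty)

    @property
    def ov(self):
        return not self.empty

    @property
    def ib(self):
        # Assumed polarity: back-pressure when full.
        return (not self.empty) and self.address == self.depth - 1

    def step(self, iv, i_d, ob):
        """One clock edge; returns the element handed downstream, if any."""
        put = iv and not self.ib
        get = self.ov and not ob
        out = self.regs[self.address] if get else None
        if put:
            self.regs = [i_d] + self.regs[:-1]   # shift all entries
        if put and not get:
            if self.empty:
                self.empty = False
            else:
                self.address += 1
        elif get and not put:
            if self.address == 0:
                self.empty = True
            else:
                self.address -= 1
        return out


q = ShiftRegisterQueue(depth=2)
q.step(True, "a", ob=True)
q.step(True, "b", ob=True)
full = q.ib
first = q.step(False, None, ob=False)
second = q.step(False, None, ob=False)
print(full, first, second, q.empty)
```

On a simultaneous put and get the address is unchanged: the shift moves the next-oldest element into the slot that was just read.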

SRL Queue with Registered Data Out

♦ Registered data out

• od (clock-to-Q delay)

• Non-retimable

♦ Data output register extends shift register

♦ Bypass shift register when queue empty

♦ 3 States (Empty, One, More)

♦ Address = number of stored elements minus 2

♦ Flow control

• ov = !(State==Empty)

• ib = (Address==Depth-2)

[Figure: SRL queue with a Data Out register after the shift register; FSM with states Empty / One / More; Address counter (0, +1, -1) with comparators "=Depth-2" (full) and "=0" (zero)]

SRL Queue with Registered Flow Ctl.

♦ Registered flow ctl.

• ov (clock-to-Q delay)

• ib (clock-to-Q delay)

• Non-retimable

♦ Flow control

• ov_next = !(State_next==Empty)

• ib_next = (Address_next==Depth-2)

♦ Based on precomputed fullness

• full_next = (Address_next==Depth-2)

[Figure: SRL queue with registered full / zero flags; FSM with states Empty / One / More; Address counter (0, +1, -1); Data Out register]

SRL Queue with Specialized, Pre-Computed Fullness

♦ Speed up critical full pre-computation by special-casing full_next for each state

♦ Flow control

• ov_next = !(State_next==Empty)

• ib_next = full_next

♦ zero pre-computation is less critical

♦ Result

• >200MHz unless very large (e.g. 128 x 128)

• All output delays are clock-to-Q

• Area ≈ 3 x (SRL16E area)

[Figure: SRL queue with per-state full pre-computation (comparator "=Depth-3"), zero comparator "=0", FSM with states Empty / One / More, Data Out register]

SRL Queue Speed

[Figure: plot of SRL queue speed]

SRL Queue Area

[Figure: plot of SRL queue area]

Page Synthesis

♦ Page = Cluster of Operator(s) + Queues

♦ SFSMs

• One or more per page

• Further decomposed into FSM, data-path

♦ Page Input Queues

• Deep

• Drain pipelined page-to-page streams before reconfiguration

♦ In-page Queues

• Shallow

♦ Separately Synthesizable Modules

• Separate characterization

• Consider custom resources

[Figure: page containing page input queue(s), Op 1 (FSM + datapath), an in-page queue, and Op 2 (FSM + datapath)]

Page Synthesis

♦ Module Hierarchy

• Individual SFSMs (combinational cores)

• Local / output queues

• Operators and local / output queues

• Input queues

• Page

[Figure: page containing page input queue(s), Op 1 (FSM + datapath), an in-page queue, and Op 2 (FSM + datapath)]

Outline

♦ Streaming for Hardware

♦ From Programming Model to Hardware Model

♦ Synthesis Methodology for FPGA

• Streams, Queues, SFSM Logic

♦ Characterization of 7 Multimedia Apps

♦ Optimizations

• Pipelining, Placement, Queue Sizing, Decomposition


Tool Flow, Revisited

♦ Separate compilation for application, SFSMs

• Page

• SFSM

• Datapath

• FSM

Flow: Application → tdfc → Verilog → Synplify → EDIF (Unplaced LUTs, etc.) → Xilinx ISE → Device Configuration Bits

Tool Options

♦ tdfc

• Identical queuing for every stream (SRL16 based, depth 16)

• I/O boundary regs (for Xilinx static timing analysis)

♦ Synplify

• Synplify 8.0

• Target 200MHz

• Optimize: FSM, retiming, pipelining

• Retain monolithic FSM encodings

♦ Xilinx ISE

• ISE 6.3i

• Constrain to minimum square area, at least max slice packing + 20%, expand if fail PAR

• Device: XC2VP70 -7

PAR Flow for Minimum Area

♦ EDIF netlist from Synplify

♦ Constraints file

• Page area

• Target Period

♦ ngdbuild:

• Convert netlist EDIF → NGD

♦ map:

• Pack LUTs, MUXes, etc. into slices

♦ trce: (pre-PAR)

• Static timing analysis, logic only

♦ par:

• Place and route

♦ trce: (post-PAR)

• Static timing analysis

[Figure: flowchart EDIF + Constraints → ngdbuild → map → trce → par → trce, with "Ok?" checks that loop back with a new target (packed timing, packed slices, or 1 extra row/col)]

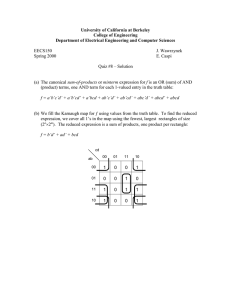

SCORE Applications

♦ 7 Multimedia Applications / 279 Operators

• MPEG, JPEG, Wavelet, IIR

• Written by Joe Yeh

• Mostly feed-forward streaming

• Constant consumption / production ratios, except compressors (ZLE, Huffman)

| Application | SFSMs | Segments | Streams In | Streams Local | Streams Out | Speed (MHz) | Area (4-LUT cells) | %Area FSMs | %Area Queues |
|---|---|---|---|---|---|---|---|---|---|
| IIR | 8 | 0 | 1 | 7 | 1 | 166 | 1,922 | 3.4% | 27.7% |
| JPEG Decode | 9 | 1 | 1 | 41 | 8 | 47 | 7,442 | 7.0% | 28.7% |
| JPEG Encode | 11 | 4 | 8 | 42 | 1 | 57 | 6,728 | 7.5% | 36.9% |
| MPEG Encode IP | 80 | 16 | 6 | 231 | 1 | 47 | 41,472 | 5.5% | 39.7% |
| MPEG Encode IPB | 114 | 17 | 3 | 313 | 1 | 50 | 65,772 | 5.2% | 40.5% |
| Wavelet Encode | 30 | 6 | 1 | 50 | 7 | 106 | 8,320 | 10.1% | 32.0% |
| Wavelet Decode | 27 | 6 | 7 | 49 | 1 | 109 | 8,712 | 8.5% | 29.6% |
| Total | 279 | 50 | 27 | 733 | 20 | | 140,368 | 5.9% | 38.3% |

Page Area

♦ 87% of SFSMs are smaller than 512 LUTs, by design

♦ FSMs small

♦ Datapaths dominate in most large pages

[Figure: page area plot; the largest pages are labeled DCT, IDCT]

Page Speed

♦ FSMs (flow control) are fast, never critical

♦ Queues are critical for 1/3 fastest pages

♦ Datapaths dominate

[Figure: page speed plot, annotated 43%, 47%, 10%]

Outline

♦ Streaming for Hardware

♦ From Programming Model to Hardware Model

♦ Synthesis Methodology for FPGA

• Streams, Queues, SFSM Logic

♦ Characterization of 7 Multimedia Apps

♦ Optimizations

• Pipelining, Placement, Queue Sizing, Decomposition


Improving Performance, Area

♦ Local (module) Optimization

• Traditional compiler optimization (const folding, CSE, etc.)

• Datapath pipelining / loop scheduling

• Granularity transforms (composition / decomposition)

♦ System Level Optimization

• Interconnect pipelining

• Shrink / remove queues

• Area-time transformations (rate matching, serialization, parallelization)

Pipelining With Streams

♦ Datapath pipelining

• Add registers at output (or input)

• Retime into place

♦ Harder in practice (FSM, cycles)

• Add registers at strategic locations

• Rewrite control

• Avoid violating communication protocol

♦ Stream pipelining

• Add registers on streams

• Retime into datapath

• Modify queues, not processes


Logic Pipelining

♦ Add L pipeline registers to D, V

♦ Retime backwards

• This pipelines feed-forward parts of producer’s data-path

♦ Stale flow control may overflow queue (by L)

♦ Modify queue to emit back-pressure when empty slots ≤ L

♦ No manual modification of processes

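[Figure: Producer sends D (Data) and V (Valid) through L pipeline registers, retimed back into the producer, to a Queue with L Reserve feeding the Consumer; B (Backpressure) returns from the queue to the producer]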

Logic Relaying + Retiming

♦ Break up deep logic in a process

♦ Relay through enabled register queue(s)

♦ Retime registers into adjacent process

• This pipelines feed-forward parts of process’s datapath

• Can retime into producer or consumer

♦ No manual modification of processes

[Figure: Producer connected through an enabled register queue stage (D, V, en, B), whose registers are retimed into the producer, to the Original Queue and then the Consumer]

Benefits, Limitations

♦ Benefits

• Simple to implement, relies only on retiming

• Sufficient for many cases, e.g. DCT, IDCT

♦ Limitations

• Feed-forward only (weaker than loop sched.)

• Resource sharing obfuscates retiming opportunities

♦ Extends to interconnect pipelining

• Do not retime into logic, register placement only

• Also pipeline B, modify queue


Pipelining Configuration

[Figure: SFSM surrounded by input side logic relaying (Li enabled registers), logic pipelining (Lp registers on D, V into a queue with reserve), and output side logic relaying (Lr enabled registers); registers are retimed into the SFSM]

♦ Pipeline depth parameters: Li+Lp+Lr

♦ Uniform pipelining: same depths for every stream

Speedup from Logic Pipelining

[Figure: speedup plotted against Enabled Regs (Lr) and D FFs (Lp)]

Expansion from Logic Pipelining

[Figure: expansion plotted against Enabled Regs (Lr) and D FFs (Lp)]

Some Things Are Better Left Unpipelined

♦ Page speedup: [Figure: plot of page speedup]

♦ Page expansion: [Figure: plot of page expansion]

♦ Initially fast pages should not be pipelined

Page Specific Logic Pipelining

♦ Separate pipelining of each SFSM

♦ Assumption: application speed = slowest page speed

• Critical Page

♦ Repeatedly improve slowest page until no further improvement is possible

♦ Page improvement heuristics

• Greedy Lr : Add one level of pipelining in 0+0+Lr

• Greedy Lp : Add one level of pipelining in 1+Lp+0

• Max : Pipeline to best page speed (brute force)

♦ Greedy heuristics may end early

• Non-monotonicity: adding a level of pipelining may slow page
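The greedy loop above can be sketched as follows. The `speed_of` callback stands in for a synthesize-and-time run of one page at a given pipelining depth; it, the page names, and the speed table are hypothetical values chosen to show the early stop.

```python
def pipeline_pages(speed_of, pages, max_depth=8):
    """Greedy page-specific pipelining.

    speed_of(page, depth) -> MHz is an assumed characterization callback.
    Returns the pipelining depth chosen for each page.
    """
    depth = {p: 0 for p in pages}
    while True:
        # Application speed is set by the slowest (critical) page.
        slowest = min(pages, key=lambda p: speed_of(p, depth[p]))
        d = depth[slowest]
        if d >= max_depth or speed_of(slowest, d + 1) <= speed_of(slowest, d):
            return depth   # greedy stops at the first non-improving step
        depth[slowest] = d + 1


# Made-up speeds (MHz) per pipelining depth; "dct" is non-monotonic at depth 2.
table = {"dct": [80, 120, 110, 150], "huff": [100, 130, 135, 140]}


def speed(page, d):
    return table[page][d]


chosen = pipeline_pages(speed, ["dct", "huff"], max_depth=3)
print(chosen)
```

In this made-up table the loop stops with `dct` at depth 1 (120 MHz) because depth 2 is slower, even though depth 3 would reach 150 MHz. That is the non-monotonicity that the brute-force "Max" heuristic avoids.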

Speedup from Page Specific

[Figure: speedup plotted against Enabled Regs (Lr) and D FFs (Lp)]

Expansion from Page Specific

[Figure: expansion plotted against Enabled Regs (Lr) and D FFs (Lp)]

Interconnect Delay

♦ Critical routing delay grows with circuit size

• Routing delay for an application: avg. 45% - 56%

• Routing delay for its slowest page: avg. 40% - 50%

• Ratio (appl. to slowest page): avg. 0.99x - 1.34x

- Averaged over 7 apps / varies with logic pipelining

♦ Modular design helps

• Retain critical routing delay of page, not application

• Page-to-page delays (streams) can be pipelined


Interconnect Pipelining

♦ Add W pipeline registers to D, V, B

• Mobile registers for placer

• Not retimable

♦ Stale flow control may overflow queue (by 2W)

• Staleness = total delay on B-V feedback loop = 2W

♦ Modify downstream queue to emit back-pressure when empty slots ≤ 2W

[Figure: Producer and Consumer separated by a long distance; D (Data), V (Valid), and B (Backpressure) each pass through W pipeline registers; the consumer side has a Queue with 2W Reserve]
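The 2W reserve can be checked with a worst-case simulation: the producer sends whenever the stale B it sees is low, and the consumer never drains. This is a sketch under simplifying assumptions (one token per cycle, W registers on each of the forward and backward paths); `peak_occupancy` and its parameters are illustrative names.

```python
from collections import deque


def peak_occupancy(depth, w, reserve, cycles=100):
    """Return the peak number of tokens that arrive at a queue of `depth` slots.

    The queue asserts B when its empty slots are <= reserve. V travels through
    w registers to the queue, and B travels through w registers back.
    """
    forward = deque([False] * w)    # V bits in flight, producer -> queue
    backward = deque([False] * w)   # B bits in flight, queue -> producer
    occupancy = peak = 0
    for _ in range(cycles):
        backward.append((depth - occupancy) <= reserve)   # queue emits B
        b_seen = backward.popleft()                       # producer sees B, w cycles old
        forward.append(not b_seen)                        # producer fires iff B is low
        if forward.popleft():                             # a token reaches the queue
            occupancy += 1
        peak = max(peak, occupancy)
    return peak


print(peak_occupancy(depth=8, w=2, reserve=4))   # reserve = 2W
print(peak_occupancy(depth=8, w=2, reserve=0))   # no reserve
```

With `reserve=2*w` the peak equals the queue depth, so nothing is lost. With no reserve, 2W extra tokens arrive after the queue is already full. Setting `w=0` models a direct connection, where no reserve is needed.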

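Speedup from Interconnect Pipelining

[Figure: plot of speedup from interconnect pipelining]

Speedup from Interconnect Pipelining, No Area Constraint

[Figure: plot of speedup from interconnect pipelining, no area constraint]

Expansion from Interconnect Pipelining, No Area Constraint

[Figure: plot of expansion from interconnect pipelining, no area constraint]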

Interconnect Register Allocation

♦ Commercial FPGAs / tool flows

• No dedicated interconnect registers

• Allocation: add to netlist, slice pack, place-and-route

• If pack registers with logic: limited register mobility

• If pack registers alone: area overhead

♦ Better: Post-placement register allocation

• Weaver et al., “Post-Placement C-Slow Retiming for the Xilinx Virtex FPGA,” FPGA 2003

• Allocation: PAR, c-slow, retime, scavenge registers, reroute

• No area overhead (scavenge registers from existing placement)

• Better performance, since routing delay is known

• Modification for streaming:

- PAR, pipeline, retime, scavenge registers, reroute, modify queue depths (configuration specialization)

Throughput Modeling

♦ Pipelining feedback loops may reduce

throughput (tokens per clock period)

• Which loops / streams are critical?

♦ Throughput model for PN

• Feedback cycle C with M tokens, N pipe delays, has token period: $T_C = N/M$

• Overall token period: $T = \max_C \{T_C\}$

• Available slack: $\mathrm{CycleSlack}_C = (T - T_C)$

• Generalize to multi-rate, dynamic rate by unfolding equivalent single-rate PN

[Figure: example process network with two feedback cycles, $T_{C1} = 3$ and $T_{C2} = 2$]
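A small worked example of the throughput model, taking the token period of a cycle to be its pipeline delays divided by its tokens (clock periods per token). That reading is an assumption about what the slide intends, and the cycle names and counts below are hypothetical.

```python
from fractions import Fraction


def token_periods(cycles):
    """cycles maps a cycle name to (tokens M, pipeline delays N)."""
    return {name: Fraction(n, m) for name, (m, n) in cycles.items()}


def cycle_slacks(cycles):
    """Return the overall token period T and the slack T - T_C of each cycle."""
    periods = token_periods(cycles)
    t = max(periods.values())   # the slowest feedback cycle sets the period
    return t, {name: t - tc for name, tc in periods.items()}


# Hypothetical network: one token on each cycle, 3 and 2 pipe delays.
cycles = {"C1": (1, 3), "C2": (1, 2)}
t, slack = cycle_slacks(cycles)
print(t, slack)
```

Here cycle C1 is critical (zero slack). Under this model C2 could absorb one more pipeline delay without lowering throughput.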

Throughput Aware Optimizations

♦ Throughput aware placement

• Adapt [Singh+Brown, FPGA 2002]

• Stream slack: $T_e = \max_{C \text{ s.t. } e \in C} \{T_C\}$

• Stream net criticality: $\mathrm{crit} = 1 - ((T - T_e) / T)$

♦ Throughput aware pipelining

• Pipeline stream w/o exceeding slack

• Pipeline module s.t. depth does not exceed any output stream slack

♦ Pipeline balancing (by retiming)

♦ Process Serialization

• Serial arithmetic for process with low throughput, high slack

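The criticality formula can be sketched directly. The function name, the stream names, and the cycle membership below are invented for illustration; the token periods reuse the 3 and 2 of the earlier example figure.

```python
def stream_criticality(cycle_periods, cycle_streams):
    """Compute crit = 1 - ((T - Te) / T) for every stream on some cycle.

    cycle_periods: cycle name -> token period T_C
    cycle_streams: cycle name -> streams lying on that cycle
    """
    t = max(cycle_periods.values())
    te = {}
    for cycle, streams in cycle_streams.items():
        for e in streams:
            # Te is the largest token period among the cycles through stream e.
            te[e] = max(te.get(e, 0), cycle_periods[cycle])
    return {e: 1 - (t - te_e) / t for e, te_e in te.items()}


crit = stream_criticality(
    {"C1": 3, "C2": 2},
    {"C1": ["a", "b"], "C2": ["b", "c"]},
)
print(crit)
```

Streams `a` and `b` lie on the critical cycle and get criticality 1. Stream `c` lies only on the faster cycle, so a throughput aware placer could afford to give it a longer route.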

Stream Buffer Sizing

♦ Fixed size buffers in hardware

• For minimum area (want smallest feasible queue)

• For performance (want deep enough to avoid stalls from producer-consumer timing mismatch)

♦ Semantic gap

• Buffers are unbounded in TDF, bounded in HW

• Small buffer may create artificial deadlock (bufferlock)

• Theorem: memory bound is undecidable for a Turing complete process network

• In practice, our buffering requirements are small

[Figure: two producer / consumer pairs exchanging streams x and y, one needing bounded buffering and one needing unbounded buffering]

Dealing with Undecidability

♦ Handle unbounded streams

• Buffer expansion [Parks ‘95]

- Detect bufferlock, expand buffers

• Hardware implementation

- Buffer expansion = rewire to another queue

- Storage in off-chip memory or queue bank

♦ Guarantee depth bound for some cases

• User depth annotation

• Analysis

- Identify compatible SFSMs with balanced schedules

♦ Detect bufferlock and fail
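A software sketch of "detect bufferlock, expand buffers" in the style of Parks' bounded scheduling. Everything here is illustrative: processes are modeled as Python generators that yield `("put", channel, value)` or `("get", channel)`, and on a deadlock with a blocked writer the smallest full buffer grows by one slot.

```python
from collections import deque


def run_with_expansion(processes, capacities, max_steps=10_000):
    """Run generator processes over bounded FIFOs, growing buffers on bufferlock.

    capacities maps channel -> initial bound and is updated in place.
    """
    queues = {ch: deque() for ch in capacities}
    pending = [[p, next(p, None)] for p in processes]   # None marks a finished process
    for _ in range(max_steps):
        progressed = False
        for slot in pending:
            proc, op = slot
            if op is None:
                continue
            if op[0] == "put" and len(queues[op[1]]) < capacities[op[1]]:
                queues[op[1]].append(op[2])
                slot[1] = next(proc, None)
                progressed = True
            elif op[0] == "get" and queues[op[1]]:
                value = queues[op[1]].popleft()
                try:
                    slot[1] = proc.send(value)   # blocking read returns the token
                except StopIteration:
                    slot[1] = None
                progressed = True
        if progressed:
            continue
        blocked_puts = [op[1] for _, op in pending if op and op[0] == "put"]
        if not blocked_puts:
            break   # all finished, or a true (not artificial) deadlock
        smallest = min(blocked_puts, key=lambda ch: capacities[ch])
        capacities[smallest] += 1   # bufferlock: expand the smallest full buffer
    return capacities


def producer():
    for k in range(3):
        yield ("put", "x", k)
    yield ("put", "y", "done")


def consumer(received):
    received.append((yield ("get", "y")))
    for _ in range(3):
        received.append((yield ("get", "x")))


received = []
caps = run_with_expansion([producer(), consumer(received)], {"x": 1, "y": 1})
print(caps, received)
```

The consumer insists on reading `y` first, so stream `x` must hold three tokens before the network can proceed. The loop discovers that bound by expanding `x` twice.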

Interface Automata

[de Alfaro + Henzinger, Symp. Found. SW Eng. (FSE) 2001]

♦ A finite state machine that transitions on I/O actions

• Not input-enabled (not every I/O on every cycle)

♦ $G = (V, E, A_i, A_o, A_h, V_{start})$

• $A_i$ = input actions, written x? (in CSP notation)

• $A_o$ = output actions, written y! (in CSP notation)

• $A_h$ = internal actions, written z; (in CSP notation)

• $E \subset V \times (A_i \cup A_o \cup A_h) \times V$ (transition on action)

♦ Execution trace = (v, a, v, a, …)

[Figure: interface automaton for a select operator with inputs s, t, f and output o; states S, S’, T, T’, F, F’; actions s?, t?, f?, o!, st;, sf; (non-deterministic branching)]

Automata Composition

♦ Composition ~ product FSM with synchronization (rendezvous) on common actions

Composition edges:

(i) step A on unshared action

(ii) step B on unshared action

(iii) step both on shared action

Compatible Composition → Bounded Memory

[Figure: automaton A (states A, A’; actions x?, y!) and automaton B (states B, B’; actions y?, z!) connected by streams x, y, z; their direct product and their automata composition over states AB, A’B, AB’, A’B’]

Stream Buffer Bounds Analysis

♦ Given a process network, find minimum buffer sizes to avoid bufferlock

♦ Buffer (queue) is also automaton

♦ Symbolic Parks’ algorithm

• Compose network using arbitrary buffer sizes

• If deadlock, try larger sizes

♦ Practical considerations: avoiding state explosion

• Multi-action automata

• Know which streams to expand first

• Compose pairwise in clever order

- Composition is associative

• Cull states reaching deadlock

• Partition system

[Figure: network of processes A and B with streams i, x, o, y, shown without and with a queue Q on stream x; queue automaton with states 0, 1, 2 and actions i?, o!, i?o!]

SFSM Decomposition (Partitioning)

♦ Why decompose

• To improve locality

• To fit into custom page resources

♦ Decomposition by state clustering

• 1 state (i.e. 1 cluster) active at a time

♦ Cluster states to contain transitions

• Fast local transitions, slow external trans.

• Formulation: minimize cut of transition probability under area, I/O constraints

♦ Similar to:

• VLIW trace scheduling [Fisher ‘81]

• FSM decomp. for low power [Benini/DeMicheli ISCAS ‘98]

• GarpCC HW/SW partitioning [Callahan ‘00]

• VM/cache code placement

[Figure: clustered states, showing state flow and data flow]

Early SFSM Decomposition Results

♦ Approach 1: Balanced, multi-way, min-cut

• Modified Wong FBB [Yang+Wong, ACM ‘94]

• Edge weight is mix: c*(transition probability) + (1-c)*(wire bits)

• Poor at simultaneous I/O constraint + cut optimization

♦ Approach 2: Spectral order + Extent cover

• Spectral ordering clusters connected components in 1D

- Minimize squared weighted distance, weight is mix (as above)

• Then choose area + I/O feasible extents [start, end] using dynamic programming

• Effective for partitioning to custom page resources

♦ Under 2% external transitions

• Amdahl’s law: few slow transitions ⇒ small performance loss

• Achievable with either approach


Summary

♦ Streaming addresses large system design challenges

• Growing interconnect delay

• Flexibly timed module interfaces

• Design complexity

• Reuse

♦ Methodology to compile SCORE applications to Virtex-II Pro

• Language + compiler support for streaming

♦ Characterized 7 applications on Virtex-II Pro

• Queue area ~38%

• Flow control FSM area ~6%

- Improve by merging SFSMs, eliminating queues

♦ Stream pipelining

• For logic

• For interconnect

♦ Stream based optimizations

• Pipelining

• Queue sizing

• Placement

• Serialization

• Module Merging

• Partitioning

Supplemental Material


TDF ∈ Dataflow Process Networks

♦ Dataflow Process Networks [Lee+Parks, IEEE May ‘95]

• Process enabled by set of firing rules: $R = \{ r_1, r_2, \ldots, r_K \}$

• Firing rule = set of input patterns: $r_i = ( r_{i,1}, r_{i,2}, \ldots, r_{i,M} )$

♦ DF process for a TDF operator:

• Feedback arc for state

• Firing rule(s) per state

- Patterns match state + input presence

- E.g. for state $\sigma$: $r_\sigma = ( [\sigma], r_{\sigma,1}, r_{\sigma,2}, \ldots )$

- Patterns: $r_{\sigma,j} = [*]$ if input j is in input signature of state $\sigma$; $r_{\sigma,j} = \bot$ if input j is not in input signature of state $\sigma$

• Single firing rule per state = DFPN sequential firing rules

• Multiple firing rules per state translate the same way, with restrictions to retain determinism

[Figure: dataflow process with a feedback arc carrying its state]

SFSM Partitioning Transform

♦ Only 1 partition active at a time

• Transform to activate via streams

♦ New state in each partition: “wait”

• Used when not active

• Waits for activation from other partition(s)

• Has one input signature (firing rule) per activator

♦ Firing rules are not sequential, but determinism guaranteed

• Only 1 possible activator

♦ Activation streams from given source to given dest. partitions can be merged + binary-encoded

[Figure: SFSM with states A, B, C, D split into partitions {A,B} and {C,D}, each with an added Wait state; activation streams AB and CD connect the partitions]

Virtual Paged Hardware (SCORE)

♦ Compute model has unbounded resources

• Programmer does not target a particular device size

♦ Paging

• “Compute pages” swapped in/out (like virtual memory)

• Page context = thread (FSM to block on stream access)

♦ Efficient virtualization

• Amortize reconfiguration cost over an entire input buffer

• Requires “working sets” of tightly-communicating pages to fit on device

[Figure: compute pages (Transform, Quantize, RLE, Encode) connected by buffers]