Offset-free Reference Tracking for PC 2007

advertisement

Proceedings of the

46th IEEE Conference on Decision and Control

New Orleans, LA, USA, Dec. 12-14, 2007

FrB03.5

Offset-Free Reference Tracking for Predictive Controllers

Urban Maeder, and Manfred Morari

Automatic Control Laboratory, ETH Zurich, Physikstrasse 3, ETL K13.1, CH – 8092 Zurich, Switzerland

maeder | morari @control.ee.ethz.ch

Abstract— We discuss the offset-free reference tracking problem for linear constrained systems in the presence of disturbances and plant-model mismatch. Introducing disturbance

models, we show how to construct a controller / observer

combination, such that zero offset is achieved. Contrary to

other approaches, the plant model is augmented only by as

many disturbance states as there are tracked variables, thus

yielding the controller with minimal complexity according to

the internal model principle.

when explicit Model Predictive Control is used [2], where

the complexity increases quickly with the number of state

variables.

II. P RELIMINARIES

Consider the feedback system in Figure 1. Let the nominal

model be defined by

x(k + 1) = Ax(k) + Bu(k)

y(k) = Cx(k)

I. I NTRODUCTION

In Model Predictive Control (MPC), a model of the plant

is used to predict the future evolution of the system state

[6], [7]. At each time step an optimization problem is solved

over the sequence of future input moves, possibly subject to

constraints. The first optimal control move obtained by the

optimization is then applied to the plant. At the next time

step, the prediction horizon of the optimization problem is

shifted forward in time and the procedure is repeated.

By the internal model principle [4], there have to be as

many integrators in the controller as there are variables for

offset-free control. Most traditional methods for LQG-style

state-feedback controllers suggest adding the integral of the

tracking error as additional state variable [3], [5]. Such a

scheme is inherently offset-free, but limited in the choice of

the disturbance model. In a constrained setup, these methods

suffer from windup effects [1], [8].

While MPC directly considers constraints, applying these

methods to MPC has some disadvantages. For instance,

the choice of an invariant terminal set which guarantees

feasibility is not clear. Some traditional methods do not

allow freedom in designing the disturbance model, since they

only consider the tracking error. For MPC, a two-degreeof-freedom method, where reference and output signals are

considered independently, is therefore preferable.

For these reasons, interest in disturbance model and observer based approaches has increased [10], [9]. Both teams

of authors independently derived conditions for offset-free

reference tracking. They both prove that if the plant is

augmented with as many disturbance states as there are

measured variables, there will be no offset. In the case where

only a subset of the measured variables is actually to be

controlled with zero offset, this method yields too complex

models. By the internal model principle, it should be possible

to add only as many disturbance states as there are outputs

to control with zero offset. Adding more variables than

necessary will lead to a unnecessarily complex optimization

problem in MPC. This effect is particularly bothersome

1-4244-1498-9/07/$25.00 ©2007 IEEE.

(1)

where x(k) ∈ Rnx , u(k) ∈ Rnu and y(k) ∈ Rny . We assume

(A, B) stabilizable and (C, A) detectable with C full row

rank. The controlled variables are defined as

z(k) = Hy(k)

(2)

with z(k) ∈ Rnz , H full row rank and nu ≥ nz . Input and

states are constrained

x(k) ∈ X ,

u(k) ∈ U,

(3)

where Y and U are convex sets with the origin in their

interior. The goal is to asymptotically eliminate the control

error given a constant reference signal r∞ , that is

z(k) → r∞ ,

k → ∞,

(4)

in the presence of constant, non-decaying disturbances and

plant-model mismatch, assuming stability of the closed loop.

The receding-horizon optimal control problem with

quadratic performance cost is considered

∗

JN

(x(k)) :=

min

u0 ,...,uN −1

N

−1

X

(uTi Rui + xTi Qxi )

i=0

+ xTN QN xN

s. t. xi ∈ X, ui−1 ∈ U, ∀ i ∈ {1, . . . , N },

xN ∈ T ,

xi+1 = Axi + Bui ,

R ≻ 0, Q 0, QN 0.

(5a)

(5b)

(5c)

(5d)

(5e)

The optimization problem is defined by the system model

(A, B), the prediction horizon N , the cost matrices Q and R,

the terminal weight QN , the terminal set T and the current

state x0 .

The problem is repeatedly solved at every time step k for

x0 = x(k) the current the state vector. The optimal input

u(k) = u∗o is applied to the plant, the rest of the optimal

open-loop control sequence u∗1 . . . u∗N −1 is discarded. We

note that the resulting control law is linear in some region

5252

46th IEEE CDC, New Orleans, USA, Dec. 12-14, 2007

u

y

P

H

FrB03.5

Assume that both the system and controller converge to

steady-state and the reference signal is given by r(k) = rss .

If ∃E ∈ Rnx̄ ×nz and E full column rank such that

z

E T (Ak − I)

T

E Bk

E T Br

K

r

Fig. 1.

Control System Structure

containing the origin, where none of the constraints are

active.

The terminal weight and the terminal set can be chosen

such that the nominal closed-loop system is guaranteed to be

stable [6]. For example, if QN is the solution to the discrete

algebraic Riccati equation

0 = Q + AT QN A − QN

− (AT QN B)(B T QN B + R)−1 (B T QN A),

(6)

(7)

If T is such that it is invariant and feasible under this local

controller, then both closed-loop stability and feasibility hold.

For reference tracking, it is clear that zero offset can only

be achieved when the controller is locally unconstrained,

around the requested set point. Thus, we will assume there

exists a set T which contains the requested set point and

which is rendered invariant by the linear controller Kx . Also,

Kx must be feasible within T . The system state must enter

the set T , otherwise the reference cannot be feasibly attained.

In the following, it will be assumed the state enters T and

the local control law is linear. The analysis of offset can thus

be performed for the linear control law Kx .

The state vector is assumed not to be directly measurable;

Hence, a linear state observer is employed.

x̂(k + 1|k) = (A − LC)x̂(k|k − 1) + Bu(k) + Ly(k). (8)

The control signal is

u(k) = −Kx x̂(k|k − 1).

(9)

Introducing the variables x̄(k) = x̂(k|k−1), Ak = A−LC −

BK, Bk = L and Ck = −Kx , the controller dynamics are

defined by

x̄(k + 1) =

u(k) =

Ak x̄(k) + Bk y(k) + Br r(k)

Ck x̄(k) + Dr r(k)

(11a)

= H,

= −I,

(11b)

(11c)

then there is zero offset in the controlled variable z(k), i.e.

z(k) → zss = rss for k → ∞.

Proof. Let uss , yss , x̄ss and rss be the signals at steady-state

and the controller’s state vector, respectively. For the system

to be in steady-state, (Ak − I)x̄ss + Bk yss + Br rss = 0 must

hold. Left multiplying by E T yields E T Bk yss +E T Br rss =

0 and by (11b) and (11c) Hyss = rss and hence zss = rss .

It is observed that E spans the left eigenspace of the

integrator modes (λ = 1) of Ak . Hence, Ak must contain

at least nz integrator modes for E to exist. Equations (11b)

and (11c) impose conditions on the input directions of the

integrators of K in the signals y(k) and r(k) [11].

III. D ISTURBANCE M ODEL

then the local unconstrained control law is

u(k) = −Kx x(k),

Kx = (B T QN B + R)−1 (B T PN A).

= 0,

(10)

with x̄(k) ∈ Rn̄x , u(k) ∈ Rnu and y(k) ∈ Rny

We first state a fundamental, sufficient condition for zero

offset.

Theorem 1 (Zero Offset): Consider the feedback system

shown in Figure 1, let the controller K be given by (10).

To account for the disturbances and plant-model mismatch,

we augment the plant model by a disturbance with integrator

B

A Bd

, Bm =

Am =

, Cm = [C Cd ] , (12)

0 I

0

with Bd and Cd of appropriate dimension. This model

assumes the disturbance is constant over time. This is a

common assumption in practice, when offset-free control at

steady-state is needed. The choice of the matrices Bd and

Cd may be motivated by a real, physical disturbance acting

on the system, but they may also be chosen freely, as design

parameters. The augmented model (Cm , Am ) is assumed to

be detectable.

A. Equivalence of State- / Output Disturbance Models

In the following, the equivalence of state and output disturbance models will be established. A similarity transform

is introduced

I −T12

T =

.

(13)

0

I

Thus, the transformed system matrices are

A (I − A)T12 − Bd

−1

T Am T

=

0

I

Cm T −1

= [C

(14)

CT12 + Cd ] .

It is easy to see that any disturbance model with Cd 6= 0 and

Bd = 0 (output disturbance) can be transformed into a pure

state disturbance model by solving

CT12 = −Cd .

(15)

for T12 . Since C has full row rank, a solution always exists.

5253

46th IEEE CDC, New Orleans, USA, Dec. 12-14, 2007

Conversely, a state disturbance model (Bd 6= 0, Cd = 0)

can be transformed into a pure output disturbance model by

solving

(I − A)T12 = Bd

(16)

for T12 . A solution exists iff span(Bd ) ⊆ span(I − A),

which is trivially true for plants with no eigenvalues at 1.

For simplicity, we will assume Cd = 0 from now on.

Since output disturbance models can always be transformed

to state disturbance models by similarity transform, this

is completely general. The proofs and methods are also

straightforward to extend to the case Cd 6= 0.

IV. C ONTROLLER

In this section, we develop a general method for tracking

constant reference signals in the presence of constant disturbances. The approach is well known and essentially similar

to [9] and [10]. The idea is to first compute the steady-state

target xss (rss , dss ) and uss (rss , dss ) for a given disturbance

and reference, and then devise a stabilizing controller of the

form

u(k) = uss − k(x̂(k|k − 1) − xss ).

(17)

The target variables xss and uss are determined by solving

a steady-state equation

I −A

HC

−B

0

xss

uss

=

Bd

0

dss +

0

I

A solution exists for any rss , dss if

I − A −B

rank

= nx + nz .

HC

0

rss . (18)

(19)

Assume nu ≥ nz . Then, the rank condition (19) can be stated

equivalently in the frequency domain. Let

G(z) = HC(zI − A)−1 B.

=

=

Xd dss + Xr rss ,

Ud dss + Ur rss .

(21a)

(21b)

The MPC problem (5) is modified

∗

JN

(x(k)) :=

min

u0 ,...,uN −1

N

−1

X

((ui − uss )T R(ui − uss )

i=0

+ (xi − xss )T Q(xi − xss )

(22a)

T

+ (xN − xss ) QN (xN − xss )

s. t. xN ∈ T (xss , uss ),

(22b)

(5b), (5d), (5e).

(22c)

The terminal set T now depends on the target variables.

If (22) is feasible, then the optimal control law is linear in

x(k) in some region around the target. Let this unconstrained

controller be given by

u(k) = uss − Kx (x̂(k|k − 1) − xss ).

Introducing the feedback gain matrices

Kd = −Kx Xd − Ud ,

(23)

Kr = −Kx Xr − Ur ,

(24)

Equation (23) is rewritten as linear feedback law of the

ˆ

−

augmented state estimate x̄(k) = [x̂(k|k − 1)T d(k|k

T T

1) ] , where dss is replaced by the current estimate of

the disturbance. Similarly, rss is replaced by the current

reference value. The feedback law is

u(k) = −[Kx Kd ]x̄(k) − Kr r(k).

(25)

This shows the degrees of freedom of the control structure

in both the reference and control error.

V. E STIMATOR

A state estimator is constructed for the augmented model

(Am , Cm ). The estimator gain matrix is

Lx

.

(26)

L=

Ld

The controller dynamics can be written as

x̄(k + 1) =

u(k) =

Ak x̄(k) + Bk y(k) + Br r(k)

Ck x̄(k) + Dr r(k)

(27)

Inserting the augmented model description (12), the control

law (25) and the estimator gain (26) yields the controller

dynamics

A − BKx − Lx C Bd − BKd

,

Ak =

−Ld C

I

BKr

Lx

(28)

,

, Br =

Bk =

0

Ld

Ck = −[Kx Kd ],

Dr = −[Kr ].

A. Conditions

(20)

Then, (19) holds if and only if z = 1 is not a transmission

zero for G(z), i.e. the steady-state gain matrix G(1) has full

row rank [11]. Let the solution to (18) exist and be given by

xss

uss

FrB03.5

For brevity, the following notation is introduced

Φ = I − A + BKx ,

Ψ = Φ + Lx C.

(29)

Consider the nominal system (12) subject to the control

law (25). The steady-state gains are easily computed and

they are given by zss = HCΦ−1 (Bd − BKd )dss for the

disturbance and zss = HCΦ−1 BKr rss for the reference.

Nominal tracking (disturbances are measurable, no plantmodel mismatch) can thus be achieved by choosing Kd and

Kr correctly. For offset-free control, the estimator has to be

considered additionally.

In the following, conditions are given for the matrices

Kx , Kd , Kr , Lx and Ld such that (11a-c) hold for the

dynamic system (28). These results are mostly equivalent

to the conditions given in [9] and [10].

Lemma 1 (Conditions for zero offset): Consider the controller defined by (27) and (28). Assume the closed loop

reaches steady state, the reference r(k) = rss constant and

the following holds

ker(Ld ) ⊆ ker H(I + CΦ−1 Lx )

(30a)

HCΦ−1 BKr = I

(30b)

HCΦ−1 (Bd − BKd ) = 0.

(30c)

5254

46th IEEE CDC, New Orleans, USA, Dec. 12-14, 2007

Then, there is zero offset in the controlled variable, i.e.

z(k) → zss = rss .

Proof. Equation (30a) can be rewritten as ker(Ld C) ⊆

ker(HCΦ−1 Ψ). If it holds, there exist ∆ ∈ Rnz ×nd which

chooses a linear combination of the rows of Ld C such that

∆Ld C = HCΦ−1 Ψ.

(31)

Consider E T = [−HCΦ−1 ∆] as candidate left eigenspace

of Ak to the eigenvalue λ = 1. By using (30), we check if

the conditions of Theorem 1 hold.

i) Using (11a) yields E T (Ak − I) = HCΦ−1 Ψ −

∆Ld C − HCΦ−1 (Bd − BKd ) . The first element

is zero by using (31), the second element vanishes

by (30c), which proves the presence of the integrator

modes.

ii) Checking for the integrator’s input directions using

(11b), we check if E T Bk = H and E T Br = −H.

For the former, we get E T Bk = −HCΦ−1 Lx + ∆Ld ,

and (31)

−HCΦ−1 Lx C + ∆Ld C

−1

= HC

FrB03.5

the Hautus observability condition for the system (Am −

[LTx 0]T Cm , Ĉ) at λ = 1

Ĉ

0

rank I − A + Lx C −Bd < nx + nd ,

(35)

0

0

it is clear that detectability does not hold. Therefore, L̂d

cannot stabilize all nd integrator modes, nor can Ld if it is

rank deficient.

Lemma 3 (Estimator): Consider the controller defined by

(27) and (28). Assume A − Lx C is stable and nd = nz .

Consider the estimator gain

Lx + L̄x H̄

L̄ =

(36)

L̄d H̄

where H̄ = H(I + CΦ−1 Lx ). Assume L̄x and L̄d can be

chosen such that Am − L̄Cm stable. Then, (30a) holds for

the estimator gain L̄.

Proof. Inserting (36) into (31) yields ∆L¯d H̄C =

HCΦ−1 (I − A + BKx + (Lx + L̄x H̄)C). For the theorem

to hold, ∆ must exist. Substituting H̄ yields

−1

−HCΦ Lx C + HCΦ Ψ = HC

HC Φ−1 (−Lx C + Ψ) = HC

{z

}

|

∆L̄d H(I + CΦ−1 Lx )C

HCΦ−1 Ψ +

L̄x H(I + CΦ−1 Lx )C

I

For the reference direction, we immediately get

E T Br = −HCΦ−1 BKr = −I by (30b).

Remark. When Kd and Kr are computed as in (21) and

(24), it is easy to check that (30b) and (30c) hold. The

construction of the estimator gains Lx and Ld given Kx

such that (30a) also holds will be discussed next.

B. Properties

In the following, the problem of constructing the estimator

gains such that (30a) holds is discussed. In principle, given

Kx , and an Lx which stabilizes A−Lx C, the set of candidate

Ld is defined by

∆Ld = H(I + CΦ−1 Lx ).

=

(32)

In order to derive a construction method for Ld , we will

state some properties first.

Lemma 2 (Rank of Ld ): Consider the the linear system

defined by the matrices

A

Bd

, Cm = [C 0] ,

(33)

Am =

0 Ind ×nd

where A ∈ Rnx ×nx , Cm ∈ Rny ×nx and Bd ∈ Rnx ×nd . Assume (Am , Cm ) detectable. Consider the class of estimator

T

gains L = LTx LTd

such that Am − LCm is stable. Then

rank(Ld ) = nd .

(34)

Proof. Choose an arbitrary Lx such that A− Lx Cm is stable.

Suppose there exists a Ld such that rank(Ld ) < nd and

Am −LCm is stable. Since rank(Ld C) < nd , we can choose

Ĉ, L̂d such that L̂d Ĉ = Ld C and rank(Ĉ) < nd . Applying

∆L̄d HCΦ−1 Ψ =

∆L̄d

=

HCΦ−1 (I + L̄x HCΦ−1 )Ψ

I + HCΦ−1 L̄x .

From the last equation, it is immediately clear that ∆ exists,

since L̄d has full rank by Lemma 2.

C. Construction Method

The previous sections suggest a direct construction method

for L.

Algorithm 1: Consider the linear system as in (1) and

(12). Assume Cd = 0, (Cm , Am ) detectable and nd = nz .

Suppose Kd and Kr are known and (30c), (30b) hold.

1) Compute Lx such that A − Lx C is stable and

(H̄Cm , Ā) detectable, where H̄ = H(I + C(I − A +

BKx )−1 Lx ) and Ā = Am − [LTx 0]T Cm .

2) Compute L̄ such that Ā − L̄H̄Cm is stable.

3) The final estimator gain

Lx + L̄x H̄

L2 =

(37)

L̄d H̄

stabilizes Am −L2 Cm and - by Lemma 3 - (30a) holds.

A better way to construct the estimator gain is described

in the following, modified algorithm, which allows to move

the poles associated to the integrator modes independently

from the other modes.

Algorithm 2: 1) Compute Lx such that A − Lx C is

stable and (H̄Cm , Ā) detectable, where H̄ = H(I +

C(I − A + BKx )−1 Lx ) and Ā = Am − [LTx 0]T Cm .

2) Apply the linear transform

I −(I − A + Lx C)−1 Bd

T =

(38)

0

I

5255

46th IEEE CDC, New Orleans, USA, Dec. 12-14, 2007

which brings the system to block-diagonal form

A − Lx C 0

Āt = T ĀT −1 =

(39)

0

I

C̄t

= H̄Cm T −1

(40)

−1

= H̄ C C(I − A + Lx C) Bd . (41)

3) Compute L̄d such that

(I − L̄d H̄C(I − A + Lx C)−1 Bd )

(42)

is stable.

4) Recover the estimator gain for the original system

0

Lx

−1

(43)

+T

L2 =

L̄d H̄

0

Algorithm 2 does not move the eigenvalues already established by A − Lx C. Hence, the integrator eigenvalues can

be placed independently.

D. Summary

An algorithm was proposed for constructing the estimator

such that offset-free control is achieved. The approach uses

a minimal order disturbance model. For every controlled

variable, one disturbance state is added, whereas existing

methods suggest adding as many disturbance states as there

are measured variables. In MPC, this improvement leads to

simpler optimization problems and thus a better performance

of the optimization algorithm.

Assuming stabilizability and detectability of the system,

necessary conditions for the existence of a tracking controller

are that the plant has no transmission zero at 1, that is

I − A −B

rank

= nx + nz ,

(44)

HC

0

and that the chosen disturbance model is detectable. Algorithm 1 and 2 require detectability of (Ā, H̄C) for the

construction of the estimator. It is an open question whether

there always exists an estimator gain Lx for a given Kx

such that detectability holds. Given the local linear control

law Kx , the resulting estimator will remove offset of the

closed loop in the controlled variables. Algorithm 2 describes

a method to construct the estimator in two steps. The closedloop estimator poles for the disturbances can be moved

independently of the poles of A − Lx C.

VI. E XAMPLE

As an example, we consider the double integrator

1 1

0.5

A=

, B=

, C = I.

0 1

1

(46)

The actuator is constrained by

|u| ≤ 0.2.

The dynamics of the physical plant are slightly different and

given by

1

1

Ar =

.

(48)

−0.1 0.9

Furthermore, the system shall be subject to disturbances. The

disturbance model is given by

1

Bd =

.

(49)

.5

Solving the target problem for this disturbance model, we

get Xd = [0 −3/4]T , Ud = −1/2, Xr = [1 0]T and Ur = 0.

For the cost function, we choose

Q = I, R = I,

(47)

(50)

which yields the LQ feedback gains Kx = [0.435 1.03],

Kd = 1.271 and Kr = −0.435. Closed loop performance

is analyzed for two estimators, defined by the gain matrices

L1 and L2 . L1 is the standard Kalman filter gain, while L2

is computed employing Algorithm 2.

1.26 0.87

L1 = 0.29 0.68 ,

0.33 0.13

1.51 1.25

L2 = 0.38 0.83 .

0.47 0.37

(51)

It is easy to check that L1 does not satisfy (30a), while L2

does. To account for the input constraint, we set up the MPC

problem, slightly modifying (5)

∗

JN

(x(k)) :=

min

u0 ,...,uN −1

N

−1

X

(ui − ut )T R(ui − ut )

i=0

+ (xi − xt )T Q(xi − xt )

+ (xN − xt )T QN (xNp − xt )

s. t. |ui−1 | < 0.2, ∀ i ∈ {1, . . . , N },

xi+1 = Axi + Bui + Bd d0

(52)

xt = Xd d0 + Xr r0 , ut = Ud d0 + Ur r0 .

Qn is set to the solution to the DARE which was obtained

while computing Kx . To close the control loop, we set x0 =

ˆ

x̂(k|k − 1), d0 = d(k|k

− 1) and r0 = r(k) and solve (52)

for every time step.

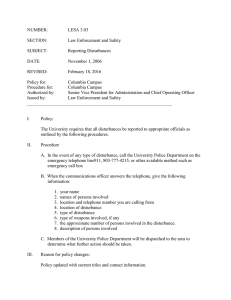

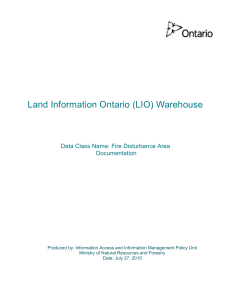

The results are plotted in Figures 2 and 3 for a prediction

horizon of N = 1. For the standard Kalman estimator gain

L1 , the closed loop shows offset. The second plot shows no

offset, but virtually the same performance. This suggests the

proposed method does fit nicely into the classical framework

of controller - observer design.

VII. C ONCLUSION

(45)

The goal is to track x1 , that is

H = [1 0].

FrB03.5

We have seen that robust reference tracking is not straightforward in Model Predictive Control. First we noted the

classic integral control schemes proposed in the literature

[3], [5] cannot be used for MPC straightforwardly. A twodegree-of-freedom approach employing a disturbance model

which may reflect knowledge of the physical structure of the

system is better suited.

5256

46th IEEE CDC, New Orleans, USA, Dec. 12-14, 2007

FrB03.5

x1(k)

2

x (k)

2

x (k)

2

1.5

1

x (k)

2

1.5

r(k)

1

1

0.5

0.5

0

r(k)

0

0

5

10

15

20

time

25

30

35

40

0

1

5

10

15

20

time

25

30

35

40

1

u(k)

u(k)

0.5

0.5

0

0

0

5

10

15

20

time

25

30

35

1

40

0

x (k)

5

10

15

20

time

25

30

35

x (k)

1

1

1

x (k)

x (k)

2

0

40

2

0

d(k)

−1

d(k)

−1

−2

0

5

10

15

20

time

25

30

35

1

−2

40

u(k)

0

5

10

15

20

time

25

30

35

40

u(k)

1

0.8

0.6

0.5

0.4

0.2

0

0

0

5

10

15

20

time

25

30

35

40

0

Fig. 2. Reference step (top plots) and disturbance steps (bottom plots) for

L1 estimator

5

10

15

20

time

25

30

35

40

Fig. 3. Reference step (top plots) and disturbance steps (bottom plots) for

L2 estimator

R EFERENCES

However, the disturbance modeling approach is difficult:

The integrators which are required for robustness do not appear automatically in the controller; they are tightly coupled

to the observer used. The solution proposed in the literature

was to add as many disturbance states to the model as there

are measured variables. This may lead to an unnecessary

increase in the dimension of the state space.

In this paper, we investigated the nature of the problem

further. We showed that the number of disturbance states can

in fact be reduced to the number of controlled variables. The

construction method for the observer gain starts with a linear

state-feedback controller and then constructs an observer

gain which yields zero offset. It is simple and based on

the standard state-space algorithms. By using a predictive

controller with a linear gain around the origin, the results

can be used directly for MPC. In the nominal case (no plant

- model mismatch), stability, feasibility and optimality of the

MPC apply.

It is clear from the construction method that the integrators

only appear for a matching controller / estimator pair. As

soon as the MPC is not unconstrained and the feedback law

changes, the integrators disappear. This avoids windup of the

integrators.

[1] Karl Johan Aström and Lars Rundqwist. Integrator windup and how

to avoid it. In American Control Conference, volume 2, pages 1693–

1698, 1989.

[2] Alberto Bemporad, Manfred Morari, V. Dua, and E. N. Pistikopoulos.

The explicit solution of model predictive control via multiparametric

quadratic programming. In Proceedings of the American Control

Conference, 2000.

[3] Edward J. Davison. Robust Servomechanism Problem, chapter 46,

pages 731–747. IEEE Press, 1996.

[4] B. A. Francis and W. M. Wonham. The internal model principle of

control theory. Automatica, 12:457–465, 1976.

[5] Gene F. Franklin, J. David Powell, and Abbas Emami-Naeini. Feedback Control of Dynamic Systems. Addison Welsey, 1994.

[6] D. Q. Mayne, J. B. Rawlings, C. V. Rao, and P. O. M. Scokaert. Constrained model predictive control: Stability and optimality. Automatica,

36:789–814, 2000.

[7] M. Morari and J.H. Lee. Model predictive control: past, present and

future. Computers & Chemical Engineering, 23(4–5):667–682, 1999.

[8] E. F. Mulder, M. V. Kothare, and Manfred Morari. Multivariable antiwindup controller synthesis using linear matrix inequalities. Automatica, 37:1407–1416, 2001.

[9] Kenneth R. Muske and Thomas A Badgwell. Disturbance models for

offset-free linear model predictive control. Journal of Process Control,

12:617–632, 2002.

[10] Gabriele Pannocchia and James B. Rawlings. Disturbance models for

offset-free model predictive control. AIChE Journal, 49(2):426–437,

2003.

[11] Sigurd Skogestad and Ian Postlethwaite. Multivariable Feedback

Control. John Wiley & Sons, 1996.

5257