Delay Analysis of Real-Time Data Dissemination


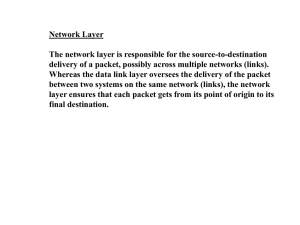

Gidon Gershinsky, Avi Harpaz, Nir Naaman, Harel Paz, Konstantin Shagin
IBM Haifa Research Laboratory
Haifa 31905, Israel
{gidon | harpaz | naaman | paz | konst}@il.ibm.com

Keywords: real-time, message dissemination, multicast, congestion, rate control.

Abstract

The growing popularity of distributed real-time applications increases the demand for QoS-aware messaging systems. In the absence of transmission rate control, congestion may prevent a messaging system from meeting its timeliness requirements. In this paper, we develop an analytic model for congestion in data dissemination protocols and investigate the effect of transmission rate on message delivery latency. Unlike previous works, we take into account the processing overhead of receiver buffer overflow, which has a significant impact on the results. A simulation is used to obtain more insight into the problem and to study a number of additional effects ignored by the analytic model. The presented analysis can be incorporated into a transmission rate control logic, to enable it to rapidly converge to an optimal transmission rate.

1 INTRODUCTION

Performance guarantees and predictable task execution time are becoming increasingly important in a large number of software system types. In addition to hard real-time applications, such as those found in defense systems and manufacturing, many more applications that traditionally would not be classified as belonging to the real-time domain aim to improve system responsiveness, predictability and overall performance by taking advantage of readily available tools like real-time Java [7] and real-time CORBA [10]. These applications typically require soft real-time guarantees, where some violations of the specified deadlines can be tolerated (normally at the expense of degradation in the provided service). Soft real-time solutions are found in diverse industry sectors including finance, telecommunication, and gaming.
Real-time systems often use a distributed architecture, and therefore require a predictable form of communication between the different components. In this paper, we consider a messaging transport that provides real-time quality of service (QoS). In particular, we study one-to-many reliable message dissemination, a popular form of scalable information delivery to multiple users. As an example, consider financial data that has to be distributed to a number of stock brokers. We restrict our attention to a single sender that is sending messages to multiple receivers. The network transport is assumed to be unreliable (typically UDP or raw IP); the messaging transport implements a reliable multicast protocol in order to ensure reliable delivery of messages. We assume a general reliable multicast protocol without making assumptions on whether the protocol uses multicast enhancement features such as a multicast-enabled network infrastructure or hierarchical multicast. When a message is submitted by the application to the messaging transport, the message is first converted into one or more packets, which are then delivered to the destinations, where the packets are reassembled into the original message. We consider two types of applications based on the typical message size. The first type, which we refer to as bulk-data applications, represents applications that mainly generate very large messages such as those used in bulk-data transfer (e.g., transfer of a large memory segment); in this case, a large number of packets are created and are ready for transmission (almost) instantly. Applications of the second type, referred to as short-message applications, generate short messages over time; to capture the bursty nature of many short-message applications we model the generation of new packets as an ON/OFF process. The ON/OFF model is commonly used to model bursty sources (see, e.g., [6, 3] and references therein).
An ON/OFF source alternates between ON and OFF periods; the length of each period is independently distributed. During ON periods packets are generated at a high rate, while during OFF periods no packets are generated (or they are generated at a much lower rate). In this paper, we investigate the delay of packets and messages in a system as described above. The main aspect we examine is congestion at the application and transport level. This type of congestion occurs when a receiver cannot keep up with the sender's transmission rate and is thus forced to discard some of the data it receives before fully processing it. While most of the works that deal with congestion address congestion at the network level, our experience with real-world applications and messaging systems [14] shows that network congestion is often not the problem (at least for the applications we target here). The reason is that these applications typically communicate over a Local Area Network (LAN) or a high-speed Virtual LAN (VLAN), where the network is often dedicated to the application. As networks of 1 Gb/s and above are now widely available, there is enough bandwidth to prevent network congestion. Another observation is that in this setting a sender can typically send data at a much faster rate than a receiver can process it; this is due to longer application and operating system processing times at the receiving side and to the fact that the receiver may be busy when the transmitted data arrives. As a result, receiver-side congestion is common in the presence of bursty data generation and the absence of congestion/flow control. We study the effect of congestion on the latency and relate it to the maximal transmission rate a congestion/flow control mechanism may enforce. We show that a congestion control mechanism that limits the sender's transmission rate can greatly reduce the latency and thus enable the transport layer to support stricter real-time requirements.
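The ON/OFF source described above can be illustrated with a short Python sketch that produces packet generation times. The helper `on_off_arrivals` and its parameters are hypothetical, and the exponential distribution of ON and OFF lengths is an assumption made here for simplicity (the text only requires the period lengths to be independently distributed). Packets are emitted at a constant rate during ON periods, in the spirit of the constant generation rate $r_g$ assumed in Section 4, and none are emitted during OFF periods.

```python
import random


def on_off_arrivals(horizon, rate_on, mean_on, mean_off, seed=None):
    """Return packet generation times in (0, horizon] for an ON/OFF source.

    Hypothetical helper. Assumptions: ON and OFF durations are independent and
    exponentially distributed; packets are generated at the constant rate
    `rate_on` during ON periods and none are generated during OFF periods.
    """
    rng = random.Random(seed)
    times = []
    t, on = 0.0, True                        # the source starts in an ON period
    while t < horizon:
        mean = mean_on if on else mean_off
        end = min(t + rng.expovariate(1.0 / mean), horizon)
        if on:
            k = 1
            while t + k / rate_on <= end:    # one packet every 1/rate_on time units
                times.append(t + k / rate_on)
                k += 1
        t, on = end, not on                  # switch between ON and OFF
    return times


# Example: bursts of 5 packets per time unit, ON and OFF periods of mean length 10.
arrivals = on_off_arrivals(horizon=1000.0, rate_on=5.0, mean_on=10.0, mean_off=10.0, seed=1)
```

Replacing the exponential draw with a heavy-tailed one (for example `rng.paretovariate(alpha)`, scaled as needed) would produce the long bursts often used to model self-similar traffic; that variation is likewise an assumption and not part of the model analyzed here.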
We present an analytic model for the congestion problem described above. The analysis considers the various processing times, the effect of packet loss at the network and buffer overflow in receivers. First, we address the case of bulk-data applications where a large number of packets are ready for transmission at the beginning of the scenario. Next, we investigate short-message applications where generation of new packets is assumed to be bursty. An important aspect that has been ignored in previous work and is included in our analysis is the additional processing in the receiver due to packet discarding during states of congestion; we show that this processing overhead is a major contributor to the congestion collapse phenomenon that is observed in many applications.

To obtain more insight into the problem, we implemented a simulation framework for the system. The simulator takes into account some of the secondary effects that are ignored by the analysis and thus provides more accurate results. It enables us to explore a wide variety of scenarios, including some which the analysis cannot cover (e.g., non-independent packet loss at the network level). We also use the simulator to evaluate the probability that a packet is delivered within its deadline, under different transmission rates.

Related work. Our work studies message and packet delay in the context of real-time data dissemination; our main contribution is in analyzing the effect of congestion on the delay at the transport and application levels. As such, our work is related to issues of both reliable multicast and congestion analysis. Our analysis adopts the model presented by Pingali et al. in [13], which analyzes the throughput of two basic reliable multicast approaches: sender-initiated ACK-based protocols and receiver-initiated NAK-based protocols.
Later works [16, 9] extended the throughput analysis of [13] to include delay analysis of the same protocols; the analysis provides the mean delay between the arrival of a packet at the sender and its successful reception at a randomly chosen receiver. These works assume that new packets are generated according to a Poisson process and model the sender's queue as an M/G/1 queue. Our analysis extends the results of [16, 9] in several ways:

• Our analysis takes congestion into account, showing the effects of congestion on the delay.
• We consider a more realistic system model. For example, we assume finite reception queues and take into account processing times that are ignored in [16] (e.g., the processing overhead of a discarded packet). These more realistic considerations have a significant impact on the results, enabling us to explain, for example, the congestion collapse phenomenon.
• We consider a more realistic packet generation process that captures the bursty nature of many applications, by referring to bulk-data and short-message applications.
• We add a real-time perspective to the analysis. While the work of [16] examines the mean delay of an average packet for a randomly chosen receiver, our work refers to the time at which each individual packet (message) is delivered to all receivers.
• We also employ simulation, enabling us to explore attributes that were not investigated in previous works, such as the probability distribution of delivery by a deadline.

Congestion has been studied extensively in the context of the TCP protocol (see, e.g., [5, 2] and [4] for a survey). The effects of bursty data generation (resulting in traffic self-similarity) have also been extensively studied (see, e.g., [8, 12, 3]), but mainly in the context of unicast communication. Several congestion control mechanisms for multicast protocols have been proposed (see [15] for a survey); most of these protocols attempt to be TCP-friendly and hence may not be suitable for real-time applications.
Delay analysis in the context of reliable multicast has received less attention. The effect of several reliable multicast protocols on self-similarity and long range dependency of network traffic is studied in [11], where delay analysis and simulation at the level of the reliable multicast protocol are also presented. Delay analysis of a multicast signaling mechanism is presented in [17].

Organization. The rest of this paper is organized as follows. Section 2 provides a formal statement of the problem. Sections 3 and 4 present delay analysis of the bulk-data and short-message scenarios, respectively. A description of the simulation environment is provided in Section 5. Numerical results of both analysis and simulation are presented and discussed in Section 6. We conclude in Section 7.

2 SYSTEM MODEL AND DEFINITIONS

Our model is similar to the one presented in [13] and [16], yet it incorporates a few significant modifications that are required to model congestion. In our model, there is a single sender that is disseminating data to R identical receivers using a reliable multicast protocol. In contrast to [16], where it is assumed that new packets are generated according to a Poisson process, our model adopts more realistic assumptions. In the bulk-data transfer scenario, we assume that all the packets comprising the bulk reside in the sender's buffer at the beginning of the scenario. In the short-message scenario, packets are generated according to an ON/OFF process, which is characterized by ON periods with a high packet generation rate and OFF periods with a much lower rate. In both cases new packets are stored in a buffer to be processed by the sender according to its transmission policy. We adopt the network model assumptions of [16]. It is assumed that a packet transmitted by the sender fails to reach a particular receiver (due to network loss) with probability p and that such packet losses are independent in both space and time.
Feedback messages sent by receivers are assumed to always reach the sender (we relax this assumption in several simulations). Unlike [16], we assume that the receiver has a finite queue for storing incoming packets. Hence, a packet that reaches a receiver is inserted into the queue only if the queue is not full and is discarded otherwise. The probability p does not account for packet drops due to queue overflow. The network delay between all participants is assumed to be τ. We consider two reliable multicast protocols: sender-initiated and receiver-initiated. These protocols correspond to protocol A and protocol N1 described in [13, 16], respectively.

Sender-initiated (ACK-based) protocol: Upon transmitting a new packet the sender initiates an ACK list and a timer for the packet. Packets are served by the receiver in FIFO order. Whenever a receiver correctly receives (processes) a packet, it generates an ACK to be sent to the sender through a point-to-point channel. The sender updates the ACK list for the corresponding packet whenever a new ACK is received. If by the time the timer expires the ACK list does not contain all receivers, the packet is retransmitted to all receivers and a new timer is initiated. Packet retransmission is prioritized over the first transmission of a packet with a higher sequence number. After the packet is successfully delivered to all receivers it is discarded by the sender.

Receiver-initiated (NAK-based) protocol: The sender transmits new packets unless a NAK message is received. Whenever a NAK is received, the missing packet is retransmitted to all receivers. The receiver is responsible for detecting missing packets and sending the appropriate NAKs. NAKs are transmitted over a point-to-point channel. To protect against losses of retransmitted data packets or NAKs, the receiver retransmits NAKs for the packets that are still missing when their timeout expires.
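The sender-side bookkeeping of the sender-initiated protocol described above (a per-packet ACK list and timer, with expired retransmissions served before new packets) can be sketched as follows. This is an illustrative sketch, not the implementation used in this work: the class and method names, the explicit `now` argument in place of real timers, and the `n_packets` bound are all hypothetical, and ACK batching, the NAK-based variant and all networking are omitted.

```python
import heapq


class AckBasedSender:
    """Hypothetical sketch of sender state for the ACK-based protocol."""

    def __init__(self, receivers, timeout, n_packets):
        self.receivers = frozenset(receivers)
        self.timeout = timeout
        self.n_packets = n_packets
        self.ack_lists = {}   # seq -> set of receivers that have ACKed it
        self.timers = []      # min-heap of (deadline, seq); one live entry per packet
        self.next_new = 0     # lowest sequence number never transmitted

    def next_packet(self, now):
        """Return the sequence number to transmit at time `now`, or None."""
        # Retransmissions of timed-out packets take priority over new packets.
        while self.timers and self.timers[0][0] <= now:
            _, seq = heapq.heappop(self.timers)
            if seq in self.ack_lists:            # some receiver is still missing
                heapq.heappush(self.timers, (now + self.timeout, seq))  # new timer
                return seq
            # Otherwise the packet was already released: drop the stale timer.
        if self.next_new >= self.n_packets:
            return None
        seq = self.next_new
        self.next_new += 1
        self.ack_lists[seq] = set()              # initiate the ACK list
        heapq.heappush(self.timers, (now + self.timeout, seq))
        return seq

    def on_ack(self, seq, receiver):
        """Record an ACK; release the packet once every receiver has ACKed."""
        acks = self.ack_lists.get(seq)
        if acks is None:
            return                               # duplicate ACK for a released packet
        acks.add(receiver)
        if acks >= self.receivers:
            del self.ack_lists[seq]              # delivered to all: discard


# Example: two receivers; packet 1 still lacks an ACK when its timer expires.
sender = AckBasedSender(receivers=["r1", "r2"], timeout=5.0, n_packets=3)
first = sender.next_packet(0.0)
second = sender.next_packet(1.0)
sender.on_ack(0, "r1")
sender.on_ack(0, "r2")
sender.on_ack(1, "r1")
retransmitted = sender.next_packet(6.0)
```

A heap of deadlines keeps the earliest expiring timer at the front, so the retransmission-first rule amounts to checking the heap head before taking a new sequence number.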
Note that the above protocols do not prohibit optimizations aimed at reducing the feedback processing overhead in the transmitter and the receiver. For example, receivers may batch multiple ACKs/NAKs and send them in a single packet. We assume that the sender operates under a rate control mechanism that limits its maximal transmission rate to $r_t$, and analyze the delay as a function of $r_t$. Our results demonstrate the importance of enforcing such a rate limit; we show that adjusting the rate control to an optimal value can significantly reduce the latency of messages and increase the probability of messages being delivered within a certain deadline. The analysis presented in this paper can be employed by a congestion management mechanism to enforce an efficient sending rate. For example, a congestion management mechanism at the sender may estimate the state of congestion of its receivers according to feedback gathered from the network and the receivers (e.g., the rate at which ACKs are accepted and the average number of packet retransmissions). Based on this estimate, the transmission rate can be tuned efficiently; since rate adjustments are made according to the most up-to-date feedback, the sender will be able to converge faster to an efficient transmission rate and maintain it dynamically. While an interesting problem on its own, the details of the rate adjustment mechanism are beyond the scope of this paper. The analysis is not concerned with packet ordering: it assumes that packets are delivered to the application independently of their transmission order. The simulator, however, considers both ordered and unordered delivery. Processing times, such as the data packet processing time, the time to deliver a message to the application and the packet discard time, are represented by random variables. The expectation of a random variable X is denoted by E[X].
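One simple way to enforce a maximal transmission rate $r_t$ is to space consecutive transmissions at least $1/r_t$ time units apart. The `RatePacer` class below is a hypothetical sketch of such a limiter; the paper does not prescribe a particular rate-control mechanism, and this sketch does not attempt the feedback-driven rate adjustment mentioned above.

```python
class RatePacer:
    """Hypothetical pacer: consecutive transmissions start >= 1/rt time units apart."""

    def __init__(self, rt):
        if rt <= 0:
            raise ValueError("transmission rate limit must be positive")
        self.interval = 1.0 / rt
        self.next_free = 0.0      # earliest time the next transmission may start

    def schedule(self, ready_time):
        """Return the send time for a packet that becomes ready at `ready_time`."""
        send_time = max(ready_time, self.next_free)
        self.next_free = send_time + self.interval
        return send_time


# Example: with rt = 2 packets per time unit, a burst ready at time 0 is spread out.
pacer = RatePacer(rt=2.0)
burst = [pacer.schedule(0.0) for _ in range(3)]   # [0.0, 0.5, 1.0]
```

A packet that becomes ready after the pacer has gone idle is sent immediately, so the limit only bites during bursts, which is the situation the analysis below is concerned with.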
Table 1 presents the notations used throughout the paper for processing times and other system variables. More details on processing times are found in Section 3.

Table 1. Notations table.

| Symbol | Description |
|---|---|
| $R$ | Number of receivers |
| $p$ | Probability that the packet is lost on its way to a receiver (does not include packet drop due to receiver buffer overflow) |
| $r_t$ | Maximal transmission rate imposed on the sender |
| $r_r$ | Rate at which packets are removed from a receiver buffer |
| $Y_p$ | Time to process a packet reception at a receiver |
| $Y_a$ | Time to process and transmit an ACK at a receiver |
| $Y_n$ | Time to process and transmit a NAK at a receiver |
| $Y_t$ | Time to process a NAK timeout at a receiver |
| $Y_f$ | Time to feed a new packet to the application at a receiver |
| $Y_d$ | Time to discard a packet at a receiver |
| $M$ | Number of transmissions required to successfully deliver a packet to all receivers |
| $q$ | Size of a receiver's queue |
| $\tau$ | Network delay between the sender and a receiver |

3 DELAY ANALYSIS OF BULK-DATA TRANSFER

In bulk-data transfer the data to be transmitted is partitioned into n packets. All the packets are assumed to be ready for transmission at the beginning of the scenario. The analysis is concerned with the delay until all packets are received and processed by all the receivers. We assume that the sender operates under a transmission rate limit $r_t$ and that each receiver has a buffer (or queue) capable of storing q packets; in order to simplify the analysis we assume that $n \gg q$. The analysis is divided into two scenarios, which we call congestion-free and congestion-abundant. In the congestion-free scenario the transmission rate is such that receiver-side congestion is avoided, i.e., receiver buffers are unlikely to overflow and therefore the packets that reach a receiver are almost never discarded. In the congestion-abundant scenario the transmission rate is such that the probability of receiver buffer overflow is not negligible and therefore packets are occasionally discarded. In subsections 3.1 and 3.2 we explore the behavior of the sender-initiated protocol in the two aforementioned scenarios, while in subsection 3.3 we analyze the receiver-initiated protocol.

3.1 Congestion-Free Scenario

In this scenario packets are not discarded. However, packets may still be lost in the network. If we assume an independent packet loss probability p per destination then, as shown in [13], the expected number of transmissions required for all R destinations to receive a packet correctly is

$$E[M] = \sum_{m=1}^{\infty} \left(1 - \left(1 - p^{m-1}\right)^{R}\right) \qquad (1)$$

The expected number of transmissions required for all the destinations to receive all the packets is $nE[M]$. Consequently, the transmission of the last packet finishes, on average, $nE[M]/r_t$ time units after the transmission of the first packet begins. The processing of a packet that is not discarded upon arrival at the receiver consists of three phases: (i) packet reception from the network, (ii) ACK transmission, and (iii) delivery of the packet to the application. The delays associated with these phases are random variables denoted by $Y_p$, $Y_a$ and $Y_f$, respectively. If we assume that there are no packets in the receiver buffer upon the arrival of the last expected packet, then this packet is processed as soon as it is received. Under this assumption, the expectation of the time T it takes to deliver the last packet to the receiver's application is¹

$$E[T] = \frac{nE[M]}{r_t} + \tau + E[Y_p] + E[Y_a] + E[Y_f]. \qquad (2)$$

If the receiver queue is not empty upon receipt of the last expected packet, the processing time of the enqueued packets should be added to the sum in (2). However, assuming $n \gg q$, this additional processing time can be ignored, since it is negligible in comparison to the overall scenario execution time. We note that although we make this assumption here to simplify the analysis, the simulator takes this processing delay into consideration.

¹ Throughout the analysis, we assume serial processing at the receiver; e.g., packet reception, ACK transmission, and packet delivery to the application are not executed in parallel. If, for example, multiple CPUs are used, at least some of the tasks could be parallelized; however, we do not consider parallelization opportunities.

3.2 Congestion-Abundant Scenario

When the transmission rate is high enough, the receiver queue may not be able to accommodate all incoming packets and consequently packets are discarded. This happens when the packet arrival rate exceeds the rate at which packets are removed from receiver buffers, i.e., when $r_t(1-p) > r_r$. Note that packets may be discarded even when this condition does not hold, for example, if the packet service rate fluctuates significantly. In the analysis of this scenario we focus on the state in which the receiver's queue is full (or nearly full). Thus, we ignore the initial phase in which the receiver's queue has not been filled yet and the final phase in which the queue is not being refilled. Since we assume that $n \gg q$, we expect these phases to be relatively short and therefore to have minor influence on the analysis results. Nevertheless, the simulation does not neglect these phases. As with packet losses, we assume that packet discarding events at all receivers are independent of each other and of previous discarding events. While this assumption is far from reality, it simplifies the analysis considerably. We note that simulations we conducted with different models of dependencies between receivers indicate that the results are close to those obtained under the above assumption.

Under the above assumption we can extend (1) to estimate the expected number of packet transmissions. On average, during a time period t the transmitter sends $r_t t$ packets; some of these packets are lost in the network. Only $r_r t$ arriving packets are inserted into the buffer; the remaining packets are lost in the network or discarded due to buffer overflow (as $r_t(1-p) > r_r$). Thus, the probability that a packet successfully reaches its destination, i.e., that it is neither lost in the network nor discarded, is $r_r/r_t$. Hence, we may extend the notion of packet loss probability to $p' = 1 - r_r/r_t$. Consequently, the expected number of packet transmissions according to (1) is

$$E[M] = \sum_{m=1}^{\infty} \left(1 - \left(1 - p'^{\,m-1}\right)^{R}\right) = \sum_{m=1}^{\infty} \left(1 - \left(1 - \left(1 - \frac{r_r}{r_t}\right)^{m-1}\right)^{R}\right) \qquad (3)$$

The packet service rate $r_r$ depends on receiver-side processing delays. Consider the amount of processing resulting from receiving the copies of a particular packet. Processing of a discarded packet incurs an overhead of $Y_d$. The probability that a receiver discards a transmitted packet is $\frac{r_t(1-p) - r_r}{r_t}$. Therefore, among the $E[M]$ transmitted copies of the packet, $\frac{r_t(1-p) - r_r}{r_t}E[M]$ are discarded. A packet that is not discarded incurs a reception overhead $Y_p$ and an acknowledgement transmission overhead $Y_a$. The probability that a transmitted packet is inserted into the receiver's queue is $r_r/r_t$. Therefore, $\frac{r_r}{r_t}E[M]$ packets among the $E[M]$ copies of the packet incur the reception and acknowledgement overheads. Only one of the packet copies is delivered to the application and incurs a delivery overhead $Y_f$. To sum up, the receiver-side processing time of all the copies of a particular packet is

$$D = \frac{r_t(1-p) - r_r}{r_t}E[M]E[Y_d] + \frac{r_r}{r_t}E[M]\left(E[Y_p] + E[Y_a]\right) + E[Y_f] \qquad (4)$$

In order to obtain the rate at which packets are removed from a receiver buffer, i.e., $r_r$, we normalize (4) by the coefficient of the $E[Y_p] + E[Y_a]$ component. This component represents the part of the receiver work which is done for each packet it handles. Hence, the time to process a single packet is $D \big/ \left(\frac{r_r}{r_t}E[M]\right)$ and the packet service rate is $r_r = \frac{r_r}{r_t}E[M] \big/ D$, or, as presented below:

$$r_r = \frac{1}{\frac{r_t(1-p) - r_r}{r_r}E[Y_d] + E[Y_p] + E[Y_a] + \frac{r_t E[Y_f]}{r_r E[M]}}. \qquad (5)$$

There are two unknowns in (3) and (5): E[M] and $r_r$. These equations can be solved iteratively by assigning an initial value to one of the variables.

The last packet is expected to reach the receiver queue after $nE[M]/r_t + \tau$ time units. In the worst case the queue is nearly full, and so the receiver will process this packet after finishing the processing of the packet that is currently being serviced and of the q − 1 queued packets. We assume this is indeed the case because it is the more conservative assumption. For the same reason, we also assume that the last q + 1 packets are all forwarded to the application. Consequently, the expected delay of bulk-data delivery is

$$E[T] = \frac{nE[M]}{r_t} + \tau + (q+1)\left(E[Y_p] + E[Y_a] + E[Y_f]\right). \qquad (6)$$

3.3 Receiver-Initiated Protocol Analysis

The analysis of a receiver-initiated protocol is similar to that of the sender-initiated protocol. In the congestion-free scenario the delivery time of the last packet differs from the result presented in (2) only in the absence of the $E[Y_a]$ component. This is because in a receiver-initiated protocol there are no ACK messages, and the last packet delivery does not trigger a NAK transmission. Hence, the expected delivery time in the absence of congestion is

$$E[T] = \frac{nE[M]}{r_t} + \tau + E[Y_p] + E[Y_f]. \qquad (7)$$

To estimate the delivery time in the presence of congestion we build on the analysis of Pingali et al. [13], which showed that, when using a receiver-initiated protocol, the receiver work required for an original packet is

$$E[M](1-p)E[Y_p] + \frac{p}{1-p}E[Y_n] + \frac{p^2}{1-p}E[Y_t] + E[Y_f]. \qquad (8)$$

In the above equation $Y_n$ is the time to prepare and transmit a NAK and $Y_t$ is the time to process a NAK timeout, which occurs if a packet that has been requested from the sender is still missing. To determine the work required for each one of the n original packets we need to apply two modifications to (8). First, recall that when packets are discarded we assume that the loss probability at a receiver is $p' = 1 - r_r/r_t$ (and not p). Second, we take the packet discarding overhead into account. Hence, the work required for each original packet in our analysis is

$$D = E[M](1-p')E[Y_p] + \frac{p'}{1-p'}E[Y_n] + \frac{p'^2}{1-p'}E[Y_t] + E[Y_f] + \frac{r_t(1-p) - r_r}{r_t}E[M]E[Y_d]. \qquad (9)$$

The receiver processing rate, $r_r$, is obtained by normalizing D by the coefficient of the $E[Y_p]$ component, as done in (5). (Note that the coefficient of $E[Y_p]$ in (9) is identical to the corresponding coefficient in (4), since $1 - p' = r_r/r_t$.) Thus,

$$r_r = \frac{E[M](1-p')}{D}. \qquad (10)$$

Since (3) also holds for the receiver-initiated protocol, E[M] and $r_r$ can be calculated from (3) and (10). The delivery time of the last packet in this case differs from the delivery time of a sender-initiated protocol shown in (6) only in the absence of the $E[Y_a]$ component. Hence,

$$E[T] = \frac{nE[M]}{r_t} + \tau + (q+1)\left(E[Y_p] + E[Y_f]\right). \qquad (11)$$

4 DELAY ANALYSIS OF SHORT-MESSAGE TRANSFER

In the short-message scenario messages are generated during burst periods and each message is sent in a single packet. In contrast to the bulk-data delay analysis, here we are concerned with the latency of each packet. Message latency is defined to be the amount of time between message generation and completion of message delivery to the receiver-side application in all receivers. The following analysis refers solely to the sender-initiated protocol; the analysis of the receiver-initiated protocol is similar. For simplicity, we assume that packets are generated at a constant rate $r_g$, so that packet j is generated $j/r_g$ time units after the scenario begins. In addition, $r_g$ is assumed to be greater than $r_t$. The last assumption corresponds to the bursty nature of the explored applications. If a timeout of a packet expires, the sender retransmits it before sending out packets that have never been transmitted.
Thus, the sender behaves as if it has a transmission window, of size $w = \mathit{timeout} \cdot r_t$, that contains all the packets that have been transmitted at least once but have not been acknowledged by all the receivers. Upon receipt of the last ACK for a packet in the window, the acknowledged packet is removed from the window and the packet with the lowest sequence number which has not been transmitted before enters the window.

We divide packet latency into three components. The first is the time period between the creation of a packet and its first transmission. The second is the time period between the packet's first transmission and its last retransmission; during this period the packet is in the transmission window. The third component is the time period between the last retransmission of the packet and its delivery to the application on the last receiver. The analysis of the three latency components employs E[M] and $r_r$. In the congestion-free scenario, E[M] is obtained from (1), while

$$r_r = \frac{1}{E[Y_p] + E[Y_a] + \frac{E[Y_f]}{(1-p)E[M]}}.$$

In the congestion-abundant scenario, the values of E[M] and $r_r$ are given by (3) and (5), respectively. The analysis of the first latency component (the time between the creation of packet j and its first transmission) divides into two cases: (i) $0 < j \le w$ and (ii) $w < j \le n$. In the former case, the first transmission of packet j occurs immediately after the first transmission of packet j − 1, i.e., this transmission ends $j/r_t$ time units after the scenario begins. Thus, the first latency component in this case is $j/r_t - j/r_g$. In the latter case, packet j enters the transmission window after j − w packets are acknowledged and after the w − 1 packets that are in the window have been transmitted at least once. On average, an acknowledged packet has been transmitted E[M] times.
In the worst case, the most recent transmission of each of the w − 1 packets still in the window was its final one, which means that each of these packets has also been transmitted approximately E[M] times. Therefore, the number of packet transmissions before packet j enters the window is bounded by $((j-w) + (w-1))E[M] = (j-1)E[M]$. Consequently, in the worst case, the first transmission of packet j ends $\frac{(j-1)E[M]+1}{r_t}$ time units after the scenario begins. Thus, the first latency component in this case is $\frac{(j-1)E[M]+1}{r_t} - \frac{j}{r_g}$ time units.

The second latency component (the time between the first transmission of a packet and its last retransmission) depends on the number of times a packet is transmitted. Since a packet is transmitted, on average, E[M] times and the interval between retransmissions is $\mathit{timeout} + 1/r_t$, this period is expected to be $(E[M]-1)(\mathit{timeout} + 1/r_t)$ time units.

To estimate the third latency component (the time between the last retransmission of a packet and its delivery to the last receiver) we assume the worst case, in which the queue contains q − 1 packets when packet j arrives at the receiver. This case is highly probable in the congestion-abundant scenario. In this case packet j is delivered after the receiver handles q + 1 packets (the packet that was being processed at the arrival time of packet j plus the q packets then in the queue, including packet j itself). Hence, the third latency component is $\tau + \frac{q+1}{r_r}$. Note that if the receiver queue were empty when packet j arrived, the packet would be processed immediately and the third latency component would be $\tau + E[Y_p] + E[Y_a] + E[Y_f]$ time units. This case is common in the congestion-free scenario.

To sum up, the expected latency of packet j is

$$E[T] = \frac{j}{r_t} - \frac{j}{r_g} + (E[M]-1)\left(\mathit{timeout} + \frac{1}{r_t}\right) + \tau + \frac{q+1}{r_r} \qquad (12)$$

when $j \le w$, and

$$E[T] = \frac{(j-1)E[M]+1}{r_t} - \frac{j}{r_g} + (E[M]-1)\left(\mathit{timeout} + \frac{1}{r_t}\right) + \tau + \frac{q+1}{r_r} \qquad (13)$$

when $w < j \le n$.
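Equations (12) and (13) translate directly into code. The sketch below evaluates the worst-case expected latency of packet j; the function and argument names are hypothetical, E[M] and $r_r$ must be supplied by the caller (from (1) and the congestion-free expression, or from (3) and (5)), and the numbers in the example are placeholders rather than values used in the paper.

```python
def packet_latency(j, rt, rg, em, rr, timeout, tau, q):
    """Worst-case expected latency of packet j (1-based), equations (12) and (13).

    em = E[M] and rr = r_r; all times are in the same (normalized) time unit.
    """
    w = timeout * rt                                   # transmission window size
    if j <= w:
        first = j / rt - j / rg                        # (12): sent back to back
    else:
        first = ((j - 1) * em + 1) / rt - j / rg       # (13): waits for window slots
    second = (em - 1) * (timeout + 1 / rt)             # first to last transmission
    third = tau + (q + 1) / rr                         # worst case: queue nearly full
    return first + second + third


# Example with placeholder values (rg > rt, as assumed in Section 4).
latencies = [packet_latency(j, rt=2.0, rg=4.0, em=1.5, rr=1.0, timeout=10.0, tau=0.5, q=50)
             for j in range(1, 201)]
```

With E[M] = 1 (no retransmissions) the two branches coincide, since (13) then reduces to (12); the jump at j = w for E[M] > 1 reflects the worst-case bound on transmissions made before packet j enters the window.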
The above analysis can be employed to predict message latency in the ON/OFF model. It is most accurate if $n \gg q$ and if the packets generated during an ON period are successfully delivered before the next ON period begins. Note that if new packets are generated before all the packets of previous ON periods are delivered, each ON period causes the accumulation of more packets, and hence the system does not reach a steady state. Nevertheless, the simulation also covers this non-steady state.

Note that the expressions introduced in Section 3 are not adequate for evaluating the latency of packet j in the short-message scenario, as they rely on different assumptions. For example, the latency evaluation presented in Section 3 refers solely to the last bulk-data packet, and hence we assume that from the moment the last packet enters the receiver queue, no other packet arrives at the queue; thus the receiver processing rate is no longer reduced by discarding events. This assumption does not hold for the short-message scenario, where we are interested in the latency of all messages (packets).

5 SIMULATION FRAMEWORK

In order to obtain more insight into the problem, we have implemented a simulation framework based on the system model. The simulator consists of several modules that represent the application layer, the messaging system and the underlying network. The simulation environment provides control over all the parameters considered by the analysis, such as the number of receivers, various processing times, loss probability, queue sizes, network delay and transmission rate. The simulator enables us to explore the problem without the limitations imposed by the analytic model. It is free of the simplifications made by the analysis. For example, the simulator does not ignore the effects of queue ramp-up or the dependencies between packet drop events.
In addition, the simulator allows us to study the distribution of various variables of interest, while the analysis only provides their expected values.

6 ANALYSIS AND SIMULATION RESULTS

In this section we present computational results derived from the analytic model, as well as results obtained through simulation. This section covers both the bulk-data transfer and the short-message scenarios. The values of processing times and network parameters are based on the results of [1] and on our own experiments with a commercial messaging middleware we developed at IBM Haifa Research Labs [14]. Throughout this section we normalize the presented results so that a time unit equals the time it takes a receiver to properly handle a single packet. Consequently, in the sender-initiated protocol E[Y_p + Y_a + Y_f] = 1 and in the receiver-initiated protocol E[Y_p + Y_f] = 1. The normalized transmission rate is then given in packets per time unit. In the sender-initiated protocol, we set E[Y_p + Y_a] = 120/121 and E[Y_f] = 1/121. In the receiver-initiated protocol we set E[Y_n] = 20/121 and E[Y_t] = 1/121. In addition, in both protocols the expected discarding overhead is E[Y_d] = 0.3, the network loss probability is p = 0.01 and the receiver queue size is q = 50. (See Table 1 for a more elaborate description of these parameters.) In our experiments we consider receiver sets of sizes 1, 10, 100 and 1000.

6.1 Bulk-data Transfer Results

In this section the bulk data consists of n = 10000 packets.

6.1.1 Computational Results

Sender-initiated protocol. Figure 1 presents E[M] (the expected number of transmissions per packet) as a function of the transmission rate limit in the sender. It shows that up to a transmission rate of 1.0, E[M] remains constant for each of the four receiver sets. As the transmission rate increases beyond 1.0, E[M] increases exponentially; the larger the receiver set, the greater E[M].
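Why E[M] depends on the receiver set even below a rate of 1.0 can be illustrated with the classical congestion-free expression for independent losses, E[M] = Σ_{m≥0} [1 − (1 − p^m)^R], where R is the number of receivers. This is a sketch under explicit assumptions (losses independent across receivers and transmissions, no ACK loss, no receiver discards); it is not necessarily identical to the paper's equation (1), which is not reproduced in this section, and the function name `expected_transmissions` is hypothetical.

```python
import math


def expected_transmissions(p: float, receivers: int, tol: float = 1e-15) -> float:
    """E[M] = sum over m >= 0 of P(M > m), with P(M > m) = 1 - (1 - p**m) ** receivers.

    Assumes each transmission is lost independently at each receiver with
    probability p and that a packet is retransmitted until every receiver has
    it (a hypothetical congestion-free model).
    """
    if not 0.0 <= p < 1.0 or receivers < 1:
        raise ValueError("need 0 <= p < 1 and receivers >= 1")
    total = 1.0  # m = 0 term: the first transmission always happens
    m = 1
    while True:
        # 1 - (1 - p**m)**R, computed via log1p/expm1 to stay accurate for tiny p**m
        term = -math.expm1(receivers * math.log1p(-(p ** m)))
        total += term
        if term < tol:
            return total
        m += 1


# E[M] for the loss probability and receiver sets used in this section
for r in (1, 10, 100, 1000):
    print(r, round(expected_transmissions(0.01, r), 4))
```

For a single receiver the sum reduces to the geometric series 1/(1 − p); for larger sets, the probability that at least one receiver misses a given transmission grows with R, which is what drives E[M] up.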
Note that the computational result graphs introduced in Figures 1–6 were obtained by combining the analysis results of both the congestion-free (Section 3.1) and the congestion-abundant (Section 3.2) scenarios. In particular, Figure 1 was produced by employing (1) for transmission rates up to 1.0 and (3) for higher transmission rates. In Figure 2 (produced using (2) and (6)), we show the estimated delivery time of the last data packet. It demonstrates how enforcing a transmission rate that is either too low or too high can cause a significant increase in delay; the effect becomes stronger as the receiver set grows. Figure 3 presents the estimated delivery time of the last data packet for the case in which the packet discarding overhead is ignored; it employs (2) and (6) similarly to Figure 2, yet considers E[Y_d] to be zero. In this case E[M] increases linearly for rates higher than 1.0, and thus the overall delivery time seems to converge. Comparing Figure 2 and Figure 3, we can clearly see that without taking into account the overhead associated with discarding packets, congestion cannot be properly evaluated.

Receiver-initiated protocol. Figure 4 shows the estimated delivery time of the last data packet according to the analysis of the receiver-initiated protocol (obtained using (7) and (11)). Up to a transmission rate of 2.0 the behavior (under different receiver sets) is similar to that of the sender-initiated protocol (Figure 2). However, at very high transmission rates we encounter an interesting inversion phenomenon: above a certain transmission rate, the delivery time obtained for a large receiver set is better than that of a smaller receiver set.

Figure 1. Computational results: E[M] as a function of transmission rate.
Figure 2. Computational results: delivery time as a function of transmission rate.
Figure 3. Computational results: delivery time as a function of transmission rate, when discarding events are not considered resource-consuming.
Figure 4. Computational results of the receiver-initiated protocol: delivery time as a function of transmission rate.
Figure 5. Computational results of the receiver-initiated protocol: delivery time as a function of transmission rate, when NAK generation is not considered resource-consuming.
Figure 6. Computational results of the receiver-initiated protocol: delivery time as a function of transmission rate, when discarding events are not considered resource-consuming.
Figure 7. Simulation vs. computational results: delivery time comparison with 10 receivers.
Figure 8. Receiver-initiated protocol: simulation vs. computational results, delivery time comparison with 10 receivers.

Table 2. Statistics comparison between a single-receiver configuration and a 10-receiver configuration under the receiver-initiated protocol.

| Configuration | Discard fraction | Redundant fraction of discards | NAKs per sec | Redundant packets handled per sec | Non-redundant packets handled per sec |
|---|---|---|---|---|---|
| 1 receiver | 94% | 0% | 18640 | 0 | 1190 |
| 10 receivers | 78% | 57% | 6330 | 2640 | 1630 |

The inversion phenomenon is surprising, since one would expect the opposite: the larger the receiver set, the more packets reach each receiver (because more packets are retransmitted). The explanation of the inversion phenomenon lies in the amount of time a receiver spends processing redundant packets (i.e., packets that were already processed and successfully delivered). As the receiver set grows, the fraction of retransmissions increases and so does the discarding of redundant packets. While discarding a packet that was not previously processed by a receiver generates a NAK, discarding a redundant packet does not. Hence, with a smaller receiver set a receiver generates NAKs more frequently. Since generating NAKs consumes resources, the overall processing rate of the receiver is reduced and the number of packets it has to discard increases.
The reduced processing rate of each receiver degrades the delivery time; above a certain transmission rate this degradation grows faster when the receiver set is smaller, due to the larger number of NAKs that are generated. To better understand the inversion phenomenon, Table 2 shows some statistics regarding the work a receiver performs in the single-receiver and 10-receiver configurations. The results have been obtained by simulating the delivery of 10000 packets at a transmission rate of 2.0. It is observed that for 10 receivers, the fraction of discarded packets out of all packets that arrive at a receiver's queue is 16 percentage points smaller (second column), and that 57% of the discarded packets in this configuration are redundant (third column). As a consequence, in the single-receiver configuration a receiver generates almost three times as many NAKs (fourth column). Although in the 10-receiver configuration a receiver processes redundant packets (fifth column), its overall processing rate of non-redundant packets is higher (sixth column), and hence the delivery time is shorter. We observed that the inversion phenomenon depends on the NAK processing time; as the NAK processing time becomes smaller, the inversion occurs at higher transmission rates (for example, when E[Y_n] = 5/121 the inversion between 1 and 10 receivers occurs at a rate of 3.0). When NAK processing times are neglected completely, the inversion phenomenon disappears, as shown in Figure 5 (Figure 5 employs (7) and (11) similarly to Figure 4, yet considers E[Y_n] to be 0). For completeness we have also explored the delivery time when neglecting the processing time required to discard a packet, i.e., using (7) and (11) with E[Y_d] set to zero. The results are presented in Figure 6, which shows behavior similar to that of the sender-initiated protocol in Figure 3.
The main difference between the two is that in Figure 6 the delivery time does not seem to converge at high rates; the reason is that in the receiver-initiated protocol, more NAKs are generated as the transmission rate increases, causing extra overhead.

6.1.2 Simulation Results

In order to facilitate the comparison between the simulation results and the computational results, we set the simulation time unit to be the amount of time it takes a receiver to process a single packet in the absence of packet discarding. Accordingly, the simulation time unit is set to 0.1 milliseconds (ms). The network delay is set to 4 time units (0.4 ms). Choosing the data retransmission timeout in the sender-initiated protocol and the NAK retransmission timeout in the receiver-initiated protocol requires some tuning. A short timeout may cause redundant retransmissions (and consequently unnecessary processing), while a long timeout delays the retransmission of lost packets. We set the timeout to 100 time units (10 ms) in both protocols. We use uniformly distributed processing times, so that a processing time with average x is uniformly distributed in the range [0.5x, 1.5x]. The interval between packet transmissions is also uniformly distributed according to the examined transmission rate.

Sender-initiated protocol. Figure 7 compares the results of the bulk-data transfer simulation to the corresponding computational results, in the context of the sender-initiated protocol. The bulk consists of 10000 packets that should be delivered to 10 receivers. The comparison shows that the computational results match the simulation results; simulation results for 1, 100 and 1000 receivers, which are not shown here, show a similar match.

Receiver-initiated protocol. Figure 8 compares the delivery time in the bulk-data transfer simulation (10000 packets that should be delivered to 10 receivers) to the corresponding computational results, in the context of the receiver-initiated protocol. We observe that for the most part the computational and simulation results are similar. However, above a certain (high) rate the delivery time measured in the simulation increases drastically. This deflection is also observed in the experiments with 100 and 1000 receivers, but not in the case of a single receiver. The origin of this phenomenon is the randomized variation of the simulation parameters. As a result of this variation, the rate at which packets are forwarded to the application is smaller in some receivers. These slow receivers continue to work long after all packets have been delivered to most of the destinations.

Figure 9. Analysis vs. simulation: latency of 1000 packets generated during a single ON period.

Effect of ACK loss. To study the effect of ACK loss on the expected number of packet transmissions and on the delivery time, we conducted two simulations using a set of 10 receivers. In the first simulation ACKs are not lost, whereas in the second the ACK loss probability is 0.01. In both experiments the data packet loss probability is 0.01. The results show that for transmission rates of up to 1.0 approximately 8% more packets are transmitted in the case with ACK loss. For higher rates, however, the difference rapidly decreases until it stabilizes at around 1%. The difference in delivery time exhibits a similar behavior. The effect of ACK loss at higher transmission rates is less significant because the probability that a data packet whose ACK was lost is dropped at another receiver is greater; in this case the lost ACK has no effect, since the packet will be retransmitted anyway.

Effect of ordered delivery. We have conducted simulations of both ordered and unordered delivery, and found no fundamental difference between the delivery times. This is expected, since the average number of transmissions per packet does not depend on the delivery order.
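How discard overhead throttles a congested receiver can be reproduced with a very small simulation. The sketch below is not the paper's simulator: it models a single receiver with no network loss and no retransmissions, draws every processing time and inter-arrival interval with mean x from U[0.5x, 1.5x] as in the settings of Section 6.1.2, and charges a discard cost with mean E[Y_d] = 0.3 whenever the buffer already holds q = 50 accepted packets. Two further assumptions are ours: the buffer limit counts the packet in service, and discard work is served in FIFO order behind the work already queued. The function name `simulate_receiver` is hypothetical.

```python
import random
from collections import deque


def simulate_receiver(rate: float, n_packets: int = 100_000, q: int = 50,
                      mean_proc: float = 1.0, mean_discard: float = 0.3,
                      seed: int = 1) -> tuple[float, float]:
    """Return (discard fraction, accepted packets per time unit) for one receiver.

    Hypothetical toy model: arrivals at `rate` packets per time unit, no loss and
    no retransmissions; a packet arriving while q accepted packets are still
    unfinished is discarded, and discarding also consumes receiver time.
    """
    rng = random.Random(seed)

    def around(mean: float) -> float:
        # A quantity with mean x is drawn uniformly from [0.5x, 1.5x].
        return rng.uniform(0.5 * mean, 1.5 * mean)

    now = 0.0
    busy_until = 0.0      # time at which all work queued so far completes
    unfinished = deque()  # completion times of accepted packets, ascending
    discarded = 0
    for _ in range(n_packets):
        now += around(1.0 / rate)
        # Forget accepted packets the receiver has already finished.
        while unfinished and unfinished[0] <= now:
            unfinished.popleft()
        start = max(busy_until, now)
        if len(unfinished) >= q:
            # Buffer full: the packet is dropped, but dropping still takes time.
            discarded += 1
            busy_until = start + around(mean_discard)
        else:
            busy_until = start + around(mean_proc)
            unfinished.append(busy_until)
    accepted = n_packets - discarded
    return discarded / n_packets, accepted / now
```

Under this toy model, once the receiver saturates its work balances as a + 0.3(r − a) = 1, where a is the accepted (useful) rate and r the arrival rate. This gives a = (1 − 0.3r)/0.7, about 0.57 packets per time unit at r = 2.0 with roughly 71% of arrivals discarded. Sending faster than the receiver can absorb therefore lowers useful throughput rather than merely capping it, which is the overhead that Figure 3 leaves out.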
6.2 Short-Message Transfer Results

This subsection presents computational and simulation results for the case of bursty transmission of short messages. Unless otherwise mentioned, we use the same parameters that were used to obtain the bulk-data results. We present results for the sender-initiated protocol; the results of the receiver-initiated protocol did not differ substantially and hence are not included.

ON/OFF analysis vs. simulation. We start by comparing simulation results with the message latency derived from the analysis presented in Section 4. The setting is as follows. During an ON period, which lasts 333.33 time units, 1000 packets are generated at a rate of three packets per time unit. The transmission rate limit is 1.5. We assume that the following OFF period is long enough to allow all 1000 packets to be delivered before the next ON period begins. Figure 9 compares the message latencies observed in the simulation with the computational results. The curve denoted simulation-ordered shows the results of a simulation in which packets are delivered to the application in the order of their sequence numbers. The curve denoted simulation-unordered shows the results of a simulation in which packets are delivered to the application without ordering constraints. The former curve shows that packets are delivered in bursts. This is because a lost packet delays the delivery of the subsequent packets, even though they are not lost. Thus, when a lost packet is finally delivered, the packets that have already been processed by the receiver but not yet forwarded to the application are delivered at once. Since the analysis in Section 4 ignores in-order forwarding, the latency curve derived from the analytic model is comparable to the simulation-unordered curve rather than to the simulation-ordered curve. Figure 9 shows that most points of the simulation-unordered curve are below the corresponding points on the analysis curve.
This is because at the beginning (as well as at the end) of the simulation run the queues are not full, and therefore packets are not discarded as assumed by the analysis. Consequently, the first packets in the simulation are delivered earlier than expected by the analysis, which improves the latency of the rest of the packets in the simulation run.

High frequency ON/OFF simulation. Next, we ran a simulation of an application that employs shorter ON/OFF periods. In this setting each ON period lasts 50 time units, during which the application generates packets at a rate of three packets per time unit. An OFF period lasts 250 time units, during which no packets are generated. Thus, on average, the application generates 0.5 packets per time unit (recall that each receiver is capable of processing one packet per time unit). The size of the receiver set is 10 and the timeout value is 50 time units. In this measurement, our default setting employs ordered packet delivery (a comparison to the unordered case is provided later). Note that in this setting the assumption n ≫ q, employed throughout the analysis, does not hold. Figure 10 presents the latency of each packet in the first ten ON/OFF periods for sender transmission rate limits of 0.5, 1.0, 1.5 and 2.0. The trend depicted in each graph continues as the simulation progresses.

Figure 10. Simulation: 10 phases of 50-time-unit ON periods, each followed by a 250-time-unit OFF period; latency measurements.

It turns out that with rates of 1.0 and 1.5, all the packets that are generated during an ON period are delivered before the beginning of the next ON period; hence, packets experience low latency. Note that with a rate of 1.5 this holds even though some packets are discarded by the receivers due to congestion.
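The rates in the high frequency ON/OFF experiment follow from simple arithmetic. The sketch below assumes that packets are spaced evenly within each ON period (the text only gives the rate). It generates the packet timestamps of several ON/OFF cycles and checks the long-run generation rate. It also computes a lower bound on the sustainable transmission rate limit: the average generation rate multiplied by E[M], since each packet must be sent E[M] times on average. The helper names are hypothetical, and E[M] is left as an input.

```python
def on_off_generation_times(on_len: float, off_len: float, on_rate: float,
                            cycles: int) -> list[float]:
    """Generation timestamps for `cycles` ON/OFF periods (evenly spaced within ON)."""
    per_on = round(on_len * on_rate)  # packets generated in one ON period
    period = on_len + off_len
    return [c * period + i / on_rate
            for c in range(cycles) for i in range(per_on)]


def average_generation_rate(on_len: float, off_len: float, on_rate: float) -> float:
    # Packets per ON period divided by the length of a full ON/OFF cycle.
    return on_rate * on_len / (on_len + off_len)


def min_sustainable_rate(avg_rate: float, e_m: float) -> float:
    # Each packet is sent E[M] times on average, so a rate limit below
    # avg_rate * E[M] lets unsent packets accumulate from cycle to cycle.
    return avg_rate * e_m


times = on_off_generation_times(50, 250, 3, cycles=10)
print(len(times), average_generation_rate(50, 250, 3))  # 1500 0.5
print(1000 / 3)  # ON period needed for 1000 packets at 3 per time unit, about 333.33
```

Because E[M] > 1 whenever p > 0, a rate limit equal to the average generation rate falls short of this bound, and the shortfall grows with the receiver set.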
With transmission rates of 0.5 and 2.0, not all injected packets are delivered before the next ON period commences. For a rate of 0.5 there is a small accumulation of packets in each round: although the transmission rate limit of 0.5 equals the average packet generation rate, the sender cannot keep up with the application's submission rate, because it must also retransmit packets that are lost in the network. At a rate of 2.0 the receiver's queue fills up, which leads to numerous packet discards. Consequently, the number of retransmissions increases, resulting in a congestion collapse in which the overall transmission rate exceeds the processing capability of a single receiver. Overall, when targeting 10 receivers, we have observed that with rates ranging from 0.6 to 1.7 no packets accumulate between rounds (ON/OFF cycles). When running the same experiments with 100 receivers, the accumulation-free range shrinks to rates from 0.9 to 1.2. This is because with 100 receivers a packet is sent, on average, more times (until it reaches all receivers) than with 10 receivers (see, for example, Figure 1). Hence, under the same transmission rate, the last retransmission of a packet in the case of 100 receivers is expected to occur later than in the 10-receiver case, which means that packets are transmitted over a longer period of time.

Probability of timely delivery. Since the context of our research is real-time applications, we have evaluated the probability of delivering a packet before its deadline. We consider a short-message application that generates messages according to an ON/OFF process with the parameters used in the high frequency ON/OFF simulation. We recorded the latency of each message over 1000 ON/OFF phases. The simulation was repeated for transmission rates ranging from 0.1 to 3.3.
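The probability of timely delivery is an empirical fraction over the recorded message latencies. The sketch below computes it and also shows why in-order delivery hurts it. Suppose each packet becomes ready at the receiver at some time. In-order delivery releases packet i only once packets 0..i are all ready, i.e. at the running maximum of the ready times, so one late packet holds back every later one. The lists are assumed to be indexed by sequence number, the function names are hypothetical, and in practice the ready times would come from a simulator.

```python
from itertools import accumulate


def delivery_times(ready: list[float], ordered: bool) -> list[float]:
    # In-order delivery releases packet i once packets 0..i are all ready.
    return list(accumulate(ready, max)) if ordered else list(ready)


def on_time_probability(generated: list[float], ready: list[float],
                        deadline: float, ordered: bool) -> float:
    """Fraction of packets whose latency (delivery - generation) meets the deadline."""
    if len(generated) != len(ready) or not generated:
        raise ValueError("need equally sized, non-empty inputs")
    delivered = delivery_times(ready, ordered)
    on_time = sum(d - g <= deadline for g, d in zip(generated, delivered))
    return on_time / len(generated)


gen = [0.0, 1.0, 2.0, 3.0]
ready = [1.0, 10.0, 3.0, 4.0]  # packet 1 was lost and retransmitted late
print(on_time_probability(gen, ready, 2.0, ordered=False))  # 0.75
print(on_time_probability(gen, ready, 2.0, ordered=True))   # 0.25
```

In the example a single late packet costs one deadline miss without ordering, but three with ordering, because packets 2 and 3 wait for it. The gap is largest when deadlines are tight relative to the retransmission timeout.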
Figure 11 presents the probability of on-time delivery to 10 receivers for different deadlines (50, 100, 150 and 200 time units). As expected, the probability decreases as the deadline gets tighter. It is also observed that at extremely low and extremely high transmission rates the probability of timely delivery is practically zero. With 100 receivers the on-time delivery probability graph is much narrower than that of 10 receivers: non-negligible delivery probabilities are obtained only for transmission rates ranging from 0.9 to 1.2. Figure 12 presents the on-time delivery probability under a deadline of 125 time units with receiver sets of 1, 10 and 100. As expected, the probability of timely delivery decreases as the number of receivers increases. Interestingly, the corresponding receiver-initiated simulation yields similar results, i.e., the inversion phenomenon does not occur in this setting. The reason is that, unlike the bulk-data transfer simulation, where packets are transmitted continuously (except for tail cases), here the transmitter experiences significant pauses during packet retransmissions. These pauses relieve the pressure on the receivers, and thus the inversion phenomenon is avoided.

Figure 11. Simulation: delivery by deadline probability with 10 receivers under various deadlines.
Figure 12. Simulation: delivery by deadline probability with different receiver sets.
Figure 13. Simulation: delivery by deadline probability with 10 receivers under various deadlines, when ordered delivery is not required.

Figures 11 and 12 show that transmission rates slightly higher than 1.0 usually achieve the best probability of timely delivery. This contrasts with bulk-data transfer, in which a transmission rate of 1.0 produces the shortest delivery times (see Figure 7).
The main reason for this is that, as long as the rate is not too high, packets that are sent during the ON period are not discarded but are stored in the receiver queue and processed during the OFF period. In addition, with a rate of 1.0 a receiver may have idle periods, caused, for example, by the loss of previous packets or by a favorable variation of processing times; with a rate higher than 1.0 the amount of idle time decreases. As a result, the receiver service rate improves and the message latency decreases.

Probability of unordered timely delivery. Figure 13 presents the probability of timely delivery to 10 receivers when packets can be delivered out of order. These results were obtained using the same ON/OFF settings that were used for in-order delivery, and are hence comparable with the results presented in Figure 11. The comparison reveals that with unordered delivery the probability of timely delivery is considerably higher than with in-order delivery; this is particularly evident under tight deadlines. The reason is that with ordered delivery a missing packet delays the delivery of all subsequent packets.

CONCLUSIONS

In this paper we studied aspects related to real-time message dissemination. We considered two types of applications: one that typically generates large messages and another that generates short messages. We studied the effects of bursty data generation and how they can lead to congestion at the receivers. Both sender-initiated and receiver-initiated protocols have been studied. We provided an analytic model that captures the dependency between the transmission rate limit and the expected message latency. The simulation results indicate that the simplifications made by the analysis have a negligible effect. The presented analytic model can be incorporated into transmission rate control logic to attain a high probability of timely delivery.

REFERENCES

[1] B. Carmeli, G. Gershinsky, A. Harpaz, N. Naaman, H. Nelken, J. Satran, and P. Vortman. High throughput reliable message dissemination. In SAC '04: Proceedings of the 2004 ACM Symposium on Applied Computing, pages 322–327, 2004.
[2] D. Chiu and R. Jain. Analysis of the increase/decrease algorithms for congestion avoidance in computer networks. Journal of Computer Networks and ISDN, 17:1–14, 1989.
[3] A. Erramilli, M. Roughan, D. Veitch, and W. Willinger. Self-similar traffic and network dynamics. Proceedings of the IEEE, 90(5):800–819, 2002.
[4] G. Hasegawa and M. Murata. Survey on fairness issues in TCP congestion control mechanisms. IEICE Transactions on Communications, E84-B(6):1461–1472, 2001.
[5] V. Jacobson. Congestion avoidance and control. In ACM SIGCOMM '88, pages 314–329, Stanford, CA, August 1988.
[6] R. Jain and S. A. Routhier. Packet trains: measurements and a new model for computer network traffic. IEEE Journal on Selected Areas in Communications, 4(6):986–995, 1986.
[7] Java Community Process. JSR-1: Real-time Specification for Java 1.0, January 2002.
[8] W. E. Leland, M. S. Taqqu, W. Willinger, and D. V. Wilson. On the self-similar nature of Ethernet traffic (extended version). IEEE/ACM Transactions on Networking, 2(1):1–15, 1994.
[9] C. Maihöfer and R. Eberhardt. A delay analysis of reliable multicast protocols. In Proceedings of the 2001 International Symposium on Performance Evaluation of Computer and Telecommunication Systems (SPECTS), pages 1–9, 2001.
[10] Object Management Group (OMG). Real-time CORBA Specification, formal/05-01-04, January 2005.
[11] O. Ozkasap and M. Caglar. Traffic characterization of transport level reliable multicasting: comparison of epidemic and feedback controlled loss recovery. Computer Networks, 50(9):1193–1218, 2006.
[12] K. Park, G. Kim, and M. E. Crovella. On the effect of traffic self-similarity on network performance. In Proceedings of SPIE International Conference on Performance and Control of Network Systems, 1997.
[13] S. Pingali, D. F. Towsley, and J. F. Kurose. A comparison of sender-initiated and receiver-initiated reliable multicast protocols. In Proceedings of the Sigmetrics Conference on Measurement and Modeling of Computer Systems, pages 221–230, 1994.
[14] Reliable multicast messaging (RMM). http://www.haifa.il.ibm.com/projects/software/rmsdk/.
[15] J. Widmer, R. Denda, and M. Mauve. A survey on TCP-friendly congestion control. IEEE Network, 15(3):28–37, 2001.
[16] M. Yamamoto, H. Ikeda, J. F. Kurose, and D. F. Towsley. A delay analysis of sender-initiated and receiver-initiated reliable multicast protocols. In Proceedings of INFOCOM '97, Sixteenth Annual Joint Conference of the IEEE Computer and Communications Societies: Driving the Information Revolution, page 480, 1997.
[17] X. Zhang and K. G. Shin. Delay analysis of feedback-synchronization signaling for multicast flow control. IEEE/ACM Transactions on Networking, 11(3):436–450, 2003.