
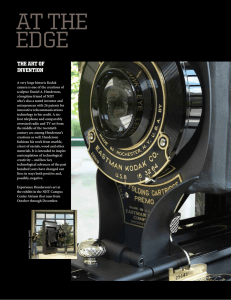


>> Jason Williams: Great. Thanks, everyone, for coming. It's a pleasure to introduce Matt Henderson from the University of Cambridge. Matt is nearly done with his PhD. He's going to be finishing in December. He is in the Dialog Systems Research Group at Cambridge, which I'm very fond of because that's also where I took my PhD. I think he's doing some really interesting work with dialog state tracking using recurrent neural networks, and he's going to tell us about that today. He is on his way back to Cambridge, having just finished an internship this summer at Google. He has a Google Fellowship that is funding his PhD. Thanks very much for stopping on your way back, and we are looking forward to your talk. And I'll mention, too, that in addition to the people in the room there are also people watching online, about this many again, at least.

>> Matt Henderson: Okay. Thank you very much, Jason, and everybody. Thanks for the invitation, and it's my pleasure to talk a little bit about dialog state tracking, and in particular about applying recurrent neural networks to this problem. First of all, just an overview of what dialog state tracking is. In an example dialog, I'll show you what the dialog state is at each turn. In this dialog about restaurant information, in the first turn the user asks for something cheap that's in the center. We annotate the dialog state with price range equals cheap and area equals center. An example of adding goals is the next turn, where the user says, "And I want it to serve Indian food." So we add food equals Indian to the goal as part of the dialog state. Then they're told there is no such place, that there's nothing serving Indian in the center, and we get an example of the goal changing: food goes from Indian to Chinese. Then, say, the system offers them Seven Days, which is a nice Chinese restaurant in Cambridge. Then they ask for the phone number. So part of the dialog state might also be the fact that they have requested the phone number.
We might also track other things, such as the search method, which is how the user is interacting with the system at that point. As a simple example, at the end they just want to end the conversation, so the search method is finished. So dialog state tracking is tracking this structured output through a sequence of inputs from both the system and the user. This is the task to which we are going to try to apply recurrent neural networks. This year, with Jason Williams and Blaise Thomson, my colleague in Cambridge, we organized two research challenges, the dialog state tracking challenges DSTC2 and DSTC3. DSTC2 looked at restaurant information dialogs like the one I just presented. There were lots of labeled dialogs for training and a large test set of mismatched dialogs. You were asked to output a distribution over the dialog state at each turn. DSTC3 looked at the challenge of extending the domain: there was all the restaurant information data from DSTC2, and then a test set in an extended domain, which now had not only restaurants but hotels and also cafés and pubs and a bunch of new slots. All of this data is available. It's not all labeled. And it's available on the website there. You can just search using your favorite search engine for "dialog state tracking challenge" and you should find it. This is an overview comparing the two challenges. There's the ontology for DSTC2 and for DSTC3. First you'll note that there's a bunch of new slots that didn't appear in DSTC2, the restaurant information one. Also, if you look at type, the cardinality increases from 1 to 3 in the third challenge. That's because we're not just talking about restaurants, but also pubs and coffee shops. Not only that, the possible values for each slot also changed between the two challenges.
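The dialog state from the restaurant example at the start of the talk can be written down as a small data structure. This is only an illustrative sketch: the class `DialogState`, the slot keys (`pricerange`, `area`, `food`, `phone`) and the method label `byconstraints` are assumptions made for the example, not something stated in the talk; only the `finished` method is mentioned by the speaker.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class DialogState:
    """Hypothetical container for the three parts of the state described in the talk."""
    goals: Dict[str, str] = field(default_factory=dict)  # goal constraints, slot -> value
    requested: Set[str] = field(default_factory=set)     # slots the user asked about
    method: str = "none"                                  # how the user is searching


def example_dialog() -> List[DialogState]:
    """One state per user turn of the example dialog (labels are assumed names)."""
    base = {"pricerange": "cheap", "area": "center"}
    return [
        DialogState(dict(base), set(), "byconstraints"),
        # Goal added: the user also wants Indian food.
        DialogState({**base, "food": "indian"}, set(), "byconstraints"),
        # Goal changed: nothing serves Indian, so food becomes Chinese.
        DialogState({**base, "food": "chinese"}, set(), "byconstraints"),
        # The user requests the phone number of the offered restaurant.
        DialogState({**base, "food": "chinese"}, {"phone"}, "byconstraints"),
        # The user ends the conversation.
        DialogState({**base, "food": "chinese"}, set(), "finished"),
    ]
```

A tracker does not output one such state per turn but a distribution over them; the structure above is what each hypothesis in that distribution looks like.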
Just for interest, there is a list of all of the research teams that competed in either or both of those dialog state tracking challenges, including Microsoft Research, of course. Let's take a step back and consider how this problem is usually solved, starting from speech recognition. The normal approach is to split it into two. First there is a spoken language understanding step, which takes as input the speech recognition and outputs dialog acts. A dialog act is a combination of a speech act, like deny, inform, request, request alternatives, goodbye, acknowledge, all of these kinds of things, with a possible slot value assignment. Here the example is deny food equals Italian, which might correspond to "No, I didn't say I want Italian." Then the dialog state tracker takes the output of spoken language understanding, these dialog acts, updates its internal state and outputs what it thinks the new dialog state is. For example, these things from the first slide, something like food equals Chinese, area equals south. What I'm looking at here is using recurrent neural networks to go straight from the speech recognition to outputting the dialog state. This has the advantage of avoiding any possible bottleneck caused by forcing the system to output a distribution over dialog acts. It also eliminates the need to design this ontology, or grammar, of all the possible dialog acts that we need to model. This is an overall picture of the RNN model which is used for dialog state tracking. It takes as its input the speech recognition, which is ASR, automatic speech recognition, as well as the last system action, and it updates the internal memory m and outputs p, which in this case is a probability vector that gives the probability of each value for a particular slot. It's also possible to chain these types of models together to give a structured joint distribution. I'll come back to that. One of the main challenges is generalizing to unseen dialog states.
There might be some very rare values. For example, Jamaican food rarely gets seen, whereas Chinese food is seen quite a lot in the data. Also, in the third dialog state tracking challenge, remember, there were slots that we had never seen before, so we want to be able to transfer learning from examples we have seen to rarely seen dialog states.

>>: In terms of design [indiscernible] itself, is the intention of incorporating these slots from SLU into [indiscernible] states of the [indiscernible] or is it [indiscernible]

>> Matt Henderson: It's kind of bypassing all of SLU, except we do need to define what slots exist and what their possible values might be.

>>: Do you have to have a [indiscernible] or is there [indiscernible]

>> Matt Henderson: Yeah. The idea is that if we label what the dialog state is, we don't need to come up with all the possible speech acts that might exist to change your goal or change your dialog state. For example, this idea of denying a slot: we can just learn that that exists in the data.

>>: [indiscernible] requires machine action. That's part of [indiscernible]

>> Matt Henderson: No. That's a separate thing. I suppose we could just get the n-grams from what the system said, for example.

>>: Can I ask you something about the preview?

>> Matt Henderson: Sure.

>>: I usually think of an RNN as having an input, an output and a memory. Here I think p is serving as the output. That's the quantity you are really interested in. And that is feeding back in, so it's sort of consuming its own output. I was curious if that was a conscious design choice.

>> Matt Henderson: Yeah. There is a baseline system which operates very simply on p, which is called the focus baseline. Basically it applies a simple linear transform to p every turn based on what it sees from the SLU.
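As a rough illustration of the "simple linear transform of p" just mentioned, the sketch below shows one way a focus-style update for a single slot could look. The function name `focus_update` and the exact rule (scale the old beliefs by the mass the SLU did not assign this turn, then add the new SLU scores) are assumptions for illustration; the talk does not spell out the formula.

```python
def focus_update(prev, slu_scores):
    """Update the belief over one slot's values from this turn's SLU scores.

    prev       : dict value -> probability after the previous turn
    slu_scores : dict value -> SLU confidence that the user informed this value
    Any probability mass not listed in the result belongs to "nothing mentioned yet".
    """
    # Mass the SLU left unassigned this turn; old beliefs survive in this proportion.
    keep = max(0.0, 1.0 - sum(slu_scores.values()))
    new = {value: prob * keep for value, prob in prev.items()}
    # New evidence is added on top, so recent turns are the "focus".
    for value, score in slu_scores.items():
        new[value] = new.get(value, 0.0) + score
    return new


# Usage: the user first says Indian, then changes to Chinese.
belief = focus_update({}, {"indian": 0.6})
belief = focus_update(belief, {"chinese": 0.8})
```

Because the update is linear in the previous `p`, a recurrent model that receives its own previous output as input can, in the worst case, learn to reproduce this behavior.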
At the very worst, this type of model could just emulate that baseline: since it's getting its previous output p as input, it can emulate this focus baseline, which we published with the results. Of course you could also not let p go back in, and part of m could be p. Another reason is that we actually, in some way, factor the recurrent neural network by slot, and by value for a given slot. It's useful for one of the factors, which is looking at assigning a certain confidence to a given value, to know what that value was given in the previous turn. We would need at some point either to factor m into one component for each value, or it's kind of more natural to use p.

>>: [indiscernible] P is the probability vector for each value but your evaluation tasks [indiscernible] do you take the maximum probability…

>> Matt Henderson: Actually, I didn't really explain this so well, but the output should be a distribution.

>>: [indiscernible] and so you use [indiscernible] to measure?

>> Matt Henderson: We use L2 to measure, for evaluation. For training, it's trained by maximizing the probability of the whole sequence.

>>: [indiscernible] m that's a vector [indiscernible] is it part of the network [indiscernible] vector or is it something that you have separately that has the weights between network and [indiscernible]

>> Matt Henderson: I think in a couple of slides I have an explicit mathematical representation of it, but m is getting put back in and it's treated as if it's basically part of the features that are part of the input. It comes in with the same weights as the features. It seems like utility too.

>>: One quick question? [indiscernible]

>> Matt Henderson: That's what I'm coming to. I was touching on the idea of generalization, and the idea taken here is to use the feature representation to de-lexicalize the ASR, so that these examples would actually have very similar vectors fs, where s is a slot, and fsv, where s is a slot and v is a value.
That's because in each case we have a value followed by the slot name, as in Chinese food and Jamaican food. Intuitively we want to be able to transfer learning, so that if someone says "I want value slot", that contributes positively to the hypothesis slot equals value. Then this is how we represent the ASR N-best list. An ASR N-best list is a list of weighted sentences, and f just stores the n-grams, typically for n equals 1, 2, 3, weighted according to where they came from in the ASR N-best list. Then fs consists of the n-grams where we have tagged the slot values and the slot names, so we get these extra components. And then fsv is something similar for v equals Indian and v equals Italian, where we've tagged the value. You'll note that the two vectors in the bottom two rows are very similar in terms of where they have nonzero components. They have the same nonzero components. That allows us to transfer learning between examples of, you know, Italian food and Indian food. And then this is the actual structure of the RNN. The gray box here is activated for each value that we managed to tag in the de-lexicalization process. It is a neural network with a single scalar output gv, which is expanded when we unroll the RNN, and it takes as its input the tagged features for the value and the slot, as well as all the n-grams that are stored in f. Also, f stores the representation of the machine action. It also takes pv, which is what I was alluding to with your question, Jason, about why it's nice to let p back in: we can take the corresponding component of p and make that part of the input to the network. That gives a scalar for each value, which can then go into a softmax to give us p, and m just evolves in a typical single-layer kind of way. Then p prime and m prime are for the next turn, and this will get activated again.

>>: Do you create the gray boxes dynamically?

>> Matt Henderson: Yes.
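The de-lexicalized n-gram features described in this part of the talk can be sketched as follows. This is a minimal illustration under stated assumptions: the helper names (`ngrams`, `tag`, `extract`), the tag strings `<slot>` and `<value>`, and the restriction to single-word values are all choices made for the example, not details confirmed by the speaker.

```python
from collections import defaultdict


def ngrams(tokens, max_n=3):
    """Yield all n-grams (as strings) for n = 1..max_n."""
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            yield " ".join(tokens[i:i + n])


def tag(tokens, slot, values):
    """Replace the slot name and any listed value with generic tags."""
    return ["<slot>" if t == slot else "<value>" if t in values else t
            for t in tokens]


def extract(nbest, slot=None, values=()):
    """Sum n-gram weights over an N-best list of (sentence, weight) pairs.

    With no slot, this gives the untagged vector f.
    With a slot and its values, only n-grams containing a tag are kept,
    which gives the extra tagged components (fs or fsv).
    """
    feats = defaultdict(float)
    for sentence, weight in nbest:
        tokens = sentence.lower().split()
        if slot is not None:
            tokens = tag(tokens, slot, set(values))
        for gram in ngrams(tokens):
            if slot is None or "<" in gram:
                feats[gram] += weight
    return dict(feats)


# Two different values produce identical tagged features.
nbest_indian = [("i want indian food", 0.7), ("i want indian", 0.3)]
nbest_italian = [("i want italian food", 0.7), ("i want italian", 0.3)]
f_v_indian = extract(nbest_indian, "food", ["indian"])
f_v_italian = extract(nbest_italian, "food", ["italian"])
```

Here `f_v_indian` and `f_v_italian` have the same nonzero components, which is the property that lets weights learned on a frequent value carry over to a rare one.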
>>: And that means that if I see the word Italian somewhere in any of the ASR, then I now have a gray box for Italian. If there are common confusions, say some trigram that is often misrecognized for the word Italian, then you would have to know about that a priori in order to create that gray box. In other words, there's no direct connection from, I guess it would be from f to p prime, that would let you sort of infer or learn common [indiscernible]

>> Matt Henderson: It sounds like a planted question, because the next slide is this. So I will say this is useful. As you said, we don't need to actually know ahead of time what values exist, which is attractive in some ways, for example for training across slots. We can just learn this gray box and it will get activated for every value that we recognize. We might not know the values ahead of time. Someone looks at their phone and we see where they are from their GPS and then we know what [indiscernible] [indiscernible]

>>: [indiscernible] what is the [indiscernible] vector here and what's the feedback for [indiscernible] on that recurrent [indiscernible]

>> Matt Henderson: M is the memory, and the calculation of gv I've written as a neural network. That can have as many hidden layers as you like in there.

>>: [indiscernible]

>>: [indiscernible] gv is the [indiscernible]

>> Matt Henderson: Gv is calculated as a neural network of all of its inputs, so there is a hidden layer involved in the calculation of gv. There is also a hidden layer involved in the calculation of m prime given f and m.

>>: [indiscernible] solve that p and fs as part of the neural network. I think [indiscernible] in terms of high-end structure. [indiscernible]

>> Matt Henderson: Yeah. It could. The thing is that p is used as the output to tell us what it thinks the dialog state is, but m is this. It just learns to use this however it wants to.

>>: [indiscernible]

>> Matt Henderson: Yeah. That would work. You could say m could be absorbed as a hidden layer.
I suppose so. Yeah. So there would be sort of a more structured [indiscernible] for g.

>>: [indiscernible] only represents the history of f? Does f have any information about p or something like that?

>> Matt Henderson: That's true, yeah.

>>: And the m prime is calculated using the neural networks, not the simple dialog state.

>> Matt Henderson: Actually, I think we use a logistic, something like sigma of w times f plus another w times m, but it could be whatever.

>>: Can you tell me what p sub N is?

>> Matt Henderson: P sub N, oh yeah, that needs some explanation. There is an extra hypothesis that no value has been mentioned yet for that slot, so that's stored somewhere. We just say it's stored as the last value, N, in p.

>>: So neural networks [indiscernible] sequence. What does the sequence represent here?

>> Matt Henderson: Prime means the next time step, so this could be copied below, yeah.

>>: [indiscernible] so m includes history derived from f. Let's say that you train this on data that contains some set of slots and then you're going to run it on data that contains a new set of slots. m now doesn't have any abstraction in it that gives it some way of tracking a new slot it hasn't seen before. Is that… Does that kind of follow…

>> Matt Henderson: Yeah, it does. There are things you can try. For example, fs summed over all slots might give you some interesting features, so that would tell you how likely it is that some slot has been mentioned in a turn. Or you can take fv summed over values and then summed over all slots. Actually, the sum of fv over values is part of fs.

>>: f includes everything as well. f includes fs and fv, or…?

>> Matt Henderson: Since fs is just like a tagging of f.

>>: f is just [indiscernible]

>>: [indiscernible] convergence?

>> Matt Henderson: No. It's just [indiscernible]

>>: It's just arrived [indiscernible]

>> Matt Henderson: Yeah.
The thinking there is that you could reconstruct the tagging potentially from the…

>>: [indiscernible] just a bag of words.

>> Matt Henderson: Yeah, that's right, but I'm thinking you could add some abstraction, and a useful abstraction might be a sum over s or that kind of thing, or averaging or something.

>>: [indiscernible] recurrent network, that each tag corresponds to one turn.

>> Matt Henderson: Yeah, that's right.

>>: Rather than bidding in sequence.

>> Matt Henderson: That's right. Although it could be interesting to run the recurrent neural network over the confusion network that way.

>>: [indiscernible] so many turns, right, like five turns, ten turns?

>> Matt Henderson: Something like 20 turns is typical.

>>: [indiscernible]

>> Matt Henderson: Yeah.

>>: [indiscernible] practice a little dialog, maybe just five or ten, that's a lot. [indiscernible]

>>: [indiscernible]

>> Matt Henderson: Yeah.

>>: [indiscernible]

>> Matt Henderson: Yeah, sure. So pN is a special contribution to the probability, which is the probability that nothing has been mentioned for that slot. At the beginning of the dialog, pN should be one. And then if you say Chinese food or something, all the probability goes to Chinese. There will always be a sequence of zero or more turns at the beginning where you haven't mentioned a value for that slot. So it's just this sort of special value that gets a kind of…

>>: [indiscernible]

>> Matt Henderson: It's kind of like no, none or something.

>>: [indiscernible] so these gray boxes exist for every value, but what happens if you have rarely seen or new words or something like that [indiscernible] something like that? Is there some parameter tying going on [indiscernible] values?

>> Matt Henderson: Yeah.
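Putting the pieces from this discussion together, one turn of the per-slot model can be sketched in plain Python. This is a simplified illustration, not the speaker's implementation: the parameter layout in `params`, the single hidden layer, the omission of fs, and the way the "none" score is computed are all assumptions. What does come from the talk is the shared network applied once per tagged value (the gray box), a zero softmax contribution for untagged values, the special pN entry, and the memory update of the form sigma(W f + W m).

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(w, x):
    return sum(a * b for a, b in zip(w, x))


def softmax(scores):
    top = max(scores.values())
    exps = {k: math.exp(s - top) for k, s in scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def rnn_step(f, f_v, p, m, params):
    """One turn for a single slot.

    f      : list, untagged turn features
    f_v    : dict value -> list of tagged features (tagged values only)
    p      : dict value -> previous probability, including the key "none"
    m      : list, previous memory
    params : dict of weights (hypothetical layout, see zero_params)
    """
    scores = {}
    for value in p:
        if value == "none":
            continue
        if value in f_v:
            # The gray box: the same weights are reused for every tagged value.
            x = f + f_v[value] + [p[value], p["none"]] + m
            hidden = [sigmoid(dot(row, x)) for row in params["W_hidden"]]
            scores[value] = dot(params["w_out"], hidden)
        else:
            # Untagged values contribute a constant zero to the softmax.
            scores[value] = 0.0
    # Assumption: the "none" score is a linear function of f, m and p_N.
    scores["none"] = dot(params["w_none"], f + m + [p["none"]])
    p_new = softmax(scores)
    # Memory update of the form sigma(W_f f + W_m m).
    m_new = [sigmoid(dot(wf, f) + dot(wm, m))
             for wf, wm in zip(params["W_f"], params["W_m"])]
    return p_new, m_new


def zero_params(n_f, n_fv, n_m, n_hidden):
    """All-zero weights with the right shapes, for a quick shape check."""
    n_x = n_f + n_fv + 2 + n_m
    return {
        "W_hidden": [[0.0] * n_x for _ in range(n_hidden)],
        "w_out": [0.0] * n_hidden,
        "w_none": [0.0] * (n_f + n_m + 1),
        "W_f": [[0.0] * n_f for _ in range(n_m)],
        "W_m": [[0.0] * n_m for _ in range(n_m)],
    }
```

At the start of a dialog `p` would be `{"none": 1.0}` plus zeros for the known values, matching the description of pN being one before anything has been mentioned.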
The idea is that when you're training the parameters in the gray box, because of regularization you'll prefer to use weights which go from the tagged value features, from fv, to gv, because those can potentially explain more examples. "Something serving blank" is a really common frame for wanting the blank. So that means that if you've seen Chinese food, Indian food and so on, and then you see this new thing, Jamaican, then because it recognizes Jamaican from the ontology, the parameters there will mean that you get a large contribution for the Jamaican part. If you want to tune towards a known slot that you have data for, then, as Jason was touching on, maybe you can't find a tagging for a particular value. Maybe the ASR always confuses Chinese with some other word, so we never catch it. So we can add this component h, which takes as input f and m and directly contributes to the softmax. That lets us learn these confusions and value-specific behavior. Training these models is done using stochastic gradient descent, unrolling the whole network, but initialization is quite important to get some gains. The first thing to do is called shared initialization, which means you train a slot-independent model first across all the slots you have data for, and then tweak it towards each individual slot. Another thing to do addresses the fact that these inputs are quite large. They depend on the size of the vocabulary. In our case it's maybe about 5000-dimensional vectors coming as input to the network. We learn a sparse embedding of these features, and that can be done, for example, using a denoising autoencoder. Some results are given here which show the benefit of using these initialization techniques. Throughout this talk the main metrics I'll look at are the joint goal accuracy and the joint goal L2, which look at the quality of our predictions for the goal constraint part of the dialog state.
Obviously, accuracy is the fraction of turns where the top hypothesis is correct, and the L2 is the L2 norm, so the lower the better, and that's looking at the quality of the scores as probability distributions, in a way. Basically, the point here is that the best results come from using these two techniques in tandem.

>>: For the first of the two models that you showed, if you never create a gray box for some value, then do you assume that the probability of that value is zero, or do you have some kind of hedging guess at what the value should be?

>> Matt Henderson: What we've done so far is just assume the contribution to the softmax is zero, so all the ones which haven't been activated have the same constant probability. Then the model can learn dynamically how much to put on this hypothesis of none or null, so you can change what that constant is. Even if you can't tag anything intelligent, it can learn how much probability to put on something versus nothing, so it would be flat and then go up and down. But you could calculate a better probability.

>>: So they're all the same but not necessarily so?

>> Matt Henderson: They are all the same, but not necessarily the same across turns. This is showing how we can use this first model, which is the one we're talking about, to train a slot-independent model, which, as I mentioned on the previous slide, can be used to initialize training for slots that we do have data for. We can also use this slot-independent model straight away to track a slot that we haven't seen. For example, "has TV" is a new slot in DSTC3, so in deployment we can use the model straight away to track any new slots. This is an overview of the approach that was taken in the two challenges for training and deployment. In DSTC2, Jason's approach and this RNN approach battled at the top. Jason's approach got the top accuracy, while the RNN did well on the L2 score.
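The two metrics described above can be computed as in the sketch below. The function name `joint_goal_metrics` and the input format are hypothetical. The L2 here is the Euclidean distance between the output distribution and the one-hot vector for the correct hypothesis, following the spoken description; whether the official scoring uses this norm or its square is not stated in the talk, so treat that detail as an assumption.

```python
import math


def joint_goal_metrics(turns):
    """Compute (accuracy, mean L2) over a list of turns.

    turns : list of (dist, truth) pairs, where dist maps each joint-goal
            hypothesis to its probability and truth is the correct hypothesis.
    """
    correct = 0
    l2_total = 0.0
    for dist, truth in turns:
        # Accuracy: is the top-scoring hypothesis the right one?
        top = max(dist, key=dist.get)
        correct += int(top == truth)
        # L2: distance between the distribution and the one-hot truth vector.
        keys = set(dist) | {truth}
        squared = sum((dist.get(k, 0.0) - (1.0 if k == truth else 0.0)) ** 2
                      for k in keys)
        l2_total += math.sqrt(squared)
    n = len(turns)
    return correct / n, l2_total / n


# Usage: one perfectly confident correct turn and one confident wrong turn.
accuracy, l2 = joint_goal_metrics([
    ({"food=chinese": 1.0}, "food=chinese"),
    ({"food=chinese": 0.0, "food=indian": 1.0}, "food=chinese"),
])
```

Accuracy only looks at the top hypothesis, while L2 also penalizes overconfident or poorly calibrated scores, which is why a tracker can lead on one metric and not the other.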
I'm showing here the ASR system, meaning it only took the speech recognition as input, and then the SLU system, which took as its input the dialog acts that I was mentioning before. You see the ASR system did the best, and we think that's because we're avoiding the bottleneck and avoiding the intermediate semantic representation. In the third challenge the RNN system basically came top for most of the metrics. Here I'm showing one system that took both the ASR and the SLU and one system that just took the ASR, and comparing them to the top comparable competing system. The RNN approach did well, particularly for the accuracies, and I think of particular interest is not so much the ASR plus SLU in this case, but just the ASR, and that's because we're extending to a new domain and the question would arise: where does this SLU come from? If we can do well without relying on a spoken language understanding component, then we don't have to explain where we got the training data for this [indiscernible] understanding. But because the SLU was included with the training data, when we trained the system I used it and got improved accuracies from this extra knowledge. The rest of the talk is going to look at how we can improve the accuracy of the ASR system slightly, up to .63, by using an online unsupervised learning approach. I mentioned the word-based system is of most interest, because we're not assuming any training data for creating a new SLU, and also we are not designing any intermediate semantic form. We're going to present a technique which adapts the initial parameters, which come from this shared model that's trained across all slots, and it can learn from the unlabeled examples that it sees while tracking dialogs. So it will update online, tracking dialogs as it goes, and try to improve its predictions. This is an example of a dialog that we want to be able to learn from.
We're showing the distribution that an initial model might output at each turn. If the user says they want Chinese food, an initial model will be able to recognize the value Chinese, as the string matches identically. But in the next turn, they're told there's nothing serving Chinese and they say they want something serving pizza. Because they were told there's no matching place, and they use the keyword serving, which the model might know corresponds to food, the initial model knows, or might think, that the goal has changed away from Chinese, but not necessarily what to. There might be a flat distribution here from an initial model. But then when the user clarifies and says it's Italian that they are looking for, then because, again, the strings match, the model would be sure that they want Italian food. So the idea here is that we want to be able to propagate back from where it's confident [indiscernible] dialog, back through turns to where we were kind of flat and unsure, but not so far back that we destroy what we had in the first turn. We are thinking we can boost the probability of Italian in the middle turn and learn that pizza corresponds to Italian. The way this is done is by defining an unsupervised training criterion which compares the output of an initial model with the output of a model which has updated parameters w star. The basic idea is to use entropies: H is the entropy of a distribution, and H with two arguments is the cross entropy of two distributions, so this sum here weights pairs of consecutive outputs from the initial model and this new updated model. It weights the distances by the uncertainty that the initial model had. When you optimize this, that has the effect of giving a learning signal that goes backwards through the dialog, but only so far as this H of y-init is high.
The other term there is the regularization term, which means our prior for our new [indiscernible] C w star should stay close to w-init.

>>: [indiscernible] so the weight by the y-init is fixed?

>> Matt Henderson: Yeah.

>>: And then [indiscernible] to that second term.

>> Matt Henderson: The y-init is fixed, and then C is a function of w star, and y star is a function of w star. This gives us a function C which is dependent on w star and not dependent on any labels, so we can use stochastic gradient descent to try to give us better updated parameters as we see dialogs. The idea is to start tracking dialogs, and once you've collected a batch of n, run stochastic gradient descent to give a new update of the parameters, then keep tracking, and repeat. One simple experiment is to use the DSTC2 data but delete all of the labels for the food slot. In this experiment, the squares are unadapted models and the circles are after adaptation. In general, and also on average, this gives improved results, so lower L2 and higher accuracy. By combining all the models using score averaging we get the filled-in squares and filled-in circles. In the end the combined adapted model gets us the best result, and it's comparable to the baseline which actually assumes it has labels for food in training, though it's kind of a modest improvement. And then, one of the last slides, this is the performance of adaptation on the actual DSTC3 data, broken down into new slots and old slots. It's a small improvement, but we do see the best results from using adaptation on the joint accuracies, and that's where we get this .623 number, which is an improvement on what was entered into the challenge.
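The exact criterion was shown on a slide and is not recoverable from the transcript, so the following is only one form consistent with the spoken description: pairs of consecutive outputs of the updated model are compared with a cross entropy, each pair is weighted by the entropy of the initial model's output at the earlier turn, and a regularizer keeps w star close to w-init. The function `adaptation_cost`, the direction of the cross entropy, and the weight `reg` are assumptions for illustration.

```python
import math


def entropy(p):
    """Entropy H(p) of a distribution given as a list of probabilities."""
    return -sum(x * math.log(x) for x in p if x > 0.0)


def cross_entropy(target, pred, eps=1e-12):
    """Cross entropy H(target, pred), clipped to stay finite."""
    return -sum(t * math.log(max(q, eps)) for t, q in zip(target, pred))


def adaptation_cost(y_init, y_star, w_star, w_init, reg=1.0):
    """Label-free cost for one dialog (assumed form, see lead-in).

    y_init : per-turn output distributions of the fixed initial model
    y_star : per-turn output distributions of the model with parameters w_star
    """
    data_term = 0.0
    for t in range(len(y_star) - 1):
        # Where the initial model was unsure at turn t, pull the adapted
        # output at turn t towards the adapted output at turn t + 1.
        data_term += entropy(y_init[t]) * cross_entropy(y_star[t + 1], y_star[t])
    # Prior: the adapted parameters should stay close to the initial ones.
    prior_term = reg * sum((a - b) ** 2 for a, b in zip(w_star, w_init))
    return data_term + prior_term


# Usage on the pizza example: sure (Chinese), flat, sure (Italian).
y_init = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
before = adaptation_cost(y_init, y_init, [0.0], [0.0])
adapted = [[1.0, 0.0], [0.1, 0.9], [0.0, 1.0]]
after = adaptation_cost(y_init, adapted, [0.0], [0.0])
```

Because the first turn has zero entropy under the initial model, it contributes nothing to the cost, so the confident later turn can sharpen the flat middle turn without disturbing the first one. In practice the cost would be minimized with stochastic gradient descent over a batch of tracked dialogs, as described in the talk.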
In conclusion, I've shown a model which performs strongly in these research challenges, and one key point that I didn't really touch on is that feature engineering doesn't require a lot of effort: we're using these raw n-gram representations, and we only need to define how to tag them for the semantic representation. Really the idea is that the RNN can figure out what combinations of these features are important to model the dialog. We presented two models, so we can generalize across all slots but also learn specific behavior by including this extra component, and we're able to track the state in word-based models without any explicit semantic representation. And lastly, I presented some methods for adapting the word-based RNN with unlabeled data. Okay. Thank you very much. [applause]

>>: [indiscernible]

>> Matt Henderson: Yeah, sure. The dimension of the input is, as I said, about 5000 or so, and then for training, for example in this graph here, we're actually varying parameters for each point, but a hidden layer might be roughly 100 or so.

>>: So you look at the [indiscernible]

>> Matt Henderson: I have some picture here. This is the big weight matrix, so this would be your hundred or so. Maybe it's a bit more than 100, and this is the 5000 input. This is when we don't use the denoising autoencoder to initialize, and this is when we do use the denoising autoencoder to initialize. So I don't see any structure here.

>>: [indiscernible]

>> Matt Henderson: Yeah, you mean the recurrency.

>>: [indiscernible]

>> Matt Henderson: I don't know.

>>: I assume you used [indiscernible]

>> Matt Henderson: Yeah. Unrolled the whole thing, maybe 20 or so, and then just…

>>: [indiscernible] 20 turns?

>> Matt Henderson: Yeah.

>>: That's like 40,000 [indiscernible]

>> Matt Henderson: Yeah, roughly.

>>: [indiscernible] is 5000x100? [indiscernible]

>> Matt Henderson: Yeah, that's a good guess for it.
>>: [indiscernible] the system adds to that.

>> Matt Henderson: Yeah, the system adds to it, a few hundred or so.

>>: I mean on the x-axis there is a clear [indiscernible]. It's just a maxing code a lot of information [indiscernible]

>> Matt Henderson: I think that might be it.

>>: You can only use the [indiscernible], so are you huge [indiscernible]

>> Matt Henderson: The ASR confidences are included by weighting the vectors.

>>: [indiscernible]

>> Matt Henderson: I'll show you this. Here, for example, the weighting goes in there. We could get these weights possibly [indiscernible] rather than [indiscernible]. That's how we include the confidences, by scaling the [indiscernible].

>>: [indiscernible]

>> Matt Henderson: Yeah.

>>: [indiscernible]

>> Matt Henderson: Yes.

>>: I'm curious whether you did any error analysis on the adaptation. I get that on average it improved. I wonder if it improved a small number of cases, or if it ended up causing deterioration in some cases and then causing improvement in slightly more cases.

>> Matt Henderson: Yeah. I don't have anything like that. I did look at examples where it was doing something right that it hadn't been before, trying to figure out how it learned that. Was it this example that I just made up, like "send me pizza" and that kind of thing? And that never happened. It's more likely to be things like learning that serving means food, that sort of thing. And also it really helps for the don't-care hypothesis, so like "none of these". That does depend quite a lot on the slot. You might say "serving any food, it doesn't matter" or something, but then when you are talking about area you say things like "anywhere", and that is kind of unique to this sort of… It was doing a lot better on these don't-care values. But yeah, that's definitely something to look at.
>>: [indiscernible] suppose ASR makes no errors, so for example, [indiscernible] it is correct 100 percent and nothing else. Basically to change this whole thing out, are you going to get really perfect [indiscernible]

>> Matt Henderson: It would be cool to try it. I would hope that it outputs [indiscernible] delta distribution at the end on the correct…

>>: That means [indiscernible] that because the correct recognition result, the words, may not necessarily correspond to the correct slot depending on the information. For SLU you might run into errors. [indiscernible] how much would that error contribute to the final [indiscernible]

>> Matt Henderson: You mean they might express things in ways that we didn't expect them to?

>>: I mean [indiscernible] problem. Maybe [indiscernible] think about for some bigger domain issue just for the [indiscernible] processing [indiscernible] error, to what extent your approach is going to be viable.

>> Matt Henderson: What's happening here is kind of keyword spotting in a way, right? If we are using the model that doesn't have h, it just requires you to tag the value. As long as we had tagged the values and we had enough examples to train it, then it would work.

>>: [indiscernible]

>> Matt Henderson: Yeah. I guess the answer is that you need to have examples.

>>: But one of the main advantages of a neural network is that it gives you generalization [indiscernible] so if you see things in the past that don't give you the exact sample [indiscernible] further example, but more often than not it is going to generalize that one, so I suppose [indiscernible] have to decide [indiscernible] example in the test how you approach [indiscernible] when you have [indiscernible] example literally [indiscernible] but listen to some [indiscernible] available through [indiscernible]

>> Matt Henderson: We don't have any sort of constructed examples where there's an obvious inference that has to be made, I guess.
>>: [indiscernible] and then that would be the challenge for language processing [indiscernible] >> Matt Henderson: We're talking about semantic decoding and stuff, but it's really shallow semantics. >>: [indiscernible] >> Matt Henderson: It contains mismatched data, and obviously we have this huge test set which is an extended domain that we have never seen any labels for. But there's no kind of, I guess, logical inference that we can measure for that or something. >>: I remember you tried something to deal with that. [indiscernible] >> Matt Henderson: Yeah sure, so h is like this here. This is allowing us to really tune to value-specific behaviors, for example, this guy always gets confused with this other guy, and yeah. I'm not sure if that relates to harder NLP-type problems. >>: The example would be that Italian food is healthy, for example, if somebody wants to have healthy food. And all these things are in the [indiscernible] things that somebody asked about the food [indiscernible] pick up some Italian food or something. >> Matt Henderson: The answer to that is, if we had that labeled, like healthy food is when they said they want Italian, then this model for h could pick up on that activation and say whenever we see healthy we might base [indiscernible] Italian. So it has the capacity to learn stuff like that, and the adaptation techniques can also learn things like the pizza is Italian thing. >>: [indiscernible] knowing that exact [indiscernible] meaning you don't have a label, yet in the training set you have [indiscernible] direct information [indiscernible] >> Matt Henderson: I think it would be able to do that as well, because the transition weights should be learning that the dialog progresses reasonably slowly. They are not changing their minds every turn. 
So if we had partially labeled dialogs, for example, then we could do something similar to what I had on the slide where we propagated it backwards, because it's not going to change its weights to say that the transitions are changing really quickly all of a sudden. Then we would say maybe healthy means Italian. I think there's a capacity to learn all these kinds of things. >>: [indiscernible] >> Matt Henderson: Not explicitly anyway. >>: [indiscernible] and if the [indiscernible] gets really bad [indiscernible] before it gets more [indiscernible] >> Matt Henderson: It would take dialogs in more open domains, and also looser ones, not so much slot filling. Uh-huh. >>: [indiscernible] >> Matt Henderson: This one? >>: [indiscernible] you want a model that exploits the fact that there is some relation in the answer [indiscernible] outputs after you [indiscernible] when the answer [indiscernible] as being [indiscernible] so you train the model there that when the [indiscernible] example [indiscernible] that you will exploit the information that follows [indiscernible] then it would be possible that the next step would always be to predict the occurrence [indiscernible] >> Matt Henderson: Something like the goal doesn't change really quickly. >>: In the last slide you said there were interesting applications [indiscernible] RNN. How do you think that these would be helpful? I don't know if you talked about these when you produced that last slide, but if you would speculate how you think that this kind of [indiscernible] be helpful in kind of language model [indiscernible] >> Matt Henderson: This is part of the word-based RNN. Do you mean for something that isn't dialog state tracking, like, did you say language model? Yeah. It's kind of, I don't know if there's a very, in language modeling we always observe the labels with certainty. 
I'm not sure how we would ever be in a case where we would be training a language model without labels. If a word gets deleted, I don't know. >>: It's kind of because in some way could be a kind of reaction, because you exploit something about the future to refine what you have in the present. I understand that there is not this kind of formulation in language, but when you have a set that it is a right [indiscernible] but this would be useful in order to get some more, I mean if we got in this setting and it was not the best, let's try to correct the error. But let's try to get a more general representation of what's happening in the second step. [indiscernible] two different stuff [indiscernible] pizza. It could be whatever, but [indiscernible] would still be Italian. It is a kind of rotating, more general semantic representation of what's happening [indiscernible] perhaps. You know, saying okay, the states around these times should be the same. Or perhaps, I don't know. >> Matt Henderson: Yeah, cool idea. I like the idea of considering running backwards through time as well. You would basically be training your model so that it could predict the future, and if you know that it can predict the future then you know it must be smart. Yeah, I'm wondering, I think in some ways this is quite specific to the task because I'm really thinking about exploiting what happens in dialog. It's a particular attribute of this type of sequence that there will be moments where things are changing and then they snap to something else, but there might be, like your example, some other applications and that kind of thing. Yeah. Okay. >> Jason Williams: Great. Can we thank the speaker once again? Thank you very much. [applause]