

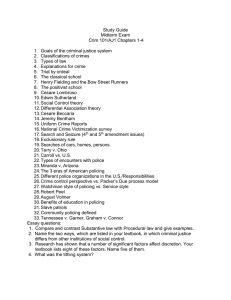

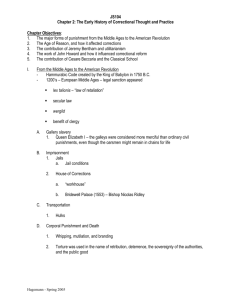

>> Leonardo de Moura: It is my pleasure to introduce Cesare Tinelli. Cesare is one of the founders of the SMT initiative. He has worked on the CVC3 team, on CVC4, and on the model evolution calculus. He has done many [indiscernible] and today he is going to talk about his model checker called KIND.
>> Cesare Tinelli: Thank you. Thanks a lot for the invitation, Leonardo. I'll mention KIND, but I'll tell you about the research that we've done around this model checker, which we hope is general enough that it can be used also in other contexts. Of course, we have implemented it in the context of our KIND model checker. This is a program lasting several years now that has involved students and other collaborators. I'd like to acknowledge these ones: George Hagen; a current visitor from France, Pierre-Loic Garoche; and two post-docs, Yeting Ge and Teme Kahsai. So just to put this in perspective, our goals here are, well, pretty much the usual ones in verification. Make verification practical, in particular improving performance and scalability, but we are focusing on embedded software for two reasons. One, it's generally easier to reason about embedded software. And two, it's more critical to prove such software correct, or at least potential users are more interested in that. We also focus on a simpler problem, proving safety or, dually, invariance of properties, and we look at just synchronous systems. Our goals when we started, and they still are, were to capitalize on the various improvements and enhancements in automated reasoning, in particular to go beyond the sort of propositional logic-based approach that had been common in verification, in particular in model checking, going back to symbolic model checking, using BDDs and SAT-based approaches. And, of course, the key technology for us is SMT, which allows us to reason about logics that are more powerful than propositional logic.
One of the pluses that SMT techniques give us is that we can easily reason about both finite-state and infinite-state systems when we use them. So what is the general approach if we want to do SMT-based safety checking? I'll be calling it safety checking because, again, that's what we're doing. Given some formal description of a system M and a property that we want to show (by system, I mean transition system here), convert that automatically into some fragment of first-order logic with built-in theories, for efficiency reasons, and try to prove or disprove the property automatically using inductive arguments. Of course, we would like to use an SMT solver as the main engine. One of the bonuses is that using these technologies allows us to work well both with control and with data properties and systems, which usually didn't use to be the case for previous approaches. Let me give you very little technical background. This is mostly notation. This is stuff that at least the audience here understands and knows well. So we can look at transition systems as usual, as defined by a triple (S, I, T), where S is the state space, I is the set of initial states, and T is the transition relation over the states that defines the behavior of the system. But as I mentioned, we will encode such a system in a suitable logic. So for generality here, in fact, all of the approaches that I am describing here apply to any logic where you have decidable entailment, okay. Any logic, in fact, where you have efficiently decidable entailment. You can take propositional logic if you want to, or any of the SMT logics, or other logics, such as the EPR fragment. Let's assume that we have a set of variables in this logic and some distinguished terms that we call values. Now, as you all know, typically, in such a setting, you can encode states as tuples of values.
The set of initial states can usually be represented precisely by a formula that is satisfied exactly by the tuples that represent the initial states. And then we assume that you can encode the transition relation by a formula in this logic over two tuples of variables, if the states are represented by tuples, one for the current state and one for the next state. So standard stuff. State properties, of course, can be encoded as formulas over an n-tuple of variables, okay. So the notation that I'm using here for the variables denotes just that. I of X is a formula whose free variables are among X, vector X. Okay. So remember, if we're doing safety, we typically want to prove that a property is invariant for a system. So we're interested in showing that a given property is satisfied by all reachable states. This is usually a hard problem, and so what we try to do is to approximate it by showing that a property satisfies some stronger conditions. A sweet spot usually is that if you show that a property is inductive, then, of course, you have shown that it is invariant. So you try to do that automatically. There are other notions here, such as validity and triviality, that in a sense also give you invariants, but showing that a property has these things is usually simple. I'm mentioning them because they'll come up in the invariant generation procedure I'll be describing next. So again, this is just to introduce the terminology. The notion of inductive property can be extended a bit in terms of k-inductive property. Traditionally, when you define a property to be inductive, you basically say, well, it's satisfied by the initial states and it's preserved by one-step transitions. K-induction essentially says that the property is preserved by K-step transitions. So why is that interesting?
Well, because inductive properties are zero-inductive in this sense, and, well, there are properties that are k-inductive for some K greater than zero, but they are not inductive. In fact, a property that is k-inductive is also K-plus-1-inductive. So there is a larger set of properties that we could hope to prove automatically to be invariant, and why can we do that in this setting? Because we are assuming that all of these guys here are formulas represented in our logic and entailment is decidable in that logic. So to prove a property k-inductive, you just have to run this test and then run this test, in principle. In practice, of course -- yes?
>>: Is it true that if a property holds on a system, then it is k-inductive for some --
>> Cesare Tinelli: No, this is what I was going to say next. And the answer is no.
>>: Not even for finite systems?
>> Cesare Tinelli: For finite systems, the answer is still no. Only, for finite systems, you can use improvements to the basic k-induction algorithm to show any property to be invariant based on the fact that the search space is finite, okay. But for infinite state systems, it is definitely the case that there are invariant properties that are not k-inductive. In fact, k-induction is a fairly weak method by itself. It's very easy, it's nice. You can use SMT provers off the shelf. But it won't get you a lot, okay. Which is why you want to improve on it. Now, of course, some improvements go in a completely different direction, such as interpolation-based model checking, okay.
>>: Which can also be used in k-inductive systems.
>> Cesare Tinelli: Of course. I mean --
>>: You can come up with something k-inductive.
>> Cesare Tinelli: Interpolation-based model checking can prove a k-inductive property.
>>: No, I mean you can use k-inductiveness with interpolants is what I'm saying.
>> Cesare Tinelli: And vice versa, in fact. It's one of the things I want to discuss with you later.
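The two k-induction tests mentioned above can be illustrated on a tiny explicit-state system. This is only a sketch under stated assumptions: the talk checks entailments with an SMT solver, whereas the code below enumerates paths by brute force, and the six-state system, the property `safe`, and the helper names `paths` and `is_k_inductive` are hypothetical, chosen for illustration. It follows the talk's convention that ordinary induction is the case k = 0.

```python
def paths(trans, starts, n_steps):
    """All state sequences that begin in `starts` and take exactly n_steps transitions."""
    frontier = [(s,) for s in starts]
    for _ in range(n_steps):
        frontier = [p + (t,) for p in frontier for (s, t) in trans if s == p[-1]]
    return frontier


def is_k_inductive(states, init, trans, prop, k):
    """Explicit-state version of the two k-induction tests (k >= 0)."""
    # Base case: prop holds in every state reachable from init in at most k steps.
    for n in range(k + 1):
        if any(not prop(p[-1]) for p in paths(trans, init, n)):
            return False
    # Inductive step: on any path of k+1 transitions, starting from ANY state,
    # prop on the first k+1 states must force prop on the last state.
    for p in paths(trans, states, k + 1):
        if all(prop(s) for s in p[:-1]) and not prop(p[-1]):
            return False
    return True


# Hypothetical system: the cycle 0 -> 1 -> 2 -> 0 is reachable,
# the chain 3 -> 4 -> 5 -> 5 is not.
STATES = range(6)
INIT = {0}
TRANS = {(0, 1), (1, 2), (2, 0), (3, 4), (4, 5), (5, 5)}


def safe(x):
    return x != 5


for k in range(3):
    print(k, is_k_inductive(STATES, INIT, TRANS, safe, k))
```

In this toy system `safe` is invariant, yet the inductive step fails for k = 0 and k = 1 because of the unreachable paths 4, 5 and 3, 4, 5. It succeeds for k = 2, since no state leads into 3 and so no longer offending path exists.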
For us, it's a nice framework, because as I mentioned, it's simple to implement, and you can improve on it. These are the pros of using k-induction as a way to prove invariance. I mention these. Also, this is another interesting point. The base case of k-induction is basically bounded model checking, so that means you're guaranteed using k-induction to find violations of the properties if they exist. What, of course, you're not guaranteed is to prove that an invariant property holds unless it is k-inductive. And this is as it should be, because in the infinite state case, at least, invariance is not -- I mean, the set of invariant properties is not recursively enumerable. So we cannot hope to have completeness. What we can hope to have is to improve on whatever method you have so that the precision of the method is higher. That is, it answers yes for more and more invariant properties.
>>: So you have a property that is k-inductive. And there's a procedure that takes a k-inductive property and creates another property that's inductive. What's the size increase?
>> Cesare Tinelli: Say this again.
>>: So for every k-inductive property, there is a stronger property that is inductive for finite state system.
>> Cesare Tinelli: Possibly, yes.
>>: So can you characterize the trade-off, or is there a non-trivial --
>> Cesare Tinelli: Stronger property, yes. You mean [indiscernible] tradeoffs or --
>>: Well.
>> Cesare Tinelli: For me, I wouldn't know how to compute that. I don't have a general mechanism on how to compute that property that you're referring to.
>>: Take the k-inductive property and gives you --
>>: You could probably do it if you had variable --
>>: You could do something like that if you do quantifier elimination, but --
>>: So the question is [indiscernible].
>> Cesare Tinelli: No, there is more. I mean, this is what I'm going to say now.
So there are these cons: some properties are k-inductive for very large Ks, so it takes a long time to get there. And if the transition relation is large, the unfoldings are going to eventually kill you. And as I said, some properties are not k-inductive at all, so how can --
>>: Is there any truth for what type of systems some.
>> Cesare Tinelli: Say this again?
>>: Machines representing reactive systems, k-induction is good for. Programs with loops and recursive procedures --
>> Cesare Tinelli: No, no, we don't do programs with loops at all, because those are not transition systems in the sense, right.
>>: Sure they are.
>> Cesare Tinelli: You can convert them.
>>: Sure they're transition systems. So I'm asking are those types of transition systems amenable to -- when you say some types of systems, I mean, what sort of systems? Induction --
>> Cesare Tinelli: I mentioned before, we're interested in embedded systems. In particular, we work on models of embedded systems. I mean, in our experience, we've been working on model checking high-level specifications of systems that haven't been implemented yet.
>>: So you're saying even for those systems, those embedded systems, k-induction is good for some but not for others?
>> Cesare Tinelli: Definitely. And it's good that you're asking me questions, but I'm going to tell you all these things as we go. I mean, this is part of the talk.
>>: Sorry. This is just the way we work here.
>> Cesare Tinelli: That's fine.
>>: You made the comment that the set of invariant properties is not r.e.
>> Cesare Tinelli: For infinite state systems.
>>: Why is that?
>> Cesare Tinelli: Because you have undecidability in general of --
>>: But let's say I can start enumerating them, and then for each one, I can check whether it is inductive or not. So eventually, I would have an enumerator.
>> Cesare Tinelli: I didn't say inductive. I said invariant.
>>: Oh, I see.
>> Cesare Tinelli: Yes, so of course the set of inductive properties is recursively enumerable, but they don't exhaust -- inductiveness is a sufficient condition for invariance. If something is inductive, it's invariant, but not necessarily vice versa. This is why the verification problem is hard. We want to prove invariance; well, then, we use a poor man's version of invariance, which is, for instance, inductiveness. When it works, it's great. When it doesn't work, we have to figure out why. So this slide is introducing an intuition here that allows us to do better, okay. But again, better, not necessarily in a proven way. We don't have a systematic way to do this. So why is it that k-induction is not complete for invariance? You might have that the inductive step, which we can represent here as a satisfiability question, fails. So if the inductive step fails, it means that a formula like that one is satisfiable.
>>: [indiscernible].
>> Cesare Tinelli: Say this again?
>>: This is? It has an extra [indiscernible] --
>> Cesare Tinelli: Oh, yeah, I apologize. Thanks for debugging. This is a spurious argument here. So if this step fails, it's because we have a sequence of states along the transition relation where P is true for the first K states, and then it's false for the other one. If P is invariant, it must be that this sequence is unreachable. There's no other explanation. So one way to improve induction is to say, well, I don't want to consider this sequence. Eliminate it. This is the general way you'd like to do it. One way to achieve that is to strengthen the description of the transition relation. If you have a tighter description in your logic of the transition relation, hopefully there will not be any satisfying pairs, T of X, X prime, so you won't have that counter example. Fat finger. So how can you do that? Well, suppose you know another invariant about the system.
You can strengthen the description of the transition relation by just saying that, well, I want a predicate of X that also satisfies the invariant. I don't want an arbitrary X that satisfies this formula. It also has to satisfy this invariant that I have. Now, the tighter this invariant, the better this formula will be at discarding irrelevant, that is, unreachable states. So this is one possible way to improve k-induction. In fact, it gives you a lot of power if you're able to generate good invariants. The question is, of course, how do you do that? Okay?
>>: You described it as strengthening the transition relation, but from a different point of view, it's the same as bootstrapping invariants from [indiscernible].
>> Cesare Tinelli: What do you mean by bootstrap?
>>: So J is an invariant, and when you take k-induction, you basically just add the [indiscernible] formulas.
>> Cesare Tinelli: Well, yeah. The effect is the same. You're adding J here, everywhere.
>>: So is there a way to bootstrap k-induction? So here J is --
>> Cesare Tinelli: I don't know the answer in general, what are good ways. I can give you one way that we have been using, and I'll get to that later. Well, maybe I'll get to that later. Depending on your questions. So let's leave some suspense here. Okay. So again, there are stronger approaches to prove invariants using inductive arguments. Well, again, interpolation-based model checking, abstract interpretation, PDR now and so on. The good thing about k-induction is that it's simple, but you can improve on it and make it as good as other approaches by adding invariants. How do you generate invariants? There are a lot of ways. One thing that we wanted to try is, on the face of it, fairly silly, but it worked rather well for us. The key is, of course, in how you implement it. So what is the idea? Suppose you don't really know better.
What you could do is to say, well, I'm going to generate just a large set of formulas that could be invariant just by luck, and I'm going to check them for invariance by checking that they're k-inductive, but I'm going to do this as efficiently as I can and hope to get lucky. And then any invariant that I can get out of this, I'm going to be using for k-induction, okay? Now, we call this smart brute force, because it is a brute force mechanism. But to make it effective, you have to be a bit smart about it.
>>: [indiscernible].
>> Cesare Tinelli: Sorry?
>>: Use something like Houdini.
>> Cesare Tinelli: Something like Houdini or something like, what is that system? Daikon, but you have some heuristic ways to generate lots of candidate invariants. Then the difference here is that we actually prove these to be invariants, versus in Daikon, it's just given to the user and the user has to figure it out.
>>: Daikon is just a way of throwing out a bunch quickly by doing execution.
>> Cesare Tinelli: Well, we throw away a bunch very quickly and then the remaining ones we also prove to be k-inductive to be sure that they are invariant. The key here is: very quickly, and starting with a very large set. So you want to implement your large set of conjectures, C, as compactly as possible and sift through it as quickly as possible. And SAT or SMT solvers are really good at this stuff. So we capitalize again on our technology. So what is our scheme? We have a general invariant discovery scheme that again assumes an encoding of the system in a given logic, and then the scheme is parameterized by a template formula, R, which represents some binary relation over a domain, one of the domains of your system, over the types of your system. We assume this scheme is also parameterized by the procedure you use to generate the candidate set at the beginning. Use whatever you want.
Whatever method you can come up with that generates terms that might be good to instantiate a template with. So let me give you concrete examples. So one example of R would be -- sorry, I got it backwards there. One example of R would be implication. So implication over [indiscernible] terms. Another one could be standard less than over arithmetic terms. So if this is the template, that is, implication or less than, the arguments we could put in there are, well, whatever arguments of the proper type that your logic has. So take an SMT logic with arithmetic terms, and you could have examples like this. Notice that the instantiation can give you arbitrarily sophisticated formulas, depending on the terms you start with. So here, I do have a less than that I've instantiated, but, in fact, I have some control embedded in it because I can use ITEs. Similarly here, implication is not just between Boolean terms but between arithmetic terms.
>>: This is highly exposing, right?
>> Cesare Tinelli: Yes. I'm just saying we allow this. Now if you want to use our system or our approach, it is your business to come up with the initial set. Then we can take it from there. Okay? Now, right now, in our experiments, what we do is we look at the description of the system, and we consider all possible subterms. Obviously, this is not going to scale, and, in fact, there are other things you want to throw in that are not just subterms that you can come up with. But, you know, let's assume you have a way to generate that set. Then how does the mechanism work? Well, we use in a sense BMC, or the base case of k-induction, to discard as many of these conjectures as possible from the set by finding real counter examples to the invariants. Once we have filtered that set down to one that we are happy with, or, you know, that heuristically is small enough, then we try to prove the remaining elements of the set to be k-inductive for some K.
The ones that we fail with, we just conservatively throw away. The ones that we succeed with, we keep. In the process of doing this, if the initial generation of terms is not too smart, it will give you a lot of trivial invariants. Trivial in the sense that I flashed you before. Those are not really useful. They are invariants, but they're not really helpful. So a third optional phase would be to try to identify those and discard them. Because they're just junk. Of course, identifying trivial invariants is expensive, so sometimes you can just leave them there, okay. It really depends on the particular --
>>: [indiscernible] be good to put it in --
>> Cesare Tinelli: Very good. So, in fact, this is why we don't discard them often, because some of the trivial invariants are not eliminating any original states. However, they are lemmas, and so they might actually end up helping the SMT solver. Very good. Only the valid ones are the ones that probably don't help all that much. Okay. So the mechanism is just what I described here. Well, we have some animations, but it's not going to give you much more than what I told you. Suppose these are all our conjectures. If we take their conjunction, we're making a very strong claim here. We're saying all these guys hold. We test them in the initial states, okay. And sure enough, we're going to get lots of counter examples that allow us to remove some of these candidates, and we do this until we reach a fixed point for initial states. That is, all the conjectures that we have left do hold for all of the initial states. Then we repeat the same process -- sorry, I'm going quickly here because I already mentioned this stuff. We repeat the same process for states reachable in one step, reachable in two steps and so on. Where do we stop? Well, heuristically, we stop if, when we are at frame K and we go from frame K to K plus one, nothing changes. That doesn't really mean that we've found a good spot.
It's just heuristically one that we decided to use. Why is that? It's possible that from K to K plus one nothing changes, but maybe some of the stuff could have counter examples at some bigger K, okay. Better heuristics could be used to do that. That works for us for now. So once we get to this point, then the conjectures are conjectures that possibly are invariant. We try to prove them k-inductive, simply by doing the k-induction step. Anything that falsifies the k-induction step is thrown away, again to be on the safe side. Anything that remains has been proven to be invariant. One thing that I want to stress that I didn't say is that, notice that we work with conjunctions. We don't do them one at a time. We do them all together. Why? Because when you get a counter example, you usually get lucky. A counter example eliminates several conjectures at the same time. So you want to throw away as much junk as possible. Also, as you know, proving something stronger by induction is usually easier. So if you try to prove that all of them are inductive, all the ones that have remained, it's usually easier than to prove it individually. Okay. So some of these conjectures have been generated. We're done. As I mentioned, the question is how do we do this efficiently, by representing the set of conjectures compactly and by processing it efficiently. So we have two algorithms for doing this, which depend on the size of the domain in question. So remember, the template is a partial order with respect to some domain. So Booleans was one example. The integers was another example. We have a general mechanism that works for any binary domain, and one for any non-binary domain. Really, the distinction here is finite up to a certain amount and greater than that. But binary is the sweet spot, really.
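The filter-then-prove scheme described above can be sketched on an explicit-state toy system. Everything here is an assumption made for illustration: the talk discharges these checks with an SMT solver on a symbolic encoding, while this sketch enumerates states and paths, and the names `discover_invariants` and `paths`, the six-state system, and the candidate predicates are hypothetical. The first phase drops candidates falsified in the frames reachable in 0, 1, 2, ... steps and stops, heuristically, at the first frame past frame 0 that falsifies nothing. The second phase drops whatever a counter example to the k-induction step falsifies, treating the survivors as one conjunction.

```python
def paths(trans, starts, n_steps):
    """All state sequences that begin in `starts` and take exactly n_steps transitions."""
    frontier = [(s,) for s in starts]
    for _ in range(n_steps):
        frontier = [p + (t,) for p in frontier for (s, t) in trans if s == p[-1]]
    return frontier


def discover_invariants(states, init, trans, candidates):
    """Return (sorted names of proven invariants, k). `candidates` maps name -> predicate."""
    alive = dict(candidates)

    # Phase 1 (BMC-like): discard candidates falsified by states reachable in k steps.
    frame, k = set(init), 0
    while True:
        falsified = [n for n, c in alive.items() if not all(c(s) for s in frame)]
        for n in falsified:
            del alive[n]
        if k > 0 and not falsified:
            break  # heuristic stop: this frame changed nothing
        frame, k = {t for (s, t) in trans if s in frame}, k + 1

    # Phase 2: k-induction step on the conjunction of all surviving candidates.
    def conj(s):
        return all(c(s) for c in alive.values())

    changed = True
    while changed:
        changed = False
        for p in paths(trans, states, k + 1):
            if all(conj(s) for s in p[:-1]):
                bad = [n for n, c in alive.items() if not c(p[-1])]
                if bad:  # step counter example: conservatively drop what it falsifies
                    for n in bad:
                        del alive[n]
                    changed = True
                    break  # the conjunction got weaker, so restart the step check
    return sorted(alive), k


# Hypothetical system: 0 -> 1 -> 2 -> 0 is reachable; 3 -> 4 -> 5 -> 5 is not.
STATES = range(6)
INIT = {0}
TRANS = {(0, 1), (1, 2), (2, 0), (3, 4), (4, 5), (5, 5)}
CANDIDATES = {
    "x != 5": lambda x: x != 5,
    "x <= 2": lambda x: x <= 2,
    "x != 1": lambda x: x != 1,
    "x != 3": lambda x: x != 3,
}

print(discover_invariants(STATES, INIT, TRANS, CANDIDATES))
```

On this system the candidate `x != 1` is discarded by a real counter example in the frame reachable in one step, and the remaining three survive the step check with k = 2. Because every survivor passed frames 0 through k and the conjunction passes the step, the conjunction is k-inductive in this sketch, so each survivor is invariant.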
So I'll show you the procedure we use in the binary case and then again, you can imagine, well, the examples will all be about Booleans, but it could be about any binary domain. To talk about that, however, I need to introduce a notion first. So consider that we have a set of terms over the domain that we're working on. And we have a sequence of valuations of these terms. So in our algorithm, the valuations come from the counter examples. But now, just consider any valuation. So, for instance, if we're talking about arithmetic, consider these valuations for the terms, okay. The sequence of size three. Each of these sequences induces a partial ordering over the terms, which is just the point-wise extension of the partial ordering of the domain. So here, I have that the term X is less than X plus one. But Y is less than 3Y and so on, okay. So having this ordering is going to be helpful in what I'm going to discuss later. Right now, if we look at the Boolean domain as an example of a binary domain, our set of conjectures is going to be a set of implications between terms. So C here is the set of terms we start with. Conjectures will be implications between these terms. So we start with the strongest conjecture possible. Then we say all terms are related. They all imply each other. Why? Because such a conjecture can be expressed linearly by just a chain of equivalences, okay. So we start with this chain. We're going to represent our conjectures as graphs, as DAGs, in fact, where each node represents an equivalence class of terms with respect to the ordering that I have discussed so far. So the partial ordering induces equivalence classes. So all the terms in a node are related to each other. And then links between the nodes are going to represent the fact that every node -- sorry, every term in one node is smaller than every term in the other node, in the partial ordering that we're building with these assignments.
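The ordering induced by a sequence of valuations can be made concrete with a small sketch. The three valuations below are made up for illustration (the slide's actual values are not in the transcript), as are the helper names `signature` and `pointwise_leq`. Each term is mapped to the tuple of its values under the valuations, and one term is below another when its tuple is pointwise less than or equal.

```python
def signature(term, valuations):
    """Tuple of the term's values, one entry per valuation in the sequence."""
    return tuple(term(v) for v in valuations)


def pointwise_leq(t1, t2, valuations):
    """True when t1 is below t2 in the pointwise extension of <= to value tuples."""
    return all(a <= b for a, b in zip(signature(t1, valuations), signature(t2, valuations)))


# Hypothetical sequence of three valuations of the variables x and y.
VALUATIONS = [{"x": 0, "y": 1}, {"x": 3, "y": 0}, {"x": -1, "y": 2}]

TERMS = {
    "x": lambda v: v["x"],
    "x + 1": lambda v: v["x"] + 1,
    "y": lambda v: v["y"],
    "3 * y": lambda v: 3 * v["y"],
}


def related_pairs(valuations):
    """All ordered pairs of distinct terms (a, b) with a below b."""
    return sorted(
        (a, b)
        for a in TERMS
        for b in TERMS
        if a != b and pointwise_leq(TERMS[a], TERMS[b], valuations)
    )


print(related_pairs(VALUATIONS))
```

With these valuations only `x` below `x + 1` and `y` below `3 * y` survive. The second relation holds just because every sampled `y` is non-negative, so one further valuation with a negative `y` would remove it, which is how counter examples refine the ordering. With an empty sequence of valuations every pair of terms is related.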
The partial ordering in question, which is built out of the sequence, will be built incrementally as we build the sequence. So we start initially with the empty sequence, which means everything is in there, everything is related to each other. Then we extend the sequence with the first counter model we get in the process that I described before, and the second and so on. So we have a graph that starts really compact and grows as we get counter examples to the less than relation.
>>: [inaudible].
>> Cesare Tinelli: Say that again?
>>: You're familiar with van Eijk's method?
>> Cesare Tinelli: Yes, in fact, I should have said this at the beginning. This work is inspired by that paper. Although, since you know that, what we do is everything that that method does and more. We definitely identify all equivalences between terms, which was the original motivation there because they were doing circuits, but we do more than that, because we also do implications instead of just equivalences. So suppose we have this situation here. So the conjecture is that all terms are equivalent, and assume that we get a counter model, or a model, that gives these values, zero for false and one for true, to the terms. So what does that mean? Well, it's not true that these terms are equivalent, okay, according to the ordering that we're building. So we break that bubble into the ones that we have seen to be false so far and the ones we have seen to be true. So what do we know? With the current evidence, these guys could all be equivalent and these guys could all be equivalent, but definitely these guys are not equivalent to the guys here because we have a counter example. But as far as we know, everything in here could imply everything in here. We don't have evidence to the contrary yet.
>>: When you say everything in here do you mean a conjunction of all those guys?
>> Cesare Tinelli: Well, each one. This is an equivalence class.
>>: Individually?
>> Cesare Tinelli: Each of them individually can imply that. Each guy here can imply that. Remember, we're assuming that these guys are equivalent so pick a representative, whatever.
>>: Right. So that edge --
>> Cesare Tinelli: Means any term in here --
>>: Representing a quadratic.
>> Cesare Tinelli: Exactly. So this is the compactness that we get. We are representing now a quadratic number of implications linearly. Next counter example. Well, some of the assignments here are going to break some of these bubbles again. In particular, here, both bubbles are broken. But what do we know about these two bubbles? The same thing I mentioned before. Well, the terms are not equivalent, but I only have a counter argument for one direction. For the other one, I have to play conservatively and maintain it so far. What about these guys? Well, these guys can be connected by remembering the previous link. So the previous link is, in a sense, inherited by the new guys, and the point of our algorithm is coming up with a systematic and sound way to do this inheritance. I mean, that's the nontrivial part there. And, you know, if you look at it in terms of the ordering, notice that the links respect the ordering. These guys are smaller than these guys in the pointwise ordering between these pairs, and it's true for all that we get here. The trick here is that we don't want this link. Technically, this link is possible, because everything here is smaller than everything here. But this link is redundant because we have these two. So the algorithm also tries to minimize redundant links. Now, look at this as a conjecture. What is it saying? It's saying I have quadratically many implications between these two guys, between these guys, and none between these guys that I need to represent, because they are a consequence of transitivity of my partial order. So we do this, in a sense, modulo transitivity, and we maintain this set of conjectures very compactly.
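The bubble-splitting picture can be sketched for Boolean terms as follows. This is a naive reconstruction for illustration, not the incremental algorithm of the talk: it recomputes the whole graph from the list of counter models each time, and the term set, the models, and the name `conjecture_graph` are hypothetical. Terms with the same value tuple share a node, an edge means every term in the source node may still imply every term in the target node, and edges implied by transitivity are dropped.

```python
def conjecture_graph(terms, models):
    """Group terms by their value tuple and link the groups modulo transitivity.

    Returns (classes, edges): `classes` maps a 0/1 tuple to the names of the terms
    that take exactly those values in `models`; `edges` holds pairs (lo, hi) of
    tuples with lo pointwise <= hi and no class strictly in between.
    """
    classes = {}
    for name, term in terms.items():
        sig = tuple(int(term(m)) for m in models)
        classes.setdefault(sig, []).append(name)

    def below(a, b):
        return a != b and all(x <= y for x, y in zip(a, b))

    nodes = list(classes)
    all_edges = {(a, b) for a in nodes for b in nodes if below(a, b)}
    # Drop redundant links: a -> b is implied whenever a -> c and c -> b both exist.
    edges = {
        (a, b)
        for (a, b) in all_edges
        if not any((a, c) in all_edges and (c, b) in all_edges for c in nodes)
    }
    return classes, edges


# Hypothetical Boolean terms over two variables a and b.
TERMS = {
    "a": lambda m: m["a"],
    "a and b": lambda m: m["a"] and m["b"],
    "a or b": lambda m: m["a"] or m["b"],
    "b": lambda m: m["b"],
}

M1 = {"a": True, "b": False}
M2 = {"a": False, "b": True}

for models in ([], [M1], [M1, M2]):
    classes, edges = conjecture_graph(TERMS, models)
    print(len(models), classes, sorted(edges))
```

With no counter model all four terms sit in one bubble. The first model splits it into a false bubble and a true bubble joined by a single edge, and the second yields four nodes in a diamond. The direct link from the bottom node to the top node is omitted because it follows from the other edges.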
When we give it to the SMT or the SAT solver, we literally do this. Here, this is a chain of inequalities. Sorry, a chain of equivalences. We pick one guy and we say that it implies some representative here and so on. So we get a very small set of formulas that represent our large conjecture. And, well, again, we continue to -- there are other cases like this one where things are broken this way, and things are broken this way. But there's actually a finite number of configurations that you need to consider. Why? Because since you are in a binary domain, there's only a finite -- since you're in a finite domain, there's only a finite number of ways to break the graph and inherit the links. In an infinite domain, that's not the case. So this algorithm would not work. In particular, when I have to connect two new nodes, I have to figure out which of the ancestors I should connect these nodes to. In the binary case, I know I need to look at most at the grandfather. In the infinite case, I would have to look at an unbounded number of ancestors. So this algorithm doesn't work in the infinite case. The way we're doing it is linear in the number of nodes and edges, most likely (we haven't done a precise analysis), and this is at the cost of optimality. That is, sometimes we do get those redundant edges that I mentioned. It's just too expensive to make sure that you never have redundant edges. If you throw a few redundant edges in the final formula, it's really not a big deal.
>>: So how does this relate to [indiscernible] method if at all. If it's very conjecturing or you make a which is that two formulas are equal, you sort of at locations. I'm trying to understand --
>> Cesare Tinelli: The obvious answer is [indiscernible] is a way to check satisfiability. Here, we are trying to show equivalence between these guys or implications. So I don't see an immediate connection. Maybe there is one, but I don't see it right now.
All right. In the numeric domains, we have generalized this to numeric domains. We can use the graph. However, we can use partial order sorting. That is, again, we have all these tuples. And these tuples represent counter models I've seen so far, associated to classes of terms. And these are the terms that we want to order. So we order terms according to these tuples that they're associated with, and the ordering is refined -- so the important thing here is that this is an incremental step. Every time that you have a new counter model, then you have an ordering on a slightly larger tuple, larger by one. However, the current partial ordering that you have is changed with respect to the previous one only in a small number of ways, okay? So this incrementality allows us to do some operations quickly and, in fact, we've come up with a new partial sorting algorithm that, well, it's cubic, but it's cubic in the number of nodes, the height of the partial ordering, and the width.
>>: And in numeric domain, the partial order is just the --
>> Cesare Tinelli: The pointwise extension of less than. The partial ordering is over tuples, remember.
>>: Oh, yeah, that's right.
>> Cesare Tinelli: So the ordering over the individual elements is just less than. And over tuples is the pointwise extension. So this tuple is smaller than this one.
>>: You're saying you have some language and you're trying to find the strongest theory expressible in that language which is consistent with your evidence, which is here, your tuple.
>> Cesare Tinelli: The theory being the conjecture, yes.
>>: Representation of that theory.
>> Cesare Tinelli: Definitely, yes. The idea in general is not, I mean, it's fairly simple. As I said, the contribution is in the actual mechanisms implemented.
>>: Trying to deal with large theory, right. Something that's going to be quadratic in size or in the number of terms that you've got.
>> Cesare Tinelli: That's right.
>>:
>>: So the methods used for -- >> Cesare Tinelli: For what? >>: [indiscernible] various generation of templates use counter examples to refine sets of terms. So your method have these directions. >> Cesare Tinelli: That's why it's good to give talks to these audiences. I'm not aware of that paper. Yesterday, I discovered another paper I wasn't aware of. >>: So the main idea there is to have -- you want to [indiscernible] input/output pairs. >> Cesare Tinelli: Yeah, right. >>: So you're talking about the learning algorithm, okay. And so you learn these what are the possible variants. >> Cesare Tinelli: So anytime they have a conjecture that is based on what I've seen so far and they modify the conjecture, is that what they do? I mean, you could see this as a learning algorithm in that sense, right? As Ken says. You have a theory and the theory explains your observations and you're trying -- >>: Use the SMT solver. >> Cesare Tinelli: Use the SMT solver as your tutor. The tutor is giving you the answers, giving you -- well, in this case, it's only done by counter examples, not by positive examples. So it's a very limited form of learning. Learning by counter examples. But it's essentially what we're doing. Yes? >>: Maybe I didn't understand that part. So when you say counter examples, these are runs by the -- >> Cesare Tinelli: So imagine the first conjecture. The first conjecture says all these terms are equivalent so you try to prove that in the initial states when you start. You say the initial state condition implies that. Sure enough, you're going to get a lot of counter examples. Throw away that. And you keep doing it. Right? But a very restrictive kind of learning. >>: But counter examples to put up -- >> Cesare Tinelli: It's reminiscent of version spaces, some ancient learning techniques except that in version spaces you start with an under approximation and an over approximation and use your positive and negative counter examples to have them converge.
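The learning-by-counter-examples loop described here, for the Boolean case, amounts to partition refinement: start from the strongest conjecture (all terms equivalent) and split classes each time a counter model distinguishes their members. The sketch below assumes the counter models are simply handed in as dictionaries. In the real setting they would come from the SMT solver. The function names are hypothetical.

```python
def refine(classes, model):
    """Split every class by the value its terms take in a counter model."""
    refined = []
    for cls in classes:
        buckets = {}
        for term in cls:
            buckets.setdefault(model[term], []).append(term)
        refined.extend(buckets.values())
    return refined


def weaken(terms, counter_models):
    """Start with 'all terms are equivalent' and weaken it model by model."""
    classes = [list(terms)]
    for model in counter_models:
        classes = refine(classes, model)
    return classes


if __name__ == "__main__":
    models = [
        {"a": True, "b": True, "c": False, "d": False},
        {"a": True, "b": False, "c": False, "d": False},
    ]
    print(weaken(["a", "b", "c", "d"], models))
```

Terms that end up in the same class agree on every counter model seen so far, so the resulting conjecture is the strongest set of equivalences consistent with the observations.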
The problem with version space is that often, actually, it will go like that. In this case, we're just doing one direction. We start, well, from bottom up. We start with a very, very strong conjecture, and we weaken it, weaken it, weaken it until it explains all the observations that we've seen. And so as I said, in the numeric case, you can do similar things. We have an algorithm that on the face of it is cubic, except in practice H, the height of our graphs, is usually small because it depends on the kind of problem. It doesn't -- the good thing is this measure does not depend on the size of the initial set. Well, it doesn't depend quadratically on the size of the initial set. >>: N is the initial set. >> Cesare Tinelli: N is the number of nodes and so the initial number of terms. It depends on the intrinsic properties of this ordering. So you can get lucky and have very flat situations or you go very quickly. >>: [inaudible]. >> Cesare Tinelli: Say again? >>: H times W is greater than [indiscernible]. >> Cesare Tinelli: Well, they are related, right. Suppose the height is always one, then the width is exactly N. Or vice versa. Suppose the width is one, then the height is going to be exactly N. So in the worst case, we get quadratic, essentially, for us. Okay, in the worst, best case --. >>: No, he's making an important point. He's saying that HW is at least order N. So really -- >> Cesare Tinelli: Yeah, by itself, by itself is order N. So this is -- >>: Is order N squared. >> Cesare Tinelli: Yes. I said before that we want compact in the sense of less than N squared. We don't quite get that. But this is actually quite good, because existing learning algorithms are effectively cubic. Existing partial ordering algorithms are effectively cubic. For our purposes, often H is bounded. And so, in fact, this is quadratic. It's good enough. Results.
So we worked with -- so our KIND model checker, we created a module that generates invariants integrated into the model checker. In fact, one thing that I haven't described to you is that the real implementation now is incremental. Incremental meaning that we don't wait until the end to produce the invariants. For every K, we're able to produce invariants. Which means that since KIND is parallel, we feed invariants as they are discovered to the KIND process, as KIND is trying to prove by induction the properties you start with. Which means that as soon as they're generated, they're given to KIND, KIND uses [indiscernible] k-induction and often, this leads to effectively making the property that you wanted easily provable with the invariant. But again, you don't have to wait until the process finishes. >>: So you're using this Houdini-like technique. You're generating counter examples to your induction which go into your [indiscernible]. Do those counter examples to induction come from k-induction or one induction? >> Cesare Tinelli: K-induction. >>: K-induction. So that means that you could have had -- there are conjectures you could have thrown out at K equals 2 that will come back in at K equals three, right? >> Cesare Tinelli: The ones that we've thrown out in the BMC run; that is, the base case of k-induction are definitely -- that's exactly what we do. The ones that we throw out at K. Before, I said the ones that we falsify in the inductive step, we throw away. We don't actually throw them away. We keep them around, hoping that they are K plus one inductive. That's exactly the process. The ones that we prove for K, so what do we do. At K equals 2, some stuff is not inductive, set aside. The stuff that remains, try again. Set aside. Some stuff is 2-inductive, so we will succeed. We will always succeed. Because in the worst case, we get true. True is 2-inductive. So, you know, we will pass those and then move to the other ones.
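The process just described can be illustrated on a toy explicit-state system. Candidates falsified in the base case are dropped for good. Candidates that fail only the inductive step are set aside and retried at K plus one. This sketch enumerates paths by brute force instead of calling an SMT solver, and every name in it (`k_paths`, `generate_invariants`, the example system) is an assumption made for illustration, not KIND's implementation.

```python
def k_paths(starts, succ, n):
    """All transition paths with n states that begin in `starts`."""
    paths = [[s] for s in starts]
    for _ in range(n - 1):
        paths = [p + [t] for p in paths for t in succ(p[-1])]
    return paths


def generate_invariants(candidates, states, init, succ, max_k):
    """candidates: dict name -> predicate. Returns (proved, still_pending)."""
    pending, proved = dict(candidates), {}
    for k in range(1, max_k + 1):
        # Base case (BMC): anything falsified within k states of an
        # initial state is definitely not invariant and is dropped.
        base = k_paths(init, succ, k)
        pending = {n: p for n, p in pending.items()
                   if all(p(s) for path in base for s in path)}
        # Inductive step: paths start anywhere; already proved invariants
        # may be assumed on every state of the path.
        step = [path for path in k_paths(states, succ, k + 1)
                if all(inv(s) for inv in proved.values() for s in path)]
        trial = dict(pending)
        while True:
            # Keep paths whose first k states satisfy all trial candidates.
            ok = [path for path in step
                  if all(p(s) for p in trial.values() for s in path[:-1])]
            failed = [n for n, p in trial.items()
                      if not all(p(path[-1]) for path in ok)]
            if not failed:
                break  # the remaining set (possibly empty) is k-inductive
            for n in failed:
                del trial[n]  # set aside; stays in `pending` for k + 1
        proved.update(trial)
        for n in trial:
            del pending[n]
    return proved, pending


if __name__ == "__main__":
    cands = {"even": lambda s: s % 2 == 0,
             "not7": lambda s: s != 7,
             "not4": lambda s: s != 4}
    proved, pending = generate_invariants(
        cands, range(8), [0], lambda s: [(s + 2) % 8], max_k=3)
    print(sorted(proved), sorted(pending))
```

In the example, `not7` is not 1-inductive on its own (state 5 steps to 7) but becomes so together with `even`, while `not4` survives the base case for K equals 1 and 2, is set aside each time, and is finally falsified by the base case at K equals 3.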
So there is always some stuff that we can do. >>: So you have some kind of a loop where you keep increasing the K? >> Cesare Tinelli: Yes. >>: I see. >> Cesare Tinelli: Well, there is a loop in the invariant generation and there are two loops in the k-induction proper, that is in the model checker. The model checker is doing the base case, and the K inductive stuff also in parallel. Everything goes on in parallel. And then they synchronize at proper times. So when the k-induction step of the model checker fails, it asks the invariant generation, do you have anything for me. If it does, then it uses them. When it succeeds, it asks the base case, have you found any counter example? Have you arrived at K, because if you haven't, I have to wait. >>: Do you have some kind of a picture of this? >> Cesare Tinelli: Yes. I do. >>: I would love to walk through that picture. >> Cesare Tinelli: Actually, do you mind if we go over that picture later, because I want to tell you about the results. So we've integrated this in KIND, and we've worked with Boolean -- sorry. I flashed you this. KIND works over the -- the [indiscernible] KIND is the Lustre language. For those of you that know, I don't have to say anything. Those of you that don't know, it's just yet another language to express transition systems. You know, something like SMV. If you're familiar with SMV, we were inspired by Lustre. >>: No. >> Cesare Tinelli: But there are definitely similarities, okay. So except that you don't have a restriction to finite types. You can have infinite types. By infinite types business, I mean, of course, mathematical integer is an approximation of machine integers and that kind of stuff. All right. So we produce invariants of this form where these guys are Boolean Lustre terms or this form where these guys are arithmetic Lustre terms.
And again, notice that since we look at conjunctions of these invariants, double implication is effectively equivalence so we discover equivalence between sub streams or even arithmetic equalities. And benchmarks we tried. We had, well, about a thousand Lustre benchmarks where we have a model and a single property to be shown invariant. If you run KIND in a decent configuration here, an inductive mode that is trying to prove invariants, KIND can prove 33% of these benchmarks, can prove these benchmarks to be valid, meaning that the property is invariant. Can disprove 47% by finding actual counter example, and is unknown, cannot solve 21%. Now, we throw in the invariant generators. So again, no invariant we can solve. If we now focus just on the valid or unsolved properties, under the assumption that the unsolved properties are probably valid. Because we have run KIND for a long time in BMC mode, couldn't find any counter examples. They're most likely valid. >>: By the way, are Lustre programs finite state? >> Cesare Tinelli: No, they're not finite state. >>: They are integers? >> Cesare Tinelli: Yes, yes. >>: But the theories that you're using are only integers and proposition logic. >> Cesare Tinelli: Integers, proposition logic, reals. In fact, we also use uninterpreted function symbols for convenience. They're not really needed. >>: For what do you use an uninterpreted function? >> Cesare Tinelli: We represent -- Lustre is a data flow language so, in fact, its variables are streams. And we represent streams as functions over the natural numbers in our encoding. It doesn't have to be like that. It's just convenient for us to do it that way. It's not necessary to achieve the same sort of results. All right. So that's the current situation that is no invariants. You throw in Boolean invariants, then we go from 61% accuracy to 77. With an integer invariants, we go to just integer invariants, we go to 82. If we put them together, we go to 86.
This might not look too impressive with respect to these two. You only go from 82 to 86. But let me point out that integer invariants often contain Boolean invariants in them and vice versa. For instance, a Boolean invariant might get generated. A Boolean conjecture that might be generated is one of the form X less than Y equals true. So this is effectively a less than invariant that, however, you're recasting as a Boolean one and vice versa. So these guys are not really disjoint. There's lots of overlap. >>: So your terms in these experiments, are these just terms that occur in the programs? >> Cesare Tinelli: Yes, plus a few more terms that we throw in for good measure. >>: So do you have any idea what's going on with the remaining 72? What are you going to do now? Suppose I gave you a million dollars and say I want an answer to all 72. >> Cesare Tinelli: We want to produce smart -- improve the initial phase, the phase where we generate candidate conjectures, okay. We've actually done it in a follow-up paper that I don't have time to tell you about. For a lot of these systems, there's a business of modes. These systems have modes, which essentially are finite state situations. And when you encode them in Lustre, you lose mode information. And you can try to recover mode information experimentally, and then throw in invariants that have to do with the modes. They are not evident in the Lustre encoding. I can tell you about this offline. So we've done that, and we have experiments that show if you throw in these other guys that talk about mode invariants, mode invariants will be something like if X is 1, then Y is either 1 or 2 or 4 or something like that. Where X is encoding some mode and Y is encoding some other mode. If you throw those in, well, you should not have lost those in the first place. But if the encoding is not so good, which is the case often, you lose them. If you throw those in, you get more mileage. >>: How much?
>> Cesare Tinelli: I can't talk about these ones, because these ones actually don't have a lot of modes. We've done it for another one, for another set of benchmarks. And to those, we go to something like 91%, 92. But again, these numbers don't mean much. I want to stress these results might say something about the goodness of our method or might say something about the properties of our variant set. There's always an issue of how representative these things are. However, one thing I want to say, we have an industry user using KIND, our friends at Rockwell Collins, and they tell us they're quite happy with this feature for the sort of things they do. So we just have anecdotal evidence -- >>: I think you're downplaying how cool this is. This is very cool for the following reason. Now we have set a bar for any future sophisticated invariant generation -- >> Cesare Tinelli: Right, you have to be at least as good as -- >>: Because this is so simple. >> Cesare Tinelli: Yeah, well, again, in the spirit, I'm not underselling or saying it's -- the idea is simple. The execution is not. >>: That it's simple is the best compliment I can pay you. >> Cesare Tinelli: But the execution is very sophisticated to get that consistency. >>: What's the time value [indiscernible] benchmark solved. >> Cesare Tinelli: I forget again. >>: It was on the previous slide. >> Cesare Tinelli: I did have it? Five minutes? Actually, I do have more, but, again, you know -- >>: What if you put it back to -- >> Cesare Tinelli: 120 seconds, sorry. Is nothing, but have you seen the benchmarks. Nothing meaning small? >>: Yes. >> Cesare Tinelli: But have you seen the benchmarks? Maybe the benchmarks are not that impressive, right. They go from fairly small to moderately large, not huge. >>: Can you double it? >> Cesare Tinelli: Doubling doesn't help. If a property is not k-inductive, with respect to the transition relation and the invariants you have, doubling the time is not going to do it.
Go infinite time. It's not going to prove it. So the only thing that you can hope here is that you throw in more and more invariants. >>: You get rapidly diminishing returns. >> Cesare Tinelli: Yeah. >>: You get a few out there capable of -- >> Cesare Tinelli: But let me show you, I have these interesting results here. I want Ken to see these. For the valid ones, that is, the ones that are provable without invariants, adding invariants accelerates stuff. As you're saying, without invariants, the percentage of properties that need more than 1-induction, more than plain induction, that is, that are k-inductive for real, is 14% in the set. When you throw in the invariants, we go down to 3. So you're really trivializing the property with these invariants, which just gives us a good measure that these are good invariants, okay? Because here we can compare. In fact, it's also true for the other ones. Well, in what sense? For the unsolved ones, we conjecture K equals infinity. It's probably not that the K is too big and we don't get it. It's just that K does not exist. So we accelerate from infinity, again, to very small K. In some cases, we have 20, but, I mean, those are rare. Invariants are high quality. And also, gives you some hope that the smarter techniques you come up with to produce high quality invariants, the better this is going to work. Architecture now accepts any invariant generator. You don't like this stuff, give me your -- plug in your invariant generator. As long as it is a stream generator, then everything is going to work. Just nicely. In fact, and I want to -- I'm going over time, but I want to conclude with this interesting observation. So our users don't care about a single property, right. They have 20, 30, 50 properties they want to prove about a system and they want to prove them all at the same time. They want to prove all of them, okay? So you can't prove them separately, just it takes forever. It's inadequate.
You can try to prove them together as just a conjunction. But if any one of them is not invariant, then you're done. The conjunction is false and so that breaks away if you're doing this in a naive way. Also, even if they're all invariants, since we're doing k-induction, some could be -- even if they are all inductive or k-inductive, some might be inductive for a small K, some for a big K so we have modified our induction algorithm so that it does something similar to what I mentioned earlier. That is, it considers the conjunction but then it looks at the properties individually and throws those away that are definitely not invariant. It keeps the other ones and so on. Now, what happens if you prove a property to be one inductive? We throw it back in as an invariant while we're trying to prove the other ones. So the properties actually help each other. But a lot more than in the standard sense of, oh, okay, I tried to prove all of them at the same time. I have a stronger conjecture. There's a slight difference here. Once you prove one invariant, then you don't have the obligation to reprove it. You just throw it in as an invariant. It cuts search space like crazy. Often, we're able to prove using this thing. We're able to prove all the properties without generating auxiliary invariants at all. They just help each other. Which kind of makes sense because if you think how people write properties, often properties are related and some of them are, in fact, lemmas for other properties. >>: Who are you using? >> Cesare Tinelli: Rockwell Collins. >>: Okay. They design in Lustre? >> Cesare Tinelli: They actually write their designs in Simulink and then they have produced a converter from Simulink models to Lustre models and then they feed them to KIND. >>: Do they use other model checkers? >> Cesare Tinelli: So, yes, initially they have a nice picture. They go to Lustre and then they went to SAL, NuSMV, PVS, Prover, you know, the commercial system.
And something else and now I forget. Now they have added KIND and from what they're telling me right now, they're happy enough with KIND, they're just using KIND. >>: And have they given you numbers of how KIND compares -- >> Cesare Tinelli: No. Even if they wanted, they couldn't give me numbers, because this is all anecdotal, okay. They've been playing with their models and so on. They've not done a serious study, comparison study. They say we couldn't prove this with Kruger, but KIND is just fine or actually Kruger is actually faster here, but we don't really mind, because KIND is fast enough, you know. Again, I don't want to make any claim here that it's the only thing that they need. In fact, they're keeping all the others. But in the latest rounds with all these invariants and so on, they said that they're quite happy. >>: The method itself, can you say something whether there's anything specific in -- >> Cesare Tinelli: No, not at all. In fact, my last slide talks about KIND 2 that we have started developing that will be disconnected from Lustre. Just have a Lustre front end and then we'll be able to accept other languages. >>: [inaudible]. >> Cesare Tinelli: Maybe. We'll see. In fact, when we started, I thought that we could capitalize on Lustre's specific encoding to do better, and turns out really, it's not really necessary to be tied to Lustre. >>: Is the semantics of Lustre well defined that there is agreement among pretty much everybody what it means? >> Cesare Tinelli: Yes. Lustre is defined formally. One catch I should explain is that Lustre is a specification language, but it has -- it's an executable specification language. So it has real data types. It has machine integers and floating points, and we don't really work with Lustre. We work with idealized Lustre so we can't catch all -- we have to stand around some of these issues by -- >>: You float your model as real -- >> Cesare Tinelli: However, notice there's nothing specific in our approach.
Sorry, nothing coded in our approach that limits us in this way. That is, once we get an SMT solver that's good enough with floats or with bit vectors, then we can just switch to the accurate semantics. Everything else works. I mean, at least in principle. In practice, we'll have to see. You wanted the architecture. This is the main architecture, assuming just one invariant generator. But, in fact, you could have several. And they all go -- they all work independently, and also, the architecture is such that they synchronize only rarely, only as needed. This is very low latency. This guy is a BMC. It just shoots until it finds a counter example. When it finds a counter example, tells this guy I found a counter example for property P1. So this guy knows that [indiscernible]. When this guy proves a property, it double checks with this guy and says, I proved a property to be three inductive. Have you gotten to three yet? If you haven't gotten to three, I'll wait. But if you have arrived at three, there's no problem. I can really certify it to be k-inductive. This guy is a lot faster, this is just BMC, so in fact, anytime the inductive step succeeds, it doesn't have to wait at all. If K is staying here, this is 200. The invariants are just sent in a message queue to -- well, the general message queue to this guy. And anytime the inductive step fails, it looks for invariants. If any invariants have arrived, they're used. If no invariants have arrived, then K is increased. So that means that if the invariant generator keeps generating invariants, it's possible for K to never increase beyond one. >>: When you say that the [indiscernible], is it generating invariants, or is it generating candidates? >> Cesare Tinelli: Invariants. >>: So basically, k-induction is also going on inside -- >> Cesare Tinelli: Yeah, but this is just an artifact of our method. >>: But it is promising when something comes across N3 there, it is promising this is truly invariant.
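The decision rule of the inductive-step process described above (on failure, use any invariants that have arrived; only if none have arrived, increase K) can be sketched as a small sequential simulation. The real architecture runs the processes in parallel and also synchronizes with the BMC process, which this sketch leaves out. `run_inductive_process`, `step_succeeds`, and the `inbox` queue are hypothetical names.

```python
from collections import deque


def run_inductive_process(step_succeeds, inbox, max_rounds=20):
    """Simulate the inductive-step process of the architecture.

    step_succeeds(k, invariants) -> bool stands in for the solver call.
    inbox is a deque of invariant sets sent by the invariant generator.
    Returns (k at which the step succeeded or None, log of decisions).
    """
    k, invariants, log = 1, set(), []
    for _ in range(max_rounds):
        if step_succeeds(k, invariants):
            log.append(("proved", k))
            return k, log
        if inbox:
            # New invariants arrived: strengthen and retry at the same k.
            invariants |= inbox.popleft()
            log.append(("strengthen", k))
        else:
            # Nothing arrived: fall back to a deeper induction.
            k += 1
            log.append(("increase", k))
    return None, log


if __name__ == "__main__":
    # Toy oracle: the step succeeds at k >= 3, or once both
    # invariants "a" and "b" are known.
    oracle = lambda k, inv: k >= 3 or {"a", "b"} <= inv
    print(run_inductive_process(oracle, deque([{"a"}, {"b"}])))
    print(run_inductive_process(oracle, deque()))
```

With a steady supply of invariants the first run finishes without ever leaving K equals 1, while the second run, with an empty queue, has to climb to K equals 3.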
>> Cesare Tinelli: It's really an invariant. Right now, we have another -- we have worked on another invariant generation, based on [indiscernible] interpretation techniques, which is still incremental in this sense. That is, it computes a post fixed point. But instead of waiting until the final computation; that is, the post fixed point is being computed. At any intermediate step, it tries to figure out which pieces of the current elements are invariant. If they are, they are sent back. They are sent to the guy. So, I mean, any incremental invariant generator will plug in here. Again, but they have to be invariant. That's the invariant that the system uses. The summary, I don't think I need to give to you. Future work, KIND 2, I mentioned. We want to work on more invariant generation techniques. One of the reasons I'm here is to pick people's brains. I'm going to try to do that with Ken. I think we can use interpolation ideas to generate more invariants. >>: [inaudible]. >> Cesare Tinelli: Yeah. >>: The other slide. So if all the candidate was happening in the right box, then why is there so much time difference between the base processor and the [indiscernible]. All they're doing -- >> Cesare Tinelli: That's a good question. Let's ignore this for now. Suppose we didn't have invariants. The base process is just doing BMC. This is doing real work, in a sense, because it's trying to prove that a property is k-inductive. And unless a property is k-inductive, or say zero inductive, it's going to fail and then it's going to increment, okay. So it's always going to generate larger and larger traces. >>: That's the same thing. >> Cesare Tinelli: Yes, but this starts from the initial state. >>: I see. >> Cesare Tinelli: The initial state, especially if your initial state is small, it's going to generate very few traces. Here, in induction, you don't make any assumption on the set of states. So it can be anything. There are a lot more solutions here than there are here.
So that means a lot more to do to prove stuff or disprove it. I mean, it's the reason why BMC is really efficient as opposed to model checking proper. I mean, when you're trying to prove something, that something holds, it's a lot harder than trying to find counter examples. Now, of course, if the system, if the model is big enough, even BMC might slow down or die, because you're doing [indiscernible]. But then the usual techniques can be used, by abstraction. KIND actually has one form of abstraction, where you actually don't work on the real transition relation. You work on an abstract one. If you find a counter example, you verify that it's a real one. If it's not, you refine, the usual CEGAR stuff. >>: What is the abstraction that you're doing there? >> Cesare Tinelli: We just do. What is it called? I forget the name, but it's -- programs are defined in terms of equations. So every stream has a definition. So we throw away definitions. So if you look at circuits, it's like considering a signal to be an input signal, you throw away the [indiscernible] that define that. And then when we need to refine, we throw in -- well, of course, you do it in a way that capitalizes again on the SMT solver. For instance, as usual, if we find a counter example, then we do an analysis of the unsatisfiable core to see which signals we should really throw a definition in on. You know, usual stuff. But not too sophisticated. Any other techniques you could imagine applying. This is all orthogonal here. >>: Did you try to measure if the invariants are useful. I mean, you take a bunch of invariants, I guess. >> Cesare Tinelli: No, we haven't done any of these studies yet. What I can tell you, for instance, why do we have these settings where, for instance, I don't know if I have them here, you can eliminate the trivial invariants and the redundant edges, right.
Here, we are using the strong version of our invariant generation algorithm that tries to minimize the number of redundant edges, but we don't care about eliminating trivial invariants. If you do, if you set this to false, yes, you get a tighter set of invariants but, in fact, it's not better because you spend a lot of time trying to eliminate the real [indiscernible] and since you're eliminating lemmas, you're also asking the solver to do more work. These are difficult things, questions to answer. We haven't done a serious study on this. But, of course, it's something that should be done because at some point, you're going to swamp the system with -- the good thing is we've been using Yices, and I guess this is the same. You can throw a lot of crap at them, right. So generate lots of invariants, a lot of them are useless. Well, they turn out not to be harmful. So we haven't been motivated so far. At the same time, our systems have been relatively small. I would imagine if systems are big, you have to start being careful about these things.