>> Kaushik Chakrabarti: Professor Keogh is an associate professor... computer science and engineering department at University of California-

advertisement

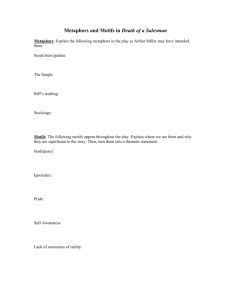

>> Kaushik Chakrabarti: Professor Keogh is an associate professor in the computer science and engineering department at the University of California, Riverside. His research interests are in machine learning and information retrieval, with a special focus on mining and searching large time series datasets. He is a really well-known name in the time series indexing area: if you enter "time series indexing" in one of the most popular search engines, it actually suggests Professor Keogh's name as one of the query suggestions. He has authored over 100 papers and has received several best paper awards at SIGMOD, ICDM and KDD. Today he is going to talk about a set of primitives for mining time series datasets. So without further ado, it is all yours, Eamonn.

>> Eamonn Keogh: Thank you for attending the talk. I like controversial talks, or strange claims. So here's one. I'm going to claim that for mining time series datasets, these three tools, shapelets, motifs and discords, are all you need; they essentially subsume everything else out there. If you can do these things properly, everything else is going to be easy. Hopefully by the end you will either believe that or not, but that's the claim. Here is an outline of the talk. I'm going to talk about what motifs, discords and shapelets are. I'm going to gloss over how you find them efficiently, which is, of course, very important for massive datasets. I'm not going to show any data structures or algorithms. I'm basically going to try to convince you, through lots of case studies, that they are useful, and then hopefully, once you believe they are useful, you will think about how to find them more efficiently. As I mentioned, I conducted a lot of this work with my students; one of them, Jin-Wien, is here today. So, again, the disclaimer is that I'm not going to talk about the algorithms, data structures or notation very much.
It is really to try to convince you that these things are very, very useful. So, briefly, on the ubiquity of time series, to convince you that time series is a useful problem to solve: you probably already believe this, because time series are everywhere. They are in finance, in query logs, and even video can, in some sense, be turned into time series. And even things that are not typical time series, like handwriting or music, can be massaged into time series, as we shall see. So time series are ubiquitous, and we want to be able to mine them. How are we going to do that? Here's the only page of notation, and it is very trivial. The important fact is that for these time series, which can of course be very massive, a billion data points, we are typically not interested in the global properties. We don't really care about the maximum or the minimum or the average of the entire dataset. What we are almost always interested in is small subsections. These subsections can be extracted with a sliding window. You simply pick a length, say, 5 seconds, slide it across, and you can pull out the entire set of subsections. And as we'll see in a moment, motifs, discords and shapelets are nothing but subsections with special properties, and those special properties make them very interesting and useful. So let's jump right into the first example, which is time series motifs. What are time series motifs? If we look at this industrial dataset here, a question you could ask is: do patterns ever repeat themselves? Let's say at the length of this pink bar here. So do I find a pattern here that repeats, say, here, and so on and so forth? Even in a small dataset this is hard to do by eye, but here's the best answer. It happens that this pattern here repeats approximately right here. If you look at the zoom-in here, they are not identical, of course.
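A sliding window is simple enough to sketch directly. The Python below is a minimal illustration, not code from the talk: the function names are hypothetical, the window length `m` counts samples rather than seconds, and the z-normalization step (rescaling each window to zero mean and unit standard deviation so that comparisons depend on shape rather than on offset or amplitude) is an assumption, a common preprocessing choice in time series mining that the talk does not mention.

```python
import math

def sliding_windows(series, m):
    """Return every contiguous subsequence of length m (stride 1)."""
    if not 1 <= m <= len(series):
        raise ValueError("window length m must be between 1 and len(series)")
    return [series[i:i + m] for i in range(len(series) - m + 1)]

def z_normalize(sub, eps=1e-8):
    """Rescale a window to zero mean and unit standard deviation (assumed preprocessing)."""
    mean = sum(sub) / len(sub)
    std = math.sqrt(sum((x - mean) ** 2 for x in sub) / len(sub))
    if std < eps:  # a (near-)flat window has no shape; map it to all zeros
        return [0.0] * len(sub)
    return [(x - mean) / std for x in sub]

# A series of n points yields n - m + 1 windows of length m.
series = [1, 2, 3, 4, 5, 6]
windows = sliding_windows(series, 3)
print(len(windows))  # 4
print([z_normalize(w) for w in windows[:2]])
```

Because every window is kept, even a modest series produces a very large number of candidates for the searches that follow.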
Exact repeats would be too easy to find, but these are very, very similar. You have to believe that something caused them to be that similar. Maybe the same mechanical set of valves opening and closing at the right times produced a signal here which repeats. Okay. So you can find them. What use are they? I will give you some examples in a moment, but you can already guess the utility. If you find this motif and you notice that, the first time you see it, the boiler explodes within five minutes, then you have a warning system: in the future, if you see this pattern again, you can sound an alarm and be ready for an explosion or whatever it is. So here are some case studies in motif discovery, all from the last year or so. The first came from some researchers at Harvard. They came to us wanting to build a dictionary of all possible brain waves. People have been trying to do this for the last 40 years or so, I think, but always by hand, by having doctors look at the data and pull out these patterns using their knowledge. These researchers wanted to do it totally black box: dump in all the data and have a dictionary pop out. The problem is that for just one hour of EEG from one trace, it takes about 24 hours of fast code to find the answer. And, of course, they don't have just one hour; they have eight hours from each person. They don't have just one trace, but maybe 128 from a skull cap. And they have thousands of patients, and so on and so forth. So there is no way to do this using even fast brute-force search. This gives you an idea of what the data looks like. This is one hour of data, and here's a tenfold zoom-in, another tenfold zoom-in, and another tenfold zoom-in. They are really interested in data at this kind of scale; this is where things happen. At the longest scales, what happens is largely irrelevant; it's the local patterns that we're interested in. Okay. So here's the one hour of data.
In this one hour of data there are about 5 billion possible pairs of subsequences you could take, compare and see how similar they are. Of those, the most similar pair is the motif: the most similar repeated pattern in the entire dataset. As it happens, this is the answer right here. It looks a bit like a square root sign. So what is this good for? How interesting is it? Well, first of all, by definition there has to be some motif in any dataset; something will always pop out. But does it have any meaning? One thing is suggestive: if we search for this pattern, we find similar examples elsewhere in this data and in other datasets, which suggests it may have some meaning. More interestingly, if we look at the literature, we find that this is a known pattern. It appears in a recent paper, and people have known about it before. It has a medical name, a medical cause and so on and so forth. So we discovered it automatically, without any domain knowledge. So what are motifs good for in general? What would you use them for? Here's a trivial but interesting example. Look at this text. Each individual word is a standard English word; there is nothing exciting or interesting about this text in general. But imagine this text is a stream, like a time series. What you find is that in the green section of the text, some letters are underrepresented. Here's the expected frequency and the observed frequency of T, for example, and they're pretty close. But the expected frequency of E is about 13%, and its observed frequency is exactly 0. E never occurs in this section, which is very surprising, so it is underrepresented. And in the pink text, here's the expected frequency of Z and the observed frequency of Z, and, of course, Z is much more overrepresented than you would expect by chance.
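The definition above (the motif is the most similar pair among all pairs of subsequences) can be sketched as a brute-force search. This is a hypothetical baseline for illustration only, not the fast exact algorithm described later in the talk. It assumes z-normalized windows and Euclidean distance, and it only compares windows that do not overlap in time, because a subsequence always closely matches copies of itself shifted by a sample or two.

```python
import math

def znorm(sub):
    """Zero-mean, unit-variance copy of a window (a std of exactly 0 falls back to 1.0)."""
    mean = sum(sub) / len(sub)
    std = math.sqrt(sum((x - mean) ** 2 for x in sub) / len(sub)) or 1.0
    return [(x - mean) / std for x in sub]

def brute_force_motif(series, m):
    """Return (i, j, d) for the closest pair of non-overlapping length-m windows."""
    windows = [znorm(series[i:i + m]) for i in range(len(series) - m + 1)]
    best = (None, None, math.inf)
    for i in range(len(windows)):
        # j starts at i + m so the two windows never overlap (no trivial matches)
        for j in range(i + m, len(windows)):
            d = math.dist(windows[i], windows[j])
            if d < best[2]:
                best = (i, j, d)
    return best

# The shape [1, 5, 2, 8] is planted at positions 2 and 9.
series = [3, 3, 1, 5, 2, 8, 4, 4, 7, 1, 5, 2, 8, 6]
print(brute_force_motif(series, 4))  # (2, 9, 0.0)
```

The double loop over all pairs of windows is what makes the naive approach quadratic in the length of the series.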
Over- and under-representation like this is well understood for discrete strings, such as DNA and English text. But with motifs, we can now do the same thing for time series. Take the previous example: I can see where the motif occurs in this dataset. The two original examples are here and here, and these other check marks are other similar occurrences. What you see is that they occur at a pretty much uniform frequency, but occasionally you'll find they don't appear at all. I call this a motif vacuum. For some reason, this motif doesn't appear here, which suggests that maybe something unusual happened in the brain at this time. In a few moments I will show you another example, on a similar dataset but with different doctors, that's even more telling than this one. So here's one thing you can do with motifs: you can find anomalous time periods, either by the overabundance of a pattern or by the absence of a pattern. Here's another fun case study, with insects. This guy here, the beet leafhopper, is a very interesting insect. It is basically a vegetarian mosquito, if you like. Instead of attacking animals and humans, it attacks plants. It sticks in its stylet and sucks nutrients out of the plant. That by itself isn't particularly harmful to the plant. But the problem is that if one plant has a disease and this guy is going from plant to plant, then very rapidly all your plants have the disease. This causes about $400 million of damage in California each year, so it is a very nasty insect for that reason. The good news is that we can wire this guy up. What entomologists do is attach a small gold wire to its back. They complete the circuit through the ground, literally, or through the plant, and they measure the voltage change over time.
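The motif-vacuum idea described above can be sketched as counting motif occurrences per time block and flagging blocks with none. In this hypothetical sketch, the match `threshold`, the `block_len`, z-normalization and Euclidean distance are all assumptions. A single true occurrence can also produce several matching windows shifted by a sample or two, so a practical version would suppress those trivial matches before counting.

```python
import math

def znorm(sub):
    """Zero-mean, unit-variance copy of a window (a std of exactly 0 falls back to 1.0)."""
    mean = sum(sub) / len(sub)
    std = math.sqrt(sum((x - mean) ** 2 for x in sub) / len(sub)) or 1.0
    return [(x - mean) / std for x in sub]

def occurrences_per_block(series, motif, threshold, block_len):
    """Count windows within `threshold` of `motif`, grouped by the block each window starts in."""
    m, q = len(motif), znorm(motif)
    counts = [0] * math.ceil(len(series) / block_len)
    for i in range(len(series) - m + 1):
        if math.dist(q, znorm(series[i:i + m])) <= threshold:
            counts[i // block_len] += 1
    return counts

def vacuum_blocks(counts):
    """Blocks where the motif never occurred: candidate anomalous time periods."""
    return [b for b, c in enumerate(counts) if c == 0]

series = [0] * 30
series[2:5] = [1, 9, 1]    # occurrence in block 0
series[22:25] = [1, 9, 1]  # occurrence in block 2; block 1 has none
counts = occurrences_per_block(series, [1, 9, 1], 0.5, 10)
print(counts)                 # [1, 0, 1]
print(vacuum_blocks(counts))  # [1]
```

An overabundance test would follow the same pattern, comparing each block's count against the typical count instead of against zero.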
So now we have a time series for this insect as it goes about its behavior. That's the good news. The bad news is that the data is really, really nasty and messy. Here are some examples. It is very difficult to see any kind of structure; it looks pretty much random. So how are we going to explore this data and understand what's going on? Well, as you might guess, the answer is motifs. Here's one small subsection of 14 minutes. We look for motifs in this data, and we find one here and here, which correspond to this and this. And, once again, when you see this, you can't believe it is a coincidence. They are so similar, it surely must mean something. The beauty of this setup is that we also have video, which we can index back into. So we can go back into the video at these two time periods and see what actually happens. It turns out this motif corresponds to the moment the stylet is injected into the plant. This is what the pathogen actually looks like. The second example here, which you can see is much more complicated and noisy but is nevertheless the second-best motif, corresponds to the insect building up honeydew from its rear. The honeydew eventually forms a bridge between the insect and the plant, which changes the circuit; then the bead breaks off and we go back down to zero here. So the cool thing is that we can take tens, hundreds, even millions of time series data points and summarize them into a few nice events. Prior to this, we basically had a grad student watching these videos hour after hour with a notebook, looking for interesting behavior, which is not very scalable. With this, at least we can say "go look at these time periods; something appears to be happening here which is prototypical or interesting," or what have you.
One thing you could do with this (we haven't done it yet, but it's something we're interested in) is the following: once you find these motifs, you can simply give them discrete labels. I'll call this one A, and this one B; I might have a C pattern, a D pattern, and so on. Now you can convert these incredibly massive, noisy time series datasets into discrete strings: B, B, C, A, B, C, C, and so on. Once you have these, you can search for patterns with classic algorithms for DNA or other kinds of discrete symbols. For example, you might find that if you see a B followed by another B, the next symbol is going to be a C with very, very high probability. Again, we haven't got this far yet, but it is next on our list. One more example for motifs. This is from another EEG experiment, with a different set of doctors, from the University of California, San Diego. These doctors have lots of traces, very high dimensional and very, very nasty. They have the following problem. They show people pictures of satellite surveillance, much more complicated than this one. Occasionally there will be an airplane in the picture, and when the person sees it, they're supposed to click a little button. What they want to do, essentially, is read the person's mind and figure out when they see an airplane. So here's the basic experimental setup. They've tried this with various hard-coded rules. The question is, could you do this automatically? What we do is look for motifs. We find some motifs in this dataset; here's one example. And we ask ourselves: do these motifs appear with different frequency depending upon what the person is seeing?
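The discretization idea just described (label motif occurrences A, B, C, and so on, then mine the resulting strings) might look roughly like the sketch below. As the speaker notes, this step had not been built yet, so everything here is a hypothetical illustration: it assumes an earlier motif search has already produced `(time_index, label)` events, and it estimates next-symbol probabilities with simple counts, the most basic of the classic string-mining approaches.

```python
from collections import Counter, defaultdict

def to_symbol_string(events):
    """Order (time_index, motif_label) events by time and keep only the labels."""
    return [label for _, label in sorted(events)]

def next_symbol_probs(symbols, context_len=2):
    """Estimate P(next symbol | previous context_len symbols) from counts."""
    counts = defaultdict(Counter)
    for i in range(len(symbols) - context_len):
        context = tuple(symbols[i:i + context_len])
        counts[context][symbols[i + context_len]] += 1
    return {ctx: {s: c / sum(cnt.values()) for s, c in cnt.items()}
            for ctx, cnt in counts.items()}

# Hypothetical motif hits: (time index, label), in arbitrary order.
events = [(90, "C"), (10, "B"), (25, "B"), (40, "C"), (55, "A"),
          (70, "B"), (80, "C")]
symbols = to_symbol_string(events)
print("".join(symbols))                        # BBCABCC
print(next_symbol_probs(symbols)[("B", "B")])  # {'C': 1.0}
```

With real data the counts would be far larger, and rules such as "B, B is followed by C" would only be trusted once their context had been observed many times.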
What we do is plot the time series, and every time we see one of these motifs, we put down a green dot. When we do that, here's what we see. These are basically independent traces. Time moves forward this way, and at this point the stimulus is shown. And look at what we see. Normally these green dots are pretty much uniformly distributed, but right after the person sees the stimulus, at the right latency, we see an incredible burst of these motifs. Then, shortly after that, and this is harder to see, there is almost a vacuum of motifs: they don't appear again for a while, and then they settle back into their normal frequency. The doctors were very impressed with this, because they could do this before, but only with hard-coded rules that humans, doctors, had worked out by going backwards and forwards over the data. This, of course, is totally black box: we simply look for motifs that are correlated with the class itself. Here's one final example of motifs, to give you an idea of the scalability we're interested in. We have 40 million small thumbnail images; here are some examples. We want to find near duplicates. Exact duplicates are easy to find with hashing; the problem is finding near duplicates. Of course, these are not time series, but we can simply convert the color images to red, green and blue histograms, which are basically pseudo time series, and look for motifs in those. As you might guess, 40 million objects are not going to fit in main memory. They are going to live on disk, and that is scary, because the brute-force algorithm accesses the data in an erratic, random order, and random access when the data lives on disk means a lot of thrashing backwards and forwards. If you do this naively, with the disk thrashing, we are looking at a couple hundred years to solve this problem.
With our algorithm, we can solve this. So here are the answers, the repeated patterns. They're not identical in any case. If you look at the dog here, it has a little dot here which doesn't appear here; the [inaudible] here has something lit up here which is not lit up here. So these are near duplicates, not exact duplicates, which would be easy to find. And, amazingly, we could find these in a little over 24 hours: not 200 years, but a day. The last thing we use motifs for is what we call motif joins. So far, you can imagine we had one series, and the motif pair could come from anywhere. But suppose I simply divide that series into two halves and say that one member of the pair must come from here and the other from here. That makes logical sense; I can do that. What is it good for? Imagine you're at NASA, for example, and you have some rocket telemetry. Five years ago this rocket exploded or crashed, and just recently this rocket exploded or crashed. You might ask yourself, what do these two have in common? Maybe it has something to do with the anomaly. So you do a motif join and find that this pattern appears in both of the crashes. Then you test whether it appears in the non-crash flights, and so on and so forth. So a motif join can be quite useful. One question is whether it is scalable, because these datasets will be quite large. Let's see how scalable motif joins can be. First, let me mention that you can convert DNA into a time series. It is a [inaudible] transform. The idea is simply that you walk across the DNA: if you see a G, you go up one unit; if you see an A, you step up two units; if you see a C, you step down one unit; and so on and so forth. So you walk across the DNA, producing a time series. Okay. So here are two primates.
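The DNA-to-time-series walk described above is a cumulative sum over per-base steps. This sketch follows the talk for G (+1), A (+2) and C (-1); the talk leaves the remaining base unspecified, so the T step of -2 used here is purely an assumption, as is the choice to reject any other symbol.

```python
# G, A and C steps follow the talk; the T step (-2) is an assumption made
# here for illustration, since the talk only says "and so on".
STEPS = {"G": 1, "A": 2, "C": -1, "T": -2}

def dna_to_series(seq, steps=STEPS):
    """Cumulative walk: each base moves the running value up or down by its step."""
    value, series = 0, []
    for base in seq.upper():
        value += steps[base]  # KeyError for symbols outside the table, e.g. 'N'
        series.append(value)
    return series

print(dna_to_series("GACT"))  # [1, 3, 2, 0]
```

Once DNA is in this form, the same window-based motif and join machinery applies unchanged.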
Notice they have the same kind of hairline, which is interesting. We can convert their DNA into time series; if we do that, we have about 3 billion data points here and 3 billion here. So a lot of data. Notice also that the human has 23 pairs of chromosomes and the monkey has 21. So somewhere in history either we gained two or they lost two, or some other combination of events separates us. What that means is that if we do an alignment, or a join, here, we can't expect the straight line we would get from human to human. There must be some kind of non-linear join, and we're going to find it. We take a small sliding window of length 1,024, slide it across both of these very large datasets, and find the pair that joins best. So where does it appear? The answer is right here, which is not very interesting to see at this scale. Let me take this section here and zoom in on it. I've added a few extra points: the second-best join, the third, the fourth, up to a few thousand. What you see here is that, as is almost certainly the case, human chromosome 2 is composed of monkey chromosomes 12 and 13. You can also see, for example, that all of 12 maps to 2, but for 13 there are two little gaps here, which presumably appear somewhere else in the DNA; you would have to go and look for those separately. The point is simply to show you the scalability of this: if you can join two datasets of 3 billion points each, you can solve problems at industrial scale. Again, I'm not going to talk about algorithms very much, but how long does this all take? Naively, it would take quadratic time to compare all to all, and for any non-trivial dataset that would be very, very nasty.
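A motif join differs from ordinary motif search only in that one window must come from each series. The brute-force sketch below is a hypothetical illustration under the same assumptions as a basic motif search (z-normalized windows, Euclidean distance). At the talk's scale of 3 billion points per genome and windows of length 1,024, this double loop is exactly the quadratic cost that makes the naive approach infeasible.

```python
import math

def znorm(sub):
    """Zero-mean, unit-variance copy of a window (a std of exactly 0 falls back to 1.0)."""
    mean = sum(sub) / len(sub)
    std = math.sqrt(sum((x - mean) ** 2 for x in sub) / len(sub)) or 1.0
    return [(x - mean) / std for x in sub]

def motif_join(a, b, m):
    """Return (i, j, d): window i of `a` and window j of `b` that match best."""
    wa = [znorm(a[i:i + m]) for i in range(len(a) - m + 1)]
    wb = [znorm(b[j:j + m]) for j in range(len(b) - m + 1)]
    best = (None, None, math.inf)
    for i, x in enumerate(wa):
        # The windows come from different series, so no trivial-match exclusion is needed.
        for j, y in enumerate(wb):
            d = math.dist(x, y)
            if d < best[2]:
                best = (i, j, d)
    return best

a = [3, 3, 1, 5, 2, 8, 4]
b = [9, 0, 7, 1, 5, 2, 8, 6]
print(motif_join(a, b, 4))  # (2, 3, 0.0)
```

Reporting the second-best, third-best and later pairs, as in the chromosome plot, would mean keeping the top k pairs instead of a single best.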
Because of this quadratic cost, dozens of researchers have solved this problem approximately, typically in n log n time with very high constants, but only with approximate answers: the answer they give you is good but not optimal. Recently, Wien, one of my students here, has come up with a beautiful exact algorithm which is incredibly fast. To give you an idea how fast it is: for the EEG dataset, those researchers, very smart computer scientists, Ph.D.s and medical doctors, could process one hour of data in 24 hours. We can do one hour of data in about two minutes. And for the other example, with 40 million time series that live on disk, we can solve it in hours; actually, probably tens of hours, let's say a day or two. So it is scalable enough to handle these massive datasets. A quick summary of motifs, and then I will go on to the other primitives. We can now find motifs in very large datasets, and there are some potentially very interesting things we can do with them. We can monitor the frequency of motifs in a data stream for anomaly detection. We can even sound an alarm if we don't see a pattern: if I haven't seen this pattern for five minutes, that's unusual, and I can sound an alarm. Usually anomaly detection only works when you see something; this could work when you don't see something, which is kind of interesting. And there are a few more things we could do, like finding motifs in streams, which is basically future work. Okay. Let me shift gears slightly and talk about something different: shapelets. Shapelets are basically supervised motifs, as we'll see. I'm going to show you this in the shape domain, but it really works for time series in general, as we'll see in a moment. Here we have two different classes of shapes, stinging nettles and false nettles, and let's say you want to classify them, to tell them apart.
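The "alarm on absence" idea from the motif summary above can be sketched as a small monitor that remembers when a motif was last seen. This is a hypothetical helper, not something from the talk: it assumes an upstream matcher reports the timestamp of every motif occurrence, and it uses the five-minute window from the example.

```python
class AbsenceMonitor:
    """Alarm when an expected motif has not been seen for more than max_gap seconds.

    Hypothetical helper: it assumes an upstream motif matcher calls
    observe_match(t) with the time of each occurrence it detects.
    """

    def __init__(self, max_gap, start_time=0.0):
        self.max_gap = max_gap
        self.last_seen = start_time  # treat the start of monitoring as the last sighting

    def observe_match(self, t):
        """Record a motif occurrence at time t (out-of-order reports are tolerated)."""
        self.last_seen = max(self.last_seen, t)

    def check(self, now):
        """Return True if the motif has been absent for longer than max_gap."""
        return now - self.last_seen > self.max_gap

monitor = AbsenceMonitor(max_gap=5 * 60)  # "five minutes" from the talk
monitor.observe_match(100.0)
print(monitor.check(350.0))  # False: last seen 250 s ago
print(monitor.check(401.0))  # True: last seen 301 s ago
```

The same structure could track several motifs at once, each with its own expected gap learned from normal data.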
One problem is that the two species look very, very similar at a global scale, and they can also have insect damage, like you see here. So any kind of global measure tends to work very, very badly. The idea of shapelets is to say: let's ignore the global measures, zoom in, and find local patterns that might tell these things apart. How are we going to do this? First, we take the shape and convert it to a time series. There are many ways of doing that; we have our own, but it doesn't really matter too much. The point is that the mapping is one-to-one, so I can go back from the time series to the shape if I want to. And, again, this is a global pattern for a leaf; but small subsections of it, like the subsection here, might be all it takes to distinguish the two classes. So I'm going to look at all possible subsequences to find the best such pattern. It happens to be this one right here. What you see is that for false nettles, the pattern looks like this, but the closest matching pattern in the true nettles looks radically different. The reason is now obvious: for true nettles, the leaf joins at essentially 90 degrees, but for false nettles the angle is much shallower. If you look back at the leaves, you see that, yes, that makes sense. We can use this fact to build a decision tree very easily. The decision tree works like this. You get a new leaf to classify. You extract all the subsequences of the right length and compare them to this shapelet. If one of those subsequences is less than 5.1 away from the shapelet, you say it is a false nettle; otherwise, you say it is a true nettle. And as it happens, in this case it is very robust to leaf bites, especially around here.
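The one-node decision tree described above needs two pieces: the distance from a shapelet to a whole series (the minimum over all equal-length windows) and a threshold test. In this sketch the threshold of 5.1 comes from the talk, but the shapelet values and test series are made up, and the raw Euclidean distance is an assumption; the 5.1 threshold is only meaningful for the talk's own shape-to-time-series conversion.

```python
import math

def subsequence_distance(shapelet, series):
    """Smallest Euclidean distance between the shapelet and any equal-length window of series."""
    m = len(shapelet)
    if m > len(series):
        raise ValueError("shapelet is longer than the series")
    return min(math.dist(shapelet, series[i:i + m])
               for i in range(len(series) - m + 1))

def classify_leaf(series, shapelet, threshold=5.1):
    """One-node decision tree: a close match to the shapelet means false nettle."""
    if subsequence_distance(shapelet, series) < threshold:
        return "false nettle"
    return "true nettle"

shapelet = [0.0, 1.0, 0.0]  # hypothetical shapelet values
print(classify_leaf([5, 5, 0, 1, 0, 5], shapelet))  # false nettle (distance 0)
print(classify_leaf([10, 10, 10, 10], shapelet))    # true nettle (distance ~16.8)
```

Because only the best-matching window matters, damage elsewhere on the leaf does not change the decision, which is the robustness to leaf bites mentioned above.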
One cool thing about this is that not only can it classify very accurately in many cases, but, unlike other classifiers, it tells you why. We get accurate results, but we can also go back, brush the shapelet onto the shape, and say: the reason these things are different has something to do with whatever happens around here. Figuring out why the difference exists is very useful in some domains. So, briefly, how do we decide which shapelet to use? I have to test every possible subsequence, of every possible length from tiny to very, very long, from every single shape in my database. How do I choose this particular subsequence as the shapelet? For every subsequence candidate, I put it here and sort all the objects in my database by their distance to that candidate. What I hope to find is that on this number line, all of one class, the blue class, is on this side, and all of the red class is on that side, so I can separate them with a clean split point here. In this example I have a pretty good candidate, not a perfect one: one object is out of order. Maybe a different shapelet would pull this blue object back here and this red object back here, and I would have a perfect separation. Now, one small problem is that if I do this naively, it takes a long time. Even for a tiny dataset of 200 objects, each of length 200, there are about 8 1/2 million of these candidates to test. And testing each one isn't just moving dots around: each time you place a dot, you have to do a lot of distance computations. So naively, it could take a long time. As you might guess, we have ways of speeding this up, which I will briefly talk about in a moment. This makes it useful for visual domains.
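Scoring one shapelet candidate amounts to sorting the training objects along the number line by distance and looking for the cleanest split point. The sketch below scores splits with information gain; the talk does not name a criterion, so treat that choice, and the function names, as assumptions for illustration.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def best_split(distances, labels):
    """Return (threshold, information_gain) for the best split of the orderline."""
    order = sorted(zip(distances, labels))
    n, base = len(order), entropy(labels)
    best_thr, best_gain = None, -1.0
    for k in range(1, n):
        if order[k - 1][0] == order[k][0]:
            continue  # no threshold can separate equal distances
        left = [lab for _, lab in order[:k]]
        right = [lab for _, lab in order[k:]]
        gain = base - len(left) / n * entropy(left) - len(right) / n * entropy(right)
        if gain > best_gain:
            best_thr, best_gain = (order[k - 1][0] + order[k][0]) / 2, gain
    return best_thr, best_gain

distances = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]  # hypothetical orderline for one candidate
labels = ["blue"] * 3 + ["red"] * 3
print(best_split(distances, labels))  # (6.5, 1.0)
```

Running this for every candidate subsequence, after computing each candidate's distance to every object, is what produces the millions of evaluations mentioned above.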
On my campus, we actually have 1 million of these projectile points, mostly in covered boxes. They have been photographed, and we have classified them: not only classified them, but classified them in a way that is robust to things like broken points, and with some explanation of why they're classified that way. So we've done this, and here's the answer. Again, we can take these [inaudible] points and build a decision tree, which, as it happens, is quite accurate. But it also tells you, in some sense, why it made each decision. The first split here is based on this subsequence, which corresponds, if I brush it back, to this section here. What it basically says is that if you have this kind of deep, concave dish at the base, you are a Clovis point. That's what defines a Clovis. It isn't this point here, because that is very common in all kinds of points; this is unique to Clovis. Likewise, the second subtree here makes its decision based on this shape here, which you can brush back to this. What it basically says is that if you have a side notch here, you're Avonlea, and if you don't, you're not. That makes the split right here. So, once again, we're more accurate. We're also a lot faster to classify, which is not that important in this domain but can be in others. But the really cool thing is that it tells you why it made a decision. One last example before I move on. This is a classic problem called gun/no gun. The young lady in question is either just pointing at the wall or pointing the gun at the wall, and I'm going to classify which. We can do this quite accurately, a little more accurately than previous work. But more importantly, it tells you why it made the decision.
The shapelet brushes back to here, and I can brush that back into the video. It turns out that in this case the young lady is quite small and the gun is very heavy. When she puts it back in the holster, there is an overshoot: the inertia carries her hand past the holster, and then she puts it back in again. It is a subtle thing, but it only occurs in this class, so we can guess that it is the difference between the two classes. Here's one slide to show you the scalability and accuracy on a classic benchmark. Finding the shapelets is the slow part. Once you find them, classification with a decision tree is incredibly fast. But finding them can be quite slow. On this classic dataset, finding the best shapelet with a brute-force algorithm takes about five days. With some clever [inaudible] ideas, we can find the exact answer in a few minutes instead. So we can find these things really fast, even for large datasets. More interestingly, we are a lot more accurate than many approaches in many domains. In this problem, there are 2,000 objects in the training set. If we use just ten of them, a tiny fraction of the training set, we're not quite as good as the best known approach in the world. But if we use 20, which is only 1% of the training set, we're already better than the best approach. And as we add a few more, we get better and better. So why are we so much better than everything else? The trick is that the shapelet, in this case, finds that the pattern right here is the key difference. Shapelets can ignore most of the data, and, as it happens, for many problems throwing away most of the data is the key: the difference is only in one small, subtle place, and shapelets can find it. All the other approaches are basically forced to account for all the data.
They end up fitting the noise, they overfit, and that causes problems. Okay. The last thing I'm going to talk about is time series discords, which, again, are simply subsequences with a special property. What's the property in this case? Discords are the subsequences that are maximally far from their nearest neighbor. For example, this subsection here looks like that one there, and this subsection here looks pretty much like that subsection there. But for this subsection here, its nearest neighbor, somewhere in here, is very, very far away, and that is the definition of a discord. As it happens, in this case it corresponds to a known phenomenon in this dataset: it has found a true anomaly. Here are some more examples of discords. Here's a Web log we got from you guys a couple of years ago. You can see that most things have classic patterns. Many things have a daily periodicity, I guess because people go to work and have more Internet access at work. And they have well-known shapes. Here's a pattern called an anticipated burst, for a movie or a new book, whatever it is. What you see is a big buildup in excitement, the movie is released, and then people get bored and the excitement falls off. And what is this bump here? The DVD release. You see a similar but slightly different pattern for dead celebrities. For dead celebrities, you see a small amount of interest; then he is found dead in Bangkok or wherever it is, you see a big spike in interest, and then it falls off again. The point is that most things are similar to something else. Germany might be similar to Austria, stock market might be similar to finance, Spiderman is similar to Star Wars, and so on and so forth.
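The discord definition (the subsequence whose nearest neighbor is farthest away) translates into a brute-force sketch with two nested loops. This is an illustration, not the fast disk-based algorithm mentioned later; it uses raw Euclidean distance for brevity (z-normalizing windows first is a common alternative), and it ignores neighbors that overlap the candidate, since a window trivially matches its own shifted copies. The only parameter is the window length `m`.

```python
import math

def brute_force_discord(series, m):
    """Return (i, d): the window whose nearest non-overlapping neighbor is farthest away."""
    windows = [series[i:i + m] for i in range(len(series) - m + 1)]
    best_i, best_d = None, -1.0
    for i, x in enumerate(windows):
        nn = math.inf
        for j, y in enumerate(windows):
            if abs(i - j) >= m:  # skip the window itself and overlapping (trivial) matches
                nn = min(nn, math.dist(x, y))
        if nn != math.inf and nn > best_d:
            best_i, best_d = i, nn
    return best_i, best_d

series = [0] * 20
series[10:13] = [5, 5, 5]  # a short plateau in an otherwise flat series
i, d = brute_force_discord(series, 3)
print(i, round(d, 2))  # 10 8.66
```

Every window is compared with every other, so this is quadratic, which is why the disk-resident case needs a smarter algorithm.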
So given this, what's the most unusual thing you can find among all English words? It is a tough puzzle; it's not easy to guess. The answer is "full moon." Why is that? Well, most things have a periodicity of a day. Some things have a periodicity of a year. Some things have a periodicity of a month: at the end of the month your insurance expires, so people look for insurance at the end of the month. But "full moon" is the only thing whose periodicity is exactly that of the lunar cycle, so its periodicity is unlike everything else in the world. The full moon is visible from anywhere on the planet, and people apparently go out for a walk, see the full moon, think it looks pretty, go home, hit the search engine and type in "full moon." So the periodicity is exactly that of a lunar month. Kind of an interesting little puzzle. I don't want to beat this to death, but discords work not only in one-dimensional space but also in two-dimensional, three-dimensional and other spaces. The cool thing is that they work incredibly well. We've compared them to many other approaches, and the problem is that many approaches for finding anomalies in time series typically have four, five or six parameters. You have to tune and set them, and you can make them work well for one dataset, but they rarely generalize to other datasets. The cool thing about discords is that they have exactly one parameter, which is their length. Once you set that, there is nothing else to tune. You walk away, it gives you the answer, and, surprisingly, it is often the right answer. I think simplicity here is not a weakness but a strength, because if you have lots of parameters, you are going to overfit; it is almost impossible to avoid. I won't go into this in great detail, but one question is: how do you know whether the discord you find is really unusual?
Because, once again, there has to be some discord in every dataset, even if it is not very meaningful. One trick you can use: in this case, the dataset has two real anomalies that are known from external sources. If you simply sort the subsequences by their discord distance, what you find is that the normal background stuff is relatively low, and then there is a big jump, a discontinuity, up to the discords. So by looking at a plot like this, a knee plot or elbow plot, you can probably guess that this jump corresponds to a threshold for true anomalies in this dataset. Just to go back to the example we had with the young lady with the gun: in the entire video sequence, which is quite long, we looked for discords in two-dimensional space, and we found one right here. So why is this unusual? Once again, we go back to the original video to find the answer. Normally the girl is very diligent: she points the gun and returns the gun, multiple times in this video. But in one sequence, beginning right here, she returns the gun and misses the holster, or fumbles around a little bit. She gets embarrassed, looks at the cameraman, begins to double over and laugh and joke around, then realizes she's wasting time, gets back into character, and returns to normal. So here the discord finds, in two-dimensional space, the interesting, unusual anomaly in this dataset. Once again, if you do this naively it could be really, really nasty, because the discord algorithm requires you to compare every subsequence to every other subsequence, which is quadratic. And quadratic algorithms can be nasty, especially if the data lives on disk. As it happens, we have a disk-aware algorithm that can do this very, very fast. For data on disk it basically takes two scans of the data, and you get the right answer.
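The knee-plot heuristic can be sketched as follows, assuming you already have, for every subsequence, the distance to its nearest non-overlapping neighbor (for example from an exact brute-force search). The talk describes judging the plot by eye; taking the largest ratio between consecutive sorted distances is our own stand-in for that judgment, and `top_k_discords` and `knee_cut` are hypothetical names.

```python
def top_k_discords(nn_dist, m, k):
    """Greedily pick up to k non-overlapping (start, distance) discords, largest first.

    nn_dist[i] is the distance from the subsequence starting at i to its
    nearest non-overlapping neighbor; m is the subsequence length.
    """
    order = sorted(range(len(nn_dist)), key=lambda i: nn_dist[i], reverse=True)
    chosen = []
    for i in order:
        if all(abs(i - j) >= m for j, _ in chosen):  # skip overlaps with earlier picks
            chosen.append((i, nn_dist[i]))
            if len(chosen) == k:
                break
    return chosen

def knee_cut(distances):
    """For distances sorted in descending order, return how many lie above the
    largest relative drop between neighbors (0 if there is no drop)."""
    best_ratio, cut = 1.0, 0
    for r in range(len(distances) - 1):
        hi, lo = distances[r], distances[r + 1]
        ratio = hi / lo if lo > 0 else float("inf")
        if ratio > best_ratio:
            best_ratio, cut = ratio, r + 1
    return cut

# Usage on a made-up profile with two planted anomalies.
profile = [0.1] * 50
profile[10], profile[35] = 4.0, 3.5
picks = top_k_discords(profile, m=5, k=4)
print(picks, knee_cut([d for _, d in picks]))
```

Everything above the cut is reported as a likely true anomaly; everything below is treated as the normal background.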
So we can do this for 100 million objects, which is about a third of a terabyte, in about 90 hours. This is a few years old; now we can probably do a little better than that. But again, it is very impressive: a brute-force algorithm for this would take thousands of years. Again, I know you might not be that impressed with 100 million objects; you guys are with Microsoft. But by most academic standards, that's a really, really big dataset. Just to give a sense of how big it is, the classic phrase is a needle in a haystack. Suppose each time series among the hundred million examples I gave you was a straw in a haystack. How big is the haystack? Well, the haystack would be about this size. This is to scale: 262 meters. As it happens, this is a much harder problem than finding a needle in a haystack, because when you find the needle, you know you found the needle and you're done. What I'm really asking you here is to find the one piece of straw that's least like all the other pieces of straw. That's a much harder problem. And, again, it is kind of surprising that you can find the exact answer in tens of hours rather than thousands of years. So, again, I've been selling these discord ideas for a while. They are very, very simple, almost insultingly simple, but they work very well. And recently we got some nice confirmation of that. Vipin Kumar had some students test this. They tested on 19 very different kinds of datasets from all kinds of domains, and they tested the nine most famous anomaly detection techniques out there. And they found that discords win virtually every time. I think once or twice they came in second place; but essentially discords, even though they're insultingly simple, work incredibly well. And I could make the same claim for shapelets and motifs. They are very, very simple ideas, but simple ideas tend to work very well in my experience.
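The "two scans of the data" mentioned above can be illustrated with the following in-memory sketch of a two-pass, range-based discord search. The first pass keeps only candidates that are at least `r` away from everything they have been compared with; the second pass turns the survivors into exact nearest-neighbor distances. It is written in the spirit of a disk-aware approach but is not the authors' implementation: the threshold `r`, the z-normalization, and the names are assumptions, how to choose `r` is left open, and a real disk version would stream subsequences from storage instead of holding them in a list.

```python
import math

def znorm(seq):
    """Z-normalize a subsequence; a (near-)constant one becomes all zeros."""
    n = len(seq)
    mean = sum(seq) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in seq) / n)
    if std < 1e-8:
        return [0.0] * n
    return [(x - mean) / std for x in seq]

def range_discords(series, m, r):
    """Return {start: nn_distance} for every length-m subsequence whose nearest
    non-overlapping neighbor is at least r away (exact, two passes)."""
    if len(series) < 3 * m:
        raise ValueError("series too short for non-overlapping neighbors")
    # In-memory stand-in for reading subsequences from disk on each scan.
    subs = [znorm(series[i:i + m]) for i in range(len(series) - m + 1)]

    # Pass 1 (candidate selection): s becomes a candidate only if it is at least
    # r away from every current non-overlapping candidate; any candidate found
    # to be closer than r to s is pruned.
    candidates = []
    for i, s in enumerate(subs):
        is_candidate = True
        survivors = []
        for c in candidates:
            if abs(i - c) >= m and math.dist(s, subs[c]) < r:
                is_candidate = False  # both s and c have a neighbor within r
            else:
                survivors.append(c)
        candidates = survivors
        if is_candidate:
            candidates.append(i)

    # Pass 2 (refinement): compare every subsequence with the survivors to get
    # exact nearest-neighbor distances, dropping any that turn out to be < r.
    nn = {c: math.inf for c in candidates}
    for i, s in enumerate(subs):
        for c in list(nn):
            if abs(i - c) < m:
                continue  # ignore trivial (overlapping) matches
            d = math.dist(s, subs[c])
            if d < r:
                del nn[c]
            else:
                nn[c] = min(nn[c], d)
    return nn
```

A true range discord is never within `r` of anything, so it can never be pruned in the first pass; the second pass only has to check the (hopefully small) candidate set rather than all pairs.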
And certainly in every domain we've tried, this has been the case. The reason I think they work so well is that there are very few parameters to mess with. The only real parameter is the length, and in some cases you can even remove that and have no parameters at all. And, finally, they are scalable and parallelizable. You can do all kinds of clever tricks to make this really, really fast. What I would like to do, and we haven't done it yet, is to port this to streaming data. So imagine, instead of asking what the discord or motif is in this batch dataset, asking: in the last hour, in a window that moves forever, what's the most unusual discord you've seen? What's the best motif you've seen? These are tricky problems. In the motif case, we may be able to find the answer; Mueen is working on that. For the discord case, it is quite difficult, and we are not sure the answer can be computed exactly. Okay. So the overall conclusions: motifs, motif joins, shapelets, and discords are very simple but very effective tools for understanding massive, massive datasets, at least in the batch case and potentially also in the streaming case. My personal philosophy is that parameters are bad. Every time you add a parameter, you halve the utility of your idea. So if you have a reasonably good idea and five parameters, you halve how good it is, then halve it again, and again, and again, and it is not that good an idea anymore. Motifs and all these other things are great because they have very few or no parameters. And as always, if you have cool datasets or cool problems, we're very interested in those. Before I go, a quick plug. I'm giving a tutorial next week in Paris on how to do good research.
It's basically designed for young faculty and grad students who are maybe not from a big powerhouse like CMU or MIT, who are trying to do good research and get it published. I've gotten lots of great ideas from people all over the world. If you have any interesting ideas for helping these grad students and faculty, I would love to hear them. For example, it might simply be that you have reviewed papers recently and thought, "These guys had a good paper, but they did this and it condemned the paper." So what is the "this" that condemned the paper? If you tell me, I will try to summarize it and give that information back to the community. Great. I'm all done at this point. Any questions? Comments? Sir? >> Question: So your one parameter is the window size? >> Eamonn Keogh: Yes. >> Question: How do you handle that, say, in your motifs? >> Eamonn Keogh: Actually, it is surprisingly easy in many cases. For cardiology, the doctors will tell you that the interesting stuff happens at about one second. What happens over five minutes is kind of irrelevant, because things drift over that range; it makes no difference. What happens in a millisecond of a heartbeat is of almost no interest either, right? So they know the natural scale for interesting stuff is about that. Similarly, for the entomology work, the entomologist suggests the right scale is about two or three seconds, right? Beyond that it is kind of random, unless you miss things. So one option is simply to ask the expert. Sometimes, because the search is efficient enough, you can search over some range. Essentially you would pick half a second, double that a few times, and use some statistical measure of the significance of what you find. Then you can say, "I think the real length is 2.1 seconds, and here's what we find in that range." So a combination of domain experts and a search over the parameter usually solves the problem.
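The "pick half a second and double it a few times" strategy can be sketched as below. Here the motif is taken to be the closest pair of non-overlapping z-normalized subsequences, and each candidate length is scored by its motif distance divided by sqrt(m) so that different lengths are roughly comparable. That score is only a placeholder for the unspecified "statistical measure" in the answer; the names, and measuring length in samples rather than seconds, are assumptions.

```python
import math

def znorm(seq):
    """Z-normalize a subsequence; a (near-)constant one becomes all zeros."""
    n = len(seq)
    mean = sum(seq) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in seq) / n)
    if std < 1e-8:
        return [0.0] * n
    return [(x - mean) / std for x in seq]

def top_motif(series, m):
    """(distance, i, j) of the closest pair of non-overlapping length-m subsequences."""
    subs = [znorm(series[i:i + m]) for i in range(len(series) - m + 1)]
    best = (math.inf, -1, -1)
    for i in range(len(subs)):
        for j in range(i + m, len(subs)):  # j - i >= m excludes trivial matches
            d = math.dist(subs[i], subs[j])
            if d < best[0]:
                best = (d, i, j)
    return best

def scan_lengths(series, start_m, doublings):
    """Try start_m, 2*start_m, 4*start_m, ... and report each length's motif.

    The score d / sqrt(m) is a hypothetical stand-in for a proper
    significance measure; it only puts distances at different lengths
    on a roughly comparable scale.
    """
    results = []
    m = start_m
    for _ in range(doublings + 1):
        if len(series) < 2 * m:  # need room for two non-overlapping windows
            break
        d, i, j = top_motif(series, m)
        results.append((m, i, j, d / math.sqrt(m)))
        m *= 2
    return results
```

Each row of the result is (length, first occurrence, second occurrence, score), which a domain expert could then inspect alongside their own sense of the natural scale.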
>> Question: Do you think there might be a way to automate that? Like, look at, I don't know, [inaudible] or transforms or something along those lines? >> Eamonn Keogh: I think there is probably somebody doing that. Maybe someone statistically smarter than me, right, is probably the true answer. My guess is that some kind of entropy measure would do this. One way of doing it is to look at the problem as a compression problem. If you have motifs, you can compress the data well, because you simply take the occurrences of the motif, give it a letter, say A, in your dictionary, and compress the data that way. So if you say the best motif length is the one that compresses the data the most, which has some plausibility in some domains, then you could do a hands-off thing: find the best compression and say, "This is the true structure." We are actually working on that. >> Question: So, for example, if you look at the motifs for various [inaudible] sliding windows, the meaningfulness of the motifs would probably give some answer, right? If the window is too short, you could get a lot of false motifs. If it is too long, no motifs at all. >> Eamonn Keogh: Somebody could do that, right? There is a kind of sweet spot, I think. If you have a very short window, almost everything matches. For very, very long windows nothing matches, because of the curse of dimensionality: as you might guess, everything is equally distant. If you plot the statistics, you see a nice clean bump, and it is typically where you would expect the right answer to be for most domains, yes. For dance and martial arts, for example, if you try it on motion capture, it will be about half a second. That seems to be a plausible length; a move in a dance might be about that length, and it repeats 11 times in a dance.
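The claim that for very long windows "everything is equally distant" can be checked numerically. This sketch uses uniformly random vectors rather than real time series (a simplifying assumption) and measures relative contrast, (max - min) / min of the distances from one random query to a set of random points. As the dimension, which plays the role of window length, grows, the contrast shrinks toward zero, so the nearest and farthest neighbors become hard to tell apart. The function name is hypothetical.

```python
import math
import random

def relative_contrast(dim, n_points=200, seed=0):
    """(max - min) / min of distances from one random query to n_points random
    points in the unit hypercube of the given dimension (window length)."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [math.dist(query, [rng.random() for _ in range(dim)])
             for _ in range(n_points)]
    return (max(dists) - min(dists)) / min(dists)

for dim in (2, 64, 1024):
    print(dim, round(relative_contrast(dim), 3))
```

This only illustrates the long-window side of the sweet spot; the short-window side (almost everything matches) would need a separate experiment.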
And even the best dancer, dancing to very synchronized music, can't repeat themselves with great fidelity for much longer than a few seconds, because they will eventually go out of phase with themselves. Sir? >> Question: What's your decision criterion? How do you decide [inaudible]? >> Eamonn Keogh: Sorry, once more? >> Question: How do you decide [inaudible]? >> Eamonn Keogh: This is Euclidean distance. Euclidean distance is a very simple measure. It turns out that for classification problems, Euclidean distance works incredibly well. There are many other measures you could use, like dynamic time warping or longest common subsequence; there are at least 50 different measures out there for time series. We tested them on classification problems, and Euclidean distance basically wins every single time. It is kind of unfortunate, because you would like the clever idea to work, but the simplest thing you can imagine, Euclidean distance, works very, very, very well. These are all based on Euclidean distance. >> Question: So Euclidean distance is the threshold? >> Eamonn Keogh: In the motif case, you minimize the Euclidean distance: you are simply finding the pair with the minimum Euclidean distance. Once you learn that minimum value, you can perhaps make a threshold out of it. It is true that for small datasets, non-Euclidean measures work a little bit better. The intuition is this: let's say you have a face like my friend Kaushik's here. If I have a million people in a room, there is a good chance I will find a face similar to his, and there would be very little difference or warping between them. But in a smaller room, I can't find someone who looks so much like him; I have to warp or change his face more to match someone else's face. So for very small datasets, Euclidean distance can't handle the irregularities, the warping, and the changes, and dynamic time warping or some other measure can work very well.
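To show what dynamic time warping buys over Euclidean distance, here is a small textbook DTW with an optional Sakoe-Chiba band (`window`). On two identical bumps offset by three samples, Euclidean distance penalizes the misalignment, while DTW warps it away almost entirely. This illustrates only the mechanism, not the speaker's empirical claim about which measure wins on small versus large datasets; the band parameter and the function names are assumptions not discussed in the talk.

```python
import math

def euclidean(a, b):
    """Plain Euclidean distance; requires equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("Euclidean distance needs equal lengths")
    return math.dist(a, b)

def dtw(a, b, window=None):
    """DTW distance: sqrt of the minimal summed squared differences along a
    warping path. `window` is an optional Sakoe-Chiba band half-width."""
    n, m = len(a), len(b)
    w = max(window if window is not None else max(n, m), abs(n - m))
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - w), min(m, i + w) + 1):
            step = (a[i - 1] - b[j - 1]) ** 2
            # Extend the cheapest path arriving from above, from the left, or diagonally.
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return math.sqrt(cost[n][m])

# Two identical bumps, the second delayed by three samples.
a = [math.exp(-((i - 10) / 2) ** 2) for i in range(30)]
b = [math.exp(-((i - 13) / 2) ** 2) for i in range(30)]
print("Euclidean:", round(euclidean(a, b), 3))
print("DTW:", round(dtw(a, b), 3))
```

Narrowing the band (for example `window=1`) forbids large shifts, so the DTW distance can only stay the same or grow toward the Euclidean value.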
Once the data is reasonably large, as it turns out, Euclidean distance works beautifully. Anyone else? >> Kaushik Chakrabarti: Okay. Thank you. >> Eamonn Keogh: Thank you. [applause]