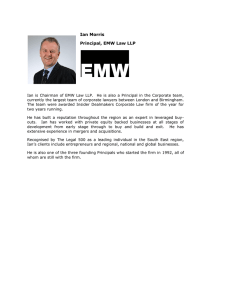

>> Daron Green: So I first met Ian many years ago when he was trying to sell me a story. The story he was trying to sell me then was parallel Prolog. Goes back some years, Ian, right? And then I think it was Fortran M, which was formal methods in Fortran, that complicated mix of things. And Ian has done many things, and the latest thing he's been doing is looking at infrastructure for collaboration, grid middleware and things like that. But he has many more things to his expertise. And he's always been involved in more projects than I could believe possible. And so I'm sure Ian's going to tell us some of the things he's learned, and his topic today certainly chimes very much with what we're trying to do in external research with the scientific community. So Ian, delighted to have you in Redmond.

>> Ian Foster: Okay. Do I need to hold my hand, too? I met Tony when he was at Southampton, and he tried to recruit me there, arguing that my mother-in-law lived just across the (inaudible) and that would be a positive reason. He didn't know my mother-in-law. So anyway, what I want to do is talk to you about some ideas we are pursuing at the University of Chicago and Argonne in the context of the Computation Institute. So this is something I took on a couple of years ago, running the Computation Institute. I think I have a slide about it. And you know, the goal is to further investigations of challenging problems in the sciences, often system-level problems that, you know, require the integration of expertise from many different disciplines. We have about 100 fellows, 60 staff, and a fair range of projects, and one of the things that this work has led me to do is to become increasingly interested in the problems of how we accelerate the data analysis process, the research process, and the tools that we might need to achieve that.
So we'll start off with a little historical slide just to remind us how life used to be. Back in the 1600s, if we were interested in something like this, we would go through a fairly simple process by which we got some money and built an observatory. This is Tycho Brahe, by the way. And then, you know, we'd recruit the young upcoming fellow like Kepler, he'd analyze our data and eventually learn something of interest. Along the way he might, some say, poison his advisor in order to get access to the data, so even back then access to data was a challenge. But clearly, you know, the amount of data that people were dealing with back then was not a tremendous problem. So let's go forward 400 years and see how things have changed. Clearly, automation of the data collection process has resulted in probably nine orders of magnitude increase in data rates. The amount of data that we have access to just in astronomy is around about a petabyte this year or next year. A major change of course is that the number of investigators has changed dramatically, from around about one back in 1600 to certainly tens of thousands nowadays in astronomy, and maybe a million or more if you count amateur astronomers. With computational speeds and the amount of literature, things are very different. And of course this results in new problems for the investigator. So let's look at another field that is also of interest, biomedical research. At the same time, if you wanted to perform some research, your main problem was finding a body to cut up, which was quite a challenge back then, but nowadays of course things have similarly changed in a dramatic way. This is a slide put together by John Wooley (phonetic), who tries to quantify the number of entities that one needs to deal with if one is investigating either biomedical research or basic health care research.
And the associated numbers of course are changing on very dramatic exponentials, and in every field from astronomy to biomedicine we see people struggling with the fact that what used to fit in their notebook, then on their floppy disk, then on their hard drive, no longer seems quite so easy to deal with. So the next slide is a cultural reference that you may -- actually, no, the slide after is a cultural reference. Here's a slide that quantifies one effect of that. So this is a log plot. On the Y axis, it's the number of gene sequences that have been collected in the last decade or so. And then the blue line is the number of annotations, for example annotations asserting knowledge or belief about the function of particular genes. And you can see that's increasing far less rapidly, and that is a sign that people aren't able to keep up with the amount of data that's been produced in terms of the analysis that's been performed. So this is the slide with the subtle cultural reference. Does anyone recognize this? The Black Knight from Monty Python and the Holy Grail. You know, he is saying it's just a flesh wound and his head is up. And of course many scientists will simply say we just need a bigger disk and a workstation and we'll be okay. But I think increasingly people are realizing that that is no longer the case. So instead, you know, what I believe we see a need for is something we might call an open analytics environment. So let me explain what that is. And I've adopted a cooking metaphor for some reason; that's a supercomputer down at the bottom. What is an open analytics environment? This is something into which many people, certainly individual investigators, but teams also, can pour their data, whether scientific measurements, information about the network structure of their data or their domain, or the scientific literature. We'll come back to that in a second.
Into which we can also pour programs, service definitions, stored procedures and rules, and out of which we can then extract results. And we'll look in a bit more detail at what is involved in each of these steps in a second. And we want this environment to have, at least virtually, the property that we no longer think of what we can do with our data in terms of what is possible on a single computer, but think, at least ideally, in terms of, you know, if we had unlimited storage, unlimited computing, if we weren't constrained by the fact that different pieces of data are in different formats. If we didn't have to worry about the fact that the analysis routine we got from our friend is written in Matlab and we're running on a platform that doesn't support it, et cetera, et cetera. And also if these tools supported all of the collaborative processes that are really part of the scientific problem-solving process: we could version things, we could document their provenance, we could collaborate with others in analyzing them, we could annotate them with arbitrary annotations. If we had such a system, which is very far from what most researchers have available to them today, I think we could really transform the way that research happens in a wide variety of fields. So let's look at -- oh, first of all, I know the word open is sometimes one that isn't popular here at Microsoft, so I got a dictionary out to see what it meant. And of course it means many things besides the ones that Richard Stallman likes to -- I guess he doesn't use open, he uses other things. But having the interior immediately accessible; generous, liberal or bounteous; and then not constipated is the one I like the best. Okay. So what goes in.
So I'll talk about some of the applications that we're working with, and I'm sure you've got your own applications that you're familiar with, at least some of you, but as I indicated before, you know, scientific data, which comes in many different forms: text, tabular data, image data; this is functional MRI data. In the biological sciences of course we've got many, many instruments, each of which is capable of generating data at extremely high rates. Network data, the scientific literature, there's a few others there I'm sure, different instrument data. Clinical data in the case of the biomedical sciences. If you're a political scientist, online news feeds. These are all things that, if we can put them into a common place, will allow us to pursue interesting connections, and I'll mention some of those connections in a second. We want to be able to then establish and assert connections between things and tag data with hypotheses, you know, putative connections, perhaps putative beliefs regarding the reliability of the data or its meaning. We also then want to be able to throw in programs, and you know, programs can be workflows, this is a Taverna workflow; they can be parallel programs, this is a Swift program, the system developed at Chicago by Yun Zhao (phonetic), one of your staff here, when he was a Ph.D. student there; or rules, or perhaps stored procedures. You know, programs written using things like MapReduce or Dryad, SQL procedures, et cetera, et cetera, programs written in many different programming notations. Optimization methods and so on and so forth. And of course ideally we want to be able to associate these with programs. So when data comes in, it can easily be operated on by any of these procedures and transformed into a form that may then be operated on by some other procedure.
It's amazing to see the tremendous enthusiasm that MapReduce, which purports to do that for a very simple class of problems, has generated. I mean, it's almost laughably simple, but people see it as a somewhat liberating tool for data analysis. And then I couldn't think of how to illustrate this part of it with beautiful pictures, so let's just talk. You know, what do we want to do to make this happen? We need to be able to run any program, store any data without regard for format, without regard for issues of scaling. And of course scaling issues always arise in practice, but we do want to be able to operate on terabytes and perhaps petabytes of data and perform computations that involve the comparison of many elements within different terabyte and even petabyte data sets. So that means substantial scale. It also means the ability to do away with the platform differences that currently hinder so much interoperation. So, you know, the tremendous popularity of platforms like Amazon EC2 clearly has something to tell us there. There should be built-in indexing so we can keep track of what's in there and what can operate on what. And then under-the-covers provisioning mechanisms so that we can allocate resources to these different, potentially very large demands fairly easily. And then what comes out. So I was particularly proud of this graphic. What I'm trying to illustrate here is that as this data comes out of this thing, you know, it will be rich data that has provenance information associated with it. So you know, this data was generated by this Taverna workflow, one of its inputs was this network that came from somewhere else, and maybe these are, you know, the people that have expressed some opinions on the validity of that workflow. You know, and over here we've got a parallel computation that generated this piece of data, that operated on this MRI data using an algorithm described in this piece of scientific literature.
I think you get the picture. Okay. And then finally, you know, I think the notion that analysis is not just a one-off procedure, it's a process, is something we need to bear in mind. You know, over time we add data, we transform it, we annotate it, we search for it, we add to it, we tag it. We may visualize it. Others may come in and discover it, extend it; we may group things together in different ways. We may share it or not with others. These are things that need to be supported in this sort of environment. Okay. So I guess I'll skip this slide. So far I've talked in a very abstract sense. And an obvious question that you might ask is, is this some huge central, what's the word nowadays, cloud, or is it some distributed environment that links many different subclouds, if you like? I think the answer has got to be both. Many of the algorithms that we need to be able to execute require strong locality of reference and so have to be performed on data that's centrally located, but ultimately data will end up being distributed, and to match those two worlds things need to be able to move from one place to another. Okay. So now I want to talk a bit about some of the things that we're doing at Chicago in an effort to, you know, realize some of these capabilities. And of course we realize that this is a vast endeavor and we're not going to be able to undertake it by ourselves, and that's one of the reasons why I was interested in coming out and visiting here. So first of all, here is a list of some of the applications that we're working with. There are quite a few others, but these are all areas in which we have particular expertise, either at the university or at the Argonne National Lab. Astrophysics. So this is an exploding Type Ia supernova. Using supercomputer simulations, a group at Chicago has shown a putative mechanism that seems for the first time believable in explaining why supernovae happen.
This turns out to be a data problem because what they produce is tens of terabytes of data that then needs to be made available to the community and compared with observational data, so it becomes a data resource. Cognitive science. Huge amounts of work on different aspects of MRI studies, for example. East Asian studies. You might not have thought of that as being data intensive, but it turns out this group has been going to China and taking extensive photographic surveys of various temples, and thanks to help from (inaudible) we've had the Photosynth group helping them to visualize some of their data. Economics is in my view a discipline that is about to explode as a consumer and producer of data. I'll say a bit more about that in a minute. But this is a fairly detailed map of occupational choice and wealth in Thailand. Something that turns out to be of great interest computationally. Environmental science of course is familiar to us. Epidemiology. Also genomic medicine. We're doing a lot of work in that area, although I won't say too much about it here. Neuroscience, so this is a little chip thing you can stick in typically a monkey's brain, but you could stick it in your own, if you wanted, and get large amounts of data. Political science. So I have a friend at Google New York, and thanks to him we're getting a feed of about two million online news articles a day and sticking them into a database and then performing data-intensive analyses on the database to look at things like political slant in newspaper reporting. So sociology, physics, so on and so forth. So there's a huge number of people, each of whom has got their own data-intensive science to perform, and each of whom is currently limited in their ability to perform that science. So for example, these guys performed what was the world's largest turbulence simulation last week.
It took them a week, 11 million CPU hours, then took them, I think, three weeks to move the data back to Chicago and about three or four months to perform the analysis because of the limitations of the tools they have available. The economics guys have been spending the last year working out how to transform their data to make it available to their analysis routines. The political science people we have started helping sooner, but they're still really struggling with how you reliably download feeds of millions of articles a day and then process the data. But what I find really exciting is the connections that start to appear when you start thinking of this data as being in a single place. So here are a few. Looking at the economics of development in Thailand, well, that's in many ways tightly related to issues of environmental modeling and climate change. Those in turn are related to issues of epidemiology. If you're trying to plan a malaria eradication program, then you're going to want to have the information that these guys have got on access to transportation, wealth, distribution of hospitals and so forth. And you may even be interested in how articles about various eradication schemes are reported in the press. So the political science news feeds start to become useful. We have a big economics project that we're getting underway, part of it relating to climate change, and one of the issues that people are interested in is how do you persuade people to make environmentally sound choices, so then the cognitive science work, perhaps that's (inaudible) as well, but that's taking things just a little bit further. The second thing we've been doing over the last few years is putting in place some very substantial hardware infrastructure. I'll just say a few words about this. So this is a fun computer. Has anyone heard of a SiCortex system before? It's based on a MIPS processor, so it's got 1,000 chips, 6,000 cores, and does about 6 teraflops.
But the neat thing is it only consumes 15 kilowatts. So it's a sort of very small, low-power system. This is a somewhat larger, medium-power system, the second fastest civilian supercomputer in the world, the IBM BG/P.

>>: (Inaudible).

>> Ian Foster: This is at Argonne, also. We're also getting one for the Computation Institute at Chicago. We're using both of these for data-intensive computing. We've got access to remote systems like the TeraGrid. And then we're just in the midst of acquiring our own so-called Petascale Active Data Store, which is our first attempt at building a system that can serve as this cauldron, if you like, the framework for investigating some of these issues. So of course it could be more substantial, but this is what you get for a couple million dollars. So it's got about 500 terabytes of reliable storage, a compute cluster attached with very high I/O bandwidth, and some built-in GPUs and multicore systems for high-performance analysis. And then the goal is to put in place the mechanisms that will allow for dynamic provisioning of both data and computing, parallel analysis routines, remote access mechanisms, real-time and staged data ingest mechanisms, and perhaps start looking at how we might offload to remote data centers if they were available.

>>: (Inaudible).

>> Ian Foster: Yes. So that was a request from some of the biologists who are very concerned about having take the (inaudible). Well, I mean this is 2A raid, but of course if that particular device is destroyed, then you've lost your data, so they want to be able to move things to a remote location.

>>: I was just questioning (inaudible).

>> Ian Foster: Yeah, that's a good question. There's a couple of reasons for it.

>>: (Inaudible).

>> Ian Foster: No, I know.

>>: (Inaudible).

>> Ian Foster: Yeah. Tape is always about to become the technology of the past.

>>: IBM's system?

>> Ian Foster: Yes. That's interesting.
So we've just got the money from NSF, and IBM is our current vendor, so we haven't actually signed any contracts, but that's one. And this is a DDN system. DDN, Data Direct Networks. They're the vendor here. And this is, you know, a PC cluster, and currently IBM is the system integrator.

>>: One more question about that (inaudible) data centers, I mean are we physically moving tapes or are we sending it over a network?

>> Ian Foster: Yeah, that's a good question. Whenever possible we move data over networks because it's easier, but of course one option is also to move ranks, and that clearly has very high bandwidth.

>>: Ian, in earlier projects you said that they generate enough data that it took three weeks to move it back over to the U.S.?

>> Ian Foster: Yes, they were moving it from Livermore to Chicago and it was only moving at 20 megabits per second.

>>: Out of curiosity (inaudible) capacity, why wouldn't you put this stuff on tape or disk and ship it over next day?

>> Ian Foster: Yeah, that's a good question. Why didn't they do it? Probably no one there was ready to mount it on to the disk, maybe they didn't have the capabilities to do that.

>>: Okay.

>> Ian Foster: But in that case it certainly would have been a perfectly reasonable solution. I'm no network bigot, as it were. Anyway, let's now go on and say a few words about some of the methods that we've been developing in various projects, methods that address various of the problems described at the beginning of this talk. Now, none of these methods, either individually or taken together, is a complete solution, but they do provide us some tools that have allowed us to move forward in some useful way.
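As a rough check on the exchange above about network transfer versus shipping media, the sketch below works out how much data a link sustained at 20 megabits per second would carry in three weeks. It assumes the rate was constant and uses decimal units; the function names are illustrative only, and the 1 gigabit per second comparison is a hypothetical figure that does not come from the talk.

```python
def transferred_bytes(rate_megabits_per_s: float, days: float) -> float:
    """Bytes moved by a link sustained at the given rate for the given days."""
    seconds = days * 24 * 3600
    bits = rate_megabits_per_s * 1_000_000 * seconds
    return bits / 8


def seconds_to_transfer(total_bytes: float, rate_megabits_per_s: float) -> float:
    """Seconds needed to move total_bytes at the given sustained rate."""
    return total_bytes * 8 / (rate_megabits_per_s * 1_000_000)


# Figures quoted in the talk: 20 megabits per second for about three weeks.
volume = transferred_bytes(20, 21)
print(f"{volume / 1e12:.2f} TB moved in three weeks")  # about 4.54 TB

# Hypothetical comparison (not from the talk): the same volume at 1 Gbit/s.
hours = seconds_to_transfer(volume, 1000) / 3600
print(f"{hours:.1f} hours at 1 Gbit/s")
```

At a few terabytes, the volume is small enough to fit on a handful of disks, which is the point behind the audience question about shipping media overnight.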
So we've got work on HPC system software at Argonne, I mean Microsoft has supported some of the work on MPICH; the Parallel Virtual File System; work I'll say a bit more about on collaborative data tagging; data integration work, which addresses this challenge of separating representation and semantics of data; high-performance data analytics and visualization; various tools, we work with Hadoop and we have our own system called Swift, which we think is better for expressing what you might call loosely coupled parallelism, particularly on data-intensive applications; dynamic provisioning mechanisms; service authoring tools, many of them developed in collaboration with the cancer biology community. Provenance recording and query. We have done a lot of work in service composition and workflow. We're working a lot now with the Taverna system. I guess that was developed with the UK e-Science program?

>>: (Inaudible).

>> Ian Foster: Yes, it's great. We have got the cancer Biomedical Informatics Grid to adopt that as their technology of choice. Virtualization management and distributed data management. And I'll say a little bit about some of these things, not too much.

>>: (Inaudible) in Taverna, we spent a little time in the UK doing software engineering on the research tool Taverna, and when I went to see (inaudible) they complained it was unfair.

>> Ian Foster: It gave them an unfair advantage, yes.

>>: It was documented work.

>> Ian Foster: Yeah. The caBIG people, this is the National Cancer Institute's cancer Biomedical Informatics Grid, are incredibly careful and thorough in their evaluations, so I felt very pleased that they chose to use our Globus software for a lot of their work, but they also adopted Taverna after a many-month evaluation process, so that was very pleasing as well. Say a few words. This is work that Zack Onesteroff (phonetic) here has really been leading, so I could even ask, but maybe I'll talk about it.
I put up this map here of Thailand because this is some of the data from one of the Thai surveys, and if anyone has ever worked with social science data, this is very typical documentation. You've got some -- this is a very rudimentary description of the data set. These are some of the variables. They have helpful names like RR7B, or HC3, and if you go off and read up in a manual you can find out that this is a representation of the name of the household owner, I believe. So a typical problem with social science data, and I think a lot of scientific data, is that it's relatively impenetrable to anybody but the expert. So we believe that collaborative tagging tools can be used to allow individuals to start to basically tag or document the paths that they follow through data, perhaps, you know, identify the fields that they use in particular studies, maybe annotate data or procedures that can be used to compute new data products. And then if you allow these tags to be shared, then you get the collaborative tagging or social networking part of the system, where one individual working on a data set can learn from the experience of others.

>>: This is different from microformats?

>> Ian Foster: I don't have a deep understanding of microformats, but I think yes, you might use microformats to structure these tags.

>>: And you choose not to use (inaudible) and things like that?

>> Ian Foster: Right. So as you probably know, there's a big argument that rages between the believers in structured and unstructured metadata or tags. I think what we're doing is actually neutral with respect to that, and in fact we have people at the university who want to start defining ontologies that could be used to structure these tags or, alternatively, to infer ontologies from the tags that are applied. I think that's sort of an independent issue.
But tagging and social networking are of course very familiar concepts; they are often applied to photos and Web pages. Here we're trying to apply them to structured scientific data. I think it could lead us to very exciting results. Certainly the people we describe it to, the scientists, find it very, very attractive. A second thing I'll just mention addresses a problem I was talking about with, I think, Jose just before the talk: the problem that so much scientific data actually has this sort of structure. If you look at a typical functional MRI data set, this is what you might see. So Yun Zhao here struggled with this problem for quite a while in his Ph.D. thesis. So here, if you have a bit of inside knowledge, you know that these file names and directory names encode information about the structure of the data and that the real structure looks like this: it's a nice, logical, hierarchically organized collection of MRI images, with runs that consist of volumes that when aggregated describe a subject, and subjects form parts of groups and groups form parts of studies and so forth. So what we've been doing with the XML data typing and mapping project is developing tools that allow you to map smoothly between these two views and then use an XML representation of the logical view to describe your analyses, with the appropriate operations happening on the physical world as needed. So you end up with these nice -- well, there's an XML representation, but this is the logical structure that you'll use to write your programs, and under the covers we're going to be reaching in and accessing data which may be stored in file systems, in databases, in other forms.
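The mapping between the physical layout and the logical view described above can be sketched as follows. This is a minimal illustration only, not the XML data typing and mapping tools themselves: the directory convention (`study/group/subject/run/volume file`), the element names, and the function `logical_view` are all assumptions made for the example.

```python
import xml.etree.ElementTree as ET
from pathlib import PurePosixPath

# Hypothetical convention: each directory level encodes one logical level.
LEVELS = ("study", "group", "subject", "run")


def logical_view(paths):
    """Build an XML tree of the logical hierarchy implied by the file paths."""
    root = ET.Element("dataset")
    for path in sorted(paths):
        *dirs, _filename = PurePosixPath(path).parts
        node = root
        for level, name in zip(LEVELS, dirs):
            # Reuse the element for this directory if it already exists.
            child = next(
                (c for c in node.findall(level) if c.get("name") == name), None
            )
            if child is None:
                child = ET.SubElement(node, level, name=name)
            node = child
        # Leaf elements keep a pointer back to the physical file.
        ET.SubElement(node, "volume", file=path)
    return root


paths = [
    "stroke/patients/s01/run1/vol_0001.img",
    "stroke/patients/s01/run1/vol_0002.img",
    "stroke/patients/s02/run1/vol_0001.img",
]
tree = logical_view(paths)
print(ET.tostring(tree, encoding="unicode"))
```

An analysis can then be written against the `run` and `volume` elements, with the `file` attribute used only when the data actually has to be read.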
And I'll come back to that in a second, when we talk about one of the tools that we've developed to perform data analysis. So a common problem that we've encountered, and the Dryad group here I think is addressing similar concerns, is that, you know, people, who could be individuals, groups, et cetera, come in and they want to perform analyses on these logically structured, hierarchically organized data sets. For example, they may want to take one of these studies and compare it with a second study, or they may want to scale the members of one study in order to adapt them to a reference image and then see how an individual's brain function changes over time, for example following recovery from a stroke, which is one study that we're currently involved in. And that can sometimes involve literally millions of fairly complex computational tasks. One wants to be able to express that concisely, map it on to the appropriate computing resources and, because we're focused on process, feed the results back into our open analysis environment for further analysis. And so the Swift system that we developed allows us to express these sorts of analyses using a nice high-level functional programming syntax. So here, for example, we have a procedure, reorient run, which we apply to a run, and for each volume in the run we're going to apply a reorient function. Pretty straightforward stuff, but relative to what's actually happening under the covers, where people are operating on files and complex directory structures, it's quite amazingly simple. So this is the sort of thing that we're using in our own implementations of this open analysis environment.

>>: (Inaudible) magic that happens.

>> Ian Foster: Yes.

>>: Because mapping from that into what's actually taking place (inaudible) is the key thing.

>> Ian Foster: Right.

>>: So could you just say a little bit more about --

>> Ian Foster: Yeah, so the magic, much of which was implemented by Yun Zhao by the way, was fairly straightforward.
So here you're calling this reorient run function, and you're passing in a pointer to a run. Now this foreach, for each volume in that -- well, there's two runs being passed in, I guess. For each volume in that run -- well, that involves an operation to look at the underlying physical construct, which in this case is a directory, find out how many files are in the directory and return an XML structure representing the files in that directory. You're then going to invoke this program, and it turns out this program, via another little interface definition, is I think probably a Matlab program in this case, so there will be some logic to dispatch that computation to the appropriate place for the computation to occur, and this could be on a machine such as the SiCortex machine or the PADS cluster. And then eventually the results are either left there for further computation or gathered back to some archival storage to be stored. And so on and so forth. And well, yeah, the result is something like this, where each of these blue dots is a potentially complex computation. We're tracking provenance using this simple provenance data model. You know, so you've got procedures that are called, and multiple procedure calls linked together into workflows, and workflows operate on data sets, and data sets may have annotations, and so on and so forth. There's been quite a bit of work in building community consensus on issues of how to record provenance. Roger Baja (phonetic) has been at various of these meetings, the international provenance and annotation workshops, which we've been holding jointly with, again, the UK people. I'll say just a few words about multi-level scheduling. This is sort of interesting. In our view, you know, it's not enough to simply make data available for people to query; we need to allow people to compute on data.
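The steps walked through above for the reorient run example (list the files behind a run, apply a per-volume operation to each, gather the results) can be sketched in Python as follows. This is not Swift syntax or the Swift runtime: `reorient` is a stand-in that just reverses the file's bytes, a local thread pool stands in for dispatch to remote machines, and all names here are assumptions made for the illustration.

```python
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def list_volumes(run_dir: Path) -> list:
    """Inspect the physical directory behind a run and return its volume files."""
    return sorted(p for p in run_dir.iterdir() if p.is_file())


def reorient(volume: Path, out_dir: Path) -> Path:
    """Stand-in for the real per-volume program: writes the bytes reversed."""
    out = out_dir / volume.name
    out.write_bytes(volume.read_bytes()[::-1])
    return out


def reorient_run(run_dir: Path, out_dir: Path, max_workers: int = 4) -> list:
    """Apply reorient to every volume in the run, one independent task each."""
    out_dir.mkdir(parents=True, exist_ok=True)
    volumes = list_volumes(run_dir)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so outputs line up with volumes.
        return list(pool.map(lambda v: reorient(v, out_dir), volumes))


# Small demonstration on throwaway files.
with tempfile.TemporaryDirectory() as tmp:
    run = Path(tmp) / "run1"
    run.mkdir()
    for i in range(3):
        (run / f"volume_{i:04d}.img").write_bytes(b"abc")
    outputs = reorient_run(run, Path(tmp) / "out")
    output_names = [p.name for p in outputs]
    output_contents = [p.read_bytes() for p in outputs]

print(output_names)
```

Because each volume is an independent task, the same structure scales from a thread pool to the large numbers of tasks mentioned in the talk, provided something schedules them onto available resources.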
And if you want to be able to compute on data, then you'll sometimes have very large amounts of computation to perform, particularly when you're performing a comparison. So we need to be able to dynamically allocate resources for that computation to occur. And we've done a fair bit of work on building -- you can ignore this faded-out part, but this is sort of the runtime system for the Swift system. We dynamically allocate execution nodes, virtual execution nodes, on a variety of different computational platforms: Amazon EC2, Open Science Grid, TeraGrid and more recently our big local parallel computing platforms.

>>: (Inaudible).

>> Ian Foster: Yes?

>>: So in the kind of applications that you're considering, how much of the smarts in what you need to do involves managing I/O versus managing CPU?

>> Ian Foster: Of course the answer is it depends, and so I'd say in the early ones we've worked with, the smarts have focused on CPU, and increasingly now we're addressing problems where the smarts involve I/O and we need to be either caching data or moving computation to the data.

>>: Did that change happen because you sort of feel like you (inaudible) that are necessary on the computation, or do you see a trend with that sort of pushing things in the direction of placing higher (inaudible) consideration by (inaudible)?

>> Ian Foster: No, I think it's more that we feel we know how to address the computation, so now we can address a wider range of problems. This shows what happens when you run -- this is a problem where I/O is not a big concern. Each of these little dots is a task running on this 6,000-core SiCortex machine. So this is a large parameter study using a molecular dynamics code. I'll say a few words also about the issues of distributed data management.
So this is, you know, a typical use case with which we work, where data is being produced at a scientific observatory, in this case the Gravitational Wave Observatory, and I guess they are not big believers in sophisticated data management. So they simply replicate all of their data to all of the sites participating in this experiment, which of course makes the issues of locating data for analysis fairly straightforward. And that means they are replicating about a terabyte of data per day to eight sites, and using some of the software that we've developed, they are able to do this with a lag time of typically not more than about an hour from when the data is initially produced at the observatory. Now clearly data replication is only a partial solution, but it's often a useful solution for making sure that the data you need to analyze is available where you need to analyze it. So now I wanted to take a few minutes and talk about a couple of applications before we wrap up here, and show how we're applying these methods in various contexts. So the first one is a system called the Social Informatics Data Grid developed by Ben Berthtol (phonetic) and his colleagues at Chicago. He's now actually at Indiana University, but the development continues at Chicago and UIC. And as you may guess from this picture, their interest is in enabling the multimodal analysis of cognitive science data, so they've got people doing things and they're filming them and videoing them and sometimes they'll be taking various other sorts of data, and of course they then want to compare them with other people, other experiments, and also analyze the data in various ways. And historically this community has been incredibly bad at sharing data.
So what the Sigrid (phonetic) system has done -- despite the name, it's really basically an open analysis environment. It's a system into which many people can pour their experimental data in different forms, into which we can register analysis programs, mostly Swift scripts, and with which we can associate metadata. And then, using a variety of platforms, both Web-based and rich clients, we can browse data, search it, preview content, transform it from one format to another, download it, analyze it and so forth. So in a sense, in a very discipline-specific way, it's an instantiation of an open analysis environment. And this apparently has been very successful; they're starting to get a lot of data loaded up into this, and you can look at it using Web portal tools that let you search things or download things. Elan is a system from, I'm not sure where from, maybe Germany; it's a rich client tool. This is a system from the UK called the, what is it, the digital replay system, which you can use to search and then grab data from this repository and, yeah, it's a very -- I thought I might have had an example of a Swift script running. But as far as the average user is concerned, they have their nice traditional client tools, but it's reaching out over the network and accessing these very rich databases, which themselves are backed up by the power of high performance parallel computers as needed. Let me mention one or two more examples. So this is a project that we're getting underway with some startup funding from Chicago and Argonne, and we're also talking to the MacArthur Foundation about it. The goal here is to address the challenges of socioeconomic and environmental modeling. We have a very grand title, the Community Integrated Model for Economic and Resource Trajectories for Humankind or (inaudible). We have economists, environmental scientists, numerical methods people, geographers, et cetera, all involved in addressing these questions.
If you want to ask questions like, okay, there's a shortage of food in Africa, is that due to biofuels policies in the U.S.? It's easy to say yes, but it's not obvious one way or the other. If you want to be able to answer that question, you need to be able to gather data from many sources and incorporate models of agriculture, transport, (inaudible) policy, et cetera. You need to put in place state-of-the-art economics methods that address dynamics, foresight and uncertainty, that have high resolution, and pull these together in order to, well, answer that question. What we believe, you know, the community is ready for is a sort of open platform in which different people can contribute different component models and data in order to ask these questions. And I would say, you know, this is also potentially applicable to problems in areas like epidemiology and development economics. In fact, one of the early applications we're working with is in development economics. So this is a picture I showed earlier. This is actually a model showing predicted levels of entrepreneurship, i.e., non-subsistence farming, in various parts of Thailand, based on a very simple model that assumes that development is only based on your access to wealth, where wealth is determined by your sort of family structure and previous occupation. The red shows that we're predicting that entrepreneurship is occurring when it's not, so we're overpredicting in this northeast part of the country. If we then build a model that takes into account distance to major cities, then you can see we (inaudible) there; green shows that we're getting a pretty much perfect match. So this emphasizes that in order to study these economic and development issues we need access to really large amounts of geocoded data and then the computational models that can take this data and perform computation.
So this is running on a modest-scale parallel computer. It's using data that's obtained from Thailand and manually transcoded, but you know, we think we can automate a lot more of those processes. I think I've got one last application. So this is maybe of interest to some of you; it relates to text mining. So this is a typical biologist. You know, there are literally hundreds of thousands of biological articles produced every year. Clearly no one reads them all. So how do we make sense of what is still in many cases the primary means of communicating scientific data? Ideally, of course, people would encode their data in semantic Web representations, but in practice they don't; they write a paper instead because that gets them tenure or promotion. So there's a group in the Computation Institute led by Andre Rizetski (phonetic) that's been building tools to basically automate some of the knowledge extraction processes from this data. So he has a system called GeneWays, initially developed at Columbia before we hired him away from there. It takes biomedical articles; so far he's gone through about half a million at this point, I think. It looks for statements of the form, you know, this enzyme catalyzes this reaction, or this gene signals for this, and then builds up putative networks, cellular networks, which basically capture the information, or at least a subset of the information, contained in those articles. And once you've done that you can do really wonderful things. One thing you can do is combine this with information about observed phenotypes. For example, you might have one of these families that people have studied where you find out that certain people have certain diseases.
Now, if you're looking at anything other than very simple single-gene diseases, there isn't enough data to make reasonable assignments of potential cause and effect to particular genes, but if you add in this reaction network information you can start to look for genes that affect things in the same area of the network and use that. And they are getting some good results with, for example, finding potential multi-gene causes for breast cancer. You can also do other things. You can take that data, these vast collections of assertions of either belief or fact, depending on how you view them, and then combine them with other information, for example citation network information -- so this is some citation network data obtained by another guy -- and use that perhaps as another source of evidence regarding the strength of belief you may put into various statements. You know, if five people make one statement and one person makes another, contradictory statement, you might believe that the first statement is probably true and the second is not, but maybe you'll find out that the first five people all come from the same lab, in which case you put less belief in that statement. So there's lots of interesting things like that that we're working to do. >>: Is there a relationship between the algorithms that you've shown in the consecutive slides, a relationship between Andre's argument made (inaudible)? >> Ian Foster: No. Quite different. But Andre, James and I are working now to combine some of this information with this information in useful ways. Okay. So that was the -- I just wanted to show a bit more detail about three applications, all of which involve lots of complex data and complicated computation.
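The point made above about five concurring papers from a single lab counting for less than five independent ones can be illustrated with a small sketch. This is an assumed toy scoring rule (count distinct labs per statement instead of raw paper counts), not the method used by the system described in the talk; `independent_support` and the example statements are hypothetical.

```python
from collections import defaultdict


def independent_support(assertions):
    """Count distinct labs backing each statement, not raw paper counts.

    `assertions` is an iterable of (statement, lab) pairs, one per paper.
    """
    labs = defaultdict(set)
    for statement, lab in assertions:
        labs[statement].add(lab)
    return {statement: len(members) for statement, members in labs.items()}


# Five papers from one lab versus one paper from another lab.
papers = [("X activates Y", "lab A")] * 5 + [("X inhibits Y", "lab B")]
support = independent_support(papers)
```

Under this rule both statements end up with the same support of one independent source, even though the raw paper counts are five to one. Citation network data could refine the notion of independence further.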
My belief is -- our belief is that all three of these have a common need for what is this open analytics environment, something that will basically reduce the barriers to putting data in, putting programs and rules in, mixing those together, matching what matches with what matches -- matching things together -- and then taking results out in a way that allows you to make sense of what happened, and then addressing these other concerns. Of course the challenge we face is that we've got various building blocks, we can buy hardware, but the hardware is far from adequate and the building blocks we've got are certainly far from adequate, so we'll be eager to work with people here on doing something like this on a much larger scale. And you know, I'd say my belief is that lots of people are sort of sniffing around this problem of how you create community repositories for data that are more than just ifty (inaudible), and Google has announced or will announce plans to create a huge place where all the world's scientific data can be placed. There are sites like Swivel that do that on a smaller scale, but I think there is an opportunity to do something that overtakes the competition in that way and really has a big impact. Okay. So thank you very much for your attention. (Applause) >> Daron Green: Any questions for Ian? >>: Ian, maybe it was the (inaudible) slide again. Does the provenance (inaudible) -- is that tightly coupled with Swift, so does Swift naturally carry some of the metadata that allows you to track provenance? >> Ian Foster: Yes, that's the idea. And I say there's a slight hesitation there just because in an earlier version it was completely integrated. I think we've just finished reintegrating it into the latest version of the system. The data model is fairly neutral with respect to the system that you're using to perform the computation, but the idea is, and the reality is just recently, that we now do generate such assertions as computations are performed.
And that's actually got all sorts of interesting consequences, as probably Roger could also tell you. You could start, you know, to reason about what computations have been performed and which have not. You can, you know, talk about how efficiently people are using the system, which data is popular and which is not, and so forth. Yes? >>: So (inaudible) the dream of sharing all this data, and then is the way to do that through federation, or are we actually just putting this stuff so that everybody can access it and not really worrying about, you know, retaining access control or basically security privileges and stuff like that for different users? >> Ian Foster: There's quite a few questions mixed up there together. I know the Google guys, their plan is that you upload the data and it's got to be under a Creative Commons license that anyone can use. And of course there's a lot of data that has that property but also a lot of data that does not. So I believe that access control does have to be addressed and is important. Secondly, you know, I think ultimately we have to be federating because of course there'll be more than one data center in the world. At the same time, you know, an awful lot of people don't want to maintain their own data. I think, you know, this cloud notion is very compelling to many people. Certainly to many it is not, but you know, if I look around the University of Chicago and Argonne, there are literally thousands of little databases, mostly write-only essentially, you know, Excel files, floppy disks, tapes of various sorts, and I think also many people for whom the exponential growth has sort of crossed some threshold in terms of their ability to manage. And so I think centralization is a very powerful and quite attractive concept for them. >>: In some areas like the National Library of Medicine, David Lipman (phonetic) believes that it's better to have it all under his control.
I think that's fine for a subset of the literature but I don't think that's a scalable model. >> Ian Foster: Yeah. And even there, well, the literature, you're right. So even the various -- >>: (Inaudible). >> Ian Foster: Even with those databases there are other people who grab that data and perform their own curation of that data and their own annotations, perhaps automatically generated annotations regarding, you know, inferred function, or who perhaps, you know, have different versions of the same gene, and so, you know, I think there is potentially a big distributed truth maintenance problem in trying to find out what's going on. That's an area also of interest to us. >>: At the cyberinfrastructure advisory committee a couple of weeks ago Bruce Takay (phonetic) and I got them to agree that they would provide the (inaudible), they should have an open data publication policy. >> Ian Foster: Absolutely. >>: (Inaudible). And I don't know what will happen to that suggestion but it's in the minutes and it's official. >> Ian Foster: I mean, it's a great idea. I think at the same time it's -- as long as there's a significant cost associated with publication. >>: That's the problem. >> Ian Foster: Then people are going to be reluctant to perform it. So we need to find ways of streamlining the process. >>: The other thing is also the cost of curating and updating data. I mean, you need (inaudible), for example, I'm told it's over 100 full-time curators. >> Ian Foster: I do wonder how much of that, perhaps I'm naive here, but how much of that we can automate. I'm not sure. Yes? >>: I think we have to -- >>: I'm not very familiar with Swift, but to what degree has (inaudible) basically unleash the power of (inaudible)? I mean it seems like even though it looks like a lot of functions (inaudible) every function can be very (inaudible). >> Ian Foster: Yes, so it -- >>: (Inaudible).
>> Ian Foster: It's not a -- I mean, it's really parallel logic programming in another guise, but it's addressing, at least currently, something very simplistic really: allowing people to coordinate the execution of many individual sequential programs. They can be parallel programs that are coordinated. In some cases they are. But it turns out that's how many people do actually think of the work that they need to do. Certainly many problems don't fit into that framework, but especially in the -- we find in the social sciences and biological sciences a lot seem to have that property. >>: So how do I know whether my (inaudible)? >> Ian Foster: I think we'd find out in a few minutes if we sat down and talked about it. >>: I mean, is it a more general model -- >> Ian Foster: Yes, it is, because, you know, Hadoop has basically, of course, map and reduce. Here we have map operations but also more complex forms of interaction. Yeah? >>: (Inaudible). >> Ian Foster: Yes. Yes, I think -- I can't remember if we tried running it on Windows, but it's all written in Java so it would run, yeah. >>: So (inaudible) environment that you present these big part of, it's a huge super main frame has people you me has people looking at ways to extract subsets of that to warranty -- >> Ian Foster: Right. I think that's obviously got to be part of the model. You know, for example, the economics people that we're working with, they're very interested in allowing -- I mean, this is actually in a sense, you know, the current mode of working: your data is remote, and when you want to operate on it, you grab a subset. And of course that's something that people want to do, and they'll continue to want to do.
But I think to the extent we can start to push analysis into the cloud, you know, I think we will empower users, because the sheer quantities of data that people want to operate on are too large for people to bring them locally. Another large project we are involved in is called the Earth System Grid, which provides access to all of the Intergovernmental Panel on Climate Change data, which is several -- I think 100 terabytes or more. And the current figure of merit that we have there is the amount of data that people download to their workstations for analysis. And that's running at a terabyte of data a day or so. But you know, in my view ultimately the goal should be that that number goes down, not up, because clearly as climate model data sets go from tens of terabytes to petabytes in size it's no longer possible for people to -- >>: (Inaudible) analysis of data. So when (inaudible) you are talking about terabytes, you know, terabytes and more. But then as people start -- >> Ian Foster: Yes. >>: Finding facts and (inaudible) and looking and aggregating the data, that data is reduced and (inaudible) increases. >> Ian Foster: Absolutely. So given -- >>: That level to makes. (Brief talking over) >> Ian Foster: And I think ultimately you are interacting with the systems from your desktop, right, and that should be a rich interface, not a simple interface. >>: (Inaudible) commingling of economic issues, how do you pay for this issue, along with computation issues. When you say data curation, you just mean I'm going to store it somewhere and I'm going to make sure. >>: That's not what I mean by -- (Brief talking over) >>: But I think part of the point we should consider is making sure there are compute resources. >> Ian Foster: Absolutely. >>: So that you can do function shipping basically rather than data shipping, and it becomes more of a (inaudible) optimization, you know. >> Ian Foster: Because otherwise the data is just put away somewhere to die and it's never accessed.
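The contrast between function shipping and data shipping raised in the exchange above can be sketched as follows. This is a toy in-process model under stated assumptions: `DataSite`, `download`, `run`, and `items_shipped` are hypothetical names, and the counter merely stands in for network traffic; no real remote execution takes place.

```python
class DataSite:
    """Toy model of a repository with compute resources next to the data."""

    def __init__(self, values):
        self._values = list(values)
        self.items_shipped = 0  # stand-in for data moved across the network

    def download(self):
        """Data shipping: every value travels to the caller."""
        self.items_shipped += len(self._values)
        return list(self._values)

    def run(self, fn):
        """Function shipping: fn runs at the site; only its result travels."""
        result = fn(self._values)
        self.items_shipped += 1
        return result


def mean(values):
    return sum(values) / len(values)


readings = [14.0, 15.5, 16.5, 18.0]

site_a = DataSite(readings)
local_answer = mean(site_a.download())   # moves all four readings

site_b = DataSite(readings)
remote_answer = site_b.run(mean)         # moves one number
```

Both routes give the same mean, but the amount shipped grows with the data set in the first case and stays constant in the second, which is why the download figure of merit mentioned above would ideally fall as more analysis moves to the data.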
And you know, I think many people still, a bit, think in terms of data analysis as a person looking at data, but increasingly it's got to be programs looking at data. As Alex Solay (phonetic) says, the amount of data is increasing exponentially, the number of analysts is constant, more or less, so clearly each question is going to involve an exponential amount of data unless you are missing opportunities. >>: But the people aspect is actually pretty (inaudible) because the question is to what degree, you know, how much in terms of compute resources relative to the actual storage of data or (inaudible) free, you know, along with the data, versus, you know, I'm willing to pay, so you either make sure there are compute resources close to the data or I pay for you to give me tapes and I'll do it on my own and (inaudible). >> Ian Foster: We need to wrap up. But another potential opportunity is, you know, people ask the same question repeatedly, and certainly in environmental data, you know, climate data, what they do is they go through and compute some predefined set of commonly asked questions, you know, seasonal means of sea-surface temperature. So if you want a seasonal mean, that's really easy to get, but if you want something slightly different then you'd better download the whole data set. But maybe many people would like something different. >> Daron Green: Okay. I think we'd better wrap up. >> Ian Foster: Yes. Thank you. >> Daron Green: And for those of you who want to talk to him afterwards, I'm sure he's willing to stick around. So thanks very much indeed. (Applause)