DRAFT Eaters of the Lotus: Landauer’s Principle

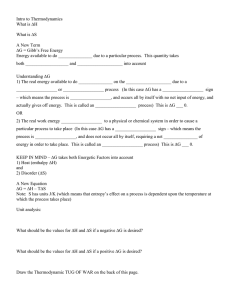
