Document 15930404


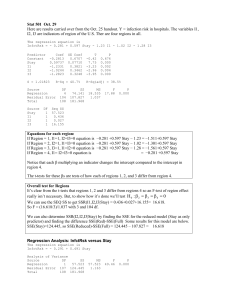

5/01/03 252y0341

Introduction

This is long, but that’s because I gave a relatively thorough explanation of everything that I did. When I worked it out in the classroom, it was much, much shorter. The easiest sections were probably 1a, 2a, 2b, 2c, 7b, 7c, and 7d in Part I. There are some very easy sections in Part I if you just follow suggestions and look at p-values and R-squared. After doing the above, I might have computed the spare parts in 3b in Part II.

Spare Parts Computation:

$\bar{x} = \frac{\sum x}{n} = \frac{2867}{11} = 260.636$

$\bar{y} = \frac{\sum y}{n} = \frac{13083}{11} = 1189.36$

$SS_x = \sum x^2 - n\bar{x}^2 = 990401 - 11(260.636)^2 = 243158.6$

$S_{xy} = \sum xy - n\bar{x}\bar{y} = 4369154 - 11(260.636)(1189.36) = 959263.6$

$SS_y = \sum y^2 - n\bar{y}^2 = 20022545 - 11(1189.36)^2 = 4462195.7$

And not recomputed them every time I needed them in Problems 4 and 5! Only then would I have tried the multiple regression.

Many of you seem to have no idea what a statistical test is. We have been doing them every day. The most common examples of this were in Part II.

Problem 1b: $\bar{p}_3 = \frac{7}{115} = .06087$ and $\bar{p}_2 = \frac{10}{103} = .09709$. Hey look! They’re different! Whoopee! And you think that you will get credit for this? Whether these two proportions come from the same population or not, chances are they will be somewhat different. You need one of the three statistical tests shown in the solution to show that they are significantly different.

Problem 3c: Many of you started with

$s_x^2 = \frac{\sum x^2 - n\bar{x}^2}{n-1} = \frac{990401 - 11(260.636)^2}{10} = \frac{243158.6}{10} = 24315.86$, $s = \sqrt{24315.86} = 155.90$

This is fine and you got some credit for knowing how to compute a sample variance, though you probably had already computed the numerator somewhere else in this exam. But then you told me that this wasn’t 200. Where was your test?

ECO252 QBA2 FINAL EXAM May 6, 2003 Name KEY Hour of Class Registered (Circle)

I. (18 points) Do all the following. Note that answers without reasons receive no credit.

A researcher wishes to use demographic information to predict sales of a large chain of nationwide sports stores.
The researcher assembles the following data for a random sample of 38 stores. Use $\alpha = .10$ in this problem. That’s why p-values above .10 mean that the null hypothesis of insignificance is not rejected.

| Row | Sales | Age | Growth | Income | HS | College |
|---|---|---|---|---|---|---|
| 1 | 1695713 | 33.1574 | 0.8299 | 26748.5 | 73.5949 | 17.8350 |
| 2 | 3403862 | 32.6667 | 0.6619 | 53063.8 | 88.4557 | 31.9439 |
| 3 | 2710353 | 35.6553 | 0.9688 | 36090.1 | 73.5362 | 18.6198 |
| 4 | 529215 | 33.0728 | 0.0821 | 32058.1 | 79.1780 | 20.6284 |
| 5 | 663687 | 35.7585 | 0.4646 | 47843.4 | 84.1838 | 35.2032 |
| 6 | 2546324 | 33.8132 | 2.1796 | 50181.0 | 93.4996 | 41.7057 |
| 7 | 2787046 | 30.9797 | 1.8048 | 30710.1 | 78.0234 | 28.0250 |
| 8 | 612696 | 30.7843 | -0.0569 | 29141.7 | 70.2949 | 15.0882 |
| 9 | 891822 | 32.3164 | -0.1577 | 25980.2 | 70.6674 | 10.9829 |
| 10 | 1124968 | 32.5312 | 0.3664 | 18730.9 | 63.7395 | 13.2458 |
| 11 | 909501 | 31.4400 | 2.2256 | 31109.2 | 76.9059 | 19.5500 |
| 12 | 2631167 | 33.1613 | 1.5158 | 35614.1 | 82.9452 | 20.8135 |
| 13 | 882973 | 31.8736 | 0.1413 | 23038.4 | 65.2127 | 16.9796 |
| 14 | 1078573 | 33.4072 | -1.0400 | 34531.7 | 73.4944 | 32.9920 |
| 15 | 844320 | 34.0470 | 1.6836 | 30350.4 | 80.2201 | 22.3185 |
| 16 | 1849119 | 28.8879 | 2.3596 | 38964.9 | 87.5973 | 24.5670 |
| 17 | 3860007 | 36.1056 | 0.7840 | 49392.8 | 85.3041 | 30.8790 |
| 18 | 826574 | 32.8083 | 0.1164 | 25595.7 | 65.5884 | 17.4545 |
| 19 | 604683 | 33.0538 | 1.1498 | 29622.6 | 80.6176 | 18.6356 |
| 20 | 1903612 | 33.4996 | 0.0606 | 31586.1 | 80.3790 | 38.3249 |
| 21 | 2356808 | 32.6809 | 1.6338 | 39674.6 | 79.8526 | 23.7780 |
| 22 | 2788572 | 28.5166 | 1.1256 | 28879.0 | 81.2371 | 16.9300 |
| 23 | 634878 | 32.8945 | 1.4884 | 24287.1 | 70.2244 | 19.1429 |
| 24 | 2371627 | 30.5024 | 4.7937 | 46711.2 | 87.1046 | 30.8843 |
| 25 | 2627838 | 30.2922 | 1.8922 | 33449.8 | 80.2057 | 26.5570 |
| 26 | 1868116 | 31.2911 | 1.8667 | 31694.5 | 75.2914 | 28.3600 |
| 27 | 2236797 | 33.0498 | 1.7896 | 25459.2 | 77.6162 | 19.2490 |
| 28 | 1318876 | 32.9348 | 0.2707 | 47047.3 | 85.1753 | 35.4994 |
| 29 | 1868098 | 31.8381 | 3.0129 | 26433.2 | 74.1792 | 18.6375 |
| 30 | 1695219 | 31.0794 | 23.4630 | 33396.7 | 81.6991 | 41.1130 |
| 31 | 2700194 | 32.1807 | 0.7041 | 26179.4 | 73.4140 | 17.8566 |
| 32 | 1156050 | 31.6944 | -0.1569 | 33454.6 | 73.7161 | 26.5426 |
| 33 | 643858 | 34.0263 | 0.7084 | 42271.5 | 78.6493 | 29.8734 |
| 34 | 2188687 | 34.7315 | 0.1353 | 46514.8 | 80.9503 | 24.5374 |
| 35 | 830352 | 30.5613 | 0.3848 | 27030.8 | 66.8057 | 14.1390 |
| 36 | 1226906 | 33.5183 | 0.7417 | 42910.1 | 77.8905 | 20.8340 |
| 37 | 566904 | 32.3952 | 0.6693 | 40561.4 | 79.3622 | 19.0309 |
| 38 | 826518 | 29.9108 | 0.1111 | 22326.0 | 58.3610 | 10.6729 |

In the data above ‘Sales’ is the total sales in the last month, ‘Age’ is the median customer age, ‘Growth’ is the population growth rate in the last ten years, ‘Income’ is median family income, ‘HS’ is percent (Don’t forget that it is in per cent) of potential customers with a high school diploma, ‘College’ is percent of potential customers with a college degree.

To start with, the researcher runs ‘sales’ against each independent variable individually with the following results. Comments that answer the question on Page 3 appear in red.

MTB > regress c1 1 c2

Regression Analysis: Sales versus Age

The regression equation is Sales = 931626 + 21783 Age

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 931626 | 2851421 | 0.33 | 0.746 |
| Age | 21783 | 87750 | 0.25 | 0.805 |

Comment: The p-value above .10 shows us that the slope is insignificant.

S = 919493 R-Sq = 0.2% R-Sq(adj) = 0.0%

Comment: Extremely low R-sq. Little explanation of Y.

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 52099324721 | 52099324721 | 0.06 | 0.805 |
| Residual Error | 36 | 3.04368E+13 | 8.45467E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

Comment: Same p-value, same conclusion.

Unusual Observations

| Obs | Age | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 36.1 | 3860007 | 1718106 | 353724 | 2141901 | 2.52R |
| 22 | 28.5 | 2788572 | 1552797 | 376045 | 1235775 | 1.47 X |

R denotes an observation with a large standardized residual. X denotes an observation whose X value gives it large influence.

MTB > regress c1 1 c3

Regression Analysis: Sales versus Growth

The regression equation is Sales = 1595571 + 26834 Growth

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 1595571 | 161301 | 9.89 | 0.000 |
| Growth | 26834 | 39601 | 0.68 | 0.502 |

Comment: The p-value above .10 shows us that the slope is insignificant.

S = 914467 R-Sq = 1.3% R-Sq(adj) = 0.0%

Comment: Extremely low R-sq. Little explanation of Y.
Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 3.83946E+11 | 3.83946E+11 | 0.46 | 0.502 |
| Residual Error | 36 | 3.01050E+13 | 8.36249E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

Comment: Same p-value, same conclusion.

Unusual Observations

| Obs | Growth | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 0.8 | 3860007 | 1616609 | 151819 | 2243398 | 2.49R |
| 30 | 23.5 | 1695219 | 2225167 | 878449 | -529948 | -2.09RX |

R denotes an observation with a large standardized residual. X denotes an observation whose X value gives it large influence.

MTB > regress c1 1 c4

Regression Analysis: Sales versus Income

The regression equation is Sales = 299877 + 39.2 Income

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 299877 | 554447 | 0.54 | 0.592 |
| Income | 39.17 | 15.71 | 2.49 | 0.017 |

Comment: The p-value below .10 shows us that the slope is significant.

S = 849860 R-Sq = 14.7% R-Sq(adj) = 12.3%

Comment: Small R-sq compared with HS regression.

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 4.48747E+12 | 4.48747E+12 | 6.21 | 0.017 |
| Residual Error | 36 | 2.60014E+13 | 7.22262E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

Unusual Observations

| Obs | Income | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 49393 | 3860007 | 2234579 | 276038 | 1625428 | 2.02R |

R denotes an observation with a large standardized residual.

MTB > regress c1 1 c5

Regression Analysis: Sales versus HS

The regression equation is Sales = - 2969741 + 59660 HS

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | -2969741 | 1370956 | -2.17 | 0.037 |
| HS | 59660 | 17669 | 3.38 | 0.002 |

Comment: The p-value below .10 shows us that the slope is significant.

S = 802004 R-Sq = 24.1% R-Sq(adj) = 21.9%

Comment: Best R-sq of the lot.

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 7.33335E+12 | 7.33335E+12 | 11.40 | 0.002 |
| Residual Error | 36 | 2.31556E+13 | 6.43210E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

Unusual Observations

| Obs | HS | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 85.3 | 3860007 | 2119509 | 192928 | 1740498 | 2.24R |
| 38 | 58.4 | 826518 | 512081 | 358068 | 314437 | 0.44 X |

R denotes an observation with a large standardized residual. X denotes an observation whose X value gives it large influence.
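The summary figures in a simple regression printout are tied together, which is why the slope test and the ANOVA give the same p-value. The sketch below is only an illustrative check using the numbers printed for the Sales versus HS regression; the variable names are assumptions made for this example and are not Minitab output.

```python
# Figures copied from the Minitab output for Sales versus HS.
ss_regression = 7.33335e12
ss_error = 2.31556e13
ss_total = 3.04889e13
df_regression, df_error = 1, 36
coef_hs, se_hs = 59660.0, 17669.0

# R-squared is the share of the total sum of squares explained by the regression.
r_squared = ss_regression / ss_total

# F is the ratio of the regression mean square to the error mean square.
f_ratio = (ss_regression / df_regression) / (ss_error / df_error)

# The t ratio for the slope is the coefficient divided by its standard error.
t_ratio = coef_hs / se_hs

print(f"R-sq = {r_squared:.1%}")
print(f"F = {f_ratio:.2f}")
print(f"t = {t_ratio:.2f}, t squared = {t_ratio ** 2:.2f}")
```

With one independent variable the square of the slope's t ratio reproduces F up to rounding, so either test can be cited for the slope.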
MTB > regress c1 1 c6

Regression Analysis: Sales versus College

The regression equation is Sales = 789847 + 35854 College

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 789847 | 439508 | 1.80 | 0.081 |
| College | 35854 | 17582 | 2.04 | 0.049 |

S = 871330 R-Sq = 10.4% R-Sq(adj) = 7.9%

Comment: Significant if alpha is .10. Small R-sq compared with HS regression.

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 3.15714E+12 | 3.15714E+12 | 4.16 | 0.049 |
| Residual Error | 36 | 2.73318E+13 | 7.59216E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

Unusual Observations

| Obs | College | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 6 | 41.7 | 2546324 | 2285170 | 347197 | 261154 | 0.33 X |
| 17 | 30.9 | 3860007 | 1896988 | 189865 | 1963020 | 2.31R |

R denotes an observation with a large standardized residual. X denotes an observation whose X value gives it large influence.

1. On the basis of the material above the researcher decides that ‘HS’ is the best single predictor of sales. Please explain why. Consider the values of $R^2$ and the significance tests on the slope of the equation. According to the equation showing the response of sales to HS, how much will sales rise if there is a 1% increase in people with a high school diploma? The average store has sales of $1638487. Relative to this, what percent increase in sales would be caused by a 1 (per cent) increase in ‘HS’? (4)

Solution: We could rule out some of these because of pathetically low R-sq or insignificant coefficients. The HS regression has the highest R-sq and a significant coefficient for the independent variable. Sales go up by 59660 for each rise of 1 in HS. Relative to average sales this is 59660/1638487, or about 3.6%.

2. The researcher tries to improve the prediction by adding another variable. Since there were 4 other variables than ‘HS,’ there are four regressions below. Do any of them represent an improvement on ‘HS’ alone? Why? Look at the significance tests on the coefficients of the new variables and the adjusted $R^2$. In order to put this in perspective, the average values of the independent variables are shown below.
| Age | Growth | Income | College | HS |
|---|---|---|---|---|
| 32.450 | 1.599 | 34175 | 23.67 | 77.24 |

Take the best of the four regressions below and give the value of sales that would be predicted for a store with average value of the independent variables and explain by what percent sales would rise if ‘HS’ went up by 1. How much does this differ from the prediction using ‘HS’ alone? (4)

MTB > regress c1 2 c5 c2

Regression Analysis: Sales versus HS, Age

The regression equation is Sales = - 2126081 + 60953 HS - 29076 Age

| Predictor | Coef | SE Coef | T | P | Comment |
|---|---|---|---|---|---|
| Constant | -2126081 | 2678378 | -0.79 | 0.433 | |
| HS | 60953 | 18226 | 3.34 | 0.002 | Significant, way below 10 per cent. |
| Age | -29076 | 78952 | -0.37 | 0.715 | Insignificant. |

S = 811809 R-Sq = 24.3% R-Sq(adj) = 20.0%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 7.42273E+12 | 3.71137E+12 | 5.63 | 0.008 |
| Residual Error | 35 | 2.30662E+13 | 6.59034E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

| Source | DF | Seq SS |
|---|---|---|
| HS | 1 | 7.33335E+12 |
| Age | 1 | 89382656452 |

Unusual Observations

| Obs | HS | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 85.3 | 3860007 | 2023658 | 325389 | 1836350 | 2.47R |

R denotes an observation with a large standardized residual.

MTB > regress c1 2 c5 c3

Regression Analysis: Sales versus HS, Growth

The regression equation is Sales = - 2959336 + 59494 HS + 1506 Growth

| Predictor | Coef | SE Coef | T | P | Comment |
|---|---|---|---|---|---|
| Constant | -2959336 | 1412551 | -2.10 | 0.043 | |
| HS | 59494 | 18355 | 3.24 | 0.003 | Significant. |
| Growth | 1506 | 36079 | 0.04 | 0.967 | Insignificant! |

S = 813360 R-Sq = 24.1% R-Sq(adj) = 19.7%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 7.33450E+12 | 3.66725E+12 | 5.54 | 0.008 |
| Residual Error | 35 | 2.31544E+13 | 6.61555E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

| Source | DF | Seq SS |
|---|---|---|
| HS | 1 | 7.33335E+12 |
| Growth | 1 | 1152089260 |

Unusual Observations

| Obs | HS | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 85.3 | 3860007 | 2116944 | 205088 | 1743063 | 2.21R |
| 30 | 81.7 | 1695219 | 1936614 | 786380 | -241395 | -1.16 X |

R denotes an observation with a large standardized residual. X denotes an observation whose X value gives it large influence.
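Adjusted R-squared is what lets these two-variable regressions be compared fairly with ‘HS’ alone, since plain R-squared can never fall when a variable is added. The sketch below assumes the standard adjustment $1 - (1 - R^2)\frac{n-1}{n-k-1}$ and uses sums of squares copied from the printouts; the function name is hypothetical and chosen only for this illustration.

```python
def adjusted_r_squared(ss_regression, ss_total, n, k):
    """Adjusted R-squared for a regression with k independent variables and n observations."""
    r_squared = ss_regression / ss_total
    return 1 - (1 - r_squared) * (n - 1) / (n - k - 1)

SS_TOTAL = 3.04889e13   # total sum of squares, the same in every printout
N = 38                  # number of stores

# Regression sums of squares copied from the ANOVA tables.
hs_alone = adjusted_r_squared(7.33335e12, SS_TOTAL, N, k=1)
hs_age = adjusted_r_squared(7.42273e12, SS_TOTAL, N, k=2)
hs_growth = adjusted_r_squared(7.33450e12, SS_TOTAL, N, k=2)

for label, value in [("HS alone", hs_alone), ("HS, Age", hs_age), ("HS, Growth", hs_growth)]:
    print(f"{label}: R-Sq(adj) = {value:.1%}")
```

Each added variable raises the regression sum of squares slightly, but the loss of a degree of freedom outweighs the gain, so the adjusted figure drops below the one for ‘HS’ alone.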
MTB > regress c1 2 c5 c4

Regression Analysis: Sales versus HS, Income

The regression equation is Sales = - 3089379 + 62540 HS - 3.0 Income

| Predictor | Coef | SE Coef | T | P | Comment |
|---|---|---|---|---|---|
| Constant | -3089379 | 1715239 | -1.80 | 0.080 | |
| HS | 62540 | 30098 | 2.08 | 0.045 | |
| Income | -3.01 | 25.26 | -0.12 | 0.906 | Insignificant. |

S = 813216 R-Sq = 24.1% R-Sq(adj) = 19.7%

Comment: This is the highest R-sq. But if Sales go up by 62540 for a rise of 1 in HS, the increase of 3.8% isn’t much of a change from our previous result.

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 7.34273E+12 | 3.67136E+12 | 5.55 | 0.008 |
| Residual Error | 35 | 2.31462E+13 | 6.61320E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

| Source | DF | Seq SS |
|---|---|---|
| HS | 1 | 7.33335E+12 |
| Income | 1 | 9375516635 |

Unusual Observations

| Obs | HS | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 85.3 | 3860007 | 2096954 | 272313 | 1763053 | 2.30R |

R denotes an observation with a large standardized residual.

MTB > regress c1 2 c5 c6

Regression Analysis: Sales versus HS, College

The regression equation is Sales = - 3193739 + 64448 HS - 6161 College

| Predictor | Coef | SE Coef | T | P | Comment |
|---|---|---|---|---|---|
| Constant | -3193739 | 1627759 | -1.96 | 0.058 | |
| HS | 64448 | 25486 | 2.53 | 0.016 | |
| College | -6161 | 23343 | -0.26 | 0.793 | Insignificant. |

S = 812572 R-Sq = 24.2% R-Sq(adj) = 19.9%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 7.37935E+12 | 3.68967E+12 | 5.59 | 0.008 |
| Residual Error | 35 | 2.31096E+13 | 6.60273E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

| Source | DF | Seq SS |
|---|---|---|
| HS | 1 | 7.33335E+12 |
| College | 1 | 45998746314 |

Unusual Observations

| Obs | HS | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 85.3 | 3860007 | 2113692 | 196709 | 1746316 | 2.22R |

R denotes an observation with a large standardized residual.

3. In desperation the researcher tries to add all the variables at once.

a. What does the ANOVA show? (2)

b. Do any of the coefficients have a wrong sign? (Remember there is nothing wrong with a negative coefficient unless you can give a reason why it shouldn’t be negative) (1)

c. Which of the coefficients are significant?
(2) Lots of you answered this, few of you gave reasons, and many of you seem to have thought that every positive coefficient was significant.

d. Do an F test to show if addition of all the variables improved the regression. To do this drop a few zeros. Take the Regression Sum of squares in the regression with ‘HS’ alone as 7.333, the regression sum of squares after adding all the new variables as 7.454 and the error sum of squares as 23.303. (This should have read 23.034 but it wouldn’t change anything.) I’m getting this from the ANOVA table below and the sequential SS table below it by dividing all the SS’s by $10^{12}$, since only their relative size matters. (3)

e. To put the results in perspective try again to predict the sales that a store with the mean values of the independent variables would have and what percent increase in sales would come from an increase of 1 in ‘HS.’ How does this compare with our prediction when we used ‘HS’ alone?

f. The column marked VIF (variance inflation factor) is a test for (multi)collinearity. The rule of thumb is that if any of these exceeds 5, we have a multicollinearity problem. None does. What is multicollinearity and why am I worried about it? (2)

Solution:

a) The p-value for the ANOVA is .095, indicating that we can reject the null hypothesis of no explanatory power for the regression at 10%, but not 5%.

b) The negative coefficient on College doesn’t look very reasonable. Do we really think that being a college graduate cuts your demand for athletic equipment? It seems that many of you thought that the coefficient of age could not be negative because age can’t be negative. If you had been thinking you would have realized that areas with older residents might buy less sports equipment.

c) HS is significant at the 10% level because its p-value is below 10%.
d) ANOVA

| Source | SS | DF | MS | F |
|---|---|---|---|---|
| HS | 7.333 | 1 | 7.333 | 10.19 |
| Others | 0.121 | 4 | 0.03025 | 0.04 |
| Error | 23.034 | 32 | 0.7198 | |
| Total | 30.488 | 37 | | |

The test of the added variables is the ‘Others’ line, with 4 and 32 DF. If you check the F table, the 5% value for 4 and 32 DF is about 2.67 (for 1 and 32 DF the 5% value is 4.15 and the 1% value is 7.50). Since 0.04 is far below any of these, we cannot reject the null hypothesis of no improvement.

e) The regression says Sales = - 2270706 + 62735 HS - 27384 Age - 5702 College + 2.4 Income + 2084 Growth

= -2270706 + 62735(77.24) - 27384(32.450) - 5702(23.67) + 2.4(34175) + 2084(1.599)

= -2270706 + 4845651 - 888611 - 134966 + 82020 + 3332 = 1636720.

A rise of 1 in HS raises sales by 62735, which is still about 3.8%.

f) Multicollinearity is close correlation between the independent variables and makes accurate values of the coefficients hard to get.

MTB > regress c1 5 c5 c2 c6 c4 c3;
SUBC> vif.

Regression Analysis: Sales versus HS, Age, College, Income, Growth

The regression equation is Sales = - 2270706 + 62735 HS - 27384 Age - 5702 College + 2.4 Income + 2084 Growth

| Predictor | Coef | SE Coef | T | P | VIF |
|---|---|---|---|---|---|
| Constant | -2270706 | 3696533 | -0.61 | 0.543 | |
| HS | 62735 | 35090 | 1.79 | 0.083 | 3.5 |
| Age | -27384 | 93046 | -0.29 | 0.770 | 1.3 |
| College | -5702 | 28359 | -0.20 | 0.842 | 2.7 |
| Income | 2.45 | 30.53 | 0.08 | 0.937 | 3.8 |
| Growth | 2084 | 44098 | 0.05 | 0.963 | 1.4 |

S = 848433 R-Sq = 24.4% R-Sq(adj) = 12.6%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 5 | 7.45407E+12 | 1.49081E+12 | 2.07 | 0.095 |
| Residual Error | 32 | 2.30348E+13 | 7.19839E+11 | | |
| Total | 37 | 3.04889E+13 | | | |

| Source | DF | Seq SS |
|---|---|---|
| HS | 1 | 7.33335E+12 |
| Age | 1 | 89382656452 |
| College | 1 | 26200610077 |
| Income | 1 | 3524785887 |
| Growth | 1 | 1608397623 |

Unusual Observations

| Obs | HS | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 17 | 85.3 | 3860007 | 2038662 | 360453 | 1821346 | 2.37R |
| 30 | 81.7 | 1695219 | 1899886 | 826437 | -204667 | -1.07 X |

R denotes an observation with a large standardized residual. X denotes an observation whose X value gives it large influence.

II. Do at least 4 of the following 7 Problems (at least 15 each) (or do sections adding to at least 60 points. Anything extra you do helps, and grades wrap around). Show your work! State $H_0$ and $H_1$ where applicable.
Use a significance level of 5% unless noted otherwise. Do not answer questions without citing appropriate statistical tests. Remember: 1) Data must be in order for Lilliefors. Make sure that you do not cross up x and y in regressions.

1. (Berenson et. al. 1220) A firm believes that less than 15% of people remember their ads. A survey is taken to see what recall occurs with the following results (In these problems calculating proportions won’t help you unless you do a statistical test):

| | Mag | TV | Radio | Total |
|---|---|---|---|---|
| Remembered | 25 | 10 | 7 | 42 |
| Forgot | 73 | 93 | 108 | 274 |
| Total | 98 | 103 | 115 | 316 |

a. Test the hypothesis that the recall rate is less than 15% by using proportions calculated from the ‘Total’ column. Find a p-value for this result. (5)

b. Test the hypothesis that the proportion recalling was lower for Radio than TV. (4)

c. Test to see if there is a significant difference in the proportion that remembered according to the medium. (6)

d. The Marascuilo procedure says that if (i) equality is rejected in c) and (ii) $|\bar{p}_2 - \bar{p}_3| > \sqrt{\chi^2}\, s_{\Delta p}$, where the chi-squared is what you used in c) and the standard deviation is what you would use in a confidence interval solution to b), you can say that you have a significant difference between TV and Radio. Try it! (5)

Solution: I have never seen so many people lose their common sense as on this problem. Many of you seemed to think that the answer to c) was the answer to a) in spite of the fact that .15 appeared nowhere in your answer. An A student tried at one point to compare the total fraction that forgot with the total fraction that remembered, even though the method she used was intended to compare fractions of two different groups and the two fractions she compared could only have been the same if they were both .5, since they had to add to one.

a) From the formula table.
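| Interval for | Confidence Interval | Hypotheses | Test Ratio | Critical Value |
|---|---|---|---|---|
| Proportion | $p = \bar{p} \pm z_{\alpha/2}\, s_p$, $s_p = \sqrt{\bar{p}\bar{q}/n}$, $\bar{q} = 1 - \bar{p}$ | $H_0: p = p_0$, $H_1: p \ne p_0$ | $z = \frac{\bar{p} - p_0}{\sigma_p}$ | $\bar{p}_{cv} = p_0 \pm z_{\alpha/2}\, \sigma_p$, $\sigma_p = \sqrt{p_0 q_0/n}$, $q_0 = 1 - p_0$ |

$H_1: p < .15$. It is an alternate hypothesis because it does not contain an equality. The null hypothesis is thus $H_0: p \ge .15$. Initially, assume $\alpha = .05$ and note that $n = 316$, $x = 42$, so that $\bar{p} = \frac{x}{n} = \frac{42}{316} = .1329$ and $\sigma_p = \sqrt{\frac{p_0 q_0}{n}} = \sqrt{\frac{.15(.85)}{316}} = \sqrt{.0004035} = .02009$. This is a one-sided test and $z_\alpha = z_{.05} = 1.645$. This problem can be done in one of three ways.

(i) The test ratio is $z = \frac{\bar{p} - p_0}{\sigma_p} = \frac{.1329 - .15}{.02009} = -0.851$. Make a diagram of a normal curve with a mean at zero and a reject zone below $-z_\alpha = -z_{.05} = -1.645$. Since $z = -0.851$ is not in the 'reject' zone, do not reject $H_0$. We cannot say that the proportion who recall is significantly below 15%. We can use this to get a p-value. Since our alternate hypothesis is $H_1: p < .15$, we want a down-side value, i.e. $P(\bar{p} \le .1329) = P\left(z \le \frac{.1329 - .15}{.02009}\right) = P(z \le -0.85) = P(z \le 0) - P(-0.85 \le z \le 0) = .5 - .3023 = .1977$. Since the p-value is above the significance level, do not reject $H_0$. Make a diagram. Draw a Normal curve with a mean at .15 and represent the p-value by the area below .1329, or draw a Normal curve with a mean at zero and represent the p-value by the area below -.85.

(ii) Since the alternative hypothesis says $H_1: p < .15$ we need a critical value that is below .15. We use $\bar{p}_{cv} = p_0 - z_\alpha \sigma_p = .15 - 1.645(.02009) = .1170$. Make a diagram of a normal curve with a mean at .15 and a ‘reject’ zone below .1170. Since $\bar{p} = .1329$ is not in the 'reject' zone, do not reject $H_0$. We cannot say that the proportion is significantly below 15%.

(iii) To do a confidence interval we need $s_p = \sqrt{\frac{\bar{p}\bar{q}}{n}}$. To make the 2-sided confidence interval, $p = \bar{p} \pm z_{\alpha/2}\, s_p$, into a 1-sided interval, go in the same direction as $H_1: p < .15$. We get $s_p = \sqrt{\frac{.1329(.8671)}{316}} = \sqrt{.0003647} = .01910$. Thus the interval is $p \le \bar{p} + z_\alpha s_p = .1329 + 1.645(.01910) = .1643$. $p \le .1643$ does not contradict the null hypothesis.

b) We are comparing $\bar{p}_3 = \frac{7}{115} = .06087$, $n_3 = 115$ and $\bar{p}_2 = \frac{10}{103} = .09709$, $n_2 = 103$.

| Interval for | Confidence Interval | Hypotheses | Test Ratio | Critical Value |
|---|---|---|---|---|
| Difference between proportions, $\bar{q} = 1 - \bar{p}$ | $\Delta p = \Delta\bar{p} \pm z_{\alpha/2}\, s_{\Delta p}$, $\Delta\bar{p} = \bar{p}_1 - \bar{p}_2$, $s_{\Delta p} = \sqrt{\frac{\bar{p}_1\bar{q}_1}{n_1} + \frac{\bar{p}_2\bar{q}_2}{n_2}}$ | $H_0: \Delta p = \Delta p_0$, $H_1: \Delta p \ne \Delta p_0$, $\Delta p_0 = p_{01} - p_{02}$ or $\Delta p_0 = 0$ | $z = \frac{\Delta\bar{p} - \Delta p_0}{\sigma_{\Delta p}}$. If $\Delta p_0 = 0$, $\sigma_{\Delta p} = \sqrt{\bar{p}_0\bar{q}_0\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}$ with $\bar{p}_0 = \frac{n_1\bar{p}_1 + n_2\bar{p}_2}{n_1 + n_2}$. If $\Delta p_0 \ne 0$, $\sigma_{\Delta p} = \sqrt{\frac{p_{01}q_{01}}{n_1} + \frac{p_{02}q_{02}}{n_2}}$, or use $s_{\Delta p}$ | $\Delta\bar{p}_{cv} = \Delta p_0 \pm z_{\alpha/2}\, \sigma_{\Delta p}$ |

$s_{\Delta p} = \sqrt{\frac{\bar{p}_3\bar{q}_3}{n_3} + \frac{\bar{p}_2\bar{q}_2}{n_2}} = \sqrt{\frac{.06087(.93913)}{115} + \frac{.09709(.90291)}{103}} = \sqrt{.00049709 + .00085108} = \sqrt{.00134808} = .03672$

$\Delta\bar{p} = \bar{p}_3 - \bar{p}_2 = -.03622$, $\bar{p}_0 = \frac{n_3\bar{p}_3 + n_2\bar{p}_2}{n_3 + n_2} = \frac{115(.06087) + 103(.09709)}{115 + 103} = .07798$ (Yes, $\bar{p}_0 = \frac{7 + 10}{115 + 103} = .07798$, but why waste your time?) $\alpha = .05$, $z_\alpha = z_{.05} = 1.645$. Note that $\bar{q} = 1 - \bar{p}$ and that $q$ and $p$ are between 0 and 1.

$\sigma_{\Delta p} = \sqrt{\bar{p}_0\bar{q}_0\left(\frac{1}{n_3} + \frac{1}{n_2}\right)} = \sqrt{.07798(.92202)\left(\frac{1}{115} + \frac{1}{103}\right)} = \sqrt{.0013233} = .03638$

Our hypotheses are $H_0: p_3 \ge p_2$, $H_1: p_3 < p_2$; or $H_0: p_3 - p_2 \ge 0$, $H_1: p_3 - p_2 < 0$; or $H_0: \Delta p \ge 0$, $H_1: \Delta p < 0$. There are three ways to do this problem. Only one is needed.

(i) Test Ratio: $z = \frac{\Delta\bar{p} - \Delta p_0}{\sigma_{\Delta p}} = \frac{-.03622 - 0}{.03638} = -0.9956$. Make a diagram showing a 'reject' region below -1.645. Since -0.9956 is above this value, do not reject $H_0$.

(ii) Critical Value: $\Delta\bar{p}_{cv} = \Delta p_0 \pm z_{\alpha/2}\, \sigma_{\Delta p}$ becomes $\Delta\bar{p}_{cv} = \Delta p_0 - z_\alpha \sigma_{\Delta p} = 0 - 1.645(.03638) = -.05985$. Make a diagram showing a 'reject' region below -.05985. Since $\Delta\bar{p} = -.03622$ is not below this value, do not reject $H_0$.

(iii) Confidence Interval: $\Delta p = \Delta\bar{p} \pm z_{\alpha/2}\, s_{\Delta p}$ becomes $\Delta p \le \Delta\bar{p} + z_\alpha s_{\Delta p} = -.03622 + 1.645(.03672) = 0.02418$. Since $\Delta p \le .02418$ does not contradict $\Delta p \ge 0$, do not reject $H_0$.

c) $DF = (r-1)(c-1) = 1(2) = 2$, $\chi^2_{.05}(2) = 5.9915$. $H_0$: Homogeneous (or $p_1 = p_2 = p_3$). $H_1$: Not homogeneous (not all $p$s are equal).

| O | M | T | R | Total | $p_r$ |
|---|---|---|---|---|---|
| R | 25 | 10 | 7 | 42 | .13291 |
| F | 73 | 93 | 108 | 274 | .86709 |
| Total | 98 | 103 | 115 | 316 | 1.0000 |

| E | 1 | 2 | 3 | Total | $p_r$ |
|---|---|---|---|---|---|
| R | 13.0252 | 13.6897 | 15.2846 | 42.000 | .13291 |
| F | 84.9748 | 89.3103 | 99.7154 | 274.000 | .86709 |
| Total | 98.0000 | 103.000 | 115.000 | 316.000 | 1.00000 |

The proportions in rows, $p_r$, are used with column totals to get the items in $E$. Note that row and column sums in $E$ are the same as in $O$. (Note that $\chi^2 = 19.0224 = 335.0229 - 316$ is computed two different ways here - only one way is needed.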
Too many of you wasted your time computing both of the last two columns, with E so large, you only needed the O and E columns and the last column)

| Row | $O$ | $E$ | $E - O$ | $(E - O)^2$ | $\frac{(O - E)^2}{E}$ | $\frac{O^2}{E}$ |
|---|---|---|---|---|---|---|
| 1 | 25 | 13.0252 | -11.9748 | 143.396 | 11.0092 | 47.984 |
| 2 | 73 | 84.9748 | 11.9748 | 143.396 | 1.6875 | 62.713 |
| 3 | 10 | 13.6897 | 3.6897 | 13.614 | 0.9945 | 7.305 |
| 4 | 93 | 89.3103 | -3.6897 | 13.614 | 0.1524 | 96.842 |
| 5 | 7 | 15.2846 | 8.2846 | 68.635 | 4.4905 | 3.206 |
| 6 | 108 | 99.7154 | -8.2846 | 68.635 | 0.6883 | 116.973 |
| Total | 316 | 316.000 | 0.0000 | | 19.0224 | 335.022 |

Since the $\chi^2$ computed here is greater than the $\chi^2$ from the table, we reject $H_0$.

d) The Marascuilo procedure says that if (i) equality is rejected in c) and (ii) $|\bar{p}_2 - \bar{p}_3| > \sqrt{\chi^2}\, s_{\Delta p}$, where the chi-squared is what you used in c) and the standard deviation is what you would use in a confidence interval solution to b), you can say that you have a significant difference between TV and Radio.

OK - We already have $DF = (r-1)(c-1) = 1(2) = 2$, $\chi^2_{.05}(2) = 5.9915$,

$s_{\Delta p} = \sqrt{\frac{\bar{p}_3\bar{q}_3}{n_3} + \frac{\bar{p}_2\bar{q}_2}{n_2}} = \sqrt{\frac{.06087(.93913)}{115} + \frac{.09709(.90291)}{103}} = \sqrt{.00049709 + .00085108} = \sqrt{.00134808} = .03672$

and $\Delta\bar{p} = \bar{p}_3 - \bar{p}_2 = -.03622$. I guess we really should use $\sqrt{\chi^2_{.025}(2)} = \sqrt{7.3778} = 2.7162$ and $\sqrt{\chi^2}\, s_{\Delta p} = 2.7162(.03672) = .09974$. Since $|\bar{p}_2 - \bar{p}_3|$ is obviously smaller than this, we do not have a significant difference in these 2 proportions.

2. (Berenson et. al. 1142) A manager is inspecting a new type of battery. These are subjected to 4 different pressure levels and their time to failure is recorded. The manager knows from experience that such data is not normally distributed. Ranks are provided.

PRESSURE

| Use | low | rank | normal | rank | high | rank | whee! | rank |
|---|---|---|---|---|---|---|---|---|
| 1 | 8.0 | 11 | 7.6 | 8 | 6.0 | 4 | 5.1 | 1 |
| 2 | 8.1 | 12 | 8.2 | 13 | 6.3 | 5 | 5.6 | 2 |
| 3 | 9.2 | 15 | 9.8 | 17 | 7.1 | 7 | 5.9 | 3 |
| 4 | 9.4 | 16 | 10.9 | 18 | 7.7 | 9 | 6.7 | 6 |
| 5 | 11.7 | 19 | 12.3 | 20 | 8.9 | 14 | 7.8 | 10 |

a. At the 5% level analyze the data on the assumption that each column represents a random sample. Do the column medians differ? (5)

b. Rerank the data appropriately and repeat a) on the assumption that the data is non-normal but cross classified by use. (5)

c.
This time I want to compare high pressure (H) against low - moderate pressure (L). I will write out the numbers 1-20 and label them according to pressure.

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| H | H | H | H | H | H | H | L | H | H | L | L | L | H | L | L | L | L | L | L |

Do a runs test to see if the H’s and L’s appear randomly. This is called a Wald-Wolfowitz test for the equality of means in two nonnormal samples. Null hypothesis is that the sequence is random and the means are equal. What is your conclusion? (5)

Solution:

a) This is a Kruskal-Wallis Test, equivalent to one-way ANOVA when the underlying distribution is non-normal. $H_0$: Columns come from same distribution or medians equal. I am basically copying the outline. There are $n = 20$ data items, so rank them from 1 to 20. Let $n_i$ be the number of items in column $i$ and $SR_i$ be the rank sum of column $i$.

| Use | low | rank | normal | rank | high | rank | whee! | rank |
|---|---|---|---|---|---|---|---|---|
| 1 | 8.0 | 11 | 7.6 | 8 | 6.0 | 4 | 5.1 | 1 |
| 2 | 8.1 | 12 | 8.2 | 13 | 6.3 | 5 | 5.6 | 2 |
| 3 | 9.2 | 15 | 9.8 | 17 | 7.1 | 7 | 5.9 | 3 |
| 4 | 9.4 | 16 | 10.9 | 18 | 7.7 | 9 | 6.7 | 6 |
| 5 | 11.7 | 19 | 12.3 | 20 | 8.9 | 14 | 7.8 | 10 |
| $SR_i$ | | 73 | | 76 | | 39 | | 22 |

To check the ranking, note that the sum of the four rank sums is 73.0 + 76.0 + 39.0 + 22.0 = 210.0, and that the sum of the first $n$ numbers is $\frac{n(n+1)}{2} = \frac{20(21)}{2} = 210$.

Now, compute the Kruskal-Wallis statistic

$H = \frac{12}{n(n+1)}\left[\sum_i \frac{SR_i^2}{n_i}\right] - 3(n+1) = \frac{12}{20(21)}\left[\frac{73.0^2}{5} + \frac{76.0^2}{5} + \frac{39^2}{5} + \frac{22^2}{5}\right] - 3(21) = \frac{12}{420}\cdot\frac{1}{5}(5329 + 5776 + 1521 + 484) - 63 = 11.9143$

If the size of the problem is larger than those shown in Table 9, use the $\chi^2$ distribution, with $df = m - 1 = 3$, where $m$ is the number of columns. Compare $H$ with $\chi^2_{.05}(3) = 7.81475$. Since $H$ is larger than $\chi^2_{.05}$, reject the null hypothesis.

b) This is a Friedman test, equivalent to two-way ANOVA with one observation per cell when the underlying distribution is non-normal. $H_0$: Columns come from same distribution or medians equal. Note that the only difference between this and the Kruskal-Wallis test is that the data is cross-classified in the Friedman test.
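The difference between the two rankings is easy to see side by side: Kruskal-Wallis ranks all 20 values together, while Friedman ranks the 4 values within each row. The sketch below is only an illustrative check, not part of the key; the variable names are assumptions made here, and the two statistics follow the formulas quoted in this solution. It relies on the fact that this data set has no ties.

```python
# Battery failure times by pressure level (columns) and use (rows), from the problem.
data = {
    "low":    [8.0, 8.1, 9.2, 9.4, 11.7],
    "normal": [7.6, 8.2, 9.8, 10.9, 12.3],
    "high":   [6.0, 6.3, 7.1, 7.7, 8.9],
    "whee!":  [5.1, 5.6, 5.9, 6.7, 7.8],
}
names = list(data)
r = 5            # rows (uses)
c = len(names)   # columns (pressure levels)
n = r * c        # total number of observations

# Kruskal-Wallis: rank all n values together (no ties in this data).
overall = sorted(v for col in data.values() for v in col)
overall_rank = {v: i + 1 for i, v in enumerate(overall)}
kw_rank_sums = [sum(overall_rank[v] for v in data[name]) for name in names]
H = 12 / (n * (n + 1)) * sum(s ** 2 / r for s in kw_rank_sums) - 3 * (n + 1)

# Friedman: rank the c values within each row separately.
fr_rank_sums = [0] * c
for i in range(r):
    row = [data[name][i] for name in names]
    order = sorted(row)
    for j, v in enumerate(row):
        fr_rank_sums[j] += order.index(v) + 1
chi2_F = 12 / (r * c * (c + 1)) * sum(s ** 2 for s in fr_rank_sums) - 3 * r * (c + 1)

print("Kruskal-Wallis rank sums:", kw_rank_sums, "H =", round(H, 4))
print("Friedman rank sums:", fr_rank_sums, "chi-squared =", round(chi2_F, 2))
```

Both statistics are compared with the same chi-squared value with 3 degrees of freedom, so only the ranking step changes between the two tests.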
| Use | low | rank | normal | rank | high | rank | whee! | rank |
|---|---|---|---|---|---|---|---|---|
| 1 | 8.0 | 4 | 7.6 | 3 | 6.0 | 2 | 5.1 | 1 |
| 2 | 8.1 | 3 | 8.2 | 4 | 6.3 | 2 | 5.6 | 1 |
| 3 | 9.2 | 3 | 9.8 | 4 | 7.1 | 2 | 5.9 | 1 |
| 4 | 9.4 | 3 | 10.9 | 4 | 7.7 | 2 | 6.7 | 1 |
| 5 | 11.7 | 3 | 12.3 | 4 | 8.9 | 2 | 7.8 | 1 |
| $SR_i$ | | 16 | | 19 | | 10 | | 5 |

Assume that $\alpha = .05$. In the data, the pressures are represented by $c = 4$ columns, and the uses by $r = 5$ rows. In each row the numbers are ranked from 1 to $c = 4$. For each column, compute $SR_i$, the rank sum of column $i$. To check the ranking, note that the sum of the four rank sums is 16 + 19 + 10 + 5 = 50, and that the sum of the $c$ numbers in a row is $\frac{c(c+1)}{2}$. However, there are $r$ rows, so we must multiply the expression by $r$. So we have $\sum SR_i = \frac{rc(c+1)}{2} = \frac{5(4)(5)}{2} = 50$.

Now compute the Friedman statistic

$\chi^2_F = \frac{12}{rc(c+1)}\left[\sum_i SR_i^2\right] - 3r(c+1) = \frac{12}{5(4)(5)}\left[16^2 + 19^2 + 10^2 + 5^2\right] - 3(5)(5) = \frac{12}{100}(256 + 361 + 100 + 25) - 75 = 14.04$

Since the size of the problem is larger than those shown in Table 10, use the $\chi^2$ distribution, with $df = c - 1$, where $c$ is the number of columns. Again, $\chi^2_{.05}(3) = 7.81475$. Since our statistic is larger than $\chi^2_{.05}$, reject the null hypothesis.

c) This time I want to compare high pressure (H) against low - moderate pressure (L). I will write out the numbers 1-20 and label them according to pressure.

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| H | H | H | H | H | H | H | L | H | H | L | L | L | H | L | L | L | L | L | L |

If we do a runs test, $n = 20$, $r = 6$, $n_1 = 10$ and $n_2 = 10$. Can you see why $r = 6$? The runs are HHHHHHH, L, HH, LLL, H and LLLLLL. The runs test table gives us critical values of 6 and 16. The directions on the table say to reject randomness if $r \le 6$ or $r \ge 16$. Since the sequence is not random, the means are not equal.

3.
A researcher studies the relationship of numbers of subsidiaries and numbers of parent companies in 11 metropolitan areas and finds the following:

| Area | parents $x$ | subsidiaries $y$ | $x^2$ | $xy$ | $y^2$ |
|---|---|---|---|---|---|
| 1 | 653 | 2607 | 426409 | 1702371 | 6796449 |
| 2 | 391 | 1714 | 152881 | 670174 | 2937796 |
| 3 | 352 | 1857 | 123904 | 653664 | 3448449 |
| 4 | 261 | 1228 | 68121 | 320508 | 1507984 |
| 5 | 226 | 880 | 51076 | 198880 | 774400 |
| 6 | 218 | 671 | 47524 | 146278 | 450241 |
| 7 | 202 | 1524 | 40804 | 307848 | 2322576 |
| 8 | 151 | 889 | 22801 | 134239 | 790321 |
| 9 | 141 | 482 | 19881 | 67962 | 232324 |
| 10 | 138 | 569 | 19044 | 78522 | 323761 |
| 11 | 134 | 662 | 17956 | 88708 | 438244 |
| Total | 2867 | 13083 | 990401 | 4369154 | 20022545 |

a. Do Spearman’s rank correlation between x and y and test it for significance (6)

b. Compute the sample correlation between x and y and test it for significance (6)

c. Compute the sample standard deviation of x and test to see if it equals 200 (4)

Solution:

a) First rank $x$ and $y$ into $r_x$ and $r_y$ respectively. (As usual, people tried to compute rank correlations without ranking. I warned you!) Compute $d = r_x - r_y$ and $d^2$.

| Area | parents $x$ | subsidiaries $y$ | $r_x$ | $r_y$ | $d = r_x - r_y$ | $d^2$ |
|---|---|---|---|---|---|---|
| 1 | 653 | 2607 | 11 | 11 | 0 | 0 |
| 2 | 391 | 1714 | 10 | 9 | 1 | 1 |
| 3 | 352 | 1857 | 9 | 10 | -1 | 1 |
| 4 | 261 | 1228 | 8 | 7 | 1 | 1 |
| 5 | 226 | 880 | 7 | 5 | 2 | 4 |
| 6 | 218 | 671 | 6 | 4 | 2 | 4 |
| 7 | 202 | 1524 | 5 | 8 | -3 | 9 |
| 8 | 151 | 889 | 4 | 6 | -2 | 4 |
| 9 | 141 | 482 | 3 | 1 | 2 | 4 |
| 10 | 138 | 569 | 2 | 2 | 0 | 0 |
| 11 | 134 | 662 | 1 | 3 | -2 | 4 |
| Total | | | 66 | 66 | 0 | 32 |

Note that $\sum d = 0$ is a check on the correctness of the ranking.

From the outline: $r_s = 1 - \frac{6\sum d^2}{n(n^2 - 1)} = 1 - \frac{6(32)}{11(11^2 - 1)} = 1 - \frac{192}{11(120)} = 1 - 0.1455 = 0.8545$

The $n = 11$ line from the rank correlation table has

| $n$ | $\alpha = .050$ | $.025$ | $.010$ | $.005$ |
|---|---|---|---|---|
| 11 | .5273 | .6091 | .7000 | .7818 |

If you tested $H_0: \rho_s \le 0$ against $H_1: \rho_s > 0$ at the 5% level, reject the null hypothesis of no relationship if $r_s$ is above .5273 or, if you tested $H_0: \rho_s = 0$ against $H_1: \rho_s \ne 0$ at the 5% level, reject the null hypothesis if $r_s$ is above .6091. So, in this case we have a significant rank correlation.

b) We need the usual spare parts. From above $n = 11$, $\sum x = 2867$, $\sum y = 13083$, $\sum x^2 = 990401$, $\sum xy = 4369154$ and $\sum y^2 = 20022545$.
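(In spite of the fact that most column computations were done for you, many of you wasted time and energy doing them over again.)

Spare Parts Computation:

$\bar{x} = \frac{\sum x}{n} = \frac{2867}{11} = 260.636$

$\bar{y} = \frac{\sum y}{n} = \frac{13083}{11} = 1189.36$

$SS_x = \sum x^2 - n\bar{x}^2 = 990401 - 11(260.636)^2 = 243158.6$

$S_{xy} = \sum xy - n\bar{x}\bar{y} = 4369154 - 11(260.636)(1189.36) = 959263.6$

$SS_y = \sum y^2 - n\bar{y}^2 = 20022545 - 11(1189.36)^2 = 4462195.7$

The simple sample correlation coefficient is $r = \frac{\sum XY - n\bar{X}\bar{Y}}{\sqrt{\left(\sum X^2 - n\bar{X}^2\right)\left(\sum Y^2 - n\bar{Y}^2\right)}}$, the square root of

$R^2 = \frac{\left(\sum XY - n\bar{X}\bar{Y}\right)^2}{\left(\sum X^2 - n\bar{X}^2\right)\left(\sum Y^2 - n\bar{Y}^2\right)} = \frac{S_{xy}^2}{SS_x\, SS_y} = \frac{(959263.6)^2}{243158.6(4462195.7)} = .8480827$

so $r = \sqrt{.8480827} = .9209$. From the outline, if we want to test $H_0: \rho_{xy} = 0$ against $H_1: \rho_{xy} \ne 0$ and $x$ and $y$ are normally distributed, we use

$t^{(n-2)} = \frac{r}{\sqrt{\frac{1 - r^2}{n - 2}}} = \frac{.9209}{\sqrt{\frac{1 - .9209^2}{11 - 2}}} = \frac{.9209}{\sqrt{.01688}} = \frac{.9209}{.12993} = 7.088$

Since $t^{(9)}_{.025} = 2.262$, we reject $H_0$. Note that R-squared is always between 0 and 1 and that correlations are always between -1 and +1.

c) From the formula table (but the outline is better)

| Interval for | Confidence Interval | Hypotheses | Test Ratio | Critical Value |
|---|---|---|---|---|
| Variance - Small Sample | $\frac{(n-1)s^2}{\chi^2_{\alpha/2}} \le \sigma^2 \le \frac{(n-1)s^2}{\chi^2_{1-\alpha/2}}$ | $H_0: \sigma^2 = \sigma_0^2$, $H_1: \sigma^2 \ne \sigma_0^2$ | $\chi^2 = \frac{(n-1)s^2}{\sigma_0^2}$ | $s^2_{cv} = \frac{\chi^2_{\alpha/2}\, \sigma_0^2}{n-1}$ and $\frac{\chi^2_{1-\alpha/2}\, \sigma_0^2}{n-1}$ |
| Variance - Large Sample | $\sigma = \frac{s\sqrt{2DF}}{\pm z_{\alpha/2} + \sqrt{2DF}}$ | | $z = \sqrt{2\chi^2} - \sqrt{2DF - 1}$ | |

We already know $n = 11$ and $SS_x = \sum x^2 - n\bar{x}^2 = 990401 - 11(260.636)^2 = 243158.6$. Assume $\alpha = .05$.

$s_x^2 = \frac{\sum x^2 - n\bar{x}^2}{n-1} = \frac{SS_x}{n-1} = \frac{243158.6}{10} = 24315.86$, $s = \sqrt{24315.86} = 155.90$

$H_0: \sigma = 200$, $H_1: \sigma \ne 200$, then $\chi^2 = \frac{(n-1)s^2}{\sigma_0^2} = \frac{10(24315.86)}{200^2} = 6.0790$. Only the test ratio method is normally used. $DF = n - 1 = 10$, $\chi^2_{.025}(10) = 20.4832$ and $\chi^2_{.975}(10) = 3.2470$. Make a diagram. Show a curve with a mean at 10 and rejection zones (shaded) above $\chi^2_{.025}(10) = 20.4832$ and below $\chi^2_{.975}(10) = 3.2470$. Since your value of $\chi^2$ is between the two critical values, do not reject $H_0$.

4.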
Data from the previous page is repeated:

| Area | parents $x$ | subsidiaries $y$ | $x^2$ | $xy$ | $y^2$ |
|---|---|---|---|---|---|
| 1 | 653 | 2607 | 426409 | 1702371 | 6796449 |
| 2 | 391 | 1714 | 152881 | 670174 | 2937796 |
| 3 | 352 | 1857 | 123904 | 653664 | 3448449 |
| 4 | 261 | 1228 | 68121 | 320508 | 1507984 |
| 5 | 226 | 880 | 51076 | 198880 | 774400 |
| 6 | 218 | 671 | 47524 | 146278 | 450241 |
| 7 | 202 | 1524 | 40804 | 307848 | 2322576 |
| 8 | 151 | 889 | 22801 | 134239 | 790321 |
| 9 | 141 | 482 | 19881 | 67962 | 232324 |
| 10 | 138 | 569 | 19044 | 78522 | 323761 |
| 11 | 134 | 662 | 17956 | 88708 | 438244 |
| Sum | 2867 | 13083 | 990401 | 4369154 | 20022545 |

a. Test the hypothesis that the correlation between $x$ and $y$ is .8. (5)
b. Test the hypothesis that $x$ has the Normal distribution. (9)
c. Test the hypothesis that $x$ and $y$ have equal variances. (4)

Solution: a) From the previous page we know $n = 11$ and

$$R^2 = \frac{\left(\sum XY - n\bar X\bar Y\right)^2}{\left(\sum X^2 - n\bar X^2\right)\left(\sum Y^2 - n\bar Y^2\right)} = \frac{Sxy^2}{SSx\,SSy} = \frac{(959263.6)^2}{(243158.3)(4462195.7)} = .8480827,$$

so $r = \sqrt{.8480827} = .9209$, or $r = \frac{959263.6}{(493.11)(2112.39)} = .9209$.

From the Correlation section of the outline: If we are testing $H_0: \rho_{xy} = \rho_0$ against $H_1: \rho_{xy} \ne \rho_0$, and $\rho_0 \ne 0$, the test is quite different. We need to use Fisher's z-transformation. Let $\tilde z = \frac12 \ln\left(\frac{1+r}{1-r}\right)$. This has an approximate mean of $\mu_z = \frac12 \ln\left(\frac{1+\rho_0}{1-\rho_0}\right)$ and a standard deviation of $s_z = \sqrt{\frac{1}{n-3}}$, so that $t^{(n-2)} = \frac{\tilde z - \mu_z}{s_z}$. (Note: To get $\ln$, the natural log, compute the log to the base 10 and divide by .434294482.)

Test $H_0: \rho_{xy} = 0.8$ against $H_1: \rho_{xy} \ne 0.8$ when $n = 11$, $r = .9209$, $r^2 = .8481$ and $\alpha = .05$.

$\tilde z = \frac12 \ln\left(\frac{1+r}{1-r}\right) = \frac12 \ln\left(\frac{1+.9209}{1-.9209}\right) = \frac12 \ln\left(\frac{1.9209}{0.0791}\right) = \frac12 \ln(24.28445) = \frac12(3.18984) = 1.5949$

$\mu_z = \frac12 \ln\left(\frac{1+\rho_0}{1-\rho_0}\right) = \frac12 \ln\left(\frac{1+.8}{1-.8}\right) = \frac12 \ln\left(\frac{1.8}{0.2}\right) = \frac12 \ln(9.0000) = \frac12(2.19722) = 1.09861$

$s_z = \sqrt{\frac{1}{n-3}} = \sqrt{\frac{1}{11-3}} = \sqrt{\frac18} = 0.35355$

Finally $t = \frac{\tilde z - \mu_z}{s_z} = \frac{1.5949 - 1.09861}{0.35355} = 1.404$. Compare this with $\pm t^{(n-2)}_{\alpha/2} = \pm t^{(9)}_{.025} = \pm 2.262$. Since 1.404 lies between these two values, do not reject the null hypothesis.

Note: To do the above with logarithms to the base 10, try $\tilde z_{10} = \frac12 \log\left(\frac{1+r}{1-r}\right)$. This has an approximate mean of $\mu_{z10} = \frac12 \log\left(\frac{1+\rho_0}{1-\rho_0}\right)$ and a standard deviation of $s_{z10} = \sqrt{\frac{0.18861}{n-3}}$, so that $t^{(n-2)} = \frac{\tilde z_{10} - \mu_{z10}}{s_{z10}}$.

b) From the previous page we know $n = 11$, $\bar x = \frac{\sum x}{n} = \frac{2867}{11} = 260.636$, $SSx = \sum x^2 - n\bar x^2 = 990401 - 11(260.636)^2 = 243158.6$ and

$$s_x^2 = \frac{\sum x^2 - n\bar x^2}{n-1} = \frac{SSx}{n-1} = \frac{243158.6}{10} = 24315.86, \qquad s = \sqrt{24315.86} = 155.94.$$

Use the setup in Problem E9 or E10. The best method to use here is Lilliefors because the data is not stated by intervals, the distribution for which we are testing is Normal, and the parameters of the distribution are unknown. We begin by putting the data in order and computing $z = \frac{x - \bar x}{s}$ (actually $t$) and proceed as in the Kolmogorov-Smirnov method. For example, in the second row $z = \frac{138 - 260.636}{155.94} = -0.79$. $O = 1$ because there is only one number in each interval, so each value of $\frac{O}{n}$ is $\frac{1}{11} = .0909$. Since the highest number in the interval represented by Row 2 is $z = -0.79$, $F_e = F(-0.79) = P(z \le -0.79) = P(z \le 0) - P(-0.79 \le z \le 0) = .5 - .2852 = .2148$.

| Row | $x$ | $z$ | $O$ | $O/n$ | $F_o$ | $F_e$ | $D = \lvert F_e - F_o \rvert$ |
|---|---|---|---|---|---|---|---|
| 1 | 134 | -0.81 | 1 | .0909 | .0909 | .5 - .2910 = .2090 | .1181 |
| 2 | 138 | -0.79 | 1 | .0909 | .1818 | .5 - .2852 = .2148 | .0330 |
| 3 | 141 | -0.77 | 1 | .0909 | .2727 | .5 - .2794 = .2206 | .0521 |
| 4 | 151 | -0.70 | 1 | .0909 | .3636 | .5 - .2580 = .2420 | .1216 |
| 5 | 202 | -0.38 | 1 | .0909 | .4545 | .5 - .1480 = .3520 | .1025 |
| 6 | 218 | -0.27 | 1 | .0909 | .5455 | .5 - .1064 = .3936 | .1519 |
| 7 | 226 | -0.22 | 1 | .0909 | .6364 | .5 - .0871 = .4129 | .2235 |
| 8 | 261 | 0.00 | 1 | .0909 | .7273 | .5000 | .2273 |
| 9 | 352 | 0.59 | 1 | .0909 | .8182 | .5 + .2224 = .7224 | .0958 |
| 10 | 391 | 0.84 | 1 | .0909 | .9091 | .5 + .2995 = .7995 | .1096 |
| 11 | 653 | 2.52 | 1 | .0909 | 1.0000 | .5 + .4941 = .9941 | .0059 |
| Sum | | | 11 | 0.9999 | | | |

From the Lilliefors table for $\alpha = .05$ and $n = 11$, the critical value is .249. Since the maximum deviation (.2273) is below the critical value, we do not reject $H_0$.

c) From the previous page $SSy = \sum y^2 - n\bar y^2 = 20022545 - 11(1189.36)^2 = 4462195.7$, so

$$s_y^2 = \frac{\sum y^2 - n\bar y^2}{n-1} = \frac{SSy}{n-1} = \frac{4462195.7}{10} = 446219.57 \quad\text{and}\quad s_x^2 = \frac{\sum x^2 - n\bar x^2}{n-1} = \frac{SSx}{n-1} = \frac{243158.6}{10} = 24315.86.$$

If we follow 252meanx4 in the outline: Our hypotheses are $H_0: \sigma_x^2 = \sigma_y^2$ and $H_1: \sigma_x^2 \ne \sigma_y^2$. $DF_x = n - 1 = 10$ and $DF_y = n - 1 = 10$. Since the table is set up for one-sided tests, if we wish to test $H_0: \sigma_x^2 = \sigma_y^2$, we must do two separate one-sided tests. First test $\frac{s_y^2}{s_x^2} = \frac{446219.57}{24315.86} = 18.351$ against $F^{(DF_y,\,DF_x)}_{.025} = F^{(10,10)}_{.025} = 3.72$, and then test $\frac{s_x^2}{s_y^2} = \frac{24315.86}{446219} = 0.0545$ against $F^{(DF_x,\,DF_y)}_{.025} = F^{(10,10)}_{.025} = 3.72$. If either test is failed, we reject the null hypothesis. Since 18.351 is larger than this critical value, reject $H_0$.

5. Data from the previous page is repeated:

| Area | parents $x$ | subsidiaries $y$ | $x^2$ | $xy$ | $y^2$ |
|---|---|---|---|---|---|
| 1 | 653 | 2607 | 426409 | 1702371 | 6796449 |
| 2 | 391 | 1714 | 152881 | 670174 | 2937796 |
| 3 | 352 | 1857 | 123904 | 653664 | 3448449 |
| 4 | 261 | 1228 | 68121 | 320508 | 1507984 |
| 5 | 226 | 880 | 51076 | 198880 | 774400 |
| 6 | 218 | 671 | 47524 | 146278 | 450241 |
| 7 | 202 | 1524 | 40804 | 307848 | 2322576 |
| 8 | 151 | 889 | 22801 | 134239 | 790321 |
| 9 | 141 | 482 | 19881 | 67962 | 232324 |
| 10 | 138 | 569 | 19044 | 78522 | 323761 |
| 11 | 134 | 662 | 17956 | 88708 | 438244 |
| Sum | 2867 | 13083 | 990401 | 4369154 | 20022545 |

a. Compute a simple regression of subsidiaries against parents as the independent variable. (5)
b. Compute $s_e$. (3)
c. Predict how many subsidiaries will appear in a city with 50 parent corporations. (1)
d. Make your prediction in c) into a confidence interval. (3)
e. Compute $s_{b_0}$ and make it into a confidence interval for $\beta_0$. (3)
f. Do an ANOVA for this regression and explain what it says about $\beta_1$. (3)

Solution: We need the usual spare parts. From above $n = 11$, $\sum x = 2867$, $\sum y = 13083$, $\sum x^2 = 990401$, $\sum xy = 4369154$ and $\sum y^2 = 20022545$.

Spare Parts Computation (repeated from previous page):

$\bar x = \frac{\sum x}{n} = \frac{2867}{11} = 260.636$, $\quad \bar y = \frac{\sum y}{n} = \frac{13083}{11} = 1189.36$

$SSx = \sum x^2 - n\bar x^2 = 990401 - 11(260.636)^2 = 243158.6$

$Sxy = \sum xy - n\bar x\bar y = 4369154 - 11(260.636)(1189.36) = 959263.6$

$SSy = \sum y^2 - n\bar y^2 = 20022545 - 11(1189.36)^2 = 4462195.7$

$b_1 = \frac{Sxy}{SSx} = \frac{\sum xy - n\bar x\bar y}{\sum x^2 - n\bar x^2} = \frac{959263.6}{243158.6} = 3.9450$

$b_0 = \bar y - b_1\bar x = 1189.36 - 3.9450(260.636) = 161.15$

$\hat Y = b_0 + b_1 x$ becomes $\hat Y = 161.15 + 3.9450x$.
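The slope and intercept above can be checked directly from the column sums. The following is a minimal Python sketch, not part of the original key; the variable names are illustrative. Because it does not round the means before squaring them, its spare parts differ slightly from the hand-computed ones, and its coefficients agree with the hand values to about three significant figures.

```python
# Column sums copied from the data table (x = parents, y = subsidiaries).
n = 11
sum_x, sum_y = 2867, 13083
sum_x2, sum_xy = 990401, 4369154

x_bar = sum_x / n
y_bar = sum_y / n

# "Spare parts": corrected sum of squares of x and corrected cross product.
ss_x = sum_x2 - n * x_bar ** 2
s_xy = sum_xy - n * x_bar * y_bar

# Least-squares slope and intercept for y-hat = b0 + b1 * x.
b1 = s_xy / ss_x
b0 = y_bar - b1 * x_bar

print(f"SSx = {ss_x:.1f}, Sxy = {s_xy:.1f}")
print(f"b1 = {b1:.4f}, b0 = {b0:.2f}")
```

Working from the sums rather than the raw rows mirrors the way the exam supplies the data, so nothing has to be retyped.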
b) We already know that $SST = SSy = \sum y^2 - n\bar y^2 = 20022545 - 11(1189.36)^2 = 4462195.7$ and that

$$R^2 = \frac{\left(\sum XY - n\bar X\bar Y\right)^2}{\left(\sum X^2 - n\bar X^2\right)\left(\sum Y^2 - n\bar Y^2\right)} = \frac{Sxy^2}{SSx\,SSy} = \frac{(959263.6)^2}{(243158.3)(4462195.7)} = .8480827.$$

$SSR = b_1 Sxy = b_1\left(\sum xy - n\bar x\bar y\right) = 3.9450(959263.6) = 3784294.9$ or $SSR = b_1 Sxy = R^2\,SST = .8480827(4462195.7) = 3784311$.

$SSE = SST - SSR = 4462195.7 - 3784311 = 677884.7$

$s_e^2 = \frac{SSE}{n-2} = \frac{677884.7}{9} = 75320.5$

or $s_e^2 = \frac{\left(\sum y^2 - n\bar y^2\right) - b_1^2\left(\sum x^2 - n\bar x^2\right)}{n-2} = \frac{4462195.7 - 3.9450^2(243158.3)}{9} = \frac{677917}{9} = 75324.1$.

So $s_e = \sqrt{75320.5} = 274.45$. ($s_e^2$ is always positive!)

c) If $\hat Y = 161.15 + 3.9450x$ and $x = 50$, the prediction is $\hat Y = 161.15 + 3.9450(50) = 358.4$.

d) From the outline, the Confidence Interval is $\mu_{Y_0} = \hat Y_0 \pm t\,s_{\hat Y}$, where

$$s_{\hat Y}^2 = s_e^2\left[\frac1n + \frac{\left(X_0 - \bar X\right)^2}{\sum X^2 - n\bar X^2}\right].$$

In this formula, for some specific $X_0$, $\hat Y_0 = b_0 + b_1X_0$. Here $X_0 = 50$, $\hat Y_0 = 358.4$, $\bar X = 260.636$ and $n = 11$. Then

$$s_{\hat Y}^2 = 75320.5\left[\frac{1}{11} + \frac{(50 - 260.636)^2}{243158.6}\right] = 75320.5(.27337) = 20590.4$$

and $s_{\hat Y} = \sqrt{20590.4} = 143.49$, so that, if $t^{(n-2)}_{\alpha/2} = t^{(9)}_{.025} = 2.262$, the confidence interval is $\mu_{Y_0} = \hat Y_0 \pm t\,s_{\hat Y} = 358.4 \pm 2.262(143.5) = 358 \pm 325$. This represents a confidence interval for the average value that $Y$ will take when $x = 50$ and is proportionally rather gigantic because we have picked a point fairly far from the data that was actually experienced.

e) The outline says

$$s_{b_0}^2 = s_e^2\left[\frac1n + \frac{\bar X^2}{\sum X^2 - n\bar X^2}\right] = 75320.5\left[\frac{1}{11} + \frac{(260.636)^2}{243158.6}\right] = 75320.5(0.37028) = 27889.7.$$

So $s_{b_0} = \sqrt{27889.7} = 167.00$, and the interval is $\beta_0 = b_0 \pm t\,s_{b_0} = 161.15 \pm 2.262(167.00) = 161 \pm 378$. This indicates that the intercept is not significant.

f) We can do an ANOVA table as follows:

| Source | SS | DF | MS | F |
|---|---|---|---|---|
| Regression | $SSR$ | 1 | $MSR$ | $\frac{MSR}{MSE}$ |
| Error | $SSE$ | $n-2$ | $MSE$ | |
| Total | $SST$ | $n-1$ | | |

| Source | SS | DF | MS | F |
|---|---|---|---|---|
| Regression | 3784311 | 1 | 3784311 | 50.24 |
| Error | 677885 | 9 | 75321 | |
| Total | 4462196 | 10 | | |

Note that $F^{(1,9)}_{.05} = 5.12$, so we reject the null hypothesis that $x$ and $y$ are unrelated. This is the same as saying that $H_0: \beta_1 = 0$ is false.
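Parts b) through f) all flow from the same spare parts, so they can be checked in one pass. The sketch below is a hedged illustration in Python rather than the key's own method: the variable names are made up for the example, and the critical value 2.262 is simply copied from the text instead of being looked up. It works from unrounded means, so its sums of squares land on the computer output's values rather than the hand-rounded ones.

```python
import math

# Column sums from the data table (x = parents, y = subsidiaries).
n = 11
sum_x, sum_y = 2867, 13083
sum_x2, sum_xy, sum_y2 = 990401, 4369154, 20022545
t_crit = 2.262  # t(.025) with n - 2 = 9 DF, copied from the text

x_bar, y_bar = sum_x / n, sum_y / n
ss_x = sum_x2 - n * x_bar ** 2
ss_y = sum_y2 - n * y_bar ** 2          # this is SST
s_xy = sum_xy - n * x_bar * y_bar

b1 = s_xy / ss_x
b0 = y_bar - b1 * x_bar

# ANOVA pieces for the simple regression.
ssr = b1 * s_xy
sse = ss_y - ssr
mse = sse / (n - 2)                      # s_e squared
s_e = math.sqrt(mse)
f_ratio = ssr / mse                      # MSR / MSE with 1 regression DF

# Confidence interval for the mean of Y at x0 = 50.
x0 = 50
y_hat = b0 + b1 * x0
s_yhat = math.sqrt(mse * (1 / n + (x0 - x_bar) ** 2 / ss_x))
half_width = t_crit * s_yhat

# Standard error of the intercept.
s_b0 = math.sqrt(mse * (1 / n + x_bar ** 2 / ss_x))

print(f"SSR = {ssr:.0f}, SSE = {sse:.0f}, s_e = {s_e:.1f}, F = {f_ratio:.2f}")
print(f"Y-hat(50) = {y_hat:.1f} +/- {half_width:.1f}, s_b0 = {s_b0:.1f}")
```

Comparing `f_ratio` with the table value 5.12 and `half_width` with the size of `y_hat` reproduces the two conclusions in the text: the slope is significant, and the interval at $x = 50$ is very wide.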
The Minitab output for this problem follows:

The regression equation is
subno = 161 + 3.94 parno

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 161.2 | 167.0 | 0.97 | 0.360 |
| parno | 3.9450 | 0.5566 | 7.09 | 0.000 |

S = 274.4   R-Sq = 84.8%   R-Sq(adj) = 83.1%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 3784219 | 3784219 | 50.24 | 0.000 |
| Residual Error | 9 | 677882 | 75320 | | |
| Total | 10 | 4462101 | | | |

Unusual Observations

| Obs | parno | subno | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 1 | 653 | 2607.0 | 2737.2 | 233.5 | -130.2 | -0.90 X |
| 7 | 202 | 1524.0 | 958.0 | 89.0 | 566.0 | 2.18R |

R denotes an observation with a large standardized residual
X denotes an observation whose X value gives it large influence.

6. A chain has the following data on prices, promotion expenses and sales of one product (you can do $\sum x_1x_2$). Computation of this sum is in red. It might help to divide $\sum y$, $\sum x_1y$ and $\sum x_2y$ by 10 and $\sum y^2$ by 100.

| Store | sales $y$ | price $x_1$ | promotion $x_2$ | $x_1^2$ | $x_2^2$ | $y^2$ | $x_1y$ | $x_1x_2$ | $x_2y$ |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 4141 | 59 | 200 | 3481 | 40000 | 17147881 | 244319 | 11800 | 828200 |
| 2 | 3754 | 59 | 400 | 3481 | 160000 | 14092516 | 221486 | 23600 | 1501600 |
| 3 | 5000 | 59 | 600 | 3481 | 360000 | 25000000 | 295000 | 35400 | 3000000 |
| 4 | 4011 | 59 | 600 | 3481 | 360000 | 16088121 | 236649 | 35400 | 2406600 |
| 5 | 3224 | 79 | 200 | 6241 | 40000 | 10394176 | 254696 | 15800 | 644800 |
| 6 | 2618 | 79 | 400 | 6241 | 160000 | 6853924 | 206822 | 31600 | 1047200 |
| 7 | 3746 | 79 | 600 | 6241 | 360000 | 14032516 | 295934 | 47400 | 2247600 |
| 8 | 3825 | 79 | 600 | 6241 | 360000 | 14630625 | 302175 | 47400 | 2295000 |
| 9 | 1096 | 99 | 200 | 9801 | 40000 | 1201216 | 108504 | 19800 | 219200 |
| 10 | 1882 | 99 | 400 | 9801 | 160000 | 3541924 | 186318 | 39600 | 752800 |
| 11 | 2159 | 99 | 400 | 9801 | 160000 | 4661281 | 213741 | 39600 | 863600 |
| 12 | 2927 | 99 | 600 | 9801 | 360000 | 8567329 | 289773 | 59400 | 1756200 |
| Sum | 38383 | 948 | 5200 | 78092 | 2560000 | 136211509 | 2855417 | 406800 | 17562800 |

$\bar y = 3198.58$, $\bar x_1 = 79.0000$ and $\bar x_2 = 433.333$.

a. Do a multiple regression of sales against $x_1$ and $x_2$. (10)
b. Compute $R^2$ and $R^2$ adjusted for degrees of freedom. Use a regression ANOVA to test the usefulness of this regression. (6)
d. Use your regression to predict sales when price is 79 cents and promotion expenses are $200. (2)
e. Use the directions in the outline to make this estimate into a confidence interval and a prediction interval. (4)
f. If the regression of Price alone had the following output:

The regression equation is
sales = 7564 - 55.3 price

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 7564.3 | 863.6 | 8.76 | 0.000 |
| price | -55.26 | 10.71 | -5.16 | 0.000 |

S = 605.6   R-Sq = 72.7%   R-Sq(adj) = 70.0%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 9772621 | 9772621 | 26.65 | 0.000 |
| Residual Error | 10 | 3667664 | 366766 | | |
| Total | 11 | 13440285 | | | |

Do an F-test to see if adding $x_2$ helped. (4)

The next page is blank. Please show your work.

I suggested that we divide $y$ values by 10 and $y$ squared by 100 to make computations more tractable. No one did, so I have redone this on the following page. After a year of statistics, too many of you decided that the sum of a product is the product of the sums, as in $\sum x_1x_2 = \sum x_1 \sum x_2$, and did something similar for some of the other sums. Where have you been?

Solution: a) (With $y$ divided by 10) $n = 12$, $\sum y = 3838.3$, $\sum x_1 = 948$, $\sum x_2 = 5200$, $\sum x_1^2 = 78092$, $\sum x_2^2 = 2560000$, $\sum y^2 = 1362115.09$, $\sum x_1y = 285541.7$, $\sum x_2y = 1756280.0$ and you should have found $\sum x_1x_2 = 406800$.

First, we compute $\bar y = \frac{\sum y}{n} = \frac{3838.3}{12} = 319.858$, $\bar x_1 = \frac{\sum x_1}{n} = \frac{948}{12} = 79.00$, and $\bar x_2 = \frac{\sum x_2}{n} = \frac{5200}{12} = 433.333$. Then, we compute our spare parts:

$SST = SSy = \sum y^2 - n\bar y^2 = 1362115.09 - 12(319.858)^2 = 134405$*

$Sx_1y = \sum x_1y - n\bar x_1\bar y = 285541.7 - 12(79)(319.858) = -17684$

$Sx_2y = \sum X_2Y - n\bar X_2\bar Y = 1756280 - 12(433.333)(319.858) = 93020$

$SSx_1 = \sum x_1^2 - n\bar x_1^2 = 78092 - 12(79)^2 = 3200$*

$SSx_2 = \sum X_2^2 - n\bar X_2^2 = 2560000 - 12(433.333)^2 = 306670$*

and $Sx_1x_2 = \sum X_1X_2 - n\bar X_1\bar X_2 = 406800 - 12(79)(433.333) = -4000$.

\* indicates quantities that must be positive.

Then we substitute these numbers into the Simplified Normal Equations:

$\sum X_1Y - n\bar X_1\bar Y = b_1\left(\sum X_1^2 - n\bar X_1^2\right) + b_2\left(\sum X_1X_2 - n\bar X_1\bar X_2\right)$

$\sum X_2Y - n\bar X_2\bar Y = b_1\left(\sum X_1X_2 - n\bar X_1\bar X_2\right) + b_2\left(\sum X_2^2 - n\bar X_2^2\right)$,

which are

$-17684 = 3200b_1 - 4000b_2$

$93020 = -4000b_1 + 306670b_2$

and solve them as two equations in two unknowns for $b_1$ and $b_2$.
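The pair of simplified normal equations can also be solved mechanically, which is a useful check on the hand elimination. The sketch below uses Cramer's rule in plain Python; it is an illustration under stated assumptions, not the method the key uses. The signs of $Sx_1y$ and $Sx_1x_2$ are taken as negative, which is what the raw sums give ($285541.7 - 12(79)(319.858) < 0$ and $406800 - 12(79)(433.333) < 0$), and all variable names are hypothetical.

```python
# Spare parts for the scaled problem (y divided by 10), as computed by hand.
ss_x1 = 3200.0       # SSx1
ss_x2 = 306670.0     # SSx2
s_x1x2 = -4000.0     # Sx1x2 (negative: price and promotion move oppositely)
s_x1y = -17684.0     # Sx1y  (negative: higher price, lower sales)
s_x2y = 93020.0      # Sx2y
y_bar, x1_bar, x2_bar = 319.858, 79.0, 433.333

# Simplified normal equations:
#   s_x1y = b1 * ss_x1  + b2 * s_x1x2
#   s_x2y = b1 * s_x1x2 + b2 * ss_x2
# Solve the 2x2 system with Cramer's rule.
det = ss_x1 * ss_x2 - s_x1x2 ** 2
b1 = (s_x1y * ss_x2 - s_x1x2 * s_x2y) / det
b2 = (ss_x1 * s_x2y - s_x1x2 * s_x1y) / det

# The intercept follows from the means.
b0 = y_bar - b1 * x1_bar - b2 * x2_bar

print(f"b1 = {b1:.4f}, b2 = {b2:.5f}, b0 = {b0:.2f}")
```

Swapping in the unscaled spare parts ($-176837$, $930197$ and $\bar y = 3198.58$) should give coefficients ten times as large, since only $y$ changes scale.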
These are a fairly tough pair of equations to solve until we notice that, if we multiply 4000 by 0.8, we get 3200. Multiplying the second equation by 0.8, the equations become

$-17684.0 = 3200b_1 - 4000b_2$

$74416.0 = -3200b_1 + 245336b_2$.

If we add these together, we get $56732 = 241336b_2$. This means that $b_2 = \frac{56732}{241336} = 0.23507$. Now remember that $3200b_1 = 4000b_2 - 17684 = 4000(.23507) - 17684 = -16743.72$. So $b_1 = \frac{-16743.72}{3200} = -5.2324$. Finally we get $b_0$ by solving $b_0 = \bar Y - b_1\bar X_1 - b_2\bar X_2 = 319.858 - (-5.232)(79.00) - 0.2351(433.333) = 319.858 + 413.328 - 101.877 = 631.31$. Thus our equation is $\hat Y = b_0 + b_1X_1 + b_2X_2 = 631.31 - 5.232X_1 + 0.2351X_2$.

a) (The way most of you did it) $n = 12$, $\sum y = 38383$, $\sum x_1 = 948$, $\sum x_2 = 5200$, $\sum x_1^2 = 78092$, $\sum x_2^2 = 2560000$, $\sum y^2 = 136211509$, $\sum x_1y = 2855417$, $\sum x_2y = 17562800$ and you should have found $\sum x_1x_2 = 406800$.

First, we compute $\bar y = \frac{\sum y}{n} = \frac{38383}{12} = 3198.58$, $\bar x_1 = \frac{\sum x_1}{n} = \frac{948}{12} = 79.00$, and $\bar x_2 = \frac{\sum x_2}{n} = \frac{5200}{12} = 433.333$. Then, we compute our spare parts:

$SST = SSy = \sum y^2 - n\bar y^2 = 136211509 - 12(3198.58)^2 = 13440541$*

$Sx_1y = \sum x_1y - n\bar x_1\bar y = 2855417 - 12(79)(3198.58) = -176837$

$Sx_2y = \sum X_2Y - n\bar X_2\bar Y = 17562800 - 12(433.333)(3198.58) = 930197$

$SSx_1 = \sum x_1^2 - n\bar x_1^2 = 78092 - 12(79)^2 = 3200$*

$SSx_2 = \sum X_2^2 - n\bar X_2^2 = 2560000 - 12(433.333)^2 = 306670$*

and $Sx_1x_2 = \sum X_1X_2 - n\bar X_1\bar X_2 = 406800 - 12(79)(433.333) = -4000$.

\* indicates quantities that must be positive.

Then we substitute these numbers into the Simplified Normal Equations, $Sx_1y = b_1SSx_1 + b_2Sx_1x_2$ and $Sx_2y = b_1Sx_1x_2 + b_2SSx_2$, which are

$-176837 = 3200b_1 - 4000b_2$

$930197 = -4000b_1 + 306670b_2$

and solve them as two equations in two unknowns for $b_1$ and $b_2$. These are a fairly tough pair of equations to solve until we notice that, if we multiply 4000 by 0.8, we get 3200. The equations become

$-176837.0 = 3200b_1 - 4000b_2$

$744157.6 = -3200b_1 + 245336b_2$.

If we add these together, we get $567220.6 = 241336b_2$. This means that $b_2 = \frac{567220.6}{241336} = 2.3503$. Now remember that $3200b_1 = 4000b_2 - 176837 = 4000(2.3503) - 176837 = -167435.8$. So $b_1 = \frac{-167435.8}{3200} = -52.324$. Finally we get $b_0$ by solving $b_0 = \bar Y - b_1\bar X_1 - b_2\bar X_2 = 3198.58 - (-52.324)(79.00) - 2.3503(433.333) = 3198.58 + 4133.596 - 1018.4625 = 6313.71$. Thus our equation is $\hat Y = b_0 + b_1X_1 + b_2X_2 = 6313.71 - 52.324X_1 + 2.3503X_2$.

b) (The way I did it) $SSE = SST - SSR$ and so

$SSR = b_1Sx_1y + b_2Sx_2y = (-5.232)(-17684) + 0.2351(93020) = 92523 + 21869 = 114392$*

$SST = SSy = \sum y^2 - n\bar y^2 = 134405$, so $SSE = 134405 - 114392 = 20013$*

$R^2 = \frac{SSR}{SST} = \frac{114392}{134405} = 0.851$. If we use $\bar R^2$, which is $R^2$ adjusted for degrees of freedom, $\bar R^2 = \frac{(n-1)R^2 - k}{n-k-1} = \frac{11(0.851) - 2}{9} = .818$. The ANOVA reads:

| Source | DF | SS | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Regression | 2 | 114392 | 57196 | 25.72 | $F^{(2,9)} = 4.26$ |
| Residual Error | 9 | 20013 | 2223.7 | | |
| Total | 11 | 134405 | | | |

Since our computed F is larger than the table F, we reject the hypothesis that X and Y are unrelated.

b) (The way most of you did it) $SSE = SST - SSR$ and so

$SSR = b_1Sx_1y + b_2Sx_2y = (-52.324)(-176837) + 2.3503(930197) = 9252819 + 2186242 = 11439061$*

$SST = SSy = \sum y^2 - n\bar y^2 = 13440541$, so $SSE = 13440541 - 11439061 = 2001480$*

$R^2 = \frac{SSR}{SST} = \frac{11439061}{13440541} = 0.851$. If we use $\bar R^2$, which is $R^2$ adjusted for degrees of freedom, $\bar R^2 = \frac{(n-1)R^2 - k}{n-k-1} = \frac{11(0.851) - 2}{9} = .818$. The ANOVA reads:

| Source | DF | SS | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Regression | 2 | 11439061 | 5719530 | 25.72 | $F^{(2,9)} = 4.26$ |
| Residual Error | 9 | 2001480 | 222386.7 | | |
| Total | 11 | 13440541 | | | |

Since our computed F is larger than the table F, we reject the hypothesis that X and Y are unrelated.

c) $X_1 = 79$, $X_2 = 200$

(My way) $\hat Y = 631.31 - 5.232(79) + 0.2351(200) = 631.31 - 413.33 + 47.02 = 265.00$

(Your way) $\hat Y = 6313.71 - 52.324(79) + 2.3503(200) = 6313.71 - 4133.60 + 470.06 = 2650.17$

d) We need to find $s_e$. The best way to do this is to do an ANOVA or remember that $s_e^2 = \frac{SSE}{n-k-1}$, and that you got $SSE = 13440541 - 11439061 = 2001480$, so $s_e^2 = \frac{SSE}{n-k-1} = \frac{2001480}{9} = 222386.67$ and $s_e = 471.6$. $t^{(n-k-1)}_{.025} = t^{(9)}_{.025} = 2.262$. The outline says that an approximate confidence interval is $\mu_{Y_0} = \hat Y_0 \pm t\frac{s_e}{\sqrt n} = 2650.17 \pm 2.262\frac{471.6}{\sqrt{12}} = 2650 \pm 308$ and an approximate prediction interval is $Y_0 = \hat Y_0 \pm t\,s_e = 2650.17 \pm 2.262(471.6) = 2650 \pm 1067$.
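The two approximate intervals differ only in whether $s_e$ is divided by $\sqrt n$, which a few lines of Python make explicit. This is a minimal sketch with hypothetical variable names; the inputs $SSE = 2001480$, $\hat Y_0 = 2650.17$ and $t = 2.262$ are copied from the text, and the formulas are the outline's approximations rather than the exact multiple-regression intervals.

```python
import math

sse = 2001480.0   # residual sum of squares from the ANOVA above
n, k = 12, 2      # observations and independent variables
t_crit = 2.262    # t(.025) with n - k - 1 = 9 DF, copied from the text
y_hat = 2650.17   # predicted sales at price 79, promotion 200

s_e = math.sqrt(sse / (n - k - 1))

# Approximate confidence interval for the mean response.
ci_half = t_crit * s_e / math.sqrt(n)
# Approximate prediction interval for a single new observation.
pi_half = t_crit * s_e

print(f"s_e = {s_e:.1f}")
print(f"confidence interval: {y_hat:.0f} +/- {ci_half:.0f}")
print(f"prediction interval: {y_hat:.0f} +/- {pi_half:.0f}")
```

The prediction interval is wider by a factor of $\sqrt{12} \approx 3.46$, which is why it comes out at roughly $\pm 1067$ against $\pm 308$.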
e) We can copy the Analysis of Variance in the question:

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 9772621 | 9772621 | 26.65 | 0.000 |
| Residual Error | 10 | 3667664 | 366766 | | |
| Total | 11 | 13440285 | | | |

But we just got

| Source | DF | SS | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Regression | 2 | 11439061 | 5719530 | 25.72 | $F^{(2,9)} = 4.26$ |
| Residual Error | 9 | 2001480 | 222386.7 | | |
| Total | 11 | 13440541 | | | |

Let's use our SST and itemize this as

| Source | DF | SS | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| X1 | 1 | 9772621 | 9772621 | 43.94 | $F^{(1,9)} = 5.12$ |
| X2 | 1 | 1666440 | 1666440 | 7.49 | $F^{(1,9)} = 5.12$ |
| Residual Error | 9 | 2001480 | 222386.7 | | |
| Total | 11 | 13440541 | | | |

The appropriate F to look at is opposite X2. Since 7.49 is above the table value, reject the null hypothesis of no relationship. Yes, the added variable helped.

I also ran this on the computer.

Regression Analysis: sales versus price, promotion

The regression equation is
sales = 6314 - 52.3 price + 2.35 promotion

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 6313.6 | 812.9 | 7.77 | 0.000 |
| price | -52.324 | 8.404 | -6.23 | 0.000 |
| promotio | 2.3507 | 0.8584 | 2.74 | 0.023 |

S = 471.5   R-Sq = 85.1%   R-Sq(adj) = 81.8%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 11439515 | 5719757 | 25.73 | 0.000 |
| Residual Error | 9 | 2000770 | 222308 | | |
| Total | 11 | 13440285 | | | |

| Source | DF | Seq SS |
|---|---|---|
| price | 1 | 9772621 |
| promotion | 1 | 1666894 |

7. The Lees present the following data on college students' summer wages vs. years of work experience, blocked by location.

| Region | Years of Work Experience: 1 | 2 | 3 |
|---|---|---|---|
| 1 | 16 | 19 | 24 |
| 2 | 21 | 20 | 21 |
| 3 | 18 | 21 | 22 |
| 4 | 14 | 21 | 26 |

a. Do a 2-way ANOVA on these data and explain what hypotheses you test and what the conclusions are. (9) (Or do a 1-way ANOVA for 6 points.) The following column sums are done for you: $\sum x_1 = 69$, $\sum x_2 = 81$, $n_1 = 4$, $n_2 = 4$, $\sum x_1^2 = 1217$ and $\sum x_2^2 = 1643$. So $\bar x_1 = 17.25$ and $\bar x_2 = 20.25$.
b. Do a test of the equality of the means in columns 1 and 3 assuming that the columns are random samples from Normal populations with equal variances. (4)
c. Assume that columns 1 and 3 do not come from a Normal distribution and are not paired data and do a test for equal medians. (4)
d. Test the following data for uniformity. $n = 20$. (6)

| Category | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Numbers | 0 | 2 | 0 | 10 | 8 |

Solution: a) This problem was on the last hour exam. 2-way ANOVA (blocked by region). 's' indicates that the null hypothesis is rejected.

| Region | Exper 1 $x_1$ | Exper 2 $x_2$ | Exper 3 $x_3$ | sum $\sum x_{i.}$ | count $n_i$ | mean $\bar x_{i.}$ | Sum of squares $SS$ | $\bar x_{i.}^2$ |
|---|---|---|---|---|---|---|---|---|
| 1 | 16.0 | 19.0 | 24.0 | 59.0 | 3 | 19.6667 | 1193 | 386.78 |
| 2 | 21.0 | 20.0 | 21.0 | 62.0 | 3 | 20.6667 | 1282 | 427.11 |
| 3 | 18.0 | 21.0 | 22.0 | 61.0 | 3 | 20.3333 | 1249 | 413.44 |
| 4 | 14.0 | 21.0 | 26.0 | 61.0 | 3 | 20.3333 | 1313 | 413.44 |
| Sum | 69.0 | 81.0 | 93.0 | 243.0 | 12 | 20.25 | 5037 | 1640.78 |
| $n_j$ | 4 | 4 | 4 | 12 | | | | |
| $\bar x_{.j}$ | 17.25 | 20.25 | 23.25 | 20.25 | | | | |
| $SS$ | 1217 | 1643 | 2177 | 5037 | | | | |
| $\bar x_{.j}^2$ | 297.56 | 410.06 | 540.56 | 1248.19 | | | | |

From the above $\sum x = 243$, $n = 12$, $\bar x = \frac{\sum x}{n} = \frac{243}{12} = 20.25$, $\sum x_{ij}^2 = 5037$, $\sum \bar x_{i.}^2 = 1640.78$ and $\sum \bar x_{.j}^2 = 1248.19$.

$SST = \sum x_{ij}^2 - n\bar x^2 = 5037 - 12(20.25)^2 = 5037 - 4920.75 = 116.25$

$SSC = \sum n_j\bar x_{.j}^2 - n\bar x^2 = 4(1248.19) - 12(20.25)^2 = 4992.76 - 4920.75 = 72$. This is SSB in a one-way ANOVA.

$SSR = \sum n_i\bar x_{i.}^2 - n\bar x^2 = 3(1640.78) - 12(20.25)^2 = 4922.34 - 4920.75 = 1.59$

($SSW = SST - SSC - SSR \approx 42.67$)

| Source | SS | DF | MS | F | $F_{.05}$ | $H_0$ |
|---|---|---|---|---|---|---|
| Rows (Regions) | 1.59 | 3 | 0.53 | 0.075 | $F^{(3,6)} = 4.76$ ns | Row means equal |
| Columns (Experience) | 72.00 | 2 | 36.00 | 5.062 | $F^{(2,6)} = 5.14$ ns | Column means equal |
| Within (Error) | 42.67 | 6 | 7.112 | | | |
| Total | 116.25 | 11 | | | | |

The column F of 5.062 falls just short of the table value of 5.14, so at the 5% level the results characterized by years of experience (column means) are not quite significantly different. This agrees with the p-value of 0.052 in the computer version. The row (region) means are nowhere near significantly different.

Note that if you did this as a 1-way ANOVA, the SS and DF in the Rows line in the table would be added to the Within line.

Computer version:

Two-way ANOVA: C40 versus C41, C42

Analysis of Variance for C40

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| C41 | 3 | 1.58 | 0.53 | 0.07 | 0.972 |
| C42 | 2 | 72.00 | 36.00 | 5.06 | 0.052 |
| Error | 6 | 42.67 | 7.11 | | |
| Total | 11 | 116.25 | | | |

b) The data that we can use is repeated here: $\bar x_1 = 17.25$, $s_1^2 = \frac{1217 - 4(17.25)^2}{3} = 8.91667$, $n_1 = 4$, $\bar x_3 = 23.25$, $s_3^2 = \frac{2177 - 4(23.25)^2}{3} = 4.91667$, $n_3 = 4$.
The hypotheses are $H_0: \mu_1 = \mu_3$ and $H_1: \mu_1 \ne \mu_3$.

Test Ratio Method: $d = \bar x_1 - \bar x_3 = 17.25 - 23.25 = -6.00$, $DF = n_1 + n_3 - 2 = 4 + 4 - 2 = 6$, $\alpha = .05$.

$$\hat s_p^2 = \frac{(n_1-1)s_1^2 + (n_3-1)s_3^2}{n_1+n_3-2} = \frac{3(8.91667) + 3(4.91667)}{6} = \frac{26.75 + 14.75}{6} = 6.91667$$

$$s_d = \hat s_p\sqrt{\frac{1}{n_1} + \frac{1}{n_3}} = \sqrt{6.91667\left(\frac14 + \frac14\right)} = \sqrt{6.916667(0.5)} = \sqrt{3.458333} = 1.85966$$

$t^{(6)}_{.025} = 2.447$. Our hypotheses are $H_0: \mu_1 = \mu_3$, $H_1: \mu_1 \ne \mu_3$, or $H_0: D = 0$, $H_1: D \ne 0$, where $D = \mu_1 - \mu_3$.

$t = \frac{d - 0}{s_d} = \frac{-6.00 - 0}{1.85966} = -3.226$, so reject the null hypothesis.

Of course, you could also do this with a critical value, $d_{cv} = D_0 \pm t\,s_d = 0 \pm 2.447(1.85966)$, or a confidence interval, both of which should give you the same answer.

c) The null hypothesis is $H_0$: Columns come from the same distribution, or medians are equal. The data are repeated in order. The second number in each column is the rank of the number among the 8 numbers in the two groups.

| Column 1 | rank | Column 3 | rank |
|---|---|---|---|
| 14 | 1 | 21 | 4.5 |
| 16 | 2 | 22 | 6 |
| 18 | 3 | 24 | 7 |
| 21 | 4.5 | 26 | 8 |
| Sum | 10.5 | | 25.5 |

Since this refers to medians instead of means and if we assume that the underlying distribution is not Normal, we use the nonparametric (rank test) analogue to comparison of two sample means of independent samples, the Wilcoxon-Mann-Whitney Test. Note that data is not cross-classified so that the Wilcoxon Signed Rank Test is not applicable.

$H_0: \eta_1 = \eta_3$, $H_1: \eta_1 \ne \eta_3$. We get $T_L = 10.5$ and $T_U = 25.5$. Check: Since the total amount of data is $4 + 4 = 8 = n$, $10.5 + 25.5$ must equal $\frac{n(n+1)}{2} = \frac{8(9)}{2} = 36$. They do.

For a 5% two-tailed test with $n_1 = 4$ and $n_2 = 4$, Table 6 says that the critical values are 11 and 25. We accept the null hypothesis in a 2-sided test if the smaller of the two rank sums lies between the critical values. The lower of the two rank sums, $W = 10.5$, is not between these values, so reject $H_0$.

d) This is basically Problem E11. This is the problem I used to use to introduce Kolmogorov-Smirnov. I stopped because everyone seemed to assume that all K-S tests were tests of uniformity.

Five different formulas are used for a new cola. Ten tasters are asked to sample all five formulas and to indicate which one they preferred. The sponsors of the test assume that equal numbers will prefer each formula. This is the assumption of uniformity. Instead none prefer Formulas 1 and 3; 1 person prefers Formula 2; 5 people prefer Formula 4 and 4 people prefer Formula 5. Test the responses for uniformity.

Solution: $H_0$: Uniformity, or $H_0: p_1 = p_2 = p_3 = p_4 = p_5$, where $p_i$ is the proportion that favor cola Formula $i$. Since uniformity means that we expect equal numbers in each group, we can fill the $E$ column with fours or just fill the next column with $p_i = \frac15$.

| Cola | $O$ | $O/n$ | $F_o$ | $E$ | $E/n$ | $F_e$ | $D$ |
|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 4 | .2 | .2 | .2 |
| 2 | 2 | .1 | .1 | 4 | .2 | .4 | .3 |
| 3 | 0 | 0 | .1 | 4 | .2 | .6 | .5 |
| 4 | 10 | .5 | .6 | 4 | .2 | .8 | .2 |
| 5 | 8 | .4 | 1.0 | 4 | .2 | 1.0 | 0 |
| Total | 20 | 1.0 | | 20 | 1.0 | | |

The maximum deviation is 0.5. From the Kolmogorov-Smirnov table for $n = 20$, the critical values are

| $\alpha$ | .20 | .10 | .05 | .01 |
|---|---|---|---|---|
| CV | .232 | .265 | .294 | .352 |

If we fit 0.5 into this pattern, it must have a p-value of less than .01, or we may simply note that if $\alpha = .05$, 0.5 is larger than .294, so we reject $H_0$. Can you see why it would be impossible to do this problem by the chi-squared method?
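The Kolmogorov-Smirnov table above is just a running comparison of two cumulative distributions, which can be sketched in a few lines of Python. This is an illustrative check rather than part of the key: the variable names are hypothetical, and the 5% critical value .294 is copied from the text rather than computed.

```python
# Observed counts for cola formulas 1 through 5 (n = 20).
observed = [0, 2, 0, 10, 8]
n = sum(observed)
k = len(observed)
critical_value = 0.294  # K-S table value for n = 20, alpha = .05 (from the text)

running_count = 0
d_max = 0.0
for i, count in enumerate(observed, start=1):
    running_count += count
    f_obs = running_count / n   # cumulative observed proportion, F_o
    f_exp = i / k               # cumulative expected proportion under uniformity, F_e
    d_max = max(d_max, abs(f_exp - f_obs))

reject = d_max > critical_value
print(f"maximum deviation D = {d_max:.3f}, reject H0: {reject}")
```

The largest gap occurs at the third category, where 60% of responses are expected cumulatively but only 10% have been observed.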