Teaching and Learning Physical Science in Urban Secondary Schools:

Assessing the Assessments

Session Organizer and Chair: Joan Whipp, Associate Professor of Education, Marquette University

Discussant: David Hammer, Associate Professor of Physics and Education, University of Maryland

Panel of Presenters:

Michele Korb, Clinical Assistant Professor of Physics and Education, Marquette University

Mike Politano, Assistant Professor of Physics, Marquette University

Mel Sabella, Associate Professor of Physics, Chicago State University

Kristi Gettelman, Physical Science Teacher, Wisconsin Conservatory of Lifelong Learning, Milwaukee WI

Kelly Kushner, Physical Science Teacher, Shorewood Intermediate School, Shorewood WI

Megan Ferger, Physical Science Teacher, Roosevelt Middle School, Milwaukee WI

Overview

In the current testing climate brought on by No Child Left Behind, much has been written about the negative effects of high-stakes testing on teaching and learning (Linn, Baker & Betebenner, 2002) and the great need for, and importance of, using ongoing and multiple assessment measures to evaluate instructional programs, student learning and teacher development (Shepard, 2000). This interactive symposium explores the complexity of doing so in a teacher development project aimed at improving the teaching practices of middle and high school physical science teachers and the learning of their students in science. Drawing from multiple assessment measures, we describe the conflicting findings from our study on what happened to both teachers and their students in this project and the limitations and strengths of the various assessment instruments we used for evaluation. At the same time, we hope to demonstrate the rich and complex picture of “what happened” that the ongoing and multiple assessments provided.

Connection to Literature and Theoretical Frameworks

There have been numerous calls for reform in science teaching during the last decade (National Commission on Mathematics and Science Teaching for the 21st Century, 2000; National Research Council, 1996, 1999; National Center for Education Statistics, 1999). These calls for reform emphasize the need for what are called inquiry-based, learner-centered or constructivist teaching practices, which have been outlined by many (Driscoll, 2005; Herrington & Oliver, 2000; Jonassen & Land, 2000; Land & Hannafin, 2000; McCombs & Whisler, 1997; Windschitl, 2002). Such practices include use of: 1) complex, real-world situations where students actively construct meaning, linking new information with what they already know; 2) problem-based activities; 3) varied ways for students to represent knowledge; 4) opportunities for knowledge-building from a variety of perspectives; 5) collaborative learning activities; 6) activities that develop students’ metacognitive and self-regulation processes; 7) emphasis on critical thinking rather than just getting “right answers”; 8) support for student reflection on their learning processes; and 9) ongoing assessments that provide continual feedback on the development of student thinking.

Physics education researchers have been leaders in answering the call for science teaching reform through a combination of research on student learning and use of that research to construct instructional materials that promote conceptual change and learner-centered teaching. Some examples of physics research on student learning include the identification and addressing of student difficulties (Shaffer & McDermott, 2005), the development of a theoretical framework to understand student knowledge (Redish, 2004), and the understanding of student epistemologies (Hammer, 1994). Such research has been used to guide the development of a number of nationally known reform-based curriculum materials, including Physics by Inquiry (McDermott and the Physics Education Group, 1996), Modeling Instruction in High School Physics (Hestenes, 1996), and Constructing Physics Understanding in a Computer-Supported Learning Environment (Goldberg, 1997). Development of these materials is based on a cycle of research into student learning, development of curricula, implementation and then assessment of student learning. Up until now, however, the focus of this research and development has been largely in high school and college physics classes. Very little of this work has been attempted with middle or early high school teachers who teach physical science and who often lack strong content background in physics (Ingersoll, 1999). Even less of this work has been attempted with middle or early high school teachers who work in urban settings.

Context and Project Description

For two years (2004-2006), eighteen middle and high school physical science teachers from ten urban Milwaukee middle and high schools participated in a U.S. Department of Education Title II (Improving Teacher Quality) project, Modeling in Physical Science for Urban Teachers Grades 6-10, that included two-week summer modeling institutes, four half-day follow-up modeling workshops, an online community, and classroom mentoring with a Marquette science educator. The curriculum for the summer institutes and follow-up activities was adapted from the Physical Science Modeling Workshop, a curriculum for middle school teachers that was developed at Arizona State University and based on the better-known Modeling Instruction in High School Physics.

The project aimed at increasing teachers’ content knowledge of physical science, improving their instructional strategies (discourse management, assessment, content organization, inquiry methods, cooperative learning methods, and use of classroom technologies) and increasing collaboration among physical science teachers in Milwaukee urban schools. During the summer institutes and Saturday workshops, taught by a high school physics teacher trained in modeling at Arizona State, teachers had opportunities to work in teams on specific applications of the modeling curriculum to their particular grade level and school context as well as to share materials, methods, and reflections on progress in implementing modeling in their classrooms. In addition, throughout the life of the project, networking and support among project participants were encouraged through ongoing dialogue in an electronic community and on-site mentoring by a university science educator who visited each participating teacher’s classroom at least once each semester for clarification, reinforcement, and context-specific adaptations of the professional development activities.

In order to evaluate the effectiveness of this project, we used a variety of methods to assess a number of different outcomes: pre- and post-test scores of teachers and their students, surveys, interviews, online discussions, classroom observations, and action research studies. As we looked at results from the assessment of content knowledge in both teachers and their students, using tools like the Physical Science Concept Inventory (PSCI), we saw small changes from pre- to post-instruction. Despite this, most of the teachers involved in the program were able to cite major changes in both their teaching and in what was happening to the students in their classes. These changes were cited by the teachers in interviews and in responses to self-assessment surveys given to the teachers. The discrepancy between these two very different types of assessment measures underlines the importance of utilizing multiple means of assessment in the evaluation of any professional development project.

Summary of Individual Presentations on Modes of Inquiry and Findings

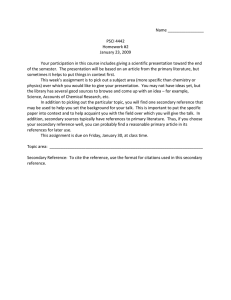

#1 Using Pre and Post Test Scores to Assess Teacher and Student (Mike Politano, Assistant Professor of Physics, Marquette University)

Changes in both teacher and student understanding of physical science and related math skills were evaluated with pre and post administrations of the Physical Science Concept Inventory (PSCI). The PSCI is a 25-item multiple-choice diagnostic that assesses understanding of topics in physical science. It consists of released questions from the Trends in International Mathematics and Science Study (TIMSS), the National Assessment of Educational Progress (NAEP), the Classroom Test of Scientific Reasoning (Lawson, 1999), and other research-based instruments.

Different teachers used the modeling method to different extents and some did not cover all topics covered in the PSCI. Consequently, the expectation was that there would be a gain in scores as a result of participating in the program but that gain would vary from class to class. In addition, we might have expected that because this was an early implementation of these new materials, teacher and student gains on the PSCI would be modest.

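The gain was measured using the normalized gain developed by Hake (1998), which is calculated as

$$\langle g \rangle = \frac{\%\,\text{post} - \%\,\text{pre}}{100\% - \%\,\text{pre}}$$

where %pre and %post are the average pre-test and post-test scores.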

The PSCI was given to the participating teachers in the program as well as to the students in the middle school classes. To evaluate the effectiveness of the modeling materials for the middle school students, the PSCI was given pre/post two times during the grant period. In the second year of the study, the only teachers who administered the PSCI were those who implemented the modeling method with their students at some significant level.

Probably because the baseline scores of the teachers were quite high (73%), their gains on the test were not significant. The students taking the PSCI also had relatively small gains (4% to about 40% in the final implementation). Looking at the overall group, the scores went from about 30% correct to 36% in the final implementation. Interpreting data from multiple-choice tests is often extremely challenging and care must be exercised in interpreting the results. It is often unclear whether improved performance on the instrument is an indicator of improved conceptual understanding or whether a lack of improvement on the instrument indicates a lack of improvement in conceptual understanding.
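As an illustration only, the Hake (1998) normalized gain, (post − pre) / (100 − pre) with scores in percent, can be applied to the approximate overall student scores quoted above (about 30% before and 36% after the final implementation). This is a minimal sketch: the function name `hake_gain` is hypothetical, and the inputs are the rounded figures from the text, not the project's raw data.

```python
def hake_gain(pre_percent: float, post_percent: float) -> float:
    """Normalized gain <g> = (post - pre) / (100 - pre), scores in percent.

    Illustrative helper (name is an assumption, not from the project).
    """
    if not 0.0 <= pre_percent < 100.0:
        # A pre-test average of 100% leaves no room for gain (division by zero).
        raise ValueError("pre-test average must be in [0, 100)")
    if not 0.0 <= post_percent <= 100.0:
        raise ValueError("post-test average must be in [0, 100]")
    return (post_percent - pre_percent) / (100.0 - pre_percent)


# Rounded overall student averages quoted in the text (approximate values).
overall_gain = hake_gain(30.0, 36.0)
print(f"normalized gain: {overall_gain:.2f}")  # prints "normalized gain: 0.09"
```

Under these rounded inputs the students closed roughly 9% of the gap between their starting score and a perfect score, which is consistent with the description of the gains as small.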

The small gains from pre- to post-test, for the majority of classes, were surprising to those of us involved in the project because they conflicted with our classroom observations of the teachers and their students as well as our interviews with the teachers over the course of the project. These conflicting findings suggested the limitations of simply using a pre and post diagnostic test like the PSCI as a measure of what happened in this project. First, teachers reported that many of their urban students who eventually became enthusiastic about learning through modeling have serious reading problems and tune out when faced with any standardized objective test that requires reading skills. Second, teachers in this project struggled with initial implementation of modeling due to student resistance, although they were successful in implementing modeling during the project’s second year. This slow start suggested that by the end of the project, teachers were actually in the early stages of implementation, which could explain their students’ modest gains on the diagnostic. Finally, unlike the well-tested Force Concept Inventory (Hake, 1998; Hestenes, Wells & Swackhamer, 1992), the PSCI is a new instrument with less data supporting its reliability.

#2 Using Self-Report Instruments to Assess Improvements in Teaching and Learning (Mel Sabella, Associate Professor of Physics, Chicago State University)

To get further perspective on how the participating teachers were changing their teaching practices and how they were thinking and feeling about those changes, we used a variety of surveys, interviews, and reflection questions throughout the project. For example, several surveys given to the participating teachers provided them with the opportunity to self-assess their implementation of the new materials.

One of these instruments was an attitude survey that drew from the Maryland Physics Expectations Survey (Redish, Steinberg & Saul, 1998), the Epistemological Beliefs Assessment for Physical Science (White, Elby, Frederiksen & Schwarz, 1999), and the Epistemological Questionnaire (Schommer, 1990). These instruments were used to better understand what teachers valued regarding the program. Administered as a pre- and post-test, the instrument provided evidence on how teachers and students changed as a result of participating in the physical science modeling project. We found that there was little change toward expert-like views after approximately one year of implementing the modeling method. This is somewhat to be expected since these attitudes are very difficult to change. Other researchers who have studied the evolution of student attitudes during the university introductory physics class have observed that student attitudes tended to diverge from the attitudes and expectations of experts (Redish, Saul & Steinberg, 1998).

Despite these modest changes in the performance on the PSCI and Attitudes Surveys, interviews and online reflections indicated that teachers were seeing important changes in their classrooms. These included students being more active in class, students gaining confidence in their abilities, etc. Teachers believed that the use of the modeling method benefited their students by helping to improve their understanding of the underlying concepts and the connection between mathematics and physical science. Teachers also remarked that the modeling approach helped them create an interactive learning environment in which they were in a position to conduct ongoing assessment of their students’ knowledge and conceptual development.

Although self-report measures can be limited by themselves, taken together they can often elaborate and enrich other data sources. In this project, the surveys, interviews and online reflections also indicated that many of the participating teachers were significantly adapting the modeling curriculum to fit their particular teaching contexts and students. Many of the middle school teachers, for example, reported how they were applying modeling not only in their physical science teaching but also in their teaching of chemistry, biology, and social science. In addition, many teachers reported significant adaptations of the modeling curriculum to fit the needs of their particular students, for example those with low reading skills. The fact that these teachers felt comfortable making these adaptations on their own initiative emerged as a major strength in this program.

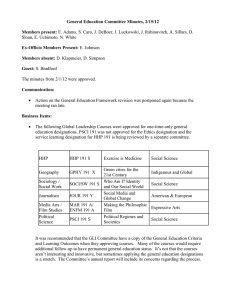

Note: The tables in the Appendix of this report provide detail on teacher performance on the various assessment instruments used with the participants in this project and discussed by the first two presenters.

#3 Using an Observation Tool to Assess Teaching Reform: The RTOP (Michele Korb, Assistant Clinical Professor of Education and Physics, Marquette University)

Based on current national science and mathematics standards for learner-centered, inquiry-based teaching, the Reformed Teaching Observation Protocol (RTOP), developed at Arizona State University, is a standardized observation assessment instrument that attempts to detect the degree to which science classroom instruction exhibits “reformed” or “constructivist” teaching methods. The instrument specifies a set of 25 scored, observable classroom behaviors or items related to lesson design and implementation, propositional and procedural content knowledge, communicative interactions and student/teacher relationships. The instrument has been widely studied and used and demonstrates high inter-rater reliability. High scores on the RTOP correlate well with increased student conceptual understanding of science in a number of studies (Lawson, Benford, Bloom, Carlson, Falconer, Hestenes, Judson, Piburn, Sawada, Turley & Wyckoff, 2002).

Throughout this project, I visited each of the participating teachers in their classrooms twice a year and completed the RTOP as part of my observation. Data from the RTOP observations indicated that over time there was a considerable increase in a number of behaviors that characterize reform-based instruction in these teachers, including use of instructional strategies and activities that respected students’ prior knowledge and preconceptions; lessons designed to engage students as members of a learning community; demonstration of a solid grasp of the subject matter content; encouragement of abstract thinking, student use of models, drawings, graphs, concrete materials, and manipulatives to represent phenomena; teacher questioning that triggered divergent modes of thinking; high proportion of student talk between and among students; climate of respect for what others had to say; encouragement of the active participation of students; teacher patience with students; and teacher acting as a resource person, supporting and enhancing student investigations.

The implementation of the RTOP seemed to be an important component of our modeling project and the professional development of the teachers. It provided teachers with a common language to talk about their teaching and to reflect on their changing teaching practices. However, the RTOP may not be as useful as a program evaluation tool since the teachers were only observed once a semester. It was difficult to use it as a basis for generalizing about the quality and quantity of teacher changes in this project.

#4 Using Action Research to Assess the Improvement of Teaching and Learning

(Megan Ferger, Kristi Gettelman, and Kelly Kushner, middle school physical science teachers)

We have been conducting action research on our implementation of modeling into our teaching of physical science concepts and principles of measurement. In our research, we have been addressing these questions: 1) How did our students’ understanding of physical science concepts and measurement principles change? 2) How, if at all, did our students’ attitudes toward science change? 3) In what ways, if any, has student engagement in the scientific process increased – i.e. making hypotheses, collecting and analyzing data, and forming conclusions to understand and explain physical science concepts and principles of measurement? Using both quantitative and qualitative methods of inquiry for our research, we have drawn from multiple data sources: pre and post testing of our students on the physical science and measurement concepts, our own classroom observation logs, student science logs, student attitude surveys, and student interviews.

Our data analysis indicates that: 1) Our students showed the greatest gains on standardized tests when they were taught with a blended approach of traditional and modeling methods; 2) Our students showed the greatest gains in their understanding of principles of matter (based on pre/post testing and our observation data); 3) Our students showed the least gains in their understanding and ability to apply advanced measurement principles (based on pre/post testing and observation data); 4) Student resistance to modeling was a barrier that needed to be addressed initially; our students were too used to being given right answers rather than thinking for themselves. Over time, however, student attitudes toward science became more positive. 5) Since we have implemented the modeling curriculum, student engagement in scientific processes has increased.

By conducting ongoing action research on our implementation of the modeling curriculum, we have: 1) developed a better understanding of the physical science concepts that we are teaching; 2) become more focused on student misconceptions and how to address those misconceptions in teaching; 3) gained a better understanding of how our students view science; 4) discovered how changing our practice could impact student learning; 5) become more conscious of our own instructional design and planning and how to use student work samples and classroom discussion to guide and inform planning; 6) become more aware of how we taught and how our students learned or didn’t learn science content; and 7) changed our focus from teacher-driven to student-driven instruction and assessment.

As we look forward to our future teaching in physical science, all of us plan to continue this process of action research to monitor student learning and inform our teaching. We will continue to use pre and post testing and other data sources as well as comprehensive data analysis to refine our teaching techniques that will impact our students’ learning. We also plan to present our research results to our colleagues so that more math and science teachers in our schools adopt the modeling curriculum. Finally, we are thinking about adapting modeling methods to teach a variety of science content, rather than just physical science and to use whiteboard presentations in other areas of curriculum to promote student-to-student questioning and understanding.

References

Driscoll, M. P. (2005). Psychology of learning for instruction, 3rd ed. Boston: Allyn & Bacon.

Goldberg, F. (1997). The CPU Project: Students in control of inventing physics ideas. Forum on Education, American Physical Society.

Hake, R. (1998). Interactive-engagement vs. traditional methods: A six-thousand-student survey of mechanics test data for introductory physics courses. American Journal of Physics, 66, 64-74.

Hammer, D. (1994). Epistemological beliefs in introductory physics. Cognition and Instruction, 12(2), 151-183.

Herrington, J. & Oliver, R. (2000). An instructional design framework for authentic learning environments. Educational Technology, Research and Development, 48(3), 23-48.

Hestenes, D. (1996). Modeling methodology for physics teachers. Proceedings of the International Conference on Undergraduate Physics Education, College Park.

Hestenes, D. (1997). Modeling methodology for physics teachers. In E. Redish & J. Rigden (Eds.), The changing role of the physics department in modern universities (Part II). American Institute of Physics, 935-957.

Hestenes, D., Wells, M. & Swackhamer, G. (1992). Force concept inventory. The Physics Teacher, 30, 141-151.

Ingersoll, R.M. (1999). The problem of underqualified teachers in American secondary schools. Educational Researcher, 28(2), 26-37.

Jonassen, D.H. & Land, S.M. (2000). Preface. In D.H. Jonassen & S.M. Land (Eds.), Theoretical Foundations of Learning Environments (pp. iii-ix). Mahwah, NJ: Erlbaum.

Krajcik, J.M., Marx, R., Blumenfeld, P., Soloway, E. & Fishman, B. (2000). Inquiry-based science supported by technology: Achievement among urban middle school students. Ann Arbor: University of Michigan Press.

Land, S.M. & Hannafin, M.J. (2000). Student-centered learning environments. In D.H. Jonassen & S.M. Land (Eds.), Theoretical Foundations of Learning Environments (pp. 1-23). Mahwah, NJ: Erlbaum.

Lawson, A.E. (1999). What should students learn about the nature of science and how should we teach it? Journal of College Science Teaching, 28(6), 401-411.

Lawson, A. E., Benford, R., Bloom, I., Carlson, M. P., Falconer, K. F., Hestenes, D. O., Judson, E., Piburn, M. D., Sawada, D., Turley, J., & Wyckoff, S. (2002). Evaluating College Science and Mathematics Instruction: A Reform Effort That Improves Teaching Skills. Journal of College Science Teaching, 31(6), 388-393.

Linn, R. L., Baker, E.L. & Betebenner, D.W. (2002). Accountability systems: Implications of requirements of the No Child Left Behind Act of 2001. Educational Researcher, 31(6), 3-16.

McCombs, B. L. & Whisler, J.S. (1997). The Learner-Centered Classroom and School: Strategies for Enhancing Student Motivation and Achievement. San Francisco: Jossey-Bass.

McDermott and the Physics Education Group at the University of Washington (1996). Physics by Inquiry. New York: John Wiley & Sons.

National Center for Education Statistics. (1999). Third International Mathematics and Science Study Report (TIMSS-R). Washington, DC: US Department of Education.

National Commission on Mathematics and Science Teaching for the 21st Century (2000). Before It’s Too Late. Jessup, MD: Education Publications Center.

National Research Council (1996). National Science Education Standards. Washington, DC: National Academy Press.

National Research Council (1999). Designing Mathematics or Science Curriculum Programs: A Guide for Using Mathematics and Science Education Standards. Washington, DC: National Academy Press.

Redish, E.F. (2004). A Theoretical Framework for Physics Education Research: Modeling student thinking. In E. F. Redish and M. Vicentini (Eds.), Proceedings of the International School of Physics "Enrico Fermi", Course CLVI. Amsterdam: IOS Press.

Redish, E.F., Steinberg, R. F. & Saul, J.M. (1998). American Journal of Physics, 66, 212-224.

Schommer, M. (1990). Effects of beliefs about the nature of knowledge on comprehension. Journal of Educational Psychology, 82, 498-504.

Shaffer, P.S. & McDermott, L.C. (2005). A research-based approach to improving student understanding of kinematical concepts. American Journal of Physics, 73(10), 921-931.

Shepard, L. A. (2000). The role of assessment in a learning culture. Educational Researcher, 29(7), 4-14.

Wells, M., Hestenes, D. & Swackhamer, G. (1995). A modeling method for high school physics instruction. American Journal of Physics, 63, 606-619.

White, B., Elby, A., Frederiksen, J., & Schwarz, C. (1999). The Epistemological Beliefs Assessment for Physical Science. Paper presented at the annual meeting of the American Educational Research Association, Montreal.

Windschitl, M. (2002). Framing constructivism in practice as the negotiation of dilemmas: An analysis of the conceptual, pedagogical, cultural, and political challenges facing teachers. Review of Educational Research, 72(2), 131-175.


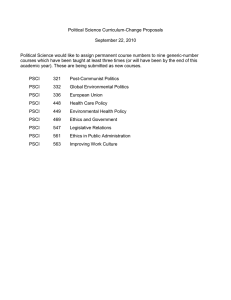

Appendix

Physical Science Concept Inventory

The PSCI is a multiple-choice diagnostic that assesses student understanding of topics in Physical Science. The inventory is based on an instrument constructed by the Physics Underpinnings Action Research Team; Arizona State University; June, 2000. Many questions on the PSCI come from standardized tests such as the Lawson Test of Scientific Reasoning (Lawson), the Chemistry Concept Inventory (Mulford and Robinson), the Trends in International Mathematics and Science Study (TIMSS), and the Energy Concept Inventory (Swackhamer et al.).

Student Performance on PSCI (% correct)

| Q # | Topic | baseline (N=213) | pretest (F04) (N=610) | posttest (S05) (N=395) | pretest (F05) (N=298) | posttest (S06) (N=262) |
|---|---|---|---|---|---|---|
| 1 | Mass conservation | 67% | 61% | 65% | 65% | 72% |
| 2 | Mass conservation | 64% | 55% | 59% | 55% | 71% |
| 3 | Volume by displacement | 31% | 30% | 33% | 34% | 40% |
| 4 | Volume by displacement | 22% | 23% | 28% | 27% | 37% |
| 5 | Volume, Proportions | 13% | 14% | 12% | 17% | 17% |
| 6 | Volume, Proportions | 10% | 15% | 15% | 15% | 16% |
| 7 | Control of variables | 40% | 32% | 32% | 31% | 31% |
| 8 | Control of variables | 31% | 25% | 30% | 26% | 29% |
| 9 | Graphing-extrapolation | 74% | 68% | 69% | 68% | 72% |
| 10 | Equation from situation | 35% | 31% | 26% | 32% | 36% |
| 11 | Equation from graph | 49% | 45% | 49% | 49% | 48% |
| 12 | Graphing-interpolation | 47% | 43% | 39% | 39% | 43% |
| 13 | Area | 47% | 46% | 58% | 44% | 56% |
| 14 | Mass comparisons | 53% | 51% | 55% | 55% | 53% |
| 15 | Mass-Vol Relationships | 19% | 20% | 18% | 22% | 29% |
| 16 | Conservation of Matter | 31% | 31% | 30% | 26% | 43% |
| 17 | Conservation of Matter | 23% | 22% | 23% | 16% | 32% |
| 18 | Kinetic Molecular Theory | 29% | 35% | 38% | 30% | 44% |
| 19 | Atomic Model | 14% | 16% | 15% | 12% | 22% |
| 20 | Conservation of Matter | 16% | 20% | 24% | 23% | 27% |
| 21 | Conservation of Matter | 25% | 25% | 25% | 23% | 31% |
| 22 | Heat Transfer | 5% | 5% | 6% | 4% | 7% |
| 23 | Heat Transfer | 12% | 14% | 14% | 9% | 23% |
| 24 | Heat Transfer | 10% | 10% | 11% | 8% | 16% |
| 25 | Heating and Boiling | 12% | 13% | 11% | 6% | 16% |
| | total % correct | 31% | 30% | 31% | 30% | 36% |

Attitudes Survey

Results on Attitudes Survey (old and new versions).

% favorable responses

Data

1. Learning science makes me change some of my ideas about how the physical world works.

2. This class is different than other science classes I have taken.

3. We spend more time in this class conducting experiments and discussing ideas than I thought we would.

4. I developed a better understanding of science by using the whiteboards to jot down my ideas and models.

5. If I came up with two different approaches to a scientific problem and they gave different answers, I would not worry about it.

6. "Understanding" science basically means being able to recall something you've read or been shown.

7. I feel like I learned a lot in this science class because of the type of instruction that was used.

8. Spending a lot of time (half an hour or more) working on a science problem is a waste of time.

9. If you are ever going to be able to understand something, it will make sense to you the first time you see it.

10. When it comes to science, most students either learn things quickly, or not at all.

11. I developed a better understanding of science by discussing my ideas with my classmates and my teachers.

12. Often, a scientific principle or theory just doesn’t make sense. In those cases, you have to accept it and move on, because not everything in science is supposed to make sense.

13. The most important part of being a good student of science is memorizing facts.

14. The most important part of being a good student of science is being able to learn by talking to classmates and conducting experiments.

15. Given enough time, almost everybody could learn to think more scientifically, if they really wanted to.

16. When I ask a question I wish my teacher would give me the answer. This would help me learn science.

17. I feel confident that I will succeed in my next science class.

18. I did better than I thought I would in my science class.

19. Only very few specially qualified people are capable of really understanding science.

20. I am capable of learning science.

21. A significant problem in my science courses is being able to memorize all the information I need to know.

A

21% 33% 8% 19% 0% 10%

70% 50% 44% 56% 75% 48%

51% 50% 52% 44% 75% 29%

22% 17% 8% 75% 50% 71%

71% 67% 48% 81% 100% 90%

53%

9%

B

12% 33%

28% 17%

19% 50%

Section

C

8%

8%

4%

D1

17% 16% 38%

E

0%

D2

68% 83% 52% 69% 75% 62%

70% 67% 64% 81% 75% 71%

45% 33% 48% 50% 100% 33%

60% 33% 60% 63% 75% 29%

38% 75% 33%

69% 50% 62%

71% 50% 44% 50% 100% 29%

45% 50% 16% 56% 75% 29%

38% 33% 12% 75% 75% 81%

28% 17% 12% 69% 50% 71%

53% 50% 64% 75% 100% 57%

38% 75% 48%

14% 33% 16% 69% 25% 76%

68% 50% 68% 44% 75% 57%

77% 33% 52% 63% 75% 71%

38%

Pre

(9/04, overall)

63%

31%

43%

56%

62%

42%

39%

32%

61%

24%

22. I am thinking about studying science when I get to college.

25% 50% 52% 63% 50% 43%

Average favorable Response

45% 42% 34% 58% 66% 52%

The Attitudes Survey consists of statements with which students are asked whether they agree or disagree on a five-point Likert scale. Statements from the Attitudes Survey were obtained from the Maryland Physics Expectations Survey (MPEX, University of Maryland PERG), the Epistemological Beliefs Assessment for Physical Science (EBAPS, Elby) and the Epistemological Questionnaire (M. Schommer).
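The appendix does not state how a "favorable" response was scored. One common convention for surveys of this kind, assumed here purely for illustration, is to count a response as favorable when it falls on the expert-like side of the scale: agree or strongly agree for statements experts endorse, and disagree or strongly disagree for statements experts reject. The sketch below uses a hypothetical function name and made-up responses, not project data.

```python
def percent_favorable(responses: list[int], favorable_is_agree: bool) -> float:
    """Percent of five-point Likert responses on the favorable side.

    responses: integers 1..5 (1 = strongly disagree, 5 = strongly agree).
    favorable_is_agree: True if agreeing is the expert-like answer.
    Neutral responses (3) are never counted as favorable.
    This scoring rule is an assumption, not taken from the report.
    """
    if not responses:
        raise ValueError("at least one response is required")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    if favorable_is_agree:
        favorable = sum(1 for r in responses if r >= 4)
    else:
        favorable = sum(1 for r in responses if r <= 2)
    return 100.0 * favorable / len(responses)


# Made-up responses from eight students to a statement such as item 13
# ("The most important part of being a good student of science is
# memorizing facts"), where disagreement is assumed to be favorable.
example = [5, 4, 3, 2, 1, 4, 2, 1]
print(percent_favorable(example, favorable_is_agree=False))  # prints 50.0
```

Under this convention, a class can show a low percentage of favorable responses even when few students actively hold the unfavorable view, because neutral answers count against the total.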

32%

Workshop Survey 1.1

At the end of the initial inservice workshop (Summer 04), teachers were given a survey to evaluate the workshop and their progress in the workshop. The ratings given by the participants are summarized in the table below.

Ratings on Workshop Survey

Rate your overall

Experience. (1-10)

How well were the workshop materials structured into models?

How clear was the modeling instructional cycle?

Rating:

# of participants:

Rating:

# of participants:

Rating:

10

4

5

(very well)

8

5

(very clear)

9 8 7

3 5 3

4 3 2

7

4

1

3

0

2

5

1

1

(not well)

0

Were your expectations for the workshop fulfilled?

Please rate how beneficial each of the following components of the

Modeling Curriculum were in helping you improve as a teacher.

How confident are you in using the technology presented

(SimCalc, Graphical

Analysis, etc.)?

# of participants:

Rating:

Rating:

Laboratory activities

Whiteboard preparation and discussions

Printed materials

Group work with peers during Workshop

Rating:

6

5

(exceeded expectations)

7

5 (very beneficial)

8

12

7

7

5 (very confident)

6

5 4 0

4 3 2

4

4

8

4

7

7

2

5

3

0

0

2

2

0

2

0

0

0

0

1

(not at all clear)

0

1

(fell below expectations)

0

1 (not beneficial)

0

0

0

0

4 3 2 1 (not at all

7 1 confident)

0

The table below shows the comments that participants provided on the workshop survey. Responses have been paraphrased for easier reading. Each response is separated by a “;”

Comments on Workshop Survey

Comment question

How well were the workshop materials structured into models?

In what ways, if any, do you see models contributing to the improvement of physical science and mathematics instruction?

How clear to you was the instructional modeling cycle in the current workshop?

In what ways, if any, do you see this cycle contributing to the improvement of your instruction?

Paraphrased responses: practice in scaffolding; need more practical applications; most worked great-some things could be improved; made sense; well structured materials; good group discussion; good model for measurement; organization of materials by objective easier to engage students; models are complex for middle school teacher; the math will help the students solve problems and get data; students teaching each other; addressing misconceptions; learning problem solving; makes people really understand; helps students grasp concepts; will help; cross curriculum teaching; improves engagement; more inclusive classroom; cross curriculum teaching; greater retention; student centered; good for underlining key concepts; building on concepts will help students understand; use in 7th grade helped with questioning; some parts better than others; good review of content and methodology; instructors-curriculum-activities were awesome; logical sequence of lessons; would like to see how modeling fits in a 50 min class-or a week of class application; clear but slow paced; sometimes did not see where curriculum was going until after-not always a bad thing; provides resources; helps with rusty material; students working together will learn more; help students with foundations; allowing and encouraging involvement; helps create flow between topics; better view of teaching; adds fun; more hands on; allow more connection between facts; use of graphical analysis-computer programs; better questioning-better understanding of misconceptions; solidifies key concepts; good to investigate one topic thoroughly

In what specific ways, if any, has the workshop exceeded your expectations?

Great materials; materials were awesome-lessons made things really clear; helped me increase my knowledge of modeling; free lab equipment to start school; demonstrated new teaching method that is practical and can be implemented; nice selection of people involved; more useful information; is what I expected; great tips-modeling instruction; I am much more confident in my physical science knowledge-helpful for conveying concepts to students; refresher on content was great

In what specific ways, if any, has the workshop fallen short of your expectations?

Spent a lot of time bringing colleagues up to speed; none; expected more time spent on showing us how to teach the content than us learning the content; none; slow pace-off track too often; pacing was sporadic; somewhat slow pace-but this was due to wide range of backgrounds; too much time on teaching-felt that more curriculum should have been covered with more modeling applications; a bit slow paced; did not cover as much content as expected-lots of time spent on answering questions about teaching; moved too slowly and did not cover all of the expected modeling sections

In what ways, if any, has the workshop affected your views about physical science or mathematics?

Clarified how math is the language of science; views of math are enhanced-but did not help with applying physical science to life; given me more confidence in doing math; I like deriving equations rather than memorizing; great ideas on integrating math into science teaching; made it seem more intuitive; increased interest and knowledge; none-believe that students will become engaged and motivated; fun to take and to teach; I could do this; cemented my knowledge and confidence; great fun

What changes, if any, has the workshop convinced you are necessary to bring about in your teaching practice?

Fostering collegiality between students; involve students more in applying math to everything model it; let students discover and share more; white boards; more discussion and working together; less emphasis on the math and more on making sure students can explain in words; ready to bring these materials to classroom; fun and hands on; utilize methods that more actively engage students-blend resources-texts-labs-activities-white boarding; infusion of math relationships with curriculum; more student derived knowledge; will incorporate more student driven labs; excited about trying modeling; using more discovery than lecture; I'll use it

Comment on the workshop leaders and their role in accomplishing the goals of the workshop.

Dynamic-encouraging-recognized strengths and weaknesses-dedicated; rushed through curriculum-unaware of the students-unable to keep up; very effective-knew their stuff; great instructors-could move faster; too much time spent on classroom management and other topics not related to modeling-some participants monopolized the class; teachers were awesome-teaching experience was really beneficial; excellent leadership; leaders very structured in presenting materials-helpful; clear-flowing lecture; excellent job of managing classroom-intelligent and independent-kept class on task-complemented each other; good people doing a good and difficult job; excellent knowledge-preparation-reliability-and fun; great educators-did not feel they understood urban students or the urban teaching environment; great instructors-knowledgeable and experienced; instructors were enthusiastic-things seemed disorganized at first-but by the end all materials and activities went smoothly-more attention could be paid to staying on track; personal-competent-did a great job

Please provide other comments and/or recommendations for future workshops.

Blue water and unsqueaky stools; use instructors that are familiar with the audience and their students; learned a lot; don't let participants talk in lab-move on right away to get through more material; less emphasis on teaching the content to us; more on teaching us how to teach; excellent workshops; new stools-faster pace; treat adults as adults-minimize lecturing if we get off task-less anecdote-more instruction-got a bit behind-allow more time for teachers to communicate with each other and share ideas; great workshop with great instructors; thank you; would like to see how modeling fits in a 50 min class-or a week of class application; instructors understood that time is valuable; look forward to continuing

Participant Experience Survey 1.1

The Participant Experience Survey was given before the summer in-service (7-04) and again at the end of the program (6-06). The data are summarized in a series of tables that give the percentage of responses at each administration. Seventeen teachers responded to the survey in 2004 and 11 responded in 2006.

Current Classroom Characteristics

Percentage of teachers giving each response, 7-04 / 6-06.

How often do you ask students to work in groups in your class?
Regularly: 24% / 27%; Frequently: 65% / 65%; Sometimes: 12% / 9%; Seldom: 0% / 9%; Never: 0% / 0%

How often do you ask different groups to discuss their ideas in class?
Regularly: 24% / 18%; Frequently: 29% / 36%; Sometimes: 41% / 36%; Seldom: 6% / 9%; Never: 0% / 0%

How often do you use (student-size, portable) whiteboards in conjunction with group work?
Regularly: 0% / 18%; Frequently: 6% / 9%; Sometimes: 18% / 64%; Seldom: 12% / 0%; Never: 65% / 9%

How often do you lecture (for the majority of the class period)?
Regularly: 12% / 0%; Frequently: 18% / 27%; Sometimes: 35% / 27%; Seldom: 35% / 45%; Never: 0% / 0%

How often do you use a standard textbook?
Regularly: 18% / 9%; Frequently: 29% / 9%; Sometimes: 41% / 55%; Seldom: 6% / 18%; Never: 6% / 9%

How has your teaching changed?

Describe how your teaching has changed as a result of the Modeling Workshop by responding to the following:

(Use a scale of 1 to 5 where 5 indicates strongly agree, 1 indicates strongly disagree.)

Percentage of teachers giving each rating, 7-04 (N=17) / 6-06 (N=12).

I now use more technology in the classroom.
5: 12% / 0%; 4: 12% / 33%; 3: 35% / 50%; 2: 29% / 17%; 1: 12% / 0%

My knowledge of concepts in physical science has improved.
5: 53% / 50%; 4: 29% / 33%; 3: 6% / 8%; 2: 12% / 8%; 1: 0% / 0%

I now use more inquiry-based instruction in the classroom.
5: 35% / 42%; 4: 29% / 33%; 3: 29% / 8%; 2: 6% / 17%; 1: 0% / 0%

I now engage my students in more group-work in the classroom.
5: 47% / 33%; 4: 29% / 33%; 3: 18% / 25%; 2: 6% / 8%; 1: 0% / 0%

I now engage my students in more discussions in the classroom.
5: 18% / 25%; 4: 53% / 42%; 3: 18% / 25%; 2: 12% / 8%; 1: 0% / 0%

Characteristics of the Classroom

Describe your classroom during the current semester:

Percentage of teachers giving each rating, 7-04 / 6-06.

How engaged were your students during the Modeling Activities? (1: not engaged, 5: very engaged)
5: 50% / 27%; 4: 31% / 55%; 3: 19% / 9%; 2: 0% / 9%; 1: 0% / 0%; total: N=16 / N=12

Do you think your students would like to continue using the Modeling Activities as part of their instruction? (1: not at all, 5: definitely)
5: 50% / 33%; 4: 44% / 58%; 3: 6% / 8%; 2: 0% / 0%; 1: 0% / 0%; total: N=16 / N=12

Do you think your students improved their understanding of Physical Science as a result of the Modeling Activities you did with them? (1: not at all, 5: definitely)
5: 33% / 45%; 4: 47% / 36%; 3: 20% / 9%; 2: 0% / 9%; 1: 0% / 0%; total: N=15 / N=12