Discussion Class 3: Inverse Document Frequency

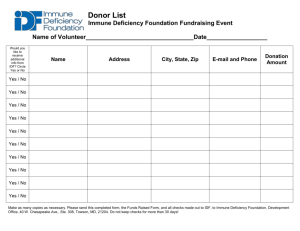

advertisement

Discussion Classes

Format: Questions. A member of the class is asked to answer, and others then have the opportunity to comment.

When answering: Stand up. Give your name, and make sure that the TA hears it. Speak clearly so that the whole class can hear.

Suggestions: Do not be shy about presenting partial answers. Differing viewpoints are welcome.

Question 1: Reading a Research Paper

(a) Who are the authors of this paper and the letter? What is their background? Why did they write this paper and the letter?

(b) This paper was written at the time that the technique now known as tf.idf was under development.

i. What was the state of the art before this paper?

ii. What was the contribution of this paper?

Question 2: Summary of the first three pages of the paper

Explain the statement, "We are concerned with obtaining an effective vocabulary for a collection of documents of some broadly known subject matter and size."

The introduction to the paper explores this statement in the context of "a simple keyword or descriptor system". What is this?

Question 3: Summary of the first three pages of the paper

The paper begins, "We are familiar with the notions of exhaustivity and specificity." Later it states, "We should think of specificity as a function of term use. It should be interpreted as a statistical rather than semantic property of index terms."

(a) What is the semantic interpretation of specificity?

(b) What is the statistical interpretation of specificity?

Question 4: Suggested Approaches

The author explores several approaches to dealing with frequently occurring terms. Describe each approach and explain why it was rejected:

(a) Term conjunction

(b) Removal of frequently occurring terms from requests

Question 5: Statistical specificity

Question 6: Weighting

Sparck Jones suggests a weighting. Let $f(n) = m$ such that $2^{m-1} < n \le 2^m$.
Then, where there are $N$ documents in the collection, the weight of a term that occurs in $n$ of them is:

$$f(N) - f(n) + 1$$

Robertson suggests:

$$\log_2(N/n) + 1$$

What are the differences between these two expressions?

Question 7: IDF and Probability

Robertson notes that $n/N$ is the probability $p$ that an item chosen at random will contain a given term. He says:

"Suppose that an item contains the terms $a$, $b$, $c$ in common with the question; let the values of $p$ for these terms be $p_a$, $p_b$, $p_c$ respectively. Then the weight (‘level’) assigned to the document is:

$$\log\frac{1}{p_a} + \log\frac{1}{p_b} + \log\frac{1}{p_c} = \log\frac{1}{p_a p_b p_c}$$"

What probability assumption does this expression depend on? Is it reasonable?

Question 8: IDF and Probability

What are the arguments for and against adding +1 to the value of the IDF weighting?

Robertson argues that the expression

$$\log\frac{1}{p_a} + \log\frac{1}{p_b} + \log\frac{1}{p_c} = \log\frac{1}{p_a p_b p_c}$$

is a theoretical justification for not using the +1. How does this relate to similarity measures on the term vector space?
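Questions 6 to 8 turn on a few small formulas, so a quick numerical comparison may help the discussion. The Python sketch below assumes $n$ is the number of documents containing the term (so $p = n/N$, as in Robertson's note). The function names `f`, `sparck_jones_weight`, `robertson_weight` and `level`, the collection size `N = 1000`, and the $p$ values are all hypothetical, chosen for illustration only. It computes $f(n)$ exactly as $\lceil \log_2 n \rceil$ using integer arithmetic. The identity check is pure algebra (a sum of logs is the log of a product); whether $p_a p_b p_c$ really is the probability that a random item contains all three terms is the assumption Question 7 asks about.

```python
import math
from typing import Iterable


def f(n: int) -> int:
    """Sparck Jones's step function: the integer m with 2**(m-1) < n <= 2**m.

    For n >= 1 this equals ceil(log2(n)); (n - 1).bit_length() computes it
    exactly with integers, avoiding floating-point rounding near powers of 2.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    return (n - 1).bit_length()


def sparck_jones_weight(N: int, n: int) -> int:
    """f(N) - f(n) + 1 for a term found in n of the N documents."""
    return f(N) - f(n) + 1


def robertson_weight(N: int, n: int) -> float:
    """log2(N/n) + 1: the continuous form, with no rounding to powers of 2."""
    return math.log2(N / n) + 1


def level(probs: Iterable[float], plus_one: bool = False) -> float:
    """Robertson's 'level' for a document sharing terms with the request.

    probs holds p = n/N for each shared term. Summing log2(1/p) gives
    log2(1/(p_a * p_b * ...)); plus_one=True adds 1 for each shared term.
    """
    return sum(math.log2(1 / p) + (1 if plus_one else 0) for p in probs)


if __name__ == "__main__":
    N = 1000  # hypothetical collection size, for illustration only
    for n in (1, 10, 100, 500, 1000):
        print(f"n={n:>4}  f(N)-f(n)+1 = {sparck_jones_weight(N, n):>2}  "
              f"log2(N/n)+1 = {robertson_weight(N, n):.3f}")

    probs = [0.01, 0.1, 0.5]  # hypothetical p values for terms a, b, c
    product = math.prod(probs)
    print(level(probs), math.log2(1 / product))  # equal up to float rounding
    print(level(probs, plus_one=True) - level(probs))  # about 3: +1 per term
```

For example, with $N = 1000$ and $n = 10$ the step function gives $10 - 4 + 1 = 7$, while $\log_2 100 + 1 \approx 7.64$; the two expressions coincide when $N$ and $n$ are both powers of 2. The last line shows that keeping the +1 turns the level into $\log(1/(p_a p_b p_c))$ plus the number of shared terms.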