Vocabulary, word distributions and term weighting

advertisement

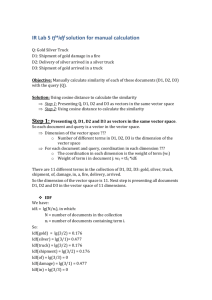

Term weighting and vector representation of text Lecture 3 Last lecture Tokenization – Token, type, term distinctions Case normalization Stemming and lemmatization Stopwords – 30 most common words account for 30% of the tokens in written text Last lecture: empirical laws Heap’s law: estimating the size of the vocabulary – Linear in the number of tokens in log-log space Zipf’s law: frequency distribution of terms This class Term weighting – Tf.idf Vector representations of text Computing similarity – – Relevance Redundancy identification Term frequency and weighting A word that appears often in a document is probably very descriptive of what the document is about Assign to each term in a document a weight for that term, that depends on the number of occurrences of the that term in the document Term frequency (tf) – Assign the weight to be equal to the number of occurrences of term t in document d Bag of words model A document can now be viewed as the collection of terms in it and their associated weight – – Mary is smarter than John John is smarter than Mary Equivalent in the bag of words model Problems with term frequency Stop words – Auto industry – Semantically vacuous “Auto” or “car” would not be at all indicative about what a document/sentence is about Need a mechanism for attenuating the effect of terms that occur too often in the collection to be meaningful for relevance/meaning determination Scale down the term weight of terms with high collection frequency – Reduce the tf weight of a term by a factor that grows with the collection frequency More common for this purpose is document frequency (how many documents in the collection contain the term) Inverse document frequency N number of documents in the collection • N = 1000; df[the] = 1000; idf[the] = 0 • N = 1000; df[some] = 100; idf[some] = 2.3 • N = 1000; df[car] = 10; idf[car] = 4.6 • N = 1000; df[merger] = 1; idf[merger] = 6.9 it.idf weighting Highest when t occurs many times within a small number of documents – Lower when the term occurs fewer times in a document, or occurs in many documents – Thus lending high discriminating power to those documents Thus offering a less pronounced relevance signal Lowest when the term occurs in virtually all documents Document vector space representation Each document is viewed as a vector with one component corresponding to each term in the dictionary The value of each component is the tf-idf score for that word For dictionary terms that do not occur in the document, the weights are 0 How do we quantify the similarity between documents in vector space? Magnitude of the vector difference – Problematic when the length of the documents is different Cosine similarity The effect of the denominator is thus to length-normalize the initial document representation vectors to unit vectors So cosine similarity is the dot product of the normalized versions of the two documents Tf modifications It is unlikely that twenty occurrences of a term in a document truly carry twenty times the significance of a single occurrence Maximum tf normalization Problems with the normalization A change in the stop word list can dramatically alter term weightings A document may contain an outlier term