– DataStage Streams Integration Mike Koranda

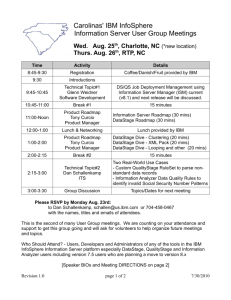

advertisement

Streams – DataStage

Integration

InfoSphere Streams Version 3.0

ClickKoranda

to add text

Mike

Release Architect

1

© 2012 IBM Corporation

Agenda

What is InfoSphere Information Server and DataStage?

Integration use cases

Architecture of the integration solution

Tooling

Information Integration Vision

Address information integration in the context of a broad and changing environment

Simplify & accelerate: Design once and leverage anywhere

Transform Enterprise Business Processes & Applications with Trusted Information

Deliver Trusted Information for Data Warehousing and Business Analytics

Build and Manage a Single View

Secure Enterprise Data & Ensure Compliance

Integrate & Govern Big Data

Consolidate and Retire Applications

Make Enterprise Applications more Efficient

IBM Comprehensive Vision

Traditional Approach: Structured, analytical, logical

– Structured, Repeatable, Linear

– Traditional Sources: Transaction Data, Internal App Data, Mainframe Data, OLTP System Data, ERP data

New Approach: Creative, holistic thought, intuition

– Unstructured, Exploratory, Iterative

– New Sources: Web Logs, Text & Images, Sensor Data, Social Data, RFID

Platform: Data Warehouse, Hadoop, Streams

Information Integration & Governance

IBM InfoSphere DataStage

Industry Leading Data Integration for the Enterprise

Simple to design - Powerful to deploy

Rich capabilities spanning six critical dimensions

Developer Productivity

– Rich user interface features that simplify the design process and metadata management requirements

Transformation Components

– Extensive set of pre-built objects that act on data to satisfy both simple & complex data integration tasks

Connectivity Objects

– Native access to common industry databases and applications exploiting key features of each

Runtime Scalability & Flexibility

– Performant engine providing unlimited scalability through all object tasks in both batch and real-time

Operational Management

– Simple management of the operational environment lending analytics for understanding and investigation

Enterprise Class Administration

– Intuitive and robust features for installation, maintenance, and configuration

Use Cases - Parallel real-time analytics

Use Cases - Streams feeding DataStage

Use Cases – Data Enrichment

Runtime Integration High Level View

Streams Job: DSSource / DSSink Operator

DataStage Job: Streams Connector

The two jobs communicate over TCP/IP

DSSource / DSSink are composite operators that wrap the existing TCPSource/TCPSink operators

Streams Application (SPL)

    use com.ibm.streams.etl.datastage.adapters::*;

    composite SendStrings {
        type
            RecordSchema = rstring a, ustring b;
        graph
            stream<RecordSchema> Data = Beacon() {
                param
                    iterations : 100u;
                    initDelay : 1.0;
                output
                    Data : a = "This is single byte chars"r,
                           b = "This is unicode"u;
            }

            () as Sink = DSSink(Data) {
                param
                    name : "SendStrings";
            }
        config
            applicationScope : "MyDataStage";
    }

• When the job starts, the DSSink/DSSource operator registers its name with the SWS nameserver
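
The registration step can be pictured as a small name registry. The Streams side stores an endpoint under its application scope and name, and the DataStage side later looks the endpoint up with the same pair. The Python sketch below is only a conceptual model: the `NameRegistry` class, its methods, and the host and port values are hypothetical and do not reflect the actual SWS nameserver API.

```python
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (host, port) of a listening TCP endpoint


class NameRegistry:
    """Hypothetical in-memory stand-in for a nameserver."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[str, str], Endpoint] = {}

    def register(self, application_scope: str, name: str, endpoint: Endpoint) -> None:
        # Streams side: called once when the job starts.
        self._entries[(application_scope, name)] = endpoint

    def lookup(self, application_scope: str, name: str) -> Optional[Endpoint]:
        # DataStage side: returns None when nothing is registered under this key.
        return self._entries.get((application_scope, name))


registry = NameRegistry()
# Values taken from the SPL example: applicationScope "MyDataStage", name "SendStrings".
registry.register("MyDataStage", "SendStrings", ("streams-host.example.com", 45000))

endpoint = registry.lookup("MyDataStage", "SendStrings")
missing = registry.lookup("OtherScope", "SendStrings")
```

Keying on the pair rather than on the name alone means that, in this model, two applications in different scopes can reuse the same operator name without colliding.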

DataStage Job

User adds a Streams Connector and configures properties and columns

DataStage Streams Runtime Connector

Uses nameserver lookup to establish connection (“name” + “application scope”) via HTTPS/REST

Uses TCPSource/TCPSink binary format

Has initial handshaking to verify the metadata

Supports runtime column propagation

Connection retry (both initial & in process)

Supports all Streams types

Collection types (List, Set, Map) are represented as a single XML column

Nested tuples are flattened

Schema reconciliation options (unmatched columns, RCP, etc)

Wave to punctuation mapping on input and output

Null value mapping
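
Two of the mapping rules above, flattening nested tuples and representing collections as a single XML column, can be illustrated with a short Python sketch. Everything in it is an assumption made for illustration: the `Tup` marker class, the dot-separated column names, and the XML element names are hypothetical and are not the connector's actual column naming or XML layout.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict


class Tup(dict):
    """Hypothetical marker type for a Streams tuple (attribute name -> value)."""


def collection_to_xml(value: Any) -> str:
    """Serialize a list, set, or map into one XML string (assumed layout)."""
    if isinstance(value, dict):
        root = ET.Element("map")
        for key, item in value.items():
            ET.SubElement(root, "entry", key=str(key)).text = str(item)
    else:
        is_set = isinstance(value, (set, frozenset))
        root = ET.Element("set" if is_set else "list")
        # Sets are unordered, so sort them to get a stable column value.
        for item in (sorted(value, key=str) if is_set else value):
            ET.SubElement(root, "item").text = str(item)
    return ET.tostring(root, encoding="unicode")


def flatten(record: Tup, prefix: str = "") -> Dict[str, Any]:
    """Turn a possibly nested tuple into a flat mapping of column -> value."""
    columns: Dict[str, Any] = {}
    for name, value in record.items():
        column = prefix + name
        if isinstance(value, Tup):
            # Nested tuple: recurse and prefix the inner attribute names.
            columns.update(flatten(value, column + "."))
        elif isinstance(value, (list, set, frozenset, dict)):
            # Collection: collapse into a single XML-valued column.
            columns[column] = collection_to_xml(value)
        else:
            columns[column] = value
    return columns


record = Tup(id=7, location=Tup(lat=1.5, lon=2.5), tags=["a", "b"])
row = flatten(record)
```

In this sketch `row` has four columns: `id`, `location.lat`, `location.lon`, and `tags`, where `tags` holds the whole list as one XML string.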

Tooling Scenarios

User creates both DataStage job and Streams application from scratch

– Create DataStage job in IBM InfoSphere DataStage and QualityStage Designer

– Create Streams Application in Streams Studio

User wishes to add Streams analysis to existing DataStage jobs

– From Streams Studio create Streams application from DataStage Metadata

User wishes to add DataStage processing to existing Streams application

– From Streams Studio create Endpoint Definition File and import into DataStage

Streams to DataStage Import

1. On Streams side, user runs ‘generate-ds-endpoint-defs’ command to generate an ‘Endpoint Definition File’ (EDF) from one or more ADL files

2. User transfers file to DataStage domain or client machine

3. User runs new Streams importer in IMAM to import EDF to StreamsEndPoint model

4. Job Designer selects end point metadata from stage. The connection name and columns are populated accordingly.

Flow: ADL → Streams command line or Studio menu → EDF → FTP → EDF → IMAM → Xmeta

Stage Editor

DataStage to Streams Import

1. On Streams side, user runs ‘generate-ds-spl-code’ command to generate a template application from a DataStage job definition

2. The command uses a Java API that uses REST to query DataStage jobs in the repository

3. The tool provides commands to identify jobs that use the Streams Connector, and to extract the connection name and column information

4. The template application includes a DSSink or DSSource operator with tuples defined according to the DataStage link definition

Flow: Xmeta → REST API → HTTP → Java API → Streams command line or Studio menu → SPL

Availability

Streams Connector is available in InfoSphere Information Server 9.1

The Streams components are available in InfoSphere Streams Version 3.0 in the IBM InfoSphere DataStage Integration Toolkit
