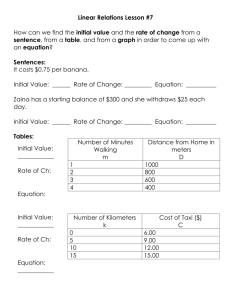

Peer-to-Peer in the Datacenter: Amazon Dynamo

Mike Freedman
COS 461: Computer Networks
http://www.cs.princeton.edu/courses/archive/spr14/cos461/

Excerpts from the Dynamo paper (partly cropped; […] marks missing text):

Table 1: Summary of techniques used and their advantages.

| Problem | Technique |
|---|---|
| Partitioning | Consistent Hashing |
| High Availability for writes | Vector clocks with reconciliation during reads |
| Handling temporary failures | Sloppy Quorum and hinted handoff |
| Recovering from permanent failures | Anti-entropy using Merkle trees |
| Membership and failure detection | Gossip-based membership protocol and failure detection |

[Figure 2: Partitioning and replication of keys in Dynamo ring. Ring nodes A to G and key K; nodes B, C and D store keys in range (A,B) including K.]

Traditional replicated relational database systems focus on the problem of guaranteeing strong consistency to replicated data. Although strong consistency provides the application writer a convenient programming model, these systems are limited in scalability and availability [7]. These systems are not capable of handling network partitions because they typically provide strong consistency guarantees.

3.3 Discussion

Dynamo differs from the aforementioned decentralized storage systems in terms of its target requirements. First, Dynamo is targeted mainly at applications that need an “always writeable” data store where no updates are rejected due to failures or concurrent writes. This is a crucial requirement for many Amazon applications. Second, as noted earlier, Dynamo is built for an infrastructure within a single administrative domain where all nodes are assumed to be trusted. Third, applications that use Dynamo do not require support for hierarchical namespaces (a norm in many file systems) or complex relational schema (supported by traditional databases). Fourth, Dynamo is built for latency-sensitive applications that require at least 99.9% of read and write operations to be performed within a few hundred milliseconds. To meet these stringent latency requirements, it was imperative for us to avoid routing requests through multiple nodes (which is the typical design adopted by several distributed hash […]

Table 1 presents a summary of the list of techniques […] and their respective advantages.

4.1 System Interface

Dynamo stores objects associated with a key […] interface; it exposes two operations: get() and […]. […] operation locates the object replicas associated […] storage system and returns a single object or […] conflicting versions along with a context. The […] object) operation determines where the replicas […] should be placed based on the associated […] replicas to disk. The context encodes system […] object that is opaque to the caller and includes […] the version of the object. The context information […] with the object so that the system can verify […] context object supplied in the put request.

Last Lecture…
[Figure: file of F bits; server with upload rate u_s; peers on the Internet with upload rates u_i and download rates d_i]

This Lecture…

Amazon’s “Big Data” Problem
• Too many (paying) users!
  – Lots of data
• Performance matters
  – Higher latency = lower “conversion rate”
• Scalability: retaining performance when large

Tiered Service Structure
[Figure 1: Service-oriented architecture of Amazon’s […]. Slide annotations: “Stateless” (three times) and “All of the State”.]

Horizontal or Vertical Scalability?
[Figure panels: Vertical Scaling; Horizontal Scaling]

Horizontal Scaling is Chaotic
• k = probability a machine fails in given period
• n = number of machines
• 1 − (1 − k)^n = probability of any failure in given period
• For 50K machines, with online time of 99.99966%:
  – 16% of the time, data center experiences failures
  – For 100K machines, 30% of the time!

Dynamo Requirements
• High Availability
  – Always respond quickly, even during failures
  – Replication!
• Incremental Scalability
  – Adding “nodes” should be seamless
• Comprehensible Conflict Resolution
  – High availability in above sense implies conflicts

Dynamo Design
• Key-Value Store via DHT over data nodes
  – get(k) and put(k, v)
• Questions:
  – Replication of Data
  – Handling Requests in Replicated System
  – Temporary and Permanent Failures
  – Membership Changes

Data Partitioning and Data Replication
• Familiar?
• Nodes are virtual!
  – Heterogeneity
• Replication:
  – Coordinator Node
  – N-1 successors also
  – Nodes keep preference list
[Figure 2: Partitioning and replication of keys in Dynamo ring. Nodes B, C and D store keys in range (A,B) including K.]

Handling Requests
• Request coordinator consults replicas
  – How many?
• Forward to N replicas from preference list
  – R or W responses form a read/write quorum
• Any of top N in pref list can handle req
  – Load balancing & fault tolerance
[Figure 2: Partitioning and replication of keys in Dynamo ring. Nodes B, C and D store keys in range (A,B) including K.]
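As a rough illustration of the partitioning and replication slides above, the following Python sketch places virtual nodes on a hash ring and builds a preference list of N distinct physical nodes for a key. The `Ring` class, the `ring_position` helper, the use of MD5, and `vnodes_per_node=8` are assumptions made for this example; they are not taken from the slides and are not Dynamo's actual implementation.

```python
import bisect
import hashlib


def ring_position(label):
    """Map a string to a position on the ring (MD5 is an illustrative choice)."""
    return int.from_bytes(hashlib.md5(label.encode("utf-8")).digest(), "big")


class Ring:
    """Hypothetical consistent-hash ring with virtual nodes."""

    def __init__(self, nodes, vnodes_per_node=8):
        # Each physical node owns several virtual nodes (tokens) on the ring.
        self._tokens = sorted(
            (ring_position(f"{node}#{i}"), node)
            for node in nodes
            for i in range(vnodes_per_node)
        )
        self._positions = [pos for pos, _ in self._tokens]
        self._node_count = len(set(nodes))

    def preference_list(self, key, n):
        """Return the first n distinct physical nodes clockwise from the key."""
        n = min(n, self._node_count)
        start = bisect.bisect_right(self._positions, ring_position(key))
        chosen = []
        for step in range(len(self._tokens)):
            # Wrap around the end of the sorted token list to close the ring.
            _, node = self._tokens[(start + step) % len(self._tokens)]
            if node not in chosen:  # skip extra virtual nodes of the same machine
                chosen.append(node)
                if len(chosen) == n:
                    break
        return chosen


ring = Ring(["A", "B", "C", "D", "E", "F", "G"])
prefs = ring.preference_list("K", n=3)
coordinator, successors = prefs[0], prefs[1:]
print("coordinator:", coordinator, "successors:", successors)
```

In this sketch the first entry plays the role of the coordinator and the remaining N-1 entries are its successors; a coordinator would forward a request to these N nodes and wait for R (read) or W (write) responses.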
Detecting Failures
• Purely Local Decision
  – Node A may decide independently that B has failed
  – In response, requests go further in preference list
• A request hits an unsuspecting node
  – “temporary failure” handling occurs

Handling Temporary Failures
• E is in replica set
  – Needs to receive replica
  – Hinted Handoff: replica contains “original” node
• When C comes back
  – E forwards the replica back to C
[Figure 2, annotated: node C is crossed out; “Add E to the replica set!”. Nodes B, C and D store keys in range (A,B) including K.]

Managing Membership
• Peers randomly tell one another their known membership history – “gossiping”
• Also called epidemic algorithm
  – Knowledge spreads like a disease through system
  – Great for ad hoc systems, self-configuration, etc.
  – Does this make sense in Amazon’s environment?

Gossip could partition the ring
• Possible Logical Partitions
  – A and B choose to join ring at about same time: unaware of one another, may take long time to converge to one another
• Solution:
  – Use seed nodes to reconcile membership views: well-known peers that are contacted frequently

Why is Dynamo Different?
• So far, looks a lot like normal p2p
• Amazon wants to use this for application data!
• Lots of potential synchronization problems
• Uses versioning to provide eventual consistency.

Consistency Problems
• Shopping Cart Example:
  – Object is a history of “adds” and “removes”
  – All adds are important (trying to make money)

| Client | Expected Data at Server |
|---|---|
| Put(k, [+1 Banana]) | [+1 Banana] |
| Z = get(k); Put(k, Z + [+1 Banana]) | [+1 Banana, +1 Banana] |
| Z = get(k); Put(k, Z + [-1 Banana]) | [+1 Banana, +1 Banana, -1 Banana] |

What if a failure occurs?
| Client | Data on Dynamo |
|---|---|
| Put(k, [+1 Banana]) | [+1 Banana] at A |
| | A crashes |
| Z = get(k); Put(k, Z + [+1 Banana]) | [+1 Banana] at B (B not in first Put’s quorum) |
| Z = get(k); Put(k, Z + [-1 Banana]) | [+1 Banana, -1 Banana] at B |
| | Node A comes online |

At this point, Node A and B disagree about object state
• How is this resolved?
• Can we even tell a conflict exists?

“Time” is largely a human construct
• What about time-stamping objects?
  – Could authoritatively say whether object newer or older?
  – But, all events are not necessarily witnessed
• If system’s notion of time corresponds to “real-time”…
  – New object always blasts away older versions
  – Even though those versions may have important updates (as in bananas example).
• Requires a new notion of time (causal in nature)
• Anyhow, real-time is impossible in any case

Causality
• Objects are causally related if value of one object depends on (or witnessed) the previous
• Conflicts can be detected when replicas contain causally independent objects for a given key
• Notion of time which captures causality?
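To make the time-stamping problem from the “Time” slide concrete, here is a minimal Python sketch of last-write-wins reconciliation applied to the banana example. The `Stamped` class, the `last_write_wins` function, and the timestamp values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Stamped:
    value: list        # cart history as a list of "adds" and "removes"
    timestamp: float   # hypothetical wall-clock time of the write


def last_write_wins(a, b):
    """Keep whichever version carries the newer timestamp."""
    return a if a.timestamp >= b.timestamp else b


# State after node A comes back online in the failure scenario.
at_a = Stamped(["+1 Banana"], timestamp=1.0)
at_b = Stamped(["+1 Banana", "-1 Banana"], timestamp=3.0)

merged = last_write_wins(at_a, at_b)
print(merged.value)  # ['+1 Banana', '-1 Banana']
```

The newer version at B replaces the version at A outright, so the add that only A witnessed is lost: the cart ends up with two entries instead of the three the client expected.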
Versioning
• Key Idea: Every PUT includes a version, indicating most recently witnessed version of updated object
• Problem: replicas may have diverged
  – No single authoritative version number (or “clock” number)
  – Notion of time must use a partial ordering of events

Vector Clocks
• Every replica has its own logical clock
  – Incremented before it sends a message
• Every message attached with vector version
  – Includes originator’s clock
  – Highest seen logical clocks for each replica
• If M1 is causally dependent on M0:
  – Replica sending M1 will have seen M0
  – Replica will have seen clocks ≥ all clocks in M0

Vector Clocks in Dynamo
• Vector clock per object
• get() returns obj’s vector clock
• put() has most recent clock
  – Coordinator is “originator”
• Serious conflicts are resolved by app / client
[Figure 3: Version evolution of an object over time]

Vector Clocks in Banana Example

| Client | Data on Dynamo |
|---|---|
| Put(k, [+1 Banana]) | [+1] v=[(A,1)] at A |
| | A crashes |
| Z = get(k); Put(k, Z + [+1 Banana]) | [+1] v=[(B,1)] at B (B not in first Put’s quorum) |
| Z = get(k); Put(k, Z + [-1 Banana]) | [+1,-1] v=[(B,2)] at B |
| | A comes online |

[(A,1)] and [(B,2)] are a conflict!

Eventual Consistency
• Versioning, by itself, does not guarantee consistency
  – If you don’t require a majority quorum, you need to periodically check that peers aren’t in conflict
  – How often do you check that events are not in conflict?
• In Dynamo:
  – Nodes consult with one another using a tree hashing (Merkle tree) scheme
  – Quickly identify whether they hold different versions of particular objects and enter conflict resolution mode

NoSQL
• Notice that Eventual Consistency and Partial Orderings do not give you ACID!
• Rise of NoSQL (outside of academia)
  – Memcache
  – Cassandra
  – Redis
  – Big Table
  – MongoDB
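The vector clock comparison used in the banana example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: clocks are plain dicts mapping a node name to a counter, and the helper names `descends`, `compare`, `increment`, and `merge` are invented for this example rather than taken from Dynamo.

```python
def descends(a, b):
    """True if clock a has seen everything in clock b (a >= b per node)."""
    return all(a.get(node, 0) >= count for node, count in b.items())


def compare(a, b):
    """Classify two clocks under the partial order."""
    if a == b:
        return "equal"
    if descends(a, b):
        return "a after b"
    if descends(b, a):
        return "a before b"
    return "conflict"  # causally independent versions


def increment(clock, node):
    """Return a copy of clock with the coordinator's counter advanced."""
    new = dict(clock)
    new[node] = new.get(node, 0) + 1
    return new


def merge(a, b):
    """Element-wise maximum, used once the client has reconciled two versions."""
    return {n: max(a.get(n, 0), b.get(n, 0)) for n in a.keys() | b.keys()}


# Banana example: the first put is coordinated by A, the next two by B.
v_a = increment({}, "A")       # {'A': 1}
v_b1 = increment({}, "B")      # {'B': 1}, B never saw A's write
v_b2 = increment(v_b1, "B")    # {'B': 2}

print(compare(v_b2, v_b1))     # a after b
print(compare(v_a, v_b2))      # conflict

# After the application reconciles the two carts, a put coordinated by A
# carries a clock that descends from both earlier versions.
v_fixed = increment(merge(v_a, v_b2), "A")
print(descends(v_fixed, v_a), descends(v_fixed, v_b2))  # True True
```

Neither [(A,1)] nor [(B,2)] descends from the other, which is how the conflict is detected; how the two cart histories are combined is left to the application, as the slides note.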
