CS 1104 Help Session III: I/O and Buses

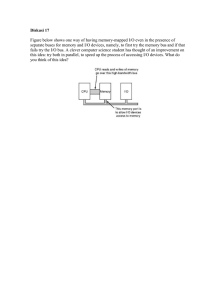

Colin Tan, ctank@comp.nus.edu.sg, S15-04-15

Why do we need I/O Devices?
• No computer is an island
  – Need to interact with people and other computers and devices.
  – People don’t speak binary, so we need keyboards and display units that “speak” English (or Chinese etc.) to interface with people.
  – Communicating with other computers presents its own problems.
    • Synchronization
    • Erroneous transmission/reception
    • Error and failure recovery (e.g. the other computer died while talking)

The Need for I/O
• Also, there is a need for permanent storage:
  – Main memory, cache etc. are volatile devices. Contents are lost when power is lost.
  – So we have hard disks to store files, data, etc.
• Impractical to build all of these into the CPU
  – Too complex, too big to put on the CPU
  – The varying needs of users mean that the types of I/O devices will vary.
    • An office user will need a laser printer, but a ticketing officer needs a dot-matrix printer.

Devices We Will Be Looking At
• Disk Drives
  – Data is organized into fixed-size blocks.
  – Blocks are organized in concentric circles called tracks. The tracks are created on both sides of metallic disks called “platters”.
  – The corresponding tracks on each side of each platter form a cylinder (e.g. Track 0 on side 0 of platter 0, track 0 on side 1 of platter 0, track 0 on side 0 of platter 1 etc.)
  – Latencies are involved in finding the data and reading or writing it.
• Network Devices
  – Transmit data between computers.
  – Data is often organized in blocks called “packets”, or sometimes into frames.
  – Latencies are involved in sending/receiving data over a network.
• Buses
  – Buses carry data and control signals between the CPU and memory and I/O devices.
• DMA Controller (DMAC)
  – The DMAC performs transfers between I/O devices and memory without CPU intervention.

Disk Drives
• Latencies involved in accessing drives:
  – Controller Overhead: To read or write a drive, a request must be made to the drive controller. The drive controller may take some time to respond. This delay is called the “controller overhead”, and is usually ignored.
    • Controller overhead also includes delays introduced by controller circuitry in transferring data.
  – Head-Selection Time: Each side of each platter has a head. To read the disk, first select which side of which platter to read/write by activating its head and deactivating the other heads. Normally this time is ignored.
  – Seek Time: Once the correct head has been selected, it must be moved over the correct track. The time taken to do this is called the “seek time”, and is usually between 8 and 20 ms (NOT NEGLIGIBLE!)
  – Rotational Latency: Even when the head is over the correct track, it must wait for the block it wants to read to rotate by.
    • The average rotational latency is T/2, where T is the time (in seconds) for one rotation at rotation speed R (T = 60/R if R is specified in RPM, or T = 1/R if R is specified in RPS).
  – Transfer Time: This is the time taken to actually read the data. If the throughput of the drive is given as X MB/s and we want to read Y bytes of data, then the transfer time in seconds is given by: Y/(X * 10^6)

Example
• A program is written to access 3 blocks of data (the blocks are not contiguous and may exist anywhere on the disk) from a disk with a rotation speed of 7200 rpm, 12 ms seek time, throughput of 10 MB/s and a block size of 16 KB. Compute the worst case timing for accessing the 3 blocks.
• Analysis:
  – Each block can be anywhere on the disk
    • In the worst case, we must incur seek, rotational and transfer delays for every block.
  – What is the timing for each delay?
    • Controller overhead - Negligible (since not given)
    • Head-selection time - Negligible (since not given)
    • Seek time
    • Rotational latency
    • Transfer time
  – How many times is each of these delays incurred?

Example
• A disk drive has a rotational speed of 7200 rpm. Each block is 16KB, and there are 16 blocks per track. There are 22 platters with 25 tracks each. The average seek time is 12ms.
  – What is the capacity of this disk?
  – How long does it take to read 1 block of data?
• Analysis
  – Size:
    • How many sides are there? How many tracks per side? How many blocks per track? How big is each block?
  – Time to read 1 block
    • Throughput is not given. How to work it out?

Network Devices
• Some major network types in use today:
  – Ethernet
    • The most common networking technology
    • Poor performance under high traffic.
  – FDDI - uses laser and fibre optic technology to transmit data
    • Fast, expensive.
    • Slowly being replaced by gigabit Ethernet.
  – Asynchronous Transfer Mode (ATM)
    • Fast throughput by using simple and fast components
    • Very expensive.
    • Example of daily ATM use: Singtel Magix (ADSL)

Ethernet Packet Format
  Field            Size
  Preamble         8 bytes
  Dest Addr        6 bytes
  Src Addr         6 bytes
  Length of Data   2 bytes
  Data             0-1500 bytes
  Pad              0-46 bytes
  Check            4 bytes
• Preamble to recognize beginning of packet
• Unique address per Ethernet Network Interface Card, so can just plug in & use
• Pad ensures minimum packet is 64 bytes
  – Easier to find packet on the wire
• Header + Trailer: 24B + Pad

Software Protocol to Send and Receive
• SW Send steps
  1: Application copies data to OS buffer
  2: OS calculates checksum, starts timer
  3: OS sends data to network interface HW and says start
• SW Receive steps
  3: OS copies data from network interface HW to OS buffer
  2: OS calculates checksum; if OK, sends ACK; if not, deletes message (sender resends when timer expires)
  1: If OK, OS copies data to user address space, & signals application to continue

Network Devices
• Latencies Involved:
  – Interconnect Time: This is the time taken for 2 stations to “hand-shake” and establish a communications session.
  – Hardware Latencies: There is some latency in gaining access to a medium (e.g. in Ethernet the Network Interface Card (NIC) must wait for the Ethernet cable to be free of other activity) and in reading/writing to the medium.
  – Software Latencies: Network access often requires multiple buffer copying operations, leading to delays.
  – Propagation Delays: For very large networks stretching thousands of miles, signals do not reach their destination immediately, and take some time to travel in the wire. More details in CS2105.
  – Switching Delays: Large networks often have intermediate switches to receive and re-transmit data (to restore signal integrity, for routing etc.). These switches introduce delays too. More details in CS2105.
  – Data Transfer Time: Time taken to actually transfer the data. If we wish to transfer Y bytes of data over a network link with a throughput of X MBPS, the data transfer time in seconds is given by: (Y bytes)/(X * 10^6)
  – The Data Transfer Time is where real useful work is actually being done. The other latencies do not accomplish anything useful (but are still necessary), and these are termed “overheads”.
• Note that if the overheads are much larger than the data transfer time, it is possible for a slow network with low overheads to perform better than a fast network with high overheads.
  – E.g. Page 654 of Patterson & Hennessy.

Example
• A communications program was written and profiled, and it was found that it takes 40ns to copy data to and from the network. It was also found that it takes 100ns to establish a connection, and that effective throughput was 5 MBPS. Compute how long it takes to send a 32KB block of data over the network.
• Analysis:
  – What are the overheads? What is the data transfer time?

Buses
• Buses are extremely important devices (essentially they’re groups of wires) that bring data and control signals from one part of a system to another.
• Categories of bus lines:
  – Control Lines: These carry control signals like READ/WRITE signals and the CLK signal.
  – Address Lines: These contain identifiers of devices to read/write from, or addresses of memory locations to access.
  – Data Lines: These actually carry the data we want to transfer.
• Sometimes the data/address lines are multiplexed onto the same set of lines. This allows us to build cheaper but slower buses
  – Must alternate between sending addresses and sending data, instead of spending all the time sending data.

Types of Buses
• 3 Broad Categories of Buses
  – CPU/Memory Bus: These are very fast (100 MHz or more), very short buses that connect the CPU to the cache system and the cache system to the main memory. If the cache is on-chip, then it connects the CPU to the main memory.
  – I/O Bus: The I/O bus connects I/O devices to the CPU/Memory Bus, and is often very slow (12 MHz to 66 MHz).
  – Backplane Bus: The backplane bus is a mix of the 2, and often CPU, memory and I/O devices all connect to the same backplane bus.

Combining Bus Types
• Can have several schemes:
  – 1 bus system: CPU, memory, I/O devices all connected to the memory bus.
  – 2 bus system: CPU, memory connected via memory bus, and I/O connected via I/O bus.
  – 3 bus system: CPU and memory connected via memory bus, I/O connected via small set of backplane buses.

1-Bus System
[Figure: 1-bus system. Labels: Memory Bus, CPU, Memory, Tape, Console, Disk]
• 1-bus system: CPU, memory and I/O share single bus.
• Bad bad bad - I/O very slow, slows down the memory bus.
• Affects performance of memory accesses and hence overall CPU performance.

2-Bus System
[Figure: 2-bus system. Labels: CPU, Memory, Memory Bus, Bus Adapter, I/O Bus, Tape, Console, Disk]
• 2-bus system: CPU and memory communicate via memory bus.
• I/O devices send data via I/O bus.
• I/O Bus is de-coupled from memory bus by I/O controller.
• I/O controller will coordinate transfers between the fast memory bus and the slow I/O bus.
  – Buffers data between buses so no data is lost.
  – Arbitrates for memory bus if necessary.
• In the notes, the I/O controller is called a “Bus Adaptor”. Both words mean the same thing.

3-Bus System
[Figure: 3-bus system. Labels: CPU, Memory, Memory Bus, Bus Adapter (x3), Backplane Bus, I/O Bus (x2), Console, Disk, Tape]
• Memory and CPU still connected directly
  – This is important because it allows fast CPU/memory interaction.
• A backplane bus interfaces with the memory bus via a Bus Adapter.
  – Backplane buses typically have very high bandwidth,
    • Not quite as high as memory bus though.
• Multiple I/O buses interface with the backplane bus.
  – Possible to have devices on different I/O buses communicating with each other, with the CPU completely uninvolved!
    • Very efficient I/O transfers possible.
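
The disk and network latency formulas given earlier in the session (seek + rotational latency + transfer for disks, overheads + data transfer time for networks) can be sketched in Python. This is only an illustration under stated assumptions that are not in the slides: 1 KB is taken as 1024 bytes, the average rotational latency T/2 is charged for every block, and the 40ns copy cost is assumed to be paid twice (once by the sender, once by the receiver). The function and parameter names are made up for this sketch.

```python
# Sketch of the latency formulas from the slides. Assumptions (hypothetical):
# 1 KB = 1024 bytes, average rotational latency T/2 per block, and the copy
# overhead is incurred `copies` times (default 2: sender and receiver).

def disk_access_time(blocks, rpm, seek_s, throughput_mb_s, block_bytes):
    """Seconds to read `blocks` non-contiguous blocks from a disk."""
    rotation_period = 60.0 / rpm                 # T = 60/R for R in RPM
    rotational_latency = rotation_period / 2     # average latency is T/2
    transfer = block_bytes / (throughput_mb_s * 10**6)   # Y/(X * 10^6)
    # Every block pays seek, rotational and transfer delays.
    return blocks * (seek_s + rotational_latency + transfer)


def network_send_time(data_bytes, throughput_mb_s, connect_s, copy_s, copies=2):
    """Seconds to send `data_bytes`: overheads plus data transfer time."""
    overhead = connect_s + copies * copy_s
    transfer = data_bytes / (throughput_mb_s * 10**6)
    return overhead + transfer


# Numbers taken from the two example slides.
disk = disk_access_time(3, rpm=7200, seek_s=12e-3,
                        throughput_mb_s=10, block_bytes=16 * 1024)
net = network_send_time(32 * 1024, throughput_mb_s=5,
                        connect_s=100e-9, copy_s=40e-9)
print(f"disk: {disk * 1e3:.2f} ms, network: {net * 1e3:.4f} ms")
```

Changing the assumptions (for example, charging a full rotation T instead of T/2, or a single copy) only changes the corresponding term, which is the point of separating each delay.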

Synchronous vs Asynchronous
• Synchronous buses: Operations are coordinated based on a common clock.
• Asynchronous buses: Operations are coordinated based on control signals.

Synchronous Example (Optional)
• A typical memory system works in the following way:
  – Addresses are first placed on the address bus.
  – After a delay of 1 cycle (the hold time), the READ signal is asserted.
  – After 4 cycles, the data will become available on the data lines.
  – The data remains available for 2 cycles after the READ signal is de-asserted, during which time no new read operations may be performed.
[Figure: timing diagram showing the CLK, ADDR, READ and DATA signals]
• Given that the synchronous bus in the previous example is operating at 200MHz, and that the time taken to read 1 word (4 bytes) of data from the DATA bus is 40ns, compute the maximum memory read bandwidth for this bus (assume that the READ line is dropped only after reading the data). Assume also that the time taken to place the address on the address bus is negligible.
• Analysis:
  – How long is each clock cycle in seconds or ns?
  – How long does it take to set up the read? (put address on address bus, assert the READ signal, wait for the data to appear, read the data, de-assert the READ signal)
  – How long does it take before you can repeat the READ operation?
  – Therefore, in 1 second, how many bytes of data can you read?

Asynchronous Bus Example (Optional)
• Asynchronous buses use a set of request/grant lines to perform data transfers instead of a central clock.
• E.g. Suppose CPU wants to write to memory
  – 1. CPU will make a request by asserting the MEMW line.
  – 2. Memory sees MEMW line asserted, and knows that CPU wants to write to memory. It asserts a WGNT line to indicate the CPU may proceed with the write.
  – 3. CPU sees the WGNT line asserted, and begins writing.
  – 4. When CPU has finished writing, it de-asserts the MEMW line.
  – 5. Memory sees MEMW line de-asserted, and knows that CPU has completed writing.
  – 6. In response, memory de-asserts the WGNT line. CPU sees WGNT line de-asserted, and knows that memory understands that writing is complete.

Asynchronous vs. Synchronous: A Summary
• Asynchronous Buses
  – Coordination is based on the status of control lines (MEMW, WGNT in our example).
  – Timing is not very critical. Devices can work as fast or as slow as they want without worrying about timing.
  – More difficult to design and build devices for async buses.
    • Need good understanding of protocol.
• Synchronous Buses
  – Coordination is based on a central clock.
  – Timing is CRITICAL. If a device exceeds or goes below the specified number of clock cycles, the system will fail (“clock skewing”).
  – However, synchronous buses are fast, and it is simpler to design devices for them.

Bus Arbitration
• Often more than one device is trying to gain access to a bus:
  – A CPU and a DMA controller may both be trying to use the CPU-Memory bus.
  – Only 1 device can use the bus at a time, so we need a way to arbitrate who gets to use it.
    • Buses are a common set of wires shared by many devices.
    • If >1 device tries to access the bus at the same time, there will be collisions and the data sent/received along the bus will be corrupted beyond recovery.
  – Solve by prioritizing: If n devices need to use the bus, the one with the highest priority will use it.
• Bus arbitration may be done in a co-operative way (each device knows and co-operates in determining who has higher priority)
  – No single point of failure
  – Complicated
• May also have a central arbiter to make decisions
  – Easier to implement
  – Bottleneck, single point of failure.

Central Arbitration
[Figure: Dev0 to Dev3 each connected to the Bus Controller by a request line (Req0 to Req3) and a grant line (GNT0 to GNT3)]
• Devices wishing to use the bus will send a request to the controller.
• The controller will decide which device can use the bus, and assert its grant line (GNTx) to tell it.
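
The central arbitration scheme above can be sketched as a function from request lines to grant lines. This is a minimal illustration, not the behaviour of any particular bus controller: the priority rule (lower device number wins) is an assumption made for the sketch, and the name `arbitrate` is hypothetical.

```python
# Minimal sketch of a central bus arbiter.
# Assumption (hypothetical policy): lower device index = higher priority.

def arbitrate(requests):
    """Given the Req0..ReqN lines, return the GNT0..GNTN lines.

    At most one grant line is asserted, so only one device uses the bus.
    """
    grants = [False] * len(requests)
    for dev, requesting in enumerate(requests):
        if requesting:
            grants[dev] = True   # assert GNTx for the highest-priority requester
            break                # everyone else must wait for a later cycle
    return grants


# Dev1 and Dev3 both request the bus; Dev1 has the higher priority.
print(arbitrate([False, True, False, True]))  # prints [False, True, False, False]
```

Swapping the loop for a rotating starting index would turn the same skeleton into a round-robin arbiter.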

Distributed Arbitration
• Devices can also decide amongst themselves who should use the bus.
[Figure: Dev0 to Dev3 sharing a set of Request lines (Req0 to Req3) and Grant lines (GNT0 to GNT3)]
• Every device knows which other devices are requesting.
• Each device will use an algorithm to collectively agree who will use the bus.
• The device that wins will assert its GNTx line to show that it knows that it has won and will proceed to use the bus.

Arbitration Schemes
• Round Robin (Centralized or Distributed Arbitration)
  – Arbiter keeps record of which device last had the highest priority to use the bus.
  – If dev0 had the highest priority, on the next request cycle dev1 will have the highest priority, then dev2 all the way to devn, and it begins again with dev0.
• Daisy Chain (Usually centralized arbitration)
[Figure: Bus Controller with a single Req line and a single GNT line chained through Dev 0, Dev 1, Dev 2 and Dev 3]
  – Only 1 request and 1 grant line.
  – Request lines are relayed to the bus controller through the intervening devices.
  – If the bus controller sees a request, it will assert the GNT line.
  – The GNT line is again relayed through intervening devices, until it finally reaches the requesting device, and the device can now use the bus.
  – If an intervening device also needs the bus, it can hijack the GNT signal and use the bus, instead of relaying it on to the downstream requesting device.
    • E.g. If both Dev3 and Dev1 request for the bus, the controller will assert GNT. Dev1 will hijack the GNT and use the bus instead of passing the GNT on to Dev3.
  – Devices closer to the arbiter have higher priority.
  – Possible to starve lower-priority devices.
• Collision Detection
  – This scheme is used in Ethernet, the main LAN technology that connects computers together.
  – Properly called “Carrier Sense Multiple Access with Collision Detection”, or CSMA/CD.
  – In such schemes, all devices (“stations”) have permanent and continuous access to the bus:
[Figure: several stations (IBM compatibles, a workstation, a laptop computer, a Mac SE/Classic) attached to a shared Ethernet]
• CSMA/CD Algorithm
  – Suppose a station A wishes to transmit:
    • Check bus, and see if any station is transmitting.
    • If no, transmit. If yes, wait until bus becomes free.
    • Once free, start transmitting. While transmitting, listen to the bus for collisions.
  – Collisions can be detected by a sudden increase in the average bus voltage level.
  – Collisions occur when at least 2 stations A and B see that the bus is free, and begin transmitting together.
  – In event of a collision:
    • All stations stop transmitting immediately.
    • All stations wait a random amount of time, test bus, and restart transmission if free.
• Advantages:
  – Completely distributed arbitration, little coordination between stations needed.
  – Very good performance under light traffic (few stations transmitting).
• Disadvantages:
  – Performance degrades exponentially relative to the number of stations transmitting
    • If many stations wish to transmit together, there will be many collisions and stations will need to resend data repeatedly.
    • In the worst case, effective throughput can fall to 0.
• Fixed Priority (Centralized or Distributed Arbitration)
  – Some devices have higher priority than others.
  – This priority is fixed.

A Bus Analysis Example
• Page 665 - The example that no one understands
Suppose we have a system with the following characteristics:
  – A memory and bus system supporting block access of 4 and 16 32-bit words.
  – A 64-bit synchronous bus clocked at 200MHz, with each 64-bit transfer taking 1 cycle, and 1 cycle required to send an address to memory.
  – Two clock cycles needed between each bus operation (assume the bus is idle before an access)
  – A memory access time for the first four words of 200 ns; each additional set of four words can be read in 20ns.
    Assume that a bus transfer of the most recently read data and a read of the next four words can be overlapped.
• Find the sustained bandwidth for a read of 256 words for transfers that use 4-word blocks and 16-word blocks.
• Analysis: A 200MHz clock means that each cycle is 5ns.
1. For 4-word block transfers:
  • Need 1 cycle to send address to memory
  • Need 200ns/5ns = 40 cycles to read the first 4 words.
  • The bus is 64 bits wide, which is 2 words. To send 4 words at 2 words per cycle, need 2 cycles to send the data.
  • Need a further 2 cycles of idle time between transfers
  • This makes a total of 1 + 40 + 2 + 2 = 45 cycles per transaction.
  • To transfer 256 words, need a total of 256/4 = 64 transactions
  • Total number of cycles = 64 * 45 = 2,880 cycles.
  • Each cycle is 5ns, so 2,880 cycles is 14,400ns.
  • Since 64 transactions take 14,400ns, the number of transactions per second = 64 * 1s/(14,400ns) = 4.44 M transactions/s
  • To find bandwidth: it takes 14,400ns to transfer 256 words. So in one second, we can transfer (256 * 4) * 1s/(14,400ns) bytes = 71.11 MB/s.
2. For 16-word blocks:
  • It takes 1 cycle to send the address
  • 40 cycles to read the first 4 words
  • 2 cycles to send the 4 words
  • 2 cycles of idle time.
  • Since we can overlap the read of the next 4 words (taking 20ns = 4 cycles) with this 2-cycle transfer time and 2-cycle idle time, the data for the next 4 words will be ready once these 4 cycles are up.
  • Hence it is now possible to send the subsequent four words in 2 cycles, and idle for two cycles.
  • Again, during this send-and-idle time of 4 cycles, the 3rd group of 4 words becomes available, and can be sent in 2 cycles, followed by an idle of 2 cycles.
  • During this time the 4th group of 4 words becomes available and is sent over 2 cycles, followed by an idle of 2 cycles.
  • Hence the total number of cycles required is: 1 + 40 + (2 + 2) + (2 + 2) + (2 + 2) + (2 + 2) = 57 cycles
  • It takes 57 cycles per transaction, and total number of transactions = 256/16 = 16 transactions.
  • Total number of cycles = 16 * 57 = 912 cycles.
  • This is equal to 912 * 5ns = 4,560ns.
  • Hence total number of transactions per second is equal to: 16 * (1s/4,560ns) = 3.51 M transactions/s
  • A total of (256 * 4) bytes can be sent in 4,560ns, so in one second we can have a throughput of: (256 * 4) * (1s/4,560ns) = 224.56 MB/s

Polling vs. Interrupts
• After a CPU has requested an I/O operation, it can do one of two things to see if the operation has been completed:
  – Keep checking the device to see if operation is complete - Polling
  – Go do other stuff, and when the device completes the operation, it will tell the CPU - Interrupts.

Polling
• In a polling scheme, the devices have special registers called “Status Registers”.
• Status registers contain flags that indicate if an operation has completed, error conditions etc.
• The CPU will periodically check these registers until either a flag indicates that the operation has completed, or another flag indicates an error condition.

Why is Polling Good?
• Polling is simple to implement
  – Just need flip-flops to indicate flag status.
  – Most of the work is done in software
    • Cheap! Simple to design hardware!

Why is Polling Bad?
• Polling basically works like this:
  – You are expecting a phone call.
  – Your phone does not have a ringer.
  – You spend the entire day randomly picking up the phone to see if the other person is on the other end of the line.
  – If he isn’t, you put the phone back down and try again later.
  – If he is, you start talking.
• You can waste a lot of time doing this!

Polling Example
• Suppose we have a hard disk with throughput of 4 MB/s. The disk transfers in 4-word chunks. The drive actually transfers data only 5% of the time.
• How many times per second does the CPU need to poll the disk so that no data is lost? If the CPU speed is 500MHz, and each poll requires 400 cycles, what portion of CPU time is spent polling?
• Analysis:
  – CPU has to poll regardless of whether drive is actually transferring data or not!!
  – Data is transferred at 4MB/second, in 4-word (i.e. 16-byte) chunks.
  – Therefore number of polls required/second is 4MB/16 bytes, which is equal to 250,000 polls.
  – Each poll takes 400 cycles, so total number of cycles for polling is 250,000 x 400 = 100,000,000 cycles!
  – Proportion of CPU time spent = (100x10^6)/(500x10^6), which is equal to 20%
  – So 20% of CPU time is spent just polling. Inefficient.

Interrupts
• Alternative:
  – CPU makes request, does other things.
  – When I/O device is done, it will inform the CPU via an interrupt.
• This is like having a telephone with a ringer
  – You pick the phone up and talk only when it rings.
  – More efficient!

Interrupt Example
• Suppose we have the same disk arrangement as before, but this time interrupts are used instead of polling. Find the fraction of processor time taken to process interrupts, given that it takes 500 cycles to process an interrupt and that the disk sends data 5% of the time.
• Analysis:
  – Each time the disk wants to transfer a 4-word (i.e. 16-byte) block, it will interrupt the processor. If the disk were transferring all the time, the number of interrupts per second would be 4MB/16 bytes = 250,000 interrupts per second.
  – Number of cycles per interrupt = 500
  – Therefore number of cycles per second to service interrupts is 500 x 250,000 = 125,000,000 or 125x10^6.
  – Percentage of CPU time spent processing interrupts per second is now (125x10^6)/(500x10^6) = 25%
  – Worse than before!!
  – BUT interrupts occur only when the drive actually has data to transfer!
    • This happens only 5% of the time!
  – Therefore actual percentage of CPU time used per second is 5% of 25% = 1.25%.

Polling vs. Interrupts: The Conclusion
• Numerical examples show that polling is very expensive.
  – CPU has no way of knowing whether a device needs attention, and so has to keep polling repeatedly so as not to miss data.
  – In our example, even if the drive is idle 95% of the time, CPU still has to poll.
• Interrupts allow CPU to do useful work during this 95% of the time.
  – So even if processing an interrupt takes longer (500 cycles vs. 400 cycles for polling), in the end a smaller portion of CPU time is used to process the device (1.25% vs. 20%).

Interrupt Implementation
• How are interrupts implemented?
  – Most CPUs have multiple interrupt lines (e.g. 1 interrupt for the disk drive, 1 for the keyboard etc.).
  – Each interrupt will have its own interrupt handler
    • Basically we don’t want a keyboard driver to be processing disk interrupts!
  – This means that when we have an interrupt, we need a way of knowing which handler we should call.
    • We really don’t want to call the drive handler when we have network messages.
  – The currently executing process is suspended as the handler is called.
    • Handler must save registers etc. so that the interrupted process can resume properly later on.
• Two solutions:
  – Interrupt Vector Tables
    • Interrupts are assigned numbers (e.g. drive interrupts may be interrupt 1, network interrupts may be interrupt 2 etc.).
    • When an interrupt occurs, the CPU will check which interrupt it was, and get that interrupt’s number.
    • It will use the number to consult a look-up table. The table will tell the processor which handler to use.
    • The processor hands control over to that handler.
    • The look-up table used is called an Interrupt Vector Table. Table look-ups and handing-over of control (vectoring) are handled completely in hardware.
    • This scheme is used in Intel processors.
• Second option:
  – Again we have multiple numbered interrupts just like before.
  – When an interrupt occurs, the interrupt number is placed into a “Cause Register”.
  – A centralized handler is called. It will read the Cause Register, and based on that it will call the appropriate routine.
  – Conceptually similar to the first option, except that the vectoring is done in software instead of hardware.
  – This technique is used in the MIPS R2000.
• Interrupts are prioritized
  – If 2 interrupts occur together, the higher priority one gets processed first.
• Re-entrancy
  – Sometimes as an interrupt is being processed, the interrupt may occur again.
  – This will cause the interrupt handler to itself be interrupted.
  – The handler must be careful to save registers etc. in case something like this happens, so that it can restart correctly.

Direct Memory Access
• So far all the devices we’ve seen rely on the CPU to perform the transfers, operations, etc.
  – E.g. when the drive interrupts the CPU, the CPU has to go to the drive’s data registers, read the 4 words that the drive has just retrieved, and store them into the buffers in memory.
  – Consumes valuable CPU cycles.
• A better idea would be to have a dedicated device (the Direct Memory Access Controller or DMAC) to do these transfers for us.
  – CPU sends a request to the DMAC.
    • Request includes information like where to get the data from (side#, track#, block# for disk, for example), how many words of data to transfer, and when to begin the transfer.
    • DMAC starts the transfer, copying the data directly between the device and memory (hence the name Direct Memory Access).
  – DMAC needs to arbitrate for memory bus.
  – When the DMAC is done, it will notify the CPU via interrupts (can also be via CPU polling).

Summary
• I/O is important so that we can interact with computers, and computers can interact with each other to transfer data etc.
• I/O devices include things like keyboards, monitors, disks, network cards (NIC), etc.
• I/O may be supported by polling or interrupts
  – Polling wastes CPU time, but the hardware is simple to design
  – Interrupts are more complex, and interrupt handling may take longer, but overall CPU cycles are saved.
• There are 3 main types of buses
  – Memory Bus
  – I/O Bus
  – Backplane Bus
• Most systems are made of combinations of 2 or 3 of these buses.
• Bus arbitration is required if >1 device can read/write the bus.
• Arbitration can be centralized or distributed.
• Several schemes can be used to implement arbitration
  – Round-robin
  – Daisy-Chain
  – Fixed.
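
As a check on the two cycle-counting examples worked in this session (the bus analysis and the polling versus interrupt comparison), the arithmetic can be reproduced with a short Python sketch. The function and parameter names are hypothetical, and 1 MB is taken as 10^6 bytes as in the slides' own formulas.

```python
# Sketch reproducing the cycle counting from the bus analysis example and the
# polling/interrupt examples. Names are illustrative; 1 MB = 10^6 bytes.

CYCLE_NS = 5  # 200 MHz bus clock -> 5 ns per cycle


def bus_bandwidth_mb_s(block_words, total_words=256):
    """Sustained read bandwidth in MB/s for a given block size in words."""
    groups = block_words // 4                     # 4-word groups per transaction
    # 1 address cycle + 40 cycles (200 ns) for the first 4 words, then each
    # group costs 2 transfer cycles + 2 idle cycles. Later 4-word reads
    # (20 ns = 4 cycles) overlap with the previous group's transfer and idle.
    cycles_per_transaction = 1 + 40 + groups * (2 + 2)
    transactions = total_words // block_words
    total_ns = transactions * cycles_per_transaction * CYCLE_NS
    return (total_words * 4) / total_ns * 1000    # bytes/ns -> MB/s


def cpu_fraction(events_per_s, cycles_per_event, cpu_hz, active_fraction=1.0):
    """Fraction of CPU cycles spent servicing a device."""
    return events_per_s * cycles_per_event * active_fraction / cpu_hz


chunks_per_s = 4 * 10**6 // 16                    # 4 MB/s in 16-byte chunks
polling = cpu_fraction(chunks_per_s, 400, 500 * 10**6)
interrupts = cpu_fraction(chunks_per_s, 500, 500 * 10**6, active_fraction=0.05)

print(f"4-word blocks:  {bus_bandwidth_mb_s(4):.2f} MB/s")
print(f"16-word blocks: {bus_bandwidth_mb_s(16):.2f} MB/s")
print(f"polling: {polling:.2%}, interrupts: {interrupts:.2%}")
```

The only difference between the polling and interrupt cases is the `active_fraction` term: polling pays its cost all the time, while interrupts pay only while the drive is actually transferring.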