SLIDES: Probe, Count and Classify: Categorizing Hidden Web Databases

advertisement

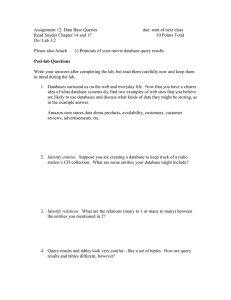

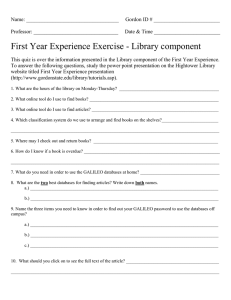

Probe, Count, and Classify: Categorizing Hidden Web Databases Panagiotis G. Ipeirotis Luis Gravano Columbia University Mehran Sahami E.piphany Inc. DIMACS Summer School Tutorial on New Frontiers in Data Mining Theme: Web -- Thursday, August 16, 2001 Surface Web vs. Hidden Web Keywords SUBMIT Surface Web Link structure Crawlable CLEAR Hidden Web No link structure Documents “hidden” behind search forms Do We Need the Hidden Web? Surface Web 2 billion pages Hidden Web 500 billion pages (?) Example: PubMed/MEDLINE PubMed: (www.ncbi.nlm.nih.gov/PubMed) search: “cancer” 1,341,586 matches AltaVista: “cancer site:www.ncbi.nlm.nih.gov” 21,830 matches Interacting With Searchable Text Databases Searching: Metasearchers Browsing: Yahoo!-like web directories: InvisibleWeb.com SearchEngineGuide.com Created Manually! Example from InvisibleWeb.com Health > Publications > PubMED Classifying Text Databases Automatically: Outline Definition of classification Classification through query probing Experiments Database Classification: Two Definitions Coverage-based classification: Database contains many documents about a category Coverage: #docs about this category Specificity-based classification: Database contains mainly documents about a category Specificity: #docs/|DB| Database Classification: An Example Category: Basketball Coverage-based classification ESPN.com, NBA.com, not KnicksTerritory.com Specificity-based classification NBA.com, KnicksTerritory.com, not ESPN.com Database Classification: More Details Thresholds for coverage and specificity Tc: coverage threshold (e.g., 100) Ts: specificity threshold (e.g., 0.5) Ideal(D): set of classes for database D Class C is in Ideal(D) if: D has “enough” coverage and specificity (Tc, Ts) for C and all of C’s ancestors and D fails to have both “enough” coverage and specificity for each child of C Tc, Ts “editorial” choices Ideal(D) SPORTS C=800 S=0.8 BASKETBALL S=0.5 Root HEALTH C=200 S=0.2 BASEBALL S=0.5 From Document to Database Classification If we know the categories of all documents inside the database, we are done! We do not have direct access to the documents. Databases do not export such data! How can we extract this information? Our Approach: Query Probing 1. Train a rule-based document classifier. 2. Transform classifier rules into queries. 3. Adaptively send queries to databases. 4. Categorize the databases based on adjusted number of query matches. Training a Rule-based Document Classifier Feature Selection: Zipf’s law pruning, followed by information-theoretic feature selection [Koller & Sahami’96] Classifier Learning: AT&T’s RIPPER [Cohen 1995] Input: A set of pre-classified, labeled documents Output: A set of classification rules IF linux THEN Computers IF jordan AND bulls THEN Sports IF lung AND cancer THEN Health Constructing Query Probes Transform each rule into a query IF lung AND cancer THEN health +lung +cancer IF linux THEN computers +linux Send the queries to the database Get number of matches for each query, NOT the documents (i.e., number of documents that match each rule) These documents would have been classified by the rule under its associated category! Adjusting Query Results Classifiers are not perfect! Queries do not “retrieve” all the documents in a category Queries for one category “match” documents not in this category From the classifier’s training phase we know its “confusion matrix” Confusion Matrix Correct class comp 10% of “Sports” classified as “Computers” sports health comp 0.70 0.10 0.00 sports 0.18 0.65 0.04 health 0.02 0.05 0.86 Probing results DB-real X 1000 5000 50 = 700+500+0 = 1200 180+3250+2 = 3432 20+250+43 = 313 10% of the 5000 “Sports” docs to “Computers” Classified into M . Coverage(D) ~ ECoverage(D) Confusion Matrix Adjustment: Compensating for Classifier’s Errors comp DB-real 1000 5000 50 = sports health comp 0.70 0.10 0.00 sports 0.18 0.65 0.04 health 0.02 0.05 0.86 -1 Probing results X 1200 3432 313 M is diagonally dominant, hence invertible Coverage(D) ~ M-1 . ECoverage(D) Multiplication better approximates the correct result Classifying a Database 1. 2. 3. 4. Send the query probes for the top-level categories Get the number of matches for each probe Calculate Specificity and Coverage for each category “Push” the database to the qualifying categories (with Specificity>Ts and Coverage>Tc) 5. 6. Repeat for each of the qualifying categories Return the classes that satisfy the coverage/specificity conditions The result is the Approximation of the Ideal classification Real Example: ACM Digital Library (Tc=100, Ts=0.5) Root Arts (0,0) Software (2060, 0.355) Computers (9919, 0.95) Hardware (2709, 0.465) C/C++ Health (0,0) Science (430, 0.042) Programming (1042, 0.18) Perl Java Visual Basic Sports (22, 0.008) Experiments: Data 72-node 4-level topic hierarchy from InvisibleWeb/Yahoo! (54 leaf nodes) 500,000 Usenet articles (April-May 2000): Newsgroups assigned by hand to hierarchy nodes RIPPER trained with 54,000 articles (1,000 articles per leaf) 27,000 articles used to construct estimations of the confusion matrices Remaining 419,000 articles used to build 500 Controlled Databases of varying category mixes, size Comparison With Alternatives DS: Random sampling of documents via query probes Callan et al., SIGMOD’99 Different task: Gather vocabulary statistics We adapted it for database classification TQ: Title-based Probing Yu et al., WISE 2000 Query probes are simply the category names Experiments: Metrics Expanded(N) Accuracy of classification results: Expanded(N) = N and all descendants Correct = Expanded(Ideal(D)) Classified = Expanded(Approximate(D)) N Precision = |Correct /\ Classified|/|Classified| Recall = |Correct /\ Classified|/|Correct| F-measure = 2.Precision.Recall/(Precision + Recall) Cost of classification: Number of queries to database Average F-measure, Controlled Databases 1.0 0.9 F-measure 0.8 0.7 0.6 0.5 DS [Callan et al.] PnC TQ [Yu et al.] 0.4 0.3 0 0.1 0.2 0.3 0.4 0.5 Ts, (Tc=8) 0.6 0.7 0.8 0.9 1 PnC =Probe & Count, DS=Document Sampling, TQ=Title-based probing Experimental Results: Controlled Databases Feature selection helps. Confusion-matrix adjustment helps. F-measure above 0.8 for most <Tc, Ts> combinations. Results degrade gracefully with hierarchy depth. Relatively small number of probes needed for most <Tc, Ts> combinations tried. Also, probes are short: 1.5 words on average; 4 words maximum. Both better performance and lower cost than DS [Callan et al. adaptation] and TQ [Yu et al.] Web Databases 130 real databases classified from InvisibleWeb™. Used InvisibleWeb’s categorization as correct. Simple “wrappers” for querying (only # of matches needed). The Ts, Tc thresholds are not known (unlike with the Controlled databases) but implicit in the IWeb categorization. We can learn/validate the thresholds (tricky but easy!). More details in the paper! Web Databases: Learning Thresholds 0.8 0.7 0.5 0.4 0.3 0.2 0.1 0 0.9 0.6 0.3 262144 0 65536 16384 4096 1024 Tec 256 64 16 4 1 F-measure 0.6 Tes Experimental Results: Web Databases 130 Real Web Databases. F-measure above 0.7 for best <Tc, Ts> combination learned. 185 query probes per database on average needed for classification. Also, probes are short: 1.5 words on average; 4 words maximum. Conclusions Accurate classification using only a small number of short queries No need for document retrieval Only need a result like: “X matches found” No need for any cooperation or special metadata from databases URL: http://qprober.cs.columbia.edu Current and Future Work • Build “wrappers” automatically • Extend to non-topical categories • Evaluate impact of varying search interfaces (e.g., Boolean vs. ranked) • Extend to other classifiers (e.g., SVMs or Bayesian models) • Integrate with searching (connection with database selection?) Questions? Contributions Easy, inexpensive method for database classification Uses results from document classification “Indirect” classification of the documents in a database Does not inspect documents, only number of matches Adjustment of results according to classifier’s performance Easy wrapper construction No need for any metadata from the database Related Work Callan et al., SIGMOD 1999 Gauch et al., Profusion Dolin et al., Pharos Yu et al., WISE 2000 Raghavan and Garcia Molina, VLDB 2001 Controlled Databases 500 databases built using 419,000 newsgroup articles One label per document 350 databases with single (not necessarily leaf) category 150 databases with varying category mixes Database size ranges from 25 to 25,000 articles Indexed and queries using SMART F-measure for Different Hierarchy Depths 1.00 0.95 0.90 F-measure 0.85 0.80 0.75 0.70 0.65 0.60 DS [Callan et al.] PnC TQ [Yu et al.] 0.55 0.50 0 1 Level 2 3 PnC =Probe & Count, DS=Document Sampling, TQ=Title-based probing Tc=8, Ts=0.3 Query Probes Per Controlled Database Interactions with Database 2000 1750 1500 1250 PnC DS TQ 1000 750 500 0 0.1 0.2 0.3 0.4 0.5 Tes 0.6 0.7 0.8 0.9 1 (a) Interactions with Database 2000 1750 1500 1250 PnC DS TQ 1000 750 500 250 1 2 4 8 16 32 64 Tec (b) 128 256 512 1024 2048 4096 8192 Web Databases: Number of Query Probes 600 400 300 200 100 1 4 16 64 256 1024 4096 16384 65536 262144 0.9 0.6 Tes 0.3 0 0 Tec Query Probes 500 3-fold Cross-validation 0.9 0.8 0.7 F-1 F-2 F-3 0.6 0.5 0.4 0.3 0.2 0.1 0 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 13 6 10 7 26 2 21 4 52 4 42 88 53 8 65 76 4 32 38 16 92 81 96 40 48 20 2 24 10 51 6 25 8 12 64 32 16 8 4 2 1 0 Real Confusion Matrix for Top Node of Hierarchy Health Sports Science Computers Arts Health 0.753 0.018 0.124 0.021 0.017 Sports 0.006 0.171 0.021 0.016 0.064 Science 0.016 0.024 0.255 0.047 0.018 Computers 0.004 0.042 0.080 0.610 0.031 Arts 0.004 0.024 0.027 0.031 0.298