correlation

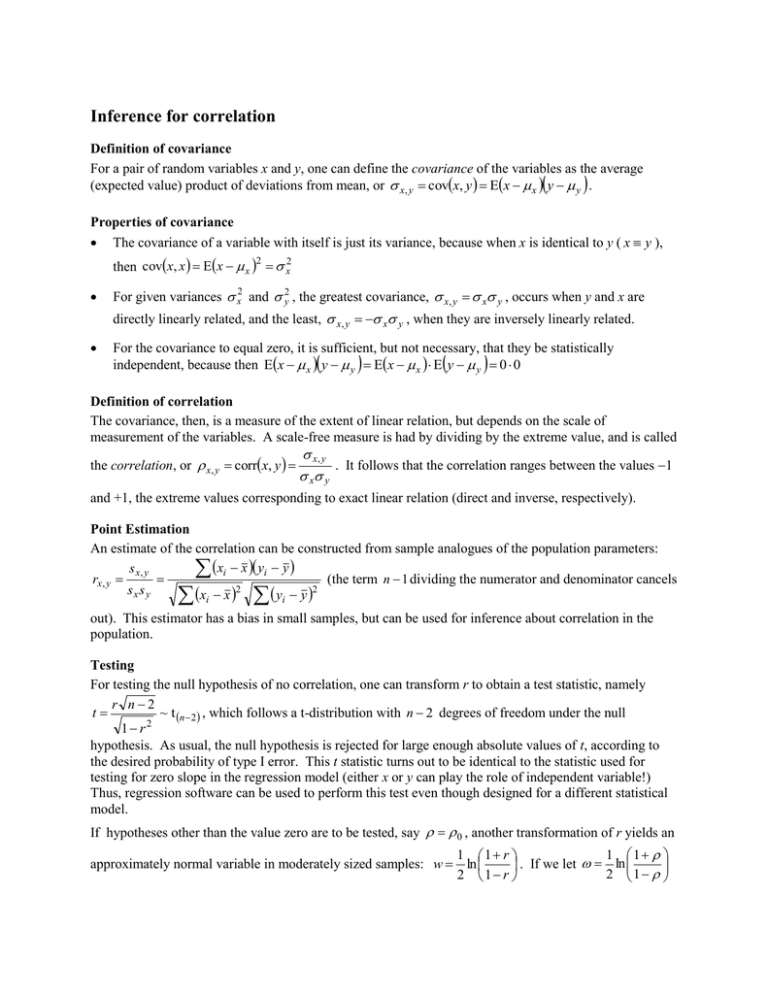

Inference for correlation

Definition of covariance

For a pair of random variables $x$ and $y$, one can define the covariance of the variables as the average (expected value) of the product of deviations from the mean, or

$$\sigma_{x,y} \equiv \operatorname{cov}(x,y) \equiv E\big[(x-\mu_x)(y-\mu_y)\big].$$

Properties of covariance

The covariance of a variable with itself is just its variance, because when $x$ is identical to $y$ ($x \equiv y$), then

$$\operatorname{cov}(x,x) = E\big[(x-\mu_x)^2\big] \equiv \sigma_x^2.$$

For given variances $\sigma_x^2$ and $\sigma_y^2$, the greatest covariance, $\sigma_{x,y} = \sigma_x\sigma_y$, occurs when $y$ and $x$ are directly linearly related. The least, $\sigma_{x,y} = -\sigma_x\sigma_y$, occurs when they are inversely linearly related.

For the covariance to equal zero, it is sufficient, but not necessary, that the variables be statistically independent, because then

$$E\big[(x-\mu_x)(y-\mu_y)\big] = E[x-\mu_x]\,E[y-\mu_y] = 0 \cdot 0 = 0.$$

Definition of correlation

The covariance, then, is a measure of the extent of linear relation, but it depends on the scale of measurement of the variables. A scale-free measure is obtained by dividing by the extreme value $\sigma_x\sigma_y$. It is called the correlation, or

$$\rho_{x,y} \equiv \operatorname{corr}(x,y) \equiv \frac{\sigma_{x,y}}{\sigma_x\sigma_y}.$$

It follows that the correlation ranges between the values $-1$ and $+1$. The extreme values correspond to exact linear relation (inverse and direct, respectively).

Point Estimation

An estimate of the correlation can be constructed from sample analogues of the population parameters:

$$r_{x,y} = \frac{s_{x,y}}{s_x s_y} = \frac{\sum_i (x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_i (x_i-\bar{x})^2 \, \sum_i (y_i-\bar{y})^2}}$$

(the divisor $n-1$ in the numerator and denominator cancels out). This estimator is biased in small samples, but it can be used for inference about the correlation in the population.

Testing

For testing the null hypothesis of no correlation, $H_0\colon \rho = 0$, one can transform $r$ to obtain a test statistic, namely

$$t = \frac{r\sqrt{n-2}}{\sqrt{1-r^2}} \sim t_{n-2}.$$

This statistic follows a $t$-distribution with $n-2$ degrees of freedom under the null hypothesis. As usual, the null hypothesis is rejected for large enough absolute values of $t$, according to the desired probability of type I error.
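The definitions and the test statistic above can be checked numerically. The following is a minimal Python sketch that uses only the standard library. The function names `sample_cov`, `sample_corr` and `corr_t_stat`, and the five-point data set, are hypothetical illustrations rather than part of the original notes. The sketch uses the $n-1$ divisor throughout and does not compute p-values. The resulting $|t|$ still has to be compared with a $t_{n-2}$ critical value from a table or a statistics library.

```python
import math


def sample_cov(x, y):
    """Sample covariance s_{x,y}: sum of products of deviations, divided by n - 1."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)


def sample_corr(x, y):
    """r = s_{x,y} / (s_x s_y); the 1/(n - 1) factors cancel."""
    # cov(x, x) is the sample variance s_x^2, so s_x = sqrt(sample_cov(x, x)).
    return sample_cov(x, y) / math.sqrt(sample_cov(x, x) * sample_cov(y, y))


def corr_t_stat(r, n):
    """t = r sqrt(n - 2) / sqrt(1 - r^2); t-distributed with n - 2 df under H0: rho = 0."""
    if not -1 < r < 1:
        raise ValueError("t is undefined when |r| = 1 (exact linear relation)")
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


if __name__ == "__main__":
    x = [1, 2, 3, 4, 5]
    y = [2, 4, 5, 4, 5]
    r = sample_corr(x, y)
    print(sample_cov(x, x))                        # 2.5, the sample variance of x
    print(r)                                       # sqrt(0.6), about 0.7746
    print(corr_t_stat(r, len(x)))                  # sqrt(4.5), about 2.1213
    print(sample_corr(x, [3 - 2 * v for v in x]))  # -1.0: exact inverse linear relation
```

The example data give $r \approx 0.775$, which is fairly large. With only $n = 5$ points, however, the $t$ statistic has just 3 degrees of freedom.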
This $t$ statistic turns out to be identical to the statistic used for testing for zero slope in the regression model (either $x$ or $y$ can play the role of the independent variable!). Thus, regression software can be used to perform this test even though it is designed for a different statistical model.

If hypotheses about values other than zero are to be tested, say $H_0\colon \rho = \rho_0$, another transformation of $r$ yields an approximately normal variable in moderately sized samples:

$$w = \frac{1}{2}\ln\frac{1+r}{1-r}.$$

Let

$$\zeta = \frac{1}{2}\ln\frac{1+\rho}{1-\rho}$$

be the correspondingly transformed population correlation. Then

$$w \overset{\text{approx.}}{\sim} N\!\left(\zeta, \frac{1}{n-3}\right),$$

i.e. $w$ is approximately unbiased with variance $1/(n-3)$. A standard normal test statistic is therefore formed as

$$z = \frac{w-\zeta_0}{\sqrt{1/(n-3)}} = (w-\zeta_0)\sqrt{n-3},$$

where $\zeta_0$ is the transformed value of $\rho_0$. The critical (rejection) region of the test is determined as usual according to the direction of the test (alternative) and the probability of type I error.

Confidence interval for $\rho$

Confidence intervals for $\rho$ are obtained by back-transforming the corresponding interval for $\zeta$. This latter interval is constructed as

$$w \pm z_{1-\alpha/2}\sqrt{\frac{1}{n-3}}.$$

To get the inverse transformation, we solve for $\rho$ in terms of $\zeta$:

$$\rho = \frac{e^{2\zeta}-1}{e^{2\zeta}+1}.$$

We then apply this transformation to the upper and lower confidence limits, respectively. That is, the upper confidence limit for $\rho$ is obtained by substituting $w + z_{1-\alpha/2}\sqrt{1/(n-3)}$ for $\zeta$, and similarly for the lower limit with $w - z_{1-\alpha/2}\sqrt{1/(n-3)}$. The resulting interval for $\rho$ is not symmetric about $r$ unless $w$ happens by chance to be zero.
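The regression equivalence, the transformation test for $\rho_0$ and the back-transformed interval can be sketched the same way. This is a minimal, standard-library Python sketch under stated assumptions:

- All function names are hypothetical.
- `math.atanh` and `math.tanh` stand in for the logarithmic and exponential formulas. They are the same functions, and `tanh` avoids overflow in $e^{2\zeta}$.
- The normal quantile $z_{1-\alpha/2}$ comes from `statistics.NormalDist` (Python 3.8+).
- The normal approximation is only trusted for moderately sized samples, as the text says.

```python
import math
from statistics import NormalDist


def centered_sums(x, y):
    """Return (Sxx, Syy, Sxy): sums of squared and cross-multiplied deviations from the means."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxx, syy, sxy


def corr_t_from_data(x, y):
    """t = r sqrt(n - 2) / sqrt(1 - r^2), assuming |r| < 1."""
    sxx, syy, sxy = centered_sums(x, y)
    r = sxy / math.sqrt(sxx * syy)
    return r * math.sqrt(len(x) - 2) / math.sqrt(1 - r * r)


def slope_t_from_data(x, y):
    """t = b / SE(b) for the least-squares slope of y regressed on x."""
    n = len(x)
    sxx, syy, sxy = centered_sums(x, y)
    b = sxy / sxx
    sse = syy - b * sxy                    # residual sum of squares of the fitted line
    se_b = math.sqrt(sse / (n - 2) / sxx)  # standard error of the slope
    return b / se_b


def fisher_z(r):
    """w = (1/2) ln((1 + r) / (1 - r)), which equals atanh(r)."""
    return math.atanh(r)


def inverse_fisher_z(zeta):
    """rho = (e^{2 zeta} - 1) / (e^{2 zeta} + 1), which equals tanh(zeta)."""
    return math.tanh(zeta)


def fisher_z_test(r, n, rho0):
    """z = (w - zeta0) sqrt(n - 3); approximately N(0, 1) under H0: rho = rho0."""
    return (fisher_z(r) - fisher_z(rho0)) * math.sqrt(n - 3)


def corr_conf_interval(r, n, level=0.95):
    """Back-transform w -/+ z_{1-alpha/2} / sqrt(n - 3) to the rho scale."""
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)
    half_width = z_crit / math.sqrt(n - 3)
    w = fisher_z(r)
    return inverse_fisher_z(w - half_width), inverse_fisher_z(w + half_width)


if __name__ == "__main__":
    x = [1, 2, 3, 4, 5]
    y = [2, 4, 5, 4, 5]
    print(corr_t_from_data(x, y), slope_t_from_data(x, y))  # both about 2.1213, i.e. sqrt(4.5)
    print(fisher_z_test(0.6, 28, 0.3))                      # z statistic for H0: rho = 0.3
    print(corr_conf_interval(0.6, 28))                      # about (0.29, 0.80)
```

For the five-point data, both $t$ statistics come out as $\sqrt{4.5} \approx 2.121$. For $r = 0.6$ with $n = 28$, the 95% interval is roughly $(0.29, 0.80)$. It extends further below $r$ than above it, which is the asymmetry the text describes.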