Probability and Statistics

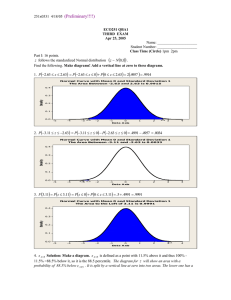

Probability vs. Statistics
• In probability, we build up, from the mathematics of permutations and combinations and from set theory, a mathematical theory of how the outcomes of an experiment will be distributed.
• In statistics, we go in the opposite direction: we start from actual data and measure it to determine what mathematical model it fits.
• Statistical measures are estimates of the corresponding properties of the underlying random variables.

Basic Statistics
• Let X be a finite sequence of numbers (data values) $x_1, \dots, x_n$.
• E.g. X = 1, 3, 2, 4, 1, 4, 1 (n = 7)
• Order doesn’t matter, but we need a way of allowing duplicates (a “multiset”).
• Some measures:
  – Maximum: 4
  – Minimum: 1
  – Median (as many values ≥ it as ≤ it): sorted, the data are 1, 1, 1, 2, 3, 4, 4, so the median is 2
  – Mode (the value with maximum frequency): 1

Sample Mean
• Let X be a finite sequence of numbers $x_1, \dots, x_n$.
• The sample mean of X is what we usually call the average:
  $$\mu_X = \frac{1}{n} \sum_{i=1}^{n} x_i$$
• For example, if X = 1, 3, 2, 4, 1, 4, 1, then $\mu_X = 16/7 \approx 2.29$.
• Note that the mean need not be one of the data values.
• We might as well write this as E[X], following the notation used for random variables.

Sample Variance
• The sample variance of a sequence of data points is the mean of the squared differences from the sample mean:
  $$\mathrm{Var}(X) = \frac{1}{n} \sum_{i=1}^{n} (x_i - \mu_X)^2$$

Standard Deviation
• The standard deviation is the square root of the variance:
  $$\sigma_X = \sqrt{\sigma_X^2} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (x_i - \mu_X)^2}$$
• σ is a measure of spread in the same units as the data.

σ Measures “Spread”
• X = 1, 2, 3
  – $\mu = 2$
  – $\sigma^2 = \tfrac{1}{3}\left((1-2)^2 + (2-2)^2 + (3-2)^2\right) = 2/3$
  – $\sigma \approx .82$
• Y = 1, 2, 3, 4, 5
  – $\mu = 3$
  – $\sigma^2 = \tfrac{1}{5}\left((1-3)^2 + (2-3)^2 + (3-3)^2 + (4-3)^2 + (5-3)^2\right) = 10/5 = 2$
  – $\sigma \approx 1.4$

Small σ Indicates “Centeredness”
• X = 1, 2, 3
  – $\sigma \approx .82$
• Z = 1, 2, 2, 2, 3
  – $\mu = 2$
  – $\sigma^2 = \tfrac{1}{5}\left((1-2)^2 + 3 \cdot (2-2)^2 + (3-2)^2\right) = 2/5$
  – $\sigma \approx .63$
• W = 1, 1, 2, 3, 3 (“bimodal”)
  – $\mu = 2$
  – $\sigma^2 = \tfrac{1}{5}\left(2 \cdot (1-2)^2 + (2-2)^2 + 2 \cdot (3-2)^2\right) = 4/5$
  – $\sigma \approx .89$

Covariance
• Sometimes two quantities tend to vary in the same way, even though neither is exactly a function of the other.
• For example, the height and weight of people.
[Figure: scatter plot with axes Weight and Height]

Covariance for Random Variables
• Roll two dice. Let X = the larger of the two values and Y = the sum of the two values.
• Mean of X = (1/36) × (1×1 + 3×2 + 5×3 + 7×4 + 9×5 + 11×6) = 161/36 ≈ 4.47, where each term is (number of outcomes) × (value of X):
  – 1×1: the only possibility is (1,1)
  – 3×2: (1,2), (2,1), (2,2)
  – 5×3: (1,3), (2,3), (3,3), (3,2), (3,1)
• Mean of Y = 7
• How do we say that X tends to be large when Y is large, and vice versa?

Joint Probability
• f(x,y) = Pr(X=x and Y=y) is a probability.
• It sums to 1 over all possible x and y.
• Pr(X=1 and Y=12) = 0
• Pr(X=5 and Y=9) = 2/36 [(4,5), (5,4)]
• Pr(X≤5 and Y≥8) = 6/36 [(3,5), (5,3), (4,4), (4,5), (5,4), (5,5)]

Covariance of Random Variables
• Cov(X, Y) = E[ (X − μX) ∙ (Y − μY) ]
• INSIDE the brackets, each of X and Y is compared to its own mean.
• The OUTER expectation is with respect to the joint probability that X=x AND Y=y.
• Positive if X tends to be greater than its mean when Y is greater than its mean.
• Negative if X tends to be greater than its mean when Y is less than its mean.
• But what are the units?
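
The two-dice example can be checked by brute force. The following is a minimal sketch, assuming two fair six-sided dice and using exact fractions; the names `joint` and `expect` are illustrative and not part of the slides. It builds the joint distribution f(x, y) by enumerating all 36 outcomes, then evaluates the outer expectation in Cov(X, Y) with respect to that joint distribution.

```python
from fractions import Fraction
from itertools import product

# Joint distribution f(x, y) = Pr(X = x and Y = y) for two fair dice,
# where X is the larger of the two values and Y is their sum.
joint = {}
for a, b in product(range(1, 7), repeat=2):
    key = (max(a, b), a + b)
    joint[key] = joint.get(key, Fraction(0)) + Fraction(1, 36)

def expect(g):
    """Expectation of g(x, y) with respect to the joint distribution."""
    return sum(p * g(x, y) for (x, y), p in joint.items())

mean_x = expect(lambda x, y: x)
mean_y = expect(lambda x, y: y)
# Each variable is compared to its own mean inside the expectation.
cov_xy = expect(lambda x, y: (x - mean_x) * (y - mean_y))

print(sum(joint.values()))        # 1
print(joint.get((1, 12), 0))      # 0
print(joint[(5, 9)])              # 1/18, i.e. 2/36
print(mean_x, mean_y)             # 161/36 7
print(cov_xy)                     # 35/12
```

The covariance comes out positive (35/12 ≈ 2.92), which matches the intuition that the larger die value tends to be above its mean when the sum is above its mean.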

Sample Covariance
• Suppose we just have the data $x_1, \dots, x_n$ and $y_1, \dots, y_n$, and we want to know the extent to which these two sets of values covary (e.g. height and weight). The sample covariance is
  $$\frac{1}{n} \sum_{i=1}^{n} (x_i - \mu_X)(y_i - \mu_Y)$$
• This is an estimate of the covariance.

A Better Measure: Correlation
• Correlation is covariance scaled to [-1, 1]:
  $$\rho_{XY} = \frac{\mathrm{Cov}(X, Y)}{\sigma_X \sigma_Y} = \frac{E[(X - \mu_X)(Y - \mu_Y)]}{\sqrt{\mathrm{Var}(X)\,\mathrm{Var}(Y)}}$$
• This is a unitless number!
• If X and Y vary in the same direction, then the correlation is close to +1.
• If they vary inversely, then the correlation is close to -1.
• If neither tends to be above or below its own mean when the other is, then the correlation is close to 0.

Positively Correlated Data

Correlation Examples
http://upload.wikimedia.org/wikipedia/commons/d/d4/Correlation_examples2.svg
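
The sample formulas can be written out directly. The following is a minimal sketch, assuming the divide-by-n definitions used in these slides (not the n − 1 variant); the function names and the height/weight numbers are invented purely for illustration and are not real measurements.

```python
import math

def mean(xs):
    return sum(xs) / len(xs)

def variance(xs):
    """Mean squared difference from the sample mean (divides by n)."""
    mu = mean(xs)
    return sum((x - mu) ** 2 for x in xs) / len(xs)

def std_dev(xs):
    return math.sqrt(variance(xs))

def covariance(xs, ys):
    """Mean product of paired differences from the two sample means."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    mu_x, mu_y = mean(xs), mean(ys)
    return sum((x - mu_x) * (y - mu_y) for x, y in zip(xs, ys)) / len(xs)

def correlation(xs, ys):
    """Covariance scaled by both standard deviations (unitless)."""
    return covariance(xs, ys) / (std_dev(xs) * std_dev(ys))

# Hypothetical paired data, made up for this example only.
heights_cm = [150, 160, 170, 180, 190]
weights_kg = [52, 58, 69, 77, 90]
heights_m = [h / 100 for h in heights_cm]

print(round(std_dev([1, 2, 3]), 2))           # 0.82
print(covariance(heights_cm, weights_kg))     # in cm * kg
print(covariance(heights_m, weights_kg))      # in m * kg: 100 times smaller
print(correlation(heights_cm, weights_kg))    # close to +1
print(correlation(heights_m, weights_kg))     # same value: no units
```

Changing the height unit from centimetres to metres rescales the covariance but leaves the correlation unchanged, which is why correlation is the easier number to compare across data sets.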