
Generalized discriminant rule for binary data when training and test populations differ on their descriptive parameters.

Julien Jacques¹ and Christophe Biernacki²

¹ Laboratoire de Statistiques et Analyse des Données, Université Pierre Mendès France, 38040 Grenoble Cedex 9, France. julien.jacques@iut2.upmf-grenoble.fr

² Laboratoire Paul Painlevé UMR CNRS 8524, Université Lille I, 59655 Villeneuve d’Ascq Cedex, France. christophe.biernacki@math.univ-lille1.fr

Summary. Standard discriminant analysis assumes that the training sample and the test sample come from the same population. When the two samples come from populations whose descriptive parameters differ, generalized discriminant analysis adapts the classification rule learned on the training population to the test population by estimating a link between the two populations. This paper extends existing work, developed in a multi-normal context, to the case of binary data. The main challenge is to define a link between the two binary populations; to address it, we assume that the binary data arise from the discretization of latent Gaussian data. Estimation methods are presented, and an application in a biological context illustrates the approach.

Key words: Model-based discriminant analysis, relationship between populations,

latent class assumption.

1 Introduction

Standard discriminant analysis assumes that the labeled training sample and the test sample to be classified come from the same population. Since the work of Fisher in 1936 [Fis36], who introduced a linear discriminant rule between two groups, numerous developments have been proposed (see [McL92] for a survey). All of them concern the nature of the discriminant rule: parametric quadratic rule, semiparametric rule or nonparametric rule.

An alternative development, introduced by Van Franeker and Ter Braak [Van93] and developed further by Biernacki et al. [Bie02], considers the case in which the training sample does not necessarily come from the same population as the test sample. Biernacki et al. define several models of generalized discriminant analysis in a multi-normal context and test them in a biological setting in which the two populations consist of birds of the same species but of different geographical origins.


But in many domains, such as insurance or medicine, a large number of applications deal with binary data. The goal of this paper is to extend generalized discriminant analysis, established in a multi-normal context, to the case of binary data. The difference between the training and test populations can be geographical (as in the biological application mentioned above), but also temporal or of another kind.

The next section presents the data and the latent class model for both the training and test populations. Section 3 assumes that the binary data result from the discretization of latent continuous variables. This hypothesis helps to establish a link between the two populations and leads us to propose eight models of generalized discriminant analysis for binary data. Section 4 then describes the estimation of the model parameters. In Section 5, an application in a biological context illustrates that generalized discriminant analysis can give lower classification error rates than standard discriminant analysis or clustering. Finally, the last section discusses possible extensions of the present work.

2 The data and the latent class model

The data consist of two samples: the first sample $S$, labeled and drawn from the training population $P$, and the second sample $S^*$, unlabeled and drawn from the test population $P^*$. The two populations can be different.

The training sample is composed of $n$ pairs $(x_1, z_1), \ldots, (x_n, z_n)$, where $x_i$ is the explanatory vector for the $i$th object, and $z_i = (z_i^1, \ldots, z_i^K)$ with $z_i^k$ equal to 1 if the $i$th object belongs to the $k$th group, and 0 if not ($i = 1, \ldots, n$, $k = 1, \ldots, K$), where $K$ denotes the number of groups. Each pair $(x_i, z_i)$ is an independent realization of $(X, Z)$ with distribution:

$$X^j_{|Z^k=1} \sim \mathcal{B}(\alpha_{kj}) \quad \forall j = 1, \ldots, d \qquad \text{and} \qquad Z \sim \mathcal{M}(1, p_1, \ldots, p_K), \tag{1}$$

where $\mathcal{B}(\alpha_{kj})$ is the Bernoulli distribution with parameter $\alpha_{kj}$ ($0 < \alpha_{kj} < 1$), and $\mathcal{M}(1, p_1, \ldots, p_K)$ is the multinomial distribution of order one with parameters $p_1, \ldots, p_K$ ($0 < p_k < 1$, $\sum_{k=1}^{K} p_k = 1$).

Moreover, using the latent class model assumption that explanatory variables are conditionally independent [Cel91], the probability function of $X$ is:

$$f(x^1, \ldots, x^d) = \sum_{k=1}^{K} p_k \prod_{j=1}^{d} \alpha_{kj}^{x^j} (1 - \alpha_{kj})^{1 - x^j}. \tag{2}$$
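As an illustration of the probability function (2), the following minimal Python sketch evaluates the Bernoulli mixture for one binary vector. The function name `mixture_pmf` and the numerical values of the proportions and Bernoulli parameters are hypothetical, chosen only to make the example runnable.

```python
from itertools import product
from math import prod

def mixture_pmf(x, p, alpha):
    """Probability of the binary vector x under the latent class model (2).

    x     : sequence of d values in {0, 1}
    p     : K group proportions summing to 1
    alpha : K lists of d Bernoulli parameters, alpha[k][j] = alpha_kj
    """
    total = 0.0
    for p_k, alpha_k in zip(p, alpha):
        # Conditional independence: within group k the d variables multiply.
        total += p_k * prod(a if xj == 1 else 1.0 - a for xj, a in zip(x, alpha_k))
    return total

# Hypothetical parameters: K = 2 groups, d = 3 binary variables.
p = [0.4, 0.6]
alpha = [[0.9, 0.2, 0.7], [0.3, 0.8, 0.5]]

print(mixture_pmf([1, 0, 1], p, alpha))
# Sanity check: the probabilities of the 2^d possible vectors sum to 1.
print(sum(mixture_pmf(x, p, alpha) for x in product([0, 1], repeat=3)))
```

For the vector (1, 0, 1), the first group contributes 0.4 × 0.9 × 0.8 × 0.7 and the second 0.6 × 0.3 × 0.2 × 0.5, which gives 0.2196 in total.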

The test sample is composed of $n^*$ pairs $(x_1^*, z_1^*), \ldots, (x_{n^*}^*, z_{n^*}^*)$, where the $z_i^*$ are unknown (the variables are the same as in the training sample). These pairs are independent realizations of $(X^*, Z^*)$, which has the same distribution as $(X, Z)$ but with different parameters, denoted $\alpha^*_{kj}$ and $p^*_k$.

Our aim is to estimate the unknown labels $z_1^*, \ldots, z_{n^*}^*$ by using information from both the training and test samples. The challenge is to find a link between the populations $P$ and $P^*$.


3 Relationship between test and training populations

In a multi-normal context, a linear stochastic relationship between $P$ and $P^*$ is not only justified (with very few assumptions) but also intuitive [Bie02]. In the binary context, since no such intuitive relationship seems to exist, an additional assumption is made: the binary data are assumed to arise from the discretization of latent Gaussian variables. This assumption is not new in statistics: see for example the work of Thurstone [Thu27], who used it in his comparative judgment model to choose between two stimuli, or that of Everitt [Eve87], who proposed a classification algorithm for binary, categorical and continuous data.

We thus suppose that the explanatory variables $X^j_{|Z^k=1}$, with Bernoulli distribution $\mathcal{B}(\alpha_{kj})$, arise from the following discretization of latent continuous variables $Y^j_{|Z^k=1}$ with normal distribution $\mathcal{N}(\mu_{kj}, \sigma_{kj}^2)$ and, as for the discrete variables, we assume that the $Y^j_{|Z^k=1}$ are also conditionally independent:

$$X^j_{|Z^k=1} = \begin{cases} 0 & \text{if } \lambda_j Y^j_{|Z^k=1} < 0 \\ 1 & \text{if } \lambda_j Y^j_{|Z^k=1} \geq 0 \end{cases} \qquad \text{for } j = 1, \ldots, d, \tag{3}$$

where $\lambda_j \in \{-1, 1\}$ is introduced so that we do not have to decide in advance which value of $X^j$, 0 or 1, corresponds to a positive value of $Y^j$. The role of this new parameter is thus to prevent the binary variables from inheriting the natural order induced by the continuous variables.

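We can thus derive the following relationship between $\alpha_{kj}$ and $\lambda_j$, $\mu_{kj}$ and $\sigma_{kj}$:

$$\alpha_{kj} = p(X^j_{|Z^k=1} = 1) = \begin{cases} \Phi\left(\dfrac{\mu_{kj}}{\sigma_{kj}}\right) & \text{if } \lambda_j = 1 \\[2ex] 1 - \Phi\left(\dfrac{\mu_{kj}}{\sigma_{kj}}\right) & \text{if } \lambda_j = -1 \end{cases} \tag{4}$$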

where $\Phi$ is the $\mathcal{N}(0, 1)$ cumulative distribution function. It is worth noting that the assumption of conditional independence makes the computation of $\alpha_{kj}$ very simple. It avoids computing multidimensional integrals, which are very difficult to evaluate, especially when the problem dimension $d$ is large, as is often the case with binary data.
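The discretization (3) and the closed form (4) can be checked against each other numerically. The sketch below is only an illustration: the function names and the latent parameter values are hypothetical, and the standard normal distribution function is taken from Python's `statistics.NormalDist`.

```python
import random
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution N(0, 1)

def alpha_from_latent(mu, sigma, lam):
    """Equation (4): P(X = 1) when X thresholds Y ~ N(mu, sigma^2) with sign lam."""
    q = PHI.cdf(mu / sigma)
    return q if lam == 1 else 1.0 - q

def discretize(y, lam):
    """Equation (3): X = 1 if lam * y >= 0, and 0 otherwise."""
    return 1 if lam * y >= 0 else 0

# Hypothetical latent parameters, chosen only for illustration.
mu, sigma, lam = 0.5, 2.0, -1
rng = random.Random(0)
draws = [discretize(rng.gauss(mu, sigma), lam) for _ in range(100_000)]

# Closed form (4) next to the empirical frequency of ones under (3).
print(alpha_from_latent(mu, sigma, lam), sum(draws) / len(draws))
```

With these values the closed form is 1 − Φ(0.25), and the simulated frequency should agree with it up to Monte Carlo error.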

As for the variable $X$, we also suppose that the discrete variable $X^*$ arises from the discretization of the latent continuous variable $Y^*$. The equations are the same as (3) and (4), with $\alpha_{kj}$ replaced by $\alpha^*_{kj}$, $\mu_{kj}$ by $\mu^*_{kj}$ and $\sigma_{kj}$ by $\sigma^*_{kj}$. The parameter $\lambda^*_j$ is naturally assumed to be equal to $\lambda_j$.

In a Gaussian context, Biernacki et al. [Bie02] defined the stochastic linear relationship (5) between the latent continuous variable $Y$ of $P$ and $Y^*$ of $P^*$ by assuming two plausible hypotheses: the transformation between $P$ and $P^*$ is $C^1$, and the $j$th component $Y^{*j}_{|Z^{*k}=1}$ of $Y^*_{|Z^{*k}=1}$ only depends on the $j$th component $Y^j_{|Z^k=1}$ of $Y_{|Z^k=1}$:

$$Y^*_{|Z^{*k}=1} \sim A_k Y_{|Z^k=1} + b_k, \tag{5}$$

where $A_k$ is a diagonal matrix of $\mathbb{R}^{d \times d}$ containing the elements $a_{kj}$ ($1 \leq j \leq d$) and $b_k$ is a vector of $\mathbb{R}^d$.

Using this relationship, we obtain after some calculation the following relationship between the parameters $\alpha^*_{kj}$ and $\alpha_{kj}$:

$$\alpha^*_{kj} = \Phi\left(\delta_{kj} \Phi^{-1}(\alpha_{kj}) + \lambda_j \gamma_{kj}\right), \tag{6}$$

where $\delta_{kj} = \operatorname{sgn}(a_{kj})$, $\operatorname{sgn}(t)$ denoting the sign of $t$, and where $\gamma_{kj} = b_{kj} / (|a_{kj}| \sigma_{kj})$.
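Relationship (6) can be verified numerically for a single pair (k, j). The sketch below, with hypothetical function names and parameter values, computes the test-population parameter in two ways: directly from the transformed latent variable, which by (5) is normal with mean aμ + b and standard deviation |a|σ, and through the link (6).

```python
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution N(0, 1)

def alpha_from_latent(mu, sigma, lam):
    """Equation (4): P(X = 1) for a latent N(mu, sigma^2) variable."""
    q = PHI.cdf(mu / sigma)
    return q if lam == 1 else 1.0 - q

def alpha_star(alpha, delta, gamma, lam):
    """Equation (6): alpha* = Phi(delta * Phi^{-1}(alpha) + lam * gamma)."""
    return PHI.cdf(delta * PHI.inv_cdf(alpha) + lam * gamma)

# Hypothetical latent and link parameters for one pair (k, j).
mu, sigma, a, b = 0.8, 1.5, -2.0, 0.6
delta = 1 if a > 0 else -1
gamma = b / (abs(a) * sigma)

checks = []
for lam in (1, -1):
    alpha = alpha_from_latent(mu, sigma, lam)
    # Direct route: a*Y + b ~ N(a*mu + b, (|a|*sigma)^2), then apply (4).
    direct = alpha_from_latent(a * mu + b, abs(a) * sigma, lam)
    # Link route: only alpha, delta, gamma and lam are needed.
    via_link = alpha_star(alpha, delta, gamma, lam)
    checks.append((lam, direct, via_link))
    print(lam, direct, via_link)
```

Both routes should agree up to floating-point error for either sign of λ, which reflects the fact that the link depends on the latent parameters only through δ, γ and λ.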

Thus, conditionally on the $\alpha_{kj}$ being known (they will be estimated in practice), the estimates of the $Kd$ continuous parameters $\alpha^*_{kj}$ are obtained from estimates of the parameters of the link between $P$ and $P^*$: $\delta_{kj}$, $\gamma_{kj}$ and $\lambda_j$. Since there is one $\gamma_{kj}$ for each pair $(k, j)$, the number of continuous link parameters is also $Kd$. Estimating the link between $P$ and $P^*$ is therefore equivalent to estimating the $\alpha^*_{kj}$ directly, without using population $P$. Consequently, the number of free continuous parameters must be reduced, which is done by introducing sub-models that impose constraints on the transformation between the two populations $P$ and $P^*$.

Model $M_1$: $\sigma_{kj}$ and $A_k$ are free and $b_k = 0$ ($k = 1, \ldots, K$). The model is:

$$\alpha^*_{kj} = \Phi\left(\delta_{kj} \Phi^{-1}(\alpha_{kj})\right) \quad \text{with} \quad \delta_{kj} \in \{-1, 1\}.$$

This transformation corresponds either to the identity or to a permutation of the modalities of $X$.

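Model $M_2$: $\sigma_{kj} = \sigma$, $A_k = a I_d$ with $a > 0$, $I_d$ the identity matrix of $\mathbb{R}^{d \times d}$, and $b_k = \beta e$, with $\beta \in \mathbb{R}$ and $e$ the vector of dimension $d$ whose components are all equal to 1 (the transformation is dimension and group independent). The model is:

$$\alpha^*_{kj} = \Phi\left(\Phi^{-1}(\alpha_{kj}) + \lambda'_j |\gamma|\right) \quad \text{with} \quad \lambda'_j = \lambda_j \operatorname{sgn}(\gamma) \in \{-1, 1\} \ \text{and} \ |\gamma| \in \mathbb{R}^+.$$

The assumption $a > 0$ is made to ensure identifiability of the model, and it does not induce any restriction. The same hypothesis is made in the two following models.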

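Model $M_3$: $\sigma_{kj} = \sigma_k$, $A_k = a_k I_d$ with $a_k > 0$ for $1 \leq k \leq K$, and $b_k = \beta_k e$ with $\beta_k \in \mathbb{R}$ (the transformation is only group dependent). The model is:

$$\alpha^*_{kj} = \Phi\left(\Phi^{-1}(\alpha_{kj}) + \lambda'_{kj} |\gamma_k|\right) \quad \text{with} \quad \lambda'_{kj} = \lambda_j \operatorname{sgn}(\gamma_k) \in \{-1, 1\} \ \text{and} \ |\gamma_k| \in \mathbb{R}^+.$$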

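Model $M_4$: $\sigma_{kj} = \sigma_j$, $A_k = A$ with $a_{kj} > 0$ for $1 \leq k \leq K$ and $1 \leq j \leq d$, and $b_k = \beta \in \mathbb{R}$ (the transformation is only dimension dependent). The model is:

$$\alpha^*_{kj} = \Phi\left(\Phi^{-1}(\alpha_{kj}) + \gamma'_j\right) \quad \text{with} \quad \gamma'_j = \lambda_j \gamma_j \in \mathbb{R}.$$

Note that in model $M_4$, as the parameter $\gamma'_j$ is free, it absorbs the parameter $\lambda_j$.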
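The four constrained transformations can be written as small functions acting on a K × d table of training parameters. This is a minimal sketch: the function names `m1` to `m4`, the helper `transform` and the list-of-lists layout are illustrative choices, not part of the models themselves.

```python
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution N(0, 1)

def transform(alpha, shift, delta=1):
    """Common form of all models: Phi(delta * Phi^{-1}(alpha) + shift)."""
    return PHI.cdf(delta * PHI.inv_cdf(alpha) + shift)

def m1(alpha, delta):
    """M1: no shift, one sign delta[k][j] in {-1, 1} per group and variable."""
    return [[transform(a, 0.0, s) for a, s in zip(row, srow)]
            for row, srow in zip(alpha, delta)]

def m2(alpha, lam_prime, abs_gamma):
    """M2: a single magnitude |gamma|, one sign lam_prime[j] per variable."""
    return [[transform(a, s * abs_gamma) for a, s in zip(row, lam_prime)]
            for row in alpha]

def m3(alpha, lam_prime, abs_gamma):
    """M3: one magnitude abs_gamma[k] per group, signs lam_prime[k][j]."""
    return [[transform(a, s * g) for a, s in zip(row, srow)]
            for row, srow, g in zip(alpha, lam_prime, abs_gamma)]

def m4(alpha, gamma_prime):
    """M4: one free real shift gamma_prime[j] per variable."""
    return [[transform(a, g) for a, g in zip(row, gamma_prime)] for row in alpha]

# Hypothetical training parameters: K = 2 groups, d = 2 variables.
alpha = [[0.2, 0.7], [0.5, 0.9]]
print(m1(alpha, [[-1, -1], [-1, -1]]))  # each alpha becomes 1 - alpha
print(m2(alpha, [1, -1], 0.3))
print(m4(alpha, [0.3, -0.3]))           # same values as the M2 call above
```

The last two calls give the same table, which illustrates on one example why $M_2$ is a special case of $M_4$.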

For these four models $M_i$ ($i = 1, \ldots, 4$), we consider an additional assumption on the group proportions: they are either conserved or not from $P$ to $P^*$. Let $M_i$ denote the model with unchanged proportions, and $pM_i$ the model with possibly changed proportions. Eight models are thus defined.

Note that model $M_2$ is always nested in $M_3$ and $M_4$, and that $M_1$ can sometimes be nested in the three other models.


Finally, to choose automatically among the eight generalized discriminant models, the BIC criterion (Bayesian Information Criterion, [Sch78]) can be employed. It is defined by:

$$\mathrm{BIC} = -2\, l(\hat{\theta}) + \nu \log(n),$$

where $\theta = \{p^*_k, \delta_{kj}, \lambda_j, \gamma_{kj}\}$ for $1 \leq k \leq K$ and $1 \leq j \leq d$, $l(\hat{\theta})$ is the maximum log-likelihood corresponding to the estimate $\hat{\theta}$ of $\theta$, and $\nu$ is the number of free continuous parameters associated with the given model. The model leading to the smallest BIC value is then retained. The parameter $\theta$ remains to be estimated, and the maximum likelihood method is used for this purpose.
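The BIC formula is simple to evaluate once the maximum log-likelihood and the parameter count are known. In the sketch below, the parameter counts are an assumption read off the model definitions (no continuous link parameter for M1, one for M2, K for M3, d for M4, plus K − 1 when the proportions are free); they are not stated explicitly in the text. The log-likelihood value used in the example is hypothetical.

```python
from math import log

def n_free_continuous(model, K, d, free_proportions):
    """Assumed count of free continuous parameters for each model.

    This count is a reading of the model definitions, not a quoted result:
    M1 has only discrete signs, M2 one magnitude, M3 one per group,
    M4 one shift per variable. Free proportions add K - 1 parameters.
    """
    base = {"M1": 0, "M2": 1, "M3": K, "M4": d}[model]
    return base + (K - 1 if free_proportions else 0)

def bic(max_log_lik, nu, n):
    """BIC = -2 * l(theta_hat) + nu * log(n); smaller is better."""
    return -2.0 * max_log_lik + nu * log(n)

# Hypothetical example: model pM3 with K = 2 groups, d = 5 variables, n = 38.
nu = n_free_continuous("M3", K=2, d=5, free_proportions=True)
print(nu, bic(max_log_lik=-100.0, nu=nu, n=38))
```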

4 Parameter estimation

Generalized discriminant analysis requires three estimation steps. We present the situation where the proportions are unknown, the contrary case being immediate. The first step consists of estimating the parameters $p_k$ and $\alpha_{kj}$ ($1 \leq k \leq K$ and $1 \leq j \leq d$) of population $P$ from the training sample $S$. Since $S$ is a labeled sample, the maximum likelihood estimates are the usual ones [Eve84, Cel91].
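For a labeled sample, the usual maximum likelihood estimates are the group frequencies and, within each group, the proportion of ones for each variable. The following sketch assumes that labels are stored as integer group indices rather than as indicator vectors; the function name and the toy data are hypothetical.

```python
def fit_labeled(xs, labels, K):
    """Maximum likelihood estimates of p_k and alpha_kj from a labeled sample.

    xs     : list of n binary vectors of length d
    labels : list of n group indices in {0, ..., K-1}
    """
    n, d = len(xs), len(xs[0])
    counts = [0] * K
    ones = [[0] * d for _ in range(K)]
    for x, k in zip(xs, labels):
        counts[k] += 1
        for j, xj in enumerate(x):
            ones[k][j] += xj
    p_hat = [c / n for c in counts]
    # Proportion of ones per variable within each group.
    alpha_hat = [[s / counts[k] for s in ones[k]] for k in range(K)]
    return p_hat, alpha_hat

# Toy labeled sample (hypothetical): n = 4, d = 2, K = 2.
xs = [[1, 0], [1, 1], [0, 1], [0, 0]]
labels = [0, 0, 1, 1]
print(fit_labeled(xs, labels, K=2))
```

This sketch raises a division error if a group is empty, and it can return estimates equal to 0 or 1, which lie outside the open interval assumed in (1) and for which $\Phi^{-1}$ is not finite; a practical implementation would need to guard against both cases.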

The second step consists of estimating the parameters $p^*_k$ and $\alpha^*_{kj}$ ($1 \leq k \leq K$ and $1 \leq j \leq d$) of the Bernoulli mixture from both $\theta$ and $S^*$. To estimate $\alpha^*_{kj}$, estimates of the parameters of the link between $P$ and $P^*$ are needed: once the parameters $\delta_{kj}$, $\gamma_{kj}$ and $\lambda_j$ of this link are estimated, an estimate of $\alpha^*_{kj}$ is deduced from equation (6). This step is described below.

Finally, the third step consists of estimating the group membership of the individuals of the test sample $S^*$ by maximum a posteriori.

For the second step, maximum likelihood estimation can be efficiently based on the EM algorithm [Dem77]. The likelihood is given by:

$$L(\theta) = \prod_{i=1}^{n^*} \sum_{k=1}^{K} p^*_k \prod_{j=1}^{d} {\alpha^*_{kj}}^{x_i^{*j}} (1 - \alpha^*_{kj})^{1 - x_i^{*j}}.$$

The completed log-likelihood is given by:

$$l_c(\theta; z_1^*, \ldots, z_{n^*}^*) = \sum_{i=1}^{n^*} \sum_{k=1}^{K} z_i^{*k} \log\left( p^*_k \prod_{j=1}^{d} {\alpha^*_{kj}}^{x_i^{*j}} (1 - \alpha^*_{kj})^{1 - x_i^{*j}} \right).$$

The E step. Using a current value $\theta^{(q)}$ of the parameter $\theta$, the E step of the EM algorithm consists in computing the conditional expectation of the completed log-likelihood:

$$Q(\theta; \theta^{(q)}) = E_{\theta^{(q)}}\left[ l_c(\theta; Z_1^*, \ldots, Z_{n^*}^*) \mid x_1^*, \ldots, x_{n^*}^* \right] = \sum_{i=1}^{n^*} \sum_{k=1}^{K} t_{ik}^{(q)} \left\{ \log(p^*_k) + \log \prod_{j=1}^{d} {\alpha^*_{kj}}^{x_i^{*j}} (1 - \alpha^*_{kj})^{1 - x_i^{*j}} \right\} \tag{7}$$


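where

$$t_{ik}^{(q)} = p(Z_i^{*k} = 1 \mid x_1^*, \ldots, x_{n^*}^*; \theta^{(q)}) = \frac{ p_k^{*(q)} \prod_{j=1}^{d} \left(\alpha_{kj}^{*(q)}\right)^{x_i^{*j}} \left(1 - \alpha_{kj}^{*(q)}\right)^{1 - x_i^{*j}} }{ \sum_{\kappa=1}^{K} p_\kappa^{*(q)} \prod_{j=1}^{d} \left(\alpha_{\kappa j}^{*(q)}\right)^{x_i^{*j}} \left(1 - \alpha_{\kappa j}^{*(q)}\right)^{1 - x_i^{*j}} }$$

is the conditional probability that individual $i$ belongs to group $k$.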
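The conditional probabilities of the E step, and the maximum a posteriori assignment used in the third estimation step, can be sketched as follows. The function names and the parameter values are hypothetical; the current test-population parameters are passed in directly rather than derived from the link.

```python
from math import prod

def e_step(xs, p_star, alpha_star):
    """Conditional probabilities t[i][k] that individual i belongs to group k."""
    t = []
    for x in xs:
        # Numerator of t_ik for each group: p*_k times the Bernoulli product.
        joint = [p_k * prod(a if xj == 1 else 1.0 - a for xj, a in zip(x, alpha_k))
                 for p_k, alpha_k in zip(p_star, alpha_star)]
        total = sum(joint)  # denominator: sum over all groups
        t.append([w / total for w in joint])
    return t

def map_labels(t):
    """Maximum a posteriori group index for each individual (first index on ties)."""
    return [max(range(len(row)), key=row.__getitem__) for row in t]

# Hypothetical current parameters: K = 2 groups, d = 2 variables.
p_star = [0.5, 0.5]
alpha_star = [[0.9, 0.9], [0.1, 0.1]]
xs = [[1, 1], [0, 0], [1, 0]]

t = e_step(xs, p_star, alpha_star)
print(t)
print(map_labels(t))
```

For long binary vectors the products can underflow, so a practical implementation would typically work with sums of logarithms instead.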

The M step. The M step of the EM algorithm consists in choosing the value $\theta^{(q+1)}$ which maximizes the conditional expectation $Q$ computed at the E step:

$$\theta^{(q+1)} = \operatorname*{argmax}_{\theta \in \Theta} Q(\theta; \theta^{(q)}). \tag{8}$$

We describe this step for each component of $\theta = \{p^*_k, \delta_{kj}, \lambda_j, \gamma_{kj}\}$.

For the proportions, this maximization gives the estimate $p_k^{*(q+1)} = \sum_{i=1}^{n^*} t_{ik}^{(q)} / n^*$. For the continuous parameters $\gamma_{kj}$, it can be proved for each model that $Q$ is a strictly concave function of $\gamma_{kj}$ which tends to $-\infty$ when a norm of the parameter vector $(\gamma_{11}, \ldots, \gamma_{Kd})$ tends to $\infty$ (cf. [Jac05]). Hence, a Newton algorithm can be used to find the unique maximum of $Q(\theta; \theta^{(q)})$ with respect to these parameters.
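The two continuous updates of the M step can be sketched for model $M_2$ with the signs held fixed. The proportion update is the closed form given above. For the magnitude |γ|, this sketch swaps in a ternary search in place of the Newton algorithm mentioned in the text: it relies only on the strict concavity of Q and needs no derivatives, at the cost of more function evaluations. All names, the search interval [0, 5] and the toy data are assumptions made for illustration.

```python
from math import log
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution N(0, 1)

def update_proportions(t):
    """Closed-form M step for the proportions: p*_k = sum_i t_ik / n*."""
    n_star, K = len(t), len(t[0])
    return [sum(row[k] for row in t) / n_star for k in range(K)]

def q_alpha_part_m2(abs_gamma, xs, t, alpha, lam_prime):
    """Part of Q in (7) that depends on alpha*, under model M2."""
    total = 0.0
    for x, row in zip(xs, t):
        for t_ik, alpha_k in zip(row, alpha):
            for xj, a, s in zip(x, alpha_k, lam_prime):
                a_star = PHI.cdf(PHI.inv_cdf(a) + s * abs_gamma)
                total += t_ik * log(a_star if xj == 1 else 1.0 - a_star)
    return total

def argmax_concave(f, lo, hi, iters=60):
    """Ternary search for the maximizer of a strictly concave f on [lo, hi]."""
    for _ in range(iters):
        left = lo + (hi - lo) / 3.0
        right = hi - (hi - lo) / 3.0
        if f(left) < f(right):
            lo = left   # the maximizer cannot lie in [lo, left]
        else:
            hi = right  # the maximizer cannot lie in [right, hi]
    return 0.5 * (lo + hi)

# Toy data (hypothetical): K = 1, d = 1, alpha = 0.5 in P, 80% of ones in S*.
xs = [[1]] * 8 + [[0]] * 2
t = [[1.0]] * 10
alpha = [[0.5]]
gamma_hat = argmax_concave(
    lambda g: q_alpha_part_m2(g, xs, t, alpha, [1]), 0.0, 5.0)
print(gamma_hat, PHI.cdf(gamma_hat))  # alpha* should be close to 0.8
```

In this toy case the maximizer is known in closed form: with a training parameter of 0.5, the fitted test parameter is Φ(|γ|), and Q is maximal when it equals the observed frequency of ones.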

For the discrete parameters $\delta_{kj}$ and $\lambda_j$, if the dimension $d$ and the number of groups $K$ are relatively small (for example $K = 2$ and $d = 5$), the maximization is carried out by computing $Q(\theta; \theta^{(q)})$ for all possible values of these discrete parameters. When $K$ or $d$ is larger, the number of values of $\delta_{kj}$ is too large (for example $2^{20}$ for $K = 2$ and $d = 10$), so that it is impossible to enumerate all possible values of $\delta_{kj}$ in a reasonable time. In this case, we use a relaxation method, which consists in assuming that $\delta_{kj}$ (respectively $\lambda_j$) is not a binary parameter in $\{-1, 1\}$ but a continuous one in $[-1, 1]$, denoted $\tilde{\delta}_{kj}$ (see [Wol98] for example). The optimization is then carried out with respect to the continuous parameter (with a Newton algorithm as for $\gamma_{kj}$, because $Q$ is a strictly concave function of $\tilde{\delta}_{kj}$), and the solution $\tilde{\delta}_{kj}^{(q+1)}$ is discretized to obtain a binary solution $\delta_{kj}^{(q+1)}$ as follows: $\delta_{kj}^{(q+1)} = \operatorname{sgn}(\tilde{\delta}_{kj}^{(q+1)})$.
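The two strategies for the discrete signs can be illustrated as follows: exhaustive enumeration of all sign tables, whose number is 2 to the power Kd, and discretization of a relaxed solution by taking signs. The function names are hypothetical, and mapping a relaxed value of exactly 0 to +1 is an arbitrary convention that the text does not specify.

```python
from itertools import product

def enumerate_deltas(K, d):
    """Yield every K x d table of signs in {-1, 1}; there are 2**(K*d) of them."""
    for flat in product((-1, 1), repeat=K * d):
        yield [list(flat[k * d:(k + 1) * d]) for k in range(K)]

def discretize_relaxed(delta_tilde):
    """Map relaxed values in [-1, 1] back to signs (0 is sent to +1 by convention)."""
    return [[1 if v >= 0 else -1 for v in row] for row in delta_tilde]

# Small case: enumeration is feasible.
print(sum(1 for _ in enumerate_deltas(2, 2)))  # 2**4 = 16 sign tables
# Larger case quoted in the text: K = 2, d = 10.
print(2 ** (2 * 10))                           # 1048576 sign tables

# Hypothetical relaxed solution for K = 2, d = 3.
print(discretize_relaxed([[0.8, -0.1, 0.3], [-0.9, 0.2, -0.4]]))
```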

5 Application on a real data set

Models and estimation methods have been validated by tests on simulated data, available in [Jac05]. We present here an application on a real data set.

Generalized discriminant analysis was first developed for biological applications [Bie02, Van93], in which the aim was to predict the sex of birds from biometrical variables. Very good results have been obtained under multi-normal assumptions.

The species of bird considered in this application is Cory’s Shearwater Calonectris diomedea [Thi97]. This species can be divided into three subspecies, among which borealis, which lives on the Atlantic islands (the Azores, Canaries, etc.), and diomedea, which lives on the Mediterranean islands (Balearics, Corsica, etc.). The birds of the borealis subspecies are generally bigger than those of the diomedea subspecies.


Thus, standard discriminant analysis is not suited to predicting the sex of diomedea birds with a learning sample drawn from the borealis population.

A sample of borealis (n = 206, 45% females) was measured using skins in several National Museums. Five morphological variables were measured: culmen (bill

length), tarsus, wing and tail lengths, and culmen depth. Similarly, a sample of

diomedea (n = 38, 58% females) was measured using the same set of variables. In

this example, two groups are present, males and females, and all the birds are of

known sex (from dissection).

To provide an application of the present work, we discretize continuous biometrical variables into binary data (small or big wings, ...).

We select the subspecies borealis as the training population and the subspecies diomedea as the test population. We apply to these data the eight generalized discriminant analysis models, standard discriminant analysis (DA) and clustering. The misclassification rates and the values of the BIC criterion are given in Table 1.

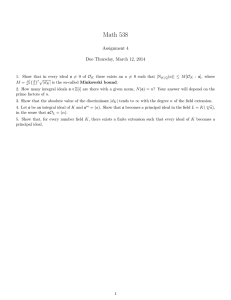

Table 1. Rate (in %) of bad classifications and value of the BIC criterion for test population diomedea with training population borealis.

| | pM1 | pM2 | pM3 | pM4 | M1 | M2 | M3 | M4 | DA | Clustering |
|---|---|---|---|---|---|---|---|---|---|---|
| Rate | 57.9 | 26.3 | 23.7 | 21.0 | 57.9 | 23.7 | 15.8 | 18.4 | 42.1 | 23.7 |
| BIC | 269.7 | 222.5 | 220.5 | 237.0 | 267.3 | 221.6 | 221.5 | 233.6 | 648.4 | - |

If the results are compared according to the error rate, generalized discriminant analysis with the model $M_3$ is the best method, with an error rate of 15.8%. This error is lower than that obtained by standard discriminant analysis (42.1%) and by clustering (23.7%). If the BIC criterion is used instead to choose a model, three generalized discriminant analysis models stand out ($pM_3$, $M_2$ and $M_3$), including the model with the lowest error rate, $M_3$.
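The ranking of the eight generalized discriminant models can be reproduced directly from the values of Table 1. The short sketch below only sorts the reported numbers; the dictionary names are illustrative.

```python
# BIC values and error rates (in %) copied from Table 1.
bic = {"pM1": 269.7, "pM2": 222.5, "pM3": 220.5, "pM4": 237.0,
       "M1": 267.3, "M2": 221.6, "M3": 221.5, "M4": 233.6}
rate = {"pM1": 57.9, "pM2": 26.3, "pM3": 23.7, "pM4": 21.0,
        "M1": 57.9, "M2": 23.7, "M3": 15.8, "M4": 18.4}

by_bic = sorted(bic, key=bic.get)   # smallest BIC first
best_rate = min(rate, key=rate.get)

print(by_bic[:3])   # ['pM3', 'M3', 'M2']
print(best_rate)    # M3
```

The three smallest BIC values (220.5, 221.5 and 221.6) are within about one unit of each other, while the fourth (222.5 for pM2) is close behind, so the BIC separates these models only weakly.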

This application illustrates the benefit of generalized discriminant analysis compared with standard discriminant analysis or clustering. Indeed, by adapting the classification rule learned on the training population to the test population, the best generalized discriminant analysis models give lower classification error rates than either applying the training rule directly (standard discriminant analysis) or ignoring the training population and clustering the test population directly.

We can remark that the assumption that the binary data arise from the discretization of Gaussian variables (see data in [Bie02]) is relatively realistic in this application. Nevertheless, there is a strong correlation between the five biometrical variables, which violates the assumption of conditional independence.

6 Conclusion

Generalized discriminant analysis extends standard discriminant analysis by allowing training and test samples to arise from slightly different populations. Our contribution consists in adapting earlier work, carried out in a multi-normal context, to the case of binary data. An application in a biological context illustrates this work. By using the generalized discriminant analysis models defined in this paper, we obtain a classification of birds according to their sex which is better than that given by standard discriminant analysis or clustering. Applying the method to data from the insurance context is our next challenge.

Methodological perspectives for this work are also numerous. Firstly, we have defined the link between the two populations using the Gaussian cumulative distribution function. Although finding this link initially seemed difficult, the link obtained is simple and easy to interpret once stated. It would be interesting to try other types of cumulative distribution functions (theoretical justifications will have to be developed and practical tests carried out). Secondly, with this contribution, generalized discriminant analysis is now developed for continuous data and for binary data. To cover a large number of practical cases, it is important to study the case of categorical variables (i.e. more than two modalities), and thereafter the case of mixed variables (binary, categorical and continuous). Finally, it would also be interesting to extend other classical discriminant methods, such as non-parametric discrimination.

References

[Bie02] Biernacki, C., Beninel, F. and Bretagnolle, V.: A generalized discriminant rule when training population and test population differ on their descriptive parameters. Biometrics, 58(2), 387-397 (2002)

[Cel91] Celeux, G. and Govaert, G.: Clustering criteria for discrete data and latent class models. Journal of Classification, 8, 157-176 (1991)

[Dem77] Dempster, A.P., Laird, N.M. and Rubin, D.B.: Maximum likelihood from incomplete data (with discussion). Journal of the Royal Statistical Society, Series B, 39, 1-38 (1977)

[Eve87] Everitt, B.S.: A finite mixture model for the clustering of mixed-mode data. Statistics and Probability Letters, 6, 305-309 (1987)

[Fis36] Fisher, R.A.: The use of multiple measurements in taxonomic problems. Annals of Eugenics, 7, 179-188, Pt. II (1936)

[Jac05] Jacques, J.: Contributions à l’analyse de sensibilité et à l’analyse discriminante généralisée. PhD thesis of University Joseph Fourier (2005)

[McL92] McLachlan, G.J.: Discriminant Analysis and Statistical Pattern Recognition. Wiley, New York (1992)

[Sch78] Schwarz, G.: Estimating the dimension of a model. Annals of Statistics, 6, 461-464 (1978)

[Thi97] Thibault, J.-C., Bretagnolle, V. and Rabouam, C.: Cory’s shearwater Calonectris diomedea. Birds of Western Palearctic Update, 1, 75-98 (1997)

[Thu27] Thurstone, L.L.: A law of comparative judgement. Amer. J. Psychol., 38, 368-389 (1927)

[Van93] Van Franeker, J.A. and Ter Braak, C.J.F.: A generalized discriminant for sexing fulmarine petrels from external measurements. The Auk, 110(3), 492-502 (1993)

[Wol98] Wolsey, L.A.: Integer Programming. Wiley (1998)