Survey and Taxonomy of IP Address Lookup Algorithms

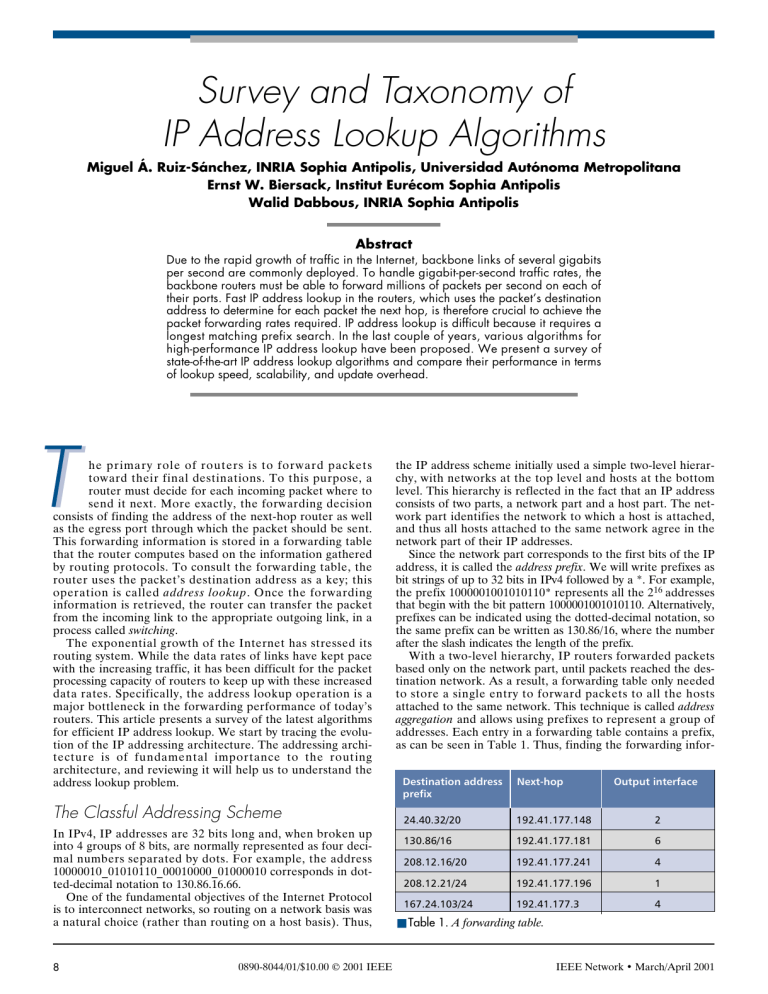
