CIFE Seed Proposal Summary Page 2013-14 Projects


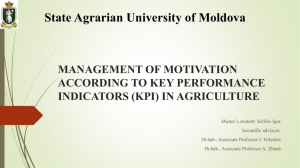

Center for Integrated Facility Engineering (CIFE)

Proposal Title: Statistical Analysis of KPIs: the Missing Links in the VDC Decision-making Process
Principal Investigator(s): Calvin Kam (Civil and Environmental Engineering), Sadri Khalessi (Statistics), Martin Fischer (Civil and Environmental Engineering)
Research Staff: Devini Senaratna (Statistics)
Proposal Number (Assigned by CIFE): 2013-03
Total Funds Requested: $72,867
First Submission? Yes
If extension, project URL: NA

Abstract: Key Performance Indicators (KPIs) help Architecture, Engineering and Construction (AEC) project teams make informed decisions. The Virtual Design and Construction (VDC) Scorecard research shows that only 40% of AEC firms use KPIs to evaluate VDC, compared to over 80% in other industries such as textile. Other industries use KPIs efficiently by analyzing correlations and providing insights for decision making. This aspect is a 'missing link' in the AEC industry. We will integrate statistical methods with KPIs to help AEC professionals in VDC decision-making. Building upon 108 cases from the VDC Scorecard results, we will conduct web-surveys to obtain VDC performance statistics to develop and enhance the KPIs. We will use statistical methods, including Structural Equation Modeling and Clustering, to identify relationships between KPIs. As the final product of this SEED proposal, we will present to CIFE members 3 statistical models for benchmarking, decision-prioritization, and prediction, to enhance VDC decision-making.

Statistical Analysis of KPIs: the Missing Links in the VDC Decision Making Process

1. Motivation

Tracking KPIs and making timely decisions based on them have helped Architecture, Engineering and Construction (AEC) firms better utilize Virtual Design and Construction (VDC).
Efficient utilization of VDC results in improved management of time, cost, and resources. Figure 1 shows the relationship between the number of KPIs tracked and monitored by AEC firms and their performance with respect to efficient VDC utilization (measured by the VDC Scorecard Score). The VDC Score increases as the number of KPIs tracked and measured increases. Tools such as the Characterization Framework, the National Building Information Model (BIM) Standard, the Pennsylvania State University BIM Planning Guides, and CIFE's VDC Scorecard have been formulated in response to the need for an evaluation framework using key performance indicators (Kam, McKinney, Xiao & Senaratna, 2013).

Figure 1: Average VDC Scorecard Score versus number of VDC KPIs tracked. In line with the increasing trend described above, the average scores are 37% (None), 46% (1 - 3 Metrics), 52% (4 - 7 Metrics), and 64% (8 or More Metrics).

1.1. Problem 1 - Limited usage of quantifiable KPIs/metrics by AEC firms

The first problem is that only 40% of the 108 projects scored using the VDC Scorecard tracked and monitored quantifiable KPIs (Kam, Xiao, McKinney & Senaratna, 2013), despite the clear benefits of tracking and monitoring KPIs (Figure 1).

Kam, Fischer, Khalessi, Senaratna

Table 1: Usage of KPIs/metrics in AEC for VDC

| Description | VDC in AEC | Other Industries |
| --- | --- | --- |
| Measuring Performance | 40% (Kam, McKinney, Xiao & Senaratna, 2013) | 80% in textile (Esin et al., 2009) |
| KPI Analysis Software | None | In manufacturing, KPI analysis software greatly improved enterprise performance (Zevenbergen, 2006) |

Other industries, such as manufacturing and information technology, use metrics or KPIs more systematically and successfully. Esin et al.'s (2009) study on the usage of KPIs in the apparel industry found that 80% of organisations measured some form of quantifiable KPI/metric, as opposed to just 40% for VDC.
It was inferred that, although scorecards are available to track and measure BIM/VDC usage and performance, a methodology to analyze correlations and give effective feedback and recommendations is yet to be incorporated into the VDC Scorecard methodology. In other industries, methodologies that instantly analyze a project's status and provide feedback are both available and effective. For example, in the manufacturing industry, Zevenbergen (2006) explains how KPI analysis software greatly improved enterprise performance.

1.2. Problem 2: Missing links (or correlations) between KPIs

The second problem is that the present VDC Scorecard insights and recommendations (see Figure 2 for an example) are based on independent analysis of the VDC Scorecard KPIs. For example, if a KPI scored poorly with regard to VDC, feedback is given without considering the impact of that KPI on other KPIs.

Figure 2: Spider diagram showing weaknesses and strengths of a VDC Scorecard project (the red highlighted division is a drawback relative to the other divisions)

Initial statistical analyses show complex correlations between KPIs. These can be used to provide useful feedback, such as forecasting performance and benchmarking projects. This is another motivation for this study. The statistical tests used to test correlations are the parametric t-test, the chi-squared test, the Mann-Whitney test, and Spearman's rank correlation test.

Examples (Figure 3 and Table 2)

Stakeholder Correlations: Statistical analysis demonstrates that one of the most significant associations with project performance is the involvement of stakeholders in VDC. It was found that 95% of the top-scoring projects use BIM models among multiple stakeholders to resolve engineering challenges.
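As an illustration of one of the tests named above, the following is a minimal sketch of Spearman's rank correlation applied to stakeholder involvement and performance. The data values, variable names, and helper functions are hypothetical and are not taken from the VDC Scorecard dataset; the sketch only shows the mechanics (rank both variables, averaging ranks for ties, then compute the Pearson correlation of the ranks).

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical projects: number of stakeholders involved and performance score (%).
stakeholders = [1, 2, 3, 3, 5, 6, 7, 8]
performance = [30, 41, 44, 47, 52, 50, 61, 66]

rho = spearman_rho(stakeholders, performance)
print(f"Spearman's rho = {rho:.3f}")
```

A value of rho close to +1 would indicate that performance tends to rise monotonically with stakeholder involvement. The sketch assumes neither variable is constant (otherwise the denominator is zero) and does not compute a p-value.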
Funding: The magnitude of budget allocations on VDC did not have a significant association with adoption of VDC, the efficient use of VDC technology, or even project performance using VDC.

Table 2: Highly significant KPI correlations with Performance

| Rank | KPIs (Metrics) | Statistical Significance (p-value)* |
| --- | --- | --- |
| 1. | Having VDC Management Objectives | 0.0000000000924 |
| 2. | Stakeholders involved in VDC-based decision-making process | 0.00000001469 |
| 3. | Efficiency of VDC/BIM integrated Project-Wide Meeting | 0.000000236 |

*Significance at 10^-8

Figure 3: Average Performance Score versus the number of Stakeholders that Benefit (note the increasing trend). Values shown: 1 stakeholder involved, 35%; 2 to 3 stakeholders involved, 46%; 4 to 5 stakeholders involved, 47%; 6 to 7 stakeholders involved, 51%; all stakeholders involved, 65%.

1.3. Solution and Contribution to CIFE

Our solution to problems 1 and 2 is to develop 3 types of statistical models that use the relationships between KPIs. These models will motivate firms to monitor KPIs and make better decisions based on the insights the models provide. The three models are:

1. Statistical Model-based prediction: Models that can predict/forecast Performance, Adoption and Technology measures, based on lagging KPIs (see methodology and Figure 4 for KPI-web model)
2. Prioritizing Models: A dashboard that prioritizes badly performing KPIs on an easy-to-read scale (Red: immediate attention required; Orange: improvements can be made; Green: things are under control)
3. Benchmarking and Ranking Models: Ranking and grouping projects based on their features relative to industry norms. This can be used as a scoring method for selecting Award Winning Projects.

Figure 4: A KPI web designed for a selected group of VDC Scorecard KPIs

Note: See Section 5 for proposed methods for dissemination of findings (workshops, publications, other contributions)

2. Theoretical and Practical Point of Departure

2.1. The VDC Scorecard

The VDC Scorecard was designed as a holistic, quantifiable, practical, and adaptable tool to track, assess, and score projects and to provide recommendations regarding the effective use of BIM/VDC (Kam, McKinney, Xiao, Senaratna, 2013). At present, VDC Scorecard researchers have collected and analyzed 108 unique case studies using this evaluation framework and have obtained insightful information regarding the use of BIM/VDC in the AEC industry. This diverse data set of 108 projects covers 13 countries, 11 facility types, and all 7 stages of the construction process.

The VDC Scorecard is holistic in nature: it assesses how much the project's performance is improved by the use of extreme social collaboration, instead of focusing only on the performance of creating and implementing the product model (technology) of a project (Kam, McKinney, Xiao, Senaratna, 2013). The performance of project objectives is quantifiably measured based on communication, cost performance, schedule performance, facility performance, safety, project quality, and other objectives established based on project needs (see Figure 5 for the VDC Scorecard structure). The VDC Scorecard is designed to adapt to changing VDC industry practices.

Figure 5: VDC Scorecard structure with average Area and Division scores resulting from the 108 cases in 13 countries.

The VDC Scorecard is also practical in that the express version can be completed within half an hour and can provide quick quantitative feedback (Kam, McKinney, Xiao, Senaratna, 2013). Further, it is practical and realistic because it includes a concept called a "Confidence Level," which quantifies the accuracy of the VDC Scorecard based on the quality of the responses obtained.
The most significant drawback of the VDC Scorecard at present is the lack of a methodology that provides insightful and powerful information related to the project's performance. Such information includes the benchmarking of projects and the identification of interdependencies between KPIs, which can be used to provide detailed feedback for better project management and decision making. The best data-driven methodologies with verifiable validation methods for such analysis are in the domain of statistics. Statistical learning would potentially lead to continual evaluation and enhanced application of KPIs in VDC. Current statistical research on sample data gives insightful information on how projects with similar features group together, the relative position of a project with respect to other projects, and new KPIs that can help define better, more innovative criteria for measuring a project's success.

Figure 6: Sample Data Analysis: Grouping of projects (numbered as 1, 2, etc.) into 4 clusters based on the K-means clustering method. X axis: Feature Combination Score 1; Y axis: Feature Combination Score 2. The feature combinations are numerical statistical measures built using a collection of KPIs. Projects with similar features group together (for example, projects 1, 37, 56, 60, etc.).

2.2. Dynamic Performance Monitoring and Management (DPMM): A Metric-based Framework to Better Predict Project Success - Li, et al (2012)

The DPMM is a previously funded SEED project that developed a framework which measures, explains and predicts management performance in a dynamic manner. Prior to the development of the DPMM methodology, performance monitoring in general was precedence-based rather than client-based, intuitively driven rather than tactically driven, ad hoc rather than systematic, and assessed statically rather than dynamically.
The DPMM methodology used 20 metrics on quality, cost, schedule, sustainability, and organization as performance metrics, and defined 7 client satisfaction metrics to integrate the relationships between performance and client satisfaction. The VDC Scorecard gives detail on other leading and lagging metrics, such as model usage and maturity, information exchange between models, and specific stakeholder-related information such as attitudes on BIM/VDC usage. The VDC Scorecard has over 50 measurements, summarized into 10 Divisions, 4 Areas, and a final score (see Figure 5). Similar to the VDC Scorecard survey methodology, and slightly in contrast to Bloom and Van Reenen's (2006) research design for surveying managerial practices, this study included all of the members of the design, construction, and owner team that affect the outcome of project execution. The DPMM methodology used self-reported data that were subjective in nature, whereas the VDC Scorecard has an independent scale and objective metrics. This study will include carefully selected client satisfaction metrics, which are the focus of the DPMM methodology, to obtain additional information. Building upon the DPMM methodology, this study will go further and explore the relationships within a web of metrics, instead of only between performance and satisfaction metrics.

Figure 7: Performance metrics dashboard and client satisfaction metrics (Li, et al, 2012)

2.3. Statistical Methodology: Designing and benchmarking a platform to select VDC/BIM implementation strategies - Alarcón, et al (2010)

The proposed statistical research to be carried out using the VDC Scorecard data is similar to the first phase of the study by Alarcón, et al (2010), which formulates a benchmarking methodology to better facilitate VDC/BIM implementation strategies in the AEC industry.
Alarcón, et al (2010) used a combination of three statistical methods: Data Envelopment Analysis, Factor Analysis, and Structural Equation Modeling. Factor Analysis is commonly used to reduce the data into unobservable variables called factors (see Section 3). These factors can be used as the inputs for other analyses such as structural equation modeling or even multivariate regression. Structural equation modeling was used by Alarcón, et al (2010) to describe and quantify the impacts of these (unobservable) implementation factors on the company's and project's outcomes. A similar methodology will be used to identify the relationships between the hidden BIM/VDC factors and performance outcomes, as well as to identify relationships between leading and lagging metrics, in order to provide insightful feedback.

2.4. Leading and Lagging Metrics (or KPIs)

The DPMM methodology developed its framework by studying the relationships between customer satisfaction metrics and performance metrics. Another way of viewing the relationships between KPIs is to consider leading and lagging metrics. Leading indicators are actionable: they can still be corrected. Lagging indicators measure a situation where it is too late to carry out any actions. Metrics such as revenues and number of accidents are lagging indicators, while metrics such as customer satisfaction, number of BIM/VDC meetings, and safety measures are leading indicators. A scorecard should contain both the leading indicators that will have an impact on the lagging indicators and the lagging indicators themselves, which point to improvements for future endeavors. It is, hence, important to create a holistic dashboard of leading and lagging metrics: the VDC Scorecard provides just that.

3. Methodology

The final statistical products will be the following 3 types of statistical models:

1. Statistical Model-based prediction
2. Prioritizing
3. Benchmarking and ranking

The methodology for building these models and identifying correlations is as follows (Figure 8):

Figure 8: Methodology and detailed tasks

3.1. Preliminary Work

Using the DPMM methodology and Version 9 of the VDC Scorecard, we will collect additional KPIs to better understand the metric (or KPI) web and the correlations between KPIs. These include client satisfaction metrics used in the DPMM methodology, such as quality of management, quality of work, and mutual trust and confidence, as well as new KPIs for Version 9 of the VDC Scorecard, such as percentage of targets reached under planning and percentage of RFIs on time (see Figure 9 for the full list).

Figure 9: List of additional KPIs/metrics

Next, some of the statistical methods were tested on a sample dataset to check the feasibility of the study. The results were encouraging for the design of the metric web.

Testing method: Cluster Analysis: (See Figure 6)

Testing method: Factor Analysis: Using a sample from the VDC Scorecard data, a Factor Analysis was carried out on the ten divisions to identify the underlying or hidden factors that may contribute to refining the VDC Scorecard and its structure. The identified "hidden" factors gave very insightful results. Some of these factors are:

Factor 1: Highly loaded with Preparation (Correlation: 0.830), Process (Correlation: 0.742), Organization (Correlation: 0.822), Integration (Correlation: 0.765) and Qualitative (Correlation: 0.617) Scores. This factor could represent the hidden features that contribute to "Qualitative and Social" aspects of the VDC/BIM process.
Factor 2: Highly loaded with Objective (Correlation: 0.798) and Quantitative Scores (Correlation: 0.660). This factor could represent the prior input needed to track quantitative metrics (being Objective) and its outcome (Quantitative Performance).

3.2. Survey Design

To obtain information on the additional KPIs/metrics and the correlations related to them, we will carry out web-surveys using the online survey tool SurveyMonkey. The survey will involve responding to 14 questions, which will take approximately 15 minutes. The web-survey will first be tested on 5 projects to verify the technical soundness and coherence of the questions. The survey is to be carried out in two phases to obtain information from all stakeholders, in order to have an independent final score. Figure 10 illustrates the plan to collect data:

Figure 10: Web-survey methodology

3.3. Model Building and Validation (to study correlation patterns)

The model building will be based on the following 4 statistical methods, supplemented by others:

1. Factor Analysis (Explained in Section 2)
2. Structural Equation Modeling (Explained in Section 2)
3. Cluster Analysis (Explained in Section 2)
4. Classification Trees
5. Other:
   a. Principal Components Analysis
   b. Canonical Correlations
   c. Correspondence Analysis

Classification Trees: This method can be used to select the project types that associate most strongly with a particular feature. For example, it can identify the characteristics of projects that did not meet their schedule-based objectives. It is commonly used in Market Analysis to find the best group of customers to target. The type of classification tree method we will use is a CHAID tree (Chi-squared Automatic Interaction Detection).
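To make the idea behind a CHAID tree more concrete, here is a minimal sketch of only its first step: choosing which predictor to split on by running a chi-squared test of each predictor against the outcome and picking the smallest p-value. It is a simplification under stated assumptions, not the full CHAID algorithm: it handles only binary (0/1) predictors and a binary outcome, and it omits category merging and the Bonferroni adjustment. The field names (`has_vdc_objectives`, `large_budget`, `met_schedule`) and the records are hypothetical.

```python
from math import erfc, sqrt


def chi2_2x2(table):
    """Pearson chi-squared statistic and p-value (1 degree of freedom) for a 2x2 table.

    Assumes every row and column total is non-zero.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    stat = 0.0
    for i, observed_row in enumerate(table):
        for j, observed in enumerate(observed_row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    # Survival function of the chi-squared distribution with 1 degree of freedom.
    p_value = erfc(sqrt(stat / 2))
    return stat, p_value


def best_split(records, predictors, target):
    """Return (predictor, p_value) for the binary predictor most associated with target."""
    p_values = {}
    for name in predictors:
        table = [[0, 0], [0, 0]]  # rows: predictor 0/1, columns: target 0/1
        for record in records:
            table[int(record[name])][int(record[target])] += 1
        p_values[name] = chi2_2x2(table)[1]
    best = min(p_values, key=p_values.get)
    return best, p_values[best]


# Hypothetical project records: (has_vdc_objectives, large_budget, met_schedule, count).
counts = [
    (1, 1, 1, 4), (1, 0, 1, 4), (1, 1, 0, 1), (1, 0, 0, 1),
    (0, 1, 1, 1), (0, 0, 1, 2), (0, 1, 0, 4), (0, 0, 0, 3),
]
records = [
    {"has_vdc_objectives": o, "large_budget": b, "met_schedule": m}
    for o, b, m, count in counts
    for _ in range(count)
]

best_name, best_p = best_split(
    records, ["has_vdc_objectives", "large_budget"], "met_schedule"
)
print(best_name, round(best_p, 4))
```

In a full tree, the same selection would be repeated within each resulting subgroup until no predictor is significant. Restricting the sketch to 2x2 tables keeps every test at one degree of freedom, so the p-value has a closed form using only the standard library.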
Others: Principal Component Analysis can be used to rank projects. Canonical Correlation Analysis is used to identify links between groups of project features, which are in turn used to forecast project performance. Finally, Correspondence Analysis is used to identify relationships between categorical features.

3.4. Validation

Validating statistical models is essential to ensure good decision making using KPI correlations. Validation will be carried out using two methods:

1. The data-driven validation of the results will use selected test data. The test data are to be collected by the CEE 212A class as their class project for the course.
2. Validation will also be carried out using the expert opinion of CIFE members and industry personnel.

The models will be refined until there is agreement between the models, the test data and expert opinion.

3.5. Dissemination of Results

To disseminate results, we will carry out two workshops to educate AEC professionals on the value of the findings and how to use the insights in decision making (see Section 5). The first workshop will demonstrate initial findings on correlation patterns. The second will cover using the insights for decision making. We will complete one journal publication, and contribute towards McGraw and Hill's Smart Market Report and the National BIM Standards.

4. Relationship to CIFE Goals

This study will encourage the efficient use of KPIs in the AEC industry and improve the benefits of BIM/VDC, so as to facilitate its usage in the AEC industry. The final product will include project-specific KPIs/metrics or benchmarks that identify and quantify both lagging and leading KPIs/metrics. In addition, the study involves innovative statistical modeling methods, such as Factor Analysis and Structural Equation Modeling, to forecast and monitor BIM/VDC performance.
4.1. Intellectual Merit, Relevance and Value

This study involves complex multivariate statistical methodology and a multidisciplinary team. The statistical methodology will add a novel perspective to the design, analysis and interpretation of KPIs, and to the understanding of the relationships between them. Further, the team comprises individuals with expertise in statistics and VDC, as well as in non-AEC industries such as manufacturing and production management. This will further add to the innovativeness and intellectual merit of the study.

This study is directly related to ongoing CIFE research on the VDC Scorecard and will integrate the ideas and outcomes of the previous SEED project on the DPMM methodology. Finally, current data analysis carried out on a sample of the VDC data, especially the clustering of the cases, indicates that in-depth analysis using the proposed additional survey data is feasible. Also see Section 5 on "Dissemination of Findings".

5. Industry Involvement

5.1. Dissemination of Findings

We will disseminate the findings of this study by:

1. Publications: Results will be publicly available on the VDC Scorecard website (vdcscorecard.stanford.edu/) and we will write one journal publication.
2. Workshops: We will offer two workshops (November '13, February '14). They will be conducted by Kam, Fischer and Khalessi, and remote participation will be possible via "gotowebinar".
3. Contributions: The findings of this research will be submitted to the National BIM Standards, as well as to the McGraw Hill Construction Market Report.

5.2. Data Collection, Validation and Missing Value Imputation

The additional data will be collected from the existing VDC Scorecard cases. This includes both CIFE member organizations and other industry contacts.
Results validation will be carried out using two methods: a statistical data-based validation and an expert-opinion-based validation. To validate the recommendations, CIFE members, stakeholders of the projects scored using the VDC Scorecard, students from CEE 112/212, and AEC experts will be asked to express their opinions on the feasibility of the results, based on the characteristics of a few test cases. If missing values are observed, expert opinion may be required, along with statistical data imputation methods, to fill in the data gaps.

6. Research Plan, and Schedule

6.1. Milestones

| Task Number | Task(s) | Tentative Deadline |
| --- | --- | --- |
| 1. | Initial Preparation: Pilot Literature Review, Statistical Analysis, Initial Survey Forms | August 1st 2013 |
| 2. | Survey Implementation | October 30th 2013 |
| 3. | Data cleaning and checking for model assumptions | November 15th 2013 |
| 4. | Workshop I | November 2013 |
| 5. | Workshop II | February 2014 |
| 6. | Final Analysis: Factor Analysis, Structural Equation Modeling, Clustering, and related results validation | February 7th 2014 |
| 7. | Final documentation of publication material, performance feedback methodology | February 15th 2014 |

6.2. Project Plan

[Gantt chart: the tasks Preliminary Work, Survey Design, Survey Implementation, Data Cleaning, Statistical Analysis, Results Validation, and Documentation plotted against the months July through February.]

6.3. Risks and contingencies

1. Response rate: Due to time issues, the response rate could be as low as 30%. Data imputation or bootstrapping will be used to mitigate this risk.
2. Validating results for projects with rare and unique characteristics will be difficult, and carrying out validation using "matching" will not be logical for all cases. It is possible to archive these cases and test them at the follow-up stage of this project.

7. Next Steps

As a next step, the process of developing the 3 statistical models proposed in this SEED proposal will be automated. Although this SEED proposal will explore the relationships between the existing KPIs, VDC is a rapidly developing field, so these relationships will need to be re-analyzed and refined. The project team will continue to offer this research to CIFE members and, additionally, seek external funding grants and joint collaborative research with other universities. The findings of this research will be submitted to the National BIM Standards, as well as to the McGraw Hill Smart Market Report (see Sections 3 and 5).

References:

1. Abeysekera, S. (2006) Multivariate methods for index construction, UNSTATS, United Nations Statistics Division.
2. Alarcón, L., Mourgues, C., O'Ryan, C., and Fischer, M. (2010) Designing a Benchmarking Platform to select VDC/BIM Implementation Strategies. Proceedings of the CIB W78 2010: 27th International Conference, Cairo, Egypt, 16-18 November.
3. Bloom, N. and Van Reenen, J. (2007) "Why do management practices differ across firms and countries?", Journal of Economic Perspectives, Vol 24, No 1, Winter 2010, 203-224.
4. Bloom, N. and Van Reenen, J. (2006) "Measuring and explaining management practices across firms and countries", NBER Working Paper No. 12216, May, 2006.
5. Esin, C., Von Bergen, M., and Wüthrich, R. (2009) Are KPIs and Benchmarking actively used among organisations of the Swiss apparel industry to assure revenue? PricewaterhouseCoopers AG, Publication.
6. Gao, J. (2011) A Characterization Framework to Document and Compare BIM Implementations on Projects, PhD thesis. Stanford, CA: Center for Integrated Facility Engineering (CIFE), Dept. of Civil and Environmental Engineering, Stanford University.
7. Kam, C., McKinney, B., Xiao, Y., Senaratna, D. (2013) The Formulation and Validation of the VDC Scorecard, CIFE Publications. Stanford, CA: Center for Integrated Facility Engineering (CIFE), Dept. of Civil and Environmental Engineering, Stanford University.
8. Kam, C., Xiao, Y., McKinney, B., Senaratna, D. (2013) The VDC Scorecard Evaluation of AEC Projects and Critical Findings, CIFE Publications. Stanford, CA: Center for Integrated Facility Engineering (CIFE), Dept. of Civil and Environmental Engineering, Stanford University.
9. McGraw and Hill (2012) The Business Value of BIM. McGraw and Hill, Smart Market Report.
10. Li, W., Fischer, M., Schwegler, B., Bloom, N., Van Reenen, J. (2012) Dynamic Performance Monitoring and Management: A Metric-Based Framework to Better Predict Project Success. CIFE SEED Proposal. Stanford, CA: Center for Integrated Facility Engineering (CIFE), Dept. of Civil and Environmental Engineering, Stanford University.
11. Zevenbergen, J., Gerry, J., and Buckbee, G. (2006) Automation KPIs Critical for Improvement of Enterprise KPIs. Journees Scientifiques et Techniques.