STOCHASTIC GRADIENT DESCENT AND THE RANDOMIZED KACZMARZ ALGORITHM

advertisement

STOCHASTIC GRADIENT DESCENT AND THE RANDOMIZED KACZMARZ ALGORITHM

arXiv:1310.5715v2 [math.NA] 15 Feb 2014

DEANNA NEEDELL, NATHAN SREBRO, AND RACHEL WARD

A BSTRACT. We show that the exponential convergence rate of stochastic gradient descent for smooth strongly

convex objectives can be markedly improved by perturbing the row selection rule in the direction of sampling estimates proportionally to the Lipschitz constants of their gradients. That is, we show that partially

biased sampling allows a convergence rate with linear dependence on the average condition number of

the system, compared to dependence on the average squared condition number for standard stochastic

gradient descent. We assume the regime where all stochastic estimates share an optimum and so such an

exponential rate is possible. We then recast the randomized Kaczmarz algorithm for solving overdetermined

linear systems as an instance of stochastic gradient descent, and apply our results to prove its exponential

convergence, but to the solution of a weighted least squares problem rather than the original least squares

problem. We present a modified Kaczmarz algorithm with partially biased sampling which does converge

to the original least squares solution with the same exponential convergence rate.

1. I NTRODUCTION

This paper connects two algorithms which until now have remained remarkably disjoint in the literature: the randomized Kaczmarz algorithm for solving linear systems and the stochastic gradient descent

(SGD) method for optimizing a convex objective using unbiased gradient estimates. The connection enables us to make contributions by borrowing from each body of literature to the other. We extend the

idea of importance sampling from the Kaczmarz literature, and introduce an family of SGD algorithms

with nonuniform row selection rule. We show that by sampling from a hybrid uniform / biased distribution over the estimates, the proposed family of algorithms enjoys the advantages of both importance

sampling (improved rate of convergence) and unbiased sampling (higher noise tolerance), and overall

compares favorably to recent bounds for SGD with uniform row selection in the case of sums of smooth

and strongly convex functions.

Recall that stochastic gradient descent is a method for minimizing a convex objective F (x) based on

access to unbiased stochastic gradient estimates, i.e. to an estimate g for the gradient at a given point

x, such that E[g ] = ∇F (x). Viewing F (x) as an expectation F (x) = Ei [ f i (x)], the unbiased gradient estimate can be obtained by drawing i and using its gradient: g = ∇ f i (x). SGD originated under the banner

of “Stochastic Approximation” in the pioneering work of Robbins and Monroe [30], and has recently received renewed attention for confronting very large scale problems, especially in the context of machine

learning [2, 31, 23, 2].

Classical analysis

p of SGD shows a polynomial rate on the suboptimality of the objective value, F (x k ) −

F (x ⋆ ), namely 1/ k for non-smooth objectives, and 1/k for smooth, or non-smooth but strongly convex

objectives [3]. Such convergence can be ensured even if the iterates x k do not necessarily converge to a

unique optimum x ⋆ , as might be the case if F (x) is not strongly convex. Here we consider the strongly

convex case, where the optimum is unique, and focus on convergence of the iterates x k to the optimum

x⋆.

Date: February 18, 2014.

DN: Department of Mathematical Sciences, Claremont McKenna College (dneedell@cmc.edu). NS: Toyota Technological

Institute at Chicago (nati@ttic.edu). RW: Department of Mathematics, University of Texas at Austin (rward@math.utexas.edu).

1

2

TT

Bach and Moulines [1] recently provided a non-asymptotic bound on the convergence of the iterates

in strongly convex SGD, improving on previous results of this kind [18, Section 2.2], [3, Section 3.2], [32].

In particular, Bach and Moulines showed that if each f i (x) is smooth and if x ⋆ is a minimizer of (almost)

all f i (x), i.e. Pi (∇ f i (x ⋆ ) = 0) = 1, then Ekx k − x ⋆ k goes to zero exponentially, rather then polynomialy,

in k. I.e. reaching a desired accuracy of Ekx k − x ⋆ k2 ≤ ε requires a number of steps that scales only

logarithmically in 1/ε. Bach and Moulines’s bound on the required number of iterations further depends

on the average squared conditioning number κ2i = (L i /µ)2 , where L i is the Lipschitz constant of ∇ f i (x)

(i.e. f i (x) are “L-smooth”), and F (x) is µ-strongly convex. If x ⋆ is not an exact minimizer of each f i (x),

the bound degrades gracefully as a function of σ2 = Ek∇ f i (x)k2 , and includes an unavoidable term that

behaves as σ2 /k.

In a seemingly independent line of research, the Kaczmarz method was proposed as an iterative method

for solving (usually overdetermined) systems of linear equations [14]. The simplicity of the method

makes it useful in a wide array of applications ranging from computer tomography to digital signal processing [11, 19, 13]. Recently, Strohmer and Vershynin proposed a variant of the Kaczmarz method using

a random selection method which select rows with probability proportional to their squared norm [33],

and showed that using this selection strategy, a desired accuracy of ε can be reached in the noiseless

setting in a number of steps that scales like log(1/ε) and linearly in the condition number.

1.1. Contribution of this work. Inspired by the analysis of Strohmer and Vershynin [33] and Bach and

Moulines [1], we prove convergence results for Stochastic Gradient Descent (SGD) and a family of variants of SGD parametrized by the degree to which the random selection strategy chooses estimates proportionally to the Lipschitz constants of their gradients. We show that by perturbing the row selection

strategy towards the conditioning of the constituents in a sum of functions with Lipschitz gradients, we

arrive at improved convergence rates for SGD over the bounds in [1], showing that the convergence rate

P

can be improved to depend on the average conditioning number ( i L i )/µ rather than on the average

P 2 2

squared conditioning number ( i L i )/µ as in [1], without amplifying the dependence on the residual.

Our bounds also improve on those in [1] for SGD with unbiased selection when the condition numbers

L i are comparable. We then show that the randomized Kaczmarz method with uniform i.i.d. row selection can be recast as an instance of Stochastic Gradient Descent acting on a re-weighted least squares

problem and through this connection, provide exponential convergence rates for this algorithm. We

also consider the family of Kaczmarz algorithms corresponding to SGD with hybrid row selection strategy which shares the exponential convergence rates of Strohmer and Vershynin [33] while also sharing a

small error residual term of the SGD algorithm.

1.2. Notation and Fundamentals. We consider the problem of minimizing a smooth convex function,

x ⋆ = argmin F (x)

(1.1)

x

where F (x) is of the form F (x) = Ei ∼D f i (x) for smooth functionals f i : H → R over H = R d endowed

with the standard Euclidean norm k·k2 , or over a Hilbert space H with the norm k·k2 . Here i is drawn

from some source distribution D over an arbitrary probability space. Throughout this manuscript, unless

explicitly specified otherwise, expectations will be with respect to indices drawn from the source distribution D. I.e. we write E f i (x) = Ei ∼D f i (x). We also denote by σ2 the “residual” quantity at the minimum,

σ2 = Ek∇ f i (x ⋆ )k22 .

We will instate the following assumptions on the function F :

(1) Each f i is continuously differentiable and the gradient function ∇ f i has Lipschitz constant L i ;

that is, k∇ f i (x) − ∇ f i (y )k2 ≤ L i kx − yk2 for all vectors x and y .

3

­

®

(2) F has strong convexity parameter µ; that is, x − y , ∇F (x) − ∇F (y ) ≥ µkx − yk22 for all vectors x

and y .

Note in particular that the strong convexity assumption ensures that the minimum of (1.1) is unique.

Formally, since i is random, we have that almost surely, for all vectors x, y , k∇ f i (x) − ∇ f i (y )k2 ≤

L i kx − yk2 . We denote supi L i the supremum of the support of L i , i.e. the smallest L such that L i ≤ L

a.s., and similarly denote inf L i the infimum. We denote the average Lipschitz constant as L = EL i .

A central quantity in our analysis is the conditioning of the problem, which is, roughly speaking, the

ratio of the Lipschitz constant to the parameter of strong convexity. Recall that for a convex quadratic

f (x) = 21 x ′ H x, the Lipschitz constant of the gradient is given by the maximal eigenvalue of the Hessian

H while the parameter of strong convexity is given by its minimal eigenvalue. The conditioning thus

corresponds to the condition number of the Hessian matrix. In the general setting considered here, the

Hessian can vary with x, and our results will depend on the Lipschitz constants of ∇ f i and not only of

the aggregate ∇F . Specifically, our results will depend on the average conditioning L/µ and the uniform

conditioning supi L i /µ.

1.3. Reweighting a Distribution. In stochastic gradient descent, gradient estimates ∇ f i (x) are usually

sampled according to the source distribution D. However, we will analyze also sampling from a weighted

distribution. For a weight function w (i ), which assigns a non-negative weight w (i ) ≥ 0 to each index i ,

the weighted distribution D (w) is defined as the distribution such that

PD (w) (I ) ∝ Ei∼D [1I (i )w (i )] ,

where I is an event (subset of indices) and 1I (·) its indicator function. For a discrete distribution D with

probability mass function p(i ) this corresponds to weighting the probabilities to obtain a new probability

mass function:

p (w) (i ) ∝ w (i )p(i ).

Similarly, for a continuous distribution, this corresponds to multiplying the density by w (i ) and renormalizing.

One way to construct the weighted distribution D (w) , and sample from it, is through rejection sampling: sample i ∼ D, accepting with probability w (i )/W , for some W ≥ supi w (i ), and otherwise rejecting

and continuing to re-sample until a suggestion i is accepted. The accepted samples are then distributed

according to D (w) .

We use E(w) [·] = E i ∼D (w) [·] to denote an expectation where indices are sampled from the weighted distribution D (w) . An important property of such an expectation is that for any quantity X (i ) that depends

on i :

i

h

1

X

(i

)

= E [w (i )] · E [X (i )] ,

(1.2)

E(w) w(i

)

where recall that the expectations on thehr.h.s. areiwith respect to i ∼ D. In particular, when Ew (i ) = 1, as

will be the case for us, we have that E(w)

1

X (i )

w(i )

= E X (i ).

1.4. Organization. The remainder of the paper is organized as follows. In Section 2 we introduce the

stochastic gradient descent (SGD) method as well as our main result which shows exponential convergence of SGD with an improvement in the rate over previous results. Next, Section 3 draws connections

and discusses the application of our result to the randomized Kaczmarz method for linear systems. We

present experimental results in Section 4. The proofs of our main theorems are included in Section 5.

2. S TOCHASTIC G RADIENT D ESCENT

WITH

PARTIALLY B IASED S AMPLING

The standard SGD procedure is to sample i ∼ D, and take a step proportional to −∇ f i (x). That is,

the “selection” of the component i follows the source distribution D specifying the objective F . Such a

sampling assures that ∇ f i (x) is an unbiased estimator of ∇F (x), and so that the method converges to

4

TT

the optimum x ⋆ of F (·). Here we borrow from variants of the randomized Kaczmarz method for solving systems of linear equations, in which various selection strategies for equations have been proposed,

including selection based on Euclidean norm of the coefficients [33]. For SGD, this corresponds to sampling with probability proportional to L i and then re-weighting (“pre-conditioning”) the functions f i (·)

so that the re-weighted gradients still form an unbiased estimator of the true gradient. For the randomized Kaczmarz method, and as we shall see below also for SGD, selection with probability proportional

to the squared Euclidean norm yields better dependence on the conditioning, when there is no residual

error. On the other hand, the uniform selection strategy is more robust to residual error. To analyze these

two types of selection strategies simultaneously, and also leverage the best of both worlds, we consider a

family of interpolative distributions

D (λ) = D (w λ )

(2.1)

specified by the weights

Li

,

(2.2)

L

where λ is a parameter in the range 0 ≤ λ ≤ 1. Note that (2.2) ensures E[w λ (i )] = 1 for all λ.

At λ = 0, the algorithm we propose corresponds to the Kaczmarz algorithm with weighted sampling,

and at λ = 1, corresponds to “standard” SGD with unbiased row selection. At intermediate values of

λ, this algorithm corresponds to a hybrid Kaczmarz-SGD algorithm, and we refer to this algorithm as

Stochastic Gradient Descent with Partially Biased Sampling (PBS-SGD). As we shall see, this algorithms

enjoys the faster convergence rate of Kaczmarz and the lower residual error of SGD. We introduce the

family of algorithms more completely below in Algorithm 2.1.

w λ (i ) = λ1 + (1 − λ)

Algorithm 2.1 Stochastic Gradient Descent with Partially Biased Sampling

Input:

•

•

•

•

•

•

Initial estimate x 0 ∈ Rd

Parameter λ ∈ [0, 1] indicating the degree of uniform sampling

Step size γ > 0

Tolerance parameter δ > 0

Access to the source distribution D

If λ < 1: bounds on the Lipschitz constants L i ; the weights w λ (i ) derived from them (see eq.

2.2); and access to D (λ) (see eq. 2.1).

Output: Estimated solution x̂ to the problem minx F (x)

k ←0

repeat

k ← k +1

Draw an index i ∼ D (λ) .

γ

x k ← x k−1 − w λ (i ) ∇ f i (x k−1 )

until F (x) ≤ δ

x̂ ← x k

Our main result shows expected exponential convergence of this method with a linear dependence on

the average conditioning L/µ = EL i /µ.

Theorem 2.1 (Convergence rate for SGD with partially biased sampling). Let f i be continuously differentiable convex functionals operating on Rd , where each ∇ f i has Lipschitz constant L i , and let F (x) =

5

Ei ∼D f i (x) is µ-strongly convex. Set σ2 = Ei ∼D k∇ f i (x ⋆ )k22 , where x ⋆ is the minimizer of the problem

³

´

x ⋆ = argmin F (x).

x

³

´

sup L

L

, λi i and β = β(λ) = min λ1 , (1−λ)Linfi L i , and consider step size γ < 1/α. Then

Set α = α(λ) = min 1−λ

the iterate x k of Algorithm 2.1 satisfies

h

´ik

γβσ2

¢,

kx 0 − x ⋆ k22 + ¡

Ekx k − x ⋆ k22 ≤ 1 − 2γµ(1 − γα)

(2.3)

µ 1 − γα

where the expectation is with respect to the random sampling in the Algorithm.

If we are given a desired tolerance, kx −x ⋆ k2 ≤ ε, and we know the Lipschitz constants and parameters

of strong convexity, we may optimize the step-size γ. This gives rise to the following corollary.

´

³

´

³

sup L

L

, λi i and β = max λ1 , (1−λ)Linf L . Consider Algorithm 2.1

Corollary 2.2. Fix λ ∈ [0, 1]. Set α = min 1−λ

i i

with step-size

µε

.

γ=

2εµα + 2βσ2

Then Ekx k − x ⋆ k22 ≤ ε is obtained after

³ α βσ2 ´

k = 2 log(ε0 /ε) + 2 .

µ µ ε

(2.4)

iterations of Algorithm 2.1, where ε0 = kx 0 − x ⋆ k2 .

´

³

2

sup L

• Setting λ = 1 (as in standard Stochastic Gradient Descent), k = 2 log(ε0 /ε) µi i + µσ2 ε .

³

´

2

• Setting λ = 0 (as in Kaczmarz with fully biased row selection), k = 2 log(ε0 /ε) µL + (inf Lσ

2

i L i )µ ε

´

³

σ2

L

• Setting λ = 1/2 (partial biasing), k = 4 log(ε0 /ε) µ + µ2 ε .

2.1. Comparison to Prior Work. Bach and Moulines [1, Theorem 1] studied stochastic gradient descent

with unbiased sampling, i.e. using λ = 1, and established that1

³ EL 2

σ2 ´

k = 2 log(ε/ε0 ) 2i + 2

µ

µ ε

(2.5)

steps of Algorithm 2.1 (with λ = 1) are sufficient to obtain an error bound of Ekx k − x ⋆ k22 ≤ ε using a

µε

step-size of γ = 2ε2 L 2 +2σ2 .

• For unbiased sampling (λ = 1), our bound replaces the quadratic dependence on the average

square conditioning (EL 2i /µ2 ) with a linear dependence on the uniform conditioning (sup L i /µ).

This is a quadratic improvement when the Lipschitz constants L i are all of similar magnitude,

but is not an improvement in all situations.

• For fully biased importance sampling (λ = 0), we obtain a linear dependence on the average

conditioning L = EL i /µ, which is always better than the average square conditioning of Bach and

Moulines. However, in this case, if there is a non-zero residual and σ > 0, the residual term is

larger by a factor of (L/ inf L i ).

• For partially biased sampling, with intermediate values of λ such as λ = 1/2, our results leverage the benefits of both uniform and importance sampling. For λ = 1/2, we obtain the desired

linear dependence on L (always improving over Bach and Moulines), without introducing any

1Bach and Moulines’s results are somewhat more general. Their Lipschitz requirement is a bit weaker and more compli-

cated, and in terms of L i yields (2.5). They also study the use of polynomial decaying step-sizes, but these do not lead to

improved runtime if the target accuracy is known ahead of time.

6

TT

additional factor to the residual term, except for a constant factor of two. We thus obtain a result which dominates Bach and Moulines (up to a factor of 2) and substantially improves upon it

(with a linear rather then quadratic dependence on the conditioning).

The crux of the improvement over Bach and Moulines is in a tighter recursive equation. Whereas Bach

and Moulines rely on the recursion

¢

¡

Ekx k+1 − x ⋆ k22 ≤ 1 − 2γµ + 2γ2 L 2 kx k − x ⋆ k22 + 2γ2 σ2 ,

we use the co-coercivity lemma (Lemma 5.1) to obtain the tighter recursion

¡

¢

Ekx k+1 − x ⋆ k22 ≤ 1 − 2γµ + 2γ2 µL kx k − x ⋆ k22 + 2γ2 σ2 ,

where L is the Lipschitz constant of the component used in the current iterate. The significant difference

is that one of the factors of L (an upper bound on the second derivative), in the third term inside the

parenthesis, is replaced by µ (a lower bound on the second derivative).

2.2. Importance Sampling. As discussed above, in some regimes, when the Lipschitz constants L i are

of similar magnitudes, we improve over Bach and Moulines even with unbiased sampling. But when

the magnitudes are highly variable, importance sampling is necessarily in order to obtain a dependence

on the average, rather then uniform, conditioning. In some applications, especially when the Lipschitz

constants are known in advance or easily calculated or bounded, such importance sampling might be

possible. This is the case, for example, in trigonometric approximation problems or linear systems which

need to be solved repeatedly, or when the Lipschitz constant is easily computed from the data, and multiple passes over the data are needed anyway. We do acknowledge that in other regimes, when data is

presented in an online fashion, or when we only have sampling access to the source distribution D (or

the implied distribution over gradient estimates), importance sampling might be difficult.

One option that could be considered, in light of the above results, is to use rejection sampling to simulate sampling from D (λ) . E.g. for λ = 0, this can be done by accepting samples with probability proportional to L i / sup j L j . The overall probability of accepting a sample is then L/ sup L i , introducing an

additional factor of sup L i /L, and thus again obtaining a linear dependence on sup L i . Thus, if we are

presented samples from D, and the cost of obtaining the sample dominates the cost of taking the gradient step, we do not gain (but do not lose much either) from rejection sampling. We might still gain from

rejection sampling if the cost of operating on a sample (calculating the actual gradient and taking a step

according to it) dominates the cost of obtaining it and (a bound on) the Lipschitz constant.

2.3. Tightness. One might hope to obtain a linear dependence on the average conditioning L/µ with

unbiased sampling (i.e. without importance sampling). However, as the following example shows, this

is not possible. Consider a uniform source

distribution over N + 1 quadratics, with the first quadratic f 1

¢2

¡q 1

2

being (x[1] − b) and all others being

N x[2] , and b = ±1. It is clear that for any method one must

consider f 1 in order to recover x to within error less then one, but with unbiased sampling this takes

(N + 1) iterations in expectation (with biased sampling, we have L 1 = N and L i = 1 for 2 ≤ i ≤ N + 1, and

so i = 1 would be selected with probability half). It is easy to verify that in this case, sup L i = L 1 = N ,

L = 2, EL 2i = N , and µ = 1. For large N , a linear dependence on L/µ would mean that a constant number

of iterations suffice (as L/µ → 2 as N → ∞), but we just saw that any method that uses unbiased sampling

must consider at least (N + 1) samples to get non-trivial error. Note that both sup L i /µ = N and EL 2i /µ2 =

N indeed correspond to the correct number of iterations using unbiased sampling.

Returning to the comparison with Bach and Moulines, we see that, with unbiased sampling, the choice

between a dependence on the average quadratic conditioning EL 2i /µ2 , or a linear dependence on the

uniform conditioning sup L i /µ, is unavoidable. A linear dependence on the average conditioning L/µ

is not possible with any method that uses unbiased sampling. Here, we show how to obtain a linear

7

dependence on sup L i /µ with unbiased sampling (improving over Bach and Moulines in some regimes),

and how to obtain a linear dependence on L/µ using biased sampling.

3. T HE LEAST

SQUARES CASE AND THE

R ANDOMIZED K ACZMARZ M ETHOD

A special case of interest is the least squares problem, where

F (x) =

n

1

1X

(⟨a i , x⟩ − b i )2 = kAx − bk22

2 i =1

2

(3.1)

with b an n-dimensional vector, A an n × d overdetermined full-rank matrix with rows a i , and x ⋆ =

argminx 12 kAx − bk22 is the least-squares solution. Writing the least squares problem (3.1) in the form

(1.1), we find that

• The source distribution D is uniform over {1, 2, . . . , n}.

• The components are f i = n2 (⟨a i , x⟩ − b i )2

• The Lipschitz constants are L i = nka i k22 , and the average Lipschitz constant is

1

n

P

i

L i = kAk2F .

1

2

T

−1

k2

• The strong convexity parameter is µ = k(A T A)

−1 k , so that K (A) := L/µ = kAkF k(A A)

2

P

• The residual is σ2 = n i ka i k22 | ⟨a i , x ⋆ ⟩−b i |2 . Observe the bounds σ2 ≤ nkAk2F supi | ⟨a i , x ⋆ ⟩−b i |2

and σ2 ≤ n supi |a i |2 kAx ⋆ − bk22 .

The standard Kaczmarz method for solving the least squares problem (3.1) produces an estimation x̂

to the minimizer x ⋆ of the least squares problem (3.1). Beginning with an arbitrary estimate x 0 , in the

kth iteration it selects a row i = i (k) of the matrix A and projects the current iterate x k onto the solution

space corresponding to the i th row,

x k+1 = x k +

b i − ⟨a i , x k ⟩

ka i k22

ai .

(3.2)

When the Kaczmarz method was introduced, it was proposed to select rows i sequentially, so that

i (k) = k mod n + 1. However, an unfortunate ordering of the rows can lead to slow convergence. It was

later proposed to select rows i.i.d. at random, which was shown empirically to result in significantly

faster convergence rates [5, 12, 19]. A number of asymptotic convergence rates were subsequently obtained, see [36, 6, 34, 10, 37]. Strohmer and Vershynin provided the first nonasymptotic rates, showing

that drawing rows proportionally to their Lipschitz constants leads to provable exponential convergence

in expectation in the noiseless setting Ax = b [33]. Needell extended these first results to inconsistent

systems [20]. Recently, Lee and Sidford use acceleration techniques to improve upon this convergence

rate to obtain a dependence on the square root of the conditioning, at a cost of an additional dependence on the size of the system [16]. Lui and Wright also provide a Nesterov type acceleration which

improves the convergence rate to a dependence on the square root of the smallest singular value (and

linear in n) in the consistent case, as well as computational improvements for sparse matrices [17].

Several other works discuss methods for acceleration and convergence to the least-squares solution,

see [25, 8, 29, 37, 9, 7, 4, 26, 27, 28, 22, 21] and references therein. In this work, we focus on the standard

Kaczmarz method without acceleration techniques.

With fully weighted sampling λ = 0, Algorithm 2.1 reduces to the Kaczmarz algorithm with the row

selection strategy of Strohmer and Vershynin in the least squares setting. Conversely, our main theorem with uniform sampling λ = 1 produces new bounds for the Kaczmarz method (3.2) with uniform

row selection; in particular, our results imply exponential convergence of the Kaczmarz method to the

solution of a weighted least squares problem, rather than to the solution of the original problem (3.1).

Nevertheless, our main results show that by perturbing the Kaczmarz algorithm slightly, and adapting a

slightly biased row selection rule, we arrive at exponential convergence to the unweighted least squares

8

TT

solution without amplifying the noise dependence. We note that a randomized Kaczmarz algorithm with

partially-biased sampling was also recently considered in [15] in the setting σ2 = 0, albeit from a different motivation and using slightly different analysis. It is shown there that accelerated methods using

partially biased sampling can yield even better sampling complexity in some regimes.

3.1. Randomized Kaczmarz with weighted row selection. Here we show that the framework for SGD

with partially biased sampling can be applied to provide non-asymptotic results for the randomized

Kaczmarz method (3.2) proposed by Strohmer and Vershynin, where each row is selected with probability proportional to its squared Euclidean norm, p i = ka i k2 /kAk2F . As shown in [33] and extended in [20],

this method exhibits exponential convergence, but only to within a radius, or convergence horizon, of the

least-squares solution:

¸k

·

1

2

(3.3)

kx 0 − x ⋆ k22 + K (A)r,

Ekx k − x ⋆ k2 ≤ 1 −

K (A)

where e = Ax ⋆ − b and r = supi |e i |2 /ka i k22 , and K (A) = kAk2F k(A T A)−1 k2 . Note that r 6= 0 when the

system is inconsistent and Ekx k − x ⋆ k22 ≤ K (A)r as k → ∞.

It has been shown [36, 6, 34, 10, 22] that using a relaxation parameter (i.e. changing the step size) can

allow for convergence inside of this convergence horizon. However, non-asymptotic results have been

difficult to obtain. We consider here the randomized Kaczmarz algorithm with relaxation parameter

0 < c < 1:

x k+1 = x k + c ·

b i − ⟨a i , x k ⟩

ka i k22

ai

(3.4)

Formulating the randomized Kaczmarz method as an instance of SGD with fully biased sampling, we

can use our main result to derive the following corollary.

Corollary 3.1 (Convergence rate for Kaczmarz with fully biased sampling). Let A be an n×d full (column)

rank matrix with rows a i . Set e = Ax ⋆ − b, where x ⋆ is the minimizer of the problem

1

x ⋆ = argmin kAx − bk22 .

2

x

2

2

2

= supi e i2 . Then the expected error at the k t h iteration of

= supi ka i k22 , and e max

Set a min

= infi ka i k22 , a max

the Kaczmarz method described by (3.4) with row a i selected with probability p i = ka i k22 /kAk2F satisfies

·

¸

2c(1 − c) k

c

Ekx k − x ⋆ k22 ≤ 1 −

kx 0 − x ⋆ k22 +

K (A)r˜,

(3.5)

K (A)

1−c

© 2

ª

2

2

2

with re = (a max

/a min

) min e max

/a max

, kek22 /kAk2F . The expectation is taken with respect to the weighted

distribution over the rows.

c

T

−1

Proof. We apply Theorem 2.1 with f i (x) = n2 (a i x−b i )2 , λ = 0, L i = nka i k22 , γ = c PnL i = kAk

k2 ,

2 , µ = 1/k(A A)

F

P

2

2

2

2

2

2

α = L = kAkF , and β = L/ min L i = kAkF /na min . Recalling that σ = n i ka i k2 |(⟨a i , x ⋆ ⟩ − b i )| , we also

2

use the bounds σ2 ≤ na max

kek22, σ2 ≤ n supi |e i |2 kAk2F .

Remark. When e.g. c = 21 , we recover the known exponential rate (3.3) up to a factor of 2, and nearly the

same convergence horizon. For arbitrary c, Corollary 3.1 implies a tradeoff between a smaller convergence horizon and a slower convergence rate. One can also consider instead of a fixed c, employing a

sequence {c k } which changes the value iteration by iteration, whose analysis we leave for future work.

9

3.2. Randomized Kaczmarz with uniform row selection. One drawback of the approach of Kaczmarz

with weighted row selection is that in general it requires precomputing each row norm, or applying a

diagonal preconditioner matrix2. In this section we analyze the uniform row selection strategy. We

first recast the Kaczmarz method with uniform row selection as an instance of SGD on the renormalized system of functions f i (x) = 2kan k2 (a i x − b i )2 . Using the generalized framework of SGD presented

i

in Theorem2.1, we show that the Kaczmarz algorithm with uniform row selection exhibits exponential

convergence towards the minimizer of a renormalized least squares problem. This is the content of the

following corollary.

Corollary 3.2 (Convergence rate for randomized Kaczmarz with uniform sampling). Let A be an n×d full

(column) rank matrix with rows a i . Let D be the diagonal matrix with terms d j , j = ka i k2 , and consider

the renormalized matrix D −1 A. Set e w = D −1 (Ax ⋆w − b), where x ⋆w is the minimizer of the weighted least

squares problem

1

(3.6)

x ⋆w = argmin kD −1 (Ax − b)k22 .

2

x

Then the expected error after k iterations of the Kaczmarz method described by (3.4) with uniform row

selection satisfies

¸

·

c

2c(1 − c) ´ k

w 2

kx 0 − x ⋆w k22 +

K (D −1 A)r w ,

(3.7)

Ekx k − x ⋆ k2 ≤ 1 −

−1

K (D A)

1−c

where r w = ke w k22 /n.

Proof. We apply Theorem 2.1 with λ = 1, f i (x) =

β = 1, and we observe that σ2 ≤ nke w k22 .

n

(a i x

2ka i k2

− b i )2 , L i = n, γ = nc , µ = 1/kA −1 Dk2 , α = n,

Remarks.

1. The randomized Kaczmarz algorithm with uniform row selection converges exponentially to the

weighted least-squares solution (3.6), to within arbitrary accuracy by choosing sufficiently small stepsize

c. Of course, the convergence rate also decreases for smaller c, and so accuracy and speed must be

balanced.

2. When the system is consistent, the solutions of the unweighted and weighted least squares problems, (3.1) and (3.6) respectively, are the same and equal to x. For arbitrary error, the least squares solution x ⋆ and the weighted least squares solution x ⋆w can be significantly different. Thus, in general, the

randomized Kaczmarz algorithms with uniform and biased row selection converge towards very different solutions.

3. It is difficult

³ to compare the´rates in Corollary 3.1 and Corollary 3.2 directly. It is easy to show that

a

K (A) independent of A, and so we can derive the crude bound

K (D −1 A) ≤ min nK (A), amax

min

³

Ekx k − x ⋆w k22 ≤ 1 −

´´k

2c(1 − c)

nc

2

³

´ kx 0 − x ⋆w k22 +

K (A)((e w )2max /a max

),

a max

1

−

c

min nK (A), amin K (A)

showing that the convergence rate for uniform row selection is no worse than a factor of min(n, a max /a min )

times that for weighted row selection. It should not be surprising that uniform selection performs well

since in the consistent case this is equivalent to normalizing the rows of the matrix, which often comes

close to minimizing the condition number [35].

4. In addition to the difficulty in comparing the rates directly, Corollary 3.2 shows convergence to

the least squares solution of the pre-conditioned problem, not the original problem. However, using the

2Note that for consistent systems, the Kaczmarz iterations are independent of the scaling of the system.

10

TT

notation of the previous corollaries, one can use the bound

¶

µ

q

a max

w

T

−1

kek2

kx k − x ⋆ k2 ≤ kx k − x ⋆ k2 + k(A A) k2 1 +

a min

along with Corollary 3.2 to provide a bound on the expected error from the unweighted least squares

solution for Kaczmarz with uniform row selection. Of course, this error can not be made arbitrarily small

by decreasing c, unless the system is consistent.

3.3. Hybrid Kaczmarz-SGD algorithm. We first compare the result for Kaczmarz with weighted row selection (Corollary 3.1) with the bounds obtained by solving the quadratic system kAx − bk2 using standard SGD, i.e. Algorithm 2.1 with f i (x) = n2 (⟨a i , x⟩ − b i )2 , but with λ = 1 rather than λ = 0. This corresponds to iterating the recursion

c

x k = x k−1 +

(b i − ⟨a i , x k−1⟩)a i

(3.8)

2

na max

with uniform row selection rule. Theorem 2.1 implies the bound

2

³

2c(1 − c) kAkF ´´k

c

Ekx k − x ⋆ k22 ≤ 1 −

K (A)r SG ,

kx 0 − x ⋆ k22 +

2

K (A) na max

1−c

(3.9)

2

2

/a max

}. As expected by Corollary 2.2, the convergence rate in (3.9) is

where r SG = min{kek22 /kAk2F , e max

worse than the rate (3.5) for Kaczmarz for weighted row selection but the convergence horizon r SG in

(3.9) is smaller than that for Kaczmarz with weighted selection strategy. To have small convergence horizon and high convergence rate simultaneously, Corollary 2.2 implies that we may use instead what we

refer to as the hybrid Kaczmarz-SGD algorithm, or Algorithm 2.1 with f i (x) = n2 (⟨a i , x⟩−b i )2 and λ ∈ [0, 1]

with λ = 1/2 balancing accuracy and speed. We analyze the behavior of the hybrid Kaczmarz-SGD algorithm numerically for various matrices A in the following section.

4. N UMERICAL E XPERIMENTS

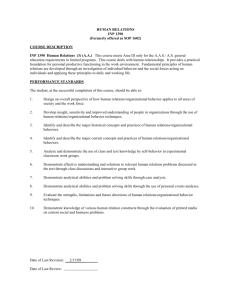

In this section we present some numerical results for the hybrid Kaczmarz-SGD algorithm, or Algorithm 2.1 with f i (x) = n2 (⟨a i , x⟩ − b i )2 and λ ∈ [0, 1], and demonstrate how its behavior depends on λ, the

conditioning of the system, and the residual error at the least squares solution. We focus on exploring the

role of λ on the convergence rate of the algorithm for various types of matrices A. We consider five types

of systems, described below, each using a 1000 × 10 matrix A. In each setting, we create a vector x with

standard normal entries. For the described matrix A and residual e, we create the system b = Ax + e and

run the randomized Kaczmarz method with various choices of λ. Each experiment consists of 100 independent trials and uses the optimal step size as in Corollary 2.2 with ε = .1; the plots show the average

behavior over these trials. The settings below show the various types of behavior the Kazcmarz method

can exhibit.

Case 1: Each row of the matrix A has standard normal entries, except the last row which has normal

entries with mean 0 and variance 102 . The residual vector e has normal entries with mean 0 and

variance 0.12 .

Case 2: Each row of the matrix A has standard normal entries. The residual vector e has normal

entries with mean 0 and variance 0.12 .

Case 3: The j th row of A has normal entries with mean 0 and variance j . The residual vector e has

normal entries with mean 0 and variance 202 .

Case 4: The j th row of A has normal entries with mean 0 and variance j . The residual vector e has

normal entries with mean 0 and variance 102 .

Case 5: The j th row of A has normal entries with mean 0 and variance j . The residual vector e has

normal entries with mean 0 and variance 0.12 .

11

Case 1:

Case 2:

1

1

10

λ=0

λ = 0.4

λ = 0.7

λ = .9

λ=1

0

10

Error (log)

Error (log)

10

λ=0

λ = 0.2

λ = 0.4

λ = 0.7

λ=1

0

10

−1

−1

10

10

0

5000

10000

Iterations

15000

0

500

Case 3:

1

2500

3000

λ=0

λ = 0.2

λ = 0.4

λ = 0.7

λ=1

1

10

Error (log)

Error (log)

1500

2000

Iterations

Case 4:

λ=0

λ = 0.2

λ = 0.4

λ = 0.7

λ=1

10

1000

0

10

−1

0

10

−1

10

10

0

1000

2000

3000

Iterations

4000

5000

0

1000

2000

3000

Iterations

4000

5000

Case 5:

λ=0

λ = 0.2

λ = 0.4

λ = 0.7

λ=1

1

Error (log)

10

0

10

−1

10

0

1000

2000

3000

Iterations

4000

5000

Figure 1 The convergence rates for the randomized Kaczmarz method with various choices of λ in the five settings described above. The vertical axis is in logarithmic scale and depicts the approximation error kxk − x⋆ k22

at iteration k (the horizontal axis).

TT

Iterations (log)

12

4

10

3

10

0

0.2

0.4

λ

0.6

0.8

1

Figure 2 Number of iterations k needed by the randomized Kaczmarz method for various values of λ to obtain

approximation error kxk − x⋆ k22 ≤ ε = 0.1 in the five cases described above: Case 1 (blue with circle marker),

Case 2 (red with square marker), Case 3 (black with triangle marker), Case 4 (green with x marker), and Case 5

(purple with star marker).

Figure 1 shows the convergence behavior of the randomized Kaczmarz method in each of these five

settings. As expected, when the rows of A are far from normalized, as in Case 1, we see different behavior

as λ varies from 0 to 1. Here, weighted sampling (λ = 0) significantly outperforms uniform sampling

(λ = 1), and the trend is monotonic in λ. On the other hand, when the rows of A are close to normalized,

as in Case 2, the various λ give rise to similar convergence rates, as is expected. Out of the λ tested

(we tested increments of 0.1 from 0 to 1), the choice λ = 0.7 gave the worst convergence rate, and again

purely weighted sampling gives the best. Still, the worst-case convergence rate was not much worse, as

opposed to the situation with uniform sampling in Case 1. Cases 3, 4, and 5 use matrices with varying

row norms and cover “high", “medium", and “low" noise regimes, respectively. In the high noise regime

(Case 3), we find that fully weighted sampling, λ = 0, is relatively very slow to converge, as the theory

suggests, and hybrid sampling outperforms both weighted and uniform selection. In the medium noise

regime (Case 4), hybrid sampling still outperforms both weighted and uniform selection. Again, this is

not surprising, since hybrid sampling allows a balance between small convergence horizon (important

with large residual norm) and convergence rate. As we decrease the noise level (as in Case 5), we see that

again weighted sampling is preferred.

Figure 2 shows the number of iterations of the randomized Kaczmarz method needed to obtain a fixed

approximation error. For the choice λ = 1 for Case 1, we cut off the number of iterations after 50,000, at

which point the desired approximation error was still not attained. As seen also from Figure 1, Case 1

exhibits monotonic improvements as we scale λ. For Cases 2 and 5, the optimal choice is pure weighted

sampling, whereas Cases 3 and 4 prefer intermediate values of λ.

5. P ROOFS

The proof of Theorem 2.1 utilizes an elementary fact about smooth functions with Lipschitz continuous gradient, called the co-coercivity of the gradient. We state the lemma and recall its proof for completeness.

5.1. The Co-coercivity Lemma.

13

Lemma 5.1 (Co-coercivity). For a smooth function f whose gradient has Lipschitz constant L,

­

®

k∇ f (x) − ∇ f (y )k22 ≤ L x − y , ∇ f (x) − ∇ f (y ) .

Proof. Since ∇ f has Lipschitz constant L, if x ⋆ is the minimizer of f , then

­

®

1

1

k∇ f (x) − ∇ f (x ⋆ )k22 =

k∇ f (x) − ∇ f (x ⋆ )k22 + x − x ⋆ , ∇ f (x ⋆ ) ≤ f (x) − f (x ⋆ );

2L

2L

(5.1)

see, for example, [[24], page 26]. Now define the convex functions

­

®

G(z) = f (z) − ∇ f (x), z ,

­

®

and H (z) = f (z) − ∇ f (y ), z ,

and observe that both have Lipschitz constants L and minimizers x and y , respectively. Applying (5.1) to

these functions therefore gives that

G(x) ≤ G(y ) −

1

k∇G(y )k22 ,

2L

and H (y ) ≤ H (x) −

1

k∇H (y )k22 .

2L

By their definitions, this implies that

­

®

­

® 1

k∇ f (y ) − ∇ f (x)k22

f (x) − ∇ f (x), x ≤ f (y ) − ∇ f (x), y −

2L

­

®

­

® 1

f (y ) − ∇ f (y ), y ≤ f (x) − ∇ f (y ), x −

k∇ f (x) − ∇ f (y )k22 .

2L

Adding these two inequalities and canceling terms yields the desired result.

5.2. Proof of Theorem 2.1. With the notation of Theorem 2.1, and where i is the random index chosen

at iteration k, and w = w λ , we have

γ

∇ f i (x k )k22

w (i )

γ

γ

(∇ f i (x k ) − ∇ f i (x ⋆ )) −

∇ f i (x ⋆ )k22

= k(x k − x ⋆ ) −

w (i )

w (i )

® ³ γ ´2

γ ­

x k − x ⋆ , ∇ f i (x k ) +

k∇ f i (x k ) − ∇ f i (x ⋆ ) + ∇ f i (x ⋆ )k22

= kx k − x ⋆ k22 − 2

w (i )

w (i )

³ γ ´2

³ γ ´2

®

γ ­

x k − x ⋆ , ∇ f i (x k ) + 2

k∇ f i (x k ) − ∇ f i (x ⋆ )k22 + 2

k∇ f i (x ⋆ )k22

≤ kx k − x ⋆ k22 − 2

w (i )

w (i )

w (i )

®

γ ­

≤ kx k − x ⋆ k22 − 2

x k − x ⋆ , ∇ f i (x k )

w (i )

³ γ ´2

³ γ ´2 ­

®

L i x k − x ⋆ , ∇ f i (x k ) − ∇ f i (x ⋆ ) + 2

k∇ f i (x ⋆ )k22 ,

+2

w (i )

w (i )

kx k+1 − x ⋆ k22 = kx k − x ⋆ −

where we have employed Jensen’s inequality in the first inequality and the co-coercivity Lemma 5.1 in

the final line. We next take an expectation with respect to the choice of i , which is drawn according to

the distribution i ∼ D (λ) . In taking the expectation w.r.t. D (λ) , we recall for the weights defined by (2.2)

14

TT

we have by (1.2) that E(w)

h

i

1

w(i ) X (i )

= E X (i ). We also recall that E∇ f i (x) = ∇F (x), and obtain, for γα ≤ 1,

h

®i

L ­

E(w) kx k+1 − x ⋆ k22 ≤ kx k − x ⋆ k22 − 2γ ⟨x k − x ⋆ , ∇F (x k )⟩ + 2γ2 E w(ii ) x k − x ⋆ , ∇ f i (x k ) − ∇ f i (x ⋆ )

i

h

2

1

+ 2γ2 E w(i

) k∇ f i (x ⋆ )k2

·

µ

¶

¸

­

®

1

1

2

2

≤ kx k − x ⋆ k2 − 2γ ⟨x k − x ⋆ , ∇F (x k )⟩ + 2γ E min

L, L i x k − x ⋆ , ∇ f i (x k ) − ∇ f i (x ⋆ )

1−λ λ

#

!

"

Ã

L

1

k∇ f i (x ⋆ )k22

+ 2γ2 E min ,

λ (1 − λ)L i

Ã

!

­

®

L supi L i

2

2

≤ kx k − x ⋆ k2 − 2γ ⟨x k − x ⋆ , ∇F (x k )⟩ + 2γ min

E x k − x ⋆ , ∇ f i (x k ) − ∇ f i (x ⋆ )

,

1−λ

λ

!

Ã

L

1

Ek∇ f i (x ⋆ )k22

+ 2γ2 min ,

λ (1 − λ) infi L i

= kx k − x ⋆ k22 − 2γ ⟨x k − x ⋆ , ∇F (x k )⟩ + 2γ2 α ⟨x k − x ⋆ , ∇F (x k ) − ∇F (x ⋆ )⟩ + 2γ2 βσ2

where we have set α = min

of F (x) and obtain:

³

L supi L i

, λ

1−λ

´

and β = min

³

L

1

,

λ (1−λ) infi L i

´

. We now utilize the strong convexity

≤ kx k − x ⋆ k22 − 2γµ(1 − γα)kx k − x ⋆ k22 + 2γ2 βσ2

= (1 − 2γµ(1 − γα))kx k − x ⋆ k22 + 2γ2 βσ2

Recursively applying this bound over the first k iterations yields the desired result,

´j

³

´k

k−1

X³

1 − 2γµ(1 − γα) γ2 βσ2

E(w) kx k − x ⋆ k22 ≤ 1 − 2γµ(1 − γα) kx 0 − x ⋆ k22 + 2

j =0

³

´k

γβσ2

¢,

= 1 − 2γµ(1 − γα) kx 0 − x ⋆ k22 + ¡

µ 1 − γα

where the expectation on the l.h.s. above is w.r.t. the indices in each of the k iterations being drawn

i.i.d. from D (λ) = D (w λ ) .

5.3. Proof of Corollary 2.2.

Proof. Recall the main recursive step in the previous proof:

¡

¢

E(w) kx k+1 − x ⋆ k22 ≤ 1 − 2µγ(1 − γα) kx k − x ⋆ k22 + 2βγ2 σ2 ,

(5.2)

provided that γα ≤ 1. The minimal value of the quadratic

¡

¢

F ξ (γ) = 1 − 2γµ(1 − γα) ξ + 2βσ2 γ2

is achieved at

γ∗ξ =

and

µξ

,

2ξµα + 2βσ2

¡

F ξ (γ∗ξ ) = 1 −

¢

µ2 ξ

ξ.

2

2µαξ + 2βσ

(5.3)

(5.4)

15

Note that γ∗ξ α ≤ 1/2. Thus if we choose stepsize γ∗ = γ∗ε ,

E(w) kx k+1 − x ⋆ k22 ≤ F kxk −x⋆ k2 (γ∗ )

2

³

´

= F kxk −x⋆ k2 (γ∗ ) − F ε (γ∗ ) + F ε (γ∗ )

2

³

≤ 1−

´

µ2 ε

kx k − x ⋆ k22

2µεα + 2βσ2

(5.5)

(5.6)

(5.7)

(5.8)

and, iterating the expectation,

³

Ekx k+1 − x ⋆ k22 ≤ 1 −

´k

µ2 ε

ε0 ,

2µεα + 2βσ2

(5.9)

where again the expectation on the l.h.s. is w.r.t to the random indices in all iterations being drawn from

D (λ) . It follows that if ε ≤ Ekx k+1 − x ⋆ k22 , then

´

³

µ2 ε

(5.10)

log(ε/ε0 ) ≤ k log 1 −

2µεα + 2βσ2

¡

¢

µ2 ε

≤ −k

(5.11)

2

2µαε + 2βσ

or, equivalently

¡ 2µαε + 2βσ2 ¢

k ≤ log(ε0 /ε)

µ2 ε

³ 2α 2βσ2 ´

+ 2 .

= log(ε0 /ε)

µ

µ ε

In particular, setting λ = 1/2, one arrives at the bound

³ 4L 4σ2 ´

k ≤ log(ε0 /ε)

+ 2 .

µ

µ ε

(5.12)

(5.13)

ACKNOWLEDGEMENTS

DN was partially supported by a Simons Foundation Collaboration grant, NS was partially supported

by a Google Research Award, and RW was supported in part by ONR Grant N00014-12-1-0743 and an

AFOSR Young Investigator Program Award.

R EFERENCES

[1] F. Bach and E. Moulines. Non-asymptotic analysis of stochastic approximation algorithms for machine learning. Advances

in Neural Information Processing Systems (NIPS), 2011.

[2] L. Bottou. Large-scale machine learning with stochastic gradient descent. In Proceedings of COMPSTAT’2010, pages 177–

186. Springer, 2010.

[3] L. Bottou and O. Bousquet. The tradeoffs of large-scale learning. Optimization for Machine Learning, page 351, 2011.

[4] C. L. Byrne. Applied iterative methods. A K Peters Ltd., Wellesley, MA, 2008.

[5] C. Cenker, H. G. Feichtinger, M. Mayer, H. Steier, and T. Strohmer. New variants of the POCS method using affine subspaces

of finite codimension, with applications to irregular sampling. In Proc. SPIE: Visual Communications and Image Processing,

pages 299–310, 1992.

[6] Y. Censor, Paul PB Eggermont, and Dan Gordon. Strong underrelaxation in Kaczmarz’s method for inconsistent systems.

Numerische Mathematik, 41(1):83–92, 1983.

[7] P. P. B. Eggermont, G. T. Herman, and A. Lent. Iterative algorithms for large partitioned linear systems, with applications to

image reconstruction. Linear Algebra Appl., 40:37–67, 1981.

16

TT

[8] Y. C. Eldar and D. Needell. Acceleration of randomized Kaczmarz method via the Johnson-Lindenstrauss lemma. Numer.

Algorithms, 58(2):163–177, 2011.

[9] T. Elfving. Block-iterative methods for consistent and inconsistent linear equations. Numer. Math., 35(1):1–12, 1980.

[10] M. Hanke and W. Niethammer. On the acceleration of Kaczmarz’s method for inconsistent linear systems. Linear Algebra

and its Applications, 130:83–98, 1990.

[11] G. T. Herman. Fundamentals of computerized tomography: image reconstruction from projections. Springer, 2009.

[12] G.T. Herman and L.B. Meyer. Algebraic reconstruction techniques can be made computationally efficient. IEEE Trans.

Medical Imaging, 12(3):600–609, 1993.

[13] G. N Hounsfield. Computerized transverse axial scanning (tomography): Part 1. description of system. British Journal of

Radiology, 46(552):1016–1022, 1973.

[14] S. Kaczmarz. Angenäherte auflösung von systemen linearer gleichungen. Bull. Int. Acad. Polon. Sci. Lett. Ser. A, pages 335–

357, 1937.

[15] Y. Lee and A. Sidford. Efficient accelerated coordinate descent methods and faster algorithms for solving linear systems.

arXiv preprint arXiv:1305.1922, 2013.

[16] Y. T. Lee and A. Sidford. Efficient accelerated coordinate descent methods and faster algorithms for solving linear systems.

Submitted, 2013.

[17] J. Liu and S. J. Wright. An accelerated randomized kaczmarz algorithm. Submitted, 2013.

[18] N. Murata. A statistical study of on-line learning. Cambridge University Press, Cambridge,UK, 1998.

[19] F. Natterer. The mathematics of computerized tomography, volume 32 of Classics in Applied Mathematics. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA, 2001. Reprint of the 1986 original.

[20] D. Needell. Randomized Kaczmarz solver for noisy linear systems. BIT, 50(2):395–403, 2010.

[21] D. Needell and J. A. Tropp. Paved with good intentions: Analysis of a randomized block kaczmarz method. Linear Algebra

and its Applications, 2013.

[22] D. Needell and R. Ward. Two-subspace projection method for coherent overdetermined linear systems. Journal of Fourier

Analysis and Applications, 19(2):256–269, 2013.

[23] A. Nemirovski, A. Juditsky, G. Lan, and A. Shapiro. Robust stochastic approximation approach to stochastic programming.

SIAM Journal on Optimization, 19(4):1574–1609, 2009.

[24] Y. Nesterov. Introductory Lectures on Convex Optimization. Kluwer, 2004.

[25] C. Popa. Extensions of block-projections methods with relaxation parameters to inconsistent and rank-deficient leastsquares problems. BIT, 38(1):151–176, 1998.

[26] C. Popa. Block-projections algorithms with blocks containing mutually orthogonal rows and columns. BIT, 39(2):323–338,

1999.

[27] C. Popa. A fast Kaczmarz-Kovarik algorithm for consistent least-squares problems. Korean J. Comput. Appl. Math., 8(1):9–

26, 2001.

[28] C. Popa. A Kaczmarz-Kovarik algorithm for symmetric ill-conditioned matrices. An. Ştiinţ. Univ. Ovidius Constanţa Ser.

Mat., 12(2):135–146, 2004.

[29] Constantin Popa, T Preclik, H Köstler, and U Rüde. On KaczmarzŠs projection iteration as a direct solver for linear least

squares problems. Linear Algebra and Its Applications, 436(2):389–404, 2012.

[30] H. Robbins and S. Monroe. A stochastic approximation method. Ann. Math. Statist., 22:400–407, 1951.

[31] S. Shalev-Shwartz and N. Srebro. Svm optimization: inverse dependence on training set size. In Proceedings of the 25th

international conference on Machine learning, pages 928–935, 2008.

[32] O. Shamir and T. Zhang. Stochastic gradient descent for non-smooth optimization: Convergence results and optimal averaging schemes. arXiv preprint arXiv:1212.1824, 2012.

[33] T. Strohmer and R. Vershynin. A randomized Kaczmarz algorithm with exponential convergence. J. Fourier Anal. Appl.,

15(2):262–278, 2009.

[34] K. Tanabe. Projection method for solving a singular system of linear equations and its applications. Numerische Mathematik, 17(3):203–214, 1971.

[35] A. van der Sluis. Condition numbers and equilibration of matrices. Numerische Mathematik, 14(1):14–23, 1969.

[36] T. M. Whitney and R. K. Meany. Two algorithms related to the method of steepest descent. SIAM Journal on Numerical

Analysis, 4(1):109–118, 1967.

[37] A. Zouzias and N. M. Freris. Randomized extended Kaczmarz for solving least-squares. SIAM Journal on Matrix Analysis

and Applications, 2012.